# Latent Semantic Analysis (LSA) Python package

## In brief

This Python package, `LatentSemanticAnalyzer`, provides functions for the computations in
Latent Semantic Analysis (LSA) workflows,
using sparse matrix linear algebra. The package mirrors
the Mathematica implementation [AAp1].
(There is also a corresponding implementation in R; see [AAp2].)

The package provides:

- Class `LatentSemanticAnalyzer`

- Functions for applying Latent Semantic Indexing (LSI) functions on matrix entries

- "Data loader" function for obtaining a `pandas` data frame with ~580 abstracts of conference presentations
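To make the idea of LSI functions applied to matrix entries concrete, here is a minimal pure-Python sketch of the "IDF" / "None" / "Cosine" combination on a tiny dense matrix. It is not the package's code: the helper name `apply_term_weights` is hypothetical, and the IDF formula `log(n_docs / doc_frequency)` is an assumed, commonly used definition that may differ from the one the package implements.

```python
import math

def apply_term_weights(counts):
    """Weight a dense document-term count matrix (a list of rows).

    Local weight: none (raw counts are kept).
    Global weight: IDF, assumed here to be log(n_docs / doc_frequency).
    Normalizer: cosine (each row is scaled to unit Euclidean length).
    """
    n_docs = len(counts)
    n_terms = len(counts[0])
    # Document frequency: the number of documents in which each term occurs
    doc_freq = [sum(1 for row in counts if row[j] > 0) for j in range(n_terms)]
    idf = [math.log(n_docs / df) if df > 0 else 0.0 for df in doc_freq]
    weighted = []
    for row in counts:
        w = [c * g for c, g in zip(row, idf)]
        norm = math.sqrt(sum(x * x for x in w))
        # Rows that become all zeros are left as they are
        weighted.append([x / norm for x in w] if norm > 0 else w)
    return weighted

# Rows are documents; columns are the terms "matrix", "topic", "sparse"
counts = [
    [2, 0, 1],
    [1, 1, 0],
    [3, 0, 0],
]
weighted = apply_term_weights(counts)
for row in weighted:
    print([round(x, 3) for x in row])
```

In this toy input the term "matrix" occurs in every document, so its assumed IDF weight is zero and it drops out of all rows; the third document, which contains only that term, ends up as a zero row.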

------

## Installation

To install from GitHub use the shell command:

```shell

python -m pip install git+https://github.com/antononcube/Python-packages.git#egg=LatentSemanticAnalyzer\&subdirectory=LatentSemanticAnalyzer

```

To install from PyPI:

```shell

python -m pip install LatentSemanticAnalyzer

```
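After either installation command, a quick way to confirm that the package is visible to the current interpreter is to look it up with the standard library. This is only a sketch; the helper name `is_installed` is made up for illustration.

```python
import importlib.util

def is_installed(module_name):
    """Return True if `module_name` can be found on the current import path."""
    return importlib.util.find_spec(module_name) is not None

# Prints True once the installation has succeeded for this interpreter
print(is_installed("LatentSemanticAnalyzer"))
```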

-----

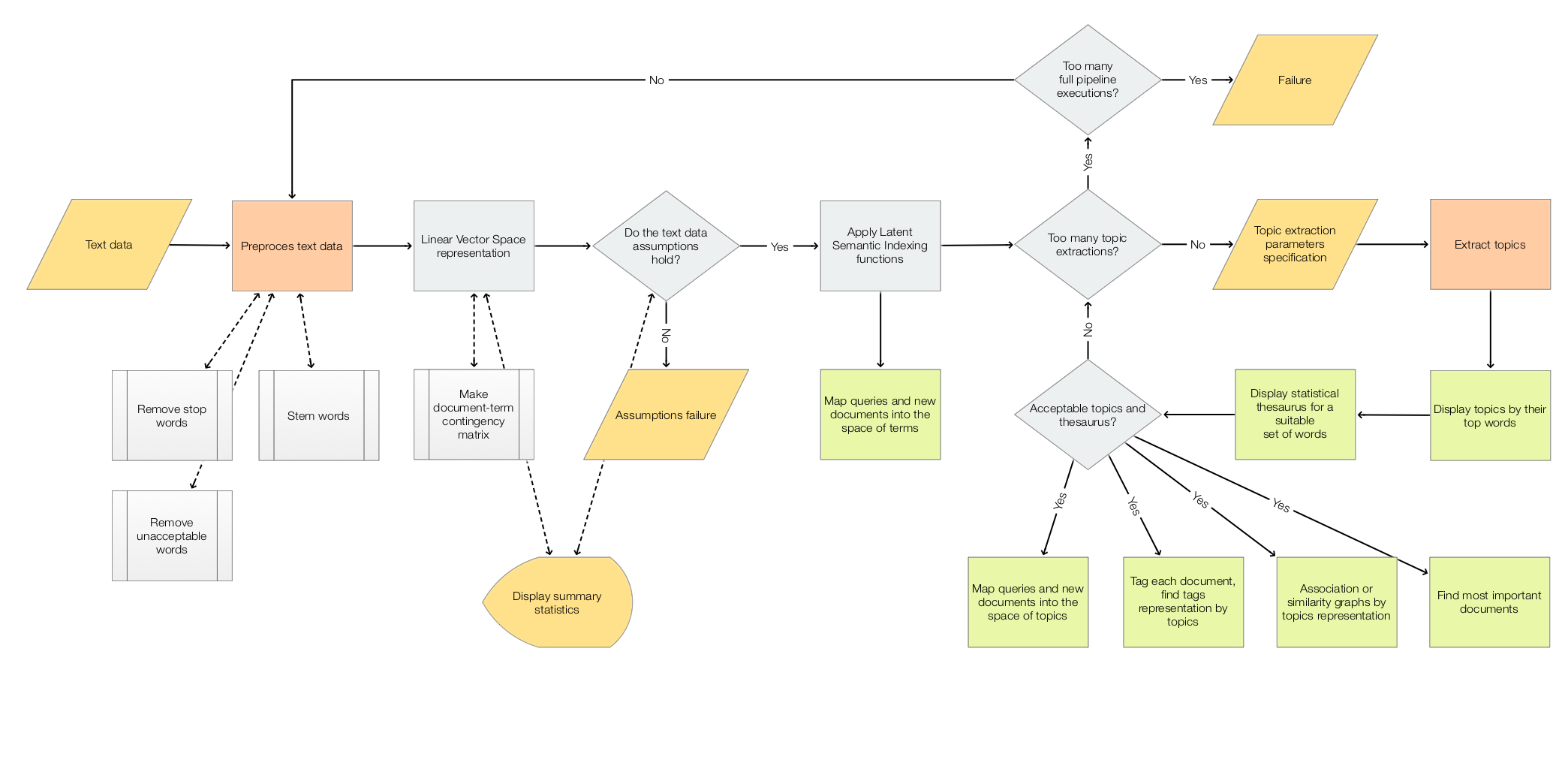

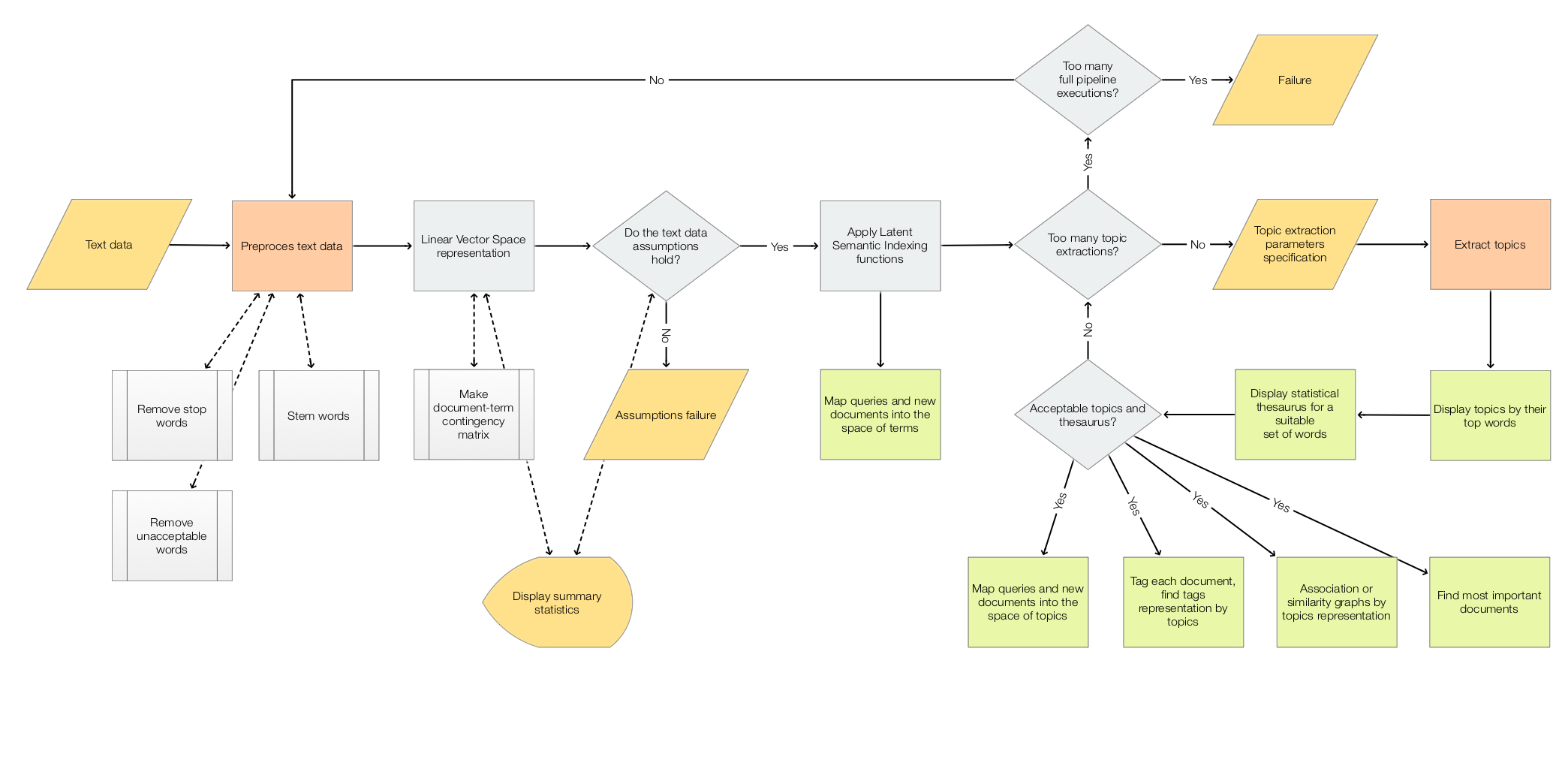

## LSA workflows

The scope of the package is to facilitate the creation and execution of the workflows encompassed in this

flow chart:

For more details see the article

["A monad for Latent Semantic Analysis workflows"](https://mathematicaforprediction.wordpress.com/2019/09/13/a-monad-for-latent-semantic-analysis-workflows/),

[AA1].

------

## Usage example

Here is an example of an LSA pipeline that:

1. Ingests a collection of texts

2. Makes the corresponding document-term matrix using stemming and removing stop words

3. Extracts 40 topics

4. Shows a table with the extracted topics

5. Shows a table with statistical thesaurus entries for selected words

```python

import random

from LatentSemanticAnalyzer.LatentSemanticAnalyzer import *

from LatentSemanticAnalyzer.DataLoaders import *

import snowballstemmer

# Collection of texts

dfAbstracts = load_abstracts_data_frame()

docs = dict(zip(dfAbstracts.ID, dfAbstracts.Abstract))

print(len(docs))

# Remove non-strings

docs2 = { k:v for k, v in docs.items() if isinstance(v, str) }

print(len(docs2))

# Stemmer object (to preprocess words in the pipeline below)

stemmerObj = snowballstemmer.stemmer("english")

# Words to show statistical thesaurus entries for

words = ["notebook", "computational", "function", "neural", "talk", "programming"]

# Reproducible results

random.seed(12)

# LSA pipeline

lsaObj = (LatentSemanticAnalyzer()
          .make_document_term_matrix(docs=docs2,
                                     stop_words=True,
                                     stemming_rules=True,
                                     min_length=3)
          .apply_term_weight_functions(global_weight_func="IDF",
                                       local_weight_func="None",
                                       normalizer_func="Cosine")
          .extract_topics(number_of_topics=40, min_number_of_documents_per_term=10, method="NNMF")
          .echo_topics_interpretation(number_of_terms=12, wide_form=True)
          .echo_statistical_thesaurus(terms=stemmerObj.stemWords(words),
                                      wide_form=True,
                                      number_of_nearest_neighbors=12,
                                      method="cosine",
                                      echo_function=lambda x: print(x.to_string())))

```
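To illustrate what a statistical thesaurus entry is, the following self-contained sketch builds term counts per document and ranks the other terms by cosine similarity of their document-occurrence vectors. It is a simplified stand-in, not the package's implementation: it uses raw counts, a tiny made-up stop word list, and no stemming or topic extraction, and every function name in it is hypothetical.

```python
import math
import re
from collections import Counter

STOP_WORDS = {"the", "and", "for", "with", "are"}  # tiny illustrative list

def make_document_term_counts(docs, min_length=3):
    """Map each document ID to a Counter of its (lower-cased) terms."""
    result = {}
    for doc_id, text in docs.items():
        words = re.findall(r"[a-z]+", text.lower())
        result[doc_id] = Counter(
            w for w in words if len(w) >= min_length and w not in STOP_WORDS
        )
    return result

def term_vector(term, doc_counts):
    """Column of the document-term matrix for `term`, as {doc_id: count}."""
    return {d: c[term] for d, c in doc_counts.items() if c[term] > 0}

def cosine(u, v):
    """Cosine similarity of two sparse vectors stored as dictionaries."""
    dot = sum(x * v.get(k, 0) for k, x in u.items())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def nearest_terms(term, doc_counts, n=3):
    """Return the `n` terms most similar to `term`, with rounded scores."""
    vocabulary = set().union(*doc_counts.values())
    target = term_vector(term, doc_counts)
    scored = [
        (cosine(target, term_vector(t, doc_counts)), t)
        for t in vocabulary
        if t != term
    ]
    # Highest similarity first; ties are broken alphabetically
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [(t, round(s, 3)) for s, t in scored[:n]]

docs = {
    "d1": "Neural networks for image classification",
    "d2": "Training neural networks with notebooks",
    "d3": "Notebooks are documents for computation",
}
doc_counts = make_document_term_counts(docs)
print(nearest_terms("neural", doc_counts))
```

Terms that occur in the same documents as the query term rank highest; in the pipeline above the analogous ranking is computed on stemmed terms after weighting and topic extraction.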

------

## Related Python packages

This package is based on the Python package
["SSparseMatrix"](https://pypi.org/project/SSparseMatrix/), [AAp3].

The package
["SparseMatrixRecommender"](https://pypi.org/project/SparseMatrixRecommender/), [AAp4],
also uses LSI functions -- this package uses LSI methods of the class `SparseMatrixRecommender`.

------

## Related Mathematica and R packages

### Mathematica

The Python pipeline above corresponds to the following pipeline for the Mathematica package

[AAp1]:

```mathematica

lsaObj =

LSAMonUnit[aAbstracts]⟹

LSAMonMakeDocumentTermMatrix["StemmingRules" -> Automatic, "StopWords" -> Automatic]⟹

LSAMonEchoDocumentTermMatrixStatistics["LogBase" -> 10]⟹

LSAMonApplyTermWeightFunctions["IDF", "None", "Cosine"]⟹

LSAMonExtractTopics["NumberOfTopics" -> 20, Method -> "NNMF", "MaxSteps" -> 16, "MinNumberOfDocumentsPerTerm" -> 20]⟹

LSAMonEchoTopicsTable["NumberOfTerms" -> 10]⟹

LSAMonEchoStatisticalThesaurus["Words" -> Map[WordData[#, "PorterStem"]&, {"notebook", "computational", "function", "neural", "talk", "programming"}]];

```

### R

The package

[`LSAMon-R`](https://github.com/antononcube/R-packages/tree/master/LSAMon-R),

[AAp2], implements a software monad for LSA workflows.

------

## LSA packages comparison project

The project "Random mandalas deconstruction with R, Python, and Mathematica", [AAr1, AA2],
has documents, diagrams, and (code) notebooks that compare LSA applications to a collection of images
in multiple programming languages.

A big part of the motivation for making the Python package
["RandomMandala"](https://pypi.org/project/RandomMandala), [AAp6],
was to make the LSA package comparison easier.
Mathematica and R have fairly streamlined connections to Python, hence it is easier
to propagate (image) data generated in Python into those systems.

------

## Code generation with natural language commands

### Using grammar-based interpreters

The project "Raku for Prediction", [AAr2, AAv2, AAp7], has a Domain Specific Language (DSL) grammar and interpreters
that allow the generation of LSA code for the corresponding Mathematica, Python, and R packages.

Here is a Command Line Interface (CLI) invocation example that generates code for this package:

```shell

> ToLatentSemanticAnalysisWorkflowCode Python 'create from aDocs; apply LSI functions IDF, None, Cosine; extract 20 topics; show topics table'

# LatentSemanticAnalyzer(aDocs).apply_term_weight_functions(global_weight_func = "IDF", local_weight_func = "None", normalizer_func = "Cosine").extract_topics(number_of_topics = 20).echo_topics_table( )

```

### NLP Template Engine

Here is an example using the NLP Template Engine, [AAr2, AAv3]:

```mathematica

Concretize["create from aDocs; apply LSI functions IDF, None, Cosine; extract 20 topics; show topics table",

"TargetLanguage" -> "Python"]

(*

lsaObj = (LatentSemanticAnalyzer()

.make_document_term_matrix(docs=aDocs, stop_words=None, stemming_rules=None,min_length=3)

.apply_term_weight_functions(global_weight_func='IDF', local_weight_func='None',normalizer_func='Cosine')

.extract_topics(number_of_topics=20, min_number_of_documents_per_term=20, method='SVD')

.echo_topics_interpretation(number_of_terms=10, wide_form=True)

.echo_statistical_thesaurus(terms=stemmerObj.stemWords([\"topics table\"]), wide_form=True, number_of_nearest_neighbors=12, method='cosine', echo_function=lambda x: print(x.to_string())))

*)

```

------

## References

### Articles

[AA1] Anton Antonov,

["A monad for Latent Semantic Analysis workflows"](https://mathematicaforprediction.wordpress.com/2019/09/13/a-monad-for-latent-semantic-analysis-workflows/),

(2019),

[MathematicaForPrediction at WordPress](https://mathematicaforprediction.wordpress.com).

[AA2] Anton Antonov,

["Random mandalas deconstruction in R, Python, and Mathematica"](https://mathematicaforprediction.wordpress.com/2022/03/01/random-mandala-deconstruction-in-r-python-and-mathematica/),

(2022),

[MathematicaForPrediction at WordPress](https://mathematicaforprediction.wordpress.com).

### Mathematica and R packages

[AAp1] Anton Antonov,

[Monadic Latent Semantic Analysis Mathematica package](https://github.com/antononcube/MathematicaForPrediction/blob/master/MonadicProgramming/MonadicLatentSemanticAnalysis.m),

(2017),

[MathematicaForPrediction at GitHub](https://github.com/antononcube/MathematicaForPrediction).

[AAp2] Anton Antonov,

[Latent Semantic Analysis Monad in R](https://github.com/antononcube/R-packages/tree/master/LSAMon-R),

(2019),

[R-packages at GitHub/antononcube](https://github.com/antononcube/R-packages).

### Python packages

[AAp3] Anton Antonov,

[SSparseMatrix Python package](https://pypi.org/project/SSparseMatrix),

(2021),

[PyPI](https://pypi.org).

[AAp4] Anton Antonov,

[SparseMatrixRecommender Python package](https://pypi.org/project/SparseMatrixRecommender),

(2021),

[PyPI](https://pypi.org).

[AAp5] Anton Antonov,

[RandomDataGenerators Python package](https://pypi.org/project/RandomDataGenerators),

(2021),

[PyPI](https://pypi.org).

[AAp6] Anton Antonov,

[RandomMandala Python package](https://pypi.org/project/RandomMandala),

(2021),

[PyPI](https://pypi.org).

[MZp1] Marinka Zitnik and Blaz Zupan,

[Nimfa: A Python Library for Nonnegative Matrix Factorization](https://pypi.org/project/nimfa/),

(2013-2019),

[PyPI](https://pypi.org).

[SDp1] Snowball Developers,

[SnowballStemmer Python package](https://pypi.org/project/snowballstemmer/),

(2013-2021),

[PyPI](https://pypi.org).

### Raku packages

[AAp7] Anton Antonov,

[DSL::English::LatentSemanticAnalysisWorkflows Raku package](https://github.com/antononcube/Raku-DSL-English-LatentSemanticAnalysisWorkflows),

(2018-2022),

[GitHub/antononcube](https://github.com/antononcube/Raku-DSL-English-LatentSemanticAnalysisWorkflows).

([At raku.land](https://raku.land/zef:antononcube/DSL::English::LatentSemanticAnalysisWorkflows)).

### Repositories

[AAr1] Anton Antonov,

["Random mandalas deconstruction with R, Python, and Mathematica" presentation project](https://github.com/antononcube/SimplifiedMachineLearningWorkflows-book/tree/master/Presentations/Greater-Boston-useR-Group-Meetup-2022/RandomMandalasDeconstruction),

(2022),

[SimplifiedMachineLearningWorkflows-book at GitHub/antononcube](https://github.com/antononcube/SimplifiedMachineLearningWorkflows-book).

[AAr2] Anton Antonov,

["Raku for Prediction" book project](https://github.com/antononcube/RakuForPrediction-book),

(2021-2022),

[GitHub/antononcube](https://github.com/antononcube).

### Videos

[AAv1] Anton Antonov,

["TRC 2022 Implementation of ML algorithms in Raku"](https://www.youtube.com/watch?v=efRHfjYebs4),

(2022),

[Anton A. Antonov's channel at YouTube](https://www.youtube.com/channel/UC5qMPIsJeztfARXWdIw3Xzw).

[AAv2] Anton Antonov,

["Raku for Prediction"](https://www.youtube.com/watch?v=frpCBjbQtnA),

(2021),

[The Raku Conference (TRC) at YouTube](https://www.youtube.com/channel/UCnKoF-TknjGtFIpU3Bc_jUA).

[AAv3] Anton Antonov,

["NLP Template Engine, Part 1"](https://www.youtube.com/watch?v=a6PvmZnvF9I),

(2021),

[Anton A. Antonov's channel at YouTube](https://www.youtube.com/channel/UC5qMPIsJeztfARXWdIw3Xzw).

[AAv4] Anton Antonov,

["Random Mandalas Deconstruction in R, Python, and Mathematica (Greater Boston useR Meetup, Feb 2022)"](https://www.youtube.com/watch?v=nKlcts5aGwY),

(2022),

[Anton A. Antonov's channel at YouTube](https://www.youtube.com/channel/UC5qMPIsJeztfARXWdIw3Xzw).

Raw data

{

"_id": null,

"home_page": "https://github.com/antononcube/Python-packages/tree/main/LatentSemanticAnalyzer",

"name": "LatentSemanticAnalyzer",

"maintainer": "",

"docs_url": null,

"requires_python": ">=3.7",

"maintainer_email": "",

"keywords": "sparse,matrix,sparse matrix,linear algebra,linear,algebra,lsi,latent semantic indexing,dimension,reduction,dimension reduction,svd,singular value decomposition,nnmf,nmf,non-negative matrix factorization",

"author": "Anton Antonov",

"author_email": "antononcube@posteo.net",

"download_url": "https://files.pythonhosted.org/packages/2c/e1/ca7a229bbbb189c2659fd6eab776806f166e87a02258d0066a4796f69ae0/LatentSemanticAnalyzer-0.1.2.tar.gz",

"platform": null,

"bugtrack_url": null,

"license": "",

"summary": "Latent Semantic Analysis package based on \"the standard\" Latent Semantic Indexing theory.",

"version": "0.1.2",

"project_urls": {

"Homepage": "https://github.com/antononcube/Python-packages/tree/main/LatentSemanticAnalyzer"

},

"split_keywords": [

"sparse",

"matrix",

"sparse matrix",

"linear algebra",

"linear",

"algebra",

"lsi",

"latent semantic indexing",

"dimension",

"reduction",

"dimension reduction",

"svd",

"singular value decomposition",

"nnmf",

"nmf",

"non-negative matrix factorization"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "e9ece38daa2c3b9f6866a7ee2f8d3a5986c9d346606ad79626a86a9d4a05d5fe",

"md5": "0d2651c9eda65b781cba76d655ef2b67",

"sha256": "29383bdb2db34afba25d9cb7f8ea609d308cb968e7cb546a05e82d7f66243c89"

},

"downloads": -1,

"filename": "LatentSemanticAnalyzer-0.1.2-py3-none-any.whl",

"has_sig": false,

"md5_digest": "0d2651c9eda65b781cba76d655ef2b67",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.7",

"size": 188690,

"upload_time": "2023-10-20T13:35:43",

"upload_time_iso_8601": "2023-10-20T13:35:43.424399Z",

"url": "https://files.pythonhosted.org/packages/e9/ec/e38daa2c3b9f6866a7ee2f8d3a5986c9d346606ad79626a86a9d4a05d5fe/LatentSemanticAnalyzer-0.1.2-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "2ce1ca7a229bbbb189c2659fd6eab776806f166e87a02258d0066a4796f69ae0",

"md5": "5173c179b6ab34f82b50588d0331ab3e",

"sha256": "ca32be725e4bb88ab18124c8eaf482aa88195bde143bb8344fee0aeb75c5ffbb"

},

"downloads": -1,

"filename": "LatentSemanticAnalyzer-0.1.2.tar.gz",

"has_sig": false,

"md5_digest": "5173c179b6ab34f82b50588d0331ab3e",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.7",

"size": 190206,

"upload_time": "2023-10-20T13:35:45",

"upload_time_iso_8601": "2023-10-20T13:35:45.759943Z",

"url": "https://files.pythonhosted.org/packages/2c/e1/ca7a229bbbb189c2659fd6eab776806f166e87a02258d0066a4796f69ae0/LatentSemanticAnalyzer-0.1.2.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2023-10-20 13:35:45",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "antononcube",

"github_project": "Python-packages",

"travis_ci": false,

"coveralls": false,

"github_actions": false,

"lcname": "latentsemanticanalyzer"

}