# Word Embeddings Visualization Tool

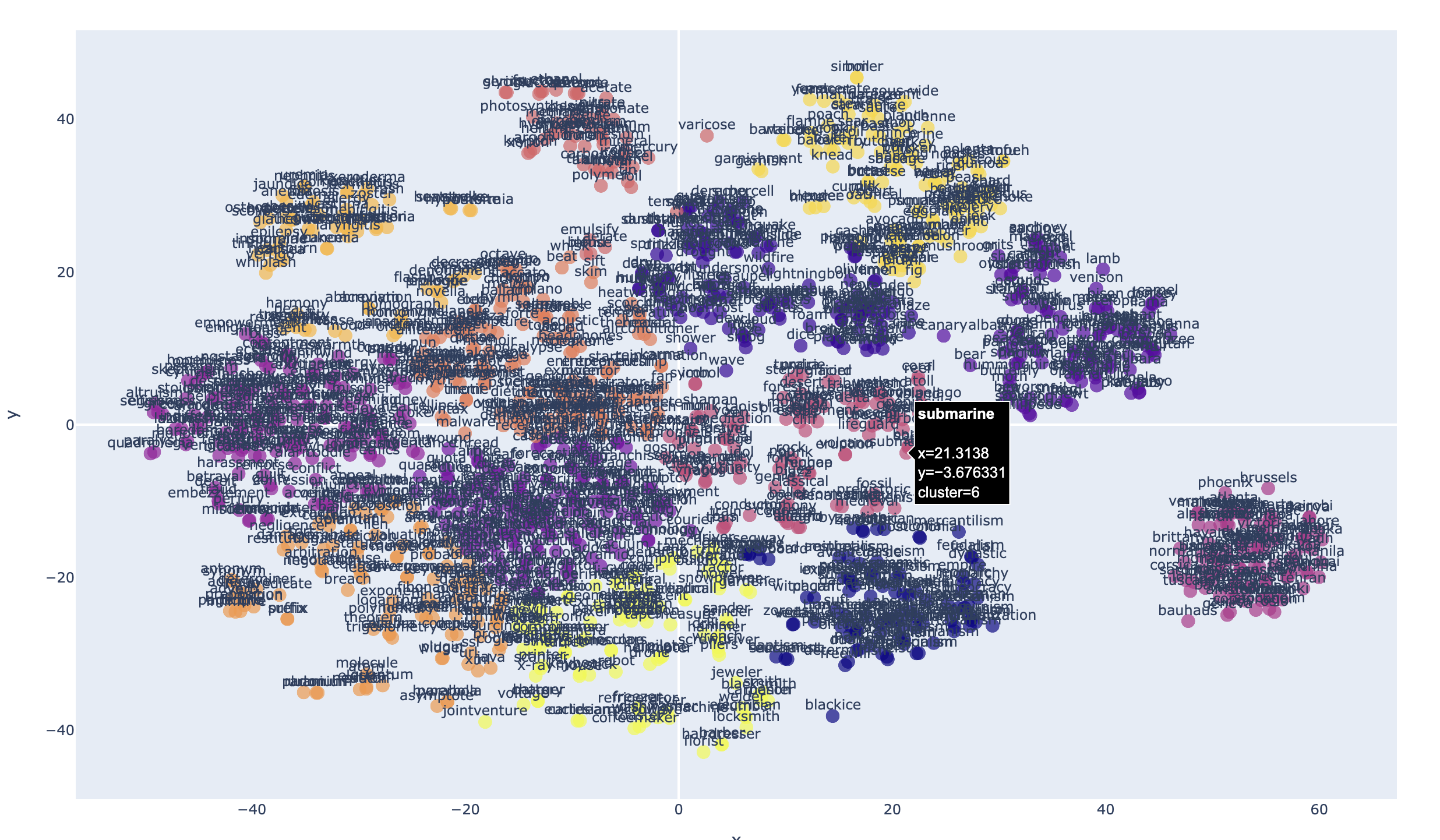

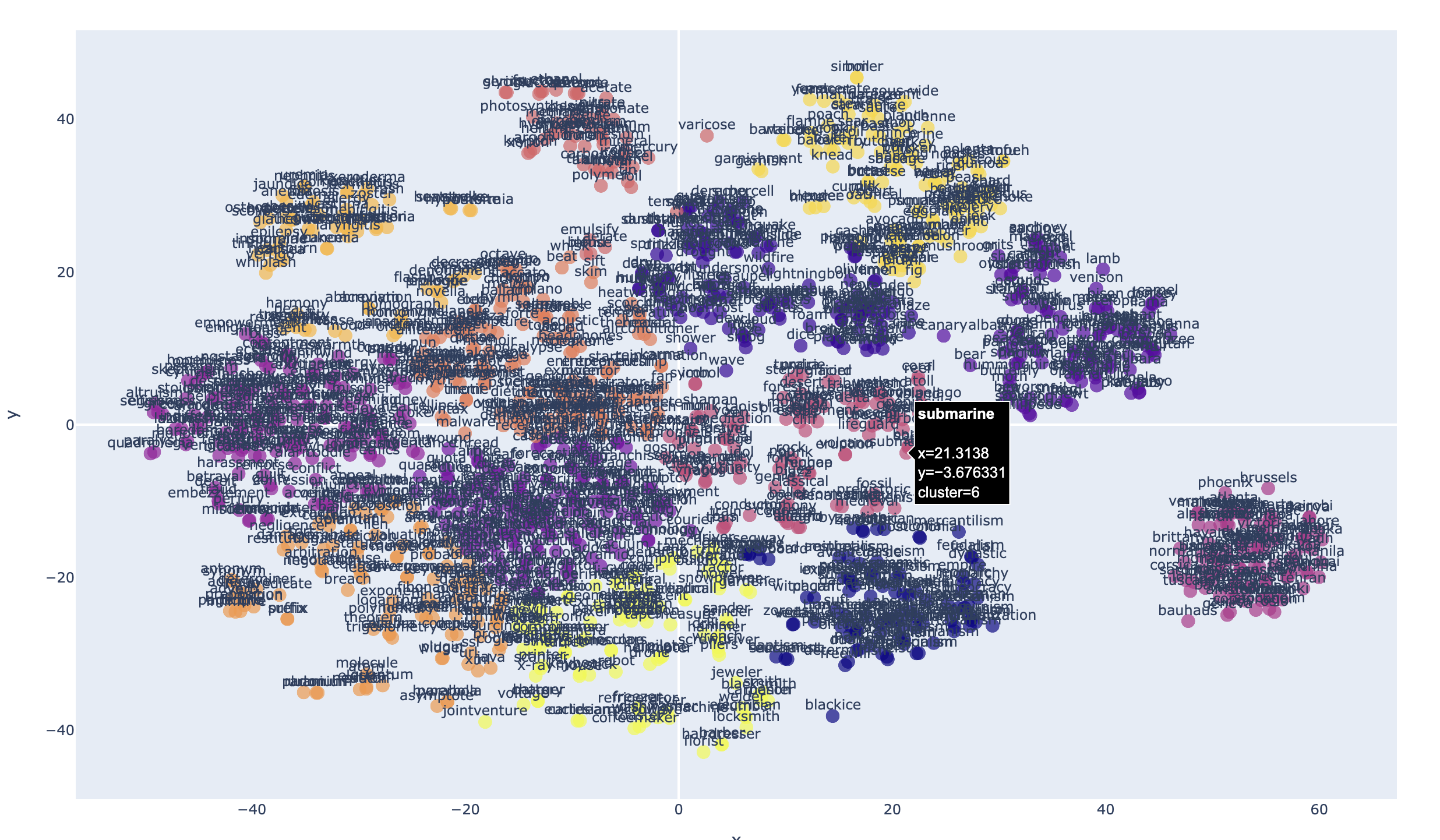

2D Plot Example:

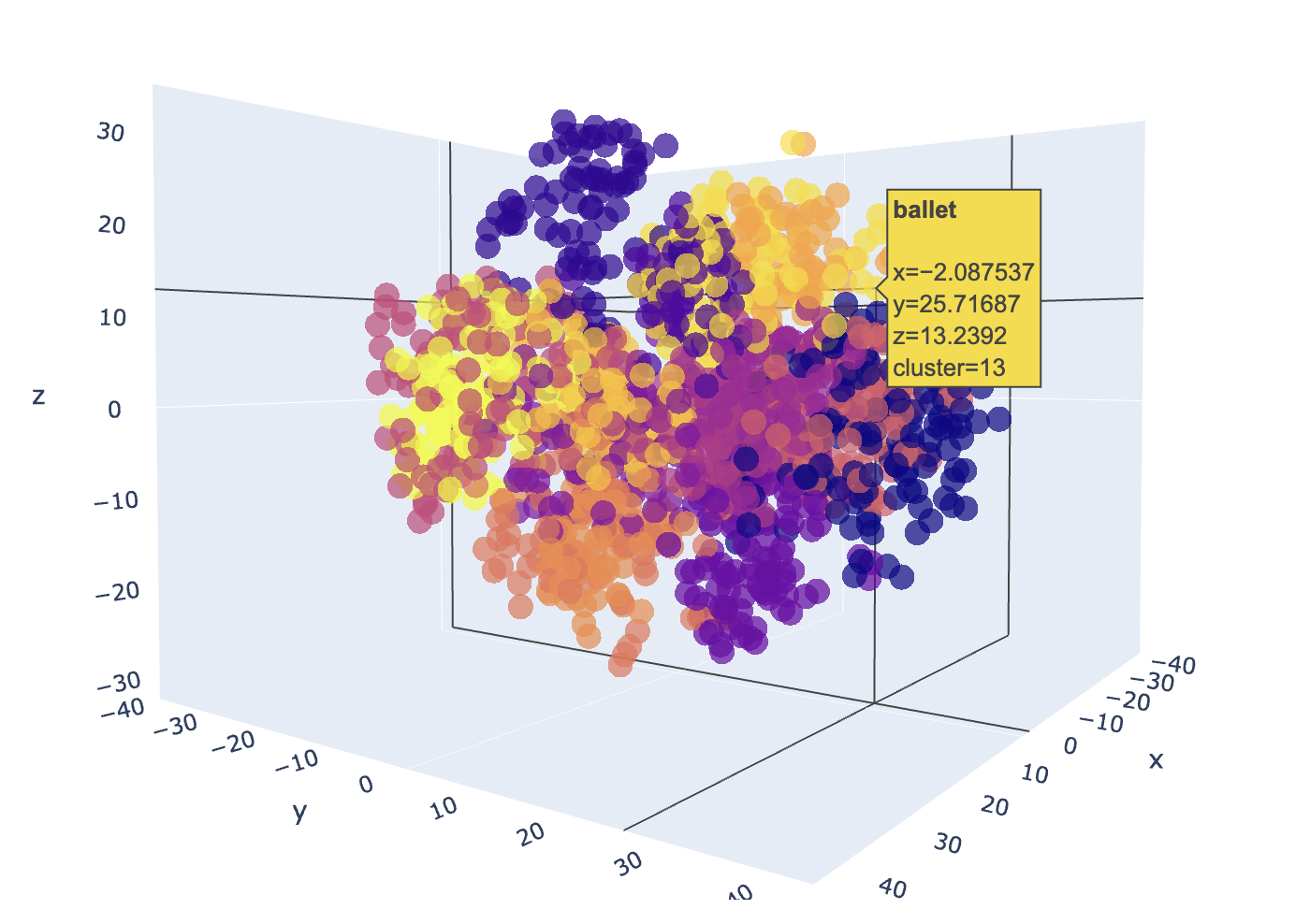

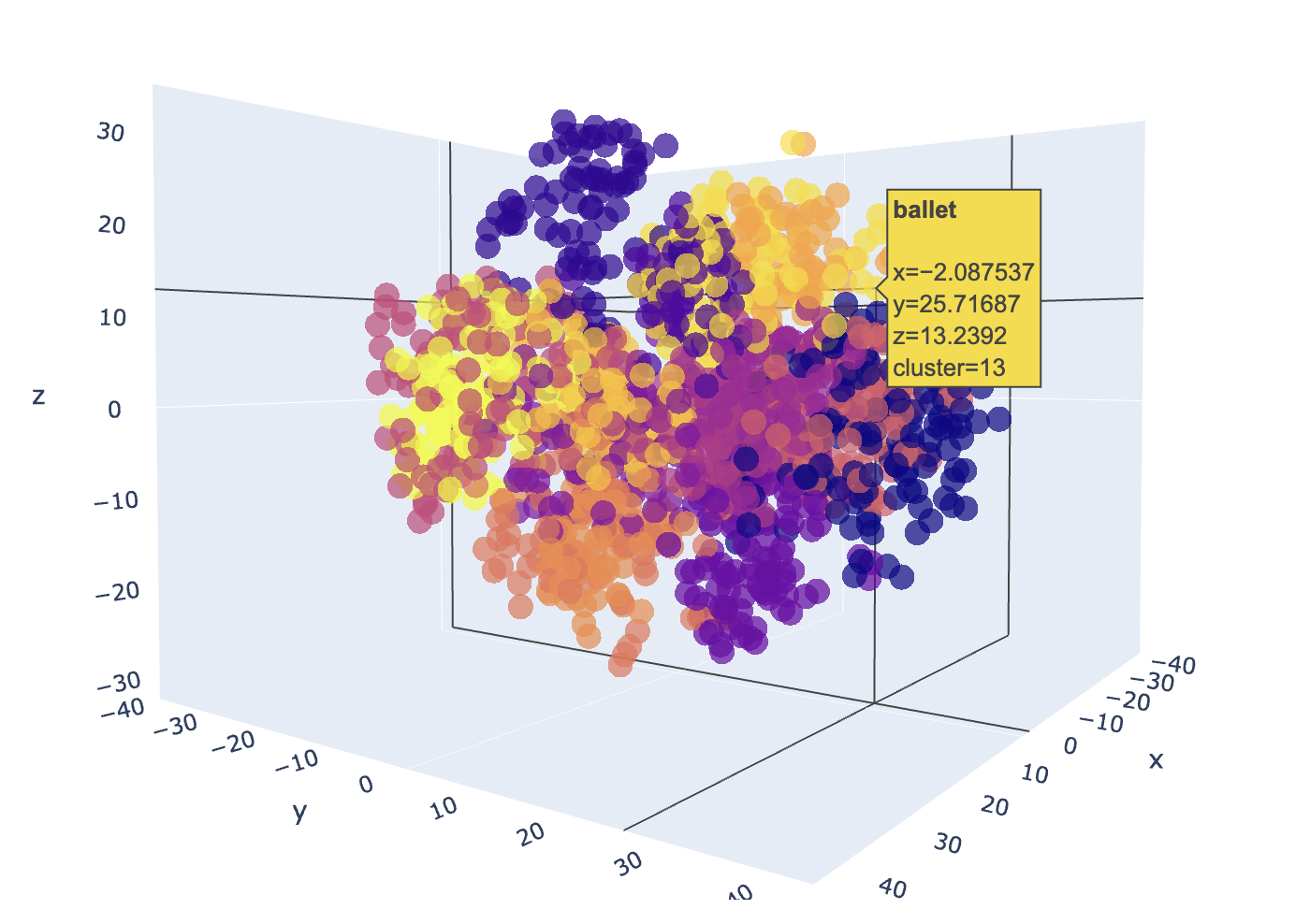

3D Plot Example:

## Description

Word embeddings map words to high-dimensional vectors. The vectors attempt to capture the semantic meaning of words and the relationships between them, so that similar or related words have similar vectors. For example, "Cat", "Kitten", "Feline", "Tiger" and "Lion" would have embedding vectors that are similar to varying degrees, but all of them would be very dissimilar to a word like "Toolbox".
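To make "similar vectors" concrete: similarity between embeddings is commonly measured with cosine similarity, the cosine of the angle between two vectors. The sketch below is illustrative only and is not part of embeddings_plot; the 4-dimensional `toy_vectors` are hypothetical values made up for the example, whereas real embeddings are learned from text and have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy vectors, invented for illustration (not real embeddings).
toy_vectors = {
    "cat":     [0.9, 0.8, 0.1, 0.0],
    "kitten":  [0.8, 0.9, 0.2, 0.0],
    "toolbox": [0.0, 0.1, 0.9, 0.8],
}

print("cat vs kitten: ", cosine_similarity(toy_vectors["cat"], toy_vectors["kitten"]))
print("cat vs toolbox:", cosine_similarity(toy_vectors["cat"], toy_vectors["toolbox"]))
```

With these made-up values, "cat" and "kitten" score close to 1.0 while "cat" and "toolbox" score much lower, which is the kind of relationship a scatter plot of real embeddings tries to make visible.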

Word2Vec embedding models commonly use 300 dimensions to capture the semantic meaning of each word. It's not possible to visualize 300 dimensions directly, but dimensionality reduction techniques can project the vectors into a 2- or 3-dimensional space that preserves much of their relationships and that we can easily visualize.
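As a rough illustration of dimensionality reduction, here is a minimal NumPy sketch of PCA, the simplest (linear) of the three methods the tool offers. It is not the tool's own implementation, and the random 300-dimensional rows are stand-ins for real word vectors. t-SNE and UMAP are non-linear methods and are not shown here.

```python
import numpy as np

def pca_project(vectors, n_components=2):
    """Project row vectors onto their top principal components (PCA via SVD)."""
    X = np.asarray(vectors, dtype=float)
    X_centered = X - X.mean(axis=0)  # PCA operates on mean-centered data
    # Rows of vt are the principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(X_centered, full_matrices=False)
    return X_centered @ vt[:n_components].T  # shape: (n_words, n_components)

# Random stand-in for 10 word vectors with 300 dimensions (not real embeddings).
rng = np.random.default_rng(0)
vectors = rng.normal(size=(10, 300))

points_2d = pca_project(vectors, n_components=2)
points_3d = pca_project(vectors, n_components=3)
print(points_2d.shape, points_3d.shape)
```

Each row of the result is one word's position in the plot; asking for 3 components instead of 2 gives coordinates for a 3D scatter plot.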

Embeddings-plot is a command-line utility that visualizes word embeddings as 2D or 3D scatter plots, using dimensionality reduction (PCA, t-SNE or UMAP) and KMeans clustering.

## Features

- Supports pretrained or self-trained embedding models in Word2Vec format

- Dimensionality reduction using PCA, t-SNE and UMAP

- Specify a number of clusters to identify in the plot

- Interactive HTML output

## Installation

### Prerequisites

- Python 3.9 or higher.

### Install via pip

embeddings_plot is published on PyPI and can be installed with pip, which makes the `embeddings-plot` command available in your environment:

```bash
pip install embeddings_plot
```
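After installing, you can confirm from Python that the package is present and that the `embeddings-plot` command is on your `PATH`. This sketch uses only the standard library (`importlib.metadata` and `shutil.which`); the helper names `installed_version` and `cli_path` are hypothetical.

```python
import shutil
from importlib import metadata

def installed_version(dist_name="embeddings_plot"):
    """Return the installed distribution's version string, or None if it is not installed."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None

def cli_path(command="embeddings-plot"):
    """Return the full path of the console command, or None if it is not on PATH."""
    return shutil.which(command)

print("embeddings_plot version:", installed_version())
print("embeddings-plot command:", cli_path())
```

If the version prints but the command is `None`, the environment's script directory is probably not on your `PATH` (for example, an inactive virtual environment).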

### Embedding model

To use this tool, you have to either train your own embedding model or use an existing pretrained model. This utility expects the models to be in word2vec format.
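The word2vec *text* format is simple: a header line with the vocabulary size and the number of dimensions, followed by one line per word containing the word and its vector values. The fastText `.vec` files listed below use this layout. The parser is a minimal illustrative sketch (`read_word2vec_text` is a hypothetical name) that handles only the text variant, not binary word2vec files. For real use, libraries such as gensim provide `KeyedVectors.load_word2vec_format`; this README does not say which loader embeddings_plot uses internally.

```python
import io

def read_word2vec_text(stream, limit=None):
    """Parse word2vec text format: a header line '<vocab_size> <dims>',
    then one line per word with the word followed by <dims> floats."""
    _vocab_size, dims = map(int, stream.readline().split())
    vectors = {}
    for i, line in enumerate(stream):
        if limit is not None and i >= limit:
            break  # optionally read only the first `limit` words
        if not line.strip():
            continue  # skip blank lines
        parts = line.rstrip().split(" ")
        word, values = parts[0], parts[1:]
        if len(values) != dims:
            raise ValueError(f"expected {dims} values for {word!r}, got {len(values)}")
        vectors[word] = [float(v) for v in values]
    return vectors

# A tiny in-memory sample in the same layout as a .vec file.
sample = io.StringIO(
    "3 4\n"
    "cat 0.9 0.8 0.1 0.0\n"
    "kitten 0.8 0.9 0.2 0.0\n"
    "toolbox 0.0 0.1 0.9 0.8\n"
)
vectors = read_word2vec_text(sample)
print(len(vectors), "words,", len(vectors["cat"]), "dimensions")
```

The `limit` parameter matters for large files: the linked models contain one to two million words, so reading only the most frequent entries is much faster when experimenting.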

#### Downloading existing pretrained models

Two ready-to-use pretrained models (300-dimensional fastText English word vectors) are:

- https://dl.fbaipublicfiles.com/fasttext/vectors-english/wiki-news-300d-1M.vec.zip

- https://dl.fbaipublicfiles.com/fasttext/vectors-english/crawl-300d-2M.vec.zip

Download one of these models and unzip it, or look for other pretrained word2vec models available on the internet.

#### Training your own model

To train your own model, you can use the provided `train_model.py` script. First, prepare the data set you want to train the model on. The data should be split into sentences, one sentence per line, in lowercase with all punctuation removed, as in the following example:

```text
the quick brown fox jumps over the lazy dog
jack and jill went up the hill to fetch a pail of water
an apple a day keeps the doctor away
to be or not to be that is the question
a stitch in time saves nine
early to bed and early to rise makes a man healthy wealthy and wise
many more sentences should follow
```
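If your raw text isn't in this shape yet, a minimal normalization step might look like the following. This is a hypothetical sketch, not the bundled `prepare_data.py`: the sentence splitting is naive (it breaks on `.`, `!` or `?` followed by whitespace), and stripping punctuation turns contractions such as "don't" into "dont".

```python
import re

def to_training_lines(text):
    """Split raw text into sentences and normalize each one:
    lowercase, punctuation removed, one sentence per returned line."""
    lines = []
    # Naive sentence split: break after ., ! or ? when followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", text):
        sentence = sentence.lower()
        sentence = re.sub(r"[^\w\s]", "", sentence)  # drop punctuation characters
        sentence = " ".join(sentence.split())        # collapse runs of whitespace
        if sentence:
            lines.append(sentence)
    return lines

raw = "The quick brown fox jumps over the lazy dog! A stitch in time saves nine."
print("\n".join(to_training_lines(raw)))
```

Writing the returned lines to a file, one per line, produces input in the format shown above.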

The provided `prepare_data.py` script can help prepare data for training. Once your input data is prepared, build your model with the `train_model.py` script. For example:

```bash
python train_model.py training_data.txt
```

With the default settings, the command above should produce `training_data_model.vec` in the current directory. To list the available training options, run the script with the `-h` flag.
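To sanity-check a trained model without loading it, you can read just the header line of the `.vec` file, which in word2vec text format holds the vocabulary size and the vector dimensions. The `default_model_path` helper is an assumption inferred from the single example above (`training_data.txt` becomes `training_data_model.vec`); check `train_model.py -h` for the actual naming and options.

```python
import tempfile
from pathlib import Path

def default_model_path(training_file):
    # ASSUMPTION: naming rule inferred from one README example
    # ('training_data.txt' -> 'training_data_model.vec' in the current directory).
    return Path(Path(training_file).stem + "_model.vec")

def read_vec_header(path):
    """Return (vocab_size, dimensions) from the first line of a word2vec text file."""
    with open(path, encoding="utf-8") as f:
        vocab_size, dims = map(int, f.readline().split())
    return vocab_size, dims

# Demo on a tiny stand-in model file written to a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    model_file = Path(tmp) / default_model_path("training_data.txt")
    model_file.write_text("2 3\nfox 0.1 0.2 0.3\ndog 0.3 0.2 0.1\n", encoding="utf-8")
    header = read_vec_header(model_file)

print(default_model_path("training_data.txt"), header)
```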

## Usage

After installing the tool and downloading or training a model, you can run it from the command line.

### Basic Command

```
embeddings-plot -m <model_path> -i <input_file> -o <output_file> --labels
```

### Parameters

- `-h`, `--help`: Show the help message and exit

- `-m`, `--model`: Path to the word embeddings model file

- `-i`, `--input`: Input text file with words to visualize

- `-o`, `--output`: Output HTML file for the visualization

- `-l`, `--labels`: (Optional) Show labels on the plot

- `-c`, `--clusters`: (Optional) Number of clusters for KMeans. Default is 5.

- `-r`, `--reduction`: (Optional) Method for dimensionality reduction (pca, tsne or umap). Default is tsne.

- `-t`, `--title`: (Optional) Title of the output HTML page

- `-d`, `--dimensions`: (Optional) Number of dimensions for the plot: 2 (for 2D) or 3 (for 3D). Default is 2.

- `-th`, `--theme`: (Optional) Color theme for the plot: "plotly", "plotly_white" or "plotly_dark". Default is plotly.
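The `--clusters` option sets k, the number of groups KMeans looks for. For intuition, here is a plain NumPy sketch of Lloyd's k-means algorithm. It is an illustration rather than the tool's code, and this README does not say whether clustering runs on the original vectors or on the reduced coordinates. The demo uses two synthetic, well-separated 2D blobs instead of real embeddings.

```python
import numpy as np

def kmeans(points, k=5, n_iter=100, seed=0):
    """Plain Lloyd's k-means. Returns (labels, centroids)."""
    X = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialize centroids with k distinct points chosen at random.
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # Assign each point to its nearest centroid (squared Euclidean distance).
        dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Move each centroid to the mean of its points; keep it if its cluster is empty.
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break  # converged: assignments no longer move the centroids
        centroids = new_centroids
    return labels, centroids

# Two synthetic blobs, far apart, so k=2 should separate them cleanly.
rng = np.random.default_rng(1)
blob_a = rng.normal(loc=0.0, scale=0.1, size=(20, 2))
blob_b = rng.normal(loc=5.0, scale=0.1, size=(20, 2))
labels, centroids = kmeans(np.vstack([blob_a, blob_b]), k=2)
print(labels)
```

In a word plot, each cluster label would typically map to a marker color, which is how groups of related words stand out.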

### Example

```bash
embeddings-plot --model crawl-300d-2M.vec --input words.txt --output embedding-plot.html --labels --clusters 13
```
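To render the same word list with several reduction methods, a small Python wrapper can assemble the documented flags. This is a sketch: `build_command` and `render_all` are hypothetical helpers, and the file names reuse the example above. Only `render_all` actually invokes `embeddings-plot` (via `subprocess.run`), so building and printing a command works even without the tool installed.

```python
import shlex
import subprocess

def build_command(model, words_file, output, reduction="tsne", dimensions=2,
                  clusters=5, labels=False, title=None):
    """Assemble an embeddings-plot argument list from the documented flags."""
    cmd = [
        "embeddings-plot",
        "--model", model,
        "--input", words_file,
        "--output", output,
        "--reduction", reduction,         # pca, tsne or umap
        "--dimensions", str(dimensions),  # 2 or 3
        "--clusters", str(clusters),      # number of KMeans clusters
    ]
    if labels:
        cmd.append("--labels")
    if title:
        cmd += ["--title", title]
    return cmd

def render_all(model, words_file, methods=("pca", "tsne", "umap"), **options):
    """Run embeddings-plot once per reduction method, writing one HTML file each."""
    for method in methods:
        cmd = build_command(model, words_file, f"embedding-plot-{method}.html",
                            reduction=method, **options)
        subprocess.run(cmd, check=True)  # raises CalledProcessError if the tool fails

# Print the README example, extended with an explicit reduction method.
print(shlex.join(build_command("crawl-300d-2M.vec", "words.txt", "embedding-plot.html",
                               reduction="umap", clusters=13, labels=True)))
```

For example, `render_all("crawl-300d-2M.vec", "words.txt", clusters=13, labels=True)` would write one HTML file per method, which makes it easy to compare how PCA, t-SNE and UMAP arrange the same words.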

Raw data

{

"_id": null,

"home_page": "https://github.com/robert-mcdermott/embeddings_plot",

"name": "embeddings_plot",

"maintainer": "",

"docs_url": null,

"requires_python": ">=3.9,<4.0",

"maintainer_email": "",

"keywords": "word2vec,nlp,embeddings",

"author": "Robert McDermott",

"author_email": "robert.c.mcdermott@gmail.com",

"download_url": "https://files.pythonhosted.org/packages/f4/69/f3175a40d3f1b3e3721a5b7c37800e3f000c4e53a48fb8038136ef42e6f3/embeddings_plot-0.1.6.tar.gz",

"platform": null,


"bugtrack_url": null,

"license": "Apache-2.0",

"summary": "A command line utility to create plots of word embeddings",

"version": "0.1.6",

"project_urls": {

"Homepage": "https://github.com/robert-mcdermott/embeddings_plot",

"Repository": "https://github.com/robert-mcdermott/embeddings_plot"

},

"split_keywords": [

"word2vec",

"nlp",

"embeddings"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "a63a72fbc0d77edf76039871e2092737a1ade4bbff6cd1e63f267ea1c43578a1",

"md5": "7b11ea962d2c0f5a916cb80e8eaec84c",

"sha256": "c96b05752479e17fdc940b9917036cd26df6587412234082f785261ee873bec2"

},

"downloads": -1,

"filename": "embeddings_plot-0.1.6-py3-none-any.whl",

"has_sig": false,

"md5_digest": "7b11ea962d2c0f5a916cb80e8eaec84c",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.9,<4.0",

"size": 9778,

"upload_time": "2023-12-14T06:14:02",

"upload_time_iso_8601": "2023-12-14T06:14:02.904240Z",

"url": "https://files.pythonhosted.org/packages/a6/3a/72fbc0d77edf76039871e2092737a1ade4bbff6cd1e63f267ea1c43578a1/embeddings_plot-0.1.6-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "f469f3175a40d3f1b3e3721a5b7c37800e3f000c4e53a48fb8038136ef42e6f3",

"md5": "6bcbed1a8d564ed52f52e1668f425520",

"sha256": "17de461d547f7e6d8647c395a2fe24a2162da148f606dbe6922ea67a74aba961"

},

"downloads": -1,

"filename": "embeddings_plot-0.1.6.tar.gz",

"has_sig": false,

"md5_digest": "6bcbed1a8d564ed52f52e1668f425520",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.9,<4.0",

"size": 8709,

"upload_time": "2023-12-14T06:14:04",

"upload_time_iso_8601": "2023-12-14T06:14:04.817769Z",

"url": "https://files.pythonhosted.org/packages/f4/69/f3175a40d3f1b3e3721a5b7c37800e3f000c4e53a48fb8038136ef42e6f3/embeddings_plot-0.1.6.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2023-12-14 06:14:04",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "robert-mcdermott",

"github_project": "embeddings_plot",

"travis_ci": false,

"coveralls": false,

"github_actions": false,

"requirements": [],

"lcname": "embeddings_plot"

}