# PromptWatch.io ... session tracking for LangChain

It enables you to:

- track all the chain executions

- track LLM Prompts and **re-play the LLM runs** with the same input parameters and model settings to tweak your prompt template

- track your costs per **project** and per **tenant** (your customer)

## Installation

```bash
pip install promptwatch
```

## Basic usage

To enable session tracking, wrap your chain executions in a `PromptWatch` block:

```python
from langchain import OpenAI, LLMChain, PromptTemplate
from promptwatch import PromptWatch

prompt_template = PromptTemplate.from_template("Finish this sentence {input}")
my_chain = LLMChain(llm=OpenAI(), prompt=prompt_template)

# Chain executions inside this block are tracked by PromptWatch
with PromptWatch(api_key="<your-api-key>") as pw:
    my_chain("The quick brown fox jumped over")
```

You can get your API key here: http://www.promptwatch.io/get-api-key (no registration needed).

You can pass it directly to the `PromptWatch` constructor or set it as the environment variable `PROMPTWATCH_API_KEY`.
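The snippet below is a minimal sketch of the two ways of supplying the key described above. `resolve_api_key` is a hypothetical helper, not part of the `promptwatch` package, and the precedence it uses (an explicit argument wins over the environment variable) is an assumption.

```python
import os
from typing import Optional


def resolve_api_key(api_key: Optional[str] = None) -> str:
    """Hypothetical helper: prefer an explicitly passed key, else read the env variable."""
    if api_key:
        return api_key
    # Assumed fallback: the PROMPTWATCH_API_KEY environment variable
    env_key = os.environ.get("PROMPTWATCH_API_KEY")
    if env_key:
        return env_key
    raise ValueError("No API key: pass api_key=... or set PROMPTWATCH_API_KEY")
```

With the variable exported in your shell (`export PROMPTWATCH_API_KEY=<your-api-key>`), you should be able to omit `api_key` from the `PromptWatch` constructor.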

## Comprehensive Chain Execution Tracking

With PromptWatch.io, you can track all chains, actions, retrieved documents, and more to gain complete visibility into your system. This makes it easy to identify issues with your prompts and quickly fix them for optimal performance.

What sets PromptWatch.io apart is its intuitive and visual interface. You can easily drill down into the chains to find the root cause of any problems and get a clear understanding of what's happening in your system.

Read more here:

[Chain tracing documentation](https://docs.promptwatch.io/docs/category/chain-tracing)
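To make the idea of drilling down into chains concrete, here is a small, generic sketch of how nested chain steps can be recorded as a tree of spans. It is an illustration under assumptions, not PromptWatch.io's internal data model: `Span`, `Tracer`, and `render` are hypothetical names, and the retriever and LLM steps are faked.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Dict, Iterator, List


@dataclass
class Span:
    """One step of a chain run (hypothetical structure)."""
    name: str
    inputs: Dict[str, Any]
    outputs: Any = None
    children: List["Span"] = field(default_factory=list)
    duration_s: float = 0.0


class Tracer:
    """Records nested spans; a span opened inside another becomes its child."""

    def __init__(self) -> None:
        self.roots: List[Span] = []
        self._stack: List[Span] = []

    @contextmanager
    def span(self, name: str, **inputs: Any) -> Iterator[Span]:
        node = Span(name=name, inputs=inputs)
        # Attach to the innermost open span, or start a new top-level run
        if self._stack:
            self._stack[-1].children.append(node)
        else:
            self.roots.append(node)
        self._stack.append(node)
        start = time.perf_counter()
        try:
            yield node
        finally:
            node.duration_s = time.perf_counter() - start
            self._stack.pop()


def render(span: Span, depth: int = 0) -> List[str]:
    """Flatten the span tree into indented lines for inspection."""
    lines = [f"{'  ' * depth}{span.name} inputs={span.inputs} outputs={span.outputs!r}"]
    for child in span.children:
        lines.extend(render(child, depth + 1))
    return lines


# Usage: a retrieval-augmented chain with faked retriever and LLM steps
tracer = Tracer()
with tracer.span("qa_chain", question="What is X?") as chain:
    with tracer.span("retriever", query="What is X?") as retriever:
        retriever.outputs = ["doc-1", "doc-2"]
    with tracer.span("llm", prompt="Answer using doc-1 and doc-2") as llm:
        llm.outputs = "X is ..."
    chain.outputs = llm.outputs

print("\n".join(render(tracer.roots[0])))
```

Keeping the inputs and outputs of every step in the tree is what lets you see, for example, whether a bad answer came from the retriever returning the wrong documents or from the prompt sent to the LLM.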

## LLM Prompt caching

Some prompts are often repeated over and over, which is both costly and slow.

With PromptWatch, you just wrap your LLM in our `CachedLLM` interface, and it will automatically reuse previously generated outputs.

Read more here:

[Prompt caching documentation](https://docs.promptwatch.io/docs/caching)
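A minimal sketch of the idea behind prompt caching, assuming an exact-match strategy: responses are stored under a hash of the prompt plus the model settings, so a repeated prompt skips the model call. `ExactMatchCache` and `fake_llm` are hypothetical; PromptWatch's actual `CachedLLM` may match prompts differently, so consult the caching documentation for its real interface.

```python
import hashlib
import json
from typing import Any, Callable, Dict


class ExactMatchCache:
    """Hypothetical exact-match prompt cache around any `llm(prompt, **settings)` callable."""

    def __init__(self, llm: Callable[..., str]) -> None:
        self.llm = llm
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str, settings: Dict[str, Any]) -> str:
        # Hash the prompt together with the model settings, so the same prompt
        # under a different temperature or model is cached separately.
        payload = json.dumps({"prompt": prompt, "settings": settings}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def __call__(self, prompt: str, **settings: Any) -> str:
        key = self._key(prompt, settings)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        result = self.llm(prompt, **settings)  # only reached on a cache miss
        self._store[key] = result
        return result


calls = []


def fake_llm(prompt: str, **settings: Any) -> str:
    # Stand-in for a real model call; records every invocation.
    calls.append((prompt, settings))
    return f"completion of: {prompt}"


cached = ExactMatchCache(fake_llm)
prompt = "Finish this sentence The quick brown fox jumped over"
cached(prompt, temperature=0.0)
cached(prompt, temperature=0.0)  # served from the cache, model not called
cached(prompt, temperature=0.7)  # different settings, model called again
print(len(calls), cached.hits, cached.misses)
```

Including the settings in the key is the important design choice here: a response generated at one temperature or with one model should not be returned for a request made under different settings.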

## LLM Prompt Template Tweaking

Tweaking prompt templates to find the optimal variation can be a time-consuming and challenging process, especially when dealing with multi-stage LLM chains. Fortunately, PromptWatch.io can help simplify the process!

With PromptWatch.io, you can easily experiment with different prompt variants by replaying any given LLM chain with the exact same inputs used in real scenarios. This allows you to fine-tune your prompts until you find the variation that works best for your needs.

Read more here:

[Prompt tweaking documentation](https://docs.promptwatch.io/docs/prompt_tweaking)
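The replay idea can be sketched in plain Python: keep the inputs recorded from real runs fixed and render them through each template variant, so the only thing that changes between runs is the prompt. The recorded inputs, variant names, and `echo_llm` stand-in are hypothetical, and the rendering uses plain `str.format` rather than any PromptWatch API.

```python
from typing import Callable, Dict, List

# Hypothetical inputs, standing in for the ones captured from real chain runs
recorded_inputs: List[Dict[str, str]] = [
    {"input": "The quick brown fox jumped over"},
    {"input": "To be or not to be"},
]

# Hypothetical template variants to compare
variants: Dict[str, str] = {
    "v1": "Finish this sentence {input}",
    "v2": "Complete the following sentence with a short clause: {input}",
}


def echo_llm(prompt: str) -> str:
    # Deterministic stand-in for a model called with fixed settings
    return f"<completion for: {prompt}>"


def replay(template: str, inputs: List[Dict[str, str]], llm: Callable[[str], str]) -> List[str]:
    # Render every recorded input through one template variant and collect the outputs
    return [llm(template.format(**row)) for row in inputs]


results = {name: replay(tpl, recorded_inputs, echo_llm) for name, tpl in variants.items()}
for name, outputs in results.items():
    print(name, outputs)
```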

## Keep Track of Your Prompt Template Changes

Making changes to your prompt templates can be a delicate process, and it's not always easy to know what impact those changes will have on your system. Version control systems like Git are great for tracking code changes, but they're not always the best solution for tracking prompt changes.

Read more here:

[Prompt template versioning documentation](https://docs.promptwatch.io/docs/prompt_template_versioning)

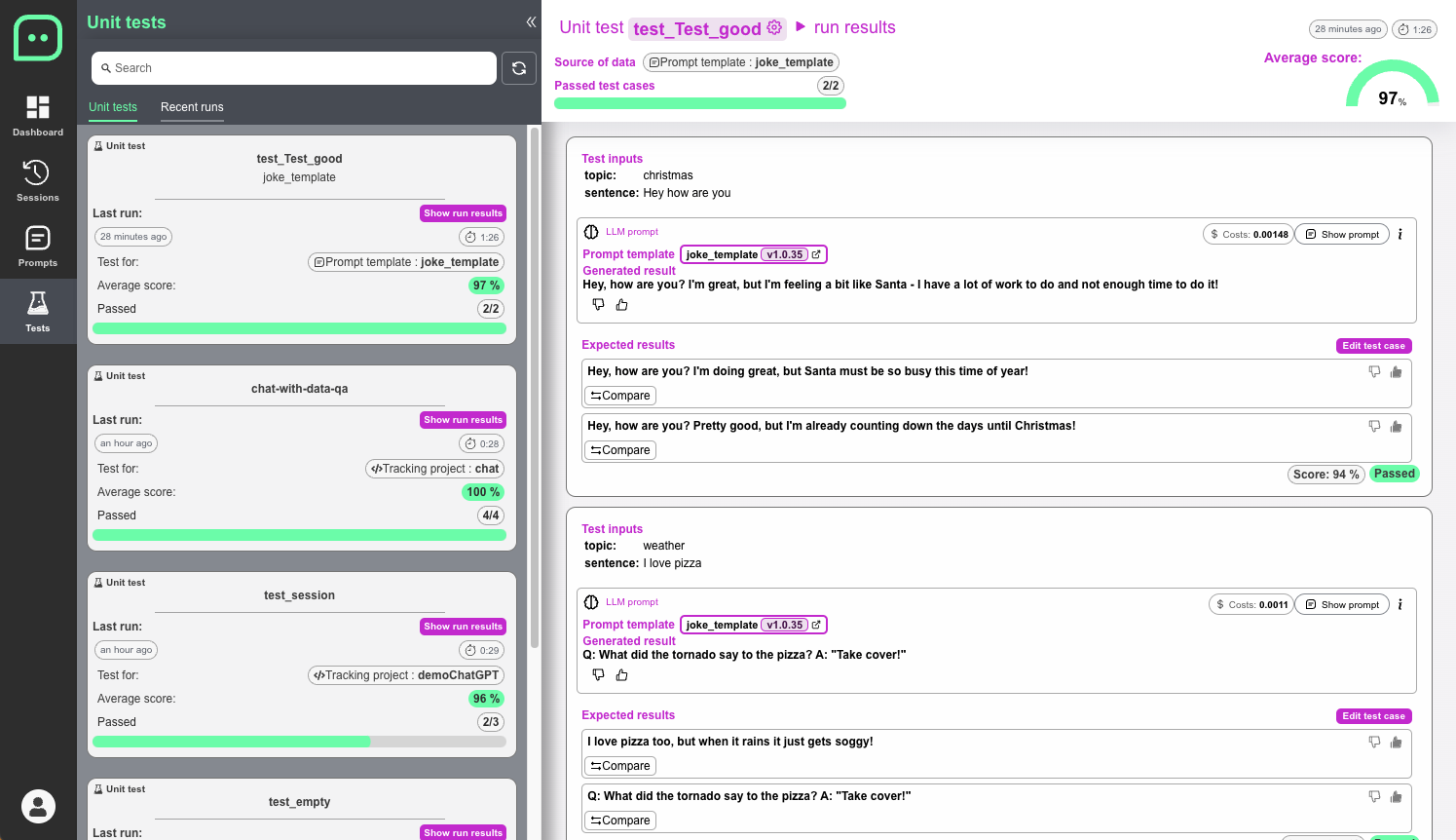

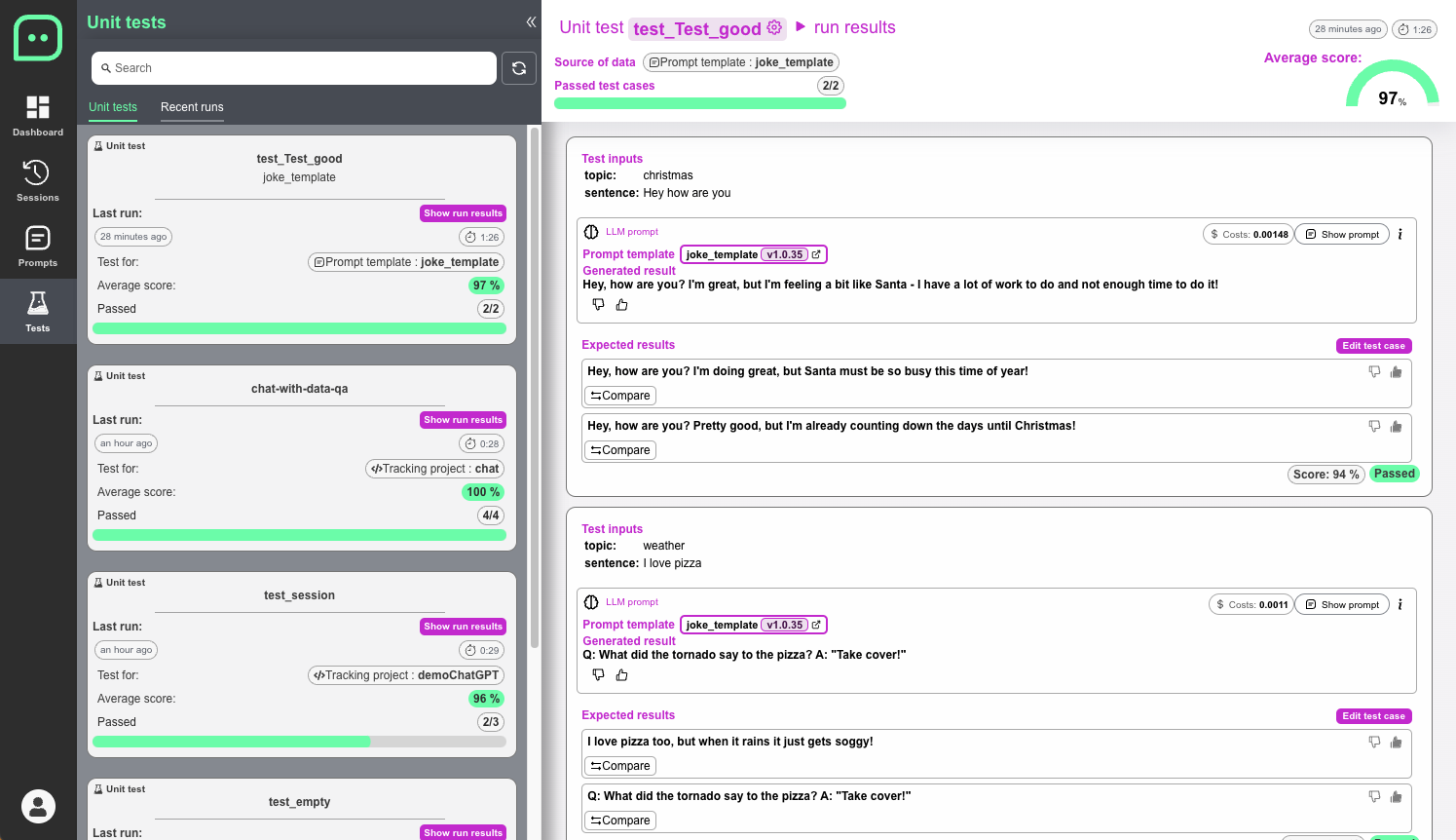
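One simple way to track template changes outside Git is to fingerprint each template's text and record a new version only when the fingerprint changes. The `TemplateRegistry` class below is a hypothetical sketch of that approach, not PromptWatch.io's versioning mechanism.

```python
import hashlib
from typing import Dict, List, Tuple


class TemplateRegistry:
    """Hypothetical sketch: keep a per-template history of distinct versions."""

    def __init__(self) -> None:
        # template name -> list of (fingerprint, template text), oldest first
        self.history: Dict[str, List[Tuple[str, str]]] = {}

    @staticmethod
    def fingerprint(template: str) -> str:
        # Short content hash; identical text always yields the same id
        return hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]

    def register(self, name: str, template: str) -> str:
        versions = self.history.setdefault(name, [])
        fp = self.fingerprint(template)
        # Record a new version only if the text differs from the latest one
        if not versions or versions[-1][0] != fp:
            versions.append((fp, template))
        return fp


registry = TemplateRegistry()
registry.register("finish_sentence", "Finish this sentence {input}")
registry.register("finish_sentence", "Finish this sentence {input}")  # unchanged: no new version
registry.register("finish_sentence", "Finish this sentence: {input}")  # edited: new version
for fp, text in registry.history["finish_sentence"]:
    print(fp, text)
```

Because the fingerprint is derived from the text itself, each recorded session can be tagged with the id of the template version that produced it.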

## Unit testing

Unit tests help you understand the impact that changes to your prompt templates and your code have on representative session examples.

Read more here:

[Unit tests documentation](https://docs.promptwatch.io/docs/category/unit-testing)
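As a sketch of the kind of check such tests perform, the example below runs a prompt builder over a few representative examples and asserts a simple property of each output, using the standard-library `unittest` module. The examples, `build_prompt`, and the deterministic `fake_chain` stand-in are hypothetical; PromptWatch's own unit-testing API is described in the documentation linked above.

```python
import unittest
from typing import Dict, List

# Hypothetical representative examples, e.g. exported from recorded sessions
EXAMPLES: List[Dict[str, str]] = [
    {"input": "The quick brown fox jumped over", "must_contain": "fox"},
    {"input": "To be or not to be", "must_contain": "be"},
]


def build_prompt(user_input: str) -> str:
    # The prompt template under test; edit it and re-run the tests
    return f"Finish this sentence {user_input}"


def fake_chain(user_input: str) -> str:
    # Deterministic stand-in for a real LLM chain
    return build_prompt(user_input) + " ..."


class PromptRegressionTest(unittest.TestCase):
    def test_representative_examples(self) -> None:
        for example in EXAMPLES:
            # subTest reports each failing example separately
            with self.subTest(input=example["input"]):
                output = fake_chain(example["input"])
                self.assertIn(example["must_contain"], output)
```

Saved as, for example, `test_prompts.py`, it can be run with `python -m unittest test_prompts`.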
