| Field | Value |
| --- | --- |
| Name | sibila |
| Version | 0.4.5 |
| home_page | None |
| Summary | Structured queries from local or online LLM models |
| upload_time | 2024-06-21 16:11:02 |
| maintainer | None |
| docs_url | None |
| author | None |
| requires_python | >=3.9 |
| license | MIT |
| keywords | llama.cpp, ai, transformers, gpt, llm |
| requirements | No requirements were recorded. |
| Travis-CI | No Travis. |
| coveralls test coverage | No coveralls. |

# Sibila

Extract structured data from remote or local LLM models. Predictable output is important for serious use of LLMs.

- Query structured data into Pydantic objects, dataclasses or simple types.

- Access remote models from OpenAI, Anthropic, Mistral AI and other providers.

- Use vision models like GPT-4o to extract structured data from images.

- Run local models like Llama-3, Phi-3, OpenChat or any other GGUF file model.

- Sibila is also a general-purpose model access library that can generate plain text or free JSON results, with the same API for local and remote models.

No matter how well you craft a prompt begging a model for the format you need, it can always respond with something else. Extracting structured data can be a big step toward getting predictable behavior from your models.
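To make the problem concrete, here is a minimal sketch of the manual checking that free-form replies would otherwise require. It is not how Sibila works internally: `parse_reply` and the two-field `Info` dataclass are hypothetical names used only for illustration, and only the Python standard library is assumed.

``` python
import json
from dataclasses import dataclass, fields


@dataclass
class Info:
    event_year: int
    first_name: str


def parse_reply(reply: str) -> Info:
    """Turn a raw model reply into Info, or raise ValueError if it does not fit."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError as err:
        # The model answered in prose, or produced malformed JSON.
        raise ValueError(f"reply is not valid JSON: {err}") from err
    if not isinstance(data, dict):
        raise ValueError("reply is not a JSON object")

    values = {}
    for f in fields(Info):
        if f.name not in data:
            raise ValueError(f"missing field: {f.name}")
        if not isinstance(data[f.name], f.type):
            raise ValueError(f"field {f.name} is not of type {f.type.__name__}")
        values[f.name] = data[f.name]
    return Info(**values)
```

A well-formed reply such as `'{"event_year": 1969, "first_name": "Neil"}'` parses cleanly, while a chatty reply like `"Sure! It was Neil Armstrong."` raises `ValueError`. Structured extraction aims to remove the need for this kind of defensive code.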

See [What can you do with Sibila?](https://jndiogo.github.io/sibila/what/)

## Structured data

To extract structured data, using a local model:

``` python
from sibila import Models
from pydantic import BaseModel

class Info(BaseModel):
    event_year: int
    first_name: str
    last_name: str
    age_at_the_time: int
    nationality: str

model = Models.create("llamacpp:openchat")

model.extract(Info, "Who was the first man in the moon?")
```

Returns an instance of class Info, created from the model's output:

``` python
Info(event_year=1969,
     first_name='Neil',
     last_name='Armstrong',
     age_at_the_time=38,
     nationality='American')
```

Or to use a remote model like OpenAI's GPT-4, we would simply replace the model's name:

``` python
model = Models.create("openai:gpt-4")

model.extract(Info, "Who was the first man in the moon?")
```

If Pydantic BaseModel objects are too much for your project, Sibila supports similar functionality with Python dataclasses. It also includes asynchronous access to remote models.
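As a rough sketch of the dataclass route, the `Info` model from the first example could be declared as a plain dataclass instead. This assumes `extract()` accepts a dataclass type in the same position as a Pydantic model, which the paragraph above suggests but does not spell out. `first_man_on_the_moon` is a hypothetical helper, and the Sibila import is deferred so that the dataclass itself carries no dependency.

``` python
from dataclasses import dataclass


@dataclass
class Info:
    event_year: int
    first_name: str
    last_name: str
    age_at_the_time: int
    nationality: str


def first_man_on_the_moon(model_name: str = "llamacpp:openchat") -> Info:
    """Hypothetical helper: query a model and return the answer as an Info dataclass."""
    # Imported here so that defining Info does not require Sibila to be installed.
    from sibila import Models

    model = Models.create(model_name)
    # Assumption: a dataclass type is passed exactly like a BaseModel subclass.
    return model.extract(Info, "Who was the first man in the moon?")
```

Calling `first_man_on_the_moon()` needs a configured local model, or a remote model name such as `"openai:gpt-4"` with the matching credentials.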

## Vision models

Sibila supports image input, alongside text prompts. For example, to extract the fields from a receipt in a photo:

``` python
from sibila import Models
from pydantic import BaseModel, Field

model = Models.create("openai:gpt-4o")

class ReceiptLine(BaseModel):
    """Receipt line data"""
    description: str
    cost: float

class Receipt(BaseModel):
    """Receipt information"""
    total: float = Field(description="Total value")
    lines: list[ReceiptLine] = Field(description="List of lines of paid items")

# The query is a tuple: the text prompt followed by the image URL.
info = model.extract(Receipt,
                     ("Extract receipt information.",
                      "https://upload.wikimedia.org/wikipedia/commons/6/6a/Receipts_in_Italy_13.jpg"))
info
```

Returns receipt fields structured in a Pydantic object:

```
Receipt(total=5.88,
        lines=[ReceiptLine(description='BIS BORSE TERM.S', cost=3.9),
               ReceiptLine(description='GHIACCIO 2X400 G', cost=0.99),
               ReceiptLine(description='GHIACCIO 2X400 G', cost=0.99)])
```

Another example - extracting the most important elements in a photo:

``` python
photo = "https://upload.wikimedia.org/wikipedia/commons/thumb/3/32/Hohenloher_Freilandmuseum_-_Baugruppe_Hohenloher_Dorf_-_Bauerngarten_-_Ansicht_von_Osten_im_Juni.jpg/640px-Hohenloher_Freilandmuseum_-_Baugruppe_Hohenloher_Dorf_-_Bauerngarten_-_Ansicht_von_Osten_im_Juni.jpg"

model.extract(list[str],
              ("Extract up to five of the most important elements in this photo.",
               photo))
```

Returns a list of five strings:

```
['House with red roof and beige walls',
 'Large tree with green leaves',
 'Garden with various plants and flowers',
 'Clear blue sky',
 'Wooden fence']
```

Local vision models based on llama.cpp/llava can also be used.

⭐ Like our work? [Give us a star!](https://github.com/jndiogo/sibila)

## Docs

[The docs](https://jndiogo.github.io/sibila/) explain the main concepts and include examples and an API reference.

## Installation

Sibila can be installed from PyPI with:

```
pip install -U sibila
```

See [Getting started](https://jndiogo.github.io/sibila/installing/) for more information.
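After installing, a quick sanity check can confirm that the package is visible to the interpreter and that the Python version meets the `>=3.9` requirement listed in the package metadata. This is only a sketch using the standard library; `installed_version` and `python_is_supported` are hypothetical helper names, not part of Sibila.

``` python
import sys
from importlib import metadata
from typing import Optional, Tuple


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def python_is_supported(minimum: Tuple[int, int] = (3, 9)) -> bool:
    """Check the running interpreter against a minimum (major, minor) version."""
    return sys.version_info >= minimum


if __name__ == "__main__":
    print("Python supported:", python_is_supported())
    print("sibila version:", installed_version("sibila") or "not installed")
```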

## Examples

The [Examples](https://jndiogo.github.io/sibila/examples/) show what you can do with local or remote models in Sibila: structured data extraction, classification, summarization, etc.

## License

This project is licensed under the MIT License - see the [LICENSE](https://github.com/jndiogo/sibila/blob/main/LICENSE) file for details.

## Acknowledgements

Sibila wouldn't be possible without the help of great software and people:

- [llama.cpp](https://github.com/ggerganov/llama.cpp)

- [llama-cpp-python](https://github.com/abetlen/llama-cpp-python)

- [Hugging Face model hub](https://huggingface.co/) and [TheBloke (Tom Jobbins)](https://huggingface.co/TheBloke)

Thank you!

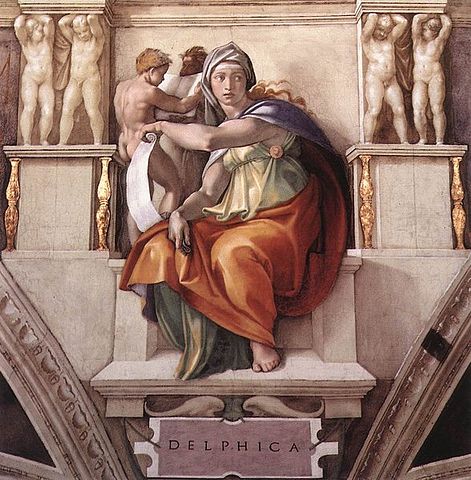

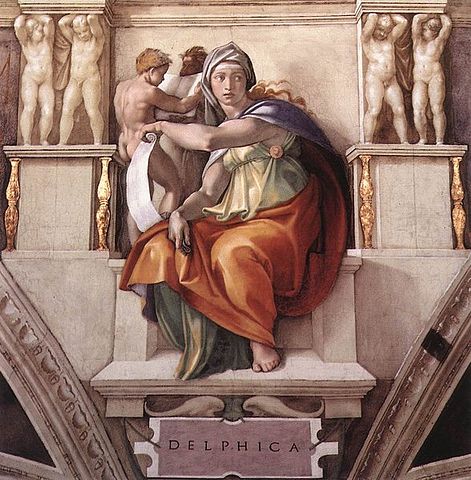

## Sibila?

Sibila is the Portuguese word for Sibyl. [The Sibyls](https://en.wikipedia.org/wiki/Sibyl) were wise oracular women in ancient Greece. Their mysterious words puzzled people throughout the centuries, providing insight or prophetic predictions, "uttering things not to be laughed at".

Michelangelo's Delphic Sibyl, on the Sistine Chapel ceiling.

Raw data

{

"_id": null,

"home_page": null,

"name": "sibila",

"maintainer": null,

"docs_url": null,

"requires_python": ">=3.9",

"maintainer_email": null,

"keywords": "llama.cpp, AI, Transformers, GPT, LLM",

"author": null,

"author_email": "Jorge Diogo <jndiogo@gmail.com>",

"download_url": "https://files.pythonhosted.org/packages/b2/02/a25c788e08f926a9d4a441c2a1c605316581314f4f53d958f95a1b6c3001/sibila-0.4.5.tar.gz",

"platform": null,

"bugtrack_url": null,

"license": "MIT",

"summary": "Structured queries from local or online LLM models",

"version": "0.4.5",

"project_urls": {

"Documentation": "https://jndiogo.github.io/sibila",

"Homepage": "https://github.com/jndiogo/sibila",

"Issues": "https://github.com/jndiogo/sibila/issues"

},

"split_keywords": [

"llama.cpp",

" ai",

" transformers",

" gpt",

" llm"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "389678b1d2e4b72faeb5fed34acd3beaa113cf7f214598d8d940dce934e38d25",

"md5": "341e4441b5aa4f004b11fe77da717207",

"sha256": "9072f74ce7d07a0c4fe67a9ee1e41c96b2417c3ec5b34b6196ddaf4ba16f0363"

},

"downloads": -1,

"filename": "sibila-0.4.5-py3-none-any.whl",

"has_sig": false,

"md5_digest": "341e4441b5aa4f004b11fe77da717207",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.9",

"size": 97266,

"upload_time": "2024-06-21T16:11:00",

"upload_time_iso_8601": "2024-06-21T16:11:00.513293Z",

"url": "https://files.pythonhosted.org/packages/38/96/78b1d2e4b72faeb5fed34acd3beaa113cf7f214598d8d940dce934e38d25/sibila-0.4.5-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "b202a25c788e08f926a9d4a441c2a1c605316581314f4f53d958f95a1b6c3001",

"md5": "dd4288a5cd462f00ce585ae324c3f886",

"sha256": "9c1d1cb78f64ddc48797040cc912fc575344851936c1ca501b66ef76d7d1f823"

},

"downloads": -1,

"filename": "sibila-0.4.5.tar.gz",

"has_sig": false,

"md5_digest": "dd4288a5cd462f00ce585ae324c3f886",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.9",

"size": 169094,

"upload_time": "2024-06-21T16:11:02",

"upload_time_iso_8601": "2024-06-21T16:11:02.562647Z",

"url": "https://files.pythonhosted.org/packages/b2/02/a25c788e08f926a9d4a441c2a1c605316581314f4f53d958f95a1b6c3001/sibila-0.4.5.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-06-21 16:11:02",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "jndiogo",

"github_project": "sibila",

"travis_ci": false,

"coveralls": false,

"github_actions": false,

"lcname": "sibila"

}
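The `sha256` digests in the raw data above can be used to check a downloaded file before installing it. The following is a minimal sketch with the standard library only; `sha256_of_file` and `matches_digest` are hypothetical helper names, and the file is assumed to have been downloaded already to a local path.

``` python
import hashlib


def sha256_of_file(path: str, chunk_size: int = 65536) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        # iter() with a sentinel stops when read() returns an empty bytes object.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_digest(path: str, expected_hex: str) -> bool:
    """Compare a file's SHA-256 digest with an expected hex string, ignoring case."""
    return sha256_of_file(path) == expected_hex.strip().lower()
```

For the wheel listed above, the call would be `matches_digest("sibila-0.4.5-py3-none-any.whl", "9072f74ce7d07a0c4fe67a9ee1e41c96b2417c3ec5b34b6196ddaf4ba16f0363")`, assuming the file sits in the current directory.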