# Tanuki <span style="font-family:Papyrus; font-size:2em;">🦝</span>

Easily build LLM-powered apps that get cheaper and faster over time.

---

## Release

[27/11] Renamed MonkeyPatch to Tanuki; support for [embeddings](https://github.com/monkeypatch/tanuki.py/blob/update_docs/docs/embeddings_support.md) and [function configurability](https://github.com/monkeypatch/tanuki.py/blob/update_docs/docs/function_configurability.md) is released!

* Use embeddings to integrate Tanuki with downstream RAG implementations using the OpenAI Ada-2 model.

* Function configurability lets you configure Tanuki function executions to skip certain built-in aspects (finetuning, data-storage communications) for improved latency and serverless integrations.

Join us on [Discord](https://discord.gg/uUzX5DYctk)

## Contents

<!-- TOC start (generated with https://github.com/derlin/bitdowntoc) -->

* [Introduction](#introduction)

* [Features](#features)

* [Installation and Getting Started](#installation-and-getting-started)

* [How It Works](#how-it-works)

* [Typed Outputs](#typed-outputs)

* [Test-Driven Alignment](#test-driven-alignment)

* [Scaling and Finetuning](#scaling-and-finetuning)

* [Frequently Asked Questions](#frequently-asked-questions)

* [Simple ToDo List App](#simple-todo-list-app)

<!-- TOC end -->

<!-- TOC --><a name="introduction"></a>

## Introduction

Tanuki is a way to easily call an LLM in place of the function body in Python, with the same parameters and output that you would expect from a function implemented by hand.

These LLM-powered functions are well-typed, reliable, stateless, and production-ready to be dropped into your app seamlessly. Rather than endless prompt-wrangling and nasty surprises, these LLM-powered functions and applications behave like traditional functions with proper error handling.

Lastly, the more you use Tanuki functions, the cheaper and faster they get (up to 9-10x!) through automatic model distillation.

```python
import tanuki

# TypedInput and TypedOutput stand for your own input and output types.
@tanuki.patch
def some_function(input: TypedInput) -> TypedOutput:
    """(Optional) Include the description of how your function will be used."""

@tanuki.align
def test_some_function(example_typed_input: TypedInput,
                       example_typed_output: TypedOutput):
    assert some_function(example_typed_input) == example_typed_output
```

<!-- TOC --><a name="features"></a>

## Features

- **Easy and seamless integration** - Add LLM augmented functions to any workflow within seconds. Decorate a function stub with `@tanuki.patch` and optionally add type hints and docstrings to guide the execution. That’s it.

- **Type aware** - Ensure that the outputs of the LLM adhere to the type constraints of the function (Python Base types, Pydantic classes, Literals, Generics etc) to guard against bugs or unexpected side-effects of using LLMs.

- **RAG support** - Seamlessly get embedding outputs for downstream RAG (Retrieval Augmented Generation) implementations. Output embeddings can then be easily stored and used for relevant document retrieval to reduce cost & latency and improve performance on long-form content.

- **Aligned outputs** - LLMs are unreliable, which makes them difficult to use in place of classically programmed functions. Using simple assert statements in a function decorated with `@tanuki.align`, you can align the behaviour of your patched function to what you expect.

- **Lower cost and latency** - Achieve up to 90% lower cost and 80% lower latency with increased usage. The package will take care of model training, MLOps and DataOps efforts to improve LLM capabilities through distillation.

- **Batteries included** - No remote dependencies other than OpenAI.

<!-- TOC --><a name="installation-and-getting-started"></a>

## Installation and Getting Started

<!-- TOC --><a name="installation"></a>

### Installation

```

pip install tanuki.py

```

or with Poetry

```

poetry add tanuki.py

```

Set your OpenAI key using:

```

export OPENAI_API_KEY=sk-...

```

<!-- TOC --><a name="getting-started"></a>

### Getting Started

To get started:

1. Create a Python function stub decorated with `@tanuki.patch` including type hints and a docstring.

2. (Optional) Create another function decorated with `@tanuki.align` containing normal `assert` statements declaring the expected behaviour of your patched function with different inputs.

The patched function can now be called as normal in the rest of your code.

To add functional alignment, the functions annotated with `align` must also be called if:

- It is the first time you call the patched function (including after any update to the function signature, i.e. the docstring, input arguments, input type hints, naming or the output type hint)

- You have made changes to your assert statements.

Here is what it could look like for a simple classification function:

```python
from typing import Literal, Optional

import tanuki

@tanuki.patch
def classify_sentiment(msg: str) -> Optional[Literal['Good', 'Bad']]:
    """Classifies a message from the user into Good, Bad or None."""

@tanuki.align
def align_classify_sentiment():
    assert classify_sentiment("I love you") == 'Good'
    assert classify_sentiment("I hate you") == 'Bad'
    assert not classify_sentiment("People from Phoenix are called Phoenicians")

if __name__ == "__main__":
    align_classify_sentiment()
    print(classify_sentiment("I like you"))  # Good
    print(classify_sentiment("Apples might be red"))  # None
```

See [here](https://github.com/monkeypatch/tanuki.py/blob/update_docs/docs/function_configurability.md) for configuration options for patched Tanuki functions.

<!-- TOC --><a name="how-it-works"></a>

## How It Works

When you call a tanuki-patched function during development, an LLM in an n-shot configuration is invoked to generate the typed response.

The number of examples used is dependent on the number of align statements supplied in functions annotated with the align decorator.
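As a rough illustration of what an n-shot configuration means, the sketch below assembles a prompt from a function description and a list of registered examples. The helper `build_few_shot_prompt` and the prompt layout are assumptions made for this example; they are not Tanuki's actual prompt format.

```python
from typing import List, Tuple


def build_few_shot_prompt(description: str,
                          examples: List[Tuple[str, str]],
                          new_input: str) -> str:
    """Assemble an n-shot prompt: one Input/Output pair per registered example.

    Hypothetical helper for illustration only; Tanuki's real prompt differs.
    """
    lines = [f"Task: {description}"]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
    # The final, unanswered input is what the model is asked to complete.
    lines.append(f"Input: {new_input}")
    lines.append("Output:")
    return "\n".join(lines)


# Two align statements give a 2-shot prompt.
examples = [("I love you", "Good"), ("I hate you", "Bad")]
prompt = build_few_shot_prompt(
    "Classifies a message from the user into Good, Bad or None.",
    examples,
    "I like you",
)
print(prompt)
```

Adding more align statements simply adds more Input/Output pairs ahead of the final input.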

The response will be post-processed and the supplied output type will be programmatically instantiated ensuring that the correct type is returned.
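To make the post-processing step more concrete, here is a minimal sketch of turning raw model text into a value of type `Optional[Literal['Good', 'Bad']]` using only the standard library. The function `parse_sentiment` is hypothetical and only approximates the idea; it is not how Tanuki instantiates types internally.

```python
from typing import Literal, Optional, get_args

Sentiment = Literal["Good", "Bad"]


def parse_sentiment(raw: str) -> Optional[Sentiment]:
    """Turn raw model text into Optional[Literal['Good', 'Bad']].

    Hypothetical post-processing step; raises ValueError on anything else.
    """
    # Drop surrounding whitespace and any quotes the model may have added.
    cleaned = raw.strip().strip("'\"")
    if cleaned in ("None", "null", ""):
        return None
    if cleaned in get_args(Sentiment):
        return cleaned  # type: ignore[return-value]
    raise ValueError(f"{raw!r} is not one of {get_args(Sentiment)} or None")


print(parse_sentiment(' "Good" '))
print(parse_sentiment("None"))
```

Anything outside the declared type surfaces as an exception instead of flowing silently into the rest of the program.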

This response can be passed through to the rest of your app / stored in the DB / displayed to the user.

Make sure to execute all align functions at least once before running your patched functions to ensure that the expected behaviour is registered. The registered examples are cached on disk for future reference.

The inputs and outputs of the function will be stored during execution as future training data.

As your data volume increases, smaller and smaller models will be distilled using the outputs of larger models.

The smaller models will capture the desired behaviour and performance at a lower computational cost, lower latency and without any MLOps effort.

<!-- TOC --><a name="typed-outputs"></a>

## Typed Outputs

LLM API outputs are typically in natural language. In many instances, it is preferable to constrain the format of the output so that it integrates better into workflows.

A core concept of Tanuki is the support for typed parameters and outputs. Supporting typed outputs of patched functions allows you to declare *rules about what kind of data the patched function is allowed to pass back* for use in the rest of your program. This will guard against the verbose or inconsistent outputs of the LLMs that are trained to be as “helpful as possible”.

You can use Literals or create custom types in Pydantic to express very complex rules about what the patched function can return. These act as guard-rails for the model, preventing a patched function from breaking the code or downstream workflows, and mean you can avoid writing custom validation logic in your application.

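```python
from datetime import datetime, timedelta
from typing import List

from pydantic import BaseModel, Field
import tanuki

# A Pydantic model, so that Field(...) descriptions are interpreted by Pydantic.
class ActionItem(BaseModel):
    goal: str = Field(description="What task must be completed")
    deadline: datetime = Field(description="The date the goal needs to be achieved")

@tanuki.patch
def action_items(input: str) -> List[ActionItem]:
    """Generate a list of Action Items"""

@tanuki.align
def align_action_items():
    goal = "Can you please get the presentation to me by Tuesday?"
    # Tuesday is weekday 1; this yields midnight on the coming Tuesday (today if it is Tuesday).
    next_tuesday = (datetime.now() + timedelta((1 - datetime.now().weekday() + 7) % 7)).replace(hour=0, minute=0, second=0, microsecond=0)

    # The patched function returns a list, so compare against a list.
    assert action_items(goal) == [ActionItem(goal="Prepare the presentation", deadline=next_tuesday)]
```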

By constraining the types of data that can pass through your patched function, you are declaring the potential outputs that the model can return and specifying the world in which the program exists.

You can also add integer constraints to outputs through Pydantic field values, and use generics if you wish.

```python
from typing import Annotated, Optional

from pydantic import Field
import tanuki

# Inclusive bounds (ge/le) so that the scores 0 and 10 used below are valid.
@tanuki.patch
def score_sentiment(input: str) -> Optional[Annotated[int, Field(ge=0, le=10)]]:
    """Scores the input between 0-10"""

@tanuki.align
def align_score_sentiment():
    """Register several examples to align your function"""
    assert score_sentiment("I love you") == 10
    assert score_sentiment("I hate you") == 0
    assert score_sentiment("You're okay I guess") == 5

# This is a normal test that can be invoked with pytest or unittest
def test_score_sentiment():
    """We can test the function as normal using Pytest or Unittest"""
    score = score_sentiment("I like you")
    assert score >= 7

if __name__ == "__main__":
    align_score_sentiment()
    print(score_sentiment("I like you"))  # 7
    print(score_sentiment("Apples might be red"))  # None
```

To see more examples using Tanuki for different use cases (including how to integrate with FastAPI), have a look at [examples](https://github.com/monkeypatch/tanuki.py/tree/master/examples).

For embedding outputs for RAG support, see [here](https://github.com/monkeypatch/tanuki.py/blob/update_docs/docs/embeddings_support.md)
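For orientation, the retrieval side of a RAG pipeline usually boils down to comparing embedding vectors. The sketch below uses toy three-dimensional vectors and a hand-written cosine similarity; the function names and the data are made up for this example and are independent of how Tanuki returns embeddings.

```python
import math
from typing import Dict, List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(query: List[float], documents: Dict[str, List[float]]) -> str:
    """Return the key of the stored vector closest to the query."""
    return max(documents, key=lambda name: cosine_similarity(query, documents[name]))


# Toy 3-dimensional vectors standing in for real embedding outputs.
documents = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.2],
}
query = [0.8, 0.2, 0.1]
best = most_similar(query, documents)
print(best)  # refund policy
```

In practice the vectors would come from an embedding model and would typically live in a vector store rather than a dictionary.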

<!-- TOC --><a name="test-driven-alignment"></a>

## Test-Driven Alignment

In classic [test-driven development (TDD)](https://en.wikipedia.org/wiki/Test-driven_development), the standard practice is to write a failing test before writing the code that makes it pass.

Test-Driven Alignment (TDA) adapts this concept to align the behavior of a patched function with an expectation defined by a test.

To align the behaviour of your patched function to your needs, decorate a function with `@tanuki.align` and assert the outputs of the patched function with `assert` statements, as is done in standard tests.

```python
@tanuki.align
def align_classify_sentiment():
    assert classify_sentiment("I love this!") == 'Good'
    assert classify_sentiment("I hate this.") == 'Bad'

@tanuki.align
def align_score_sentiment():
    assert score_sentiment("I like you") == 7
```

By writing a test that encapsulates the expected behaviour of the tanuki-patched function, you declare the contract that the function must fulfill. This enables you to:

1. **Verify Expectations:** Confirm that the function adheres to the desired output.

2. **Capture Behavioural Nuances:** Make sure that the LLM respects the edge cases and nuances stipulated by your test.

3. **Develop Iteratively:** Refine and update the behavior of the tanuki-patched function by declaring the desired behaviour as tests.

Unlike traditional TDD, where the objective is to write code that passes the test, TDA flips the script: **tests do not fail**. Their existence and the form they take are sufficient for LLMs to align themselves with the expected behavior.

TDA offers a lean yet robust methodology for grafting machine learning onto existing or new Python codebases. It combines the preventive virtues of TDD while addressing the specific challenges posed by the dynamism of LLMs.
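One way to picture "tests do not fail" is an assert whose comparison records the expectation instead of checking it. The sketch below is a deliberately simplified, hypothetical mechanism (the `Recorded` class and `ALIGNMENTS` store are invented for this example); it is not a description of Tanuki's internals.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical in-memory store: function name -> list of (input, expected output).
ALIGNMENTS: Dict[str, List[Tuple[Any, Any]]] = {}


class Recorded:
    """Value returned by a patched function while an align block is running.

    Comparing it with `==` records the expectation and always succeeds.
    """

    def __init__(self, func_name: str, arg: Any) -> None:
        self.func_name = func_name
        self.arg = arg

    def __eq__(self, expected: Any) -> bool:
        ALIGNMENTS.setdefault(self.func_name, []).append((self.arg, expected))
        return True  # the assert never fails; it only registers an example


def classify_sentiment(msg: str) -> Recorded:
    """Stand-in for a patched function called inside an align block."""
    return Recorded("classify_sentiment", msg)


def align_classify_sentiment() -> None:
    assert classify_sentiment("I love this!") == "Good"
    assert classify_sentiment("I hate this.") == "Bad"


align_classify_sentiment()
print(ALIGNMENTS["classify_sentiment"])
```

After the align function runs, the store holds one input/output pair per assert, which is the kind of material an n-shot prompt or a finetuning dataset can be built from.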

---

(Aligning function chains is work in progress)

```python
# multiply_by_two is assumed to be an ordinary Python helper defined elsewhere.
def test_score_sentiment():
    """We can test the function as normal using Pytest or Unittest"""
    assert multiply_by_two(score_sentiment("I like you")) == 14
    assert 2*score_sentiment("I like you") == 14
```

<!-- TOC --><a name="scaling-and-finetuning"></a>

## Scaling and Finetuning

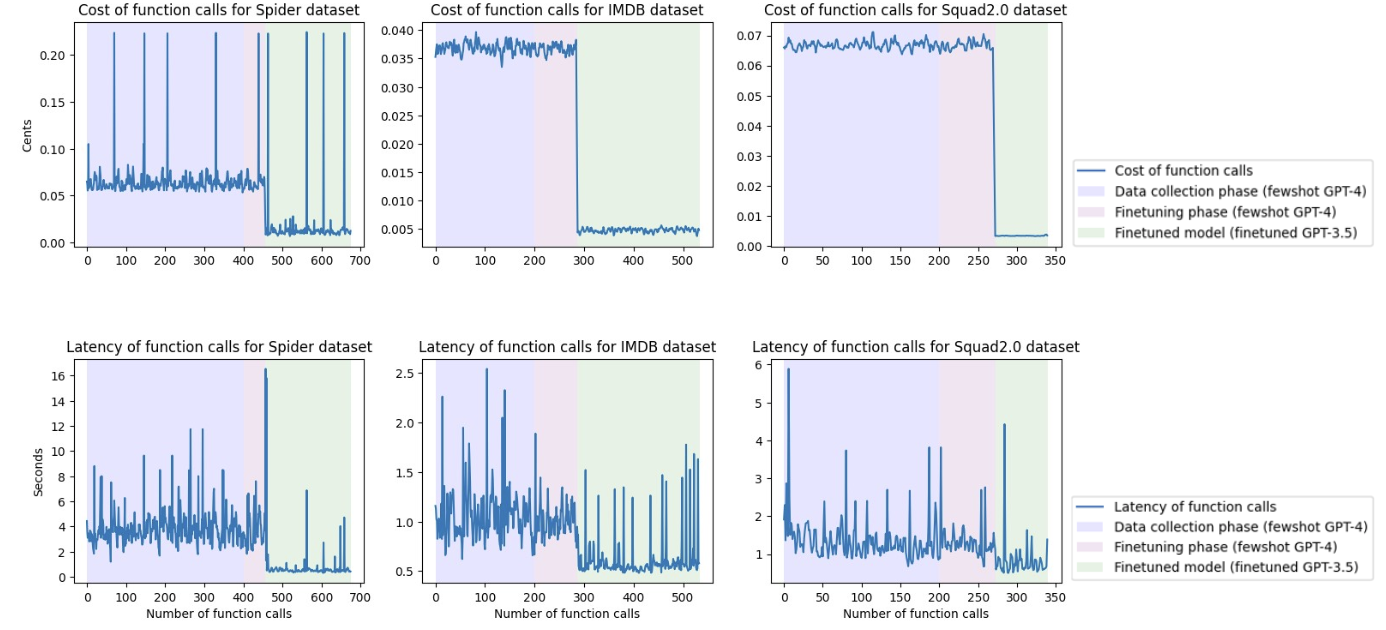

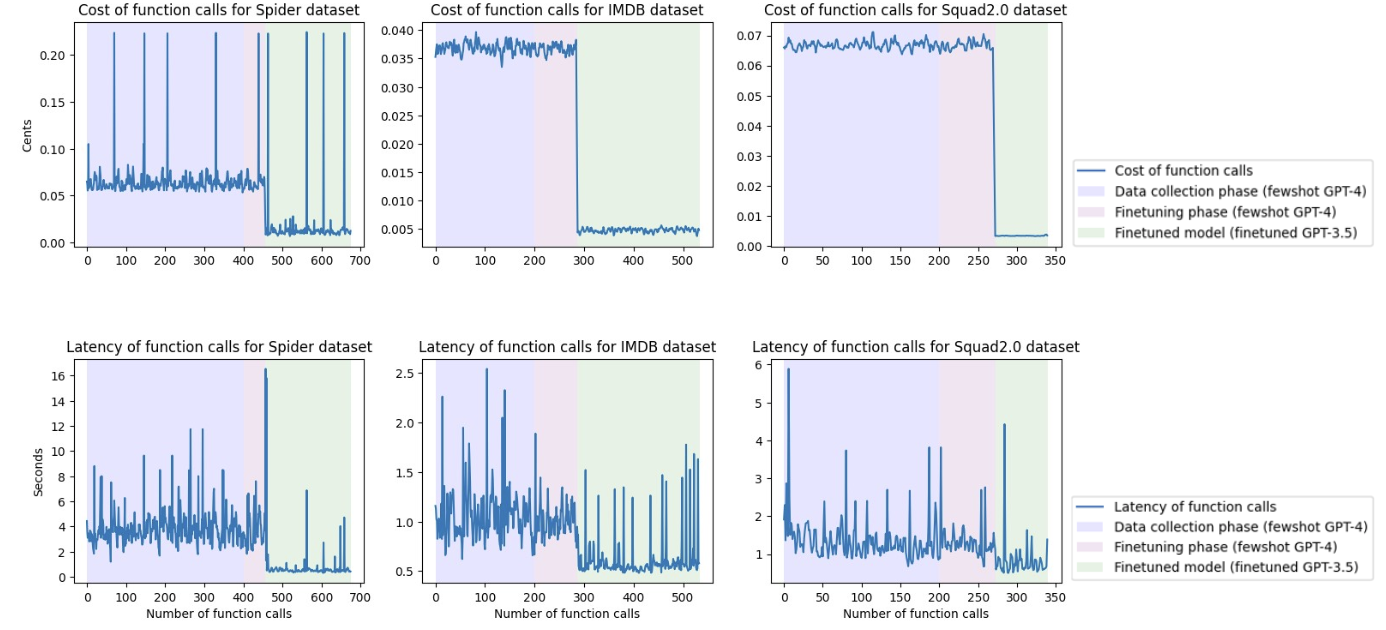

An advantage of using Tanuki in your workflow is the cost and latency benefits that will be provided as the number of datapoints increases.

Successful executions of your patched function that are suitable for finetuning will be persisted to a training dataset, which will be used to distil smaller models for each patched function. Model distillation and pseudo-labelling are established ways to cut down on model size and improve latency and memory footprint at only a minor cost to performance (https://arxiv.org/pdf/2305.02301.pdf, https://arxiv.org/pdf/2306.13649.pdf, https://arxiv.org/pdf/2311.00430.pdf, etc).

Training smaller function-specific models and deploying them is handled by the Tanuki library, so the user gets the benefits without any additional MLOps or DataOps effort. Currently, only OpenAI GPT-style models are supported (teacher: GPT-4, student: GPT-3.5).

We tested model distillation with Tanuki using OpenAI models on the Squad2, Spider and IMDB Movie Reviews datasets. We finetuned the gpt-3.5-turbo model (student) on few-shot responses from gpt-4 (teacher). Our preliminary tests show that, with fewer than 600 datapoints of training data, gpt-3.5-turbo performed essentially on par with gpt-4 (less than 1.5% performance difference on held-out dev sets) while achieving up to 12 times lower cost and over 6 times lower latency. Cost and latency reductions depend heavily on task-specific characteristics such as input-output token sizes and align statement token sizes. These tests show the potential of this form of model distillation for cutting costs and lowering latency without sacrificing performance.
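To relate the "times lower" figures to the percentage reductions quoted elsewhere in this README, the conversion is simple arithmetic. The helper name `reduction_percent` is made up for this example.

```python
def reduction_percent(times_lower: float) -> float:
    """Convert 'N times lower' into a percentage reduction."""
    return (1 - 1 / times_lower) * 100


cost_reduction = reduction_percent(12)    # "up to 12 times lower cost"
latency_reduction = reduction_percent(6)  # "over 6 times lower latency"
print(f"cost: {cost_reduction:.1f}% lower, latency: {latency_reduction:.1f}% lower")
# cost: 91.7% lower, latency: 83.3% lower
```

These best-case values are in line with the "up to 90% lower cost and 80% lower latency" stated in the feature list.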

<!-- TOC --><a name="frequently-asked-questions"></a>

## Frequently Asked Questions

<!-- TOC --><a name="intro"></a>

### Intro

<!-- TOC --><a name="what-is-tanuki-in-plain-words"></a>

#### What is Tanuki in plain words?

Tanuki is a simple and seamless way to create LLM-augmented functions in Python that ensure the outputs of the LLMs follow a specific structure. Moreover, the more you call a patched function, the cheaper and faster the execution gets.

<!-- TOC --><a name="how-does-this-compare-to-other-frameworks-like-langchain"></a>

#### How does this compare to other frameworks like LangChain?

- **LangChain**: Tanuki has a narrower scope than LangChain. Our mission is to ensure predictable and consistent LLM execution, with automatic reductions in cost and latency through finetuning.

- **Magentic** / **Marvin**: Tanuki offers two main benefits compared to Magentic/Marvin: lower cost and latency through automatic distillation, and more predictable behaviour through test-driven alignment. Currently, there are two cases where you should use Magentic instead: where you need support for tools (functions), a feature that is on our roadmap, and where you need support for asynchronous functions.

<!-- TOC --><a name="what-are-some-sample-use-cases"></a>

#### What are some sample use-cases?

We've created a few examples to show how to use Tanuki for different problems. You can find them [here](https://github.com/monkeypatch/tanuki.py/tree/master/examples).

A few ideas are as follows:

- Adding an importance classifier to customer requests

- Creating an offensive-language classification feature

- Creating a food-review app

- Generating data that conforms to your DB schema that can immediately

<!-- TOC --><a name="why-would-i-need-typed-responses"></a>

#### Why would I need typed responses?

When invoking LLMs, the outputs are free-form. This means that they are less predictable when used in software products. Using types ensures that the outputs adhere to specific constraints or rules which the rest of your program can work with.

<!-- TOC --><a name="do-you-offer-this-for-other-languages-eg-typescript"></a>

#### Do you offer this for other languages (eg Typescript)?

Not right now, but reach out on [our Discord server](https://discord.gg/kEGS5sQU) or open a GitHub issue if there’s another language you would like to see supported.

<!-- TOC --><a name="getting-started-1"></a>

### Getting Started

<!-- TOC --><a name="how-do-i-get-started"></a>

#### How do I get started?

Follow the instructions in the [Installation and Getting Started](#installation-and-getting-started) and [How It Works](#how-it-works) sections.

<!-- TOC --><a name="how-do-i-align-my-functions"></a>

#### How do I align my functions?

See the [How It Works](#how-it-works) and [Test-Driven Alignment](#test-driven-alignment) sections or the examples shown [here](https://github.com/monkeypatch/tanuki.py/tree/master/examples).

<!-- TOC --><a name="do-i-need-my-own-openai-key"></a>

#### Do I need my own OpenAI key?

Yes

<!-- TOC --><a name="does-it-only-work-with-openai"></a>

#### Does it only work with OpenAI?

Currently yes, but there are plans to support Anthropic and popular open-source models. If you have a specific request, either join [our Discord server](https://discord.gg/kEGS5sQU) or create a GitHub issue.

<!-- TOC --><a name="how-it-works-1"></a>

### How It Works

<!-- TOC --><a name="how-does-the-llm-get-cheaper-and-faster-over-time-and-by-how-much"></a>

#### How does the LLM get cheaper and faster over time? And by how much?

In short, we use model distillation.

In more detail, a smaller (student) model is trained on the outputs of the larger (teacher) model to emulate the teacher's behaviour while being faster and cheaper to run thanks to its smaller size. In some cases it is possible to achieve up to 90% lower cost and 80% lower latency with a small number of executions of your patched functions.

<!-- TOC --><a name="how-many-calls-does-it-require-to-get-the-improvement"></a>

#### How many calls does it require to get the improvement?

The default minimum is 200 calls, although this can be changed by adding flags to the patch decorator.
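The threshold can be pictured as simple bookkeeping over stored calls. The class below is a hypothetical sketch of that idea (the name `FinetuneTrigger` and its interface are assumptions, and it does not show the actual decorator flags); only the default of 200 comes from the text above.

```python
class FinetuneTrigger:
    """Hypothetical bookkeeping: counts stored calls and reports when a
    threshold is reached. Not Tanuki's actual implementation."""

    def __init__(self, minimum_calls: int = 200) -> None:
        self.minimum_calls = minimum_calls
        self.stored_calls = 0

    def record_call(self) -> bool:
        """Record one successful call; return True once the threshold is met."""
        self.stored_calls += 1
        return self.stored_calls >= self.minimum_calls


trigger = FinetuneTrigger()  # default threshold of 200
results = [trigger.record_call() for _ in range(200)]
print(results.index(True) + 1)  # 200: first call at which distillation could start
```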

<!-- TOC --><a name="can-i-link-functions-together"></a>

#### Can I link functions together?

Yes! It is possible to use the output of one patched function as the input to another patched function. Simply chain them as you would normal Python functions.

<!-- TOC --><a name="does-fine-tuning-reduce-the-performance-of-the-llm"></a>

#### Does fine-tuning reduce the performance of the LLM?

Not necessarily. Currently, the only way to improve LLM performance is to write better align statements. As the student model is trained on both align statements and input-output calls, it is possible for the fine-tuned student model to exceed the performance of the n-shot teacher model during inference.

<!-- TOC --><a name="accuracy-reliability"></a>

### Accuracy & Reliability

<!-- TOC --><a name="how-do-you-guarantee-consistency-in-the-output-of-patched-functions"></a>

#### How do you guarantee consistency in the output of patched functions?

Each output of the LLM will be programmatically instantiated into the output class ensuring the output will be of the correct type, just like your Python functions. If the output is incorrect and instantiating the correct output object fails, an automatic feedback repair loop kicks in to correct the mistake.
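A feedback repair loop of this kind can be sketched in a few lines. In the example below, `call_with_repair` and `fake_model` are hypothetical names, and the model is a plain Python callable standing in for a real LLM call; the actual loop inside Tanuki may work differently.

```python
from typing import Callable, Optional


def call_with_repair(model: Callable[[str], str],
                     prompt: str,
                     parse: Callable[[str], int],
                     max_attempts: int = 3) -> int:
    """Ask the model, try to parse the answer, and feed errors back on failure.

    Hypothetical sketch: `model` is any callable mapping a prompt to text.
    """
    current_prompt = prompt
    last_error: Optional[Exception] = None
    for _ in range(max_attempts):
        raw = model(current_prompt)
        try:
            return parse(raw)
        except ValueError as error:
            last_error = error
            # Append the failure so the next attempt can correct itself.
            current_prompt = (f"{prompt}\nPrevious answer: {raw!r}\n"
                              f"Error: {error}\nAnswer again.")
    raise ValueError(f"no valid output after {max_attempts} attempts") from last_error


def fake_model(prompt: str) -> str:
    """Fake model: answers badly first, then correctly once it sees an error."""
    return "7" if "Error:" in prompt else "seven"


score = call_with_repair(fake_model, "Score the sentiment of 'I like you' from 0 to 10.", int)
print(score)  # 7
```

The first answer, "seven", fails `int(...)`, so the error is appended to the prompt and the second attempt returns a parseable value.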

<!-- TOC --><a name="how-reliable-are-the-typed-outputs"></a>

#### How reliable are the typed outputs?

For classes of simple to medium complexity, GPT-4 with align statements has been shown to be very reliable at outputting the correct type. Additionally, we have implemented a repair loop with error feedback to “fix” incorrect outputs and add the corrected output to the training dataset.

<!-- TOC --><a name="how-do-you-deal-with-hallucinations"></a>

#### How do you deal with hallucinations?

Hallucinations can’t be 100% removed from LLMs at the moment, if ever. However, by creating test functions decorated with `@tanuki.align`, you can use normal `assert` statements to align the model to behave in the way that you expect. Additionally, you can create types with Pydantic, which act as guardrails to prevent any nasty surprises and provide correct error handling.

<!-- TOC --><a name="how-do-you-deal-with-bias"></a>

#### How do you deal with bias?

By adding more align statements that cover a wider range of inputs, you can ensure that the model is less biased.

<!-- TOC --><a name="will-distillation-impact-performance"></a>

#### Will distillation impact performance?

It depends. For tasks that are challenging even for the best models (e.g. GPT-4), distillation will reduce performance.

However, distillation can be manually turned off in these cases. Additionally, if the distilled model frequently fails to generate correct outputs, the distilled model will be automatically turned off.
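The automatic switch-off can be thought of as a router that prefers the distilled model but falls back to the teacher. The sketch below is hypothetical throughout: the `ModelRouter` class, the `max_failures` parameter and the failure-counting rule are assumptions for illustration, not Tanuki's actual policy.

```python
from typing import Callable, List


class ModelRouter:
    """Hypothetical router: prefer the distilled model, fall back to the teacher,
    and disable the distilled model after too many invalid outputs."""

    def __init__(self, student: Callable[[str], str], teacher: Callable[[str], str],
                 is_valid: Callable[[str], bool], max_failures: int = 3) -> None:
        self.student = student
        self.teacher = teacher
        self.is_valid = is_valid
        self.max_failures = max_failures
        self.failures = 0
        self.student_enabled = True

    def __call__(self, prompt: str) -> str:
        if self.student_enabled:
            answer = self.student(prompt)
            if self.is_valid(answer):
                return answer
            self.failures += 1
            if self.failures >= self.max_failures:
                self.student_enabled = False  # stop using the distilled model
        return self.teacher(prompt)


router = ModelRouter(
    student=lambda prompt: "maybe",  # always produces an invalid label
    teacher=lambda prompt: "Good",
    is_valid=lambda answer: answer in ("Good", "Bad"),
)
answers: List[str] = [router("I like you") for _ in range(4)]
print(answers, router.student_enabled)
```

Every call still returns a valid answer from the teacher, and after the third invalid student output the router stops consulting the student altogether.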

<!-- TOC --><a name="what-is-this-not-suitable-for"></a>

#### What is this not suitable for?

- Time-series data

- Tasks that require a lot of context to be completed correctly

- For tasks that directly output complex natural language, you will get less value from Tanuki and may want to consider the OpenAI API directly.

---

<!-- TOC --><a name="simple-todo-list-app"></a>

## [Simple ToDo List App](https://github.com/monkeypatch/tanuki.py/tree/master/examples/todolist)

Raw data

{

"_id": null,

"home_page": "https://github.com/Tanuki/tanuki.py",

"name": "tanuki.py",

"maintainer": "",

"docs_url": null,

"requires_python": ">=3.6",

"maintainer_email": "",

"keywords": "python,ai,tdd,alignment,tanuki,distillation,pydantic,gpt-4,llm,chat-gpt,gpt-4-api,ai-functions",

"author": "Jack Hopkins",

"author_email": "jack.hopkins@me.com",

"download_url": "https://files.pythonhosted.org/packages/7b/95/1a050179470e2db567e37ff7fd8ff9d87eefee7764434b5d99904db048ab/tanuki.py-0.2.0.tar.gz",

"platform": null,

"description": "# Tanuki <span style=\"font-family:Papyrus; font-size:2em;\">\ud83e\udd9d</span>  \nEasily build LLM-powered apps that get cheaper and faster over time.\n\n---\n\n## Release\n[27/11] Renamed MonkeyPatch to Tanuki, support for [embeddings](https://github.com/monkeypatch/tanuki.py/blob/update_docs/docs/embeddings_support.md) and [function configurability](https://github.com/monkeypatch/tanuki.py/blob/update_docs/docs/function_configurability.md) is released!\n* Use embeddings to integrate Tanuki with downstream RAG implementations using OpenAI Ada-2 model.\n* Function configurability allows to configure Tanuki function executions to ignore certain implemented aspects (finetuning, data-storage communications) for improved latency and serverless integrations.\n\nJoin us on [Discord](https://discord.gg/uUzX5DYctk)\n\n## Contents\n\n<!-- TOC start (generated with https://github.com/derlin/bitdowntoc) -->\n * [Introduction](#introduction)\n * [Features](#features)\n * [Installation and Getting Started](#installation-and-getting-started)\n * [How It Works](#how-it-works)\n * [Typed Outputs](#typed-outputs)\n * [Test-Driven Alignment](#test-driven-alignment)\n * [Scaling and Finetuning](#scaling-and-finetuning)\n * [Frequently Asked Questions](#frequently-asked-questions)\n * [Simple ToDo List App](#simple-todo-list-app)\n\n<!-- TOC end -->\n<!-- TOC --><a name=\"introduction\"></a>\n## Introduction \n\nTanuki is a way to easily call an LLM in place of the function body in Python, with the same parameters and output that you would expect from a function implemented by hand. \n\nThese LLM-powered functions are well-typed, reliable, stateless, and production-ready to be dropped into your app seamlessly. Rather than endless prompt-wrangling and nasty surprises, these LLM-powered functions and applications behave like traditional functions with proper error handling.\n\nLastly, the more you use Tanuki functions, the cheaper and faster they gets (up to 9-10x!) 
through automatic model distillation.\n\n```python\n@tanuki.patch\ndef some_function(input: TypedInput) -> TypedOutput:\n \"\"\"(Optional) Include the description of how your function will be used.\"\"\"\n\n@tanuki.align\ndef test_some_function(example_typed_input: TypedInput, \n example_typed_output: TypedOutput):\n\t\n assert some_function(example_typed_input) == example_typed_output\n\t\n```\n\n<!-- TOC --><a name=\"features\"></a>\n## Features\n\n- **Easy and seamless integration** - Add LLM augmented functions to any workflow within seconds. Decorate a function stub with `@tanuki.patch` and optionally add type hints and docstrings to guide the execution. That\u2019s it.\n- **Type aware** - Ensure that the outputs of the LLM adhere to the type constraints of the function (Python Base types, Pydantic classes, Literals, Generics etc) to guard against bugs or unexpected side-effects of using LLMs.\n- **RAG support** - Seamlessly get embedding outputs for downstream RAG (Retrieval Augmented Generation) implementations. Output embeddings can then be easily stored and used for relevant document retrieval to reduce cost & latency and improve performance on long-form content. \n- **Aligned outputs** - LLMs are unreliable, which makes them difficult to use in place of classically programmed functions. Using simple assert statements in a function decorated with `@tanuki.align`, you can align the behaviour of your patched function to what you expect.\n- **Lower cost and latency** - Achieve up to 90% lower cost and 80% lower latency with increased usage. The package will take care of model training, MLOps and DataOps efforts to improve LLM capabilities through distillation.\n- **Batteries included** - No remote dependencies other than OpenAI. 
\n\n<!-- TOC --><a name=\"installation-and-getting-started\"></a>\n## Installation and Getting Started\n<!-- TOC --><a name=\"installation\"></a>\n### Installation\n```\npip install tanuki.py\n```\n\nor with Poetry\n\n```\npoetry add tanuki.py\n```\n\nSet your OpenAI key using:\n\n```\nexport OPENAI_API_KEY=sk-...\n```\n\n\n<!-- TOC --><a name=\"getting-started\"></a>\n### Getting Started\n\nTo get started:\n1. Create a python function stub decorated with `@tanuki.patch` including type hints and a docstring.\n2. (Optional) Create another function decorated with `@tanuki.align` containing normal `assert` statements declaring the expected behaviour of your patched function with different inputs.\n\nThe patched function can now be called as normal in the rest of your code. \n\nTo add functional alignment, the functions annotated with `align` must also be called if:\n- It is the first time calling the patched function (including any updates to the function signature, i.e docstring, input arguments, input type hints, naming or the output type hint)\n- You have made changes to your assert statements.\n\nHere is what it could look like for a simple classification function:\n\n```python\n@tanuki.patch\ndef classify_sentiment(msg: str) -> Optional[Literal['Good', 'Bad']]:\n \"\"\"Classifies a message from the user into Good, Bad or None.\"\"\"\n\n@tanuki.align\ndef align_classify_sentiment():\n assert classify_sentiment(\"I love you\") == 'Good'\n assert classify_sentiment(\"I hate you\") == 'Bad'\n assert not classify_sentiment(\"People from Phoenix are called Phoenicians\")\n\nif __name__ == \"__main__\":\n align_classify_sentiment()\n print(classify_sentiment(\"I like you\")) # Good\n print(classify_sentiment(\"Apples might be red\")) # None\n```\n\n<!-- TOC --><a name=\"how-it-works\"></a>\n\nSee [here](https://github.com/monkeypatch/tanuki.py/blob/update_docs/docs/function_configurability.md) for configuration options for patched Tanuki functions\n\n## How It 
Works\n\nWhen you call a tanuki-patched function during development, an LLM in a n-shot configuration is invoked to generate the typed response. \n\nThe number of examples used is dependent on the number of align statements supplied in functions annotated with the align decorator. \n\nThe response will be post-processed and the supplied output type will be programmatically instantiated ensuring that the correct type is returned. \n\nThis response can be passed through to the rest of your app / stored in the DB / displayed to the user.\n\nMake sure to execute all align functions at least once before running your patched functions to ensure that the expected behaviour is registered. These are cached onto the disk for future reference.\n\nThe inputs and outputs of the function will be stored during execution as future training data.\nAs your data volume increases, smaller and smaller models will be distilled using the outputs of larger models. \n\nThe smaller models will capture the desired behaviour and performance at a lower computational cost, lower latency and without any MLOps effort.\n\n<!-- TOC --><a name=\"typed-outputs\"></a>\n## Typed Outputs\n\nLLM API outputs are typically in natural language. In many instances, it\u2019s preferable to have constraints on the format of the output to integrate them better into workflows.\n\nA core concept of Tanuki is the support for typed parameters and outputs. Supporting typed outputs of patched functions allows you to declare *rules about what kind of data the patched function is allowed to pass back* for use in the rest of your program. This will guard against the verbose or inconsistent outputs of the LLMs that are trained to be as \u201chelpful as possible\u201d.\n\nYou can use Literals or create custom types in Pydantic to express very complex rules about what the patched function can return. 
These act as guard-rails for the model preventing a patched function breaking the code or downstream workflows, and means you can avoid having to write custom validation logic in your application. \n\n```python\n@dataclass\nclass ActionItem:\n goal: str = Field(description=\"What task must be completed\")\n deadline: datetime = Field(description=\"The date the goal needs to be achieved\")\n \n@tanuki.patch\ndef action_items(input: str) -> List[ActionItem]:\n \"\"\"Generate a list of Action Items\"\"\"\n\n@tanuki.align\ndef align_action_items():\n goal = \"Can you please get the presentation to me by Tuesday?\"\n next_tuesday = (datetime.now() + timedelta((1 - datetime.now().weekday() + 7) % 7)).replace(hour=0, minute=0, second=0, microsecond=0)\n\n assert action_items(goal) == ActionItem(goal=\"Prepare the presentation\", deadline=next_tuesday)\n```\n\nBy constraining the types of data that can pass through your patched function, you are declaring the potential outputs that the model can return and specifying the world where the program exists in.\n\nYou can add integer constraints to the outputs for Pydantic field values, and generics if you wish.\n\n```python\n@tanuki.patch\ndef score_sentiment(input: str) -> Optional[Annotated[int, Field(gt=0, lt=10)]]:\n \"\"\"Scores the input between 0-10\"\"\"\n\n@tanuki.align\ndef align_score_sentiment():\n \"\"\"Register several examples to align your function\"\"\"\n assert score_sentiment(\"I love you\") == 10\n assert score_sentiment(\"I hate you\") == 0\n assert score_sentiment(\"You're okay I guess\") == 5\n\n# This is a normal test that can be invoked with pytest or unittest\ndef test_score_sentiment():\n \"\"\"We can test the function as normal using Pytest or Unittest\"\"\"\n score = score_sentiment(\"I like you\") \n assert score >= 7\n\nif __name__ == \"__main__\":\n align_score_sentiment()\n print(score_sentiment(\"I like you\")) # 7\n print(score_sentiment(\"Apples might be red\")) # None\n```\n\nTo see more 
examples using Tanuki for different use cases (including how to integrate with FastAPI), have a look at [examples](https://github.com/monkeypatch/tanuki.py/tree/master/examples).\n\nFor embedding outputs for RAG support, see [here](https://github.com/monkeypatch/tanuki.py/blob/update_docs/docs/embeddings_support.md)\n\n<!-- TOC --><a name=\"test-driven-alignment\"></a>\n## Test-Driven Alignment\n\nIn classic [test-driven development (TDD)](https://en.wikipedia.org/wiki/Test-driven_development), the standard practice is to write a failing test before writing the code that makes it pass. \n\nTest-Driven Alignment (TDA) adapts this concept to align the behavior of a patched function with an expectation defined by a test.\n\nTo align the behaviour of your patched function to your needs, decorate a function with `@align` and assert the outputs of the function with the \u2018assert\u2019 statement as is done with standard tests.\n\n```python\n@tanuki.align \ndef align_classify_sentiment(): \n assert classify_sentiment(\"I love this!\") == 'Good' \n assert classify_sentiment(\"I hate this.\") == 'Bad'\n \n@tanuki.align\ndef align_score_sentiment():\n assert score_sentiment(\"I like you\") == 7\n```\n\nBy writing a test that encapsulates the expected behaviour of the tanuki-patched function, you declare the contract that the function must fulfill. This enables you to:\n\n1. **Verify Expectations:** Confirm that the function adheres to the desired output. \n2. **Capture Behavioural Nuances:** Make sure that the LLM respects the edge cases and nuances stipulated by your test.\n3. **Develop Iteratively:** Refine and update the behavior of the tanuki-patched function by declaring the desired behaviour as tests.\n\nUnlike traditional TDD, where the objective is to write code that passes the test, TDA flips the script: **tests do not fail**. 
Their existence and the form they take are sufficient for LLMs to align themselves with the expected behavior.\n\nTDA offers a lean yet robust methodology for grafting machine learning onto existing or new Python codebases. It retains the preventive virtues of TDD while addressing the specific challenges posed by the dynamism of LLMs.\n\n---\n(Aligning function chains is work in progress)\n```python\ndef test_score_sentiment():\n \"\"\"We can test the function as normal using Pytest or Unittest\"\"\"\n assert multiply_by_two(score_sentiment(\"I like you\")) == 14\n assert 2*score_sentiment(\"I like you\") == 14\n```\n\n<!-- TOC --><a name=\"scaling-and-finetuning\"></a>\n## Scaling and Finetuning\n\nAn advantage of using Tanuki in your workflow is that cost and latency improve as the number of datapoints increases. \n\nSuccessful executions of your patched function that are suitable for finetuning will be persisted to a training dataset, which will be used to distil smaller models for each patched function. Model distillation and pseudo-labelling are verified ways to cut down on model sizes and improve latency and memory footprint while incurring only a minor cost to performance (https://arxiv.org/pdf/2305.02301.pdf, https://arxiv.org/pdf/2306.13649.pdf, https://arxiv.org/pdf/2311.00430.pdf, etc).\n\nTraining smaller function-specific models and deploying them is handled by the Tanuki library, so the user will get the benefits without any additional MLOps or DataOps effort. Currently only OpenAI GPT-style models are supported (teacher: GPT-4, student: GPT-3.5). \n\nWe tested model distillation with Tanuki using OpenAI models on the Squad2, Spider and IMDB Movie Reviews datasets. 
We finetuned the gpt-3.5-turbo model (student) using few-shot responses of gpt-4 (teacher). Our preliminary tests show that, with fewer than 600 datapoints in the training data, gpt-3.5-turbo performed essentially on par with gpt-4 (less than 1.5% performance difference on held-out dev sets) while achieving up to 12 times lower cost and over 6 times lower latency. Cost and latency reductions depend heavily on task-specific characteristics such as input-output token sizes and align statement token sizes. These tests show the potential of this form of model distillation for cutting costs and lowering latency without sacrificing performance.<br><br>\n\n\n\n\n<!-- TOC --><a name=\"frequently-asked-questions\"></a>\n## Frequently Asked Questions\n\n\n<!-- TOC --><a name=\"intro\"></a>\n### Intro\n<!-- TOC --><a name=\"what-is-tanuki-in-plain-words\"></a>\n#### What is Tanuki in plain words?\nTanuki is a simple and seamless way to create LLM-augmented functions in Python, which ensure the outputs of the LLMs follow a specific structure. Moreover, the more you call a patched function, the cheaper and faster the execution gets.\n\n<!-- TOC --><a name=\"how-does-this-compare-to-other-frameworks-like-langchain\"></a>\n#### How does this compare to other frameworks like LangChain?\n- **LangChain**: Tanuki has a narrower scope than LangChain. Our mission is to ensure predictable and consistent LLM execution, with automatic reductions in cost and latency through finetuning.\n- **Magentic** / **Marvin**: Tanuki offers two main benefits compared to Magentic/Marvin: lower cost and latency through automatic distillation, and more predictable behaviour through test-driven alignment. 
Currently, there are two cases where you should use Magentic: where you need support for tools (functions), a feature that is on our roadmap, and where you need support for asynchronous functions.\n\n\n<!-- TOC --><a name=\"what-are-some-sample-use-cases\"></a>\n#### What are some sample use-cases?\nWe've created a few examples to show how to use Tanuki for different problems. You can find them [here](https://github.com/monkeypatch/tanuki.py/tree/master/examples).\nA few ideas are as follows:\n- Adding an importance classifier to customer requests\n- Creating an offensive-language classification feature\n- Creating a food-review app\n- Generating data that conforms to your DB schema that can immediately \n\n<!-- TOC --><a name=\"why-would-i-need-typed-responses\"></a>\n#### Why would I need typed responses?\nWhen invoking LLMs, the outputs are free-form. This means that they are less predictable when used in software products. Using types ensures that the outputs adhere to specific constraints or rules which the rest of your program can work with.\n\n<!-- TOC --><a name=\"do-you-offer-this-for-other-languages-eg-typescript\"></a>\n#### Do you offer this for other languages (eg Typescript)?\nNot right now, but reach out on [our Discord server](https://discord.gg/kEGS5sQU) or make a GitHub issue if there\u2019s another language you would like to see supported.\n\n<!-- TOC --><a name=\"getting-started-1\"></a>\n### Getting Started\n<!-- TOC --><a name=\"how-do-i-get-started\"></a>\n#### How do I get started?\nFollow the instructions in the [Installation and getting started](#installation-and-getting-started) and [How it works](#how-it-works) sections.\n\n<!-- TOC --><a name=\"how-do-i-align-my-functions\"></a>\n#### How do I align my functions?\nSee the [How it works](#how-it-works) and [Test-Driven Alignment](#test-driven-alignment) sections or the examples shown [here](https://github.com/monkeypatch/tanuki.py/tree/master/examples).\n\n\n<!-- TOC --><a name=\"do-i-need-my-own-openai-key\"></a>\n#### Do I need my own OpenAI key?\nYes\n\n<!-- TOC 
--><a name=\"does-it-only-work-with-openai\"></a>\n#### Does it only work with OpenAI?\nCurrently yes, but there are plans to support Anthropic and popular open-source models. If you have a specific request, either join [our Discord server](https://discord.gg/kEGS5sQU), or create a GitHub issue.\n\n<!-- TOC --><a name=\"how-it-works-1\"></a>\n### How It Works\n<!-- TOC --><a name=\"how-does-the-llm-get-cheaper-and-faster-over-time-and-by-how-much\"></a>\n#### How does the LLM get cheaper and faster over time? And by how much?\nIn short, we use distillation of LLM models.\n\nIn more detail, the outputs of the larger (teacher) model are used to train a smaller (student) model that emulates the teacher's behaviour while being faster and cheaper to run due to its smaller size. In some cases it is possible to achieve up to 90% lower cost and 80% lower latency with a small number of executions of your patched functions. \n<!-- TOC --><a name=\"how-many-calls-does-it-require-to-get-the-improvement\"></a>\n#### How many calls does it require to get the improvement?\nThe default minimum is 200 calls, although this can be changed by adding flags to the patch decorator.\n<!-- TOC --><a name=\"can-i-link-functions-together\"></a>\n#### Can I link functions together?\nYes! It is possible to use the output of one patched function as the input to another patched function. Simply carry this out as you would with normal Python functions.\n<!-- TOC --><a name=\"does-fine-tuning-reduce-the-performance-of-the-llm\"></a>\n#### Does fine-tuning reduce the performance of the LLM?\nNot necessarily. Currently the only way to improve the LLM performance is to have better align statements. 
As the student model is trained on both align statements and input-output calls, it is possible for the fine-tuned student model to exceed the performance of the N-shot teacher model during inference.\n\n\n<!-- TOC --><a name=\"accuracy-reliability\"></a>\n### Accuracy & Reliability\n<!-- TOC --><a name=\"how-do-you-guarantee-consistency-in-the-output-of-patched-functions\"></a>\n#### How do you guarantee consistency in the output of patched functions?\nEach output of the LLM will be programmatically instantiated into the output class, ensuring the output will be of the correct type, just like your Python functions. If the output is incorrect and instantiating the correct output object fails, an automatic feedback repair loop kicks in to correct the mistake.\n<!-- TOC --><a name=\"how-reliable-are-the-typed-outputs\"></a>\n#### How reliable are the typed outputs?\nFor classes of simple to medium complexity, GPT-4 with align statements has been shown to be very reliable in outputting the correct type. Additionally, we have implemented a repair loop with error feedback to \u201cfix\u201d incorrect outputs and add the correct output to the training dataset.\n<!-- TOC --><a name=\"how-do-you-deal-with-hallucinations\"></a>\n#### How do you deal with hallucinations?\nHallucinations can\u2019t be 100% removed from LLMs at the moment, if ever. However, by creating test functions decorated with `@tanuki.align`, you can use normal `assert` statements to align the model to behave in the way that you expect. Additionally, you can create types with Pydantic, which act as guardrails to prevent any nasty surprises and provide correct error handling.\n<!-- TOC --><a name=\"how-do-you-deal-with-bias\"></a>\n#### How do you deal with bias?\nBy adding more align statements that cover a wider range of inputs, you can ensure that the model is less biased.\n<!-- TOC --><a name=\"will-distillation-impact-performance\"></a>\n#### Will distillation impact performance?\nIt depends. 
For tasks that are challenging for even the best models (e.g. GPT-4), distillation will reduce performance.\nHowever, distillation can be manually turned off in these cases. Additionally, if the distilled model frequently fails to generate correct outputs, the distilled model will be automatically turned off.\n\n<!-- TOC --><a name=\"what-is-this-not-suitable-for\"></a>\n#### What is this not suitable for?\n- Time-series data\n- Tasks that require a lot of context to be completed correctly\n- For tasks that directly output complex natural language, you will get less value from Tanuki and may want to consider the OpenAI API directly.\n\n---\n\n<!-- TOC --><a name=\"simple-todo-list-app\"></a>\n## [Simple ToDo List App](https://github.com/monkeypatch/tanuki.py/tree/master/examples/todolist)\n",

"bugtrack_url": null,

"license": "MIT",

"summary": "The easiest way to build scalable LLM-powered applications, which gets cheaper and faster over time.",

"version": "0.2.0",

"project_urls": {

"Download": "https://github.com/Tanuki/tanuki.py/archive/v0.1.0.tar.gz",

"Homepage": "https://github.com/Tanuki/tanuki.py"

},

"split_keywords": [

"python",

"ai",

"tdd",

"alignment",

"tanuki",

"distillation",

"pydantic",

"gpt-4",

"llm",

"chat-gpt",

"gpt-4-api",

"ai-functions"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "db759c1bd3e0428678ce9174b0fa9e0b030ea5c2902ae30c1ee80b178da3a576",

"md5": "becff7c2231168bccfa28b46443401bc",

"sha256": "53bd55d18bd29c4457197e98d43e1032905aaf830565b5b62a896b279b8421f2"

},

"downloads": -1,

"filename": "tanuki.py-0.2.0-py3-none-any.whl",

"has_sig": false,

"md5_digest": "becff7c2231168bccfa28b46443401bc",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.6",

"size": 71161,

"upload_time": "2024-02-05T18:35:50",

"upload_time_iso_8601": "2024-02-05T18:35:50.636804Z",

"url": "https://files.pythonhosted.org/packages/db/75/9c1bd3e0428678ce9174b0fa9e0b030ea5c2902ae30c1ee80b178da3a576/tanuki.py-0.2.0-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "7b951a050179470e2db567e37ff7fd8ff9d87eefee7764434b5d99904db048ab",

"md5": "39b5b9fbc3f45b4c680298eea6b36636",

"sha256": "1798ac811173e733ce7022c1616d3b60604fc04b1311111c06e81fc5f510c0f6"

},

"downloads": -1,

"filename": "tanuki.py-0.2.0.tar.gz",

"has_sig": false,

"md5_digest": "39b5b9fbc3f45b4c680298eea6b36636",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.6",

"size": 153822,

"upload_time": "2024-02-05T18:35:52",

"upload_time_iso_8601": "2024-02-05T18:35:52.626097Z",

"url": "https://files.pythonhosted.org/packages/7b/95/1a050179470e2db567e37ff7fd8ff9d87eefee7764434b5d99904db048ab/tanuki.py-0.2.0.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-02-05 18:35:52",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "Tanuki",

"github_project": "tanuki.py",

"travis_ci": false,

"coveralls": false,

"github_actions": true,

"requirements": [],

"lcname": "tanuki.py"

}