# JupyterChatbook


<!---

PePy:

[](https://pepy.tech/project/JupyterChatbook)

[](https://pepy.tech/project/JupyterChatbook)

[](https://pepy.tech/project/JupyterChatbook)

--->

"JupyterChatbook" is a Python package providing a Jupyter extension that facilitates
interaction with Large Language Models (LLMs).

The Chatbook extension provides the cell magics:

- `%%chatgpt` (and the synonym `%%openai`)

- `%%palm`

- `%%dalle`

- `%%chat`

- `%%chat_meta`

The first three give "shallow" access to the corresponding LLM services.
The fourth one is the most important: it allows contextual, multi-cell interactions with LLMs.
The last one is for managing the chat objects created in a notebook session.

**Remark:** The chatbook LLM cells use the packages
["openai"](https://pypi.org/project/openai/), [OAIp1],
and ["google-generativeai"](https://pypi.org/project/google-generativeai/), [GAIp1].

**Remark:** The results of the LLM cells are automatically copied to the clipboard
using the package ["pyperclip"](https://pypi.org/project/pyperclip/), [ASp1].

**Remark:** The API keys for the LLM cells can be specified in the magic lines. If they are not specified,
the API keys are taken from the Operating System (OS) environment variables `OPENAI_API_KEY` and `PALM_API_KEY`.
(See the setup section for LLM services access below.)

Here are a couple of videos [AAv2, AAv3] that give quick introductions to the features:

- ["Jupyter Chatbook LLM cells demo (Python)"](https://youtu.be/WN3N-K_Xzz8), (4.8 min)

- ["Jupyter Chatbook multi cell LLM chats teaser (Python)"](https://www.youtube.com/watch?v=8pv0QRGc7Rw), (4.5 min)

--------

## Installation

### Install from GitHub

```shell
pip install -e git+https://github.com/antononcube/Python-JupyterChatbook.git#egg=Python-JupyterChatbook
```

### From PyPI

```shell
pip install JupyterChatbook
```

-------

## Setup LLM services access

The API keys for the LLM cells can be specified in the magic lines. If they are not specified,
the API keys are taken from the Operating System (OS) environment variables `OPENAI_API_KEY` and `PALM_API_KEY`.
(For example, they can be set in the "~/.zshrc" file on macOS.)

One way to set those environment variables in a notebook session is to use the `%env` line magic. For example:

```
%env OPENAI_API_KEY = <YOUR API KEY>
```

Another way is to use Python code. For example:

```python
import os

os.environ['PALM_API_KEY'] = '<YOUR PALM API KEY>'
os.environ['OPENAI_API_KEY'] = '<YOUR OPENAI API KEY>'
```
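The key lookup described above (a key given in the magic line wins, otherwise the OS environment variable is used) can be sketched as follows. The helper `resolve_api_key` and the `ENV_VARS` mapping are hypothetical illustrations of that fallback, not part of the package's API.

```python
import os
from typing import Optional

# Hypothetical mapping from service name to the environment variable used as fallback.
ENV_VARS = {"openai": "OPENAI_API_KEY", "palm": "PALM_API_KEY"}


def resolve_api_key(service: str, explicit_key: Optional[str] = None) -> str:
    """Return the key from the magic line if given, else the service's environment variable."""
    if explicit_key:
        return explicit_key
    env_var = ENV_VARS[service]
    key = os.environ.get(env_var)
    if not key:
        raise KeyError(f"No API key given and {env_var} is not set")
    return key


if __name__ == "__main__":
    # Report only whether each variable is present; never print the key itself.
    for env_var in ENV_VARS.values():
        print(f"{env_var}: {'set' if os.environ.get(env_var) else 'missing'}")
```

Checking presence without echoing the value keeps secrets out of notebook outputs, which are often shared.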

-------

## Demonstration notebooks (chatbooks)

| Notebook | Description |
|-----------------------------------|---------------------------------------------------------------------------------------------|
| [Chatbook-cells-demo.ipynb](https://github.com/antononcube/Python-JupyterChatbook/blob/main/docs/Chatbook-cells-demo.ipynb) | How to do [multi-cell (notebook-wide) chats](https://www.youtube.com/watch?v=8pv0QRGc7Rw)? |
| [Chatbook-LLM-cells.ipynb](https://github.com/antononcube/Python-JupyterChatbook/blob/main/docs/Chatbook-LLM-cells.ipynb) | How to "directly message" LLM services? |
| [DALL-E-cells-demo.ipynb](https://github.com/antononcube/Python-JupyterChatbook/blob/main/docs/DALL-E-cells-demo.ipynb) | How to generate images with [DALL-E](https://openai.com/dall-e-2)? |
| [Echoed-chats.ipynb](https://github.com/antononcube/Python-JupyterChatbook/blob/main/docs/Echoed-chats.ipynb) | How to see the LLM interaction execution steps? |

-------

## Notebook-wide chats

Chatbooks can maintain LLM conversations over multiple notebook cells.
A chatbook can have more than one LLM conversation.
"Under the hood," each chatbook maintains a database of chat objects.
Chat cells are used to send messages to those chat objects.

For example, here is a chat cell that creates a new
["Email writer"](https://developers.generativeai.google/prompts/email-writer)
chat object with the identifier "em12":

```
%%chat --chat_id em12 --prompt "Given a topic, write emails in a concise, professional manner"
Write a vacation email.
```

Here is a chat cell in which another message is given to the chat object with identifier "em12":

```
%%chat --chat_id em12
Rewrite with manager's name being Jane Doe, and start- and end dates being 8/20 and 9/5.
```

In this chat cell a new chat object is created:

```
%%chat -i snowman --prompt "Pretend you are a friendly snowman. Stay in character for every response you give me. Keep your responses short."
Hi!
```

And here is a chat cell that sends another message to the "snowman" chat object:

```
%%chat -i snowman
Who built you? Where?
```

**Remark:** Specifying a chat object identifier is not required; the bare magic `%%chat` can be used.
In that case the default chat object identifier "NONE" is used.
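To illustrate how a magic line such as `--chat_id em12 --prompt "..."` can be split into options, here is a minimal sketch using the standard-library `shlex` and `argparse` modules. It mirrors only the `-i`/`--chat_id` and `--prompt` options and the "NONE" default mentioned above; the function name `parse_chat_magic_line` is hypothetical, and the package itself may parse the line differently.

```python
import argparse
import shlex


def parse_chat_magic_line(line: str) -> argparse.Namespace:
    """Parse the options of a %%chat magic line (without the '%%chat' token)."""
    parser = argparse.ArgumentParser(prog="%%chat", add_help=False)
    parser.add_argument("-i", "--chat_id", default="NONE",
                        help="Identifier of the chat object")
    parser.add_argument("--prompt", default="",
                        help="Prompt used when a new chat object is created")
    # shlex.split keeps a double-quoted multi-word prompt as one argument.
    return parser.parse_args(shlex.split(line))


args = parse_chat_magic_line('--chat_id em12 --prompt "Given a topic, write emails"')
print(args.chat_id, "|", args.prompt)  # em12 | Given a topic, write emails

print(parse_chat_magic_line("").chat_id)  # NONE

# A trailing comma is not stripped: it becomes part of the identifier.
print(parse_chat_magic_line("-i snowman,").chat_id)  # snowman,
```

Under this kind of parsing, `em12` and `em12,` would be two different chat objects, so identifiers are best written without trailing punctuation.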

For more examples see the notebook
["Chatbook-cells-demo.ipynb"](https://github.com/antononcube/Python-JupyterChatbook/blob/main/docs/Chatbook-cells-demo.ipynb).

Here is a flowchart that summarizes the way chatbooks create and utilize LLM chat objects:

```mermaid

flowchart LR

OpenAI{{OpenAI}}

PaLM{{PaLM}}

LLMFunc[[LLMFunctionObjects]]

LLMProm[[LLMPrompts]]

CODB[(Chat objects)]

PDB[(Prompts)]

CCell[/Chat cell/]

CRCell[/Chat result cell/]

CIDQ{Chat ID<br/>specified?}

CIDEQ{Chat ID<br/>exists in DB?}

RECO[Retrieve existing<br/>chat object]

COEval[Message<br/>evaluation]

PromParse[Prompt<br/>DSL spec parsing]

KPFQ{Known<br/>prompts<br/>found?}

PromExp[Prompt<br/>expansion]

CNCO[Create new<br/>chat object]

CIDNone["Assume chat ID<br/>is 'NONE'"]

subgraph Chatbook frontend

CCell

CRCell

end

subgraph Chatbook backend

CIDQ

CIDEQ

CIDNone

RECO

CNCO

CODB

end

subgraph Prompt processing

PDB

LLMProm

PromParse

KPFQ

PromExp

end

subgraph LLM interaction

COEval

LLMFunc

PaLM

OpenAI

end

CCell --> CIDQ

CIDQ --> |yes| CIDEQ

CIDEQ --> |yes| RECO

RECO --> PromParse

COEval --> CRCell

CIDEQ -.- CODB

CIDEQ --> |no| CNCO

LLMFunc -.- CNCO -.- CODB

CNCO --> PromParse --> KPFQ

KPFQ --> |yes| PromExp

KPFQ --> |no| COEval

PromParse -.- LLMProm

PromExp -.- LLMProm

PromExp --> COEval

LLMProm -.- PDB

CIDQ --> |no| CIDNone

CIDNone --> CIDEQ

COEval -.- LLMFunc

LLMFunc <-.-> OpenAI

LLMFunc <-.-> PaLM

```
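The flowchart can be read as the following simplified Python sketch. Everything in it is hypothetical: `ChatObject`, `KNOWN_PROMPTS`, `expand_prompt`, `echo_llm`, and `chat_cell` stand in for the chat objects of "LLMFunctionObjects", the prompt database of "LLMPrompts", and the OpenAI/PaLM calls, none of which are used here. The prompt text is a placeholder.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, str]

# Hypothetical stand-in for the prompts DB; placeholder text, not the LLMPrompts text.
KNOWN_PROMPTS: Dict[str, str] = {"Yoda": "Respond in the voice of Yoda."}


def expand_prompt(spec: str) -> str:
    """Prompt expansion: replace a known '@Name' spec with its prompt text."""
    if spec.startswith("@") and spec[1:] in KNOWN_PROMPTS:
        return KNOWN_PROMPTS[spec[1:]]
    return spec  # No known prompt found: use the text as given.


def echo_llm(context: List[Message]) -> str:
    """Placeholder for an OpenAI or PaLM call: echoes the last user message."""
    return "echo: " + context[-1]["content"]


@dataclass
class ChatObject:
    prompt: str
    llm: Callable[[List[Message]], str]
    messages: List[Message] = field(default_factory=list)

    def evaluate(self, text: str) -> str:
        """Message evaluation: send prompt plus history to the LLM and store the reply."""
        self.messages.append({"role": "user", "content": text})
        system = [{"role": "system", "content": self.prompt}] if self.prompt else []
        reply = self.llm(system + self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


# Chat objects DB of the notebook session, keyed by chat ID.
CHAT_DB: Dict[str, ChatObject] = {}


def chat_cell(cell: str, chat_id: str = "", prompt: str = "") -> str:
    chat_id = chat_id or "NONE"  # Chat ID not specified: assume 'NONE'.
    chat = CHAT_DB.get(chat_id)  # Does the chat ID exist in the DB?
    if chat is None:  # No: create a new chat object with the expanded prompt.
        chat = ChatObject(prompt=expand_prompt(prompt), llm=echo_llm)
        CHAT_DB[chat_id] = chat
    return chat.evaluate(expand_prompt(cell))


print(chat_cell("Hi!", chat_id="snowman", prompt="Pretend you are a friendly snowman."))  # echo: Hi!
print(chat_cell("Who built you?", chat_id="snowman"))  # echo: Who built you?
print(len(CHAT_DB["snowman"].messages))  # 4
```

Keeping the whole message history on the chat object is what makes later cells contextual: every evaluation sends the prompt together with all earlier exchanges.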

------

## Chat meta cells

Each chatbook session has a dictionary of chat objects.
Chatbooks can have chat meta cells that give access to the chat object "database" as a whole,
or to its individual objects.

Here is an example of a chat meta cell (that applies the method `print` to the chat object with ID "snowman"):

```
%%chat_meta -i snowman
print
```

Here is an example of a chat meta cell that creates a new chat object with the LLM prompt
specified in the cell
(["Guess the word"](https://developers.generativeai.google/prompts/guess-the-word)):

```
%%chat_meta -i WordGuesser --prompt
We're playing a game. I'm thinking of a word, and I need to get you to guess that word.
But I can't say the word itself.
I'll give you clues, and you'll respond with a guess.
Your guess should be a single word only.
```

Here is another chat object creation cell using a prompt from the package
["LLMPrompts"](https://pypi.org/project/LLMPrompts), [AAp2]:

```
%%chat_meta -i yoda1 --prompt
@Yoda
```
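The `@Yoda` line refers to a named prompt rather than spelling it out. One way such references could be detected and expanded is sketched below, assuming a plain dictionary of named prompts; the dictionary `PROMPTS`, its placeholder text, and `expand_persona_refs` are hypothetical and do not reproduce the prompt texts or the full DSL rules of "LLMPrompts".

```python
import re
from typing import Dict

# Hypothetical prompt collection; the real prompt texts live in the LLMPrompts package.
PROMPTS: Dict[str, str] = {"Yoda": "Respond in the voice of Yoda."}

# '@' at the start of the text or after whitespace, followed by a word-like name.
# The lookbehind keeps e-mail addresses such as 'a@b.com' from matching.
PERSONA_REF = re.compile(r"(?<!\S)@(\w+)")


def expand_persona_refs(text: str, prompts: Dict[str, str] = PROMPTS) -> str:
    """Replace each known '@Name' with its prompt text; leave unknown names unchanged."""
    return PERSONA_REF.sub(lambda m: prompts.get(m.group(1), m.group(0)), text)


print(expand_persona_refs("@Yoda"))           # Respond in the voice of Yoda.
print(expand_persona_refs("@Unknown hello"))  # @Unknown hello
```

Leaving unknown names untouched means a typo in a prompt name surfaces as literal text in the chat instead of silently disappearing.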

Here is a table with examples of magic specs for chat meta cells and their interpretation:

| cell magic line | cell content | interpretation |
|:---------------------------|:-------------------------------------|:----------------------------------------------------------------|
| chat_meta -i ew12 | print | Give the "print out" of the chat object with ID "ew12" |
| chat_meta --chat_id ew12 | messages | Give the messages of the chat object with ID "ew12" |
| chat_meta -i sn22 --prompt | You pretend to be a melting snowman. | Create a chat object with ID "sn22" with the prompt in the cell |
| chat_meta --all | keys | Show the keys of the session chat objects DB |
| chat_meta --all | print | Print the `repr` forms of the session chat objects |

Here is a flowchart that summarizes the chat meta cell processing:

```mermaid

flowchart LR

LLMFunc[[LLMFunctionObjects]]

CODB[(Chat objects)]

CCell[/Chat meta cell/]

CRCell[/Chat meta cell result/]

CIDQ{Chat ID<br/>specified?}

KCOMQ{Known<br/>chat object<br/>method?}

AKWQ{Option '--all'<br/>specified?}

KCODBMQ{Known<br/>chat objects<br/>DB method?}

CIDEQ{Chat ID<br/>exists in DB?}

RECO[Retrieve existing<br/>chat object]

COEval[Chat object<br/>method<br/>invocation]

CODBEval[Chat objects DB<br/>method<br/>invocation]

CNCO[Create new<br/>chat object]

CIDNone["Assume chat ID<br/>is 'NONE'"]

NoCOM[/Cannot find<br/>chat object<br/>message/]

CntCmd[/Cannot interpret<br/>command<br/>message/]

subgraph Chatbook

CCell

NoCOM

CntCmd

CRCell

end

CCell --> CIDQ

CIDQ --> |yes| CIDEQ

CIDEQ --> |yes| RECO

RECO --> KCOMQ

KCOMQ --> |yes| COEval --> CRCell

KCOMQ --> |no| CntCmd

CIDEQ -.- CODB

CIDEQ --> |no| NoCOM

LLMFunc -.- CNCO -.- CODB

CNCO --> COEval

CIDQ --> |no| AKWQ

AKWQ --> |yes| KCODBMQ

KCODBMQ --> |yes| CODBEval

KCODBMQ --> |no| CntCmd

CODBEval -.- CODB

CODBEval --> CRCell

AKWQ --> |no| CIDNone

CIDNone --> CIDEQ

COEval -.- LLMFunc

```
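The dispatch in this flowchart, applied to the commands from the table above, can be sketched in Python as follows. The class `Chat` and the function `chat_meta` are hypothetical; only the options (`-i`/`--chat_id`, `--all`, `--prompt`), the commands (`print`, `messages`, `keys`), and the "NONE" default come from this README.

```python
from typing import Dict, List, Optional


class Chat:
    """Minimal hypothetical chat object with a prompt and a message list."""

    def __init__(self, prompt: str = "") -> None:
        self.prompt = prompt
        self.messages: List[str] = []

    def __repr__(self) -> str:
        return f"Chat(prompt={self.prompt!r}, messages={len(self.messages)})"


def chat_meta(db: Dict[str, Chat], cell: str, chat_id: Optional[str] = None,
              all_chats: bool = False, prompt: bool = False) -> str:
    command = cell.strip()
    if prompt:
        # '--prompt': the cell content becomes the prompt of a new chat object.
        key = chat_id or "NONE"
        db[key] = Chat(prompt=command)
        return f"Created chat object {key!r}"
    if chat_id is None and all_chats:
        # '--all': commands act on the chat objects DB as a whole.
        if command == "keys":
            return str(list(db.keys()))
        if command == "print":
            return "\n".join(f"{k}: {v!r}" for k, v in db.items())
        return f"Cannot interpret command {command!r}"
    key = chat_id or "NONE"  # No chat ID and no '--all': assume 'NONE'.
    if key not in db:
        return f"Cannot find chat object {key!r}"
    chat = db[key]
    if command == "print":
        return repr(chat)
    if command == "messages":
        return str(chat.messages)
    return f"Cannot interpret command {command!r}"


db: Dict[str, Chat] = {}
print(chat_meta(db, "You pretend to be a melting snowman.", chat_id="sn22", prompt=True))
print(chat_meta(db, "keys", all_chats=True))   # ['sn22']
print(chat_meta(db, "print", chat_id="ew12"))  # Cannot find chat object 'ew12'
```

Returning the "cannot find" and "cannot interpret" messages as cell output, rather than raising, matches the two message nodes in the flowchart.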

-------

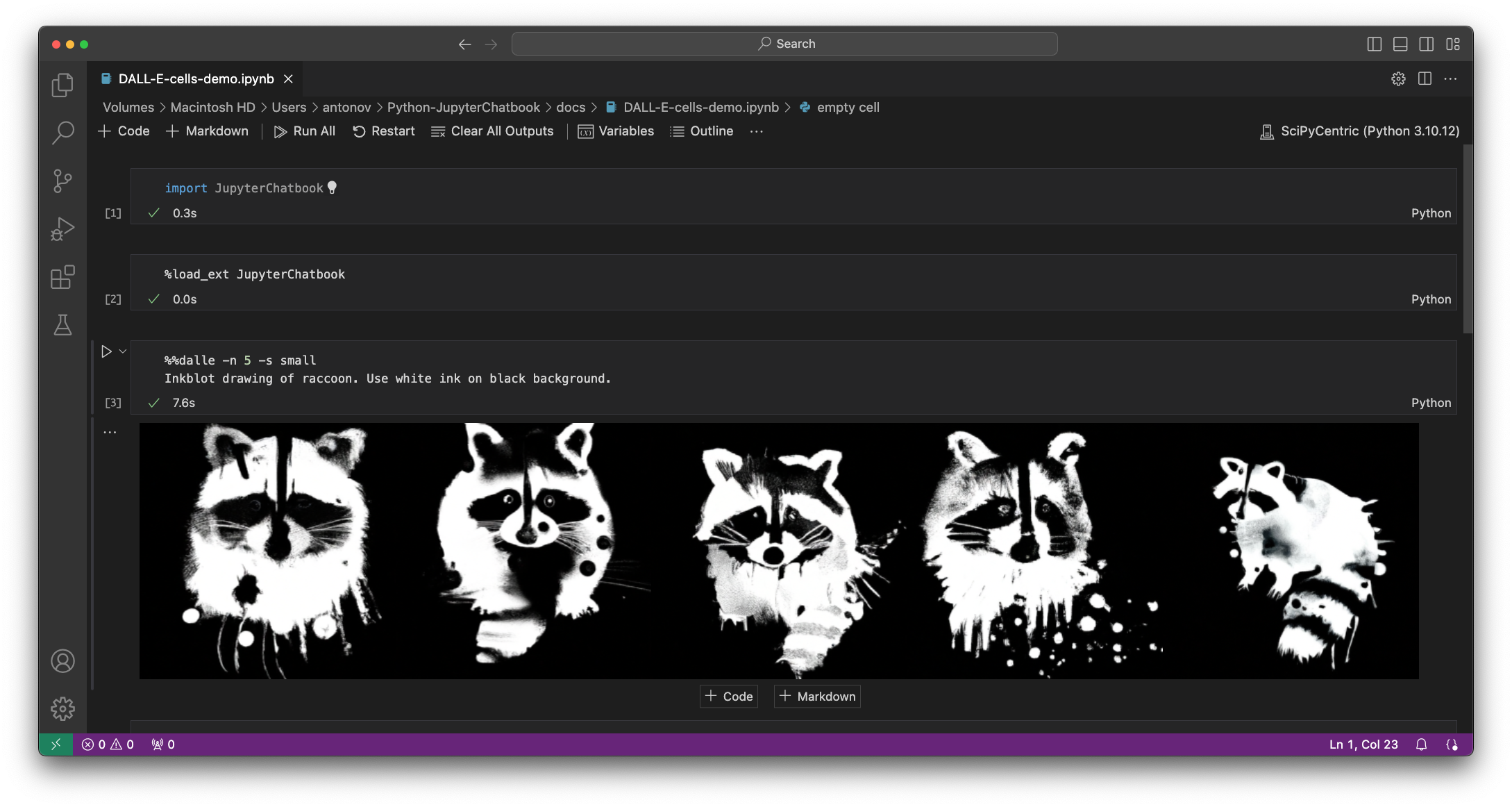

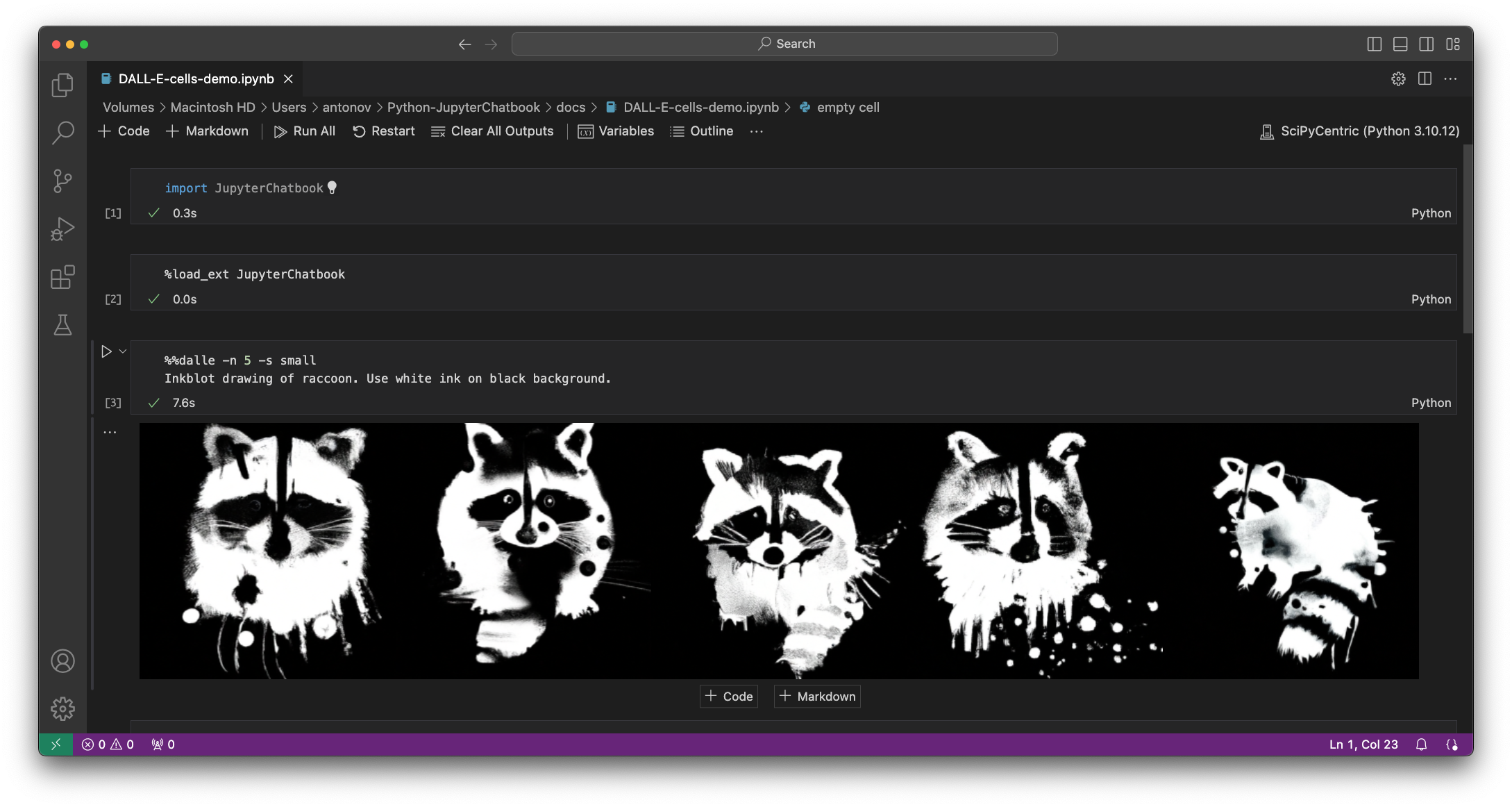

## DALL-E access

***See the notebook ["DALL-E-cells-demo.ipynb"](https://github.com/antononcube/Python-JupyterChatbook/blob/main/docs/DALL-E-cells-demo.ipynb)***

Here is a screenshot:

-------

## Implementation details

The design of this package, and the workflows it is meant to support, follow those of
the Raku package ["Jupyter::Chatbook"](https://github.com/antononcube/Raku-Jupyter-Chatbook), [AAp3].

-------

## TODO

- [ ] TODO Implementation
  - [X] DONE PaLM chat cell
  - [ ] TODO Using ["pyperclip"](https://pypi.org/project/pyperclip/)
    - [X] DONE Basic
      - [X] `%%chatgpt`
      - [X] `%%dalle`
      - [X] `%%palm`
      - [X] `%%chat`
    - [ ] TODO Switching on/off copying to the clipboard
      - [X] DONE Per cell
        - Controlled with the argument `--no_clipboard`.
      - [ ] TODO Global
        - Can be done via the chat meta cell, but maybe a more elegant, bureaucratic solution exists.
  - [X] DONE Formatted output: asis, html, markdown
    - General [lexer code](https://ipython.readthedocs.io/en/stable/api/generated/IPython.display.html#IPython.display.Code)?
      - Includes LaTeX.
    - [X] `%%chatgpt`
    - [X] `%%palm`
    - [X] `%%chat`
    - [ ] `%%chat_meta`?
  - [X] DONE DALL-E image variations cell
    - Combined image variations and edits with `%%dalle`.
  - [ ] TODO Mermaid-JS cell
  - [ ] TODO ProdGDT cell
  - [ ] MAYBE DeepL cell
    - See ["deepl-python"](https://github.com/DeepLcom/deepl-python)
  - [ ] TODO Lower level access to chat objects.
    - Like:
      - Getting the 3rd message
      - Removing the messages after the second one
      - etc.
  - [ ] TODO Using LLM commands to manipulate chat objects
    - Like:
      - "Remove the messages after the second for chat profSynapse3."
      - "Show the third message of each chat object."
- [ ] TODO Documentation
  - [X] DONE Multi-cell LLM chats movie (teaser)
    - See [AAv3].
  - [ ] TODO LLM service cells movie (short)
  - [ ] TODO Multi-cell LLM chats movie (comprehensive)
  - [ ] TODO Code generation

-------

## References

### Packages

[AAp1] Anton Antonov,
[LLMFunctionObjects Python package](https://github.com/antononcube/Python-packages/tree/main/LLMFunctionObjects),
(2023),
[Python-packages at GitHub/antononcube](https://github.com/antononcube/Python-packages).

[AAp2] Anton Antonov,
[LLMPrompts Python package](https://github.com/antononcube/Python-packages/tree/main/LLMPrompts),
(2023),
[Python-packages at GitHub/antononcube](https://github.com/antononcube/Python-packages).

[AAp3] Anton Antonov,
[Jupyter::Chatbook Raku package](https://github.com/antononcube/Raku-Jupyter-Chatbook),
(2023),
[GitHub/antononcube](https://github.com/antononcube).

[ASp1] Al Sweigart,
[pyperclip (Python package)](https://pypi.org/project/pyperclip/),
(2013-2021),
[PyPI.org/AlSweigart](https://pypi.org/user/AlSweigart/).

[GAIp1] Google AI,
[google-generativeai (Google Generative AI Python Client)](https://pypi.org/project/google-generativeai/),
(2023),
[PyPI.org/google-ai](https://pypi.org/user/google-ai/).

[OAIp1] OpenAI,
[openai (OpenAI Python Library)](https://pypi.org/project/openai/),
(2020-2023),
[PyPI.org](https://pypi.org/).

### Videos

[AAv1] Anton Antonov,
["Jupyter Chatbook multi cell LLM chats teaser (Raku)"](https://www.youtube.com/watch?v=wNpIGUAwZB8),
(2023),
[YouTube/@AAA4Prediction](https://www.youtube.com/@AAA4prediction).

[AAv2] Anton Antonov,
["Jupyter Chatbook LLM cells demo (Python)"](https://youtu.be/WN3N-K_Xzz8),
(2023),
[YouTube/@AAA4Prediction](https://www.youtube.com/@AAA4prediction).

[AAv3] Anton Antonov,
["Jupyter Chatbook multi cell LLM chats teaser (Python)"](https://www.youtube.com/watch?v=8pv0QRGc7Rw),
(2023),
[YouTube/@AAA4Prediction](https://www.youtube.com/@AAA4prediction).
