# LayerViz

[License](LICENSE)


A Python library for visualizing Keras models, with support for a variety of layer types.

## Table of Contents

<!-- TOC -->

* [LayerViz](#layerviz)
  * [Table of Contents](#table-of-contents)
  * [Installation](#installation)
    * [Install](#install)
    * [Upgrade](#upgrade)
  * [Usage](#usage)
  * [Parameters](#parameters)
  * [Settings](#settings)
  * [Sample Usage](#sample-usage)
  * [Supported layers](#supported-layers)

<!-- TOC -->

## Installation

### Install

Use the Python package manager (pip) to install LayerViz.

```bash

pip install LayerViz

```

### Upgrade

Use the Python package manager (pip) to upgrade LayerViz.

```bash

pip install LayerViz --upgrade

```
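To confirm which version ended up installed, the standard library's `importlib.metadata` can be queried. This is only a minimal sketch: the helper name `installed_version` is hypothetical, it assumes Python 3.8 or newer, and it assumes the distribution is registered under the name `LayerViz`, as in the pip commands above.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(dist_name="LayerViz"):
    """Return the installed version string, or None if the package is missing."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


# Prints a version string, or None when LayerViz is not installed.
print(installed_version())
```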

## Usage

```python
from libname import layerviz

# create your model here
# model = ...

layerviz(model, file_format='png')
```

## Parameters

```python

layerviz(model, file_name='graph', file_format=None, view=False, settings=None)

```

- `model`: a Keras model instance.
- `file_name`: base name of the output file, without the extension (default `'graph'`).
- `file_format`: output file format, `'png'` or `'pdf'` (default `None`).
- `view`: if `True`, open the file once it has been generated.
- `settings`: a dictionary of settings that override the defaults.

> **Note:**
> - Set `file_format='png'` or `file_format='pdf'` to save the visualization to a file.
> - Use `view=True` to open the visualization file.
> - Use [settings](#settings) to customize the output image.
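
Judging from the sample further down, where `file_name='sample1'` and `file_format='png'` produce `sample1.png`, the output path appears to be the file name plus the format as an extension. The helper below is a hypothetical sketch of that inferred rule, not code from the library, and the `None` branch assumes that no file is written when no format is given.

```python
def expected_output_path(file_name="graph", file_format=None):
    """Guess the file a call would write, or None when no format is set.

    Assumption: the output is named '<file_name>.<file_format>'.
    """
    if file_format is None:
        return None
    return f"{file_name}.{file_format}"


print(expected_output_path("sample1", "png"))  # sample1.png
```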

## Settings

You can customize the settings for your output image. Here is the default settings dictionary:

```python
# `settings` stands in for the dictionary passed to layerviz(); None means "use the defaults"
settings = None

recurrent_layers = ['LSTM', 'GRU']
main_settings = {
    # ALL LAYERS
    'MAX_NEURONS': 10,
    'ARROW_COLOR': '#707070',
    # INPUT LAYERS
    'INPUT_DENSE_COLOR': '#2ecc71',
    'INPUT_EMBEDDING_COLOR': 'black',
    'INPUT_EMBEDDING_FONT': 'white',
    'INPUT_GRAYSCALE_COLOR': 'black:white',
    'INPUT_GRAYSCALE_FONT': 'white',
    'INPUT_RGB_COLOR': '#e74c3c:#3498db',
    'INPUT_RGB_FONT': 'white',
    'INPUT_LAYER_COLOR': 'black',
    'INPUT_LAYER_FONT': 'white',
    # HIDDEN LAYERS
    'HIDDEN_DENSE_COLOR': '#3498db',
    'HIDDEN_CONV_COLOR': '#5faad0',
    'HIDDEN_CONV_FONT': 'black',
    'HIDDEN_POOLING_COLOR': '#8e44ad',
    'HIDDEN_POOLING_FONT': 'white',
    'HIDDEN_FLATTEN_COLOR': '#2c3e50',
    'HIDDEN_FLATTEN_FONT': 'white',
    'HIDDEN_DROPOUT_COLOR': '#f39c12',
    'HIDDEN_DROPOUT_FONT': 'black',
    'HIDDEN_ACTIVATION_COLOR': '#00b894',
    'HIDDEN_ACTIVATION_FONT': 'black',
    'HIDDEN_LAYER_COLOR': 'black',
    'HIDDEN_LAYER_FONT': 'white',
    # RECURRENT LAYERS
    'RECURRENT_LAYER_COLOR': '#9b59b6',
    'RECURRENT_LAYER_FONT': 'white',
    # OUTPUT LAYER
    'OUTPUT_DENSE_COLOR': '#e74c3c',
    'OUTPUT_LAYER_COLOR': 'black',
    'OUTPUT_LAYER_FONT': 'white',
}

# Each recurrent layer type also gets its own color key (e.g. 'LSTM_COLOR')
for layer_type in recurrent_layers:
    main_settings[layer_type + '_COLOR'] = '#9b59b6'

# User-supplied settings override the defaults key by key
settings = {**main_settings, **settings} if settings is not None else {**main_settings}
max_neurons = settings['MAX_NEURONS']
```
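The last lines of the listing above merge user settings over the defaults with dictionary unpacking, so only the keys you pass are replaced. The sketch below isolates that behavior; `merge_settings` is a hypothetical helper name, and the three-key `defaults` dictionary is a shortened stand-in for the full one.

```python
# A three-key stand-in for the full defaults dictionary.
defaults = {
    "MAX_NEURONS": 10,
    "INPUT_DENSE_COLOR": "#2ecc71",
    "HIDDEN_DENSE_COLOR": "#3498db",
}


def merge_settings(defaults, overrides=None):
    """Return a new dict in which user overrides win over the defaults."""
    return {**defaults, **(overrides or {})}


merged = merge_settings(defaults, {"MAX_NEURONS": None, "INPUT_DENSE_COLOR": "teal"})
print(merged)
# {'MAX_NEURONS': None, 'INPUT_DENSE_COLOR': 'teal', 'HIDDEN_DENSE_COLOR': '#3498db'}
```

Because the merge builds a new dictionary, neither the defaults nor the dictionary you pass in is modified.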

**Note**:

* Set `'MAX_NEURONS': None` to disable the maximum-neurons constraint.
* See the list of supported color names [here](https://graphviz.org/doc/info/colors.html).

```python
my_settings = {
    'MAX_NEURONS': None,
    'INPUT_DENSE_COLOR': 'teal',
    'HIDDEN_DENSE_COLOR': 'gray',
    'OUTPUT_DENSE_COLOR': 'crimson'
}

# model = ...

layerviz(model, file_format='png', settings=my_settings)
```
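The dictionary merge in the default settings accepts any key, so a misspelled key would presumably be carried along without having any visible effect. A small check like the one below can catch such typos before calling `layerviz`. It is a sketch only: `find_unknown_keys` is a hypothetical helper, and `known` lists just a few of the default keys for brevity.

```python
from difflib import get_close_matches


def find_unknown_keys(overrides, known_keys):
    """Map each unrecognized key to its closest known key (or None)."""
    suggestions = {}
    for key in overrides:
        if key not in known_keys:
            close = get_close_matches(key, known_keys, n=1)
            suggestions[key] = close[0] if close else None
    return suggestions


# Only a subset of the default keys, for illustration.
known = ["MAX_NEURONS", "INPUT_DENSE_COLOR", "HIDDEN_DENSE_COLOR", "OUTPUT_DENSE_COLOR"]
my_settings = {"MAX_NEURONS": None, "INPUT_DENSE_COLOUR": "teal"}

print(find_unknown_keys(my_settings, known))
# {'INPUT_DENSE_COLOUR': 'INPUT_DENSE_COLOR'}
```

In practice, `known` would be built from the full defaults listing, including the per-layer keys such as `'LSTM_COLOR'` and `'GRU_COLOR'` that are added in the loop.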

## Sample Usage

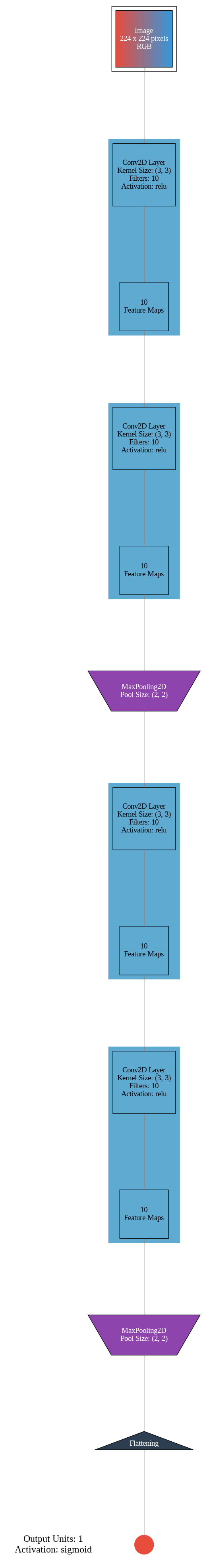

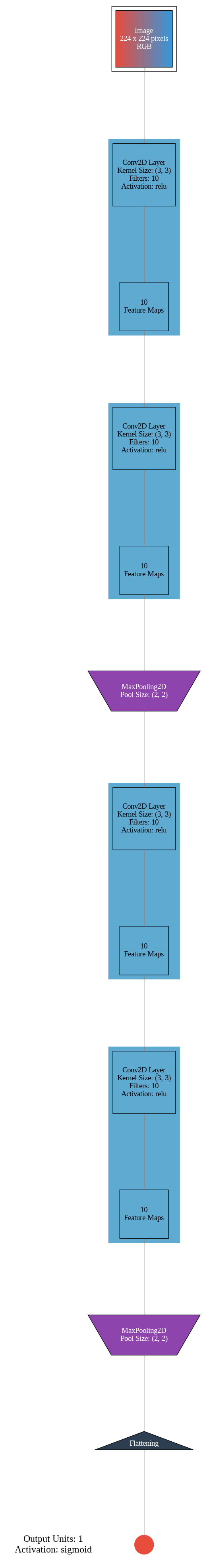

📖 **Resource:** The architecture we're using below is a scaled-down version of [VGG-16](https://arxiv.org/abs/1505.06798), a convolutional neural network which came 2nd in the 2014 [ImageNet classification competition](http://image-net.org/).

For reference, the model we're using replicates TinyVGG, the computer vision architecture which fuels the [CNN explainer webpage](https://poloclub.github.io/cnn-explainer/).

```python
import tensorflow as tf
from IPython.display import Image
from libname import layerviz

model_1 = tf.keras.models.Sequential([
    tf.keras.layers.Conv2D(filters=10,
                           kernel_size=3,  # can also be (3, 3)
                           activation="relu",
                           input_shape=(224, 224, 3)),  # first layer specifies input shape (height, width, colour channels)
    tf.keras.layers.Conv2D(10, 3, activation="relu"),
    tf.keras.layers.MaxPool2D(pool_size=2,  # pool_size can also be (2, 2)
                              padding="valid"),  # padding can also be 'same'
    tf.keras.layers.Conv2D(10, 3, activation="relu"),
    tf.keras.layers.Conv2D(10, 3, activation="relu"),  # activation='relu' == tf.keras.layers.Activation(tf.nn.relu)
    tf.keras.layers.MaxPool2D(2),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(1, activation="sigmoid")  # sigmoid output for binary classification
])

# Save the visualization as sample1.png
layerviz(model_1, file_name='sample1', file_format='png')

# Display the saved image (e.g. in a Jupyter notebook)
Image('sample1.png')
```
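To read the resulting diagram, it helps to know what shapes the layers produce. The sketch below traces them by hand in plain Python, assuming stride 1 and `"valid"` padding for the convolutions (the Keras defaults used above) and a pooling stride equal to the pool size. `trace_tiny_vgg` is a hypothetical helper written for this README, not part of LayerViz or Keras.

```python
def trace_tiny_vgg(side=224, channels=3, filters=10, kernel=3, pool=2):
    """Trace output shapes and parameter count of the sample model."""
    shapes, params = [], 0
    for layer in ["conv", "conv", "pool", "conv", "conv", "pool"]:
        if layer == "conv":
            # weights: kernel * kernel * in_channels * filters, plus one bias per filter
            params += kernel * kernel * channels * filters + filters
            side = side - kernel + 1  # valid padding, stride 1
            channels = filters
        else:
            side = side // pool  # valid pooling with stride == pool size
        shapes.append((layer, side, side, channels))
    flat = side * side * channels  # Flatten output length
    params += flat + 1  # Dense(1): one weight per input plus a bias
    return shapes, flat, params


shapes, flat, params = trace_tiny_vgg()
for shape in shapes:
    print(shape)
print(flat, params)  # 28090 31101
```

The spatial size shrinks from 224 to 53 over the two convolution/pooling stages, so the final `Dense` layer sees 53 × 53 × 10 = 28090 inputs.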

## Supported layers

[Explore list of **keras layers**](https://keras.io/api/layers/)

1. Core layers
   - [x] Input object
   - [x] Dense layer
   - [x] Activation layer
   - [x] Embedding layer
   - [ ] Masking layer
   - [ ] Lambda layer

2. Convolution layers
   - [x] Conv1D layer
   - [x] Conv2D layer
   - [x] Conv3D layer
   - [x] SeparableConv1D layer
   - [x] SeparableConv2D layer
   - [x] DepthwiseConv2D layer
   - [x] Conv1DTranspose layer
   - [x] Conv2DTranspose layer
   - [x] Conv3DTranspose layer

3. Pooling layers
   - [x] MaxPooling1D layer
   - [x] MaxPooling2D layer
   - [x] MaxPooling3D layer
   - [x] AveragePooling1D layer
   - [x] AveragePooling2D layer
   - [x] AveragePooling3D layer
   - [x] GlobalMaxPooling1D layer
   - [x] GlobalMaxPooling2D layer
   - [x] GlobalMaxPooling3D layer
   - [x] GlobalAveragePooling1D layer
   - [x] GlobalAveragePooling2D layer
   - [x] GlobalAveragePooling3D layer

4. Reshaping layers
   - [ ] Reshape layer
   - [x] Flatten layer
   - [ ] RepeatVector layer
   - [ ] Permute layer
   - [ ] Cropping1D layer
   - [ ] Cropping2D layer
   - [ ] Cropping3D layer
   - [ ] UpSampling1D layer
   - [ ] UpSampling2D layer
   - [ ] UpSampling3D layer
   - [ ] ZeroPadding1D layer
   - [ ] ZeroPadding2D layer
   - [ ] ZeroPadding3D layer

5. Regularization layers
   - [x] Dropout layer
   - [x] SpatialDropout1D layer
   - [x] SpatialDropout2D layer
   - [x] SpatialDropout3D layer
   - [x] GaussianDropout layer
   - [ ] GaussianNoise layer
   - [ ] ActivityRegularization layer
   - [x] AlphaDropout layer

6. Recurrent layers
   - [x] LSTM
   - [x] GRU
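
Before visualizing a model, it can be useful to check its layers against the checklist above. The sketch below does this on class names; `unsupported_layers` is a hypothetical helper, the `SUPPORTED` set is transcribed from the checked items, and mapping "Input object" to the class name `InputLayer` is an assumption.

```python
# Class names transcribed from the checked items in the list above.
SUPPORTED = {
    "InputLayer",  # assumed class name for the "Input object" entry
    "Dense", "Activation", "Embedding",
    "Conv1D", "Conv2D", "Conv3D",
    "SeparableConv1D", "SeparableConv2D", "DepthwiseConv2D",
    "Conv1DTranspose", "Conv2DTranspose", "Conv3DTranspose",
    "MaxPooling1D", "MaxPooling2D", "MaxPooling3D",
    "AveragePooling1D", "AveragePooling2D", "AveragePooling3D",
    "GlobalMaxPooling1D", "GlobalMaxPooling2D", "GlobalMaxPooling3D",
    "GlobalAveragePooling1D", "GlobalAveragePooling2D", "GlobalAveragePooling3D",
    "Flatten",
    "Dropout", "SpatialDropout1D", "SpatialDropout2D", "SpatialDropout3D",
    "GaussianDropout", "AlphaDropout",
    "LSTM", "GRU",
}


def unsupported_layers(layer_names):
    """Return the layer class names that are not in the supported set."""
    return [name for name in layer_names if name not in SUPPORTED]


# With a real Keras model the names could be collected as:
#   names = [type(layer).__name__ for layer in model.layers]
names = ["Conv2D", "Reshape", "Dense", "Lambda"]
print(unsupported_layers(names))  # ['Reshape', 'Lambda']
```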

Raw data

{
    "_id": null,
    "home_page": null,
    "name": "LayerViz",
    "maintainer": null,
    "docs_url": null,
    "requires_python": ">=3.6",
    "maintainer_email": null,
    "keywords": "python, keras, visualize, models, layers",
    "author": "Swajay Nandanwade",
    "author_email": "swajaynandanwade@gmail.com",
    "download_url": "https://files.pythonhosted.org/packages/b9/e2/7057545af5e5ab427ba5ed8757e459aeebf041b9a8eac7b92f108bf4d0f6/LayerViz-0.1.1.tar.gz",
    "platform": null,
    "bugtrack_url": null,
    "license": null,
    "summary": "A Python Library for Visualizing Keras Model that covers a variety of Layers",
    "version": "0.1.1",
    "project_urls": null,
    "split_keywords": [
        "python",
        " keras",
        " visualize",
        " models",
        " layers"
    ],
    "urls": [
        {
            "comment_text": "",
            "digests": {
                "blake2b_256": "0b7ba8b6ad0e5e4a5a915864c97663c78a46899fee59345a36e5698085f315a3",
                "md5": "bd302fb21004b0bed959f4157ec5dfdf",
                "sha256": "b7c31a76173e7e4b8f159b3a2a029b4fe1da8a5bcdadf4a20bf7ac872e780e29"
            },
            "downloads": -1,
            "filename": "LayerViz-0.1.1-py3-none-any.whl",
            "has_sig": false,
            "md5_digest": "bd302fb21004b0bed959f4157ec5dfdf",
            "packagetype": "bdist_wheel",
            "python_version": "py3",
            "requires_python": ">=3.6",
            "size": 6613,
            "upload_time": "2024-06-09T14:13:12",
            "upload_time_iso_8601": "2024-06-09T14:13:12.310570Z",
            "url": "https://files.pythonhosted.org/packages/0b/7b/a8b6ad0e5e4a5a915864c97663c78a46899fee59345a36e5698085f315a3/LayerViz-0.1.1-py3-none-any.whl",
            "yanked": false,
            "yanked_reason": null
        },
        {
            "comment_text": "",
            "digests": {
                "blake2b_256": "b9e27057545af5e5ab427ba5ed8757e459aeebf041b9a8eac7b92f108bf4d0f6",
                "md5": "7de391363ab95d873a4cf87c80381594",
                "sha256": "2efacaf201c6c0a19e1209a8190d04f07ad6dc54ee4791d258c4c395e01ef8d7"
            },
            "downloads": -1,
            "filename": "LayerViz-0.1.1.tar.gz",
            "has_sig": false,
            "md5_digest": "7de391363ab95d873a4cf87c80381594",
            "packagetype": "sdist",
            "python_version": "source",
            "requires_python": ">=3.6",
            "size": 6092,
            "upload_time": "2024-06-09T14:13:13",
            "upload_time_iso_8601": "2024-06-09T14:13:13.769839Z",
            "url": "https://files.pythonhosted.org/packages/b9/e2/7057545af5e5ab427ba5ed8757e459aeebf041b9a8eac7b92f108bf4d0f6/LayerViz-0.1.1.tar.gz",
            "yanked": false,
            "yanked_reason": null
        }
    ],
    "upload_time": "2024-06-09 14:13:13",
    "github": false,
    "gitlab": false,
    "bitbucket": false,
    "codeberg": false,
    "lcname": "layerviz"
}