# 🦉 AIProxy

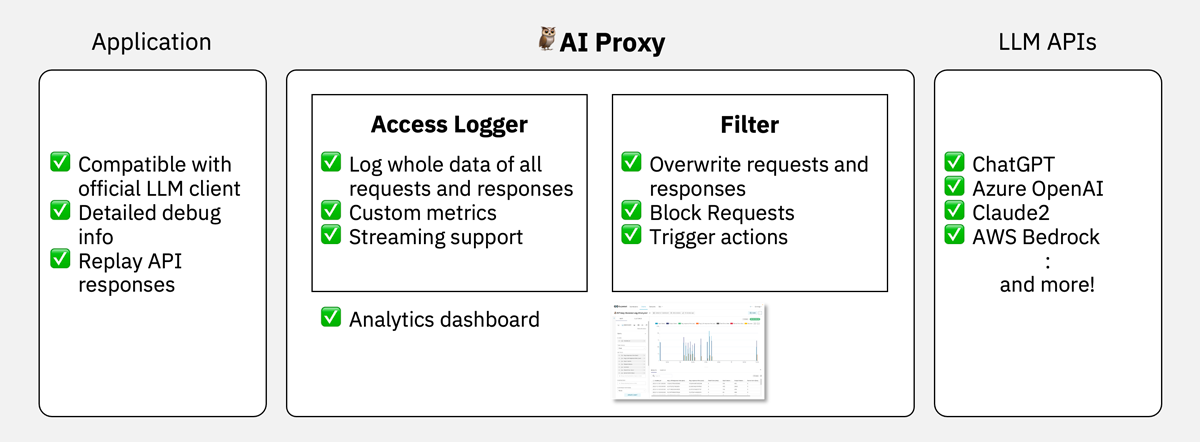

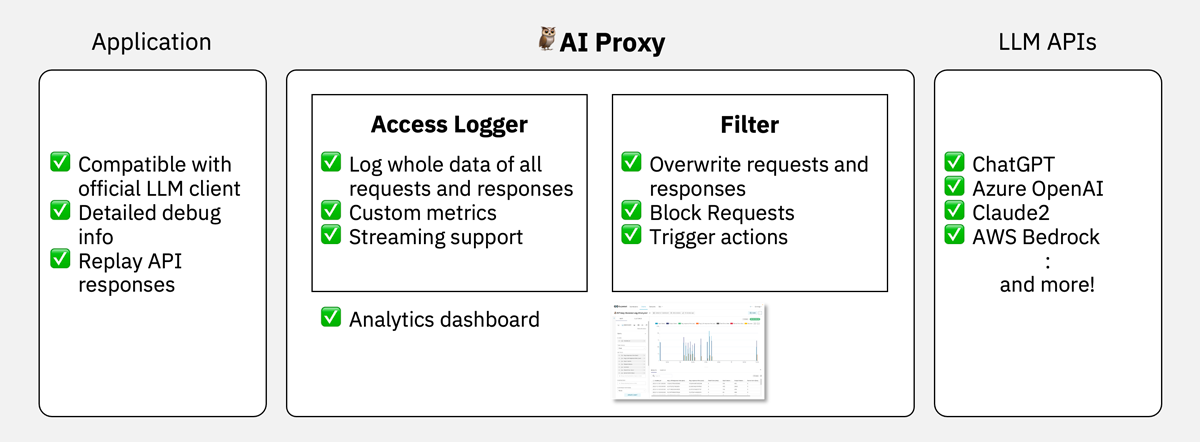

🦉 **AIProxy** is a Python library that serves as a reverse proxy LLM APIs including ChatGPT and Claude2. It provides enhanced features like monitoring, logging, and filtering requests and responses. This library is especially useful for developers and administrators who need detailed oversight and control over the interaction with LLM APIs.

- ✅ Streaming support: Logs every bit of request and response data with token count – never miss a beat! 💓

- ✅ Custom monitoring: Tailor-made for logging any specific info you fancy. Make it your own! 🔍

- ✅ Custom filtering: Flexibly blocks access based on specific info or sends back your own responses. Be in control! 🛡️

- ✅ Multiple AI Services: Supports ChatGPT (OpenAI and Azure OpenAI Service), Claude2 on AWS Bedrock, and is extensible by yourself! 🤖

- ✅ Express dashboard: We provide template for [Apache Superset](https://superset.apache.org) that's ready to use right out of the box – get insights quickly and efficiently! 📊

## 🚀 Quick start

Install.

```sh

$ pip install aiproxy-python

```

Run.

```sh

$ python -m aiproxy [--host host] [--port port] [--openai_api_key OPENAI_API_KEY]

```

Use.

```python

import openai

client = openai.Client(base_url="http://127.0.0.1:8000/openai", api_key="YOUR_API_KEY")

resp = client.chat.completions.create(model="gpt-3.5-turbo", messages=[{"role": "user", "content": "hello!"}])

print(resp)

```

Enjoy😊🦉

## 🏅 Use official client libraries

You can use the official client libraries for each LLM by just changing API endpoint url.

### ChatGPT

Set `http|https://your_host/openai` as `base_url` to client.

```python

import openai

client = openai.Client(

api_key="YOUR_API_KEY",

base_url="http://127.0.0.1:8000/openai"

)

resp = client.chat.completions.create(

model="gpt-3.5-turbo",

messages=[{"role": "user", "content": "hello!"}]

)

print(resp)

```

### Anthropic Claude

Set `http|https://your_host/anthropic` as `base_url` to client.

```python

from anthropic import Anthropic

client = Anthropic(

api_key="YOUR_API_KEY",

base_url="http://127.0.0.1:8000/anthropic"

)

resp = client.messages.create(

max_tokens=1024,

messages=[{"role": "user", "content": "Hello, Claude",}],

model="claude-3-haiku-20240307",

)

print(resp.content)

```

### Google Gemini (AI Studio)

API itself is compatible but client of `google.generativeai` doesn't support rewriting urls. Use httpx instead.

```python

import httpx

resp = httpx.post(

url="http://127.0.0.1:8000/googleaistudio/v1beta/models/gemini-1.5-pro-latest:generateContent",

json={

"contents": [{"role": "user", "parts":[{"text": "Hello, Gemini!"}]}],

"generationConfig": {"temperature": 0.5, "maxOutputTokens": 1000}

}

)

print(resp.json())

```

## 🛠️ Custom entrypoint

To customize **🦉AIProxy**, make your custom entrypoint first. You can customize the metrics you want to monitor, add filters, change databases, etc.

```python

from contextlib import asynccontextmanager

import threading

from fastapi import FastAPI

from aiproxy import AccessLogWorker

from aiproxy.chatgpt import ChatGPTProxy

from aiproxy.anthropic_claude import ClaudeProxy

from aiproxy.gemini import GeminiProxy

# Setup access log worker

worker = AccessLogWorker(connection_str="sqlite:///aiproxy.db")

# Setup server application

@asynccontextmanager

async def lifespan(app: FastAPI):

# Start access log worker

threading.Thread(target=worker.run, daemon=True).start()

yield

# Stop access log worker

worker.queue_client.put(None)

app = FastAPI(lifespan=lifespan, docs_url=None, redoc_url=None, openapi_url=None)

# Proxy for ChatGPT

chatgpt_proxy = ChatGPTProxy(

api_key=OPENAI_API_KEY,

access_logger_queue=worker.queue_client

)

chatgpt_proxy.add_route(app)

# Proxy for Anthropic Claude

claude_proxy = ClaudeProxy(

api_key=ANTHROPIC_API_KEY,

access_logger_queue=worker.queue_client

)

claude_proxy.add_route(app)

# Proxy for Gemini on Google AI Studio (not Vertex AI)

gemini_proxy = GeminiProxy(

api_key=GOOGLE_API_KEY,

access_logger_queue=worker.queue_client

)

gemini_proxy.add_route(app)

```

Run with uvicorn with some params if you need.

```sh

$ uvicorn run:app --host 0.0.0.0 --port 8000

```

## 🔍 Monitoring

By default, see `accesslog` table in `aiproxy.db`. If you want to use other RDBMS like PostgreSQL, set SQLAlchemy-formatted connection string as `connection_str` argument when instancing `AccessLogWorker`.

And, you can customize log format as below:

This is an example to add `user` column to request log. In this case, the customized log are stored into table named `customaccesslog`, the lower case of your custom access log class.

```python

from sqlalchemy import Column, String

from aiproxy.accesslog import AccessLogBase, AccessLogWorker

# Make custom schema for database

class CustomAccessLog(AccessLogBase):

user = Column(String)

# Make data mapping logic from HTTP headar/body to log

class CustomGPTRequestItem(ChatGPTRequestItem):

def to_accesslog(self, accesslog_cls: _AccessLogBase) -> _AccessLogBase:

accesslog = super().to_accesslog(accesslog_cls)

# In this case, set value of "x-user-id" in request header to newly added colmun "user"

accesslog.user = self.request_headers.get("x-user-id")

return accesslog

# Make worker with custom log schema

worker = AccessLogWorker(accesslog_cls=CustomAccessLog)

# Make proxy with your custom request item

proxy = ChatGPTProxy(

api_key=YOUR_API_KEY,

access_logger_queue=worker.queue_client,

request_item_class=CustomGPTRequestItem

)

```

NOTE: By default `AccessLog`, OpenAI API Key in the request headers is masked.

## 🛡️ Filtering

The filter receives all requests and responses, allowing you to view and modify their content. For example:

- Detect and protect from misuse: From unknown apps, unauthorized users, etc.

- Trigger custom actions: Doing something triggered by a request.

This is an example for custom request filter that protects the service from banned user. uezo will receive "you can't use this service" as the ChatGPT response.

```python

from typing import Union

from aiproxy import RequestFilterBase

class BannedUserFilter(RequestFilterBase):

async def filter(self, request_id: str, request_json: dict, request_headers: dict) -> Union[str, None]:

banned_user = ["uezo"]

user = request_json.get("user")

# Return string message to return response right after this filter ends (not to call ChatGPT)

if not user:

return "user is required"

elif user in banned_user:

return "you can't use this service"

# Enable this filter

proxy.add_filter(BannedUserFilter())

```

Try it.

```python

resp = client.chat.completions.create(model="gpt-3.5-turbo", messages=messages, user="uezo")

print(resp)

```

```sh

ChatCompletion(id='-', choices=[Choice(finish_reason='stop', index=0, message=ChatCompletionMessage(content="you can't use this service", role='assistant', function_call=None, tool_calls=None))], created=0, model='request_filter', object='chat.completion', system_fingerprint=None, usage=CompletionUsage(completion_tokens=0, prompt_tokens=0, total_tokens=0))

```

Another example is the model overwriter that forces the user to use GPT-3.5-Turbo.

```python

class ModelOverwriteFilter(RequestFilterBase):

async def filter(self, request_id: str, request_json: dict, request_headers: dict) -> Union[str, None]:

request_model = request_json["model"]

if not request_model.startswith("gpt-3.5"):

print(f"Change model from {request_model} -> gpt-3.5-turbo")

# Overwrite request_json

request_json["model"] = "gpt-3.5-turbo"

```

Lastly, `ReplayFilter` that retrieves content for a specific request_id from the histories. This is an exceptionally cool feature for developers to test AI-based applications.

```python

class ReplayFilter(RequestFilterBase):

async def filter(self, request_id: str, request_json: dict, request_headers: dict) -> Union[str, None]:

# Get request_id to replay from request header

request_id = request_headers.get("x-aiproxy-replay")

if not request_id:

return

db = worker.get_session()

try:

# Get and return the response content from histories

r = db.query(AccessLog).where(AccessLog.request_id == request_id, AccessLog.direction == "response").first()

if r:

return r.content

else:

return "Record not found for {request_id}"

except Exception as ex:

logger.error(f"Error at ReplayFilter: {str(ex)}\n{traceback.format_exc()}")

return "Error at getting response for {request_id}"

finally:

db.close()

```

`request_id` is included in HTTP response headers as `x-aiproxy-request-id`.

NOTE: **Response** filter doesn't work when `stream=True`.

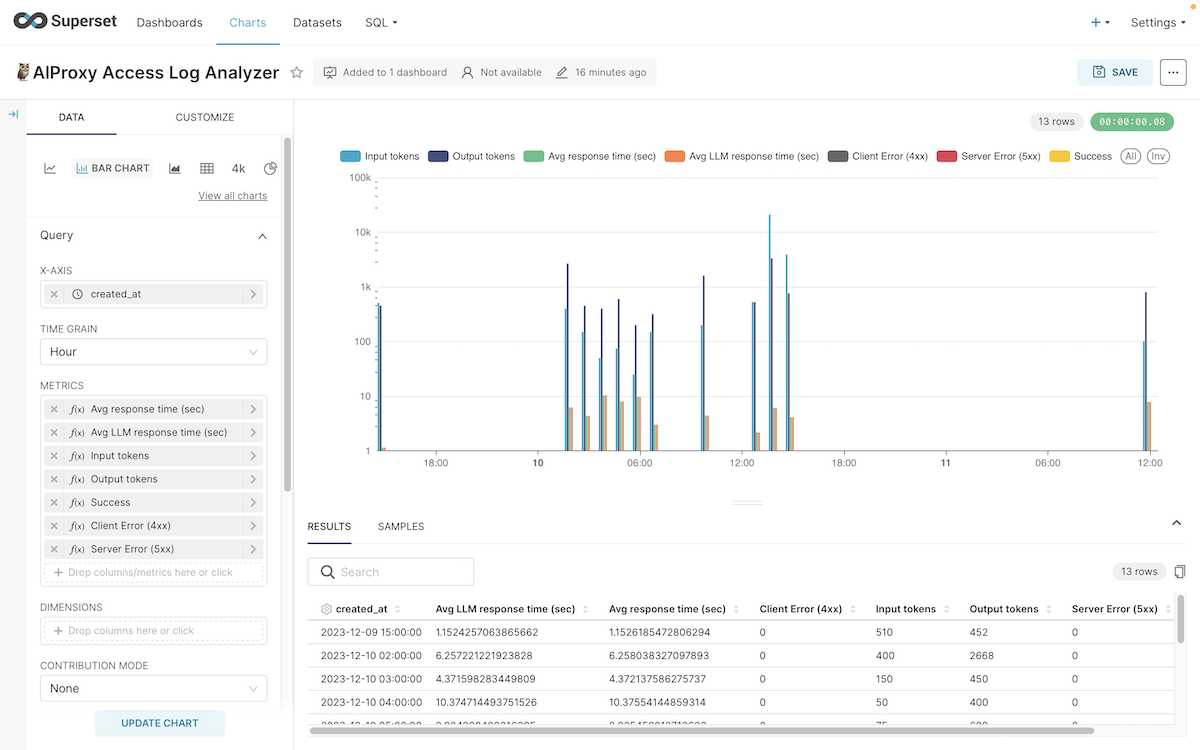

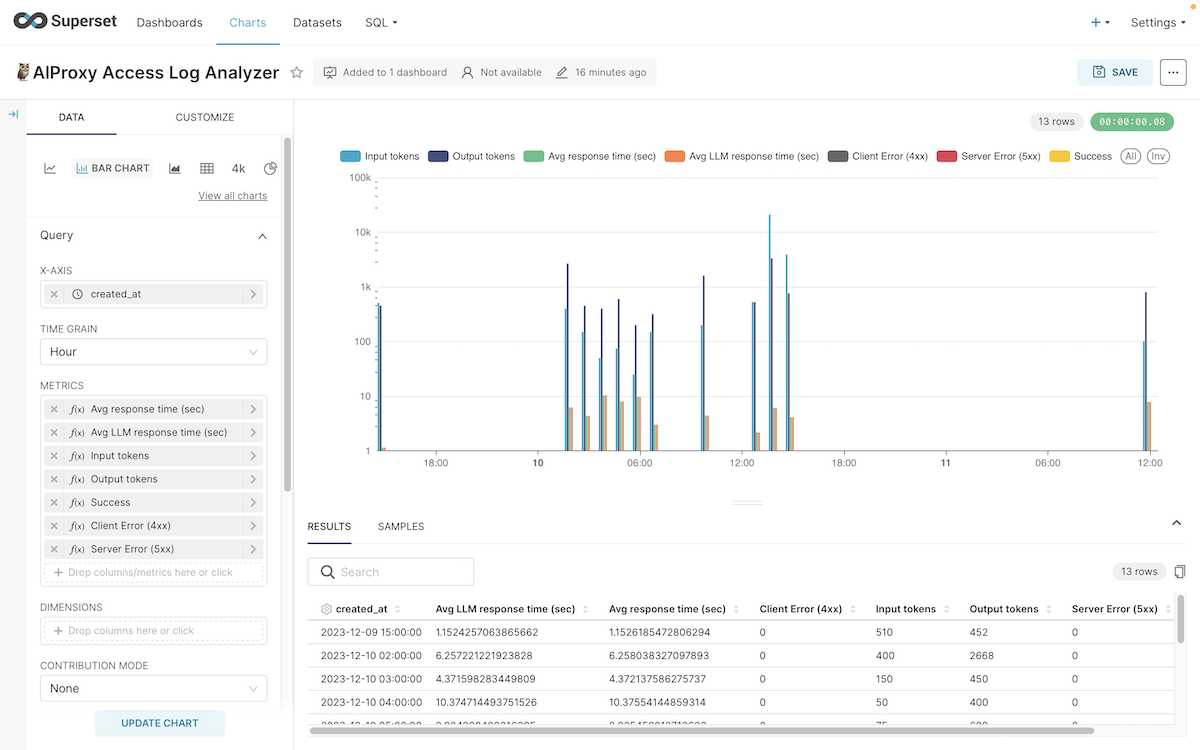

## 📊 Dashboard

We provide an Apache Superset template as our express dashboard. Please follow the steps below to set up.

Install Superset.

```sh

$ pip install apache-superset

```

Get dashboard.zip from release page and extract it to the same directory as aiproxy.db.

https://github.com/uezo/aiproxy/releases/tag/v0.3.0

Set required environment variables.

```sh

$ export SUPERSET_CONFIG_PATH=$(pwd)/dashboard/superset_config.py

$ export FLASK_APP=superset

```

Make database.

```sh

$ superset db upgrade

```

Create admin user. Change username and password as you like.

```sh

$ superset fab create-admin --username admin --firstname AIProxyAdmin --lastname AIProxyAdmin --email admin@localhost --password admin

```

Initialize Superset.

```sh

$ superset init

```

Import 🦉AIProxy dashboard template. Execute this command in the same directory as aiproxy.db. If you execute from a different location, open the Database connections page in the Superset after completing these steps and modify the database connection string to the absolute path.

```sh

$ superset import-directory dashboard/resources

```

Start Superset.

```sh

$ superset run -p 8088

```

Open and customize the dashboard to your liking, including the metrics you want to monitor and their conditions.👍

http://localhost:8088

📕 Superset official docs: https://superset.apache.org/docs/intro

## 💡 Tips

### CORS

Configure CORS if you call API from web apps. https://fastapi.tiangolo.com/tutorial/cors/

### Database

You can use other RDBMS that is supported by SQLAlchemy. You can use them by just changing connection string. (and, install client libraries required.)

#### Example for PostgreSQL🐘

```sh

$ pip install psycopg2-binary

```

```python

# connection_str = "sqlite:///aiproxy.db"

connection_str = f"postgresql://{USER}:{PASSWORD}@{HOST}:{PORT}/{DATABASE}"

worker = AccessLogWorker(connection_str=connection_str)

```

#### Example for SQL Server or Azure SQL Database

This is a temporary workaroud from AIProxy >= 0.3.6. Set `AIPROXY_USE_NVARCHAR=1` to use NVARCHAR internally.

```sh

$ export AIPROXY_USE_NVARCHAR=1

```

Install ODBC driver (version 18 in this example) and `pyodbc` then set connection string as follows:

```python

# connection_str = "sqlite:///aiproxy.db"

connection_str = f"mssql+pyodbc:///?odbc_connect=DRIVER={ODBC Driver 18 for SQL Server};SERVER=YOUR_SERVER;PORT=1433;DATABASE=YOUR_DB;UID=YOUR_UID;PWD=YOUR_PWD"

worker = AccessLogWorker(connection_str=connection_str)

```

### Azure OpenAI

To use Azure OpenAI, use `AzureOpenAIProxy` instead of `ChatGPTProxy`.

```python

from aiproxy.chatgpt import AzureOpenAIProxy

aoai_proxy = AzureOpenAIProxy(

api_key="YOUR_API_KEY",

resource_name="YOUR_RESOURCE_NAME",

deployment_id="YOUR_DEPLOYMENT_ID",

api_version="2024-02-01", # https://learn.microsoft.com/ja-jp/azure/ai-services/openai/reference#chat-completions

access_logger_queue=worker.queue_client

)

aoai_proxy.add_route(app)

```

Clients do not need to be aware that it is Azure OpenAI; use the same code for ChatGPT API.

### Amazon Bedrock

To use Claude on Amazon Bedrock, use `BedrockClaudeProxy` instead of `ClaudeProxy`.

```python

from aiproxy.bedrock_claude import BedrockClaudeProxy

bedrock_claude_proxy = BedrockClaudeProxy(

aws_access_key_id="YOUR_AWS_ACCESS_KEY_ID",

aws_secret_access_key="YOUR_AWS_SECRET_ACCESS_KEY",

region_name="YOUR_REGION",

access_logger_queue=worker.queue_client

)

bedrock_claude_proxy.add_route(app)

```

Client side. We test API with `AnthropicBedrock`.

```python

# Make client with `base_url`

client = anthropic.AnthropicBedrock(

aws_secret_key="dummy_aws_secret_access_key",

aws_access_key="dummy_aws_access_key_id",

aws_region="dummy_region_name",

base_url="http://127.0.0.1:8000/bedrock-claude"

)

resp = client.messages.create(

model="anthropic.claude-3-haiku-20240307-v1:0",

messages=[{"role": "user", "content": [{"type": "text", "text": "こんにちは!"}]}],

max_tokens=512,

stream=True

)

for r in resp:

print(r)

```

## 🛟 Support

For support, questions, or contributions, please open an issue in the GitHub repository. Please contact me directly when you need an enterprise or business support😊.

## ⚖️ License

**🦉AIProxy** is released under the [Apache License v2](LICENSE).

Made with ❤️ by Uezo, the representive of Unagiken.

Raw data

{

"_id": null,

"home_page": "https://github.com/uezo/aiproxy",

"name": "aiproxy-python",

"maintainer": "uezo",

"docs_url": null,

"requires_python": null,

"maintainer_email": "uezo@uezo.net",

"keywords": null,

"author": "uezo",

"author_email": "uezo@uezo.net",

"download_url": null,

"platform": null,

"description": "# \ud83e\udd89 AIProxy\n\n\ud83e\udd89 **AIProxy** is a Python library that serves as a reverse proxy LLM APIs including ChatGPT and Claude2. It provides enhanced features like monitoring, logging, and filtering requests and responses. This library is especially useful for developers and administrators who need detailed oversight and control over the interaction with LLM APIs.\n\n- \u2705 Streaming support: Logs every bit of request and response data with token count \u2013 never miss a beat! \ud83d\udc93\n- \u2705 Custom monitoring: Tailor-made for logging any specific info you fancy. Make it your own! \ud83d\udd0d\n- \u2705 Custom filtering: Flexibly blocks access based on specific info or sends back your own responses. Be in control! \ud83d\udee1\ufe0f\n- \u2705 Multiple AI Services: Supports ChatGPT (OpenAI and Azure OpenAI Service), Claude2 on AWS Bedrock, and is extensible by yourself! \ud83e\udd16\n- \u2705 Express dashboard: We provide template for [Apache Superset](https://superset.apache.org) that's ready to use right out of the box \u2013 get insights quickly and efficiently! \ud83d\udcca\n\n\n\n## \ud83d\ude80 Quick start\n\nInstall.\n\n```sh\n$ pip install aiproxy-python\n```\n\nRun.\n\n```sh\n$ python -m aiproxy [--host host] [--port port] [--openai_api_key OPENAI_API_KEY]\n```\n\nUse.\n\n```python\nimport openai\nclient = openai.Client(base_url=\"http://127.0.0.1:8000/openai\", api_key=\"YOUR_API_KEY\")\nresp = client.chat.completions.create(model=\"gpt-3.5-turbo\", messages=[{\"role\": \"user\", \"content\": \"hello!\"}])\nprint(resp)\n```\n\nEnjoy\ud83d\ude0a\ud83e\udd89\n\n\n## \ud83c\udfc5 Use official client libraries\n\nYou can use the official client libraries for each LLM by just changing API endpoint url.\n\n### ChatGPT\n\nSet `http|https://your_host/openai` as `base_url` to client.\n\n```python\nimport openai\n\nclient = openai.Client(\n api_key=\"YOUR_API_KEY\",\n base_url=\"http://127.0.0.1:8000/openai\"\n)\n\nresp = client.chat.completions.create(\n model=\"gpt-3.5-turbo\",\n messages=[{\"role\": \"user\", \"content\": \"hello!\"}]\n)\n\nprint(resp)\n```\n\n### Anthropic Claude\n\nSet `http|https://your_host/anthropic` as `base_url` to client.\n\n```python\nfrom anthropic import Anthropic\n\nclient = Anthropic(\n api_key=\"YOUR_API_KEY\",\n base_url=\"http://127.0.0.1:8000/anthropic\"\n)\n\nresp = client.messages.create(\n max_tokens=1024,\n messages=[{\"role\": \"user\", \"content\": \"Hello, Claude\",}],\n model=\"claude-3-haiku-20240307\",\n)\n\nprint(resp.content)\n```\n\n### Google Gemini (AI Studio)\n\nAPI itself is compatible but client of `google.generativeai` doesn't support rewriting urls. Use httpx instead.\n\n```python\nimport httpx\n\nresp = httpx.post(\n url=\"http://127.0.0.1:8000/googleaistudio/v1beta/models/gemini-1.5-pro-latest:generateContent\",\n json={\n \"contents\": [{\"role\": \"user\", \"parts\":[{\"text\": \"Hello, Gemini!\"}]}],\n \"generationConfig\": {\"temperature\": 0.5, \"maxOutputTokens\": 1000}\n }\n)\n\nprint(resp.json())\n```\n\n\n## \ud83d\udee0\ufe0f Custom entrypoint\n\nTo customize **\ud83e\udd89AIProxy**, make your custom entrypoint first. You can customize the metrics you want to monitor, add filters, change databases, etc.\n\n```python\nfrom contextlib import asynccontextmanager\nimport threading\nfrom fastapi import FastAPI\nfrom aiproxy import AccessLogWorker\nfrom aiproxy.chatgpt import ChatGPTProxy\nfrom aiproxy.anthropic_claude import ClaudeProxy\nfrom aiproxy.gemini import GeminiProxy\n\n# Setup access log worker\nworker = AccessLogWorker(connection_str=\"sqlite:///aiproxy.db\")\n\n# Setup server application\n@asynccontextmanager\nasync def lifespan(app: FastAPI):\n # Start access log worker\n threading.Thread(target=worker.run, daemon=True).start()\n yield\n # Stop access log worker\n worker.queue_client.put(None)\n\napp = FastAPI(lifespan=lifespan, docs_url=None, redoc_url=None, openapi_url=None)\n\n# Proxy for ChatGPT\nchatgpt_proxy = ChatGPTProxy(\n api_key=OPENAI_API_KEY,\n access_logger_queue=worker.queue_client\n)\nchatgpt_proxy.add_route(app)\n\n# Proxy for Anthropic Claude\nclaude_proxy = ClaudeProxy(\n api_key=ANTHROPIC_API_KEY,\n access_logger_queue=worker.queue_client\n)\nclaude_proxy.add_route(app)\n\n# Proxy for Gemini on Google AI Studio (not Vertex AI)\ngemini_proxy = GeminiProxy(\n api_key=GOOGLE_API_KEY,\n access_logger_queue=worker.queue_client\n)\ngemini_proxy.add_route(app)\n```\n\nRun with uvicorn with some params if you need.\n\n```sh\n$ uvicorn run:app --host 0.0.0.0 --port 8000\n```\n\n\n## \ud83d\udd0d Monitoring\n\nBy default, see `accesslog` table in `aiproxy.db`. If you want to use other RDBMS like PostgreSQL, set SQLAlchemy-formatted connection string as `connection_str` argument when instancing `AccessLogWorker`.\n\nAnd, you can customize log format as below:\n\nThis is an example to add `user` column to request log. In this case, the customized log are stored into table named `customaccesslog`, the lower case of your custom access log class.\n\n```python\nfrom sqlalchemy import Column, String\nfrom aiproxy.accesslog import AccessLogBase, AccessLogWorker\n\n# Make custom schema for database\nclass CustomAccessLog(AccessLogBase):\n user = Column(String)\n\n# Make data mapping logic from HTTP headar/body to log\nclass CustomGPTRequestItem(ChatGPTRequestItem):\n def to_accesslog(self, accesslog_cls: _AccessLogBase) -> _AccessLogBase:\n accesslog = super().to_accesslog(accesslog_cls)\n\n # In this case, set value of \"x-user-id\" in request header to newly added colmun \"user\"\n accesslog.user = self.request_headers.get(\"x-user-id\")\n\n return accesslog\n\n# Make worker with custom log schema\nworker = AccessLogWorker(accesslog_cls=CustomAccessLog)\n\n# Make proxy with your custom request item\nproxy = ChatGPTProxy(\n api_key=YOUR_API_KEY,\n access_logger_queue=worker.queue_client,\n request_item_class=CustomGPTRequestItem\n)\n```\n\nNOTE: By default `AccessLog`, OpenAI API Key in the request headers is masked.\n\n\n\n## \ud83d\udee1\ufe0f Filtering\n\nThe filter receives all requests and responses, allowing you to view and modify their content. For example:\n\n- Detect and protect from misuse: From unknown apps, unauthorized users, etc.\n- Trigger custom actions: Doing something triggered by a request.\n\nThis is an example for custom request filter that protects the service from banned user. uezo will receive \"you can't use this service\" as the ChatGPT response.\n\n```python\nfrom typing import Union\nfrom aiproxy import RequestFilterBase\n\nclass BannedUserFilter(RequestFilterBase):\n async def filter(self, request_id: str, request_json: dict, request_headers: dict) -> Union[str, None]:\n banned_user = [\"uezo\"]\n user = request_json.get(\"user\")\n\n # Return string message to return response right after this filter ends (not to call ChatGPT)\n if not user:\n return \"user is required\"\n elif user in banned_user:\n return \"you can't use this service\"\n\n# Enable this filter\nproxy.add_filter(BannedUserFilter())\n```\n\nTry it.\n\n```python\nresp = client.chat.completions.create(model=\"gpt-3.5-turbo\", messages=messages, user=\"uezo\")\nprint(resp)\n```\n```sh\nChatCompletion(id='-', choices=[Choice(finish_reason='stop', index=0, message=ChatCompletionMessage(content=\"you can't use this service\", role='assistant', function_call=None, tool_calls=None))], created=0, model='request_filter', object='chat.completion', system_fingerprint=None, usage=CompletionUsage(completion_tokens=0, prompt_tokens=0, total_tokens=0))\n```\n\nAnother example is the model overwriter that forces the user to use GPT-3.5-Turbo.\n\n```python\nclass ModelOverwriteFilter(RequestFilterBase):\n async def filter(self, request_id: str, request_json: dict, request_headers: dict) -> Union[str, None]:\n request_model = request_json[\"model\"]\n if not request_model.startswith(\"gpt-3.5\"):\n print(f\"Change model from {request_model} -> gpt-3.5-turbo\")\n # Overwrite request_json\n request_json[\"model\"] = \"gpt-3.5-turbo\"\n```\n\nLastly, `ReplayFilter` that retrieves content for a specific request_id from the histories. This is an exceptionally cool feature for developers to test AI-based applications.\n\n```python\nclass ReplayFilter(RequestFilterBase):\n async def filter(self, request_id: str, request_json: dict, request_headers: dict) -> Union[str, None]:\n # Get request_id to replay from request header\n request_id = request_headers.get(\"x-aiproxy-replay\")\n if not request_id:\n return\n \n db = worker.get_session()\n try:\n # Get and return the response content from histories\n r = db.query(AccessLog).where(AccessLog.request_id == request_id, AccessLog.direction == \"response\").first()\n if r:\n return r.content\n else:\n return \"Record not found for {request_id}\"\n \n except Exception as ex:\n logger.error(f\"Error at ReplayFilter: {str(ex)}\\n{traceback.format_exc()}\")\n return \"Error at getting response for {request_id}\"\n \n finally:\n db.close()\n```\n\n`request_id` is included in HTTP response headers as `x-aiproxy-request-id`.\n\nNOTE: **Response** filter doesn't work when `stream=True`.\n\n\n## \ud83d\udcca Dashboard\n\nWe provide an Apache Superset template as our express dashboard. Please follow the steps below to set up.\n\n\n\nInstall Superset.\n\n```sh\n$ pip install apache-superset\n```\n\nGet dashboard.zip from release page and extract it to the same directory as aiproxy.db.\n\nhttps://github.com/uezo/aiproxy/releases/tag/v0.3.0\n\n\nSet required environment variables.\n\n```sh\n$ export SUPERSET_CONFIG_PATH=$(pwd)/dashboard/superset_config.py\n$ export FLASK_APP=superset\n```\n\nMake database.\n\n```sh\n$ superset db upgrade\n```\n\nCreate admin user. Change username and password as you like.\n\n```sh\n$ superset fab create-admin --username admin --firstname AIProxyAdmin --lastname AIProxyAdmin --email admin@localhost --password admin\n```\n\nInitialize Superset.\n\n```sh\n$ superset init\n```\n\nImport \ud83e\udd89AIProxy dashboard template. Execute this command in the same directory as aiproxy.db. If you execute from a different location, open the Database connections page in the Superset after completing these steps and modify the database connection string to the absolute path.\n\n```sh\n$ superset import-directory dashboard/resources\n```\n\nStart Superset.\n\n```sh\n$ superset run -p 8088\n```\n\nOpen and customize the dashboard to your liking, including the metrics you want to monitor and their conditions.\ud83d\udc4d\n\nhttp://localhost:8088\n\n\ud83d\udcd5 Superset official docs: https://superset.apache.org/docs/intro\n\n\n## \ud83d\udca1 Tips\n\n### CORS\n\nConfigure CORS if you call API from web apps. https://fastapi.tiangolo.com/tutorial/cors/\n\n\n### Database\n\nYou can use other RDBMS that is supported by SQLAlchemy. You can use them by just changing connection string. (and, install client libraries required.)\n\n#### Example for PostgreSQL\ud83d\udc18\n\n```sh\n$ pip install psycopg2-binary\n```\n\n```python\n# connection_str = \"sqlite:///aiproxy.db\"\nconnection_str = f\"postgresql://{USER}:{PASSWORD}@{HOST}:{PORT}/{DATABASE}\"\n\nworker = AccessLogWorker(connection_str=connection_str)\n```\n\n#### Example for SQL Server or Azure SQL Database\n\nThis is a temporary workaroud from AIProxy >= 0.3.6. Set `AIPROXY_USE_NVARCHAR=1` to use NVARCHAR internally.\n\n```sh\n$ export AIPROXY_USE_NVARCHAR=1\n```\n\nInstall ODBC driver (version 18 in this example) and `pyodbc` then set connection string as follows:\n\n```python\n# connection_str = \"sqlite:///aiproxy.db\"\nconnection_str = f\"mssql+pyodbc:///?odbc_connect=DRIVER={ODBC Driver 18 for SQL Server};SERVER=YOUR_SERVER;PORT=1433;DATABASE=YOUR_DB;UID=YOUR_UID;PWD=YOUR_PWD\"\n\nworker = AccessLogWorker(connection_str=connection_str)\n```\n\n### Azure OpenAI\n\nTo use Azure OpenAI, use `AzureOpenAIProxy` instead of `ChatGPTProxy`.\n\n```python\nfrom aiproxy.chatgpt import AzureOpenAIProxy\n\naoai_proxy = AzureOpenAIProxy(\n api_key=\"YOUR_API_KEY\",\n resource_name=\"YOUR_RESOURCE_NAME\",\n deployment_id=\"YOUR_DEPLOYMENT_ID\",\n api_version=\"2024-02-01\", # https://learn.microsoft.com/ja-jp/azure/ai-services/openai/reference#chat-completions\n access_logger_queue=worker.queue_client\n)\naoai_proxy.add_route(app)\n```\n\nClients do not need to be aware that it is Azure OpenAI; use the same code for ChatGPT API.\n\n\n### Amazon Bedrock\n\nTo use Claude on Amazon Bedrock, use `BedrockClaudeProxy` instead of `ClaudeProxy`.\n\n```python\nfrom aiproxy.bedrock_claude import BedrockClaudeProxy\n\nbedrock_claude_proxy = BedrockClaudeProxy(\n aws_access_key_id=\"YOUR_AWS_ACCESS_KEY_ID\",\n aws_secret_access_key=\"YOUR_AWS_SECRET_ACCESS_KEY\",\n region_name=\"YOUR_REGION\",\n access_logger_queue=worker.queue_client\n)\nbedrock_claude_proxy.add_route(app)\n```\n\n\nClient side. We test API with `AnthropicBedrock`.\n\n```python\n# Make client with `base_url`\nclient = anthropic.AnthropicBedrock(\n aws_secret_key=\"dummy_aws_secret_access_key\",\n aws_access_key=\"dummy_aws_access_key_id\",\n aws_region=\"dummy_region_name\",\n base_url=\"http://127.0.0.1:8000/bedrock-claude\"\n)\n\nresp = client.messages.create(\n model=\"anthropic.claude-3-haiku-20240307-v1:0\",\n messages=[{\"role\": \"user\", \"content\": [{\"type\": \"text\", \"text\": \"\u3053\u3093\u306b\u3061\u306f\uff01\"}]}],\n max_tokens=512,\n stream=True\n)\n\nfor r in resp:\n print(r)\n```\n\n\n## \ud83d\udedf Support\n\nFor support, questions, or contributions, please open an issue in the GitHub repository. Please contact me directly when you need an enterprise or business support\ud83d\ude0a.\n\n\n## \u2696\ufe0f License\n\n**\ud83e\udd89AIProxy** is released under the [Apache License v2](LICENSE).\n\nMade with \u2764\ufe0f by Uezo, the representive of Unagiken.\n",

"bugtrack_url": null,

"license": "Apache v2",

"summary": "\ud83e\udd89AIProxy is a reverse proxy for ChatGPT API that provides monitoring, logging, and filtering requests and responses.",

"version": "0.4.3",

"project_urls": {

"Homepage": "https://github.com/uezo/aiproxy"

},

"split_keywords": [],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "8d7b4758ca67bca2de791674e3d75e2633f5530e09204445c0a5b4bf05025f18",

"md5": "e8bfabcc4da4d0b59ff9e6ae40c80856",

"sha256": "e15c28a28b56d46fa9b5f78007530eec819da4577b520e0f9b3bc8b868625d28"

},

"downloads": -1,

"filename": "aiproxy_python-0.4.3-py3-none-any.whl",

"has_sig": false,

"md5_digest": "e8bfabcc4da4d0b59ff9e6ae40c80856",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": null,

"size": 29749,

"upload_time": "2024-05-06T14:31:16",

"upload_time_iso_8601": "2024-05-06T14:31:16.412738Z",

"url": "https://files.pythonhosted.org/packages/8d/7b/4758ca67bca2de791674e3d75e2633f5530e09204445c0a5b4bf05025f18/aiproxy_python-0.4.3-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-05-06 14:31:16",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "uezo",

"github_project": "aiproxy",

"travis_ci": false,

"coveralls": false,

"github_actions": false,

"requirements": [

{

"name": "openai",

"specs": [

[

"==",

"1.3.6"

]

]

},

{

"name": "fastapi",

"specs": [

[

"==",

"0.103.2"

]

]

},

{

"name": "sse-starlette",

"specs": [

[

"==",

"1.8.2"

]

]

},

{

"name": "uvicorn",

"specs": [

[

"==",

"0.23.2"

]

]

},

{

"name": "tiktoken",

"specs": [

[

"==",

"0.5.1"

]

]

},

{

"name": "SQLAlchemy",

"specs": [

[

"==",

"2.0.23"

]

]

}

],

"lcname": "aiproxy-python"

}