## ⚠️ Discontinuation of project

> In August 2024, Astronomer [donated the features available in this provider](https://github.com/astronomer/astro-provider-databricks/pull/84) to the [Apache Airflow repository](https://github.com/apache/airflow/tree/main/providers/databricks).

> The features in this repository are available in `apache-airflow-providers-databricks>=6.8.0`.

> This repository is no longer actively maintained by Astronomer, and the code is kept here for historical purposes only.

> You can still contribute bug fixes and improvements at this project's [new home](https://github.com/apache/airflow/tree/main/providers/databricks), under the terms of its license.

> Please note that this deprecated version of the provider may not work with the latest dependencies or platforms, and it could contain security vulnerabilities. Astronomer cannot offer guarantees or warranties for its use.

> Thanks for being part of the open-source journey and helping keep great ideas alive!
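To check whether an environment already has a provider release that includes these features, a minimal sketch along these lines may help. It assumes the distribution name `apache-airflow-providers-databricks` and the 6.8.0 minimum stated in the notice above. `parse_release` and `provider_has_donated_features` are hypothetical helper names, and pre-release suffixes such as `rc1` are ignored for simplicity.

```python
from importlib.metadata import PackageNotFoundError, version

# Values taken from the notice above.
PROVIDER_DIST = "apache-airflow-providers-databricks"
MIN_PROVIDER_VERSION = (6, 8, 0)


def parse_release(version_string: str) -> tuple:
    """Turn '6.8.0' or '6.10.0rc1' into a 3-part tuple of integers.

    Only the leading digits of each dotted part are kept, so pre-release
    suffixes are dropped (a simplification, not full PEP 440 parsing).
    """
    parts = []
    for piece in version_string.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    # Pad short versions such as '6.8' so tuple comparison behaves as expected.
    parts += [0] * (3 - len(parts))
    return tuple(parts[:3])


def provider_has_donated_features(dist_name: str = PROVIDER_DIST) -> bool:
    """Return True if the installed provider is at least MIN_PROVIDER_VERSION."""
    try:
        installed = version(dist_name)
    except PackageNotFoundError:
        # The provider is not installed in this environment.
        return False
    return parse_release(installed) >= MIN_PROVIDER_VERSION


if __name__ == "__main__":
    print(provider_has_donated_features())
```

If the check returns `False`, upgrading the official provider is likely the first step before changing any imports.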

For the operators and sensors that are deprecated in this repository, migrating to the official Apache Airflow Databricks Provider only requires changing the import path in your DAG code, as shown in the table below. Note that the plugin class is also renamed.

| Previous import path (deprecated) | Suggested import path |
|-------------------------------------------------------------------------------|-------------------------------------------------------------------------------------------------------------|
| `from astro_databricks.operators.notebook import DatabricksNotebookOperator` | `from airflow.providers.databricks.operators.databricks import DatabricksNotebookOperator` |
| `from astro_databricks.operators.workflow import DatabricksWorkflowTaskGroup` | `from airflow.providers.databricks.operators.databricks_workflow import DatabricksWorkflowTaskGroup` |
| `from astro_databricks.operators.common import DatabricksTaskOperator` | `from airflow.providers.databricks.operators.databricks import DatabricksTaskOperator` |
| `from astro_databricks.plugins.plugin import AstroDatabricksPlugin` | `from airflow.providers.databricks.plugins.databricks_workflow import DatabricksWorkflowPlugin` |
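For projects with many DAG files, the mapping above could be applied mechanically. The following is a minimal sketch, not an official migration tool: `IMPORT_REWRITES` and `migrate_imports` are hypothetical names, and the plugin module is written as `airflow.providers.databricks.plugins.databricks_workflow`, with `airflow.providers` appearing once. It only rewrites import lines that match the table exactly.

```python
# Deprecated import line -> suggested import line, following the table above.
IMPORT_REWRITES = {
    "from astro_databricks.operators.notebook import DatabricksNotebookOperator":
        "from airflow.providers.databricks.operators.databricks import DatabricksNotebookOperator",
    "from astro_databricks.operators.workflow import DatabricksWorkflowTaskGroup":
        "from airflow.providers.databricks.operators.databricks_workflow import DatabricksWorkflowTaskGroup",
    "from astro_databricks.operators.common import DatabricksTaskOperator":
        "from airflow.providers.databricks.operators.databricks import DatabricksTaskOperator",
    "from astro_databricks.plugins.plugin import AstroDatabricksPlugin":
        "from airflow.providers.databricks.plugins.databricks_workflow import DatabricksWorkflowPlugin",
}


def migrate_imports(source: str) -> str:
    """Return `source` with each deprecated import line replaced.

    Indentation and line endings are preserved; all other lines are
    returned unchanged.
    """
    migrated = []
    for line in source.splitlines(keepends=True):
        body = line.rstrip("\r\n")
        ending = line[len(body):]
        stripped = body.strip()
        if stripped in IMPORT_REWRITES:
            indent = body[: len(body) - len(body.lstrip())]
            migrated.append(indent + IMPORT_REWRITES[stripped] + ending)
        else:
            migrated.append(line)
    return "".join(migrated)


if __name__ == "__main__":
    old_dag = (
        "from astro_databricks.operators.notebook import DatabricksNotebookOperator\n"
        "from pendulum import datetime\n"
    )
    print(migrate_imports(old_dag))
```

This sketch does not handle combined imports such as `from astro_databricks import DatabricksNotebookOperator, DatabricksWorkflowTaskGroup`, and it does not rename later uses of `AstroDatabricksPlugin` in the file body; both would need a manual pass.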

# Archives

<h1 align="center">

Databricks Workflows in Airflow

</h1>

The Astro Databricks Provider is an Apache Airflow provider for writing [Databricks Workflows](https://docs.databricks.com/workflows/index.html) using Airflow as the authoring interface. Running your Databricks notebooks as Databricks Workflows can result in a [cost reduction of 75% or more](https://www.databricks.com/product/pricing): at the prices quoted here, $0.40/DBU for all-purpose compute versus $0.07/DBU for Jobs compute, the per-DBU saving is about 82%.

While this is maintained by Astronomer, it's available to anyone using Airflow; you don't need to be an Astronomer customer to use it.

There are a few advantages to defining your Databricks Workflows in Airflow:

|                                      |        via Databricks         |      via Airflow       |
| :----------------------------------- | :---------------------------: | :--------------------: |
| Authoring interface                  | _Web-based via Databricks UI_ | _Code via Airflow DAG_ |
| Workflow compute pricing             |              ✅               |           ✅           |
| Notebook code in source control      |              ✅               |           ✅           |
| Workflow structure in source control |              ✅               |           ✅           |
| Retry from beginning                 |              ✅               |           ✅           |
| Retry single task                    |              ✅               |           ✅           |
| Task groups within Workflows         |                               |           ✅           |
| Trigger workflows from other DAGs    |                               |           ✅           |
| Workflow-level parameters            |                               |           ✅           |

## Example

The following Airflow DAG illustrates how to use the `DatabricksWorkflowTaskGroup` and `DatabricksNotebookOperator` to define a Databricks Workflow in Airflow:

```python
from pendulum import datetime

from airflow.decorators import dag, task_group
from airflow.operators.trigger_dagrun import TriggerDagRunOperator
from astro_databricks import DatabricksNotebookOperator, DatabricksWorkflowTaskGroup

# define your cluster spec - can have from 1 to many clusters
job_cluster_spec = [
    {
        "job_cluster_key": "astro_databricks",
        "new_cluster": {
            "cluster_name": "",
            # ...
        },
    }
]


@dag(start_date=datetime(2023, 1, 1), schedule_interval="@daily", catchup=False)
def databricks_workflow_example():
    # the task group is a context manager that will create a Databricks Workflow
    with DatabricksWorkflowTaskGroup(
        group_id="example_databricks_workflow",
        databricks_conn_id="databricks_default",
        job_clusters=job_cluster_spec,
        # you can specify common fields here that get shared to all notebooks
        notebook_packages=[
            {"pypi": {"package": "pandas"}},
        ],
        # notebook_params supports templating
        notebook_params={
            "start_time": "{{ ds }}",
        },
    ) as workflow:
        notebook_1 = DatabricksNotebookOperator(
            task_id="notebook_1",
            databricks_conn_id="databricks_default",
            notebook_path="/Shared/notebook_1",
            source="WORKSPACE",
            # job_cluster_key corresponds to the job_cluster_key in the job_cluster_spec
            job_cluster_key="astro_databricks",
            # you can add to packages & params at the task level
            notebook_packages=[
                {"pypi": {"package": "scikit-learn"}},
            ],
            notebook_params={
                "end_time": "{{ macros.ds_add(ds, 1) }}",
            },
        )

        # you can embed task groups for easier dependency management
        @task_group(group_id="inner_task_group")
        def inner_task_group():
            notebook_2 = DatabricksNotebookOperator(
                task_id="notebook_2",
                databricks_conn_id="databricks_default",
                notebook_path="/Shared/notebook_2",
                source="WORKSPACE",
                job_cluster_key="astro_databricks",
            )

            notebook_3 = DatabricksNotebookOperator(
                task_id="notebook_3",
                databricks_conn_id="databricks_default",
                notebook_path="/Shared/notebook_3",
                source="WORKSPACE",
                job_cluster_key="astro_databricks",
            )

        notebook_4 = DatabricksNotebookOperator(
            task_id="notebook_4",
            databricks_conn_id="databricks_default",
            notebook_path="/Shared/notebook_4",
            source="WORKSPACE",
            job_cluster_key="astro_databricks",
        )

        notebook_1 >> inner_task_group() >> notebook_4

    trigger_workflow_2 = TriggerDagRunOperator(
        task_id="trigger_workflow_2",
        trigger_dag_id="workflow_2",
        execution_date="{{ next_execution_date }}",
    )

    workflow >> trigger_workflow_2


databricks_workflow_example_dag = databricks_workflow_example()
```
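Because each task's `job_cluster_key` has to match a `job_cluster_key` in the cluster spec, a typo in either place is easy to miss until the workflow runs. The following is a small, standalone sketch of a pre-flight check. It is not part of the provider: `undefined_cluster_keys` is a hypothetical helper, and the `astro_databricks_typo` key is made up for illustration.

```python
def undefined_cluster_keys(job_clusters, task_cluster_keys):
    """Return {task_id: key} for tasks whose key is missing from job_clusters.

    job_clusters: list of dicts shaped like job_cluster_spec in the example.
    task_cluster_keys: mapping of task_id -> job_cluster_key used by that task.
    """
    defined = {cluster["job_cluster_key"] for cluster in job_clusters}
    return {
        task_id: key
        for task_id, key in task_cluster_keys.items()
        if key not in defined
    }


# Same shape as the spec in the example above, with the cluster details omitted.
job_cluster_spec = [{"job_cluster_key": "astro_databricks", "new_cluster": {}}]

# Hypothetical task-to-key mapping; notebook_2 has a deliberate typo.
task_keys = {
    "notebook_1": "astro_databricks",
    "notebook_2": "astro_databricks_typo",
}

print(undefined_cluster_keys(job_cluster_spec, task_keys))
# prints {'notebook_2': 'astro_databricks_typo'}
```

A check like this could run in a unit test over the DAG definitions, so mismatched keys surface before deployment.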

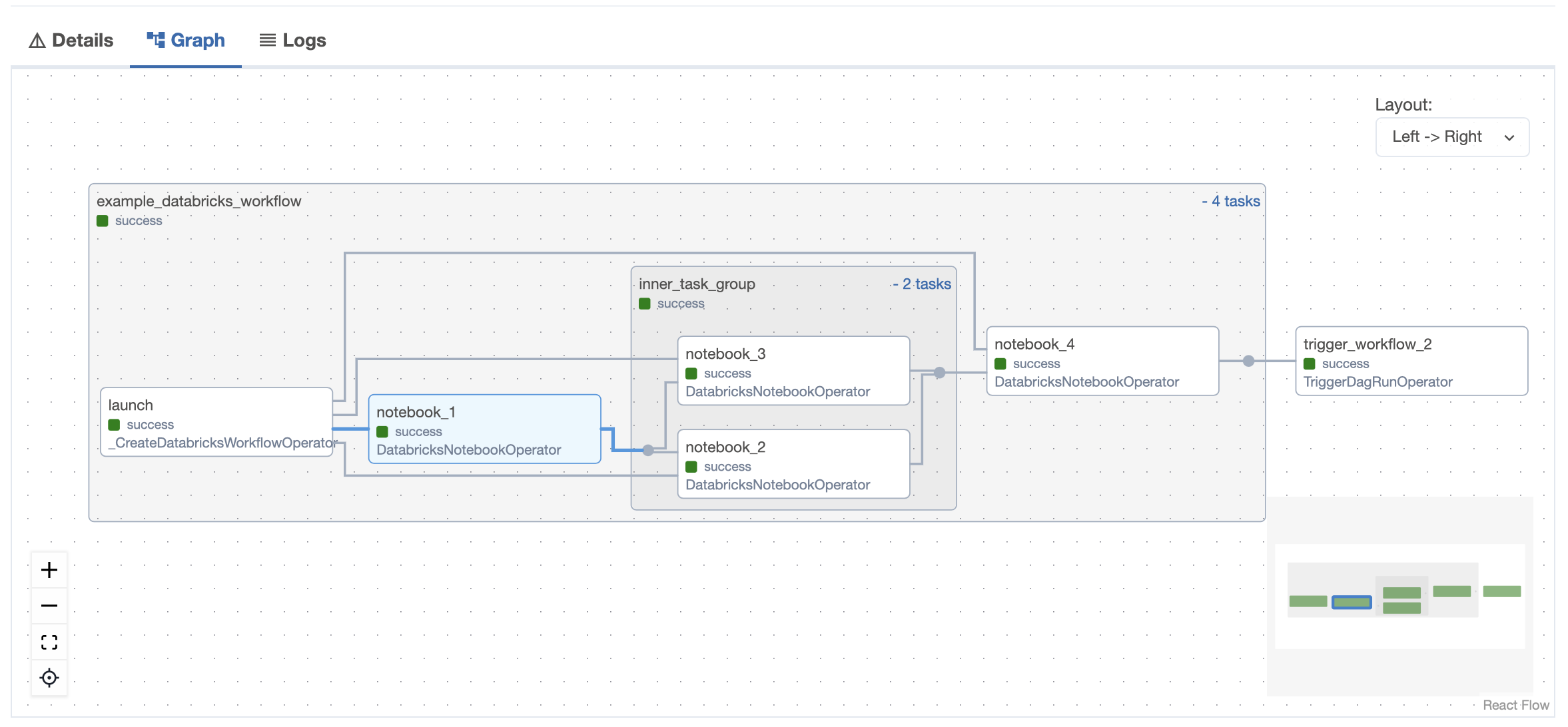

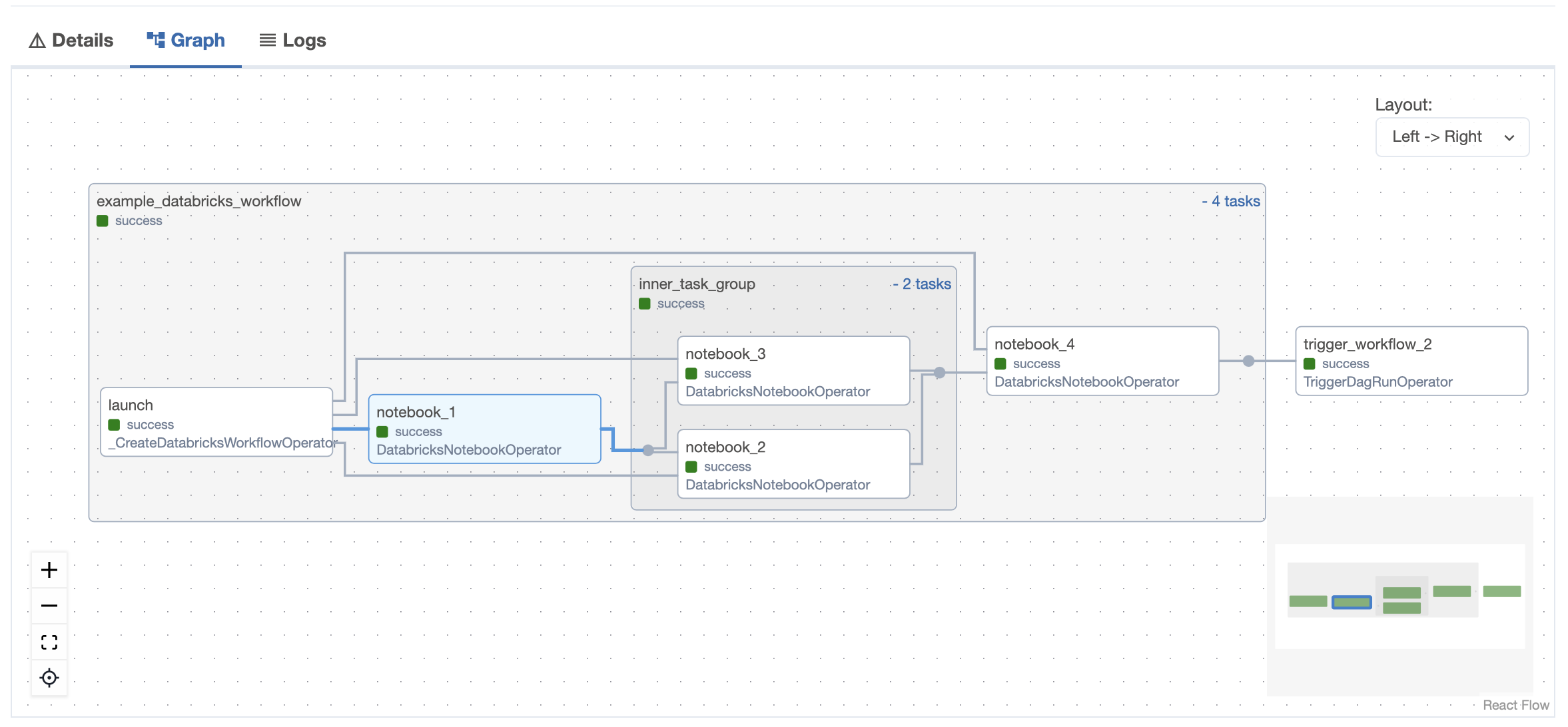

### Airflow UI

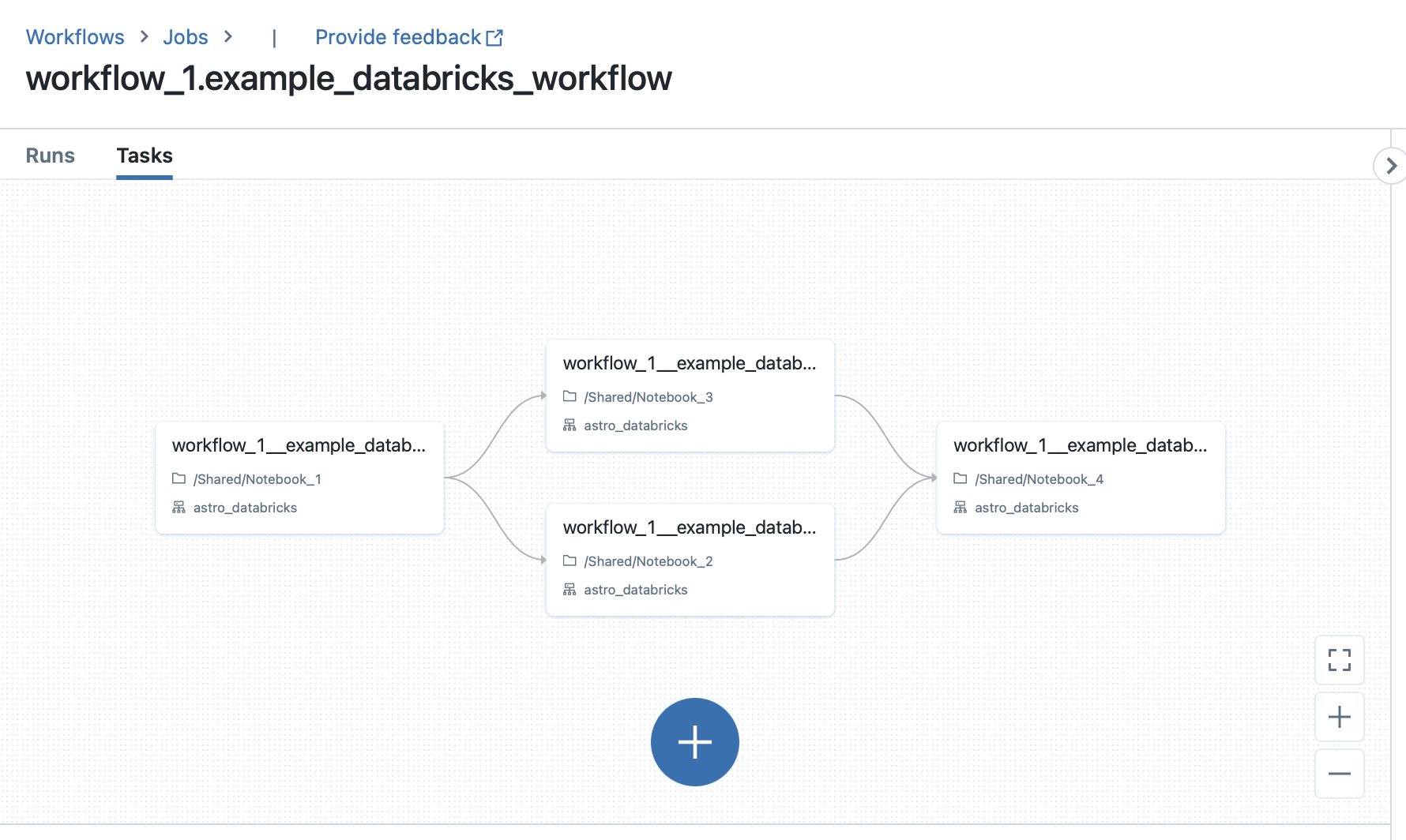

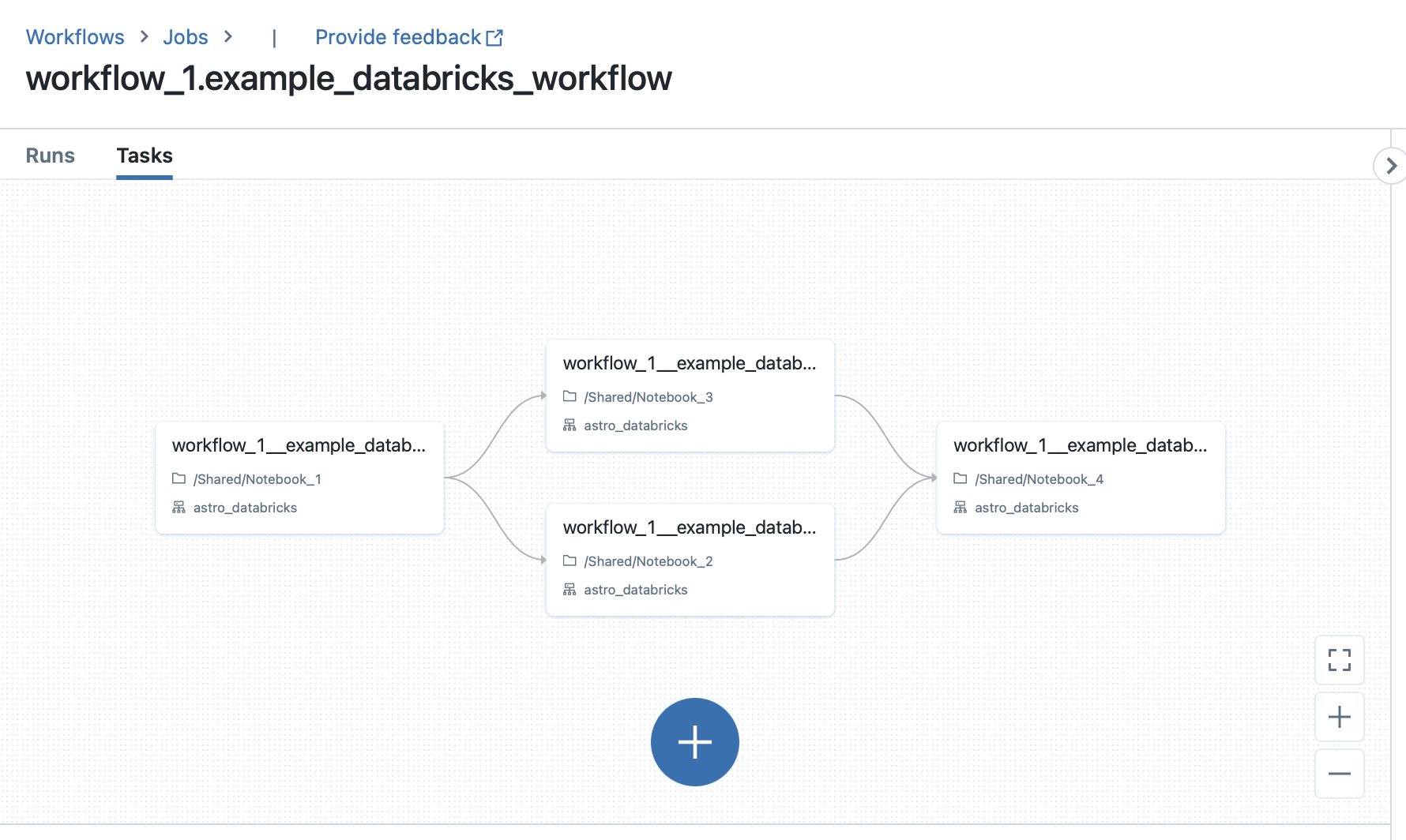

### Databricks UI

## Quickstart

Check out the following quickstart guides:

- [With the Astro CLI](quickstart/astro-cli.md)

- [Without the Astro CLI](quickstart/without-astro-cli.md)

## Documentation

The documentation is a work in progress. We aim to follow the [Diátaxis](https://diataxis.fr/) system:

- [Reference Guide](https://astronomer.github.io/astro-provider-databricks/)

## Changelog

Astro Databricks follows [semantic versioning](https://semver.org/) for releases. Read the [changelog](CHANGELOG.rst) to learn about the changes introduced in each version.

## Contribution guidelines

All contributions, bug reports, bug fixes, documentation improvements, enhancements, and ideas are welcome.

Read the [Contribution Guidelines](docs/contributing.rst) for a detailed overview of how to contribute.

Contributors and maintainers should abide by the [Contributor Code of Conduct](CODE_OF_CONDUCT.md).

## License

[Apache License 2.0](LICENSE)
