# Amazon EventBridge Pipes Construct Library

<!--BEGIN STABILITY BANNER-->

---

> The APIs of higher level constructs in this module are experimental and under active development.
> They are subject to non-backward compatible changes or removal in any future version. These are
> not subject to the [Semantic Versioning](https://semver.org/) model and breaking changes will be
> announced in the release notes. This means that while you may use them, you may need to update
> your source code when upgrading to a newer version of this package.

---

<!--END STABILITY BANNER-->

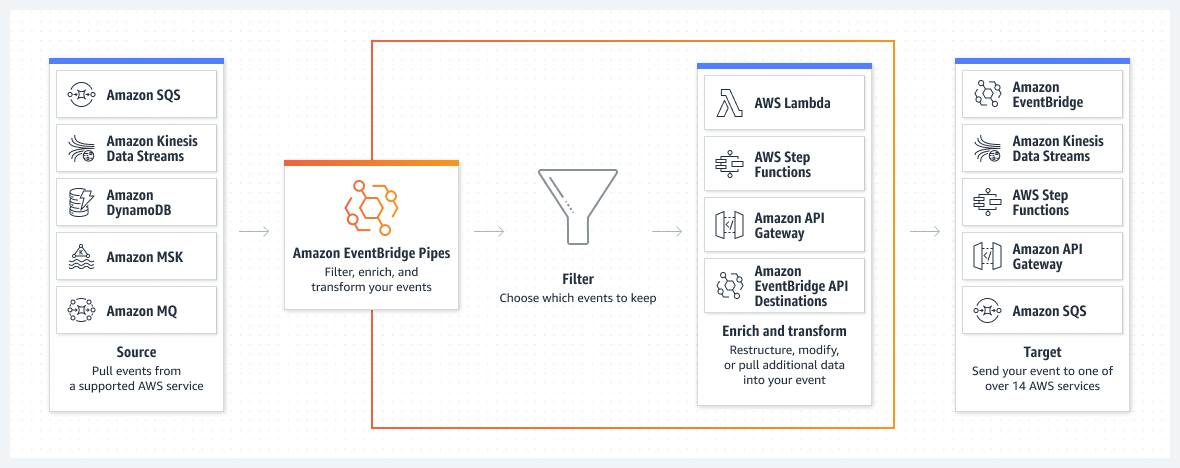

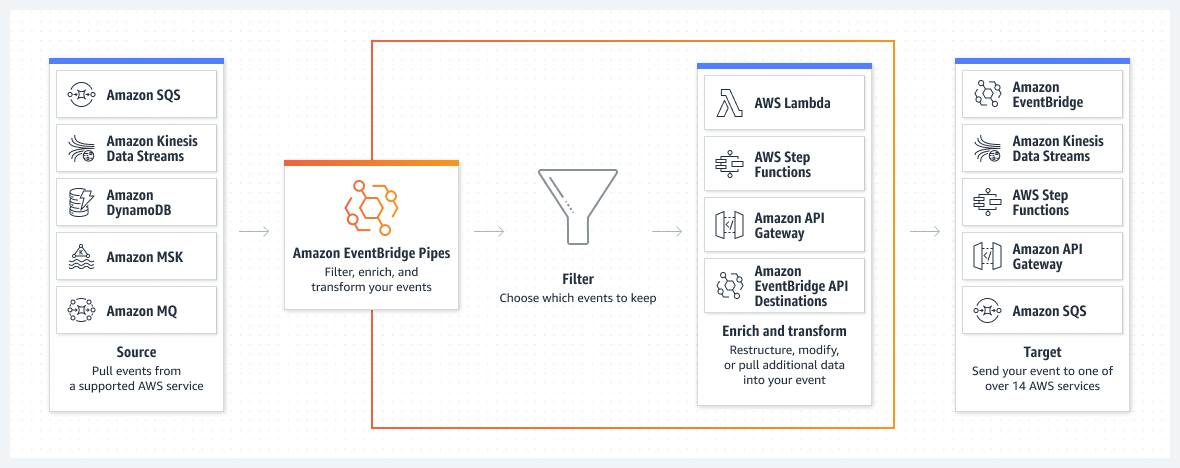

EventBridge Pipes let you create source-to-target connections between several
AWS services. While messages are transported from a source to a target, they
can be filtered, transformed, and enriched.

For more details see the [service documentation](https://docs.aws.amazon.com/eventbridge/latest/userguide/eb-pipes.html).

## Pipe

[EventBridge Pipes](https://aws.amazon.com/blogs/aws/new-create-point-to-point-integrations-between-event-producers-and-consumers-with-amazon-eventbridge-pipes/)
is a fully managed service that enables point-to-point integrations between
event producers and consumers. Pipes can be used to connect several AWS services
to each other, or to connect AWS services to external services.

A pipe has a source and a target. The source events can be filtered and enriched
before reaching the target.
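As a mental model, the order of the stages can be sketched in plain Python. This is a hypothetical local simulation, not the EventBridge Pipes runtime or an API of this library: `run_pipe`, `keep`, `enrich` and `deliver` are illustrative names, filtering is reduced to a predicate, and the optional enrichment is assumed to receive the filtered batch and return the batch that the target gets.

```python
from typing import Any, Callable, Dict, List, Optional

Event = Dict[str, Any]


def run_pipe(
    batch: List[Event],
    keep: Callable[[Event], bool],
    deliver: Callable[[List[Any]], None],
    enrich: Optional[Callable[[List[Event]], List[Any]]] = None,
) -> None:
    """Hypothetical local model of one pipe invocation: filter, then enrich, then target."""
    # 1. filter: keep only events that match (in a real pipe, any of the filter patterns)
    filtered = [event for event in batch if keep(event)]
    if not filtered:
        return  # in this sketch, nothing is forwarded when every event was filtered out
    # 2. optional enrichment: called once with the whole filtered batch
    payload = enrich(filtered) if enrich is not None else filtered
    # 3. target: receives the (possibly enriched) batch
    deliver(payload)


delivered: List[List[Any]] = []
run_pipe(
    [{"id": 1, "type": "B2B"}, {"id": 2, "type": "B2X"}],
    keep=lambda e: e["type"] in ("B2B", "B2C"),
    enrich=lambda events: [dict(e, enriched=True) for e in events],
    deliver=delivered.append,
)
print(delivered)  # [[{'id': 1, 'type': 'B2B', 'enriched': True}]]
```

Keeping each stage as a separate callable mirrors the fact that filter, enrichment and target are configured independently on a pipe.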

## Example - pipe usage

> The following code examples use an example implementation of a [source](#source) and [target](#target).

To define a pipe, create a new `Pipe` construct. The `Pipe` construct needs a source and a target.

```python
# source_queue: sqs.Queue
# target_queue: sqs.Queue


pipe = pipes.Pipe(self, "Pipe",
    source=SqsSource(source_queue),
    target=SqsTarget(target_queue)
)
```

This minimal example creates a pipe with an SQS queue as the source and an SQS queue as the target.
Messages from the source are put into the body of the target message.

## Source

A source is an AWS service that the pipe polls. The following sources are possible:

* [Amazon DynamoDB stream](https://docs.aws.amazon.com/eventbridge/latest/userguide/eb-pipes-dynamodb.html)
* [Amazon Kinesis stream](https://docs.aws.amazon.com/eventbridge/latest/userguide/eb-pipes-kinesis.html)
* [Amazon MQ broker](https://docs.aws.amazon.com/eventbridge/latest/userguide/eb-pipes-mq.html)
* [Amazon MSK stream](https://docs.aws.amazon.com/eventbridge/latest/userguide/eb-pipes-msk.html)
* [Amazon SQS queue](https://docs.aws.amazon.com/eventbridge/latest/userguide/eb-pipes-sqs.html)
* [Apache Kafka stream](https://docs.aws.amazon.com/eventbridge/latest/userguide/eb-pipes-kafka.html)

Currently, DynamoDB, Kinesis, and SQS are supported. If you are interested in support for additional sources,
kindly let us know by opening a GitHub issue or raising a PR.

### Example source

```python
# source_queue: sqs.Queue

pipe_source = SqsSource(source_queue)
```

## Filter

A filter can be used to filter the events from the source before they are
forwarded to the enrichment step or, if no enrichment is present, to the target step. Multiple filter expressions are possible.
If one of the filter expressions matches, the event is forwarded to the enrichment or target step.

### Example - filter usage

```python
# source_queue: sqs.Queue
# target_queue: sqs.Queue


source_filter = pipes.Filter([
    pipes.FilterPattern.from_object({
        "body": {
            # only forward events with customerType B2B or B2C
            # (pattern keys must match the event's field names exactly)
            "customerType": ["B2B", "B2C"]
        }
    })
])

pipe = pipes.Pipe(self, "Pipe",
    source=SqsSource(source_queue),
    target=SqsTarget(target_queue),
    filter=source_filter
)
```

This example shows a filter that only forwards events whose `customerType` is B2B or B2C. Messages that do not match the filter are not forwarded to the enrichment or target step.

You can define multiple filter patterns, which are combined with a logical `OR`.

More filter patterns and details can be found in the EventBridge Pipes [docs](https://docs.aws.amazon.com/eventbridge/latest/userguide/eb-pipes-event-filtering.html).
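To illustrate how patterns combine, the following sketch evaluates simple patterns locally. It is an approximation for illustration only, not the EventBridge matching engine: it supports only nested objects and lists of exact values (no prefix, numeric, or `exists` matching, and no array-valued fields), and `parse_body`, `matches` and `passes` are hypothetical helpers. Within one pattern all keys must match; across patterns a single match is enough. `parse_body` mimics the implicit parsing of a JSON message body.

```python
import json
from typing import Any, Dict, List


def parse_body(event: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical helper: turn a JSON string in "body" into an object."""
    try:
        return {**event, "body": json.loads(event["body"])}
    except (KeyError, TypeError, ValueError):
        return event  # body missing or not valid JSON: leave the event untouched


def matches(pattern: Dict[str, Any], data: Any) -> bool:
    """All keys of a single pattern must match (logical AND)."""
    if not isinstance(data, dict):
        return False
    for key, expected in pattern.items():
        if key not in data:
            return False
        if isinstance(expected, dict):
            # nested pattern: descend into the nested object
            if not matches(expected, data[key]):
                return False
        elif data[key] not in expected:
            # leaf: a list of allowed exact values
            return False
    return True


def passes(patterns: List[Dict[str, Any]], event: Dict[str, Any]) -> bool:
    """Multiple patterns are combined with a logical OR."""
    parsed = parse_body(event)
    return any(matches(pattern, parsed) for pattern in patterns)


patterns = [{"body": {"customerType": ["B2B", "B2C"]}}]
print(passes(patterns, {"body": json.dumps({"customerType": "B2C"})}))  # True
print(passes(patterns, {"body": json.dumps({"customerType": "B2X"})}))  # False
```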

## Input transformation

For enrichments and targets, the input event can be transformed. The transformation is applied to each item of the batch.
A transformation has access to the input event as well as to context information about the pipe itself, such as the pipe name.
See [docs](https://docs.aws.amazon.com/eventbridge/latest/userguide/eb-pipes-input-transformation.html) for details.

### Example - input transformation from object

The input transformation can be created from an object. The object can contain static values, dynamic values or pipe variables.

```python
# source_queue: sqs.Queue
# target_queue: sqs.Queue


# keys are emitted verbatim, so use the field names the target should receive
target_input_transformation = pipes.InputTransformation.from_object({
    "staticField": "static value",
    "dynamicField": pipes.DynamicInput.from_event_path("$.body.payload"),
    "pipeVariable": pipes.DynamicInput.pipe_name
})

pipe = pipes.Pipe(self, "Pipe",
    pipe_name="MyPipe",
    source=SqsSource(source_queue),
    target=SqsTarget(target_queue,
        input_transformation=target_input_transformation
    )
)
```

This example shows a transformation that adds a static field, a dynamic field and a pipe variable to the input event. The dynamic field is extracted from the input event. The pipe variable is extracted from the pipe context.

So when the following batch of input events is processed by the pipe

```json
[
  {
    ...
    "body": "{\"payload\": \"Test message.\"}",
    ...
  }
]
```

it is converted into the following payload:

```json
[
  {
    ...
    "staticField": "static value",
    "dynamicField": "Test message.",
    "pipeVariable": "MyPipe",
    ...
  }
]
```

When the transformation is applied to a target, the result might be converted to a string representation. For example, the resulting SQS message body looks like this:

```json
[
  {
    ...
    "body": "{\"staticField\": \"static value\", \"dynamicField\": \"Test message.\", \"pipeVariable\": \"MyPipe\"}",
    ...
  }
]
```
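To make the placeholder mechanics concrete, here is a local sketch that mimics what the object-based transformation does to each item of a batch. It is an approximation, not the library or service implementation: `resolve_path` and `transform_item` are hypothetical names, only simple dotted paths such as `$.body.payload` are resolved, and `<aws.pipes.pipe-name>` is assumed to be the placeholder for the pipe name, following the reserved-variable syntax of the EventBridge input transformation docs.

```python
import json
from typing import Any, Dict


def resolve_path(event: Dict[str, Any], path: str) -> Any:
    """Resolve a simple dotted JSON path such as "$.body.payload"."""
    value: Any = event
    for part in path[2:].split("."):  # strip the leading "$."
        if isinstance(value, str):
            value = json.loads(value)  # implicit parsing of a JSON string, e.g. an SQS body
        value = value[part]
    return value


def transform_item(template: Dict[str, Any], event: Dict[str, Any], context: Dict[str, str]) -> Dict[str, Any]:
    """Render one object template for one event of the batch."""
    result = {}
    for key, value in template.items():
        if isinstance(value, str) and value.startswith("<$.") and value.endswith(">"):
            result[key] = resolve_path(event, value[1:-1])  # dynamic value from the event
        elif isinstance(value, str) and value.startswith("<aws.pipes.") and value.endswith(">"):
            result[key] = context[value[1:-1]]  # pipe variable from the pipe context
        else:
            result[key] = value  # static value, copied as-is
    return result


template = {
    "staticField": "static value",
    "dynamicField": "<$.body.payload>",
    "pipeVariable": "<aws.pipes.pipe-name>",
}
batch = [{"body": json.dumps({"payload": "Test message."})}]
context = {"aws.pipes.pipe-name": "MyPipe"}

# the transformation is applied to each item of the batch
transformed = [transform_item(template, event, context) for event in batch]
# an SQS target receives each item serialized as a string in the message body
bodies = [json.dumps(item) for item in transformed]
print(bodies[0])
```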

### Example - input transformation from event path

In cases where you want to forward only part of the event to the target, you can use a transformation from an event path.

> This only works for targets, because the enrichment needs valid JSON as input.

```python
# source_queue: sqs.Queue
# target_queue: sqs.Queue


target_input_transformation = pipes.InputTransformation.from_event_path("$.body.payload")

pipe = pipes.Pipe(self, "Pipe",
    source=SqsSource(source_queue),
    target=SqsTarget(target_queue,
        input_transformation=target_input_transformation
    )
)
```

This transformation extracts the `payload` field from the body of the event.

So when the following batch of input events is processed by the pipe

```json
[
  {
    ...
    "body": "{\"payload\": \"Test message.\"}",
    ...
  }
]
```

it is converted into the following target payload:

```json
[
  {
    ...
    "body": "Test message.",
    ...
  }
]
```

> The [implicit payload parsing](https://docs.aws.amazon.com/eventbridge/latest/userguide/eb-pipes-input-transformation.html#input-transform-implicit) (e.g. SQS message body to JSON) only works if the input is the source payload. Implicit body parsing is not applied on enrichment results.
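The difference between a source payload and an enrichment result can be sketched with a small path resolver. The `extract` helper below is hypothetical and handles only dotted paths; its `parse_strings` flag is a stand-in for whether implicit parsing applies (source payload) or not (enrichment result), as stated in the note above.

```python
import json
from typing import Any, Dict


def extract(event: Dict[str, Any], path: str, parse_strings: bool = True) -> Any:
    """Hypothetical helper: follow a dotted path such as "$.body.payload".

    parse_strings=True mimics implicit parsing of a source payload (e.g. an SQS body);
    parse_strings=False mimics an enrichment result, where no implicit parsing happens.
    """
    value: Any = event
    for part in path[2:].split("."):  # strip the leading "$."
        if parse_strings and isinstance(value, str):
            value = json.loads(value)
        if not isinstance(value, dict) or part not in value:
            raise ValueError(f"{path} does not resolve at {part}")
        value = value[part]
    return value


source_event = {"messageId": "1", "body": json.dumps({"payload": "Test message."})}
print(extract(source_event, "$.body.payload"))  # Test message.

try:
    extract(source_event, "$.body.payload", parse_strings=False)
    enrichment_style_ok = True
except ValueError:
    # without implicit parsing the body is an opaque string, so the path cannot be followed
    enrichment_style_ok = False
```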

### Example - input transformation from text

In cases where you want to forward static text to the target, or use your own formatted `inputTemplate`, you can use the transformation from text.

```python
# source_queue: sqs.Queue
# target_queue: sqs.Queue


target_input_transformation = pipes.InputTransformation.from_text("My static text")

pipe = pipes.Pipe(self, "Pipe",
    source=SqsSource(source_queue),
    target=SqsTarget(target_queue,
        input_transformation=target_input_transformation
    )
)
```

This transformation forwards the static text to the target.

```json
[
  {
    ...
    "body": "My static text",
    ...
  }
]
```

## Enrichment

In the enrichment step, the (un)filtered payloads from the source can be used to invoke one of the following services:

* API destination
* Amazon API Gateway
* Lambda function
* Step Functions state machine
  * only Express workflows

### Example enrichment implementation

> Currently, no implementation exists for any of the supported enrichments. The following example shows what an implementation might look like. The actual implementation is not part of this package and will live in a separate one.

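```python
import jsii
import aws_cdk as cdk
import aws_cdk.aws_pipes_alpha as pipes


@jsii.implements(pipes.IEnrichment)
class LambdaEnrichment:

    def __init__(self, lambda_, props=None):
        # keep the function so grant_invoke can use it later
        self.lambda_ = lambda_
        self.enrichment_arn = lambda_.function_arn
        # props is a plain dict, e.g. {"input_transformation": ...}
        self.input_transformation = (props or {}).get("input_transformation")

    def bind(self, pipe):
        # render the optional input transformation into an input template
        input_template = None
        if self.input_transformation is not None:
            input_template = self.input_transformation.bind(pipe).input_template
        return pipes.EnrichmentParametersConfig(
            enrichment_parameters=cdk.aws_pipes.CfnPipe.PipeEnrichmentParametersProperty(
                input_template=input_template
            )
        )

    def grant_invoke(self, pipe_role):
        # allow the pipe's execution role to invoke the function
        self.lambda_.grant_invoke(pipe_role)
```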

An enrichment implementation needs to provide the `enrichmentArn`, return the `enrichmentParameters` from `bind`, and grant the pipe role permission to invoke the enrichment.

### Example - enrichment usage

```python
# source_queue: sqs.Queue
# target_queue: sqs.Queue
# enrichment_lambda: lambda.Function


enrichment_input_transformation = pipes.InputTransformation.from_object({
    "staticField": "static value",
    "dynamicField": pipes.DynamicInput.from_event_path("$.body.payload"),
    "pipeVariable": pipes.DynamicInput.pipe_name
})

pipe = pipes.Pipe(self, "Pipe",
    source=SqsSource(source_queue),
    target=SqsTarget(target_queue),
    enrichment=LambdaEnrichment(enrichment_lambda, {
        "input_transformation": enrichment_input_transformation
    })
)
```

This example adds a Lambda function as an enrichment to the pipe. The Lambda function is invoked with the batch of messages from the source after the transformation is applied.

So, when the following batch of input events is processed by the pipe,

```json
[
  {
    ...
    "body": "{\"payload\": \"Test message.\"}",
    ...
  }
]
```

it is converted into the following payload, which is sent to the Lambda function:

```json
[
  {
    ...
    "staticField": "static value",
    "dynamicField": "Test message.",
    "pipeVariable": "MyPipe",
    ...
  }
]
```

The Lambda function can return a result, which is forwarded to the target.
For example, a Lambda function that returns a concatenation of the static field, dynamic field, and pipe variable

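```python
def handler(event, context):
    # the pipe passes the whole (transformed) batch as a list of dicts
    return [
        item["staticField"] + "-" + item["dynamicField"] + "-" + item["pipeVariable"]
        for item in event
    ]
```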

will produce the following target message in the target SQS queue.

```json
[
  {
    ...
    "body": "static value-Test message.-MyPipe",
    ...
  }
]
```
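Before deploying, the enrichment logic can be exercised locally with the transformed batch shown earlier. This is a plain-Python sketch: the handler is redefined here so the block is self-contained, it is called directly with `None` as the context (the handler does not use it), and it assumes that each element of the returned list is delivered as one target message, which matches the single message shown above.

```python
from typing import Any, Dict, List


def handler(event: List[Dict[str, Any]], context: Any) -> List[str]:
    # the pipe invokes the enrichment with the whole (transformed) batch
    return [
        item["staticField"] + "-" + item["dynamicField"] + "-" + item["pipeVariable"]
        for item in event
    ]


transformed_batch = [
    {"staticField": "static value", "dynamicField": "Test message.", "pipeVariable": "MyPipe"}
]
target_bodies = handler(transformed_batch, None)
print(target_bodies)  # ['static value-Test message.-MyPipe']
```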

## Target

A target is the end of the pipe. After the payload from the source is pulled,
filtered, and enriched, it is forwarded to the target. For now, the following
targets are supported:

* [API destination](https://docs.aws.amazon.com/eventbridge/latest/userguide/eb-api-destinations.html)
* [API Gateway](https://docs.aws.amazon.com/eventbridge/latest/userguide/eb-api-gateway-target.html)
* [Batch job queue](https://docs.aws.amazon.com/eventbridge/latest/userguide/eb-pipes-event-target.html#pipes-targets-specifics-batch)
* [CloudWatch log group](https://docs.aws.amazon.com/eventbridge/latest/userguide/eb-pipes-event-target.html#pipes-targets-specifics-cwl)
* [ECS task](https://docs.aws.amazon.com/eventbridge/latest/userguide/eb-pipes-event-target.html#pipes-targets-specifics-ecs-task)
* Event bus in the same account and Region
* Firehose delivery stream
* Inspector assessment template
* Kinesis stream
* Lambda function (SYNC or ASYNC)
* Redshift cluster data API queries
* SageMaker Pipeline
* SNS topic
* SQS queue
* Step Functions state machine
  * Express workflows (ASYNC)
  * Standard workflows (SYNC or ASYNC)

The target event can be transformed before it is forwarded to the target, using
the same input transformation as in the enrichment step.

### Example target

```python
# target_queue: sqs.Queue

pipe_target = SqsTarget(target_queue)
```

## Log destination

A pipe can produce log events that are forwarded to different log destinations.
You can configure multiple destinations, but all destinations share the same log level and log data.
For details, see the official [documentation](https://docs.aws.amazon.com/eventbridge/latest/userguide/eb-pipes-logs.html).

The log level and the data included in the log events are configured on the pipe itself.
The actual destination is defined independently, and there are three options:

1. `CloudwatchLogsLogDestination`

2. `FirehoseLogDestination`

3. `S3LogDestination`

### Example log destination usage

```python
# source_queue: sqs.Queue
# target_queue: sqs.Queue
# log_group: logs.LogGroup


cwl_log_destination = pipes.CloudwatchLogsLogDestination(log_group)

pipe = pipes.Pipe(self, "Pipe",
    source=SqsSource(source_queue),
    target=SqsTarget(target_queue),
    log_level=pipes.LogLevel.TRACE,
    log_include_execution_data=[pipes.IncludeExecutionData.ALL],
    log_destinations=[cwl_log_destination]
)
```

This example uses a CloudWatch Logs log group to store the logs emitted during a pipe execution.
The log level is set to `TRACE`, so all steps of the pipe are logged.
Additionally, all execution data is logged.
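The log level acts as a threshold shared by all destinations. The sketch below models it as an ordered scale, which is an assumption for illustration: `OFF` disables logging, `ERROR` keeps only error records, `INFO` adds selected informational records, and `TRACE` keeps everything. The local `LogLevel` enum, the record levels and the `fan_out` helper are hypothetical and are not this library's `pipes.LogLevel`; the point is that every configured destination receives the same filtered records, because the level is set once on the pipe.

```python
from enum import IntEnum
from typing import Dict, List, Tuple


class LogLevel(IntEnum):
    # hypothetical local enum, ordered so that a higher value means more verbose logging
    OFF = 0
    ERROR = 1
    INFO = 2
    TRACE = 3


def should_log(record_level: LogLevel, pipe_level: LogLevel) -> bool:
    """Emit a record if logging is on and the record is not more verbose than the pipe level."""
    return pipe_level != LogLevel.OFF and record_level <= pipe_level


def fan_out(records: List[Tuple[LogLevel, str]], pipe_level: LogLevel, destinations: List[str]) -> Dict[str, List[str]]:
    """Every destination receives the same records, filtered by the single pipe-wide level."""
    kept = [message for level, message in records if should_log(level, pipe_level)]
    return {destination: list(kept) for destination in destinations}


records = [(LogLevel.ERROR, "target invocation failed"), (LogLevel.TRACE, "enrichment step started")]
print(fan_out(records, LogLevel.INFO, ["cloudwatch", "s3"]))
# {'cloudwatch': ['target invocation failed'], 's3': ['target invocation failed']}
```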

## Encrypt pipe data with KMS

You can specify that EventBridge use a customer managed key to encrypt pipe data stored at rest,
rather than an AWS owned key, which is the default.
Details can be found in the [documentation](https://docs.aws.amazon.com/eventbridge/latest/userguide/eb-encryption-pipes-cmkey.html).

To do this, specify the key in the `kmsKey` property of the pipe.

```python
# source_queue: sqs.Queue
# target_queue: sqs.Queue
# kms_key: kms.Key


pipe = pipes.Pipe(self, "Pipe",
    source=SqsSource(source_queue),
    target=SqsTarget(target_queue),
    kms_key=kms_key,
    # pipeName is required when using a KMS key
    pipe_name="MyPipe"
)
```
