| Field | Value |
| --- | --- |
| Name | behavex |
| Version | 4.4.1 |
| home_page | https://github.com/hrcorval/behavex |
| Summary | Agile testing framework on top of Behave (BDD). |
| upload_time | 2025-07-31 22:06:48 |
| maintainer | None |
| docs_url | None |
| author | Hernan Rey |
| requires_python | >=3.5 |
| license | MIT |
| requirements | No requirements were recorded. |
| Travis-CI | No Travis. |
| coveralls test coverage | No coveralls. |


# BehaveX Documentation

## ✨ Latest Features

The most important features delivered in recent BehaveX releases:

🎯 **Test Execution Ordering** *(v4.4.1)* - Control the sequence of scenario and feature execution during parallel runs using order tags (e.g., `@ORDER_001`, `@ORDER_010`). Now includes strict ordering mode (`--order-tests-strict`) for scenarios that must wait for lower-order tests to complete.

📊 **Allure Reports Integration** *(v4.2.1)* - Generate beautiful, comprehensive test reports with Allure framework integration.

📈 **Console Progress Bar** *(v3.2.13)* - Real-time progress tracking during parallel test execution.

## Table of Contents

- [Introduction](#introduction)

- [Features](#features)

- [Installation Instructions](#installation-instructions)

- [Execution Instructions](#execution-instructions)

- [Constraints](#constraints)

- [Supported Behave Arguments](#supported-behave-arguments)

- [Specific Arguments from BehaveX](#specific-arguments-from-behavex)

- [Parallel Test Executions](#parallel-test-executions)

- [Test Execution Ordering](#test-execution-ordering)

- [Test Execution Reports](#test-execution-reports)

- [Attaching Images to the HTML Report](#attaching-images-to-the-html-report)

- [Attaching Additional Execution Evidence to the HTML Report](#attaching-additional-execution-evidence-to-the-html-report)

- [Test Logs per Scenario](#test-logs-per-scenario)

- [Metrics and Insights](#metrics-and-insights)

- [Dry Runs](#dry-runs)

- [Muting Test Scenarios](#muting-test-scenarios)

- [Handling Failing Scenarios](#handling-failing-scenarios)

- [Displaying Progress Bar in Console](#displaying-progress-bar-in-console)

- [Allure Reports Integration](#allure-reports-integration)

- [Show Your Support](#show-your-support)

## Introduction

**BehaveX** is a BDD testing solution built on top of the Python Behave library, orchestrating parallel test sessions to enhance your testing workflow with additional features and performance improvements. It's particularly beneficial in the following scenarios:

- **Accelerating test execution**: Significantly reduce test run times through parallel execution by feature or scenario.

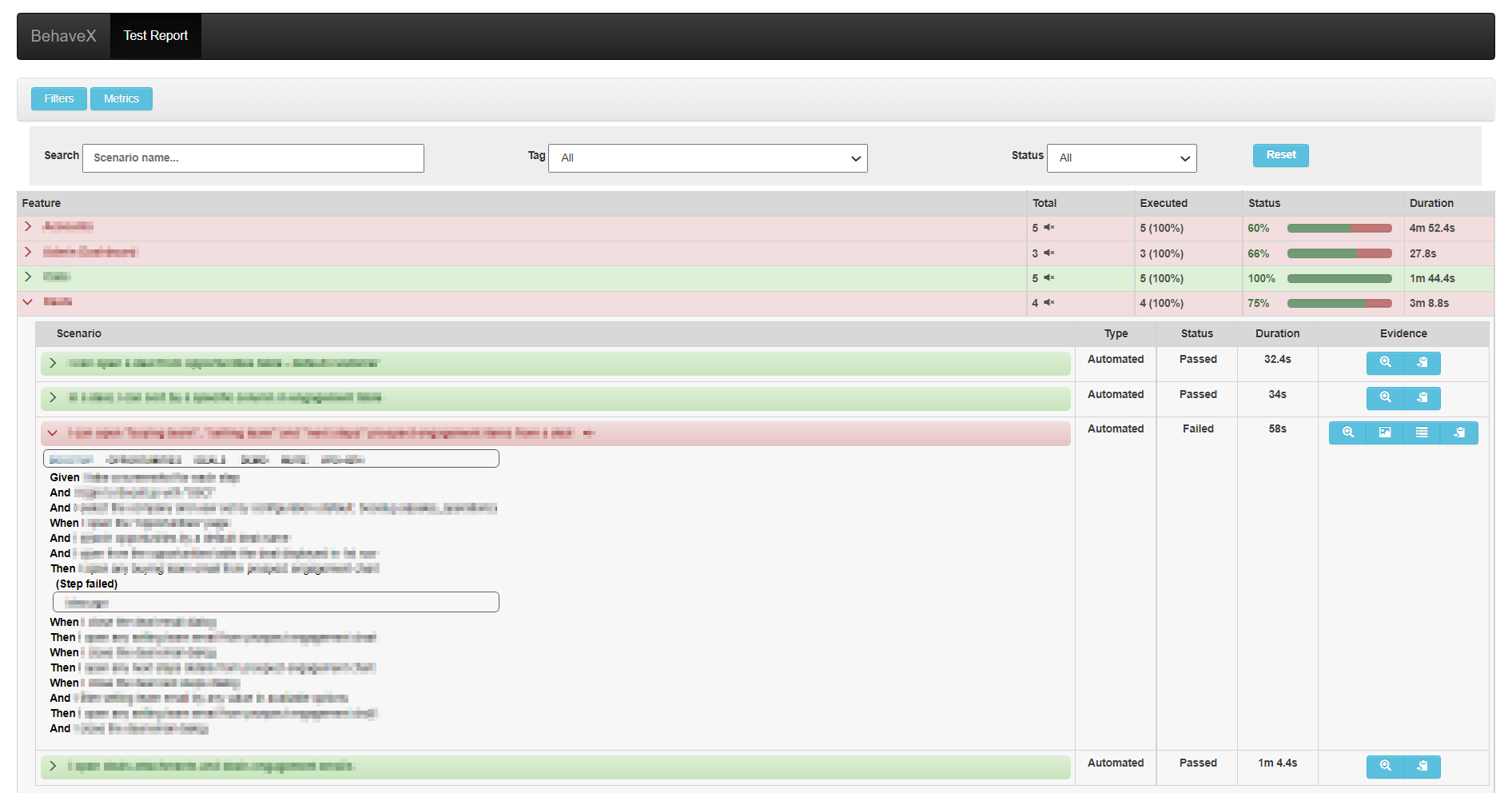

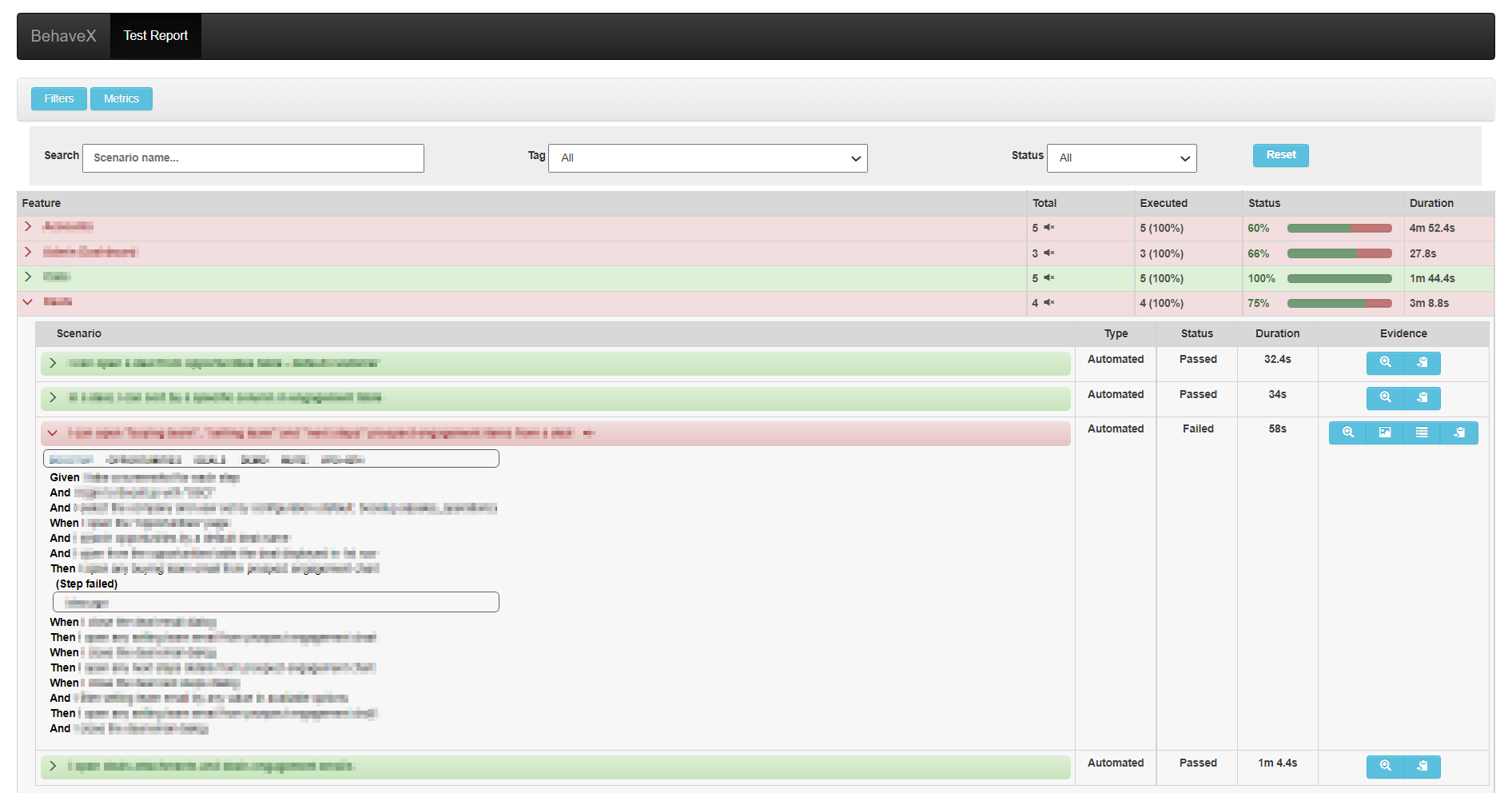

- **Enhancing test reporting**: Generate comprehensive and visually appealing HTML and JSON reports for in-depth analysis and integration with other tools.

- **Improving test visibility**: Provide detailed evidence, such as screenshots and logs, essential for understanding test failures and successes.

- **Optimizing test automation**: Utilize features like test retries, test muting, and performance metrics for efficient test maintenance and analysis.

- **Managing complex test suites**: Handle large test suites with advanced features for organization, execution, and comprehensive reporting through multiple formats and custom formatters.

## Features

BehaveX provides the following features:

- **Parallel Test Executions**: Execute tests using multiple processes, either by feature or by scenario.

- **Enhanced Reporting**: Generate comprehensive reports in multiple formats (HTML, JSON, JUnit) and utilize custom formatters like Allure for advanced reporting capabilities that can be exported and integrated with third-party tools.

- **Evidence Collection**: Include images/screenshots and additional evidence in the HTML report.

- **Test Logs**: Automatically compile logs generated during test execution into individual log reports for each scenario.

- **Test Muting**: Add the `@MUTE` tag to test scenarios to execute them without including them in JUnit reports.

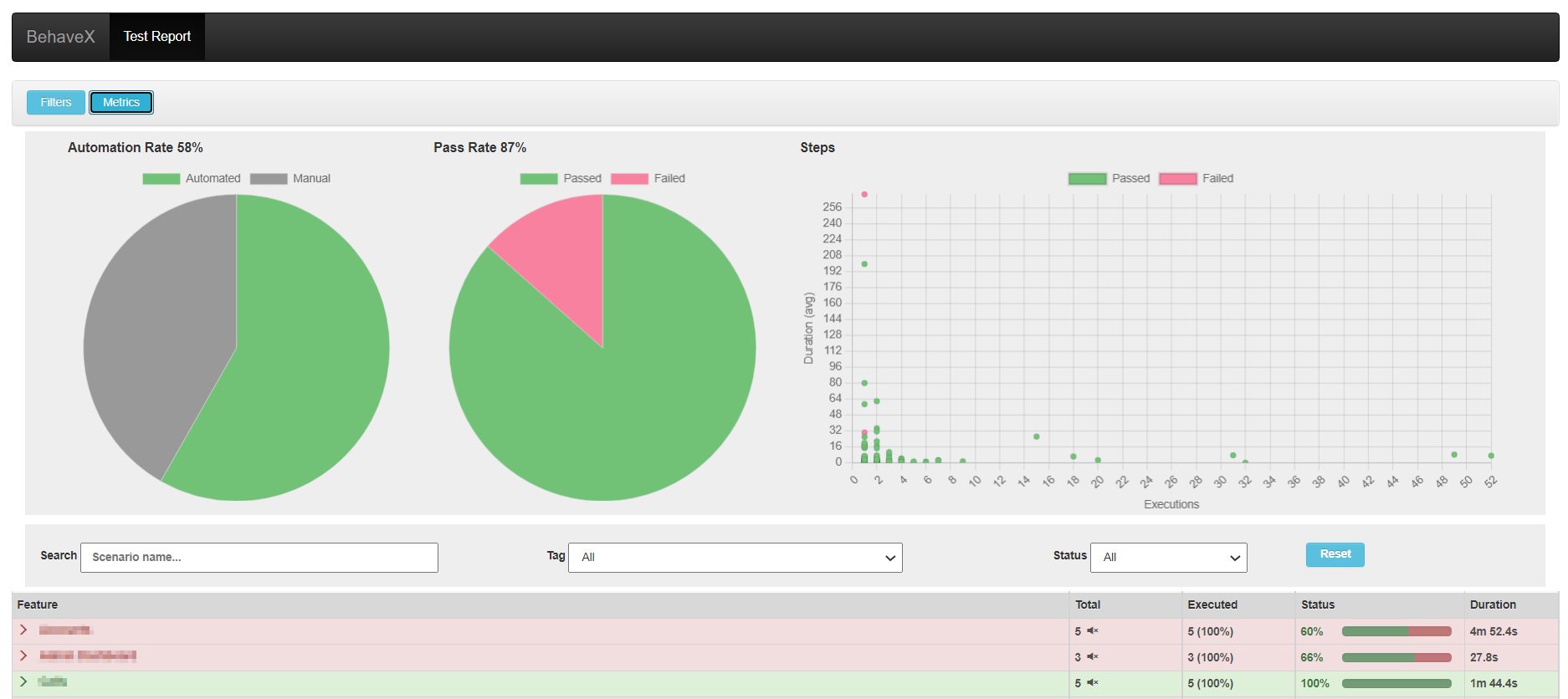

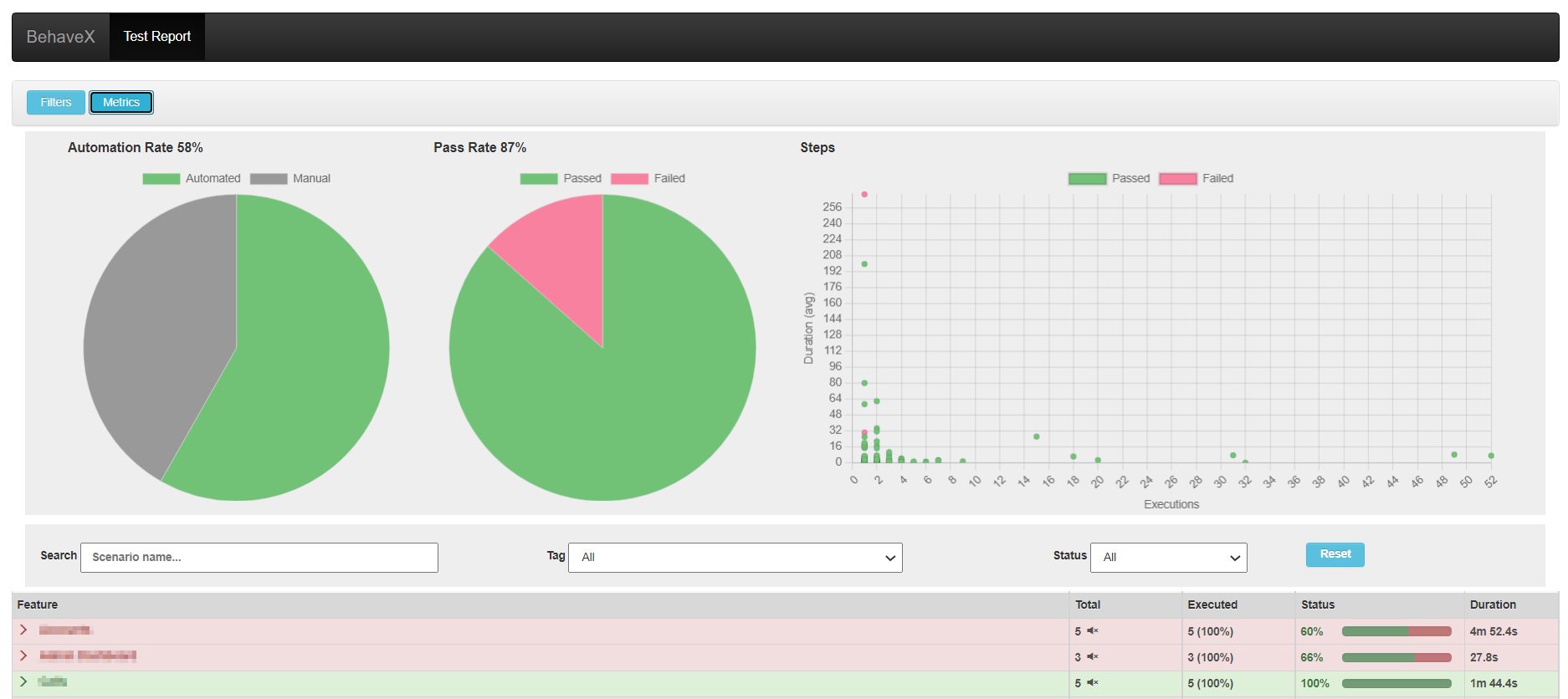

- **Execution Metrics**: Generate metrics in the HTML report for the executed test suite, including Automation Rate, Pass Rate, Steps execution counter and average execution time.

- **Dry Runs**: Perform dry runs to see the full list of scenarios in the HTML report without executing the tests. It overrides the `-d` Behave argument.

- **Auto-Retry for Failing Scenarios**: Use the `@AUTORETRY` tag to automatically re-execute failing scenarios. Also, you can re-run all failing scenarios using the **failing_scenarios.txt** file.

## Installation Instructions

To install BehaveX, execute the following command:

```bash

pip install behavex

```

## Execution Instructions

Execute BehaveX in the same way as Behave from the command line, using the `behavex` command. Here are some examples:

- **Run scenarios tagged as `TAG_1` but not `TAG_2`:**

```bash

behavex -t=@TAG_1 -t=~@TAG_2

```

- **Run scenarios tagged as `TAG_1` or `TAG_2`:**

```bash

behavex -t=@TAG_1,@TAG_2

```

- **Run scenarios tagged as `TAG_1` using 4 parallel processes:**

```bash

behavex -t=@TAG_1 --parallel-processes=4 --parallel-scheme=scenario

```

- **Run scenarios located at specific folders using 2 parallel processes:**

```bash

behavex features/features_folder_1 features/features_folder_2 --parallel-processes=2

```

- **Run scenarios from a specific feature file using 2 parallel processes:**

```bash

behavex features_folder_1/sample_feature.feature --parallel-processes=2

```

- **Run scenarios tagged as `TAG_1` from a specific feature file using 2 parallel processes:**

```bash

behavex features_folder_1/sample_feature.feature -t=@TAG_1 --parallel-processes=2

```

- **Run scenarios located at specific folders using 2 parallel processes:**

```bash

behavex features/feature_1 features/feature_2 --parallel-processes=2

```

- **Run scenarios tagged as `TAG_1`, using 5 parallel processes executing a feature on each process:**

```bash

behavex -t=@TAG_1 --parallel-processes=5 --parallel-scheme=feature

```

- **Perform a dry run of the scenarios tagged as `TAG_1`, and generate the HTML report:**

```bash

behavex -t=@TAG_1 --dry-run

```

- **Run scenarios tagged as `TAG_1`, generating the execution evidence into a specific folder:**

```bash

behavex -t=@TAG_1 -o=execution_evidence

```

- **Run scenarios with execution ordering enabled (requires parallel execution):**

```bash

behavex -t=@TAG_1 --order-tests --parallel-processes=2

```

- **Run scenarios with strict execution ordering (tests wait for lower order tests to complete):**

```bash

behavex -t=@TAG_1 --order-tests-strict --parallel-processes=2

```

- **Run scenarios with custom order tag prefix and parallel execution:**

```bash

behavex --order-tests --order-tag-prefix=PRIORITY --parallel-processes=3

```

## Constraints

- BehaveX is currently implemented on top of Behave **v1.2.6**, and not all Behave arguments are yet supported.

- Parallel execution is implemented using concurrent Behave processes. This means that any hooks defined in the `environment.py` module will run in each parallel process. This includes the **before_all** and **after_all** hooks, which will execute in every parallel process. The same is true for the **before_feature** and **after_feature** hooks when parallel execution is organized by scenario.
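Because `before_all` runs in every parallel process, setup that must happen only once per run needs its own guard. The following is a minimal sketch of one possible guard, not something BehaveX provides: the helper name `run_once`, the marker path, and the `create_shared_test_data` function in the usage comment are all hypothetical.

```python
import os


def run_once(marker_path, setup):
    """Run setup() only in the first process that manages to create marker_path."""
    try:
        # O_CREAT | O_EXCL makes file creation atomic: exactly one process succeeds.
        fd = os.open(marker_path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        # Another process already claimed the setup.
        return False
    os.close(fd)
    setup()
    return True


# Hypothetical usage in environment.py:
# def before_all(context):
#     run_once("/tmp/suite_setup.lock", create_shared_test_data)
```

Note that this sketch only prevents duplicate setup; the other processes do not wait for the setup to finish, and the marker file has to be deleted before the next run.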

## Supported Behave Arguments

- no_color

- color

- define

- exclude

- include

- no_snippets

- no_capture

- name

- capture

- no_capture_stderr

- capture_stderr

- no_logcapture

- logcapture

- logging_level

- summary

- quiet

- stop

- tags

- tags-help

**Important**: Some arguments do not apply when executing tests with more than one parallel process, such as **stop** and **color**.

## Specific Arguments from BehaveX

- **output-folder** (-o or --output-folder): Specifies the output folder for execution reports (JUnit, HTML, JSON).

- **dry-run** (-d or --dry-run): Performs a dry-run by listing scenarios in the output reports.

- **parallel-processes** (--parallel-processes): Specifies the number of parallel Behave processes.

- **parallel-scheme** (--parallel-scheme): Performs parallel test execution by [scenario|feature].

- **show-progress-bar** (--show-progress-bar): Displays a progress bar in the console during parallel test execution.

- **formatter** (--formatter): Specifies a custom formatter for test reports (e.g., Allure formatter).

- **formatter-outdir** (--formatter-outdir): Specifies the output directory for formatter results (default: output/allure-results for Allure).

- **no-formatter-attach-logs** (--no-formatter-attach-logs): Disables automatic attachment of scenario log files to formatter reports.

- **order-tests** (--order-tests): Enables sorting of scenarios/features by execution order using special order tags (only effective with parallel execution).

- **order-tests-strict** (--order-tests-strict): Ensures tests run in strict order in parallel mode, with tests waiting for lower-order tests to complete (automatically enables --order-tests). May reduce parallel execution performance.

- **order-tag-prefix** (--order-tag-prefix): Specifies the prefix for order tags (default: 'ORDER').

## Parallel Test Executions

BehaveX manages concurrent executions of Behave instances in multiple processes. You can perform parallel test executions by feature or scenario. When the parallel scheme is by scenario, the examples of a scenario outline are also executed in parallel.

### Examples:

```bash

behavex --parallel-processes=3

behavex -t=@<TAG> --parallel-processes=3

behavex -t=@<TAG> --parallel-processes=2 --parallel-scheme=scenario

behavex -t=@<TAG> --parallel-processes=5 --parallel-scheme=feature

behavex -t=@<TAG> --parallel-processes=5 --parallel-scheme=feature --show-progress-bar

```

### Identifying Each Parallel Process

BehaveX adds a `worker_id` entry to the Behave userdata of each parallel process. It contains the ID of the current Behave process.

For example, if BehaveX is started with `--parallel-processes 2`, the first instance of behave will receive `worker_id=0`, and the second instance will receive `worker_id=1`.

This variable can be accessed within the python tests using `context.config.userdata['worker_id']`.
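One common use of `worker_id` is to give each process its own resource, such as a port or a database name. The sketch below assumes that approach; the helper `worker_port` and the base port are hypothetical, and the `int()` conversion is a precaution in case the value arrives as a string.

```python
def worker_port(userdata, base_port=8000):
    """Return a port unique to this worker: base_port + worker_id."""
    # Fall back to 0 when worker_id is missing (assumed here for non-parallel runs).
    worker_id = int(userdata.get("worker_id", 0))
    return base_port + worker_id


# Hypothetical usage in environment.py:
# def before_all(context):
#     context.port = worker_port(context.config.userdata)
```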

## Test Execution Ordering

BehaveX provides the ability to control the execution order of your test scenarios and features using special order tags when running tests in parallel. This feature ensures that tests run in a predictable sequence during parallel execution, which is particularly useful for setup/teardown scenarios, or when you need specific tests to run before others.

### Purpose and Use Cases

Test execution ordering is valuable in scenarios such as:

- **Setup and Teardown**: Ensure setup scenarios run first and cleanup scenarios run last

- **Data Dependencies**: Execute data creation tests before tests that consume that data

- **Performance Testing**: Control the sequence to avoid resource conflicts

- **Smoke Testing**: Prioritize critical smoke tests to run first

- **Parallel Execution Optimization**: Run slower test scenarios first to maximize parallel process utilization and minimize overall execution time

### Command Line Arguments

BehaveX provides three arguments to control test execution ordering during parallel execution:

- **--order-tests** or **--order_tests**: Enables sorting of scenarios/features by execution order using special order tags (only effective with parallel execution)

- **--order-tests-strict** or **--order_tests_strict**: Ensures tests run in strict order in parallel mode (automatically enables --order-tests). Tests with higher order numbers will wait for all tests with lower order numbers to complete first. Note: This may reduce parallel execution performance as processes must wait for lower-order tests to complete.

- **--order-tag-prefix** or **--order_tag_prefix**: Specifies the prefix for order tags (default: 'ORDER')

### How to Use Order Tags

To control execution order, add tags to your scenarios using the following format:

```gherkin

@ORDER_001
Scenario: This scenario will run first
  Given I perform initial setup
  When I execute the first test
  Then the setup should be complete

@ORDER_010
Scenario: This scenario will run second
  Given the initial setup is complete
  When I execute the dependent test
  Then the test should pass

@ORDER_100
Scenario: This scenario will run last
  Given all previous tests have completed
  When I perform cleanup
  Then all resources should be cleaned up

```

**Important Notes:**

- Test execution ordering only works when running tests in parallel (`--parallel-processes` greater than 1)

- Lower numbers execute first (e.g., `@ORDER_001` runs before `@ORDER_010`)

- Scenarios without order tags will run after all ordered scenarios (Default order: 9999)

- To run scenarios at the end of execution, you can use order numbers greater than 9999 (e.g., `@ORDER_10000`)

- **Regular ordering** (`--order-tests`): When the number of parallel processes equals or exceeds the number of ordered scenarios, ordering has no practical effect since all scenarios can run simultaneously

- **Strict ordering** (`--order-tests-strict`): Tests will always wait for lower-order tests to complete, regardless of available processes, which may reduce overall execution performance

- Use zero-padded numbers (e.g., `001`, `010`, `100`) for better sorting visualization

- The order tags work with both parallel feature and scenario execution schemes

- `--order-tests-strict` automatically enables `--order-tests`, so you don't need to specify both arguments
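The sorting rules in the notes above can be illustrated with a short Python sketch. This is not BehaveX's implementation; the function `order_of` and the sample scenario names are hypothetical, and only the default order of 9999 and the tag format come from the notes.

```python
import re

DEFAULT_ORDER = 9999  # order assigned to scenarios without an order tag


def order_of(tags, prefix="ORDER"):
    """Return the numeric order from the first matching tag, else DEFAULT_ORDER."""
    pattern = re.compile(r"^@?" + re.escape(prefix) + r"_(\d+)$")
    for tag in tags:
        match = pattern.match(tag)
        if match:
            return int(match.group(1))
    return DEFAULT_ORDER


scenarios = [
    ("cleanup", ["ORDER_100"]),
    ("untagged", ["SMOKE"]),
    ("setup", ["ORDER_001"]),
    ("very last", ["ORDER_10000"]),
    ("dependent", ["ORDER_010"]),
]

# Lower numbers first; untagged scenarios land at 9999, before ORDER_10000.
ordered = sorted(scenarios, key=lambda item: order_of(item[1]))
print([name for name, _ in ordered])
```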

### Execution Examples

#### Basic Ordering

```bash

# Enable test ordering with default ORDER prefix (requires parallel execution)

behavex --order-tests --parallel-processes=2 -t=@SMOKE

# Enable test ordering with custom prefix

behavex --order-tests --order-tag-prefix=PRIORITY --parallel-processes=3 -t=@REGRESSION

```

#### Strict Ordering (Wait for Lower Order Tests)

```bash

# Enable strict test ordering - tests wait for lower order tests to complete

behavex --order-tests-strict --parallel-processes=3 -t=@INTEGRATION

# Strict ordering with custom prefix

behavex --order-tests-strict --order-tag-prefix=SEQUENCE --parallel-processes=2

# Note: --order-tests-strict automatically enables --order-tests, so you don't need both

```

#### Ordering with Parallel Execution

```bash

# Order tests and run with parallel processes by scenario

behavex --order-tests --parallel-processes=4 --parallel-scheme=scenario

# Order tests and run with parallel processes by feature

behavex --order-tests --parallel-processes=3 --parallel-scheme=feature

# Custom order prefix with parallel execution

behavex --order-tests --order-tag-prefix=SEQUENCE --parallel-processes=2

# Strict ordering by scenario (tests wait for completion of lower order tests)

behavex --order-tests-strict --parallel-processes=4 --parallel-scheme=scenario

# Strict ordering by feature

behavex --order-tests-strict --parallel-processes=3 --parallel-scheme=feature

```

### Understanding Regular vs Strict Ordering

#### Regular Ordering (`--order-tests`)

- Tests are **sorted** by order tags but can run **simultaneously** if parallel processes are available

- Example: With 5 parallel processes and tests `@ORDER_001`, `@ORDER_002`, `@ORDER_003`, all three tests start at the same time

- **Faster execution** but **less predictable** completion order

- Best for: Performance optimization, general prioritization

#### Strict Ordering (`--order-tests-strict`)

- Tests **wait** for all lower-order tests to **complete** before starting

- Example: `@ORDER_002` tests won't start until all `@ORDER_001` tests are finished

- **Slower execution** but **guaranteed** sequential completion

- Best for: Setup/teardown sequences, data dependencies, strict test dependencies

**Performance Comparison:**

```bash

# Scenario: 6 tests with ORDER_001, ORDER_002, ORDER_003 tags and 3 parallel processes

# Regular ordering (--order-tests):

# Time 0: ORDER_001, ORDER_002, ORDER_003 all start simultaneously

# Total time: ~1 minute (all tests run in parallel)

# Strict ordering (--order-tests-strict):

# Time 0: Only ORDER_001 tests start

# Time 1: ORDER_001 finishes → ORDER_002 tests start

# Time 2: ORDER_002 finishes → ORDER_003 tests start

# Total time: ~3 minutes (sequential execution)

```
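The timing difference can be reproduced with a small simulation. This is a sketch under simplifying assumptions, not a model of BehaveX internals: three tests of one minute each (one per order tag), three workers, greedy assignment of each test to the first free worker, and no process start-up cost. All function names are hypothetical.

```python
import heapq


def makespan(durations, workers):
    """Greedy scheduling: each test starts on the worker that frees up first."""
    free_at = [0.0] * workers
    heapq.heapify(free_at)
    for duration in durations:
        start = heapq.heappop(free_at)
        heapq.heappush(free_at, start + duration)
    return max(free_at)


def regular_total(tests, workers):
    """Tests are sorted by order but may overlap freely."""
    ordered = sorted(tests, key=lambda test: test[0])
    return makespan([duration for _, duration in ordered], workers)


def strict_total(tests, workers):
    """Each order group must finish before the next group starts."""
    groups = {}
    for order, duration in tests:
        groups.setdefault(order, []).append(duration)
    return sum(makespan(groups[order], workers) for order in sorted(groups))


# (order, duration in minutes)
tests = [(1, 1.0), (2, 1.0), (3, 1.0)]
print(regular_total(tests, workers=3), strict_total(tests, workers=3))
```

With several tests per order tag, strict ordering still lets tests within the same group run in parallel, so the penalty depends on how evenly the groups fill the available workers.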

### Using Custom Order Prefixes

You can customize the order tag prefix to match your team's naming conventions:

```bash

# Using PRIORITY prefix

behavex --order-tests --order-tag-prefix=PRIORITY

# Now use tags like @PRIORITY_001, @PRIORITY_010, etc.

```

```gherkin

@PRIORITY_001
Scenario: High priority scenario
  Given I need to run this first

@PRIORITY_050
Scenario: Medium priority scenario
  Given this can run after high priority

@PRIORITY_100
Scenario: Low priority scenario
  Given this runs last

```

### Feature-Level Ordering

When using `--parallel-scheme=feature`, the ordering is determined by ORDER tags placed directly on the feature itself:

```gherkin

@ORDER_001
Feature: Database Setup Feature

  Scenario: Create database schema
    Given I create the database schema

  Scenario: Insert initial data
    Given I insert the initial data

# This entire feature will be ordered as ORDER_001 (tag on the feature)

@ORDER_002
Feature: Application Tests Feature

  Scenario: Test user login
    Given I test user login

# This entire feature will be ordered as ORDER_002 (tag on the feature)

Feature: Unordered Feature

  Scenario: Some test
    Given I perform some test

# This feature has no ORDER tag, so it gets the default order 9999

```

## Test Execution Reports

### JSON Report

Contains information about test scenarios and execution status. This is the base report generated by BehaveX, which is used to generate the HTML report. Available at:

```bash

<output_folder>/report.json

```
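Since the JSON report is a plain file, other tools can load it directly. The sketch below only reads and parses the file; it makes no assumption about the report's schema. The helper name `load_report` is hypothetical, and the default folder name `output` is an assumption inferred from the default Allure results path.

```python
import json
import os


def load_report(output_folder="output"):
    """Parse <output_folder>/report.json and return the resulting Python object."""
    path = os.path.join(output_folder, "report.json")
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


# Hypothetical usage after a run with -o=execution_evidence:
# report = load_report("execution_evidence")
# print(type(report))
```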

### HTML Report

A friendly test execution report containing information related to test scenarios, execution status, evidence, and metrics. Available at:

```bash

<output_folder>/report.html

```

### JUnit Report

One JUnit file per feature, available at:

```bash

<output_folder>/behave/*.xml

```

These JUnit reports are generated by the BehaveX wrapper and replace the ones produced by Behave, so that muted test scenarios are handled correctly on build servers.

## Attaching Images to the HTML Report

You can attach images or screenshots to the HTML report using your own mechanism to capture screenshots or retrieve images. Utilize the **attach_image_file** or **attach_image_binary** methods provided by the wrapper.

These methods can be called from hooks in the `environment.py` file or directly from step definitions.

### Example 1: Attaching an Image File

```python

from behave import given
from behavex_images import image_attachments


@given('I take a screenshot from the current page')
def step_impl(context):
    # Attach an existing image file to the HTML report for this scenario
    image_attachments.attach_image_file(context, 'path/to/image.png')

```

### Example 2: Attaching an Image Binary

```python

from behavex_images import image_attachments
from behavex_images.image_attachments import AttachmentsCondition


def before_all(context):
    # Attach images to the report only when the scenario fails
    image_attachments.set_attachments_condition(context, AttachmentsCondition.ONLY_ON_FAILURE)


def after_step(context, step):
    # selenium_driver is assumed to be a WebDriver instance created elsewhere
    image_attachments.attach_image_binary(context, selenium_driver.get_screenshot_as_png())

```

By default, images are attached to the HTML report only when the test fails. You can modify this behavior by setting the condition using the **set_attachments_condition** method.

For more information, check the [behavex-images](https://github.com/abmercado19/behavex-images) library, which is included with BehaveX 3.3.0 and above.

If you are using BehaveX < 3.3.0, you can still attach images to the HTML report by installing the **behavex-images** package with the following command:

> pip install behavex-images

## Attaching Additional Execution Evidence to the HTML Report

Providing ample evidence in test execution reports is crucial for identifying the root cause of issues. Any evidence file generated during a test scenario can be stored in a folder path provided by the wrapper for each scenario.

The evidence folder path is automatically generated and stored in the **"context.evidence_path"** context variable. This variable is updated by the wrapper before executing each scenario, and all files copied into that path will be accessible from the HTML report linked to the executed scenario.
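As an illustration of the mechanism described above, the following sketch writes a text file into the scenario's evidence folder. The helper `save_evidence` and the file name are hypothetical; only `context.evidence_path` comes from BehaveX. The `os.makedirs` call is a precaution in case the folder does not exist yet.

```python
import os


def save_evidence(context, file_name, text):
    """Write a text file into the current scenario's evidence folder."""
    os.makedirs(context.evidence_path, exist_ok=True)
    target = os.path.join(context.evidence_path, file_name)
    with open(target, "w", encoding="utf-8") as handle:
        handle.write(text)
    return target


# Hypothetical hook in environment.py:
# def after_scenario(context, scenario):
#     save_evidence(context, "status.txt", f"{scenario.name}: {scenario.status}")
```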

## Test Logs per Scenario

The HTML report includes detailed test execution logs for each scenario. These logs are generated using the **logging** library and are linked to the specific test scenario. This feature allows for easy debugging and analysis of test failures.
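In practice this means that ordinary `logging` calls made from step definitions or hooks should end up in the scenario log. A minimal sketch, assuming that records propagating to the root logger are captured; the logger name and the helper `log_order_total` are hypothetical.

```python
import logging

logger = logging.getLogger("checkout_steps")  # hypothetical logger name


def log_order_total(order_id, total):
    """Emit one log record describing a hypothetical checkout step."""
    logger.info("Order %s total is %.2f", order_id, total)


# Hypothetical usage inside a step definition:
# @when('I check out order "{order_id}"')
# def step_impl(context, order_id):
#     log_order_total(order_id, 12.5)
```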

## Metrics and Insights

The HTML report provides a range of metrics to help you understand the performance and effectiveness of your test suite. These metrics include:

* **Automation Rate**: The percentage of scenarios that are automated.

* **Pass Rate**: The percentage of scenarios that have passed.

* **Steps Execution Counter and Average Execution Time**: These metrics provide insights into the execution time and frequency of steps within scenarios.

## Dry Runs

BehaveX enhances the traditional Behave dry run feature to provide more value. The HTML report generated during a dry run can be shared with stakeholders to discuss scenario specifications and test plans.

To execute a dry run, we recommend using the following command:

> behavex -t=@TAG --dry-run

## Muting Test Scenarios

In some cases, you may want to mute test scenarios that are failing but are not critical to the build process. This can be achieved by adding the @MUTE tag to the scenario. Muted scenarios will still be executed, but their failures will not be reported in the JUnit reports. However, the execution details will be visible in the HTML report.

## Handling Failing Scenarios

### @AUTORETRY Tag

For scenarios that are prone to intermittent failures or are affected by infrastructure issues, you can use the @AUTORETRY tag. This tag enables automatic re-execution of the scenario in case of failure.

You can also specify the number of retries by adding the total retries as a suffix in the @AUTORETRY tag. For example, @AUTORETRY_3 will retry the scenario 3 times if the scenario fails.

The re-execution will be performed right after a failing execution arises, and the latest execution is the one that will be reported.
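The retry behaviour described above can be sketched as a simple loop. This is an illustration, not BehaveX's code: both function names are hypothetical, and the retry count used for a plain `@AUTORETRY` tag (`default_retries=1`) is an assumption, since it is not stated here.

```python
import re


def retries_from_tags(tags, default_retries=1):
    """Return N for AUTORETRY_N, default_retries for AUTORETRY, 0 if no such tag."""
    for tag in tags:
        match = re.match(r"^@?AUTORETRY(?:_(\d+))?$", tag)
        if match:
            return int(match.group(1)) if match.group(1) else default_retries
    return 0


def run_with_retries(run_scenario, retries):
    """Re-run immediately after each failure; the last execution is the one reported."""
    passed = run_scenario()
    attempts = 1
    while not passed and attempts <= retries:
        passed = run_scenario()
        attempts += 1
    return passed, attempts
```

For example, a scenario tagged `@AUTORETRY_3` that fails twice and then passes would be reported as passed after three executions.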

### Rerunning Failed Scenarios

After executing tests, if there are failing scenarios, a **failing_scenarios.txt** file will be generated in the output folder. This file allows you to rerun all failed scenarios using the following command:

> behavex -rf=./<OUTPUT_FOLDER\>/failing_scenarios.txt

or

> behavex --rerun-failures=./<OUTPUT_FOLDER\>/failing_scenarios.txt

To avoid overwriting the previous test report, it is recommended to specify a different output folder using the **-o** or **--output-folder** argument.

Note that the **-o** or **--output-folder** argument does not work with parallel test executions.

## Displaying Progress Bar in Console

When running tests in parallel, you can display a progress bar in the console to monitor the test execution progress. To enable the progress bar, use the **--show-progress-bar** argument:

> behavex -t=@TAG --parallel-processes=3 --show-progress-bar

If you are printing logs in the console, you can configure the progress bar to display updates on a new line by adding the following setting to the BehaveX configuration file:

> [progress_bar]

>

> print_updates_in_new_lines="true"

## Allure Reports Integration

BehaveX provides integration with Allure, a flexible, lightweight multi-language test reporting tool. The Allure formatter creates detailed and visually appealing reports that include comprehensive test information, evidence, and categorization of test results.

**Note**: Since BehaveX is designed to run tests in parallel, the Allure formatter processes the consolidated `report.json` file after all parallel test executions are completed. This ensures that all test results from different parallel processes are properly aggregated before generating the final Allure report.

### Prerequisites

1. Install Allure on your system. Please refer to the [official Allure installation documentation](https://docs.qameta.io/allure/#_installing_a_commandline) for detailed instructions for your operating system.

### Using the Allure Formatter

To generate Allure reports, use the `--formatter` argument to specify the Allure formatter:

```bash

behavex -t=@TAG --formatter=behavex.outputs.formatters.allure_behavex_formatter:AllureBehaveXFormatter

```

By default, the Allure results will be generated in the `output/allure-results` directory. You can specify a different output directory using the `--formatter-outdir` argument:

```bash

behavex -t=@TAG --formatter=behavex.outputs.formatters.allure_behavex_formatter:AllureBehaveXFormatter --formatter-outdir=my-allure-results

```

### Attaching Screenshots and Evidence to Allure Reports

When using Allure reports, you should continue to use the same methods for attaching screenshots and evidence as described in the sections above:

- **For screenshots**: Use the methods described in [Attaching Images to the HTML Report](#attaching-images-to-the-html-report) section. The `attach_image_file()` and `attach_image_binary()` methods from the `behavex_images` library will automatically work with Allure reports.

- **For additional evidence**: Use the approach described in [Attaching Additional Execution Evidence to the HTML Report](#attaching-additional-execution-evidence-to-the-html-report) section. Files stored in the `context.evidence_path` will be automatically included in the Allure reports.

The evidence and screenshots attached using these methods will be seamlessly integrated into your Allure reports, providing comprehensive test execution documentation.

### Viewing Allure Reports

After running the tests, you can generate and view the Allure report using the following commands:

```bash

# Serve the report (opens in a browser)

allure serve output/allure-results

# Or... generate a single HTML file report

allure generate output/allure-results --output output/allure-report --clean --single-file

# Or... generate a static report

allure generate output/allure-results --output output/allure-report --clean

```

### Disabling Log Attachments

By default, `scenario.log` files are attached to each scenario in the Allure report. You can disable this by passing the `--no-formatter-attach-logs` argument:

```bash

behavex --formatter behavex.outputs.formatters.allure_behavex_formatter:AllureBehaveXFormatter --no-formatter-attach-logs

```

## Show Your Support

**If you find this project helpful or interesting, we would appreciate it if you could give it a star** (:star:). It's a simple way to show your support and let us know that you find value in our work.

By starring this repository, you help us gain visibility among other developers and contributors. It also serves as motivation for us to continue improving and maintaining this project.

Thank you in advance for your support! We truly appreciate it.

Raw data

{

"_id": null,

"home_page": "https://github.com/hrcorval/behavex",

"name": "behavex",

"maintainer": null,

"docs_url": null,

"requires_python": ">=3.5",

"maintainer_email": null,

"keywords": null,

"author": "Hernan Rey",

"author_email": "Hernan Rey <behavex_users@googlegroups.com>",

"download_url": "https://files.pythonhosted.org/packages/58/c5/9242be55fe1d029d992b51801c9c919ed177403be88dd6c1c0c99faec223/behavex-4.4.1.tar.gz",

"platform": "any",

"description": "[](https://pepy.tech/project/behavex)\n[](https://badge.fury.io/py/behavex)\n[](https://pypi.org/project/behavex/)\n[](https://libraries.io/github/hrcorval/behavex)\n[](https://github.com/hrcorval/behavex/blob/main/LICENSE)\n[](https://github.com/hrcorval/behavex/actions)\n[](https://github.com/hrcorval/behavex/commits/main)\n\n# BehaveX Documentation\n\n## \u2728 Latest Features\n\nJust to mention the most important features delivered in latest BehaveX releases:\n\n\ud83c\udfaf **Test Execution Ordering** *(v4.4.1)* - Control the sequence of scenario and feature execution during parallel runs using order tags (e.g., `@ORDER_001`, `@ORDER_010`). Now includes strict ordering mode (`--order-tests-strict`) for scenarios that must wait for lower-order tests to complete.\n\n\ud83d\udcca **Allure Reports Integration** *(v4.2.1)* - Generate beautiful, comprehensive test reports with Allure framework integration.\n\n\ud83d\udcc8 **Console Progress Bar** *(v3.2.13)* - Real-time progress tracking during parallel test execution.\n\n## Table of Contents\n- [Introduction](#introduction)\n- [Features](#features)\n- [Installation Instructions](#installation-instructions)\n- [Execution Instructions](#execution-instructions)\n- [Constraints](#constraints)\n- [Supported Behave Arguments](#supported-behave-arguments)\n- [Specific Arguments from BehaveX](#specific-arguments-from-behavex)\n- [Parallel Test Executions](#parallel-test-executions)\n- [Test Execution Ordering](#test-execution-ordering)\n- [Test Execution Reports](#test-execution-reports)\n- [Attaching Images to the HTML Report](#attaching-images-to-the-html-report)\n- [Attaching Additional Execution Evidence to the HTML Report](#attaching-additional-execution-evidence-to-the-html-report)\n- [Test Logs per Scenario](#test-logs-per-scenario)\n- [Metrics and Insights](#metrics-and-insights)\n- [Dry Runs](#dry-runs)\n- [Muting Test Scenarios](#muting-test-scenarios)\n- [Handling Failing 
Scenarios](#handling-failing-scenarios)\n- [Displaying Progress Bar in Console](#displaying-progress-bar-in-console)\n- [Allure Reports Integration](#allure-reports-integration)\n- [Show Your Support](#show-your-support)\n\n## Introduction\n\n**BehaveX** is a BDD testing solution built on top of the Python Behave library, orchestrating parallel test sessions to enhance your testing workflow with additional features and performance improvements. It's particularly beneficial in the following scenarios:\n\n- **Accelerating test execution**: Significantly reduce test run times through parallel execution by feature or scenario.\n- **Enhancing test reporting**: Generate comprehensive and visually appealing HTML and JSON reports for in-depth analysis and integration with other tools.\n- **Improving test visibility**: Provide detailed evidence, such as screenshots and logs, essential for understanding test failures and successes.\n- **Optimizing test automation**: Utilize features like test retries, test muting, and performance metrics for efficient test maintenance and analysis.\n- **Managing complex test suites**: Handle large test suites with advanced features for organization, execution, and comprehensive reporting through multiple formats and custom formatters.\n\n## Features\n\nBehaveX provides the following features:\n\n- **Parallel Test Executions**: Execute tests using multiple processes, either by feature or by scenario.\n- **Enhanced Reporting**: Generate comprehensive reports in multiple formats (HTML, JSON, JUnit) and utilize custom formatters like Allure for advanced reporting capabilities that can be exported and integrated with third-party tools.\n- **Evidence Collection**: Include images/screenshots and additional evidence in the HTML report.\n- **Test Logs**: Automatically compile logs generated during test execution into individual log reports for each scenario.\n- **Test Muting**: Add the `@MUTE` tag to test scenarios to execute them without including 
them in JUnit reports.\n- **Execution Metrics**: Generate metrics in the HTML report for the executed test suite, including Automation Rate, Pass Rate, Steps execution counter and average execution time.\n- **Dry Runs**: Perform dry runs to see the full list of scenarios in the HTML report without executing the tests. It overrides the `-d` Behave argument.\n- **Auto-Retry for Failing Scenarios**: Use the `@AUTORETRY` tag to automatically re-execute failing scenarios. You can also re-run all failing scenarios using the **failing_scenarios.txt** file.\n\n## Installation Instructions\n\nTo install BehaveX, execute the following command:\n\n```bash\npip install behavex\n```\n\n## Execution Instructions\n\nExecute BehaveX in the same way as Behave from the command line, using the `behavex` command. Here are some examples:\n\n- **Run scenarios tagged as `TAG_1` but not `TAG_2`:**\n ```bash\n behavex -t=@TAG_1 -t=~@TAG_2\n ```\n\n- **Run scenarios tagged as `TAG_1` or `TAG_2`:**\n ```bash\n behavex -t=@TAG_1,@TAG_2\n ```\n\n- **Run scenarios tagged as `TAG_1` using 4 parallel processes:**\n ```bash\n behavex -t=@TAG_1 --parallel-processes=4 --parallel-scheme=scenario\n ```\n\n- **Run scenarios located at specific folders using 2 parallel processes:**\n ```bash\n behavex features/features_folder_1 features/features_folder_2 --parallel-processes=2\n ```\n\n- **Run scenarios from a specific feature file using 2 parallel processes:**\n ```bash\n behavex features_folder_1/sample_feature.feature --parallel-processes=2\n ```\n\n- **Run scenarios tagged as `TAG_1` from a specific feature file using 2 parallel processes:**\n ```bash\n behavex features_folder_1/sample_feature.feature -t=@TAG_1 --parallel-processes=2\n ```\n\n- **Run scenarios tagged as `TAG_1`, using 5 parallel processes executing a 
feature on each process:**\n ```bash\n behavex -t=@TAG_1 --parallel-processes=5 --parallel-scheme=feature\n ```\n\n- **Perform a dry run of the scenarios tagged as `TAG_1`, and generate the HTML report:**\n ```bash\n behavex -t=@TAG_1 --dry-run\n ```\n\n- **Run scenarios tagged as `TAG_1`, generating the execution evidence into a specific folder:**\n ```bash\n behavex -t=@TAG_1 -o=execution_evidence\n ```\n\n- **Run scenarios with execution ordering enabled (requires parallel execution):**\n ```bash\n behavex -t=@TAG_1 --order-tests --parallel-processes=2\n ```\n\n- **Run scenarios with strict execution ordering (tests wait for lower order tests to complete):**\n ```bash\n behavex -t=@TAG_1 --order-tests-strict --parallel-processes=2\n ```\n\n- **Run scenarios with custom order tag prefix and parallel execution:**\n ```bash\n behavex --order-tests --order-tag-prefix=PRIORITY --parallel-processes=3\n ```\n\n\n\n## Constraints\n\n- BehaveX is currently implemented on top of Behave **v1.2.6**, and not all Behave arguments are yet supported.\n- Parallel execution is implemented using concurrent Behave processes. This means that any hooks defined in the `environment.py` module will run in each parallel process. This includes the **before_all** and **after_all** hooks, which will execute in every parallel process. 
The same is true for the **before_feature** and **after_feature** hooks when parallel execution is organized by scenario.\n\n## Supported Behave Arguments\n\n- no_color\n- color\n- define\n- exclude\n- include\n- no_snippets\n- no_capture\n- name\n- capture\n- no_capture_stderr\n- capture_stderr\n- no_logcapture\n- logcapture\n- logging_level\n- summary\n- quiet\n- stop\n- tags\n- tags-help\n\n**Important**: Some arguments do not apply when executing tests with more than one parallel process, such as **stop** and **color**.\n\n## Specific Arguments from BehaveX\n\n- **output-folder** (-o or --output-folder): Specifies the output folder for execution reports (JUnit, HTML, JSON).\n- **dry-run** (-d or --dry-run): Performs a dry-run by listing scenarios in the output reports.\n- **parallel-processes** (--parallel-processes): Specifies the number of parallel Behave processes.\n- **parallel-scheme** (--parallel-scheme): Performs parallel test execution by [scenario|feature].\n- **show-progress-bar** (--show-progress-bar): Displays a progress bar in the console during parallel test execution.\n- **formatter** (--formatter): Specifies a custom formatter for test reports (e.g., Allure formatter).\n- **formatter-outdir** (--formatter-outdir): Specifies the output directory for formatter results (default: output/allure-results for Allure).\n- **no-formatter-attach-logs** (--no-formatter-attach-logs): Disables automatic attachment of scenario log files to formatter reports.\n- **order-tests** (--order-tests): Enables sorting of scenarios/features by execution order using special order tags (only effective with parallel execution).\n- **order-tests-strict** (--order-tests-strict): Ensures tests run in strict order in parallel mode, with tests waiting for lower-order tests to complete (automatically enables --order-tests). 
May reduce parallel execution performance.\n- **order-tag-prefix** (--order-tag-prefix): Specifies the prefix for order tags (default: 'ORDER').\n\n## Parallel Test Executions\n\nBehaveX manages concurrent executions of Behave instances in multiple processes. You can perform parallel test executions by feature or scenario. When the parallel scheme is by scenario, the examples of a scenario outline are also executed in parallel.\n\n\n\n### Examples:\n```bash\nbehavex --parallel-processes=3\nbehavex -t=@<TAG> --parallel-processes=3\nbehavex -t=@<TAG> --parallel-processes=2 --parallel-scheme=scenario\nbehavex -t=@<TAG> --parallel-processes=5 --parallel-scheme=feature\nbehavex -t=@<TAG> --parallel-processes=5 --parallel-scheme=feature --show-progress-bar\n```\n\n### Identifying Each Parallel Process\n\nBehaveX populates the Behave contexts with the `worker_id` user-specific data. This variable contains the id of the current behave process.\n\nFor example, if BehaveX is started with `--parallel-processes 2`, the first instance of behave will receive `worker_id=0`, and the second instance will receive `worker_id=1`.\n\nThis variable can be accessed within the python tests using `context.config.userdata['worker_id']`.\n\n## Test Execution Ordering\n\nBehaveX provides the ability to control the execution order of your test scenarios and features using special order tags when running tests in parallel. 
This feature ensures that tests run in a predictable sequence during parallel execution, which is particularly useful for setup/teardown scenarios, or when you need specific tests to run before others.\n\n### Purpose and Use Cases\n\nTest execution ordering is valuable in scenarios such as:\n\n- **Setup and Teardown**: Ensure setup scenarios run first and cleanup scenarios run last\n- **Data Dependencies**: Execute data creation tests before tests that consume that data\n- **Performance Testing**: Control the sequence to avoid resource conflicts\n- **Smoke Testing**: Prioritize critical smoke tests to run first\n- **Parallel Execution Optimization**: Run slower test scenarios first to maximize parallel process utilization and minimize overall execution time\n\n### Command Line Arguments\n\nBehaveX provides three arguments to control test execution ordering during parallel execution:\n\n- **--order-tests** or **--order_tests**: Enables sorting of scenarios/features by execution order using special order tags (only effective with parallel execution)\n- **--order-tests-strict** or **--order_tests_strict**: Ensures tests run in strict order in parallel mode (automatically enables --order-tests). Tests with higher order numbers will wait for all tests with lower order numbers to complete first. 
Note: This may reduce parallel execution performance as processes must wait for lower-order tests to complete.\n- **--order-tag-prefix** or **--order_tag_prefix**: Specifies the prefix for order tags (default: 'ORDER')\n\n### How to Use Order Tags\n\nTo control execution order, add tags to your scenarios using the following format:\n\n```gherkin\n@ORDER_001\nScenario: This scenario will run first\n Given I perform initial setup\n When I execute the first test\n Then the setup should be complete\n\n@ORDER_010\nScenario: This scenario will run second\n Given the initial setup is complete\n When I execute the dependent test\n Then the test should pass\n\n@ORDER_100\nScenario: This scenario will run last\n Given all previous tests have completed\n When I perform cleanup\n Then all resources should be cleaned up\n```\n\n**Important Notes:**\n- Test execution ordering only works when running tests in parallel (`--parallel-processes > 1`)\n- Lower numbers execute first (e.g., `@ORDER_001` runs before `@ORDER_010`)\n- Scenarios without order tags will run after all ordered scenarios (Default order: 9999)\n- To run scenarios at the end of execution, you can use order numbers greater than 9999 (e.g., `@ORDER_10000`)\n- **Regular ordering** (`--order-tests`): When the number of parallel processes equals or exceeds the number of ordered scenarios, ordering has no practical effect since all scenarios can run simultaneously\n- **Strict ordering** (`--order-tests-strict`): Tests will always wait for lower-order tests to complete, regardless of available processes, which may reduce overall execution performance\n- Use zero-padded numbers (e.g., `001`, `010`, `100`) for better sorting visualization\n- The order tags work with both parallel feature and scenario execution schemes\n- `--order-tests-strict` automatically enables `--order-tests`, so you don't need to specify both arguments\n\n### Execution Examples\n\n#### Basic Ordering\n```bash\n# Enable test ordering with default 
ORDER prefix (requires parallel execution)\nbehavex --order-tests --parallel-processes=2 -t=@SMOKE\n\n# Enable test ordering with custom prefix\nbehavex --order-tests --order-tag-prefix=PRIORITY --parallel-processes=3 -t=@REGRESSION\n```\n\n#### Strict Ordering (Wait for Lower Order Tests)\n```bash\n# Enable strict test ordering - tests wait for lower order tests to complete\nbehavex --order-tests-strict --parallel-processes=3 -t=@INTEGRATION\n\n# Strict ordering with custom prefix\nbehavex --order-tests-strict --order-tag-prefix=SEQUENCE --parallel-processes=2\n\n# Note: --order-tests-strict automatically enables --order-tests, so you don't need both\n```\n\n#### Ordering with Parallel Execution\n```bash\n# Order tests and run with parallel processes by scenario\nbehavex --order-tests --parallel-processes=4 --parallel-scheme=scenario\n\n# Order tests and run with parallel processes by feature\nbehavex --order-tests --parallel-processes=3 --parallel-scheme=feature\n\n# Custom order prefix with parallel execution\nbehavex --order-tests --order-tag-prefix=SEQUENCE --parallel-processes=2\n\n# Strict ordering by scenario (tests wait for completion of lower order tests)\nbehavex --order-tests-strict --parallel-processes=4 --parallel-scheme=scenario\n\n# Strict ordering by feature\nbehavex --order-tests-strict --parallel-processes=3 --parallel-scheme=feature\n```\n\n### Understanding Regular vs Strict Ordering\n\n#### Regular Ordering (`--order-tests`)\n- Tests are **sorted** by order tags but can run **simultaneously** if parallel processes are available\n- Example: With 5 parallel processes and tests `@ORDER_001`, `@ORDER_002`, `@ORDER_003`, all three tests start at the same time\n- **Faster execution** but **less predictable** completion order\n- Best for: Performance optimization, general prioritization\n\n#### Strict Ordering (`--order-tests-strict`)\n- Tests **wait** for all lower-order tests to **complete** before starting\n- Example: `@ORDER_002` tests won't 
start until all `@ORDER_001` tests are finished\n- **Slower execution** but **guaranteed** sequential completion\n- Best for: Setup/teardown sequences, data dependencies, strict test dependencies\n\n**Performance Comparison:**\n```bash\n# Scenario: 6 tests with ORDER_001, ORDER_002, ORDER_003 tags and 3 parallel processes\n\n# Regular ordering (--order-tests):\n# Time 0: ORDER_001, ORDER_002, ORDER_003 all start simultaneously\n# Total time: ~1 minute (all tests run in parallel)\n\n# Strict ordering (--order-tests-strict):\n# Time 0: Only ORDER_001 tests start\n# Time 1: ORDER_001 finishes \u2192 ORDER_002 tests start\n# Time 2: ORDER_002 finishes \u2192 ORDER_003 tests start\n# Total time: ~3 minutes (sequential execution)\n```\n\n### Using Custom Order Prefixes\n\nYou can customize the order tag prefix to match your team's naming conventions:\n\n```bash\n# Using PRIORITY prefix\nbehavex --order-tests --order-tag-prefix=PRIORITY\n\n# Now use tags like @PRIORITY_001, @PRIORITY_010, etc.\n```\n\n```gherkin\n@PRIORITY_001\nScenario: High priority scenario\n Given I need to run this first\n\n@PRIORITY_050\nScenario: Medium priority scenario\n Given this can run after high priority\n\n@PRIORITY_100\nScenario: Low priority scenario\n Given this runs last\n```\n\n### Feature-Level Ordering\n\nWhen using `--parallel-scheme=feature`, the ordering is determined by ORDER tags placed directly on the feature itself:\n\n```gherkin\n@ORDER_001\nFeature: Database Setup Feature\n Scenario: Create database schema\n Given I create the database schema\n\n Scenario: Insert initial data\n Given I insert the initial data\n\n# This entire feature will be ordered as ORDER_001 (tag on the feature)\n\n@ORDER_002\nFeature: Application Tests Feature\n Scenario: Test user login\n Given I test user login\n\n# This entire feature will be ordered as ORDER_002 (tag on the feature)\n\nFeature: Unordered Feature\n Scenario: Some test\n Given I perform some test\n\n# This feature has no ORDER tag, so 
it gets the default order 9999\n```\n\n## Test Execution Reports\n\n### JSON Report\nContains information about test scenarios and execution status. This is the base report generated by BehaveX, which is used to generate the HTML report. Available at:\n```bash\n<output_folder>/report.json\n```\n\n### HTML Report\nA user-friendly test execution report containing information related to test scenarios, execution status, evidence, and metrics. Available at:\n```bash\n<output_folder>/report.html\n```\n\n### JUnit Report\nOne JUnit file per feature, available at:\n```bash\n<output_folder>/behave/*.xml\n```\nThe JUnit reports have been replaced by the ones generated by the test wrapper, to support muting test scenarios on build servers.\n\n## Attaching Images to the HTML Report\n\nYou can attach images or screenshots to the HTML report using your own mechanism to capture screenshots or retrieve images. Use the **attach_image_file** or **attach_image_binary** methods provided by the wrapper.\n\nThese methods can be called from hooks in the `environment.py` file or directly from step definitions.\n\n### Example 1: Attaching an Image File\n```python\nfrom behave import given\nfrom behavex_images import image_attachments\n\n@given('I take a screenshot from the current page')\ndef step_impl(context):\n image_attachments.attach_image_file(context, 'path/to/image.png')\n```\n\n### Example 2: Attaching an Image Binary\n```python\nfrom behavex_images import image_attachments\nfrom behavex_images.image_attachments import AttachmentsCondition\n\ndef before_all(context):\n image_attachments.set_attachments_condition(context, AttachmentsCondition.ONLY_ON_FAILURE)\n\ndef after_step(context, step):\n # selenium_driver is assumed to be a Selenium WebDriver instance created elsewhere\n image_attachments.attach_image_binary(context, selenium_driver.get_screenshot_as_png())\n```\n\nBy default, images are attached to the HTML report only when the test fails. 
You can modify this behavior by setting the condition using the **set_attachments_condition** method.\n\n\n\n\n\nFor more information, check the [behavex-images](https://github.com/abmercado19/behavex-images) library, which is included with BehaveX 3.3.0 and above.\n\nIf you are using BehaveX < 3.3.0, you can still attach images to the HTML report by installing the **behavex-images** package with the following command:\n\n> pip install behavex-images\n\n## Attaching Additional Execution Evidence to the HTML Report\n\nProviding ample evidence in test execution reports is crucial for identifying the root cause of issues. Any evidence file generated during a test scenario can be stored in a folder path provided by the wrapper for each scenario.\n\nThe evidence folder path is automatically generated and stored in the **\"context.evidence_path\"** context variable. This variable is updated by the wrapper before executing each scenario, and all files copied into that path will be accessible from the HTML report linked to the executed scenario.\n\n## Test Logs per Scenario\n\nThe HTML report includes detailed test execution logs for each scenario. These logs are generated using the **logging** library and are linked to the specific test scenario. This feature allows for easy debugging and analysis of test failures.\n\n## Metrics and Insights\n\nThe HTML report provides a range of metrics to help you understand the performance and effectiveness of your test suite. These metrics include:\n\n* **Automation Rate**: The percentage of scenarios that are automated.\n* **Pass Rate**: The percentage of scenarios that have passed.\n* **Steps Execution Counter and Average Execution Time**: These metrics provide insights into the execution time and frequency of steps within scenarios.\n\n## Dry Runs\n\nBehaveX enhances the traditional Behave dry run feature to provide more value. 
The HTML report generated during a dry run can be shared with stakeholders to discuss scenario specifications and test plans.\n\nTo execute a dry run, we recommend using the following command:\n\n> behavex -t=@TAG --dry-run\n\n## Muting Test Scenarios\n\nIn some cases, you may want to mute test scenarios that are failing but are not critical to the build process. This can be achieved by adding the `@MUTE` tag to the scenario. Muted scenarios will still be executed, but their failures will not be reported in the JUnit reports. However, the execution details will be visible in the HTML report.\n\n## Handling Failing Scenarios\n\n### @AUTORETRY Tag\n\nFor scenarios that are prone to intermittent failures or are affected by infrastructure issues, you can use the `@AUTORETRY` tag. This tag enables automatic re-execution of the scenario in case of failure.\n\nYou can also specify the number of retries by adding it as a suffix to the `@AUTORETRY` tag. For example, `@AUTORETRY_3` will retry the scenario 3 times if the scenario fails.\n\nThe re-execution is performed immediately after a failed execution, and the latest execution is the one that is reported.\n\n### Rerunning Failed Scenarios\n\nAfter executing tests, if there are failing scenarios, a **failing_scenarios.txt** file will be generated in the output folder. This file allows you to rerun all failed scenarios using the following command:\n\n> behavex -rf=./<OUTPUT_FOLDER\\>/failing_scenarios.txt\n\nor\n\n> behavex --rerun-failures=./<OUTPUT_FOLDER\\>/failing_scenarios.txt\n\nTo avoid overwriting the previous test report, it is recommended to specify a different output folder using the **-o** or **--output-folder** argument.\n\nNote that the **-o** or **--output-folder** argument does not work with parallel test executions.\n\n## Displaying Progress Bar in Console\n\nWhen running tests in parallel, you can display a progress bar in the console to monitor the test execution progress. 
To enable the progress bar, use the **--show-progress-bar** argument:\n\n> behavex -t=@TAG --parallel-processes=3 --show-progress-bar\n\nIf you are printing logs in the console, you can configure the progress bar to display updates on a new line by adding the following setting to the BehaveX configuration file:\n\n> [progress_bar]\n>\n> print_updates_in_new_lines=\"true\"\n\n## Allure Reports Integration\n\nBehaveX provides integration with Allure, a flexible, lightweight multi-language test reporting tool. The Allure formatter creates detailed and visually appealing reports that include comprehensive test information, evidence, and categorization of test results.\n\n**Note**: Since BehaveX is designed to run tests in parallel, the Allure formatter processes the consolidated `report.json` file after all parallel test executions are completed. This ensures that all test results from different parallel processes are properly aggregated before generating the final Allure report.\n\n### Prerequisites\n\n1. Install Allure on your system. Please refer to the [official Allure installation documentation](https://docs.qameta.io/allure/#_installing_a_commandline) for detailed instructions for your operating system.\n\n### Using the Allure Formatter\n\nTo generate Allure reports, use the `--formatter` argument to specify the Allure formatter:\n\n```bash\nbehavex -t=@TAG --formatter=behavex.outputs.formatters.allure_behavex_formatter:AllureBehaveXFormatter\n```\n\nBy default, the Allure results will be generated in the `output/allure-results` directory. 
You can specify a different output directory using the `--formatter-outdir` argument:\n\n```bash\nbehavex -t=@TAG --formatter=behavex.outputs.formatters.allure_behavex_formatter:AllureBehaveXFormatter --formatter-outdir=my-allure-results\n```\n\n### Attaching Screenshots and Evidence to Allure Reports\n\nWhen using Allure reports, you should continue to use the same methods for attaching screenshots and evidence as described in the sections above:\n\n- **For screenshots**: Use the methods described in [Attaching Images to the HTML Report](#attaching-images-to-the-html-report) section. The `attach_image_file()` and `attach_image_binary()` methods from the `behavex_images` library will automatically work with Allure reports.\n\n- **For additional evidence**: Use the approach described in [Attaching Additional Execution Evidence to the HTML Report](#attaching-additional-execution-evidence-to-the-html-report) section. Files stored in the `context.evidence_path` will be automatically included in the Allure reports.\n\nThe evidence and screenshots attached using these methods will be seamlessly integrated into your Allure reports, providing comprehensive test execution documentation.\n\n### Viewing Allure Reports\n\nAfter running the tests, you can generate and view the Allure report using the following commands:\n\n```bash\n# Serve the report (opens in a browser)\nallure serve output/allure-results\n\n# Or... generate a single HTML file report\nallure generate output/allure-results --output output/allure-report --clean --single-file\n\n# Or... generate a static report\nallure generate output/allure-results --output output/allure-report --clean\n```\n\n### Disabling Log Attachments\nBy default, `scenario.log` files are attached to each scenario in the Allure report. 
You can disable this by passing the `--no-formatter-attach-logs` argument:\n```bash\nbehavex --formatter behavex.outputs.formatters.allure_behavex_formatter:AllureBehaveXFormatter --no-formatter-attach-logs\n```\n\n## Show Your Support\n\n**If you find this project helpful or interesting, we would appreciate it if you could give it a star** (:star:). It's a simple way to show your support and let us know that you find value in our work.\n\nBy starring this repository, you help us gain visibility among other developers and contributors. It also serves as motivation for us to continue improving and maintaining this project.\n\nThank you in advance for your support! We truly appreciate it.\n",

"bugtrack_url": null,

"license": "MIT",

"summary": "Agile testing framework on top of Behave (BDD).",

"version": "4.4.1",

"project_urls": {

"Homepage": "https://github.com/hrcorval/behavex"

},

"split_keywords": [],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "c4d5f1d86270548b1cff4fab7560b8e57a08669fc690b2b18a520f6700a4a21d",

"md5": "0b4c13040df6f7c1b63cf94fdd66cb00",

"sha256": "416f1339bcf4876be991580afc8ca03e8f7f97e3013bdb44b6f09af8ccdc75e8"

},

"downloads": -1,

"filename": "behavex-4.4.1-py3-none-any.whl",

"has_sig": false,

"md5_digest": "0b4c13040df6f7c1b63cf94fdd66cb00",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.5",

"size": 716242,

"upload_time": "2025-07-31T22:06:46",

"upload_time_iso_8601": "2025-07-31T22:06:46.611581Z",

"url": "https://files.pythonhosted.org/packages/c4/d5/f1d86270548b1cff4fab7560b8e57a08669fc690b2b18a520f6700a4a21d/behavex-4.4.1-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "58c59242be55fe1d029d992b51801c9c919ed177403be88dd6c1c0c99faec223",

"md5": "8250a9e779f0f3ea536aa0b949629ff6",

"sha256": "168654e83b123c5989ff8ec91ac5e9091d937ef807561935551fe1157f6ee81a"

},

"downloads": -1,

"filename": "behavex-4.4.1.tar.gz",

"has_sig": false,

"md5_digest": "8250a9e779f0f3ea536aa0b949629ff6",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.5",

"size": 633251,

"upload_time": "2025-07-31T22:06:48",

"upload_time_iso_8601": "2025-07-31T22:06:48.127110Z",

"url": "https://files.pythonhosted.org/packages/58/c5/9242be55fe1d029d992b51801c9c919ed177403be88dd6c1c0c99faec223/behavex-4.4.1.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2025-07-31 22:06:48",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "hrcorval",

"github_project": "behavex",

"travis_ci": false,

"coveralls": false,

"github_actions": true,

"lcname": "behavex"

}