# cdk-emrserverless-with-delta-lake

[](https://opensource.org/licenses/Apache-2.0) [](https://github.com/HsiehShuJeng/cdk-emrserverless-with-delta-lake/actions/workflows/release.yml/badge.svg) [](https://img.shields.io/npm/dt/cdk-emrserverless-with-delta-lake?label=npm%20downloads&style=plastic) [](https://img.shields.io/pypi/dw/cdk-emrserverless-with-delta-lake?label=pypi%20downloads&style=plastic) [](https://img.shields.io/nuget/dt/Emrserverless.With.Delta.Lake?label=NuGet%20downloads&style=plastic) [](https://img.shields.io/github/languages/count/HsiehShuJeng/cdk-emrserverless-with-delta-lake?style=plastic)

| npm (JS/TS) | PyPI (Python) | Maven (Java) | Go | NuGet |
| --- | --- | --- | --- | --- |
| [Link](https://www.npmjs.com/package/cdk-emrserverless-with-delta-lake) | [Link](https://pypi.org/project/cdk-emrserverless-with-delta-lake/) | [Link](https://search.maven.org/artifact/io.github.hsiehshujeng/cdk-emrserverless-quickdemo-with-delta-lake) | [Link](https://github.com/HsiehShuJeng/cdk-emrserverless-with-delta-lake-go) | [Link](https://www.nuget.org/packages/Emrserverless.With.Delta.Lake/) |

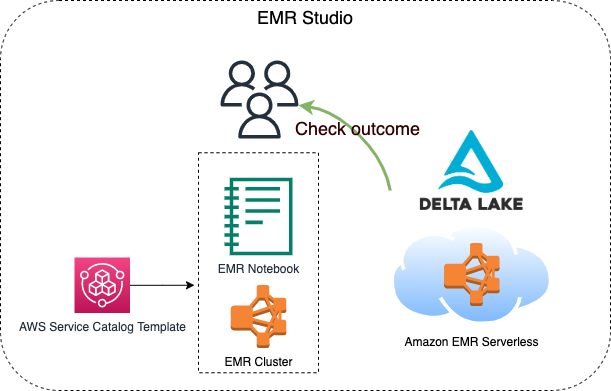

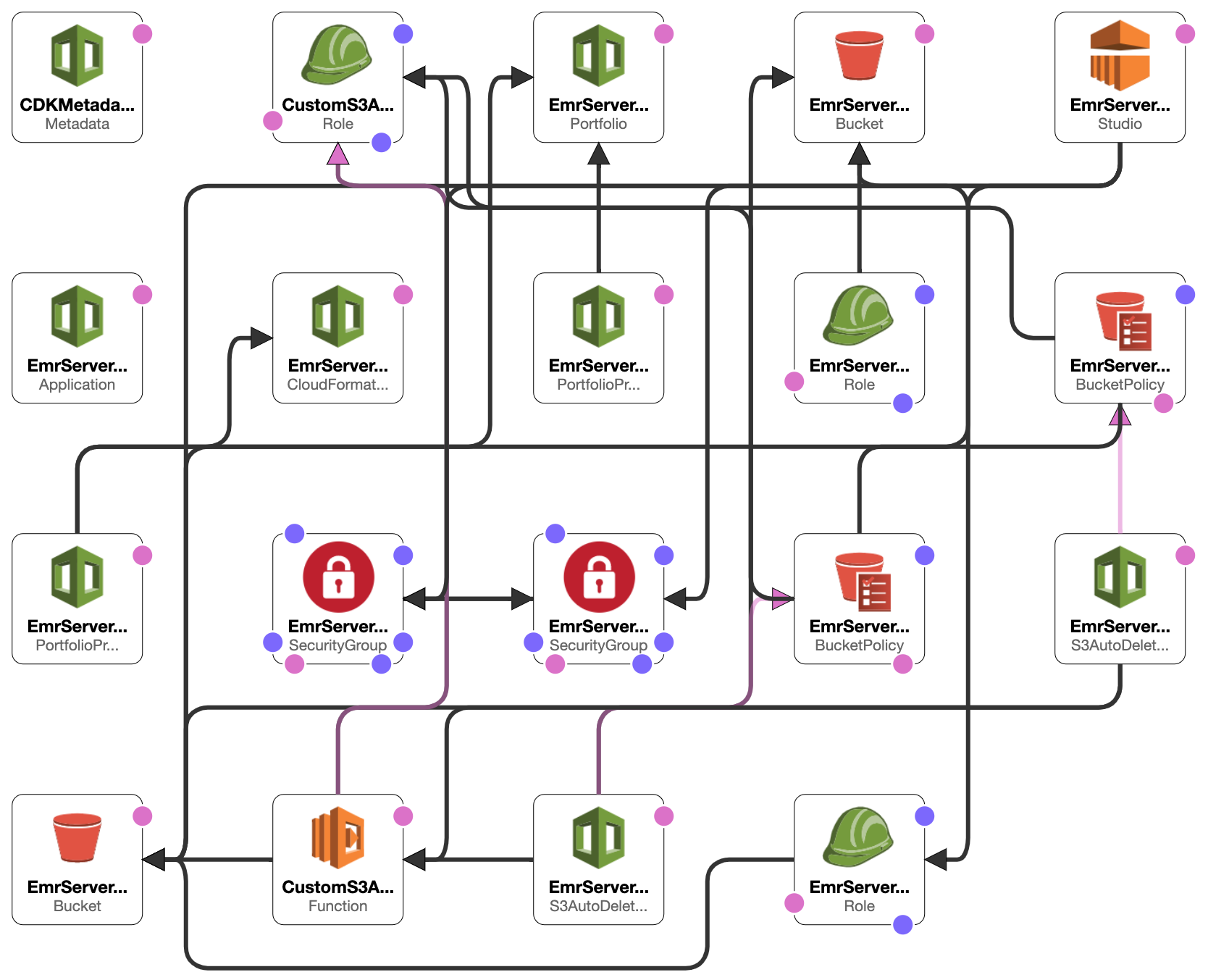

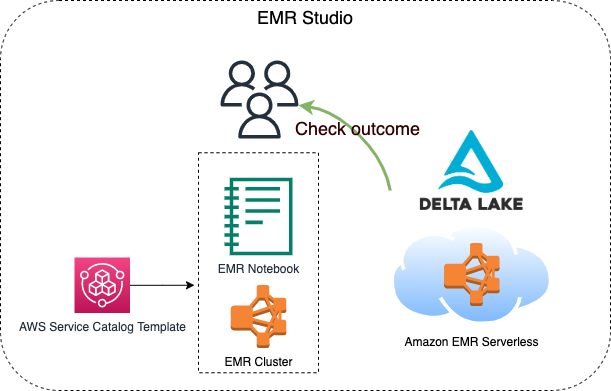

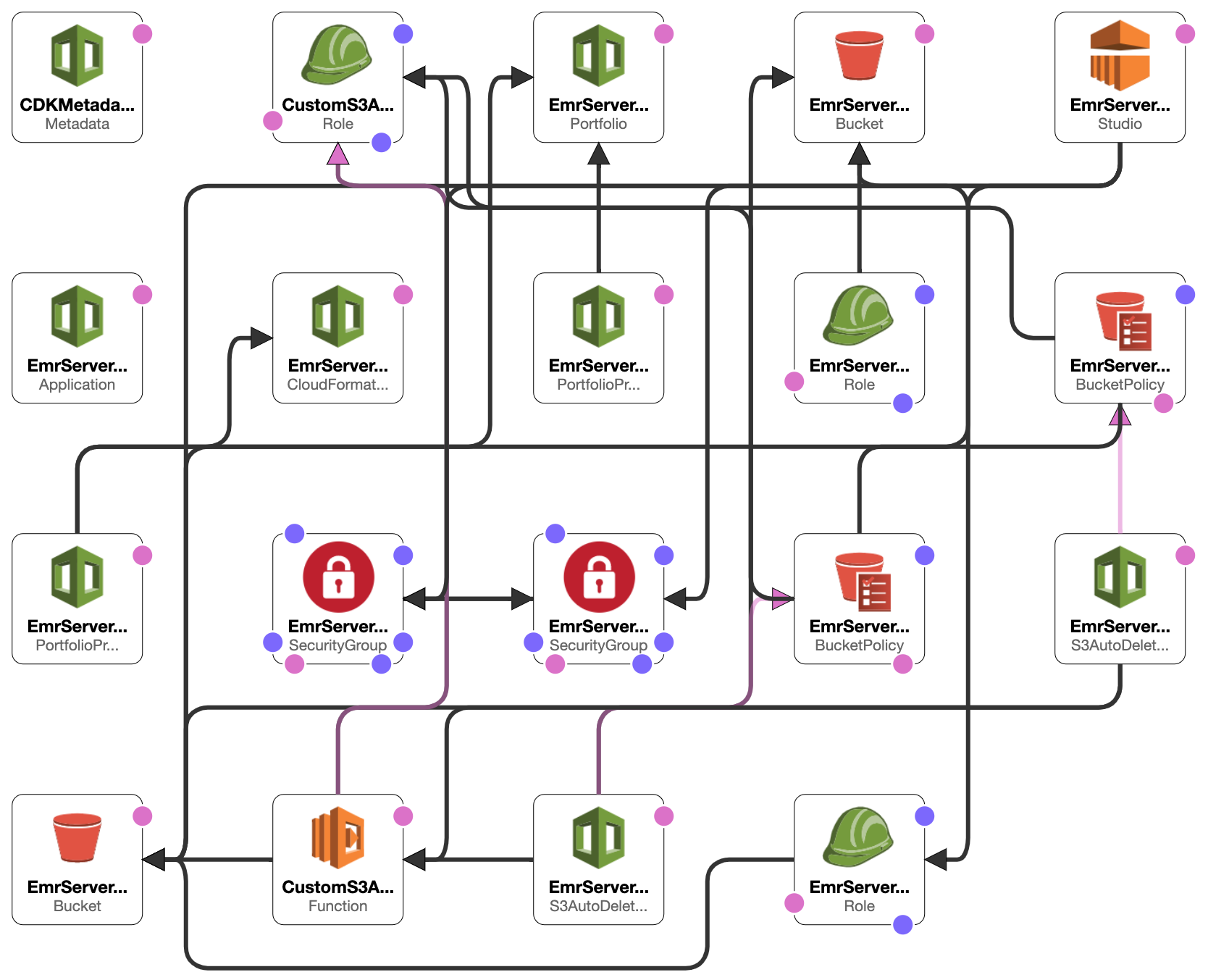

This construct builds an EMR Studio, a cluster template for the EMR Studio, and an EMR Serverless application. Two S3 buckets are created: one for the EMR Studio workspace and the other for EMR Serverless applications. In addition, the VPC and the subnets for the EMR Studio are tagged with `{"Key": "for-use-with-amazon-emr-managed-policies", "Value": "true"}` via a custom resource. This tag is required by the [service role](https://docs.aws.amazon.com/emr/latest/ManagementGuide/emr-studio-service-role.html#emr-studio-service-role-instructions) of EMR Studio.
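The tagging itself boils down to a single EC2 `CreateTags` call. As a rough illustration only (this is not the construct's custom-resource code; the helper name and the pass-in-a-client design are assumptions made so the logic can run without AWS credentials), it could look like this with boto3:

```python
from typing import Any, Dict, List

# Tag required by the EMR Studio service role's managed policies (see the paragraph above).
EMR_MANAGED_POLICY_TAG: Dict[str, str] = {
    "Key": "for-use-with-amazon-emr-managed-policies",
    "Value": "true",
}


def tag_for_emr_managed_policies(ec2_client: Any, resource_ids: List[str]) -> Dict[str, Any]:
    """Hypothetical helper: tag a VPC and its subnets in one CreateTags call.

    `ec2_client` is expected to be a boto3 EC2 client, e.g. boto3.client("ec2").
    Returns the keyword arguments that were sent, which is handy for logging.
    """
    if not resource_ids:
        raise ValueError("at least one VPC or subnet ID is required")
    request: Dict[str, Any] = {
        "Resources": list(resource_ids),
        "Tags": [dict(EMR_MANAGED_POLICY_TAG)],
    }
    ec2_client.create_tags(**request)
    return request
```

For example, `tag_for_emr_managed_policies(boto3.client("ec2"), [vpc_id, *subnet_ids])`, where `vpc_id` and `subnet_ids` are your own values.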

This construct is for analysts, data engineers, and anyone who wants to learn how to process **Delta Lake data** with EMR Serverless.

Users deploy the construct with [CDK v2](https://docs.aws.amazon.com/cdk/v2/guide/home.html) and, within a few minutes, run a serverless job in the EMR Serverless application created by the construct via the AWS CLI. After the EMR Serverless job finishes, they can check its processed results in an EMR notebook on a cluster created through the cluster template.

# TOC

* [Requirements](#requirements)

* [Before deployment](#before-deployment)

* [Minimal content for deployment](#minimal-content-for-deployment)

* [After deployment](#after-deployment)

* [Create an EMR Serverless application](#create-an-emr-serverless-app)

* [Check the executing job](#check-the-executing-job)

* [Check results from an EMR notebook via cluster template](#check-results-from-an-emr-notebook-via-cluster-template)

* [Fun facts](#fun-facts)

* [Future work](#future-work)

# Requirements

1. Your current identity has `AdministratorAccess` permissions.

2. [An IAM user](https://docs.aws.amazon.com/IAM/latest/UserGuide/getting-started_create-admin-group.html) named `Administrator` with `AdministratorAccess` permissions.

* This user is related to the AWS Service Catalog portfolio created by the construct, which is required for [EMR cluster templates](https://docs.aws.amazon.com/emr/latest/ManagementGuide/emr-studio-cluster-templates.html).

* You can associate any identity you wish with the product in the portfolio used to create an EMR cluster via the cluster template; see `serviceCatalogProps` in the `EmrServerless` construct for details. Otherwise, the IAM user mentioned above is associated with the product.

# Before deployment

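If the target account and region have not been bootstrapped for CDK yet, run the following commands.

```sh
PROFILE_NAME="scott.hsieh"
# If you only have one set of credentials on your local machine, just omit `--profile`, buddy.
AWS_ACCOUNT_ID="$(aws sts get-caller-identity --query Account --output text --profile ${PROFILE_NAME})"
AWS_REGION="$(aws configure get region --profile ${PROFILE_NAME})"
cdk bootstrap aws://${AWS_ACCOUNT_ID}/${AWS_REGION} --profile ${PROFILE_NAME}
```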

# Minimal content for deployment

```typescript
#!/usr/bin/env node
import * as cdk from 'aws-cdk-lib';
import { Construct } from 'constructs';
import { EmrServerless } from 'cdk-emrserverless-with-delta-lake';

class TypescriptStack extends cdk.Stack {
  constructor(scope: Construct, id: string, props?: cdk.StackProps) {
    super(scope, id, props);
    new EmrServerless(this, 'EmrServerless');
  }
}

const app = new cdk.App();
new TypescriptStack(app, 'TypescriptStack', {
  stackName: 'emr-studio',
  env: {
    region: process.env.CDK_DEFAULT_REGION,
    account: process.env.CDK_DEFAULT_ACCOUNT,
  },
});
```

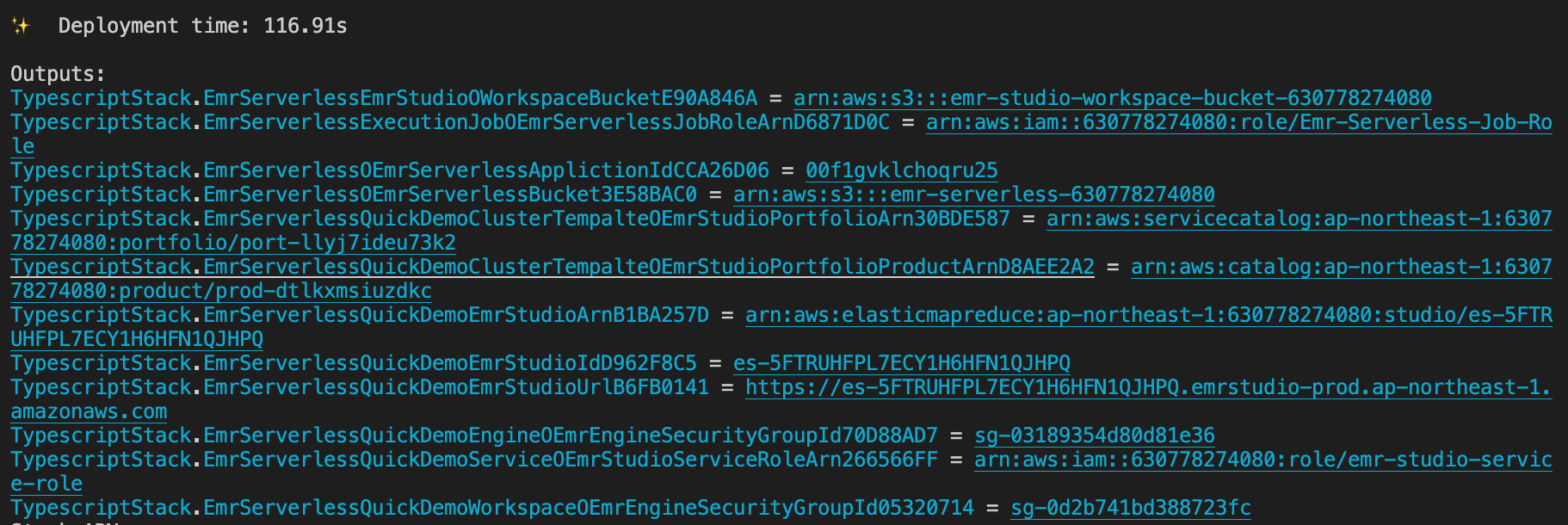

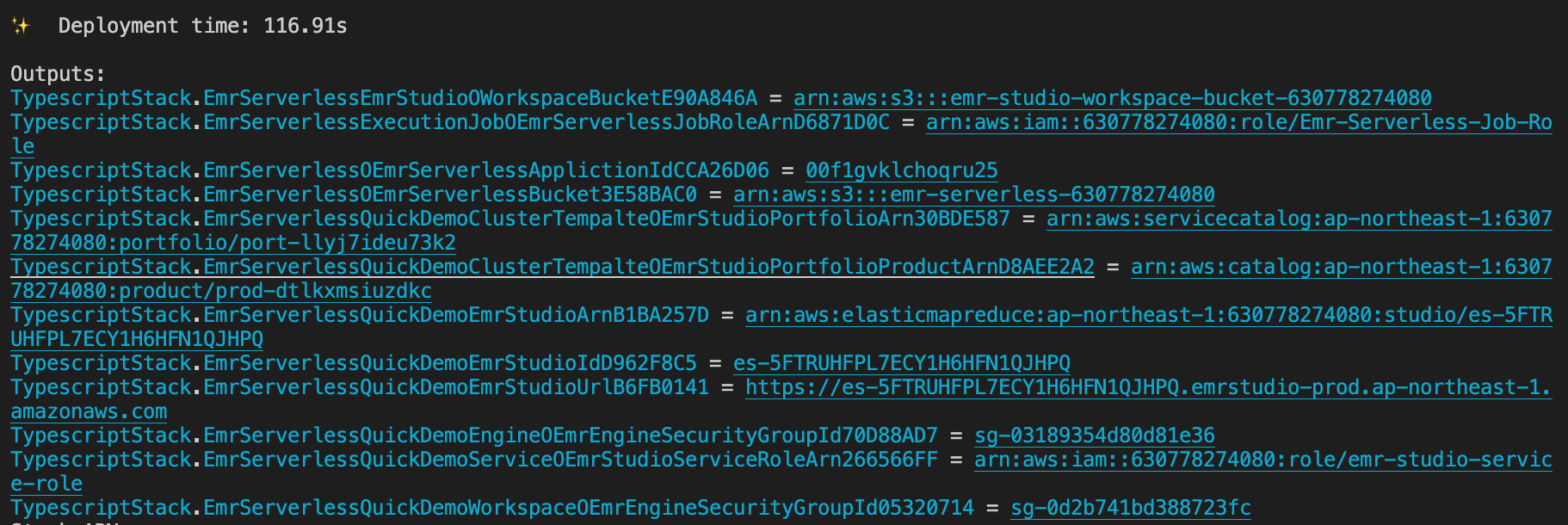

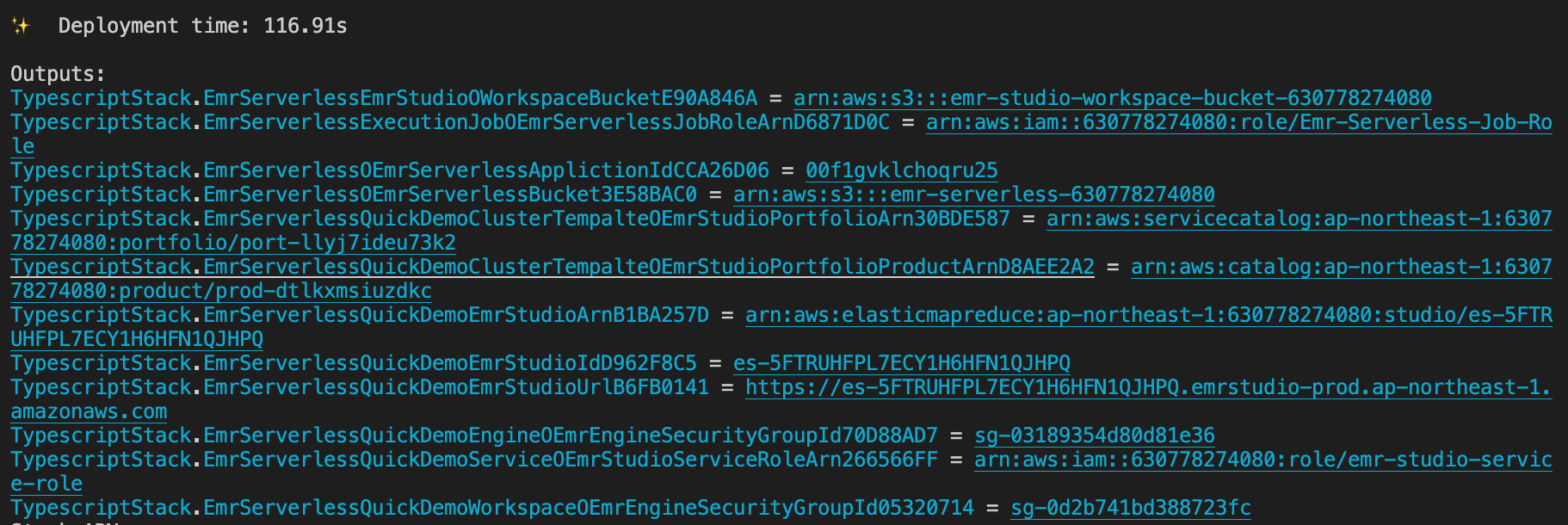

# After deployment

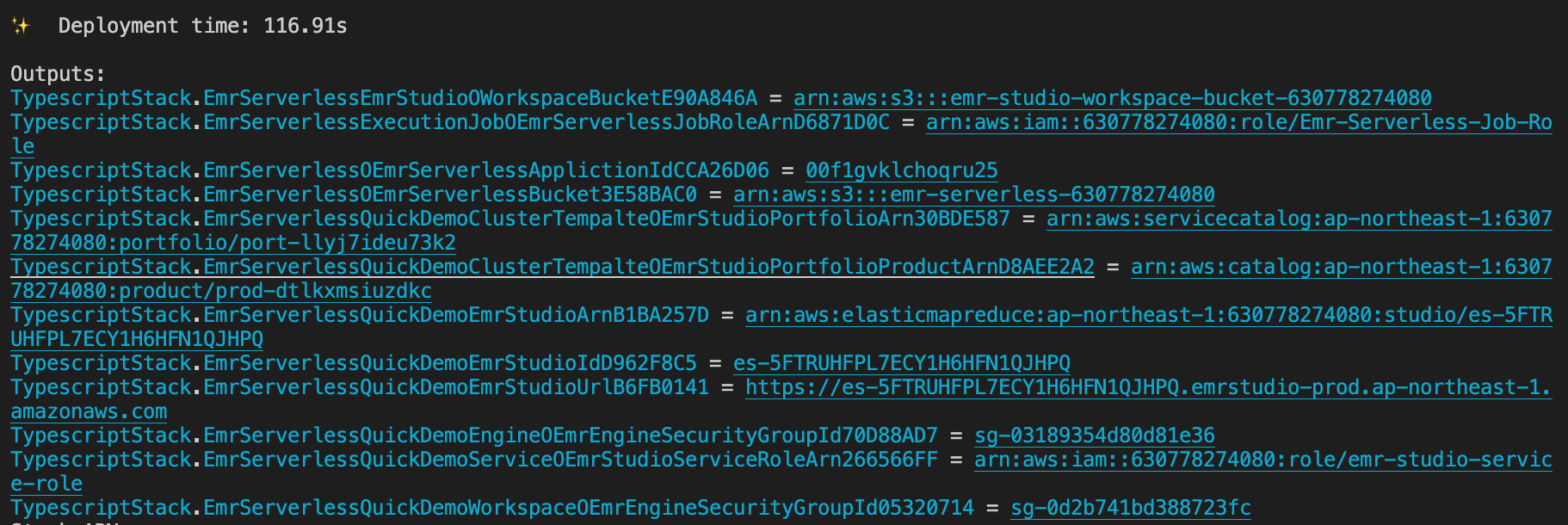

Promise me, darling, take advantage of the CloudFormation outputs. All you need is **copy-paste**, **copy-paste**, **copy-paste**; life should always be that easy.
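If you'd rather not copy the values by hand, a minimal Python sketch can turn the stack outputs into `export` lines. It assumes you fetch the outputs with `boto3.client("cloudformation").describe_stacks(StackName="emr-studio")["Stacks"][0]["Outputs"]` (`emr-studio` is the stack name from the minimal example); the helper name and the output keys you map are hypothetical, since this README doesn't list the exact keys.

```python
import shlex
from typing import Dict, List


def outputs_to_exports(outputs: List[Dict[str, str]], key_to_env: Dict[str, str]) -> List[str]:
    """Turn CloudFormation stack outputs into shell-safe `export NAME=value` lines.

    `outputs` has the shape of describe_stacks()["Stacks"][0]["Outputs"], i.e. a list
    of {"OutputKey": ..., "OutputValue": ...} dicts. `key_to_env` maps output keys
    (hypothetical; check your stack's Outputs tab) to the variable names used here.
    """
    by_key = {o["OutputKey"]: o["OutputValue"] for o in outputs}
    missing = [key for key in key_to_env if key not in by_key]
    if missing:
        raise KeyError(f"missing stack outputs: {missing}")
    # shlex.quote only adds quotes when a value contains shell-special characters.
    return [f"export {env}={shlex.quote(by_key[key])}" for key, env in key_to_env.items()]
```

Printing the returned lines and pasting them into your shell gives the same result as step 1 below, without the typos.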

1. **Define the following environment variables in your current session.**

```sh
export PROFILE_NAME="${YOUR_PROFILE_NAME}"
export JOB_ROLE_ARN="${copy-paste-thank-you}"
export APPLICATION_ID="${copy-paste-thank-you}"
export SERVERLESS_BUCKET_NAME="${copy-paste-thank-you}"
export DELTA_LAKE_SCRIPT_NAME="delta-lake-demo"
```

2. **Copy part of the NYC taxi data into the EMR Serverless bucket.**

```sh

aws s3 cp s3://nyc-tlc/trip\ data/ s3://${SERVERLESS_BUCKET_NAME}/nyc-taxi/ --exclude "*" --include "yellow_tripdata_2021-*.parquet" --recursive --profile ${PROFILE_NAME}

```

3. **Create a Python script for processing Delta Lake data.**

```sh
# The heredoc is unquoted, so ${SERVERLESS_BUCKET_NAME} is substituted and each "\\" becomes "\" in the file.
cat << EOF > ${DELTA_LAKE_SCRIPT_NAME}.py
from pyspark.sql import SparkSession
import uuid

if __name__ == "__main__":
    """
    Delta Lake with EMR Serverless, taking NYC taxi data as an example.
    """
    spark = SparkSession \\
        .builder \\
        .config("spark.sql.extensions", "io.delta.sql.DeltaSparkSessionExtension") \\
        .config("spark.sql.catalog.spark_catalog", "org.apache.spark.sql.delta.catalog.DeltaCatalog") \\
        .enableHiveSupport() \\
        .appName("Delta-Lake-OSS") \\
        .getOrCreate()

    url = "s3://${SERVERLESS_BUCKET_NAME}/emr-serverless-spark/delta-lake/output/1.2.1/%s/" % str(
        uuid.uuid4())

    # Creates a small Delta table and writes it to the target S3 bucket
    spark.range(5).write.format("delta").save(url)

    # Reads the Delta table back and prints it to stdout
    spark.read.format("delta").load(url).show()

    # The source for the second Delta table
    base = spark.read.parquet(
        "s3://${SERVERLESS_BUCKET_NAME}/nyc-taxi/*.parquet")

    # The second Delta table, oh ya.
    base.write.format("delta") \\
        .mode("overwrite") \\
        .save("s3://${SERVERLESS_BUCKET_NAME}/emr-serverless-spark/delta-lake/nyx-tlc-2021")

    spark.stop()
EOF
```

4. **Upload the script and the required JARs to the serverless bucket.**

```sh
# Upload the script
aws s3 cp ${DELTA_LAKE_SCRIPT_NAME}.py s3://${SERVERLESS_BUCKET_NAME}/scripts/${DELTA_LAKE_SCRIPT_NAME}.py --profile ${PROFILE_NAME}

# Download the Delta Lake JARs from Maven Central, then move them into the bucket.
# DELTA_LAKE_CORE and DELTA_LAKE_STORAGE are reused by the start-job-run command, so keep this shell session.
DELTA_VERSION="3.0.0"
DELTA_LAKE_CORE="delta-spark_2.13-${DELTA_VERSION}.jar"
DELTA_LAKE_STORAGE="delta-storage-${DELTA_VERSION}.jar"
curl https://repo1.maven.org/maven2/io/delta/delta-spark_2.13/${DELTA_VERSION}/${DELTA_LAKE_CORE} --output ${DELTA_LAKE_CORE}
curl https://repo1.maven.org/maven2/io/delta/delta-storage/${DELTA_VERSION}/${DELTA_LAKE_STORAGE} --output ${DELTA_LAKE_STORAGE}
aws s3 mv ${DELTA_LAKE_CORE} s3://${SERVERLESS_BUCKET_NAME}/jars/${DELTA_LAKE_CORE} --profile ${PROFILE_NAME}
aws s3 mv ${DELTA_LAKE_STORAGE} s3://${SERVERLESS_BUCKET_NAME}/jars/${DELTA_LAKE_STORAGE} --profile ${PROFILE_NAME}
```
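The `curl` URLs in step 4 follow the standard Maven repository layout: `group/artifact/version/artifact-version.jar`, with the dots in the group ID turned into slashes. This Python sketch (hypothetical helper names) reproduces that layout, which makes it easier to fetch a different Delta version. The `scala_suffix` parameter is exposed because the `_2.xx` suffix has to match the Scala version your Spark runtime was built with; `2.13` is simply the value used above.

```python
from typing import Dict

MAVEN_CENTRAL = "https://repo1.maven.org/maven2"


def maven_jar_url(group_id: str, artifact_id: str, version: str) -> str:
    """Standard Maven repository layout: dots in the group ID become path separators."""
    group_path = group_id.replace(".", "/")
    return f"{MAVEN_CENTRAL}/{group_path}/{artifact_id}/{version}/{artifact_id}-{version}.jar"


def delta_jar_urls(delta_version: str = "3.0.0", scala_suffix: str = "2.13") -> Dict[str, str]:
    """Map each Delta Lake JAR file name to its download URL, matching the curl commands."""
    urls: Dict[str, str] = {}
    for artifact in (f"delta-spark_{scala_suffix}", "delta-storage"):
        url = maven_jar_url("io.delta", artifact, delta_version)
        # The file name is the last path segment, e.g. delta-storage-3.0.0.jar
        urls[url.rsplit("/", 1)[-1]] = url
    return urls
```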

# Create an EMR Serverless app

The command below starts a job run in the EMR Serverless application created by the construct. Remember, you can copy and paste most of this information from the CloudFormation outputs.

```sh
aws emr-serverless start-job-run \
  --application-id ${APPLICATION_ID} \
  --execution-role-arn ${JOB_ROLE_ARN} \
  --name 'shy-shy-first-time' \
  --job-driver '{
    "sparkSubmit": {
      "entryPoint": "s3://'${SERVERLESS_BUCKET_NAME}'/scripts/'${DELTA_LAKE_SCRIPT_NAME}'.py",
      "sparkSubmitParameters": "--conf spark.executor.cores=1 --conf spark.executor.memory=4g --conf spark.driver.cores=1 --conf spark.driver.memory=4g --conf spark.executor.instances=1 --conf spark.jars=s3://'${SERVERLESS_BUCKET_NAME}'/jars/'${DELTA_LAKE_CORE}',s3://'${SERVERLESS_BUCKET_NAME}'/jars/'${DELTA_LAKE_STORAGE}'"
    }
  }' \
  --configuration-overrides '{
    "monitoringConfiguration": {
      "s3MonitoringConfiguration": {
        "logUri": "s3://'${SERVERLESS_BUCKET_NAME}'/serverless-log/"
      }
    }
  }' \
  --profile ${PROFILE_NAME}
```
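Hand-assembling JSON inside single quotes is easy to get wrong. As an alternative, this minimal Python sketch (helper names are hypothetical) builds the same request as a dict. Passing it to `boto3.client("emr-serverless").start_job_run(**request)` should be equivalent to the CLI call above, and `json.dumps(request["jobDriver"])` yields a string you could pass to `--job-driver`. Multiple JARs are joined into a single comma-separated `spark.jars` value, just like in the command.

```python
from typing import Any, Dict, List


def spark_submit_parameters(confs: Dict[str, str], jars: List[str]) -> str:
    """Render `--conf key=value` pairs; all JARs go into one comma-separated spark.jars."""
    pairs = dict(confs)
    if jars:
        pairs["spark.jars"] = ",".join(jars)
    return " ".join(f"--conf {key}={value}" for key, value in pairs.items())


def start_job_run_request(application_id: str, role_arn: str, bucket: str,
                          script_name: str, jars: List[str]) -> Dict[str, Any]:
    """Keyword arguments for start_job_run, mirroring the CLI example."""
    confs = {
        "spark.executor.cores": "1",
        "spark.executor.memory": "4g",
        "spark.driver.cores": "1",
        "spark.driver.memory": "4g",
        "spark.executor.instances": "1",
    }
    return {
        "applicationId": application_id,
        "executionRoleArn": role_arn,
        "name": "shy-shy-first-time",
        "jobDriver": {
            "sparkSubmit": {
                "entryPoint": f"s3://{bucket}/scripts/{script_name}.py",
                "sparkSubmitParameters": spark_submit_parameters(
                    confs, [f"s3://{bucket}/jars/{jar}" for jar in jars]
                ),
            }
        },
        "configurationOverrides": {
            "monitoringConfiguration": {
                "s3MonitoringConfiguration": {"logUri": f"s3://{bucket}/serverless-log/"}
            }
        },
    }
```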

If the command succeeds, you should see a response similar to the following:

```json
{
  "applicationId": "00f1gvklchoqru25",
  "jobRunId": "00f1h0ipd2maem01",
  "arn": "arn:aws:emr-serverless:ap-northeast-1:630778274080:/applications/00f1gvklchoqru25/jobruns/00f1h0ipd2maem01"
}
```

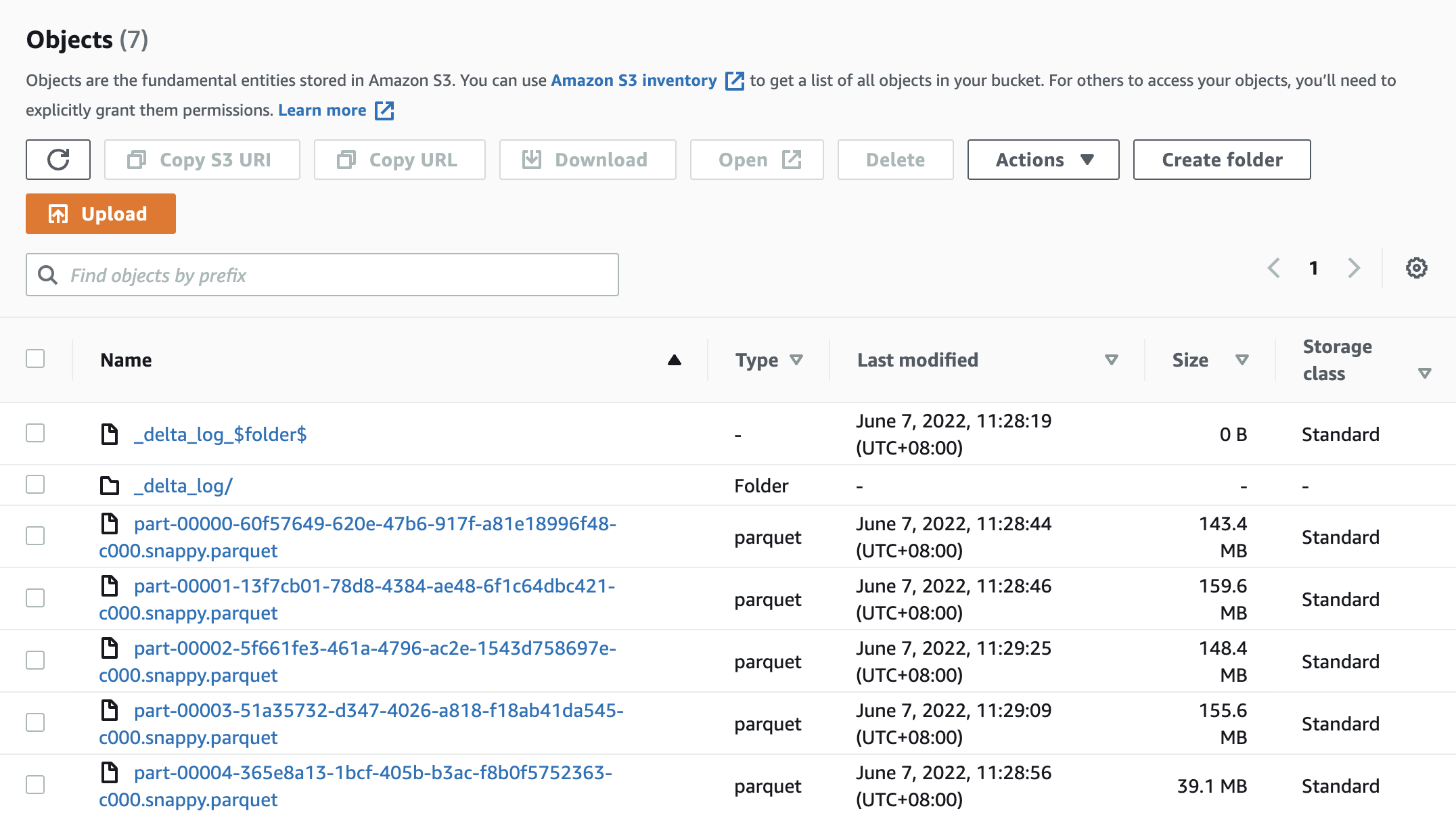

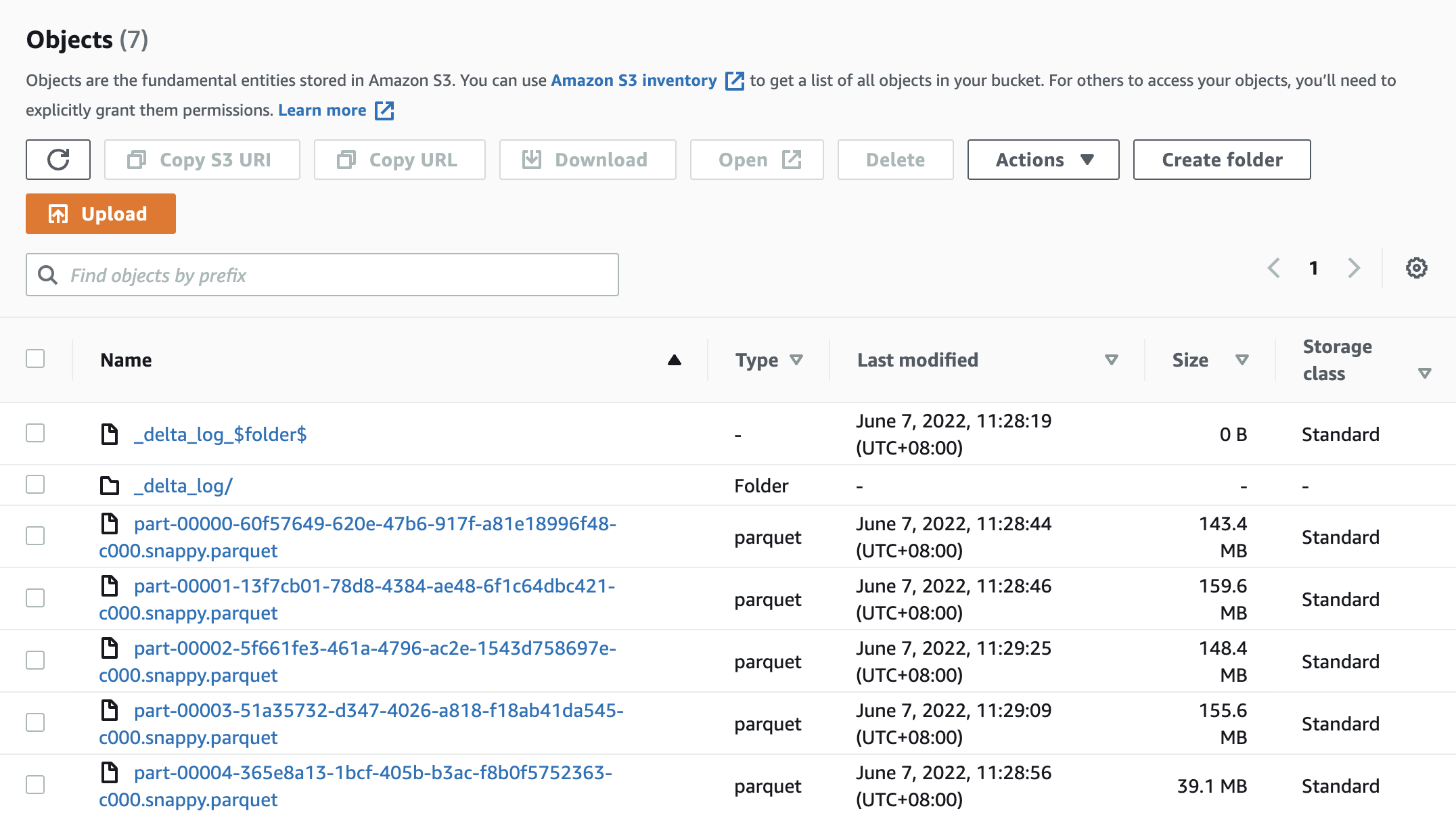

Once the job finishes, you should also find Delta Lake data under `s3://${SERVERLESS_BUCKET_NAME}/emr-serverless-spark/delta-lake/nyx-tlc-2021/`.

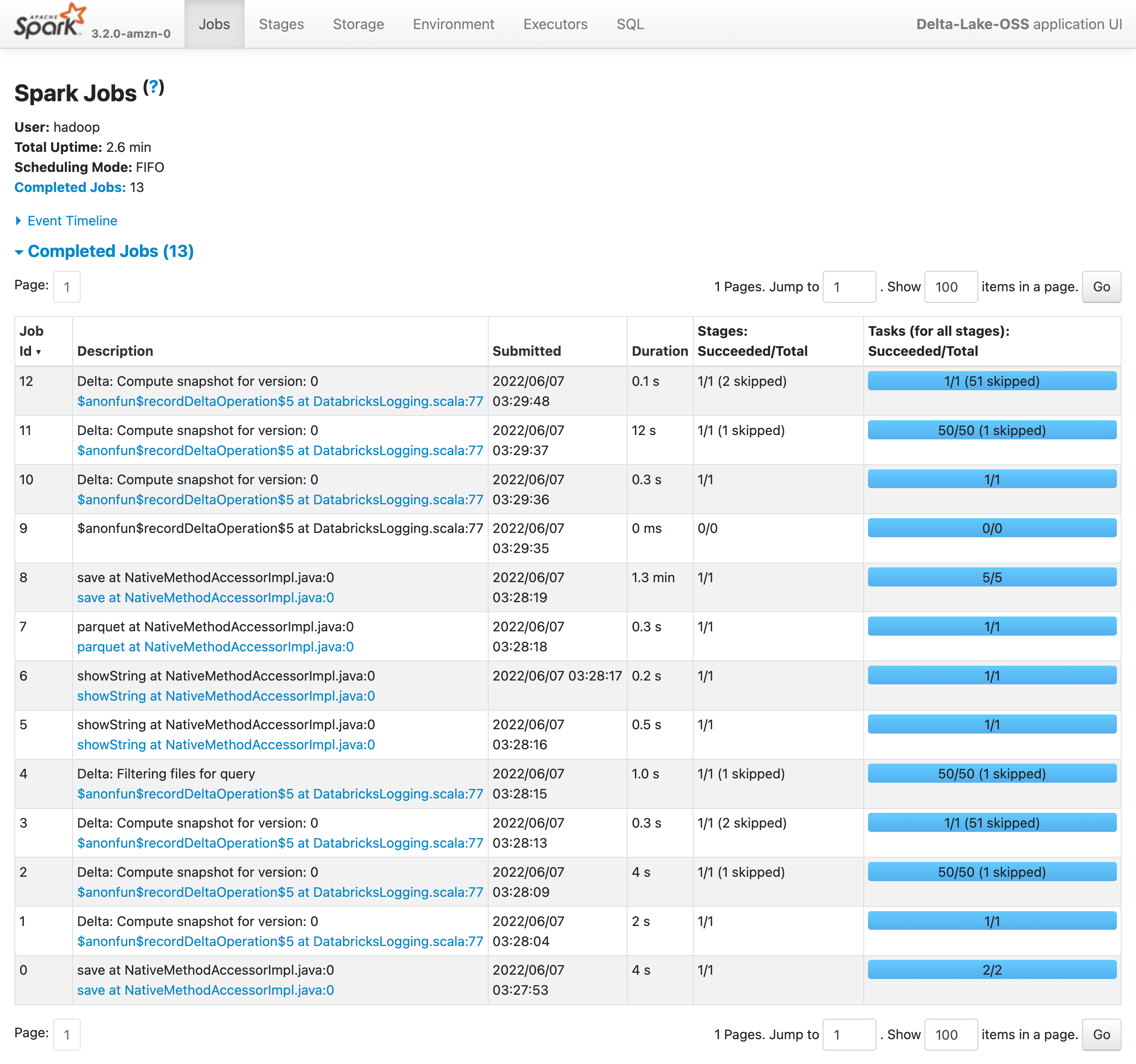

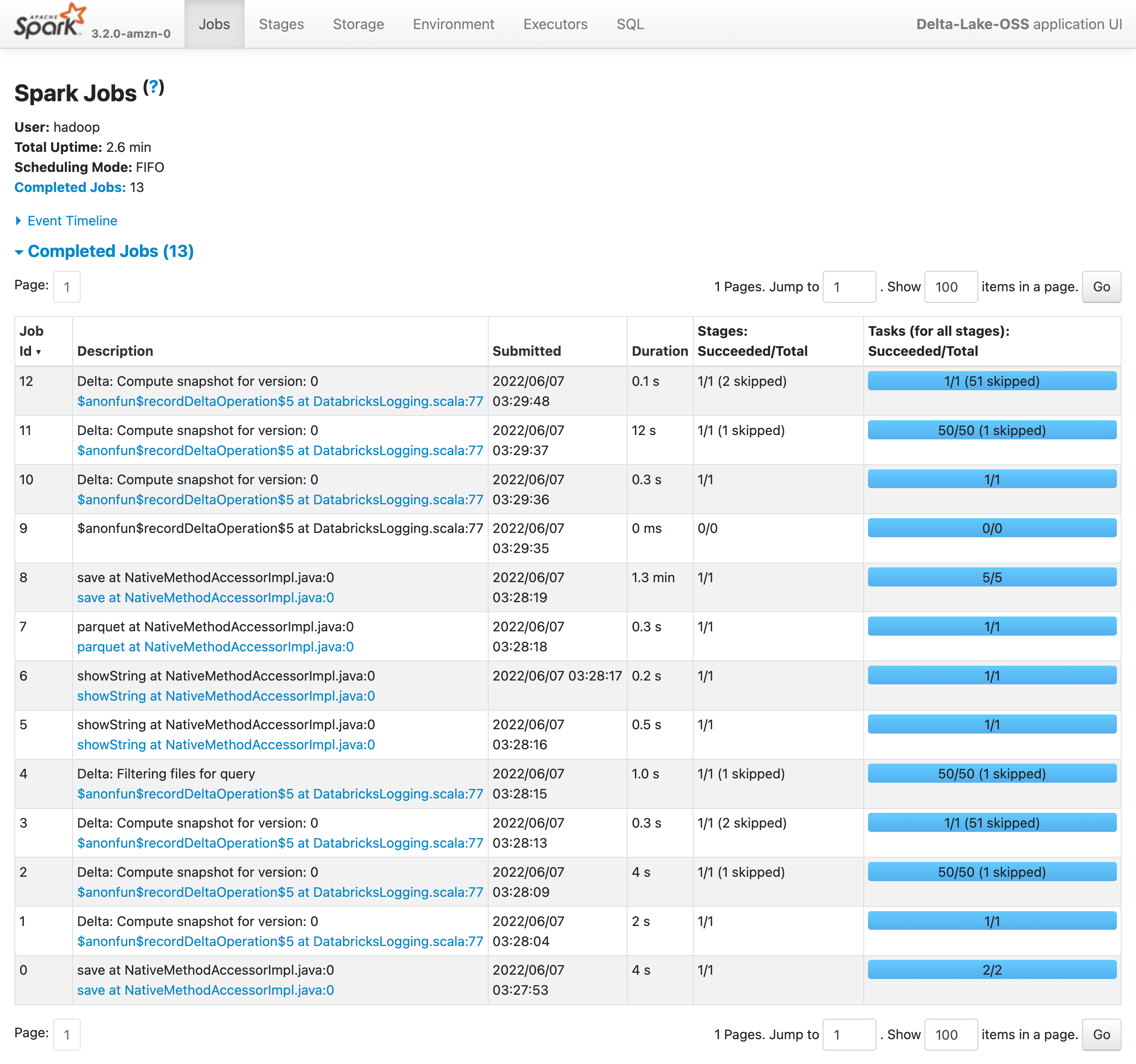

# Check the executing job

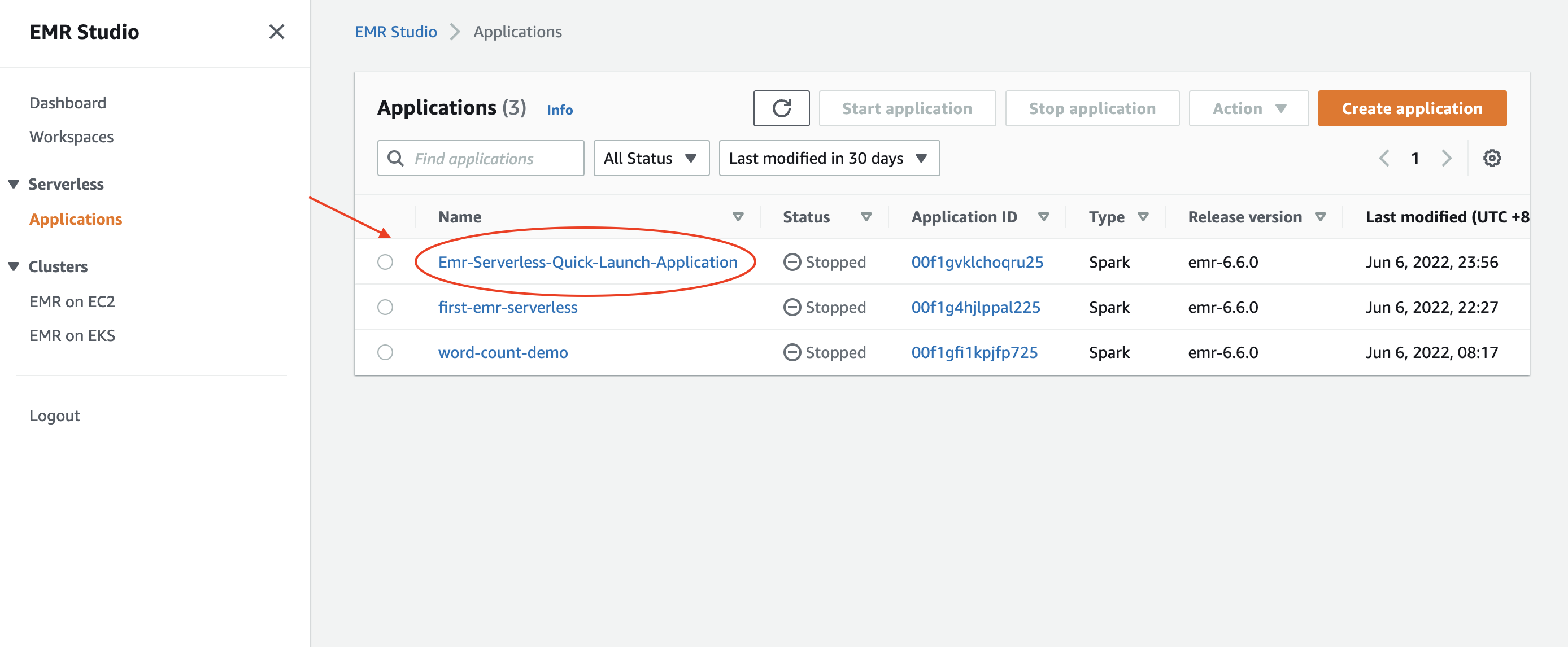

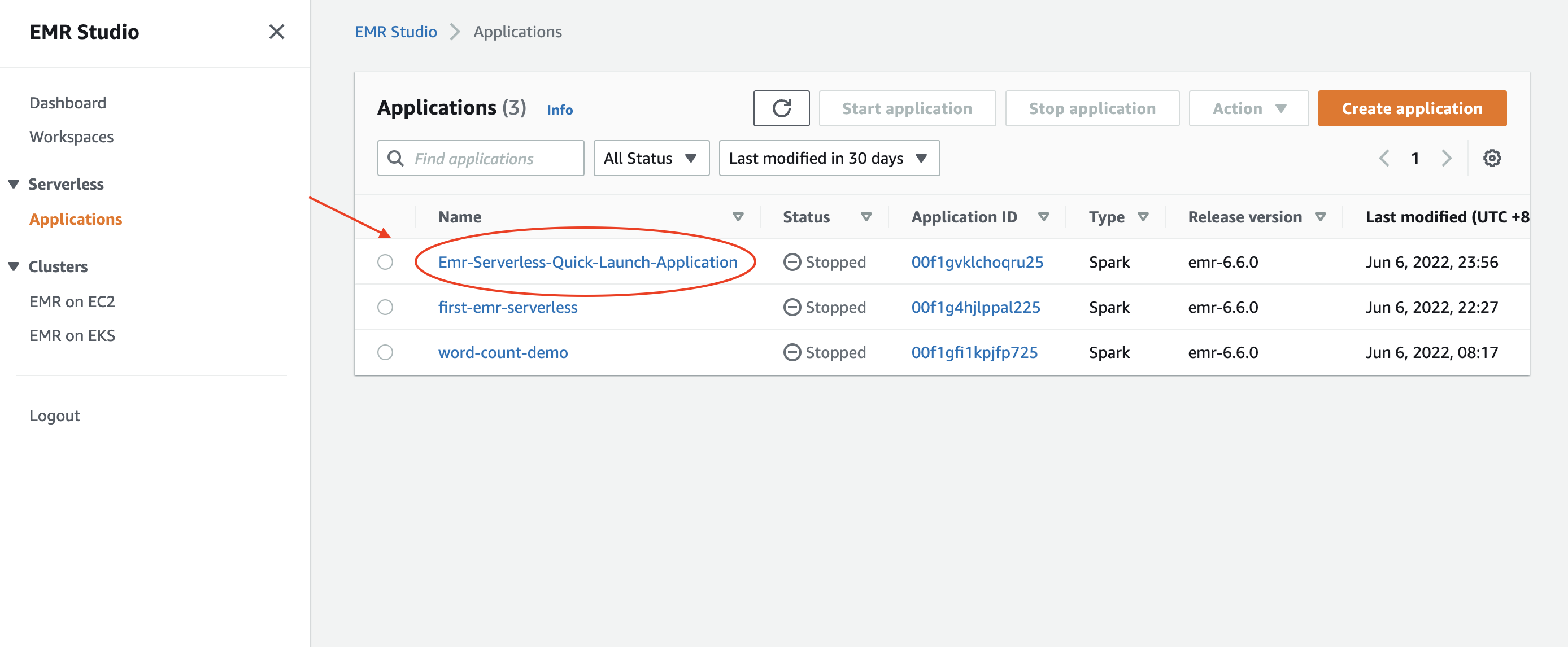

Access the EMR Studio via the URL from the CloudFormation outputs. It should look similar to `https://es-pilibalapilibala.emrstudio-prod.ap-northeast-1.amazonaws.com`, although the random string and the region in your URL will differ from mine.

1. **Open the application.**

2. **Open the running job.**
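If you prefer the terminal over the Studio UI, the `applicationId` and `jobRunId` from the `start-job-run` response are enough to poll the run's state. This is a minimal sketch assuming a boto3 `emr-serverless` client; the helper name, polling interval, and poll limit are arbitrary choices, and `SUCCESS`, `FAILED`, and `CANCELLED` are treated as the terminal states.

```python
import time
from typing import Any, Callable

# States after which an EMR Serverless job run no longer changes.
TERMINAL_STATES = {"SUCCESS", "FAILED", "CANCELLED"}


def wait_for_job_run(client: Any, application_id: str, job_run_id: str,
                     poll_seconds: float = 30.0, max_polls: int = 240,
                     sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll get_job_run until the run reaches a terminal state, then return that state.

    `client` is expected to be boto3.client("emr-serverless"); `sleep` is injectable
    so the loop can be exercised without waiting.
    """
    for _ in range(max_polls):
        response = client.get_job_run(applicationId=application_id, jobRunId=job_run_id)
        state = response["jobRun"]["state"]
        if state in TERMINAL_STATES:
            return state
        sleep(poll_seconds)
    raise TimeoutError(f"job run {job_run_id} not finished after {max_polls} polls")
```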

# Check results from an EMR notebook via cluster template

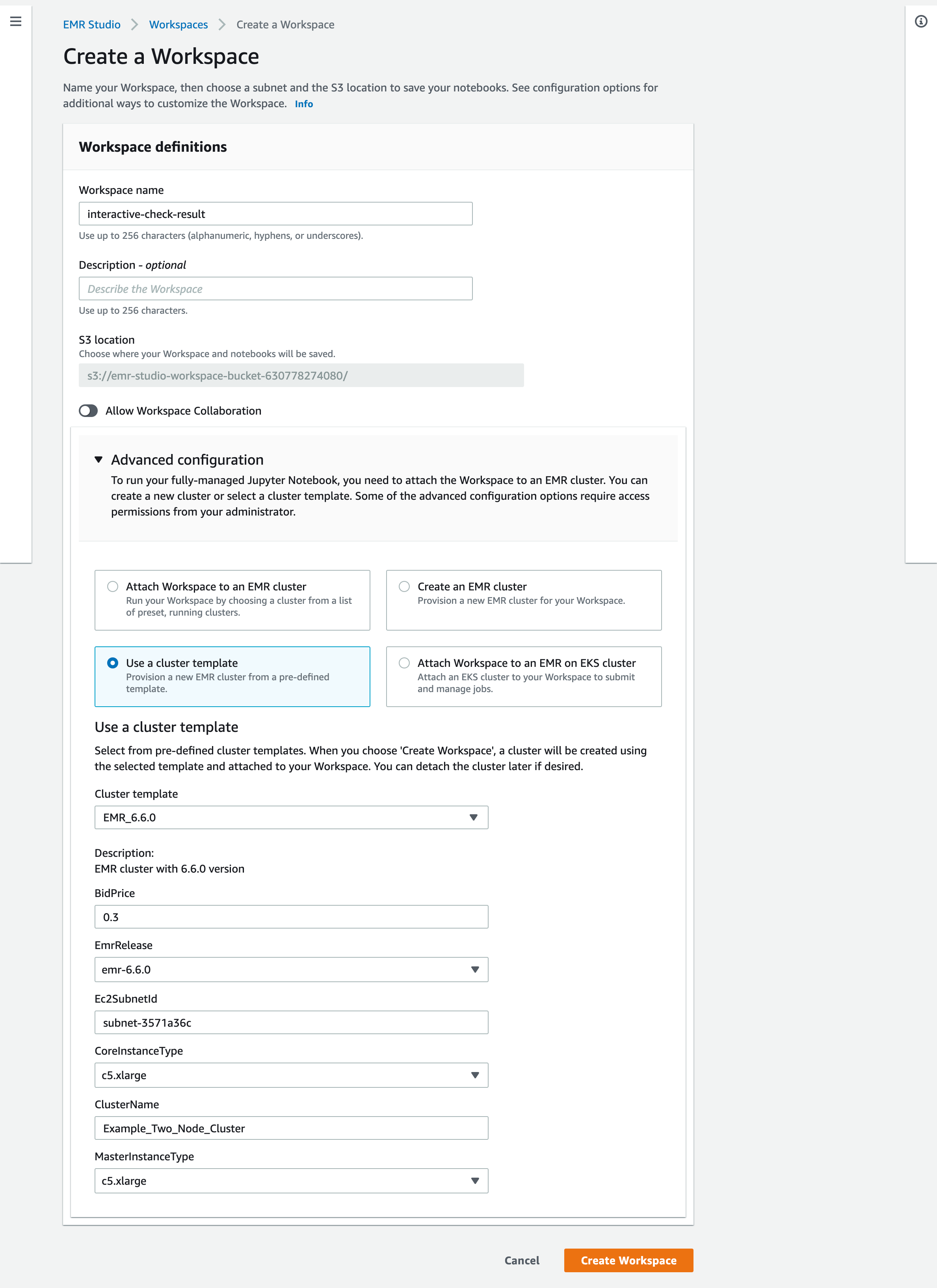

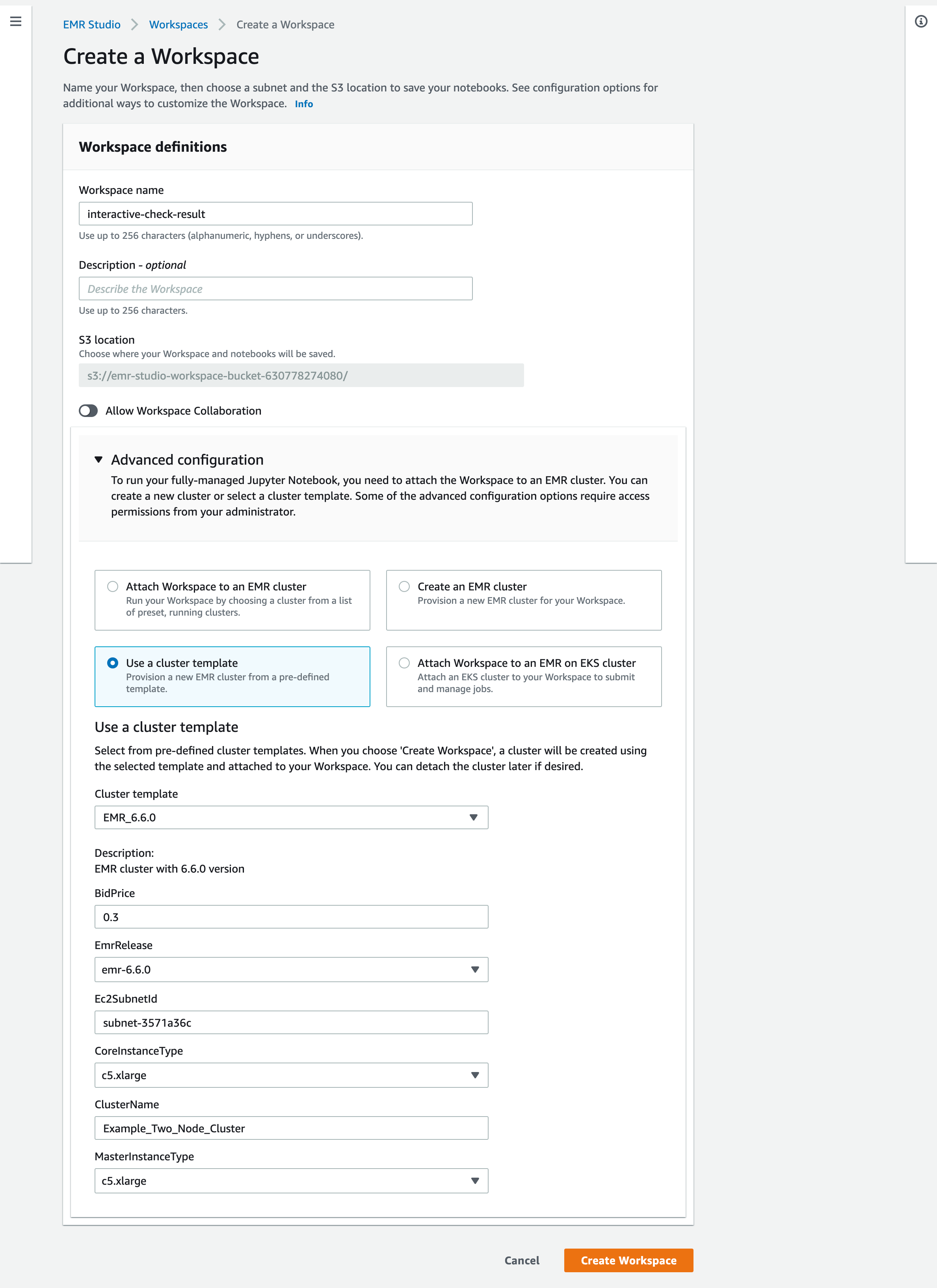

1. Create a workspace and an EMR cluster via the cluster template on the AWS Console.

2. Check the results delivered by the EMR Serverless application in an EMR notebook.
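Before opening a notebook, a quick sanity check is whether the output prefix (e.g. `emr-serverless-spark/delta-lake/nyx-tlc-2021/`) looks like a Delta table: Delta Lake records each commit as a JSON file with a 20-digit, zero-padded version number under `_delta_log/`. This sketch (hypothetical helper name) extracts those versions from a list of S3 keys, which you could collect with `boto3.client("s3").list_objects_v2(Bucket=bucket, Prefix=prefix)` (paginate for large tables). Inside the EMR notebook itself, `spark.read.format("delta").load(path)` reads the table, the same call the job script uses.

```python
import re
from typing import Iterable, List

# Delta commit files: _delta_log/ followed by a zero-padded 20-digit version and ".json".
_COMMIT_FILE = re.compile(r"_delta_log/(\d{20})\.json$")


def delta_commit_versions(keys: Iterable[str]) -> List[int]:
    """Return the sorted commit versions found among the S3 object keys of a Delta table.

    Data files (*.parquet) and checkpoint files are ignored.
    """
    versions: List[int] = []
    for key in keys:
        match = _COMMIT_FILE.search(key)
        if match:
            versions.append(int(match.group(1)))
    return sorted(versions)
```

An empty result suggests the prefix is not (yet) a Delta table.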

# Fun facts

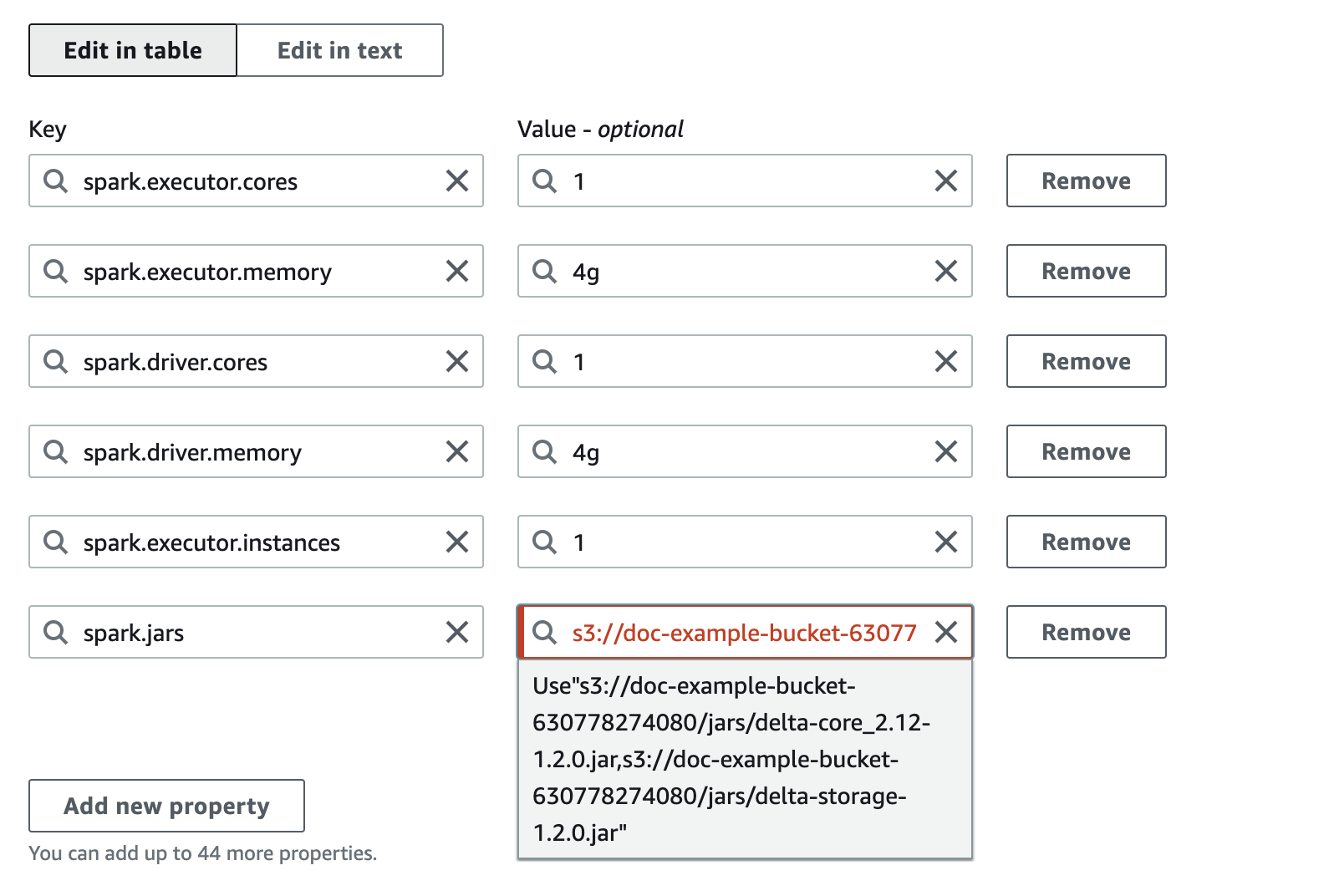

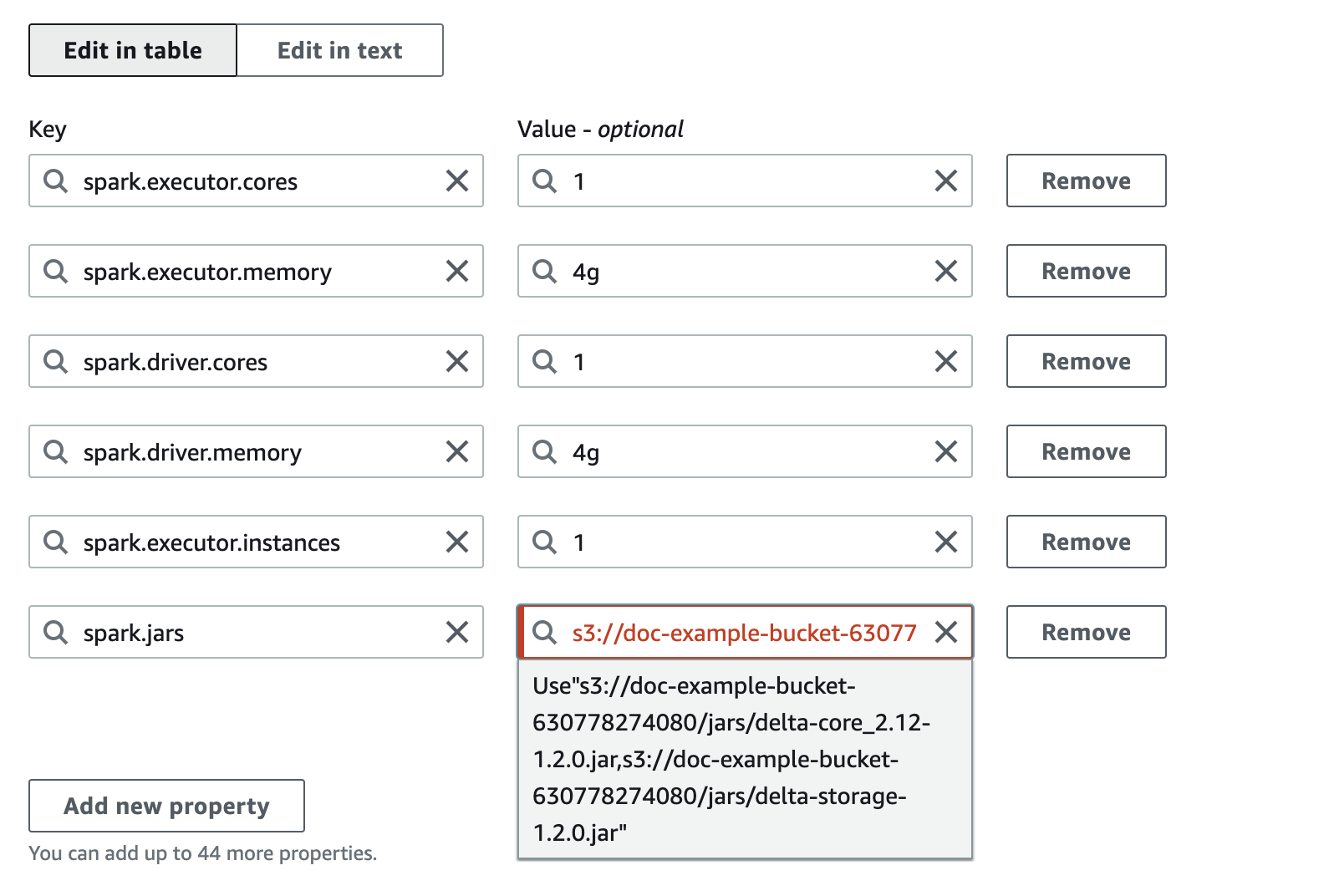

1. As [the Spark configuration page](https://spark.apache.org/docs/latest/configuration.html#runtime-environment) says, you can assign multiple JARs to `spark.jars` as a comma-separated list for your EMR Serverless job. The UI will complain, but you can still start the job. Don't be afraid; just click it like when you were a child, facing authority fearlessly.

2. To fully delete a stack that uses the construct, make sure no workspace remains in the EMR Studio. You also need to remove the associated identity from the Service Catalog portfolio (a resource required for the cluster template).

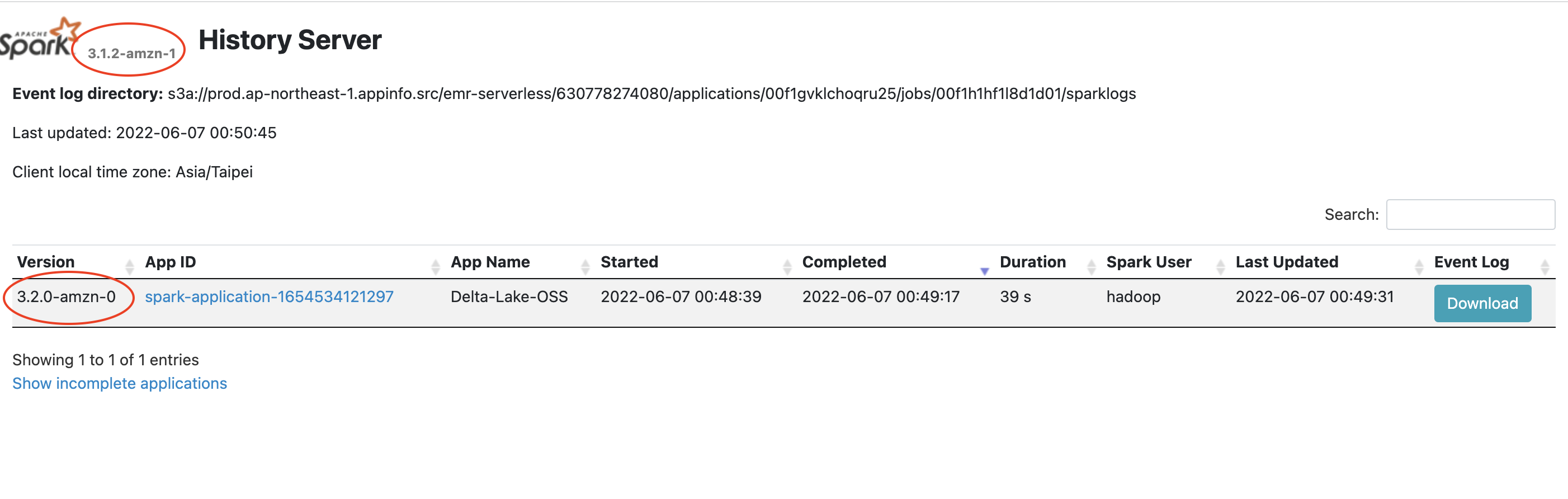

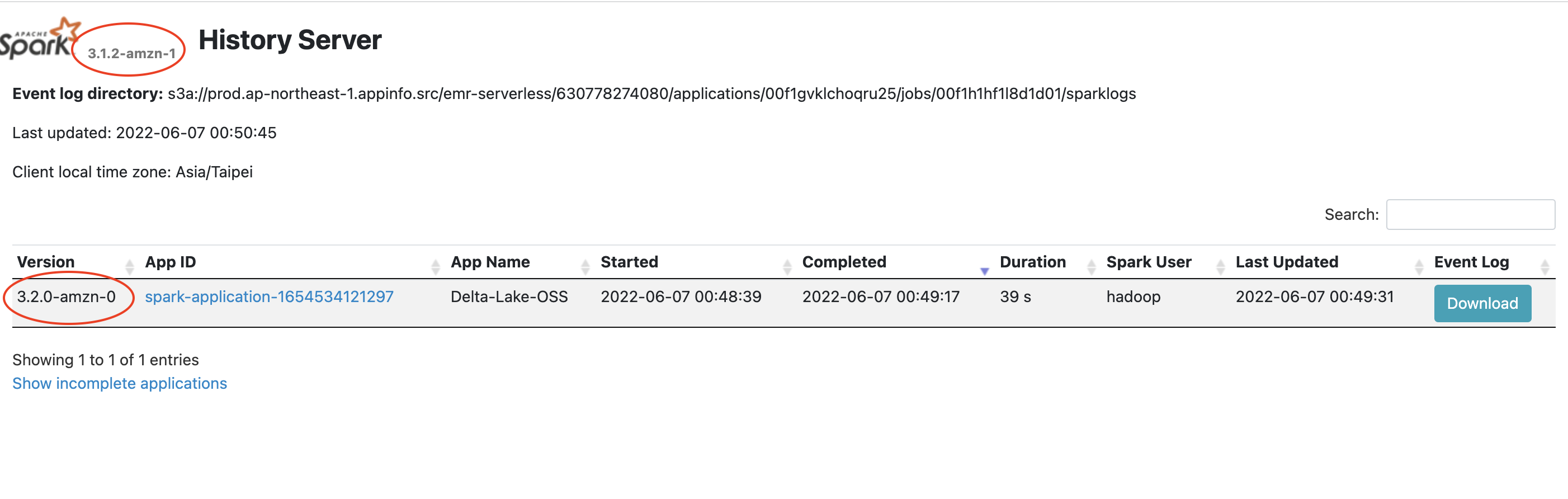

3. The versions shown in the Spark history server are inconsistent. This can probably be ignored, but it still made me wonder why the versions differ.

4. So far, I haven't figured out how to make `s3a://` URIs work. `s3://` URIs are fine, whereas the serverless application complains that it can't find a proper credentials provider to read `s3a://` URIs.
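For the Service Catalog part of the clean-up in fun fact 2, here is a minimal boto3 sketch (an assumption, not something the construct ships) that lists every principal associated with the portfolio and then disassociates each one. You need to look up the portfolio ID yourself, e.g. in the Service Catalog console; the helper name is hypothetical. Workspaces still have to be deleted from the EMR Studio separately.

```python
from typing import Any, Dict, List


def disassociate_all_principals(client: Any, portfolio_id: str) -> List[str]:
    """Remove every principal associated with a Service Catalog portfolio.

    `client` is expected to be boto3.client("servicecatalog"). All pages are
    collected first so that disassociating cannot disturb pagination.
    Returns the ARNs that were disassociated.
    """
    arns: List[str] = []
    kwargs: Dict[str, str] = {"PortfolioId": portfolio_id}
    while True:
        page = client.list_principals_for_portfolio(**kwargs)
        arns.extend(p["PrincipalARN"] for p in page.get("Principals", []))
        token = page.get("NextPageToken")
        if not token:
            break
        kwargs["PageToken"] = token
    for arn in arns:
        client.disassociate_principal_from_portfolio(PortfolioId=portfolio_id, PrincipalARN=arn)
    return arns
```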

# Future work

1. Custom resource for EMR Serverless

2. Make the construct more flexible for users

3. Compare Databricks Runtime and EMR Serverless.
