| Field | Value |
|---|---|
| Name | cosine-warmup |
| Version | 0.0.0 |
| Summary | Cosine Annealing Linear Warmup |
| Author | Arturo Ghinassi (ghinassiarturo8@gmail.com) |
| Upload time | 2023-04-15 12:34:45 |
| Requires Python | >=3.8 |
| Keywords | torch, scheduler |
| Requirements | No requirements were recorded. |

# Cosine Annealing Scheduler with Linear Warmup

Implementation of a Cosine Annealing Scheduler with Linear Warmup and Restarts in PyTorch. It supports multiple parameter groups and minimum target learning rates, and it also works with PyTorch Lightning modules.
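The shape of one cycle of this schedule can be sketched in plain Python. This is a minimal illustration, not the package's code: it assumes the warmup ramps linearly from the minimum learning rate up to the optimizer's learning rate over `warmup_steps`, and that the rest of the cycle follows a half-cosine back down to the minimum. Restarts are not modeled, and the function name `warmup_cosine_lr` is hypothetical.

```python
import math


def warmup_cosine_lr(step: int, max_lr: float, min_lr: float,
                     cycle_steps: int, warmup_steps: int) -> float:
    """Learning rate at `step` within a single cycle (hypothetical sketch).

    Assumes a linear ramp from min_lr to max_lr over warmup_steps, then a
    half-cosine decay from max_lr back to min_lr over the remaining steps.
    """
    if not 0 <= warmup_steps < cycle_steps:
        raise ValueError("warmup_steps must be in [0, cycle_steps)")
    step = min(max(step, 0), cycle_steps)  # clamp into the cycle
    if step < warmup_steps:
        # Linear warmup: fraction of the warmup phase completed so far.
        return min_lr + (max_lr - min_lr) * step / warmup_steps
    # Cosine annealing: progress runs from 0 (end of warmup) to 1 (end of cycle).
    progress = (step - warmup_steps) / (cycle_steps - warmup_steps)
    return min_lr + (max_lr - min_lr) * 0.5 * (1.0 + math.cos(math.pi * progress))


# Sample the README's configuration: peak 1e-3, minimum 1e-5, 1000 steps, 500 warmup.
for s in (0, 250, 500, 750, 1000):
    print(s, warmup_cosine_lr(s, 1e-3, 1e-5, 1000, 500))
```

Under these assumptions the rate starts at the minimum, peaks exactly when warmup ends, and returns to the minimum at the last step of the cycle.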

# Installation

```
pip install 'git+https://github.com/santurini/cosine-annealing-linear-warmup'
```

# Usage

Specify the parameter groups when you instantiate the optimizer: the scheduler infers the learning rates directly from the wrapped optimizer.
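A PyTorch optimizer exposes its groups as `optimizer.param_groups`, a list of dicts that each carry an `"lr"` key. Assuming the scheduler reads its peak learning rates from there, any per-group list such as `min_lrs` has to follow the same group order. The helpers `infer_max_lrs` and `check_min_lrs` below are hypothetical and not part of cosine-warmup; plain dicts stand in for real parameter groups so the sketch runs without torch.

```python
from typing import Dict, List, Sequence


def infer_max_lrs(param_groups: Sequence[Dict]) -> List[float]:
    """Read each group's peak learning rate, in group order (hypothetical helper)."""
    return [group["lr"] for group in param_groups]


def check_min_lrs(param_groups: Sequence[Dict], min_lrs: Sequence[float]) -> None:
    """Check that min_lrs lines up one-to-one with the optimizer's groups."""
    max_lrs = infer_max_lrs(param_groups)
    if len(min_lrs) != len(max_lrs):
        raise ValueError(
            f"expected {len(max_lrs)} min_lrs (one per group), got {len(min_lrs)}")
    for i, (lo, hi) in enumerate(zip(min_lrs, max_lrs)):
        if lo > hi:
            raise ValueError(f"group {i}: min_lr {lo} exceeds max lr {hi}")


# Plain dicts standing in for torch's optimizer.param_groups ("params" omitted).
groups = [{"lr": 1e-3}, {"lr": 1e-4}]
print(infer_max_lrs(groups))  # [0.001, 0.0001]
check_min_lrs(groups, [1e-5, 1e-6])  # passes silently
```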

#### Example: Multiple groups

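```
import torch
from cosine_warmup import CosineAnnealingLinearWarmup

# first_group_params and second_group_params are placeholders for your own
# parameter iterables (e.g. the parameters of two sub-modules).
optimizer = torch.optim.Adam([
    {"params": first_group_params, "lr": 1e-3},
    {"params": second_group_params, "lr": 1e-4},
])

# One minimum learning rate per parameter group, in the same order as the groups.
scheduler = CosineAnnealingLinearWarmup(
    optimizer=optimizer,
    min_lrs=[1e-5, 1e-6],
    first_cycle_steps=1000,
    warmup_steps=500,
    gamma=0.9,
)

# Equivalent: min_lrs_pow=2 yields the same min_lrs as above ([1e-5, 1e-6]).
scheduler = CosineAnnealingLinearWarmup(
    optimizer=optimizer,
    min_lrs_pow=2,
    first_cycle_steps=1000,
    warmup_steps=500,
    gamma=0.9,
)
```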
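In the example above, the two constructions are stated to be equivalent, and the numbers line up if each minimum is the group's learning rate divided by `10**min_lrs_pow` (1e-3 → 1e-5 and 1e-4 → 1e-6 for `min_lrs_pow = 2`). That formula is inferred from the example, not confirmed from the package source; `min_lrs_from_pow` is a hypothetical helper that makes the assumed relation explicit.

```python
import math
from typing import List, Sequence


def min_lrs_from_pow(max_lrs: Sequence[float], min_lrs_pow: int) -> List[float]:
    """Scale each peak lr down by 10**min_lrs_pow (assumed meaning of min_lrs_pow)."""
    return [lr / 10 ** min_lrs_pow for lr in max_lrs]


derived = min_lrs_from_pow([1e-3, 1e-4], 2)
# Compare with a tolerance: decimal fractions are not exact in binary floating point.
assert all(math.isclose(a, b) for a, b in zip(derived, [1e-5, 1e-6]))
print(derived)
```

Under this reading, `min_lrs_pow` is a shortcut that keeps the same ratio between peak and minimum for every group, while `min_lrs` lets each group have an arbitrary floor.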

#### Example: Single group

```
import torch
from cosine_warmup import CosineAnnealingLinearWarmup

# `model` is a placeholder for your torch.nn.Module.
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

scheduler = CosineAnnealingLinearWarmup(
    optimizer=optimizer,
    min_lrs=[1e-5],
    first_cycle_steps=1000,
    warmup_steps=500,
    gamma=0.9,
)

# Equivalent: min_lrs_pow=2 yields the same min_lrs as above ([1e-5]).
scheduler = CosineAnnealingLinearWarmup(
    optimizer=optimizer,
    min_lrs_pow=2,
    first_cycle_steps=1000,
    warmup_steps=500,
    gamma=0.9,
)
```
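Both examples pass `gamma = 0.9`. A common convention for cosine schedules with restarts, assumed here rather than confirmed from the package source, is that each restart begins a new cycle and multiplies the peak learning rate by `gamma`. The hypothetical helper `cycle_position` locates a global step under that assumption, additionally assuming that every cycle lasts `first_cycle_steps`.

```python
from typing import Tuple


def cycle_position(step: int, max_lr: float, first_cycle_steps: int,
                   gamma: float) -> Tuple[int, int, float]:
    """Return (cycle index, step within cycle, peak lr of that cycle).

    Hypothetical sketch: assumes fixed-length cycles and a peak learning
    rate that is multiplied by gamma at every restart.
    """
    cycle, step_in_cycle = divmod(step, first_cycle_steps)
    return cycle, step_in_cycle, max_lr * gamma ** cycle


for s in (0, 999, 1000, 2500):
    print(s, cycle_position(s, 1e-3, 1000, 0.9))
```

With the settings above, steps 1000 and 2500 would fall in the second and third cycles, with peaks of roughly 9e-4 and 8.1e-4.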

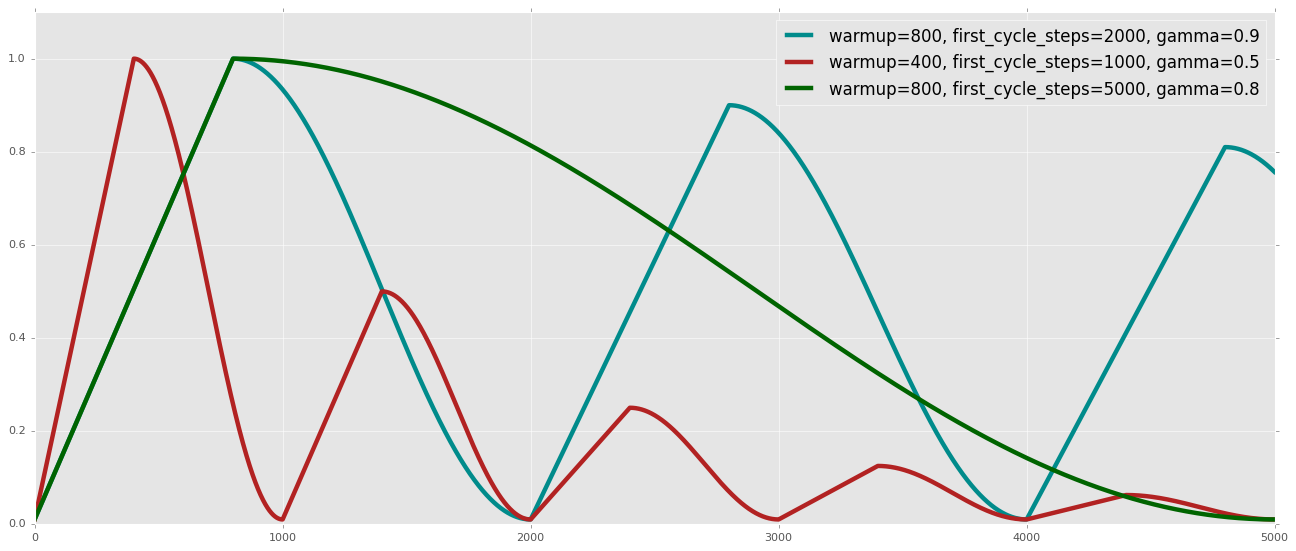

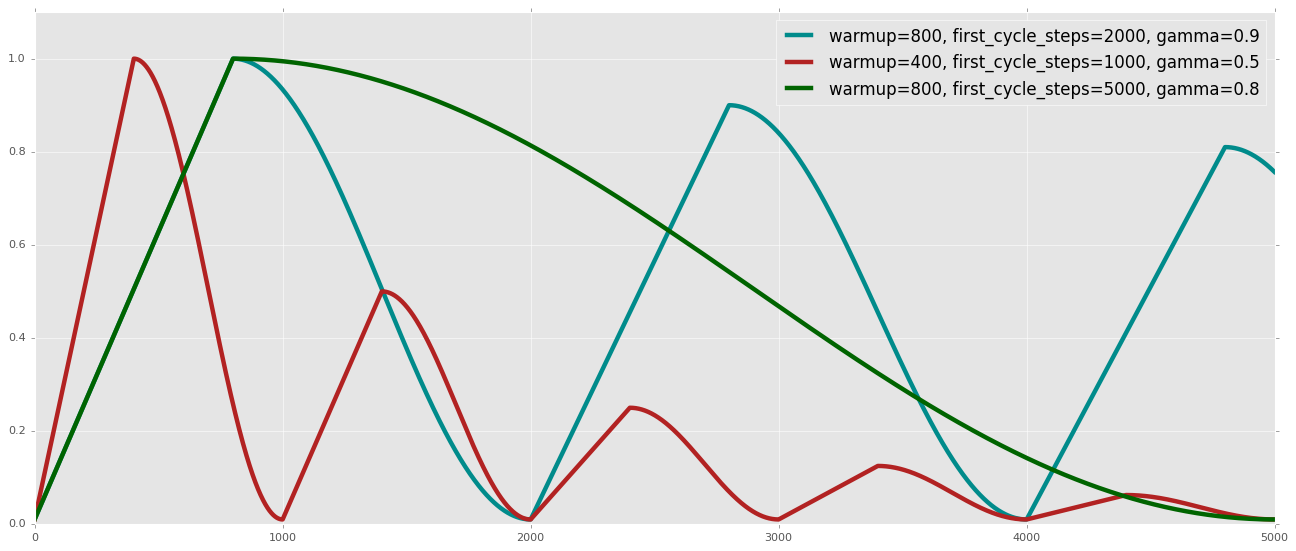

# Visual Example
