# **cu-cat**

<img src="https://github.com/graphistry/cu-cat/blob/e2bae616f84aab8e6d5e173fc5363370d7680dc6/examples/cu_cat.png?raw=true" alt="cu_cat" width="200"/>

****cu-cat**** is an end-to-end gpu Python library that encodes

categorical variables into machine-learnable numerics. It is a cuda

accelerated port of what was dirty_cat, now rebranded as

[skrub](https://github.com/skrub-data/skrub), and allows more ambitious interactive analysis & real-time pipelines!

[Loom video walkthru](https://www.loom.com/share/d7fd4980b31949b7b840b230937a636f?sid=6d56b82e-9f50-4059-af9f-bfdc32cd3509)

# What can **cu-cat** do?

The latest PyGraphistry[AI] release GPU accelerates to its automatic feature encoding pipeline, and to do so, we are delighted to introduce the newest member to the open source GPU dataframe ecosystem: cu_cat!

The Graphistry team has been growing the library out of need. The straw that broke the camel’s back was in December 2022 when we were hacking on our winning entry to the US Cyber Command AI competition for automatically correlating & triaging gigabytes of alerts, and we realized that what was slowing down our team's iteration cycles was CPU-based feature engineering, basically pouring sand into our otherwise humming end-to-end GPU AI pipeline. Two months later, cu_cat was born. Fast forward to now, and we are getting ready to make it default-on for all our work.

Hinted by its name, cu_cat is our GPU-accelerated open source fork of the popular CPU Python library dirty_cat. Like dirty_cat, cu_cat makes it easy to convert messy dataframes filled with numbers, strings, and timestamps into numeric feature columns optimized for AI models. It adds interoperability for GPU dataframes and replaces key kernels and algorithms with faster and more scalable GPU variants. Even on low-end GPUs, we are now able to tackle much larger datasets in the same amount of time – or for the first time! – with end-to-end pipelines. We typically save time with **3-5X speedups and will even see 10X+**, to the point that the more data you encode, the more time you save!

# What can **cu-cat** NOT do?

Since **cu_cat** is limited to CUDF/CUML dataframes, it is not a drop-in replacement for dirty_cat. While it can also fallback to CPU, it is also not a drop-in replacement for the CPU-based dirty_cat, and we are not planning to make it one. We developed this library to accelerate our own **graphistry** end-to-end pipelines, and as such it is only `TableVectorizer` and `GapEncoder` which have been optimized to take advantage of a GPU speed boost.

Similarly, **cu_cat** requires pandas or cudf input numpy array can be featurized but cannot be UMAP-ed since they lack index, and are thus not supported.

# What degree of speed boost can I expect with **cu-cat**, compared to dirty-cat or similar CPU feature encoders?

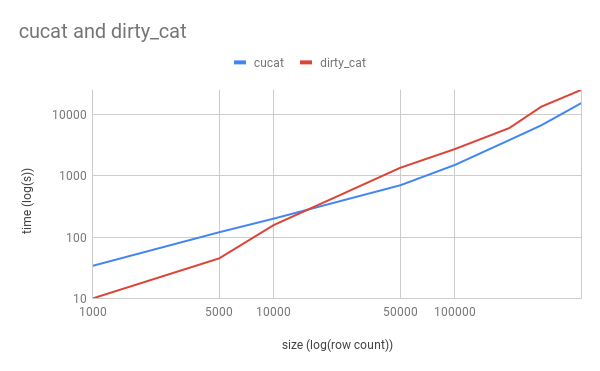

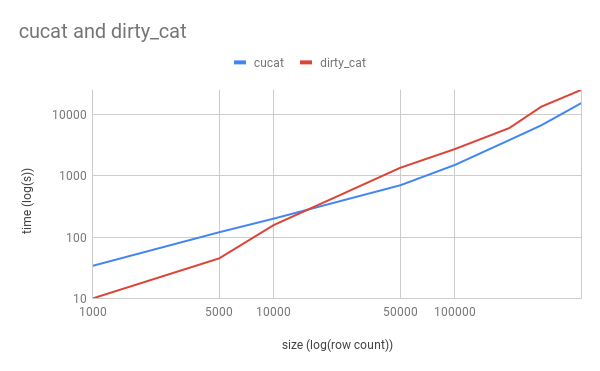

We have routinely experienced boosts of 2x on smaller datasets to 10x and more as one scales data into millions of features (features roughly equates to unique elements in rows x columns). One can observe this in the video above, demonstrated in the following plots:

There is an inflection point when overhead of transing data to GPU is offset by speed boost, as we can see here. The axis represent unique features being inferred.

As we can see, with scale the divergence in speed is obvious.

However, this graph does not mean to imply the trend goes on forever, as currently **cu-cat** is single GPU and cannot batch (as the transfer cost is too much for our current needs), and thus each dataset, and indeed GPU + GPU memory, is unique, and thus these plots are meant merely for demonstrative purposes.

GPU = colab T4 + 15gb mem and colab CPU + 12gb memory

## Startup Code demonstrating speedup:

! pip install cu-cat dirty-cat

from time import time

from cu_cat._table_vectorizer import TableVectorizer as cu_TableVectorizer

from dirty_cat._table_vectorizer import TableVectorizer as dirty_TableVectorizer

from sklearn.datasets import fetch_20newsgroups

n_samples = 2000 # speed boost improves as n_samples increases, to the limit of gpu mem

news, _ = fetch_20newsgroups(

shuffle=True,

random_state=1,

remove=("headers", "footers", "quotes"),

return_X_y=True,

)

news = news[:n_samples]

news=pd.DataFrame(news)

table_vec = cu_TableVectorizer()

t = time()

aa = table_vec.fit_transform((news))

ct = time() - t

# if deps.dirty_cat:

t = time()

bb = dirty_TableVectorizer().fit_transform(news)

dt = time() - t

print(f"cu_cat: {ct:.2f}s, dirty_cat: {dt:.2f}s, speedup: {dt/ct:.2f}x")

>>> cu_cat: 58.76s, dirty_cat: 84.54s, speedup: 1.44x

## Enhanced Code using Graphistry:

# !pip install graphistry[ai] ## future releases will have this by default

!pip install git+https://github.com/graphistry/pygraphistry.git@dev/depman_gpufeat

import cudf

import graphistry

df = cudf.read_csv(...)

g = graphistry.nodes(df).featurize(feature_engine='cu_cat')

print(g._node_features.describe()) # friendly dataframe interfaces

g.umap().plot() # ML/AI embedding model using the features

## Example notebooks

[Hello cu-cat notebook](https://github.com/dcolinmorgan/grph/blob/main/Hello_cu_cat.ipynb) goes in-depth on how to identify and deal with messy data using the **cu-cat** library.

**CPU v GPU Biological Demos:**

- Single Cell analysis [generically](https://github.com/dcolinmorgan/grph/blob/main/single_cell_umap_before_gpu.ipynb) and Single Cell analysis [accelerated by **cu-cat**](https://github.com/dcolinmorgan/grph/blob/main/single_cell_after_gpu.ipynb)

- Chemical Mapping [generically](https://github.com/dcolinmorgan/grph/blob/main/generic_chemical_mappings.ipynb) and Chemical Mapping [accelerated with **cu-cat**](https://github.com/dcolinmorgan/grph/blob/main/accelerating_chemical_mappings.ipynb)

- Metagenomic Analysis [generically](https://github.com/dcolinmorgan/grph/blob/main/generic_metagenomic_demo.ipynb) and Metagenomic Analysis [accelerated with **cu-cat**](https://github.com/dcolinmorgan/grph/blob/main/accelerating_metagenomic_demo.ipynb)

## Dependencies

Major dependencies the cuml and cudf libraries, as well as [standard

python

libraries](https://github.com/skrub-data/skrub/blob/main/setup.cfg)

# Related projects

dirty_cat is now rebranded as part of the sklearn family as

[skrub](https://github.com/skrub-data/skrub)

Raw data

{

"_id": null,

"home_page": "https://github.com/graphistry/cu-cat",

"name": "cu-cat",

"maintainer": "",

"docs_url": null,

"requires_python": ">=3.8",

"maintainer_email": "",

"keywords": "cudf,cuml,GPU,Rapids",

"author": "The Graphistry Team",

"author_email": "pygraphistry@graphistry.com",

"download_url": "https://files.pythonhosted.org/packages/92/ae/d4336492fcfb1d97fd84d7e08563553bdea6f488ad6f6036d4faf4d443a3/cu-cat-0.9.11.tar.gz",

"platform": "any",

"description": "\n# **cu-cat** \n<img src=\"https://github.com/graphistry/cu-cat/blob/e2bae616f84aab8e6d5e173fc5363370d7680dc6/examples/cu_cat.png?raw=true\" alt=\"cu_cat\" width=\"200\"/>\n\n****cu-cat**** is an end-to-end gpu Python library that encodes\ncategorical variables into machine-learnable numerics. It is a cuda\naccelerated port of what was dirty_cat, now rebranded as\n[skrub](https://github.com/skrub-data/skrub), and allows more ambitious interactive analysis & real-time pipelines!\n\n[Loom video walkthru](https://www.loom.com/share/d7fd4980b31949b7b840b230937a636f?sid=6d56b82e-9f50-4059-af9f-bfdc32cd3509)\n\n# What can **cu-cat** do?\n\nThe latest PyGraphistry[AI] release GPU accelerates to its automatic feature encoding pipeline, and to do so, we are delighted to introduce the newest member to the open source GPU dataframe ecosystem: cu_cat! \nThe Graphistry team has been growing the library out of need. The straw that broke the camel\u2019s back was in December 2022 when we were hacking on our winning entry to the US Cyber Command AI competition for automatically correlating & triaging gigabytes of alerts, and we realized that what was slowing down our team's iteration cycles was CPU-based feature engineering, basically pouring sand into our otherwise humming end-to-end GPU AI pipeline. Two months later, cu_cat was born. Fast forward to now, and we are getting ready to make it default-on for all our work.\n\nHinted by its name, cu_cat is our GPU-accelerated open source fork of the popular CPU Python library dirty_cat. Like dirty_cat, cu_cat makes it easy to convert messy dataframes filled with numbers, strings, and timestamps into numeric feature columns optimized for AI models. It adds interoperability for GPU dataframes and replaces key kernels and algorithms with faster and more scalable GPU variants. Even on low-end GPUs, we are now able to tackle much larger datasets in the same amount of time \u2013 or for the first time! \u2013 with end-to-end pipelines. We typically save time with **3-5X speedups and will even see 10X+**, to the point that the more data you encode, the more time you save!\n\n# What can **cu-cat** NOT do?\n\nSince **cu_cat** is limited to CUDF/CUML dataframes, it is not a drop-in replacement for dirty_cat. While it can also fallback to CPU, it is also not a drop-in replacement for the CPU-based dirty_cat, and we are not planning to make it one. We developed this library to accelerate our own **graphistry** end-to-end pipelines, and as such it is only `TableVectorizer` and `GapEncoder` which have been optimized to take advantage of a GPU speed boost.\n\nSimilarly, **cu_cat** requires pandas or cudf input numpy array can be featurized but cannot be UMAP-ed since they lack index, and are thus not supported.\n\n# What degree of speed boost can I expect with **cu-cat**, compared to dirty-cat or similar CPU feature encoders?\n\nWe have routinely experienced boosts of 2x on smaller datasets to 10x and more as one scales data into millions of features (features roughly equates to unique elements in rows x columns). One can observe this in the video above, demonstrated in the following plots:\n\nThere is an inflection point when overhead of transing data to GPU is offset by speed boost, as we can see here. The axis represent unique features being inferred.\n\n\n\nAs we can see, with scale the divergence in speed is obvious.\n\n\n\nHowever, this graph does not mean to imply the trend goes on forever, as currently **cu-cat** is single GPU and cannot batch (as the transfer cost is too much for our current needs), and thus each dataset, and indeed GPU + GPU memory, is unique, and thus these plots are meant merely for demonstrative purposes.\nGPU = colab T4 + 15gb mem and colab CPU + 12gb memory\n\n\n## Startup Code demonstrating speedup:\n\n ! pip install cu-cat dirty-cat\n from time import time\n from cu_cat._table_vectorizer import TableVectorizer as cu_TableVectorizer\n from dirty_cat._table_vectorizer import TableVectorizer as dirty_TableVectorizer\n from sklearn.datasets import fetch_20newsgroups\n n_samples = 2000 # speed boost improves as n_samples increases, to the limit of gpu mem\n\n news, _ = fetch_20newsgroups(\n shuffle=True,\n random_state=1,\n remove=(\"headers\", \"footers\", \"quotes\"),\n return_X_y=True,\n )\n\n news = news[:n_samples]\n news=pd.DataFrame(news)\n table_vec = cu_TableVectorizer()\n t = time()\n aa = table_vec.fit_transform((news))\n ct = time() - t\n # if deps.dirty_cat:\n t = time()\n bb = dirty_TableVectorizer().fit_transform(news)\n dt = time() - t\n print(f\"cu_cat: {ct:.2f}s, dirty_cat: {dt:.2f}s, speedup: {dt/ct:.2f}x\")\n >>> cu_cat: 58.76s, dirty_cat: 84.54s, speedup: 1.44x\n## Enhanced Code using Graphistry:\n\n # !pip install graphistry[ai] ## future releases will have this by default\n !pip install git+https://github.com/graphistry/pygraphistry.git@dev/depman_gpufeat\n\n import cudf\n import graphistry\n df = cudf.read_csv(...)\n g = graphistry.nodes(df).featurize(feature_engine='cu_cat')\n print(g._node_features.describe()) # friendly dataframe interfaces\n g.umap().plot() # ML/AI embedding model using the features\n\n\n## Example notebooks \n\n[Hello cu-cat notebook](https://github.com/dcolinmorgan/grph/blob/main/Hello_cu_cat.ipynb) goes in-depth on how to identify and deal with messy data using the **cu-cat** library.\n\n**CPU v GPU Biological Demos:**\n- Single Cell analysis [generically](https://github.com/dcolinmorgan/grph/blob/main/single_cell_umap_before_gpu.ipynb) and Single Cell analysis [accelerated by **cu-cat**](https://github.com/dcolinmorgan/grph/blob/main/single_cell_after_gpu.ipynb)\n\n- Chemical Mapping [generically](https://github.com/dcolinmorgan/grph/blob/main/generic_chemical_mappings.ipynb) and Chemical Mapping [accelerated with **cu-cat**](https://github.com/dcolinmorgan/grph/blob/main/accelerating_chemical_mappings.ipynb)\n\n- Metagenomic Analysis [generically](https://github.com/dcolinmorgan/grph/blob/main/generic_metagenomic_demo.ipynb) and Metagenomic Analysis [accelerated with **cu-cat**](https://github.com/dcolinmorgan/grph/blob/main/accelerating_metagenomic_demo.ipynb)\n\n\n## Dependencies\n\nMajor dependencies the cuml and cudf libraries, as well as [standard\npython\nlibraries](https://github.com/skrub-data/skrub/blob/main/setup.cfg)\n\n# Related projects\n\ndirty_cat is now rebranded as part of the sklearn family as\n[skrub](https://github.com/skrub-data/skrub)\n\n\n",

"bugtrack_url": null,

"license": "BSD",

"summary": "An end-to-end gpu Python library that encodes categorical variables into machine-learnable numerics",

"version": "0.9.11",

"project_urls": {

"Download": "https://github.com/graphistry/cu-cat",

"Homepage": "https://github.com/graphistry/cu-cat"

},

"split_keywords": [

"cudf",

"cuml",

"gpu",

"rapids"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "3cae26283abfd3559fe7822bb525a72751d8c9dd5ee8730ded433cd5e3359655",

"md5": "858cd394fdf39750a20a02b22a430b59",

"sha256": "4d666320422e8e2c36eeb4f0ccb51851e6c0bdeb298108c69296c80f6f7d5939"

},

"downloads": -1,

"filename": "cu_cat-0.9.11-py3-none-any.whl",

"has_sig": false,

"md5_digest": "858cd394fdf39750a20a02b22a430b59",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.8",

"size": 37524,

"upload_time": "2024-02-08T01:32:42",

"upload_time_iso_8601": "2024-02-08T01:32:42.900304Z",

"url": "https://files.pythonhosted.org/packages/3c/ae/26283abfd3559fe7822bb525a72751d8c9dd5ee8730ded433cd5e3359655/cu_cat-0.9.11-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "92aed4336492fcfb1d97fd84d7e08563553bdea6f488ad6f6036d4faf4d443a3",

"md5": "a8c9f84ce7ed612a73b0c256f2c41a9f",

"sha256": "6b220fa73a82fb5d20bd9f109635835c37ba6d3b32d19385f144c1aee76ddaad"

},

"downloads": -1,

"filename": "cu-cat-0.9.11.tar.gz",

"has_sig": false,

"md5_digest": "a8c9f84ce7ed612a73b0c256f2c41a9f",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.8",

"size": 53912,

"upload_time": "2024-02-08T01:32:45",

"upload_time_iso_8601": "2024-02-08T01:32:45.553208Z",

"url": "https://files.pythonhosted.org/packages/92/ae/d4336492fcfb1d97fd84d7e08563553bdea6f488ad6f6036d4faf4d443a3/cu-cat-0.9.11.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-02-08 01:32:45",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "graphistry",

"github_project": "cu-cat",

"travis_ci": false,

"coveralls": true,

"github_actions": true,

"lcname": "cu-cat"

}