<div align="center">

<img src="https://raw.githubusercontent.com/joekakone/db-analytics-tools/master/cover.png"><br>

</div>

# DB Analytics Tools

Databases Analytics Tools is an open-source Python micro-framework for data analytics. DB Analytics Tools is built on top of Psycopg2, Pyodbc, Pandas, Matplotlib and Scikit-learn. It helps data analysts interact with data warehouses the way they would with a traditional database client.

## Why adopt DB Analytics Tools?

- Easy to learn: it is a high-level API and doesn't require any special effort to learn.
- Real problem solver: it is designed to solve the real-life problems of data analysts.
- All in one: it supports queries, data integration, analysis, visualization and machine learning.

## Core Components

<table>

<tr>

<th>#</th>

<th>Component</th>

<th>Description</th>

<th>How to import</th>

</tr>

<tr>

<td>0</td>

<td>db</td>

<td>Database Interactions (Client)</td>

<td><code>import db_analytics_tools as db</code></td>

</tr>

<tr>

<td>1</td>

<td>dbi</td>

<td>Data Integration & Data Engineering</td>

<td><code>import db_analytics_tools.integration as dbi</code></td>

</tr>

<tr>

<td>2</td>

<td>dba</td>

<td>Data Analysis</td>

<td><code>import db_analytics_tools.analytics as dba</code></td>

</tr>

<tr>

<td>3</td>

<td>dbviz</td>

<td>Data Visualization</td>

<td><code>import db_analytics_tools.plotting as dbviz</code></td>

</tr>

<tr>

<td>4</td>

<td>dbml</td>

<td>Machine Learning & MLOps</td>

<td><code>import db_analytics_tools.learning as dbml</code></td>

</tr>

</table>

## Install DB Analytics Tools

### Dependencies

DB Analytics Tools requires:

* Python (>= 3.10)
* Psycopg2
* Pyodbc
* Pandas
* SQLAlchemy
* Streamlit
* Matplotlib
* Requests

DB Analytics Tools can be installed with pip:

```sh
pip install db-analytics-tools
```
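To confirm the installation, a minimal sketch using only the standard library can check the interpreter against the `>=3.10` requirement listed in the package metadata and look up the installed version. The helper names `python_supported` and `installed_version` are illustrative assumptions, not part of DB Analytics Tools.

```python
import sys
from importlib.metadata import PackageNotFoundError, version


def python_supported(minimum=(3, 10)):
    """Return True if the running interpreter meets the minimum (major, minor) version."""
    return sys.version_info[:2] >= minimum


def installed_version(distribution):
    """Return the installed version string of a distribution, or None if it is absent."""
    try:
        return version(distribution)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    print("Python >= 3.10:", python_supported())
    print("db-analytics-tools:", installed_version("db-analytics-tools"))
```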

## Get Started

### Setup client

As with a traditional database client, you need to provide the database server's IP address, port and credentials. DB Analytics Tools supports PostgreSQL and SQL Server.

```python
# Import DB Analytics Tools
import db_analytics_tools as db

# Database info & credentials
ENGINE = "postgres"
HOST = "localhost"
PORT = "5432"
DATABASE = "postgres"
USER = "postgres"
PASSWORD = "admin"

# Setup client
client = db.Client(host=HOST, port=PORT, database=DATABASE, username=USER, password=PASSWORD, engine=ENGINE)
```
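Hard-coding a password such as `"admin"` is fine for a local demo, but a shared script would usually read credentials from the environment. A minimal sketch, assuming hypothetical environment variable names (`DB_ENGINE`, `DB_HOST`, `DB_PORT`, `DB_NAME`, `DB_USER`, `DB_PASSWORD`): it builds the same keyword arguments that `db.Client` takes in the example above, so the result could be passed as `db.Client(**kwargs)`.

```python
import os

# Hypothetical environment variable names mapped to the db.Client keyword arguments
ENV_TO_KWARG = {
    "DB_ENGINE": "engine",
    "DB_HOST": "host",
    "DB_PORT": "port",
    "DB_NAME": "database",
    "DB_USER": "username",
    "DB_PASSWORD": "password",
}

# Local defaults matching the example above; the password deliberately has no default
DEFAULTS = {
    "engine": "postgres",
    "host": "localhost",
    "port": "5432",
    "database": "postgres",
    "username": "postgres",
}


def client_kwargs_from_env(environ=os.environ):
    """Build db.Client keyword arguments, letting environment variables override defaults."""
    kwargs = dict(DEFAULTS)
    for env_name, kwarg in ENV_TO_KWARG.items():
        if env_name in environ:
            kwargs[kwarg] = environ[env_name]
    if "password" not in kwargs:
        raise KeyError("DB_PASSWORD is not set")
    return kwargs


# Usage (requires the package and a reachable database server):
# import db_analytics_tools as db
# client = db.Client(**client_kwargs_from_env())
```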

### Data Definition Language

```python
query = """
----- CREATE TABLE -----
drop table if exists public.transactions;
create table public.transactions (
    transaction_id integer primary key,
    client_id integer,
    product_name varchar(255),
    product_category varchar(255),
    quantity integer,
    unitary_price numeric,
    amount numeric
);
"""

client.execute(query=query)
```

### Data Manipulation Language

```python
query = """
----- POPULATE TABLE -----
insert into public.transactions (transaction_id, client_id, product_name, product_category, quantity, unitary_price, amount)
values
    (1,101,'Product A','Category 1',5,100,500),
    (2,102,'Product B','Category 2',3,50,150),
    (3,103,'Product C','Category 1',2,200,400),
    (4,102,'Product A','Category 1',7,100,700),
    (5,105,'Product B','Category 2',4,50,200),
    (6,101,'Product C','Category 1',1,200,200),
    (7,104,'Product A','Category 1',6,100,600),
    (8,103,'Product B','Category 2',2,50,100),
    (9,103,'Product C','Category 1',8,200,1600),
    (10,105,'Product A','Category 1',3,100,300);
"""

client.execute(query=query)
```

### Data Query Language

```python
query = """
----- GET DATA -----
select *
from public.transactions
order by transaction_id;
"""

dataframe = client.read_sql(query=query)
print(dataframe.head())
```

```txt
   transaction_id  client_id  product_name  product_category  quantity  unitary_price  amount
0               1        101     Product A        Category 1         5          100.0   500.0
1               2        102     Product B        Category 2         3           50.0   150.0
2               3        103     Product C        Category 1         2          200.0   400.0
3               4        102     Product A        Category 1         7          100.0   700.0
4               5        105     Product B        Category 2         4           50.0   200.0
```
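To experiment with the SQL in this section without a PostgreSQL server, a minimal sketch can replay it against Python's built-in `sqlite3` module. This is a swapped-in local stand-in, not how DB Analytics Tools connects: SQLite has no `public` schema, so the sketch emulates it by attaching an in-memory database under that name. The `build_sandbox` helper is hypothetical.

```python
import sqlite3

# Same DDL as above; SQLite accepts the varchar(255) and numeric type names
DDL = """
----- CREATE TABLE -----
drop table if exists public.transactions;
create table public.transactions (
    transaction_id integer primary key,
    client_id integer,
    product_name varchar(255),
    product_category varchar(255),
    quantity integer,
    unitary_price numeric,
    amount numeric
);
"""

# Same sample rows as the insert statement above
ROWS = [
    (1, 101, "Product A", "Category 1", 5, 100, 500),
    (2, 102, "Product B", "Category 2", 3, 50, 150),
    (3, 103, "Product C", "Category 1", 2, 200, 400),
    (4, 102, "Product A", "Category 1", 7, 100, 700),
    (5, 105, "Product B", "Category 2", 4, 50, 200),
    (6, 101, "Product C", "Category 1", 1, 200, 200),
    (7, 104, "Product A", "Category 1", 6, 100, 600),
    (8, 103, "Product B", "Category 2", 2, 50, 100),
    (9, 103, "Product C", "Category 1", 8, 200, 1600),
    (10, 105, "Product A", "Category 1", 3, 100, 300),
]


def build_sandbox():
    """Create an in-memory SQLite database that mimics public.transactions."""
    conn = sqlite3.connect(":memory:")
    # Attach a second in-memory database named "public" so the schema prefix resolves
    conn.execute("attach database ':memory:' as public")
    conn.executescript(DDL)
    conn.executemany("insert into public.transactions values (?, ?, ?, ?, ?, ?, ?)", ROWS)
    conn.commit()
    return conn


if __name__ == "__main__":
    conn = build_sandbox()
    # Revenue per category, a typical follow-up to the select above
    for row in conn.execute(
        "select product_category, sum(amount) from public.transactions "
        "group by product_category order by product_category"
    ):
        print(row)
```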

## Show current queries

You can list the queries currently running for the current user.

```py
client.show_sessions()
```

You can cancel a query by its session ID.

```py
client.cancel_query(10284)
```

You can go further and cancel queries that are stuck on locks:

```py
client.cancel_locked_queries()
```

This cancels all currently locked queries.
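We have not checked how `show_sessions`, `cancel_query` and `cancel_locked_queries` are implemented. On PostgreSQL, the same tasks are commonly done with the `pg_stat_activity` view and the `pg_cancel_backend(pid)` function, where the session ID is the backend process ID (`pid`). The sketch below only builds that SQL; the constant and helper names are hypothetical, the queries are PostgreSQL-specific (SQL Server uses different system views), and you could presumably run them yourself through `client.read_sql(query=...)`.

```python
# Sessions of the current user, excluding the session that runs this query
SHOW_SESSIONS_SQL = """
select pid, state, query_start, query
from pg_stat_activity
where usename = current_user
  and pid <> pg_backend_pid()
order by query_start;
"""

# Cancel every query of the current user that is currently waiting on a lock
CANCEL_LOCKED_SQL = """
select pid, pg_cancel_backend(pid) as cancelled
from pg_stat_activity
where usename = current_user
  and wait_event_type = 'Lock';
"""


def cancel_query_sql(pid):
    """Return SQL asking PostgreSQL to cancel the query running in backend `pid`."""
    # Accept only positive integers so the value can be safely inlined into SQL
    if isinstance(pid, bool) or not isinstance(pid, int) or pid <= 0:
        raise ValueError(f"invalid backend pid: {pid!r}")
    return f"select pg_cancel_backend({pid});"
```

Note that `pg_cancel_backend` cancels the running query but keeps the session open.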

## Implement SQL based ETL

The ETL API lives in the integration module `db_analytics_tools.integration`. Let's import it and create an ETL object.

```python
# Import Integration module
import db_analytics_tools.integration as dbi

# Setup ETL
etl = dbi.ETL(client=client)
```

In DB Analytics Tools, an ETL is a database function that takes a date parameter. Everything is done in one place, i.e. on the database. So first create a function on the database like this:

```python
query = """
----- CREATE FUNCTION ON DB -----
create or replace function public.fn_test(rundt date) returns integer
language plpgsql
as
$$
begin
    --- DEBUG MESSAGE ---
    raise notice 'rundt : %', rundt;

    --- EXTRACT ---

    --- TRANSFORM ---

    --- LOAD ---

    return 0;
end;
$$;
"""

client.execute(query=query)
```

### Run a function

The ETL function can then be run over a date range with the `ETL.run()` method:

```python
# ETL Function
FUNCTION = "public.fn_test"

# Dates to run
START = "2023-08-01"
STOP = "2023-08-05"

# Run ETL
etl.run(function=FUNCTION, start_date=START, stop_date=STOP, freq="d", reverse=False)
```

```
Function : public.fn_test
Date Range : From 2023-08-01 to 2023-08-05
Iterations : 5
[Runing Date: 2023-08-01] [Function: public.fn_test] Execution time: 0:00:00.122600
[Runing Date: 2023-08-02] [Function: public.fn_test] Execution time: 0:00:00.049324
[Runing Date: 2023-08-03] [Function: public.fn_test] Execution time: 0:00:00.049409
[Runing Date: 2023-08-04] [Function: public.fn_test] Execution time: 0:00:00.050019
[Runing Date: 2023-08-05] [Function: public.fn_test] Execution time: 0:00:00.108267
```
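Judging from the log above, `ETL.run()` calls the function once per day from `start_date` to `stop_date`, both inclusive (five iterations for 2023-08-01 to 2023-08-05). A minimal sketch of that loop, assuming daily frequency only and assuming each call is a plain `select <function>('<date>'::date);` statement; the helper names and the call format are hypothetical, and the library's own handling of `freq` and `reverse` may differ.

```python
from datetime import date, timedelta


def daily_dates(start_date, stop_date, reverse=False):
    """Return every date from start_date to stop_date, both inclusive."""
    start = date.fromisoformat(start_date)
    stop = date.fromisoformat(stop_date)
    if stop < start:
        raise ValueError("stop_date must not be before start_date")
    days = [start + timedelta(days=i) for i in range((stop - start).days + 1)]
    return days[::-1] if reverse else days


def etl_calls(function, start_date, stop_date, reverse=False):
    """Build one SQL call per run date (hypothetical call format; function name is trusted)."""
    return [
        f"select {function}('{run_date.isoformat()}'::date);"
        for run_date in daily_dates(start_date, stop_date, reverse)
    ]


# Each statement could then be executed in turn, e.g. with client.execute(query=...)
for query in etl_calls("public.fn_test", "2023-08-01", "2023-08-05"):
    print(query)
```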

### Run several functions

Most of the time, several ETL functions must be run, and DB Analytics Tools supports running them as a pipeline with `ETL.run_multiple()`:

```python
# ETL Functions
FUNCTIONS = [
    "public.fn_test",
    "public.fn_test_long",
    "public.fn_test_very_long"
]

# Dates to run
START = "2023-08-01"
STOP = "2023-08-05"

# Run ETLs
etl.run_multiple(functions=FUNCTIONS, start_date=START, stop_date=STOP, freq="d", reverse=False)
```

```
Functions : ['public.fn_test', 'public.fn_test_long', 'public.fn_test_very_long']
Date Range : From 2023-08-01 to 2023-08-05
Iterations : 5
*********************************************************************************************
[Runing Date: 2023-08-01] [Function: public.fn_test..........] Execution time: 0:00:00.110408
[Runing Date: 2023-08-01] [Function: public.fn_test_long.....] Execution time: 0:00:00.112078
[Runing Date: 2023-08-01] [Function: public.fn_test_very_long] Execution time: 0:00:00.092423
*********************************************************************************************
[Runing Date: 2023-08-02] [Function: public.fn_test..........] Execution time: 0:00:00.111153
[Runing Date: 2023-08-02] [Function: public.fn_test_long.....] Execution time: 0:00:00.111395
[Runing Date: 2023-08-02] [Function: public.fn_test_very_long] Execution time: 0:00:00.110814
*********************************************************************************************
[Runing Date: 2023-08-03] [Function: public.fn_test..........] Execution time: 0:00:00.111044
[Runing Date: 2023-08-03] [Function: public.fn_test_long.....] Execution time: 0:00:00.123229
[Runing Date: 2023-08-03] [Function: public.fn_test_very_long] Execution time: 0:00:00.078432
*********************************************************************************************
[Runing Date: 2023-08-04] [Function: public.fn_test..........] Execution time: 0:00:00.127839
[Runing Date: 2023-08-04] [Function: public.fn_test_long.....] Execution time: 0:00:00.111339
[Runing Date: 2023-08-04] [Function: public.fn_test_very_long] Execution time: 0:00:00.140669
*********************************************************************************************
[Runing Date: 2023-08-05] [Function: public.fn_test..........] Execution time: 0:00:00.138380
[Runing Date: 2023-08-05] [Function: public.fn_test_long.....] Execution time: 0:00:00.111157
[Runing Date: 2023-08-05] [Function: public.fn_test_very_long] Execution time: 0:00:00.077731
*********************************************************************************************
```
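The log shows that the pipeline is date-major: for each run date, every function in `FUNCTIONS` runs in list order before moving on to the next date, and each function name is padded with dots to the length of the longest one. A minimal sketch reproducing that ordering and the padded labels; it assumes daily frequency, executes nothing, and the helper names are hypothetical.

```python
from datetime import date, timedelta


def pipeline_steps(functions, start_date, stop_date):
    """Yield (run_date, function) pairs: all functions for one date, then the next date."""
    start = date.fromisoformat(start_date)
    stop = date.fromisoformat(stop_date)
    for offset in range((stop - start).days + 1):
        run_date = start + timedelta(days=offset)
        for function in functions:
            yield run_date, function


def padded_label(function, functions):
    """Pad a function name with dots to the length of the longest name, as in the log."""
    width = max(len(name) for name in functions)
    return function.ljust(width, ".")


FUNCTIONS = ["public.fn_test", "public.fn_test_long", "public.fn_test_very_long"]

for run_date, function in pipeline_steps(FUNCTIONS, "2023-08-01", "2023-08-05"):
    print(f"[Run date: {run_date}] [Function: {padded_label(function, FUNCTIONS)}]")
```

Running functions date by date, rather than one function over the whole range, means that a function for a given date always sees the outputs of the earlier functions for that same date.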

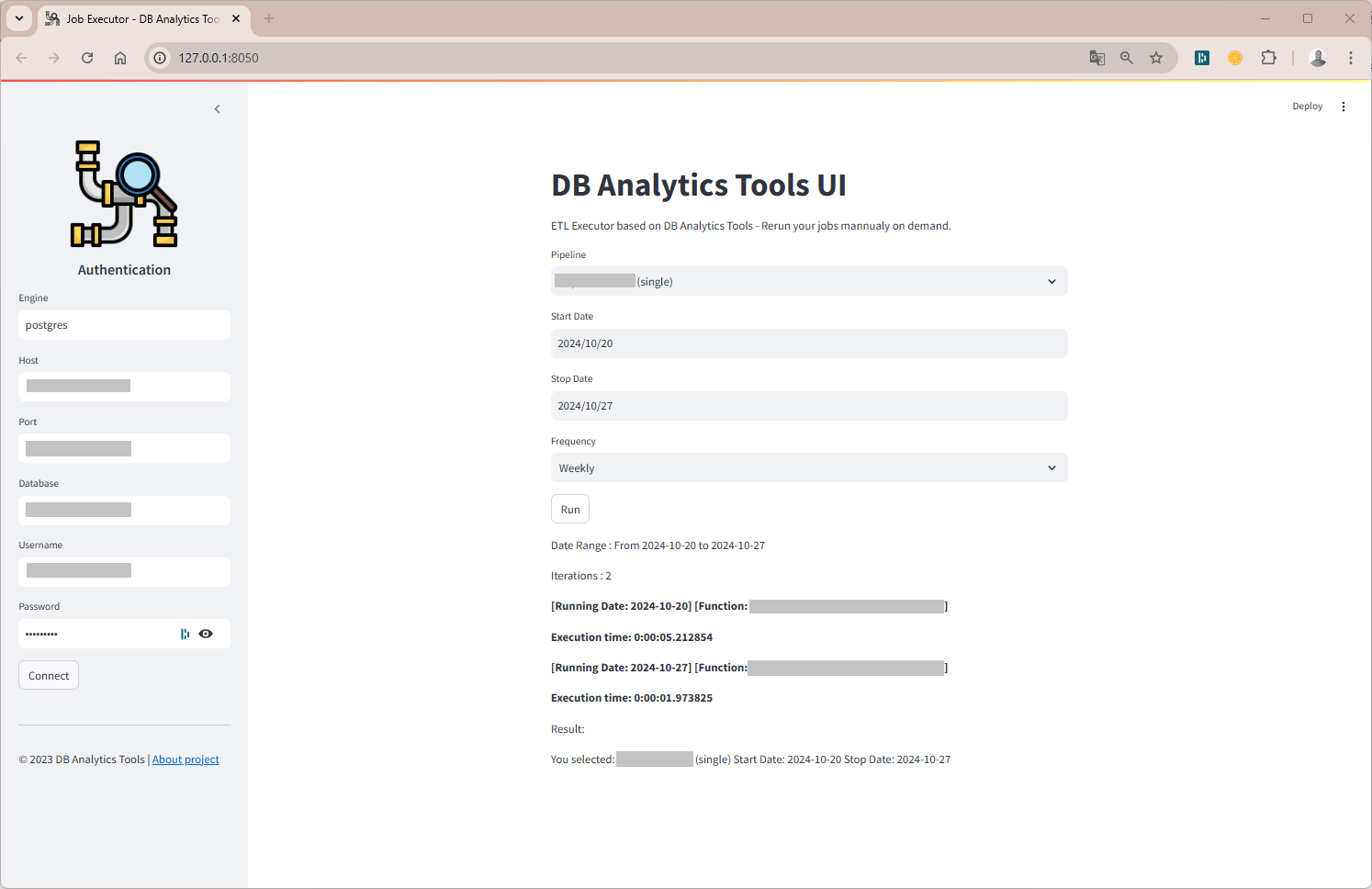

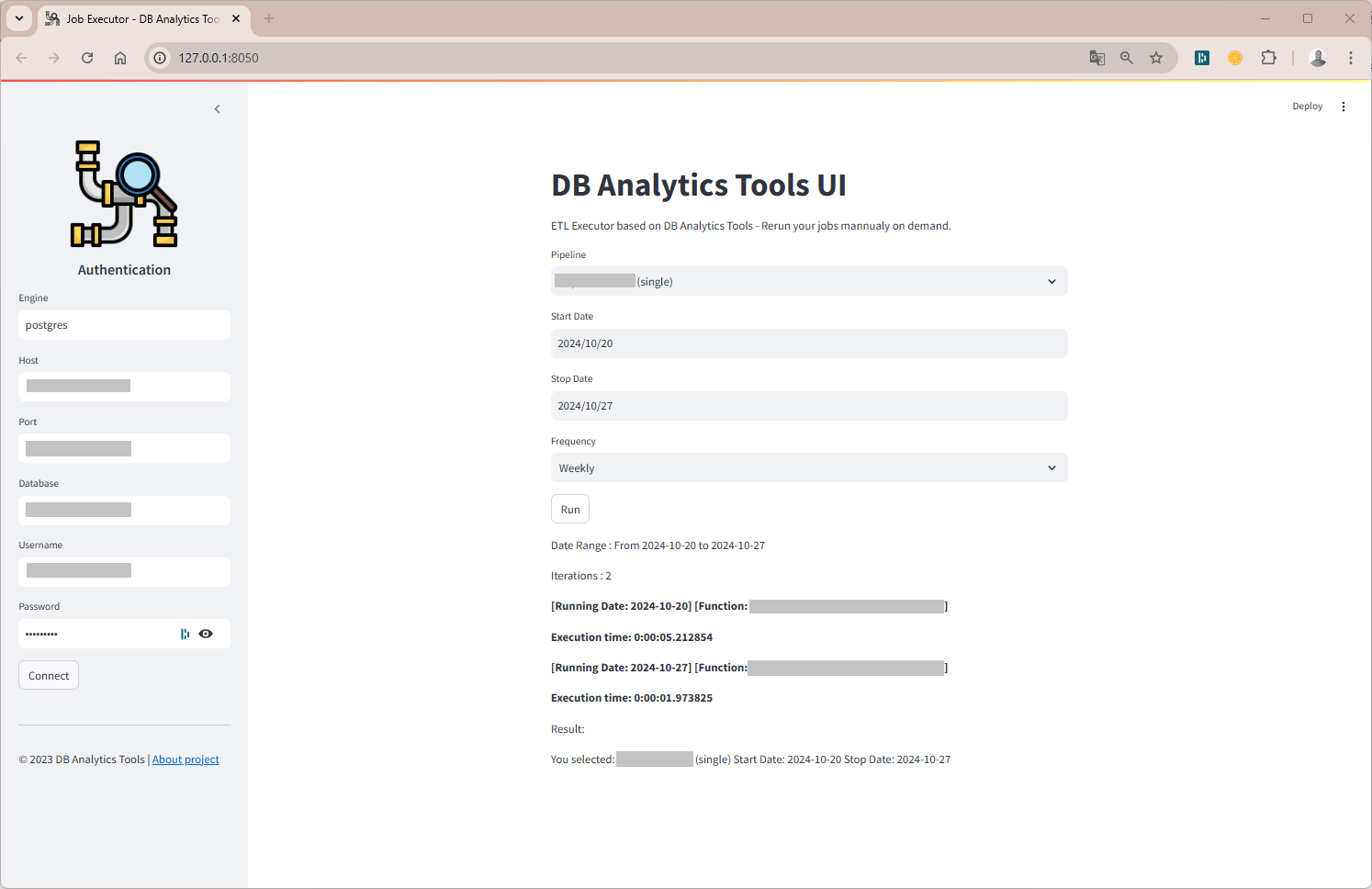

## Get started with the UI

DB Analytics Tools UI is a web-based GUI (`db_analytics_tools.webapp.UI`). No coding is required: all you need is a JSON config file. Run the command below:

```sh
db_tools start --config config.json --address 127.0.0.1 --port 8050
```

## Interact with Airflow

We also provide a class for interacting with the Apache Airflow REST API.

```py
# Import Airflow class
from db_analytics_tools.airflow import AirflowRESTAPI

# Airflow Config
AIRFLOW_BASE_URL = "http://localhost:8080"
AIRFLOW_API_ENDPOINT = "api/v2/"
AIRFLOW_USERNAME = "airflow"
AIRFLOW_PASSWORD = "airflow"

# Create an instance
airflow = AirflowRESTAPI(AIRFLOW_BASE_URL, AIRFLOW_API_ENDPOINT, AIRFLOW_USERNAME, AIRFLOW_PASSWORD)

# Get list of DAGs
airflow.get_dags_list(include_all=False).head(10)

# Get a DAG details
airflow.get_dag_details(dag_id="my_airflow_pipeline", include_tasks=False)

# Get list of tasks of a DAG
airflow.get_dag_tasks(dag_id="my_airflow_pipeline").head(10)

# Trigger a DAG
airflow.trigger_dag(dag_id="my_airflow_pipeline", start_date='2025-03-11', end_date='2025-03-12')

# Backfill a DAG
airflow.backfill_dag(dag_id="my_airflow_pipeline", start_date='2025-03-01', end_date='2025-03-12', reprocess_behavior="failed")
```
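We have not checked how `AirflowRESTAPI` builds its requests. Assuming it joins the base URL and the API endpoint, the listing and detail calls above would map onto Airflow REST API resource paths such as `dags`, `dags/{dag_id}` and `dags/{dag_id}/tasks`. A small sketch of that URL composition, with a hypothetical `api_url` helper that normalizes slashes so that `"api/v2/"` and `"/api/v2"` give the same result:

```python
def api_url(base_url, api_endpoint, resource):
    """Join base URL, API prefix and resource path with exactly one slash between parts."""
    parts = [base_url.rstrip("/"), api_endpoint.strip("/"), resource.strip("/")]
    return "/".join(part for part in parts if part)


BASE = "http://localhost:8080"
ENDPOINT = "api/v2/"
DAG_ID = "my_airflow_pipeline"

print(api_url(BASE, ENDPOINT, "dags"))                  # list DAGs
print(api_url(BASE, ENDPOINT, f"dags/{DAG_ID}"))        # DAG details
print(api_url(BASE, ENDPOINT, f"dags/{DAG_ID}/tasks"))  # tasks of a DAG
```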

## Forecasting

```py
# Import Forecast class
from db_analytics_tools.learning import ForecastKPI

# Create an instance
# df: historical data as a pandas DataFrame, here with a "dt" date column and an "active_1d" KPI column
forecast = ForecastKPI(historical_data=df, date_column="dt")

# Summary
print(forecast.describe())

# Decomposition
decomposition_result = forecast.decompose_time_series(kpi_name='active_1d', period=7, model='additive', plot=True)
print(decomposition_result.trend.head())
print(decomposition_result.seasonal.head())
```
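With `model='additive'`, each value is presumably split as trend + seasonal + residual. We have not checked whether `ForecastKPI` delegates to a library such as statsmodels' `seasonal_decompose`, whose result also exposes `.trend` and `.seasonal`. A minimal pure-Python sketch of classical additive decomposition for an odd period such as `period=7`: a centered moving average gives the trend, and the per-position average of the detrended values gives the seasonal pattern. The `additive_decompose` helper and the synthetic series are illustrative only.

```python
from statistics import mean


def additive_decompose(values, period):
    """Classical additive decomposition: values = trend + seasonal + residual.

    Sketch limited to odd periods (e.g. 7 for daily data with weekly seasonality).
    Trend and residual are None where the centered window does not fit.
    """
    if period % 2 == 0:
        raise ValueError("this sketch only handles odd periods")
    if len(values) < 2 * period:
        raise ValueError("need at least two full periods of data")
    n, half = len(values), period // 2

    # Trend: centered moving average over one full period
    trend = [None] * n
    for t in range(half, n - half):
        trend[t] = sum(values[t - half : t + half + 1]) / period

    # Seasonal: average detrended value at each position in the cycle
    buckets = [[] for _ in range(period)]
    for t, level in enumerate(trend):
        if level is not None:
            buckets[t % period].append(values[t] - level)
    raw = [mean(bucket) for bucket in buckets]
    offset = mean(raw)  # center the seasonal pattern on zero
    pattern = [s - offset for s in raw]
    seasonal = [pattern[t % period] for t in range(n)]

    # Residual: whatever trend and seasonality do not explain
    residual = [
        None if level is None else values[t] - level - seasonal[t]
        for t, level in enumerate(trend)
    ]
    return trend, seasonal, residual


# Synthetic daily KPI: linear growth plus a zero-sum weekly pattern
weekly = [3, -1, -2, 0, 1, 2, -3]
series = [t + weekly[t % 7] for t in range(28)]
trend, seasonal, residual = additive_decompose(series, period=7)
```

For a linear trend plus a zero-sum weekly pattern, this sketch recovers the trend exactly wherever the moving-average window fits, and the residual there is zero.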

## Documentation

Documentation is available at [https://joekakone.github.io/db-analytics-tools](https://joekakone.github.io/db-analytics-tools).

## Help and Support

If you need help with DB Analytics Tools, please send me a message on [WhatsApp](https://wa.me/+22891518923) or send me an [email](mailto:contact@josephkonka.com).

## Contributing

[Please see the contributing docs.](CONTRIBUTING.md)

## Maintainer

DB Analytics Tools is maintained by [Joseph Konka](https://www.linkedin.com/in/joseph-koami-konka/). Joseph is a data science professional with a focus on Python-based tools. He developed the base code while working at Togocom to automate his daily tasks. He packaged the code into a Python package called **SQL ETL Runner**, which later became **Databases Analytics Tools**. For more about Joseph Konka, please visit [www.josephkonka.com](https://josephkonka.com).

## Let's get in touch

[GitHub](https://github.com/joekakone) [LinkedIn](https://www.linkedin.com/in/joseph-koami-konka/) [Twitter](https://www.twitter.com/joekakone) [Email](mailto:joseph.kakone@gmail.com)

Raw data

{

"_id": null,

"home_page": "https://joekakone.github.io/#projects",

"name": "db-analytics-tools",

"maintainer": null,

"docs_url": null,

"requires_python": ">=3.10",

"maintainer_email": null,

"keywords": "databases analytics etl sql orc",

"author": "Joseph Konka",

"author_email": "joseph.kakone@gmail.com",

"download_url": "https://files.pythonhosted.org/packages/b2/22/19f519afab937837d344fee56420c4cfd78f536bd26a334126dc60a3c1b2/db_analytics_tools-0.1.8.2.tar.gz",

"platform": null,


"bugtrack_url": null,

"license": "MIT",

"summary": "Databases Tools for Data Analytics",

"version": "0.1.8.2",

"project_urls": {

"Bug Tracker": "https://github.com/joekakone/db-analytics-tools/issues",

"Documentation": "https://joekakone.github.io/db-analytics-tools",

"Download": "https://github.com/joekakone/db-analytics-tools",

"Homepage": "https://joekakone.github.io/#projects",

"Source Code": "https://github.com/joekakone/db-analytics-tools"

},

"split_keywords": [

"databases",

"analytics",

"etl",

"sql",

"orc"

],

"urls": [

{

"comment_text": null,

"digests": {

"blake2b_256": "7ac2a423d951c923e3c31d35f890cce581b40ef810b078807408f258314e416a",

"md5": "89fbc85bac2c8aaced271426b13f7df0",

"sha256": "228b7d67ac0fec58149bbf0f0ba979dd3ad8b66e8dd8e59d0e33d81bf17d3f6c"

},

"downloads": -1,

"filename": "db_analytics_tools-0.1.8.2-py3-none-any.whl",

"has_sig": false,

"md5_digest": "89fbc85bac2c8aaced271426b13f7df0",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.10",

"size": 35811,

"upload_time": "2025-11-06T21:16:21",

"upload_time_iso_8601": "2025-11-06T21:16:21.449268Z",

"url": "https://files.pythonhosted.org/packages/7a/c2/a423d951c923e3c31d35f890cce581b40ef810b078807408f258314e416a/db_analytics_tools-0.1.8.2-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": null,

"digests": {

"blake2b_256": "b22219f519afab937837d344fee56420c4cfd78f536bd26a334126dc60a3c1b2",

"md5": "5105041a407db73bcc60818e2a045a83",

"sha256": "80495dd4f0bb999a5ee64576f1201a7152c7b6dbd3cb712f12b3a02c45554dc0"

},

"downloads": -1,

"filename": "db_analytics_tools-0.1.8.2.tar.gz",

"has_sig": false,

"md5_digest": "5105041a407db73bcc60818e2a045a83",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.10",

"size": 36425,

"upload_time": "2025-11-06T21:16:22",

"upload_time_iso_8601": "2025-11-06T21:16:22.426635Z",

"url": "https://files.pythonhosted.org/packages/b2/22/19f519afab937837d344fee56420c4cfd78f536bd26a334126dc60a3c1b2/db_analytics_tools-0.1.8.2.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2025-11-06 21:16:22",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "joekakone",

"github_project": "db-analytics-tools",

"travis_ci": false,

"coveralls": false,

"github_actions": true,

"requirements": [

{

"name": "psycopg2-binary",

"specs": [

[

">=",

"2.9.9"

]

]

},

{

"name": "pyodbc",

"specs": [

[

">=",

"5.1.0"

]

]

},

{

"name": "pandas",

"specs": [

[

">=",

"2.2.1"

]

]

},

{

"name": "SQLAlchemy",

"specs": [

[

">=",

"2.0.29"

]

]

},

{

"name": "streamlit",

"specs": [

[

">=",

"1.32.2"

]

]

},

{

"name": "matplotlib",

"specs": [

[

">=",

"3.4.3"

]

]

},

{

"name": "requests",

"specs": [

[

">=",

"2.32.3"

]

]

}

],

"lcname": "db-analytics-tools"

}