distribute_crawler
==================

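A distributed web crawler built with Scrapy, Redis, MongoDB, and Graphite. A MongoDB cluster provides the underlying storage, Redis provides the distribution layer, and Graphite displays the crawler status.

This project is my exploratory implementation of a distributed web crawler for a vertical search engine. It includes a spider for http://www.woaidu.org/ that collects each book's title, author, cover image, summary, original URL, and download information, and downloads the book itself to local storage: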

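* Distribution is implemented with Redis. Redis stores the project's requests and stats, so crawlers running on different machines can be managed centrally, which removes the crawler's performance bottleneck. Because Redis is fast and easy to scale, high download throughput is easy to reach: if Redis storage or access speed becomes the bottleneck, scale out the Redis cluster and the crawler cluster.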

* 底层存储实现了两种方式:

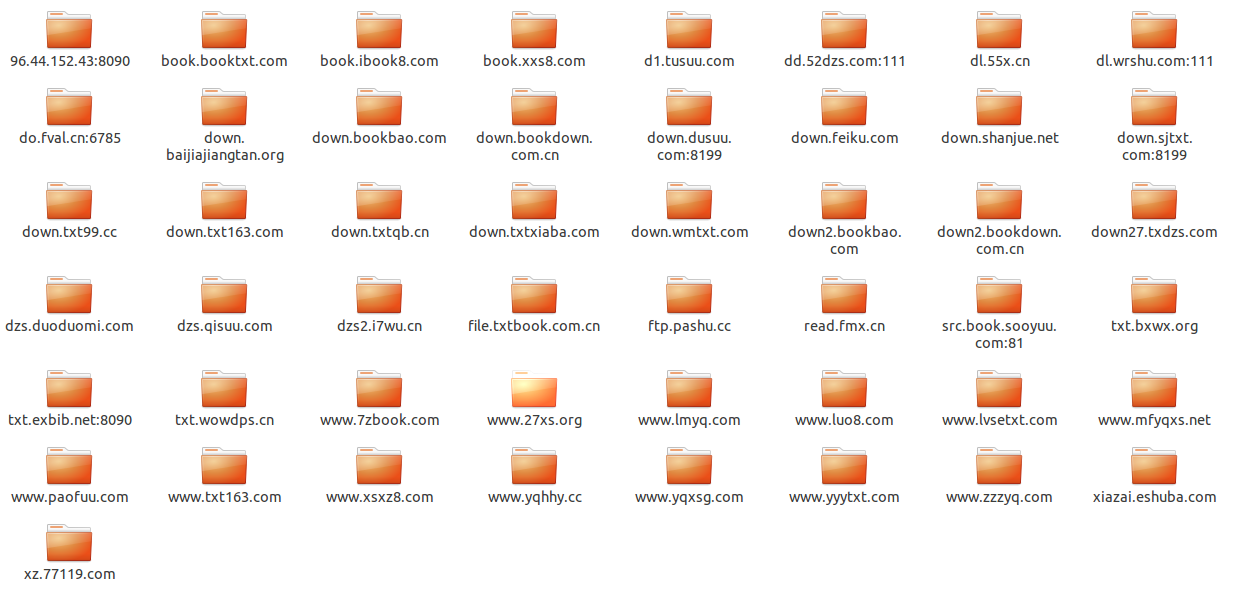

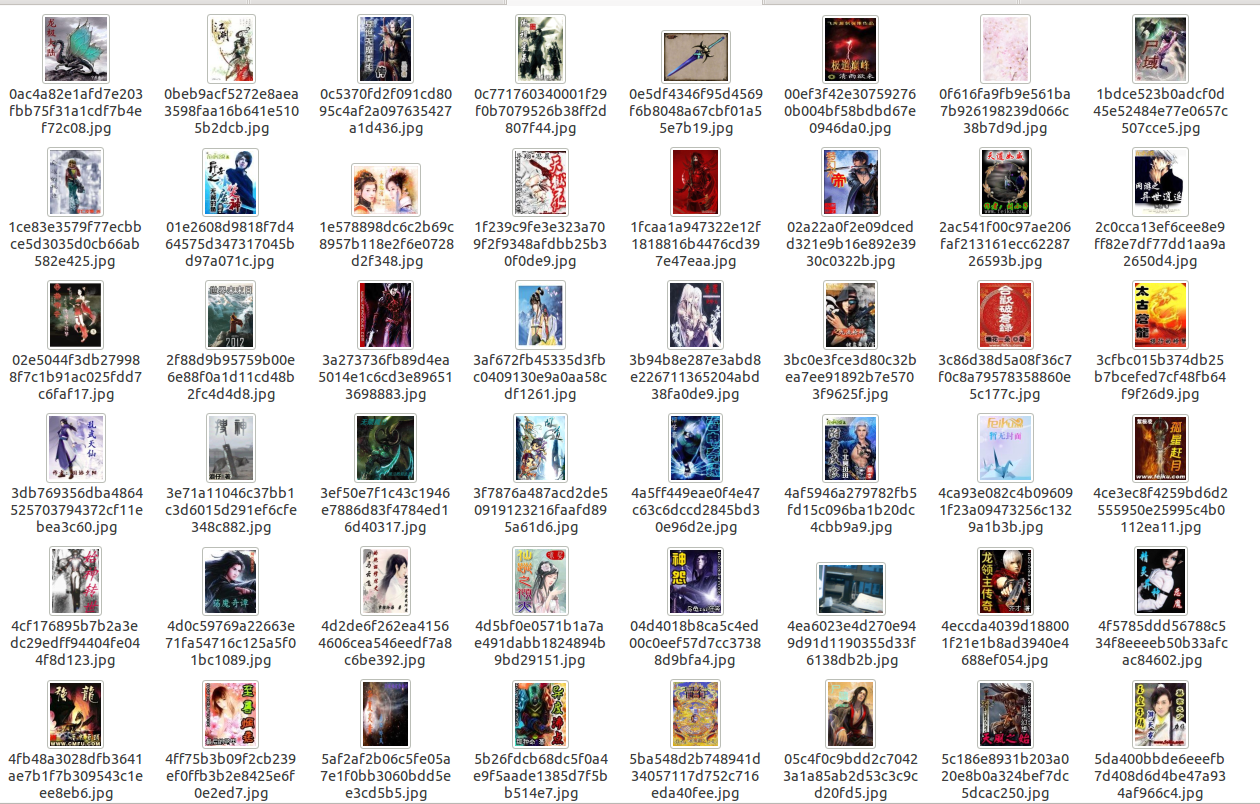

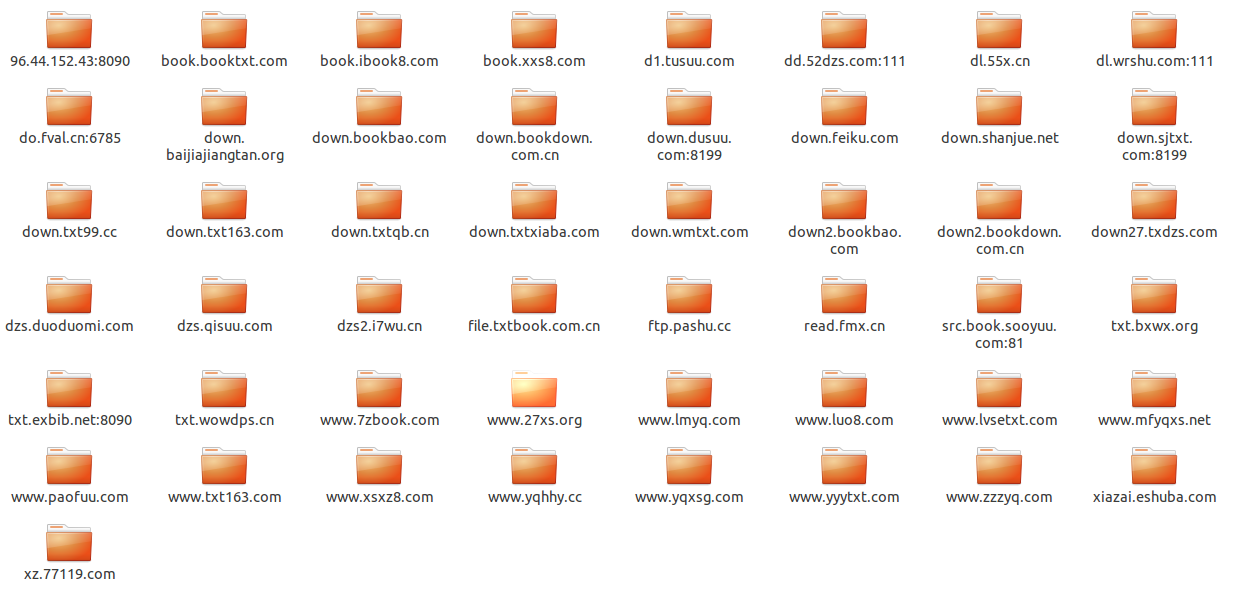

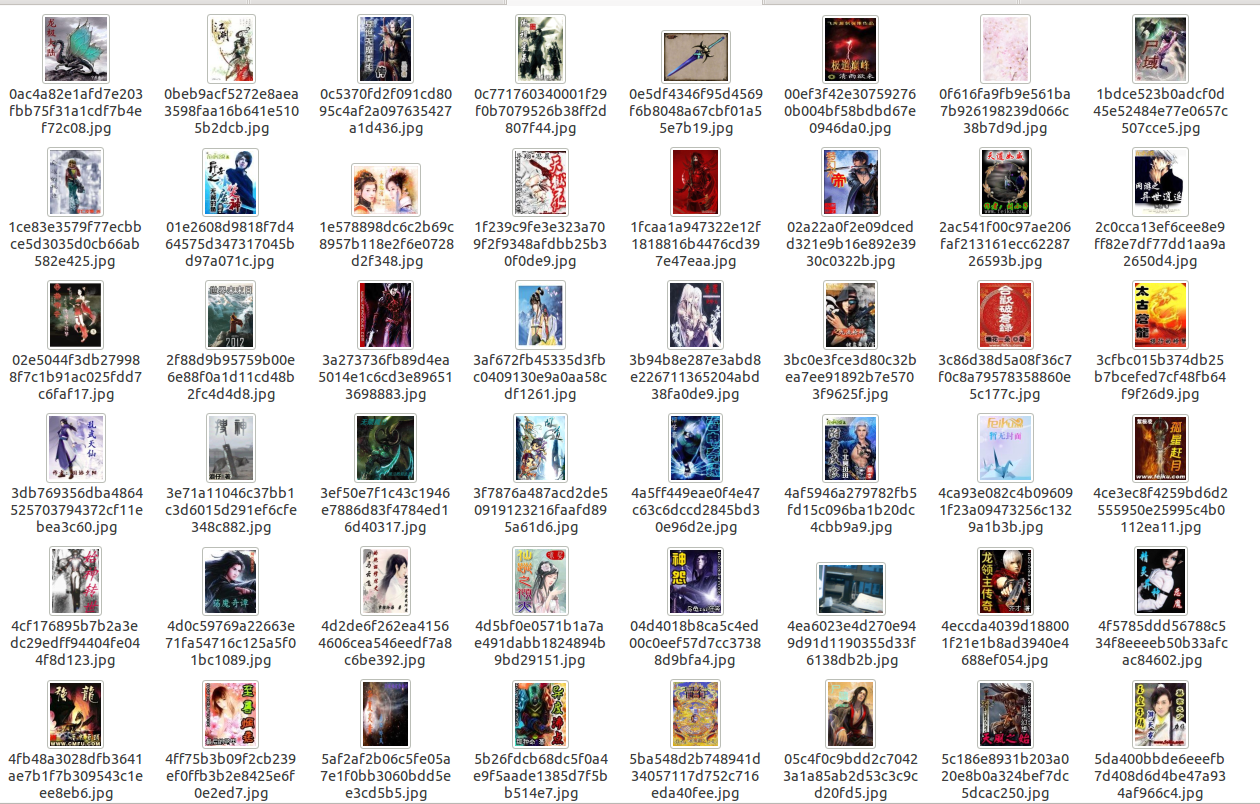

* 将书名,作者,书籍封面图片文件系统路径,书籍概要,原始网址链接,书籍下载信息,书籍文件系统路径保存到mongodb

中,此时mongodb使用单个服务器,对图片采用图片的url的hash值作为文件名进行存储,同时可以定制生成各种大小尺寸的缩略

图,对文件动态获得文件名,将其下载到本地,存储方式和图片类似,这样在每次下载之前会检查图片和文件是否曾经下载,对

已经下载的不再下载;

* 将书名,作者,书籍封面图片文件系统路径,书籍概要,原始网址链接,书籍下载信息,书籍保存到mongodb中,此时mongodb

采用mongodb集群进行存储,片键和索引的选择请看代码,文件采用mongodb的gridfs存储,图片仍然存储在文件系统中,在每次下载

之前会检查图片和文件是否曾经下载,对已经下载的不再下载;

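* Strategies to avoid getting the crawler banned:
    * Cookies are disabled
    * A download middleware that keeps rotating the User-Agent
    * A download middleware that can read data from the Google cache (disabled by default)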

* 调试策略的实现:

* 将系统log信息写到文件中

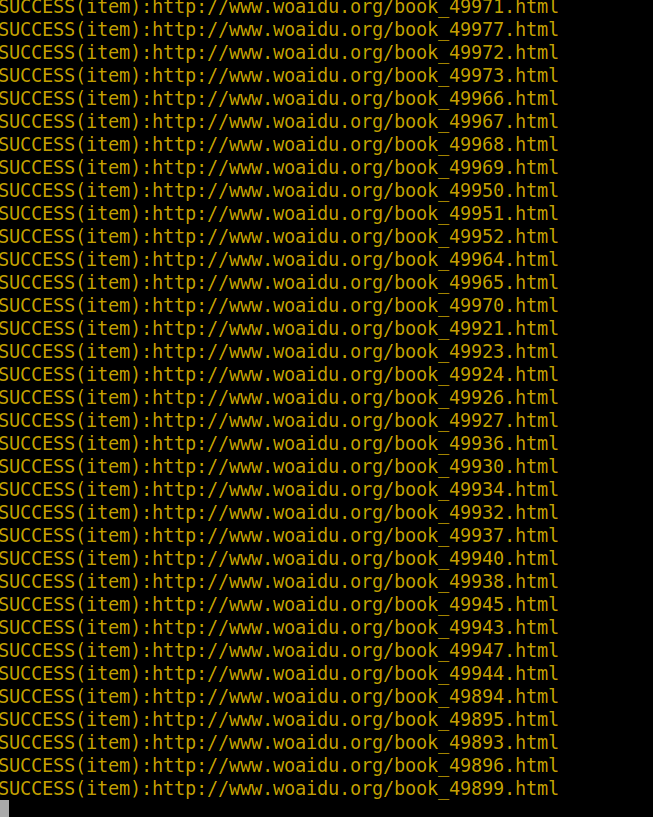

* 对重要的log信息(eg:drop item,success)采用彩色样式终端打印

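* File and item storage:
    * FilePipeline downloads files with the specified extensions to local storage
    * MongodbWoaiduBookFile stores files in a MongoDB cluster using GridFS
    * SingleMongodbPipeline and ShardMongodbPipeline save the scraped items to MongoDB in single-server and cluster mode respectively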

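* Dynamic control of the crawl rate:
    * The download delay is adjusted dynamically based on network latency, that is, on the measured response speed between the Scrapy server and the website
    * The maximum number of concurrent requests, the maximum number of concurrent requests per domain, and the number of items processed concurrently are configurable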

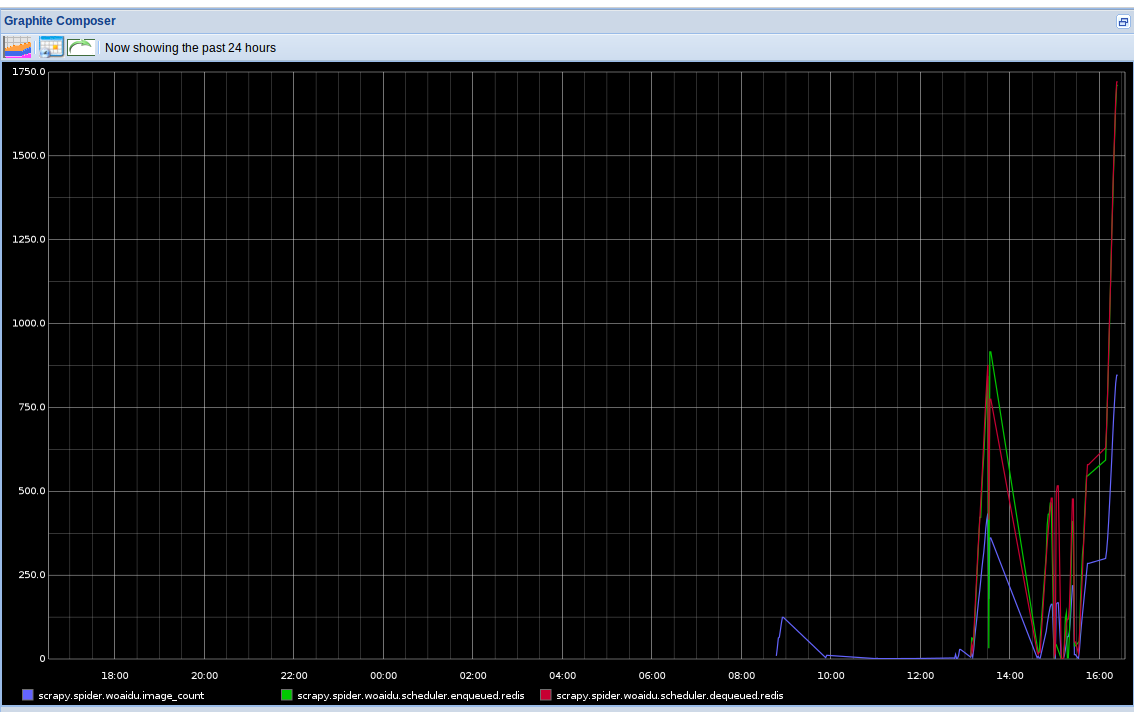

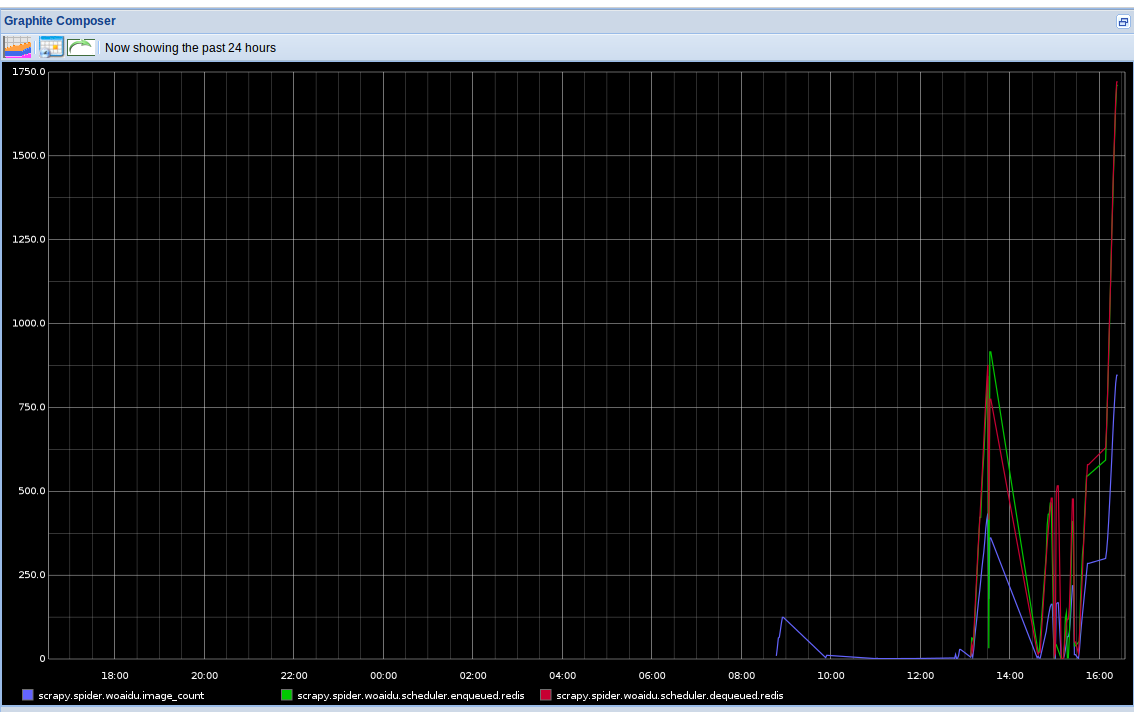
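The rate-control options listed above map naturally onto Scrapy's built-in settings. The following is a minimal sketch of a `settings.py` fragment. It assumes the project relies on Scrapy's AutoThrottle extension, which the README does not confirm, and every value shown is illustrative rather than taken from the project.

```python
# settings.py fragment (all values are illustrative assumptions)

# Let Scrapy adapt the download delay to the observed response latency.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 5.0   # initial delay, in seconds
AUTOTHROTTLE_MAX_DELAY = 60.0    # upper bound used when the site is slow

# Upper limits on parallel work.
CONCURRENT_REQUESTS = 16             # total requests in flight
CONCURRENT_REQUESTS_PER_DOMAIN = 8   # requests in flight per domain
CONCURRENT_ITEMS = 100               # items processed in parallel per response

# Cookies are disabled, matching the anti-ban strategies listed earlier.
COOKIES_ENABLED = False
```

Lower concurrency and a higher maximum delay are gentler on the target site; higher values trade politeness for throughput.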

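* Crawler status monitoring:
    * Crawler stats (number of requests, number of files downloaded, number of images downloaded, and so on) are saved to Redis
    * A stats collector designed for distributed crawling is implemented, and its results are displayed by Graphite as live, dynamically updated charts
* MongoDB cluster deployment: the commands directory contains init_sharding_mongodb.py, which makes it easy to deploy a cluster locally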
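To make the stats flow more concrete, here is a minimal sketch of turning a stats dictionary into Graphite's plaintext protocol, in which each line has the form `<metric path> <value> <timestamp>`. The function name, the `scrapy.woaidu` prefix, and the sample stat keys are hypothetical; the project's own collectors in statscol/graphite.py may work differently.

```python
import time


def to_graphite_lines(stats, prefix, timestamp=None):
    """Format the numeric entries of `stats` as Graphite plaintext lines."""
    ts = int(time.time() if timestamp is None else timestamp)
    lines = []
    for key, value in sorted(stats.items()):
        # Skip non-numeric values; bool is excluded because it subclasses int.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            continue
        # Graphite separates path components with dots.
        metric = key.replace("/", ".").replace(" ", "_")
        lines.append("%s.%s %s %d" % (prefix, metric, value, ts))
    return lines


# Hypothetical stats, shaped like the counters a crawler might keep.
sample = {"downloader/request_count": 120, "image_count": 35, "start_time": "n/a"}
lines = to_graphite_lines(sample, "scrapy.woaidu", timestamp=1700000000)
payload = "\n".join(lines) + "\n"
print(payload)
```

The resulting payload can be written over a TCP socket to Carbon's plaintext listener, which uses port 2003 by default.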

Required libraries
==================

* scrapy (preferably the latest version)
* graphite (for its configuration, see statscol/graphite.py)
* redis
* mongodb

Reusable components
===================

* Colored terminal output (utils/color.py)
* Script that builds a MongoDB cluster locally (commands/init_sharding_mongodb.py). Usage:

```
sudo python init_sharding_mongodb.py --path=/usr/bin
```

* Single-machine Graphite stats collector (statscol.graphite.GraphiteStatsCollector)
* Redis-based distributed Graphite stats collector (statscol.graphite.RedisGraphiteStatsCollector)
* Distributed processing for Scrapy (scrapy_redis)
* Rotating User-Agent download middleware (contrib.downloadmiddleware.rotate_useragent.RotateUserAgentMiddleware)
* Download middleware that reads from the Google cache (contrib.downloadmiddleware.google_cache.GoogleCacheMiddleware)
* Pipeline that downloads files of the specified types and avoids duplicate downloads (pipelines.file.FilePipeline)
* Pipeline that downloads files of the specified types and stores them in MongoDB GridFS (pipelines.file.MongodbWoaiduBookFile)
* Pipelines that store items in MongoDB (pipelines.mongodb.SingleMongodbPipeline and ShardMongodbPipeline)
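As an illustration of the rotating User-Agent idea, the sketch below shows a class with the `process_request(request, spider)` hook that Scrapy calls on downloader middlewares. It is not the project's RotateUserAgentMiddleware: the class name, the placeholder User-Agent strings, and the `FakeRequest` stand-in are all assumptions made so the example runs without Scrapy installed.

```python
import random


class RotateUserAgentSketch:
    """Middleware-style class that sets a random User-Agent on each request."""

    def __init__(self, user_agents, rng=None):
        if not user_agents:
            raise ValueError("user_agents must not be empty")
        self.user_agents = list(user_agents)
        self.rng = rng or random.Random()

    def process_request(self, request, spider):
        # Overwrite the header on every outgoing request.
        request.headers["User-Agent"] = self.rng.choice(self.user_agents)
        # Returning None lets the request continue through the download chain.
        return None


class FakeRequest:
    """Stand-in for a Scrapy request: only the attributes the sketch touches."""

    def __init__(self, url):
        self.url = url
        self.headers = {}


agents = ["ExampleBrowser/1.0", "ExampleBrowser/2.0"]  # placeholder strings
mw = RotateUserAgentSketch(agents, rng=random.Random(0))
req = FakeRequest("http://www.woaidu.org/")
result = mw.process_request(req, spider=None)
print(req.headers["User-Agent"])
```

In a real Scrapy project a class like this is enabled through the `DOWNLOADER_MIDDLEWARES` setting.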

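Usage
=====

# MongoDB cluster storage

* Install scrapy
* Install redis-py
* Install pymongo
* Install graphite (for configuration, see statscol/graphite.py)
* Install mongodb
* Install redis
* Download this project
* Start the redis server
* Set up the MongoDB cluster:

```
cd woaidu_crawler/commands/
sudo python init_sharding_mongodb.py --path=/usr/bin
```

* In the directory that contains the log folder, run:

```
scrapy crawl woaidu
```

* Open http://127.0.0.1/ to view the spider's live status in charts
* To try the distributed setup, run a second copy of this project from another directory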
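Running a second copy works because every crawler process talks to the same Redis instance. The sketch below illustrates that idea with a shared request queue and a shared "seen" set. It is not the bundled scrapy_redis code: the class names, key names, and book URLs are hypothetical, and `InMemoryRedis` is a tiny stand-in so the example runs without a Redis server. The three methods it exposes (`sadd`, `lpush`, `rpop`) mirror commands of the same name in redis-py.

```python
class InMemoryRedis:
    """Tiny stand-in exposing the three Redis commands the sketch needs."""

    def __init__(self):
        self.sets, self.lists = {}, {}

    def sadd(self, name, value):
        members = self.sets.setdefault(name, set())
        added = value not in members
        members.add(value)
        return int(added)  # like Redis: number of newly added members

    def lpush(self, name, value):
        self.lists.setdefault(name, []).insert(0, value)
        return len(self.lists[name])

    def rpop(self, name):
        items = self.lists.get(name)
        return items.pop() if items else None


class SharedFrontier:
    """URL queue plus seen-set kept in one store shared by all crawlers."""

    def __init__(self, client, key="woaidu"):
        self.client = client
        self.queue_key = key + ":requests"    # hypothetical key names
        self.seen_key = key + ":dupefilter"

    def push(self, url):
        # SADD returns 0 when any crawler has already recorded this URL.
        if self.client.sadd(self.seen_key, url) == 0:
            return False
        self.client.lpush(self.queue_key, url)
        return True

    def pop(self):
        return self.client.rpop(self.queue_key)


store = InMemoryRedis()
crawler_a, crawler_b = SharedFrontier(store), SharedFrontier(store)
crawler_a.push("http://www.woaidu.org/book_1.html")
duplicate = crawler_b.push("http://www.woaidu.org/book_1.html")  # dropped
crawler_b.push("http://www.woaidu.org/book_2.html")
popped = [crawler_a.pop(), crawler_b.pop(), crawler_a.pop()]
print(duplicate, popped)
```

With a real server, a `redis.Redis(...)` client could take the place of `InMemoryRedis`; note that redis-py returns bytes from `rpop` unless the client is created with `decode_responses=True`.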

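# MongoDB (single server)

* Install scrapy
* Install redis-py
* Install pymongo
* Install graphite (for configuration, see statscol/graphite.py)
* Install mongodb
* Install redis
* Download this project
* Start the redis server
* Set up the MongoDB server:

```
cd woaidu_crawler/commands/
python init_single_mongodb.py
```

* Edit settings.py:

```python
# Use SingleMongodbPipeline so that items are stored on a single MongoDB server.
ITEM_PIPELINES = [
    'woaidu_crawler.pipelines.cover_image.WoaiduCoverImage',
    'woaidu_crawler.pipelines.bookfile.WoaiduBookFile',
    'woaidu_crawler.pipelines.drop_none_download.DropNoneBookFile',
    'woaidu_crawler.pipelines.mongodb.SingleMongodbPipeline',
    'woaidu_crawler.pipelines.final_test.FinalTestPipeline',
]
```

* In the directory that contains the log folder, run:

```
scrapy crawl woaidu
```

* Open http://127.0.0.1/ (that is, the URL of your running graphite-web instance) to view the spider's live status in charts
* To try the distributed setup, run a second copy of this project from another directory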
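In this single-server mode, images and book files live on the local file system, named by a hash of their URL so that repeated downloads can be skipped. A minimal sketch of that check follows. The choice of SHA-1, the function names, the `.jpg` extension, and the cover URL are assumptions for illustration; the README only says that a hash of the URL is used.

```python
import hashlib
import os
import tempfile


def local_path_for(url, store_dir, ext=".jpg"):
    """Map a URL to a stable file path named after the SHA-1 of the URL."""
    digest = hashlib.sha1(url.encode("utf-8")).hexdigest()
    return os.path.join(store_dir, digest + ext)


def fetch_once(url, store_dir, download):
    """Call download(url) only if no file for this URL exists yet."""
    path = local_path_for(url, store_dir)
    if os.path.exists(path):
        return path, False  # already stored, skip the download
    data = download(url)
    with open(path, "wb") as fh:
        fh.write(data)
    return path, True


calls = []


def fake_download(url):
    """Pretend downloader that records each call instead of using the network."""
    calls.append(url)
    return b"fake image bytes"


with tempfile.TemporaryDirectory() as store:
    cover = "http://www.woaidu.org/cover/example.jpg"  # hypothetical URL
    first = fetch_once(cover, store, fake_download)
    second = fetch_once(cover, store, fake_download)
    print(first[1], second[1], len(calls))
```

Because the file name depends only on the URL, the existence check needs no database lookup, and it survives crawler restarts.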

Note
====

After every run, execute commands/clear_stats.py to clear the stats stored in Redis:

```
python clear_stats.py
```
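For readers curious what such a cleanup step might involve, here is a hedged sketch of deleting stats keys by pattern. It is not the contents of clear_stats.py: the function name and the `*:stats` key pattern are assumptions, and the real script may target different keys. The `scan_iter` and `delete` calls correspond to methods of the same name on a redis-py client.

```python
def clear_stats(client, pattern="*:stats"):
    """Delete every key matching `pattern`; return how many were removed."""
    # scan_iter walks the keyspace incrementally instead of blocking the server.
    keys = list(client.scan_iter(match=pattern))
    if not keys:
        return 0
    return client.delete(*keys)


# With a live server this could be called as, for example:
#   import redis
#   removed = clear_stats(redis.Redis(host="localhost", port=6379))
```

Passing the client in as a parameter keeps the function easy to exercise against a stub before pointing it at a real Redis instance.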

Screenshots
===========

Raw data

{

"_id": null,

"home_page": "https://github.com/yanjlee/distribute_crawler",

"name": "distribute-crawler",

"maintainer": null,

"docs_url": null,

"requires_python": null,

"maintainer_email": null,

"keywords": null,

"author": "yanjlee",

"author_email": "yanjlee@163.com",

"download_url": "https://files.pythonhosted.org/packages/72/86/d919dd036ebb4dce202bb65a2b21e1143ff18256eb24b41011cac8f530b6/distribute_crawler-1.0.1.tar.gz",

"platform": null,

"bugtrack_url": null,

"license": null,

"summary": "\u4f7f\u7528scrapy,redis, mongodb,graphite\u5b9e\u73b0\u7684\u4e00\u4e2a\u5206\u5e03\u5f0f\u7f51\u7edc\u722c\u866b,\u5e95\u5c42\u5b58\u50a8mongodb\u96c6\u7fa4,\u5206\u5e03\u5f0f\u4f7f\u7528redis\u5b9e\u73b0,\u722c\u866b\u72b6\u6001\u663e\u793a\u4f7f\u7528graphite\u5b9e\u73b0\u3002",

"version": "1.0.1",

"project_urls": {

"Homepage": "https://github.com/yanjlee/distribute_crawler"

},

"split_keywords": [],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "4d9e464b3961f38c536bfa534e7d3876da2fdb6ad768d2f9ffa34b081bbf2499",

"md5": "268bb34fdc08614d06761bb0be2cd08b",

"sha256": "3a7632db72d53a4ba54f3938221bbc8742499abb3f8fe2628b992ea6a358048a"

},

"downloads": -1,

"filename": "distribute_crawler-1.0.1-py3-none-any.whl",

"has_sig": false,

"md5_digest": "268bb34fdc08614d06761bb0be2cd08b",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": null,

"size": 4812,

"upload_time": "2024-06-01T03:35:12",

"upload_time_iso_8601": "2024-06-01T03:35:12.674413Z",

"url": "https://files.pythonhosted.org/packages/4d/9e/464b3961f38c536bfa534e7d3876da2fdb6ad768d2f9ffa34b081bbf2499/distribute_crawler-1.0.1-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "7286d919dd036ebb4dce202bb65a2b21e1143ff18256eb24b41011cac8f530b6",

"md5": "5edd737d5c3d1ff77dffaf3950f45072",

"sha256": "d0bfaf055d55fca73ceefc5f68fccdfda69cc3e1ba2f6655cf9e1edf0607472c"

},

"downloads": -1,

"filename": "distribute_crawler-1.0.1.tar.gz",

"has_sig": false,

"md5_digest": "5edd737d5c3d1ff77dffaf3950f45072",

"packagetype": "sdist",

"python_version": "source",

"requires_python": null,

"size": 5669,

"upload_time": "2024-06-01T03:35:15",

"upload_time_iso_8601": "2024-06-01T03:35:15.397353Z",

"url": "https://files.pythonhosted.org/packages/72/86/d919dd036ebb4dce202bb65a2b21e1143ff18256eb24b41011cac8f530b6/distribute_crawler-1.0.1.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-06-01 03:35:15",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "yanjlee",

"github_project": "distribute_crawler",

"travis_ci": false,

"coveralls": false,

"github_actions": false,

"lcname": "distribute-crawler"

}