| Field | Value |
| --- | --- |
| Name | first-breaks-picking-gpu |
| Version | 0.7.4 |
| Home page | None |
| Summary | Project is devoted to pick waves that are the first to be detected on a seismogram with neural network (CUDA accelerated) |
| Upload time | 2024-05-31 07:46:26 |
| Maintainer | None |
| Docs URL | None |
| Author | Aleksei Tarasov |
| Requires Python | >=3.8 |
| License | Apache License, Version 2.0, January 2004 (http://www.apache.org/licenses/) |
| Keywords | seismic, first-breaks, computer-vision, deep-learning, segmentation, data-science |
| Requirements | No requirements were recorded. |

# FirstBreaksPicking

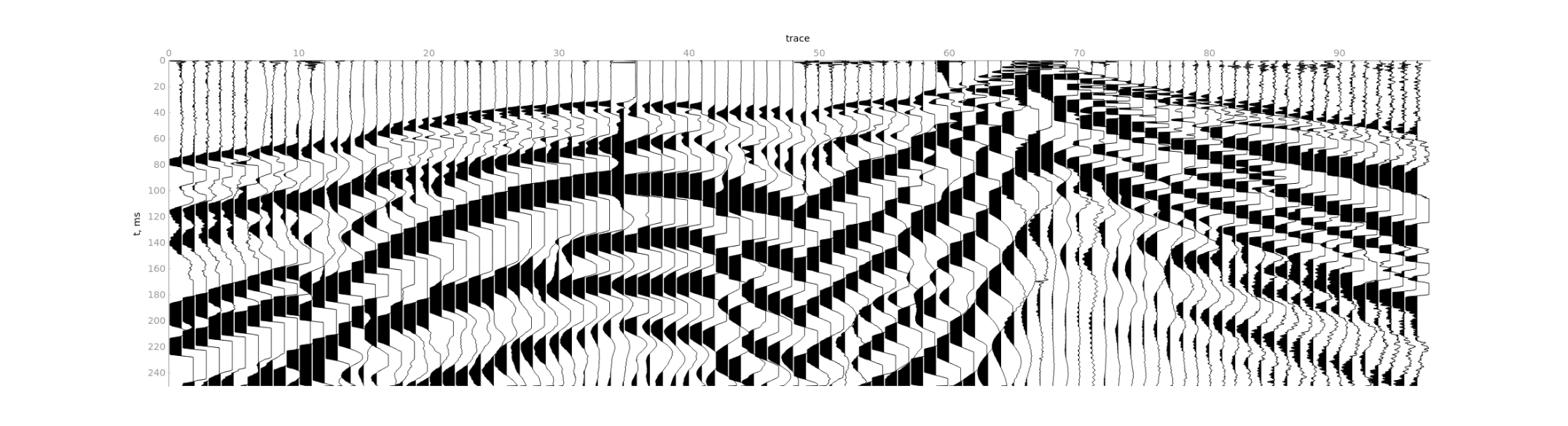

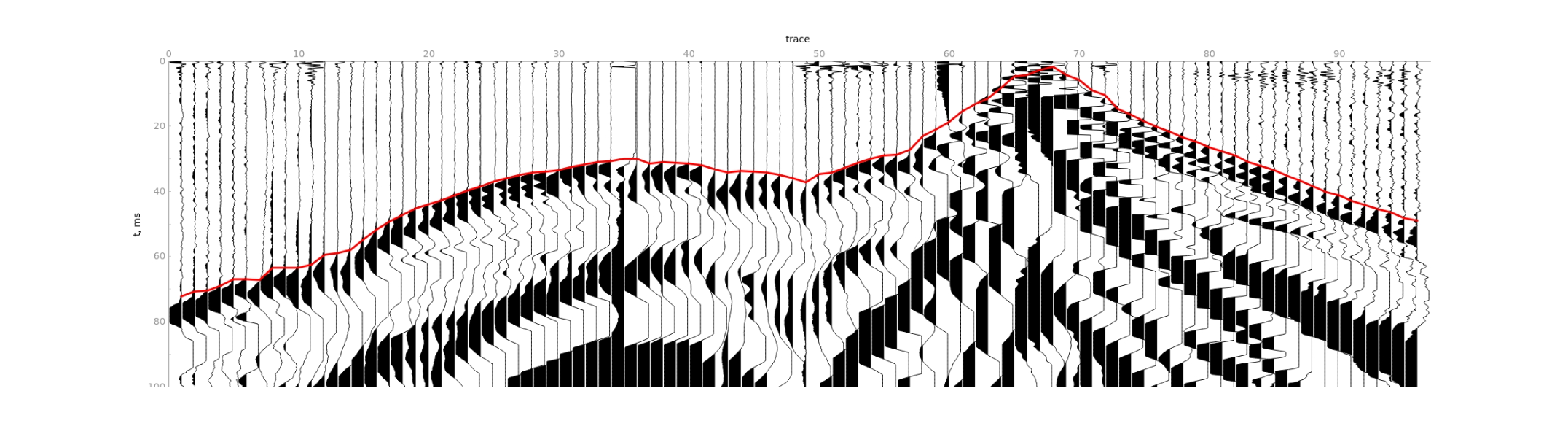

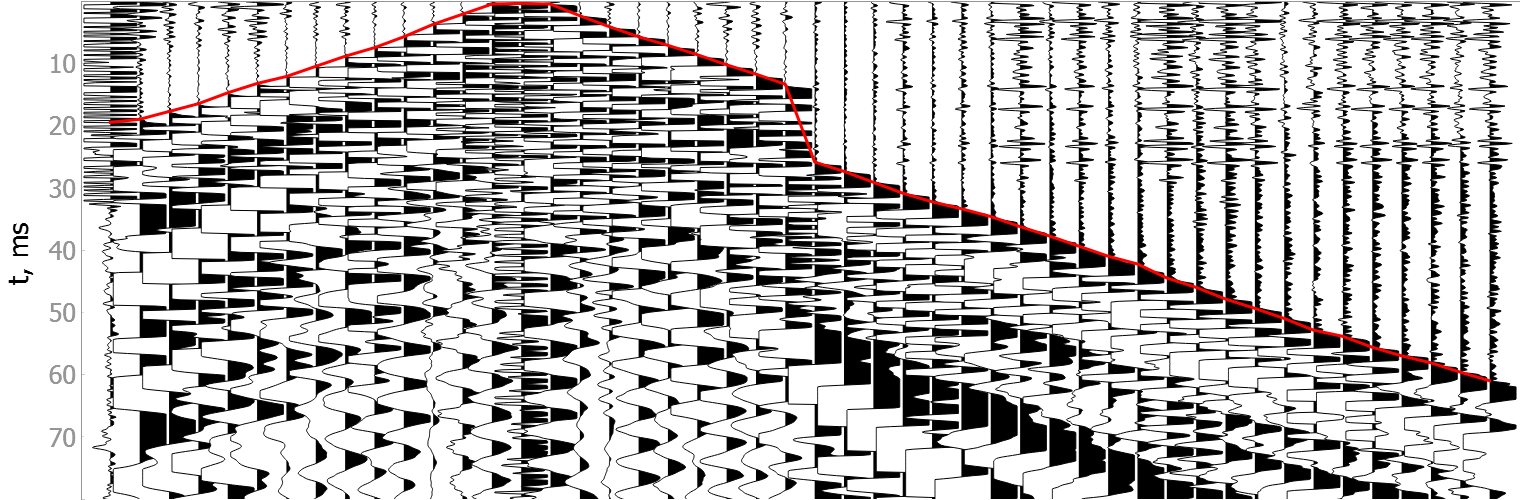

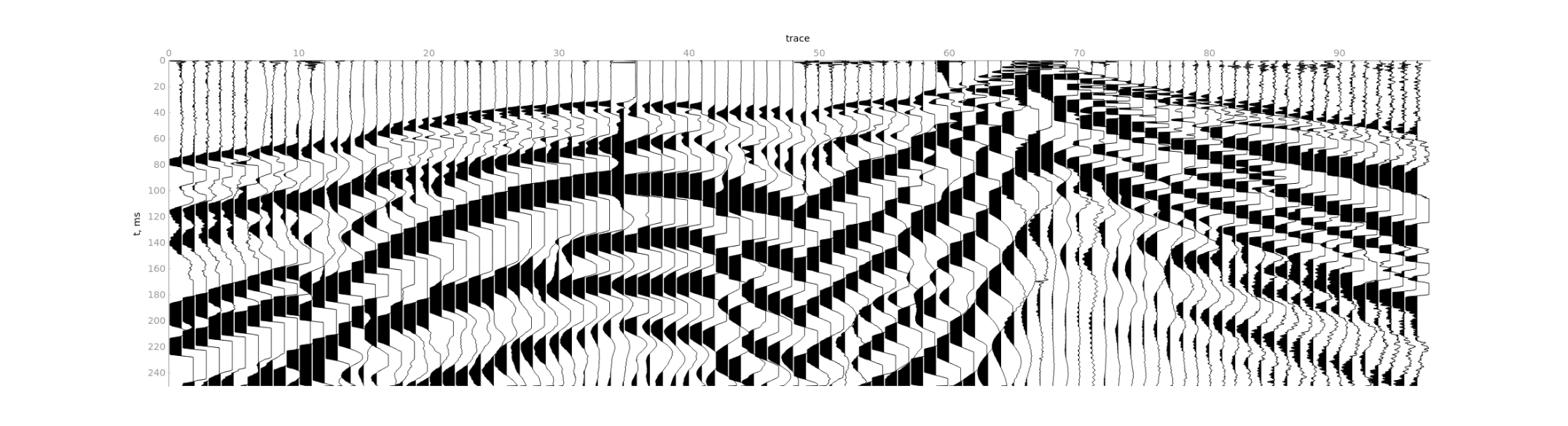

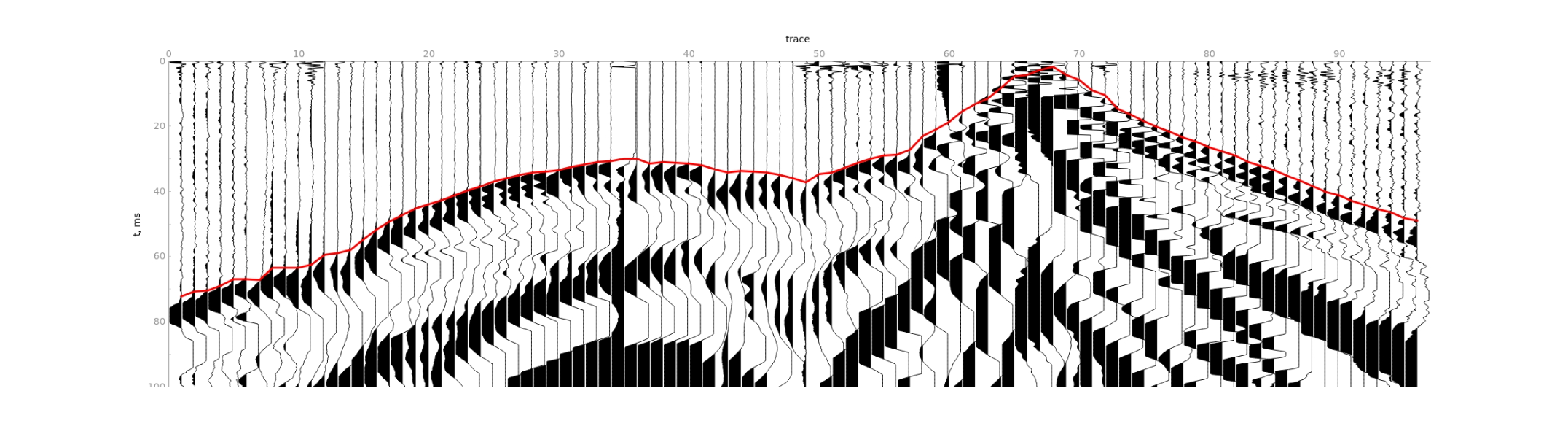

This project is devoted to picking the waves that are detected first on a seismogram (first breaks, first arrivals).

Traditionally, this procedure is performed manually. When processing field data, the number of picks reaches hundreds of thousands. Existing analytical methods can automate picking only on high-quality data with a high signal-to-noise ratio.

As a more robust alternative, we propose using a neural network to pick the first breaks. Since adjacent seismic traces share similar wave-field features, **we pick first breaks on 2D seismic gathers**, not on individual traces.

<p align="center">

<img src="https://raw.githubusercontent.com/DaloroAT/first_breaks_picking/main/docs/readme_images/project_preview.png" />

</p>

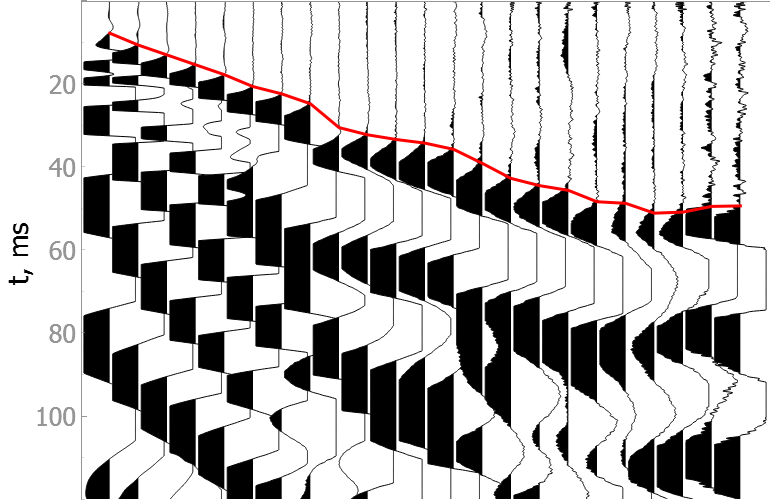

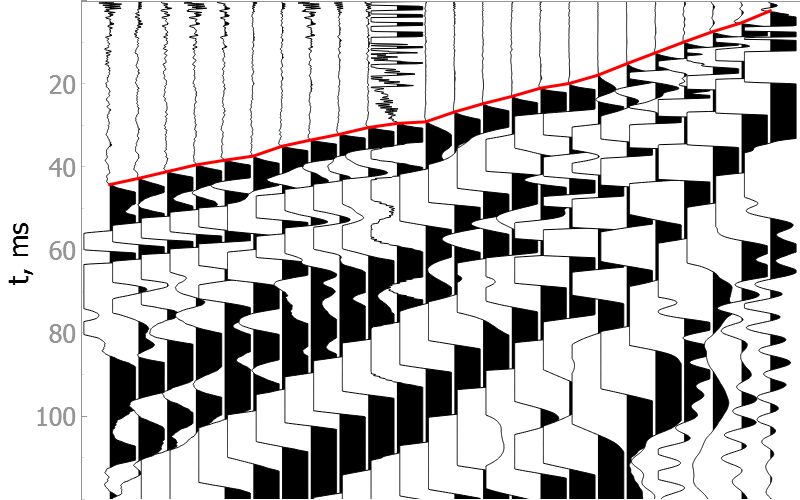

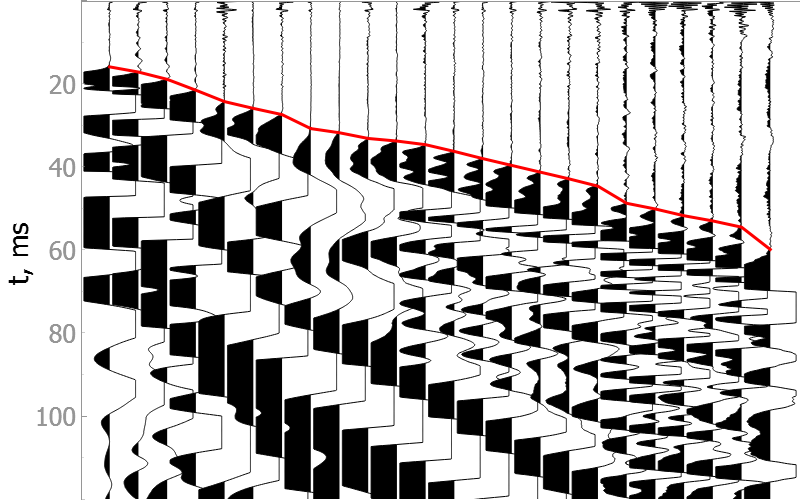

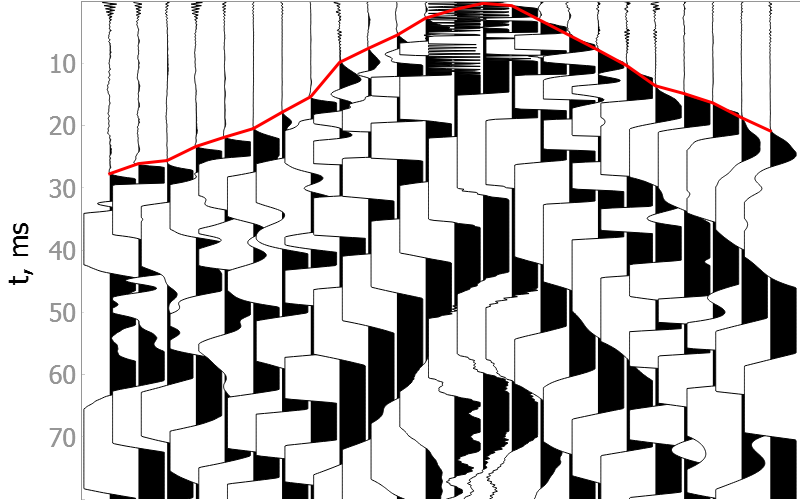

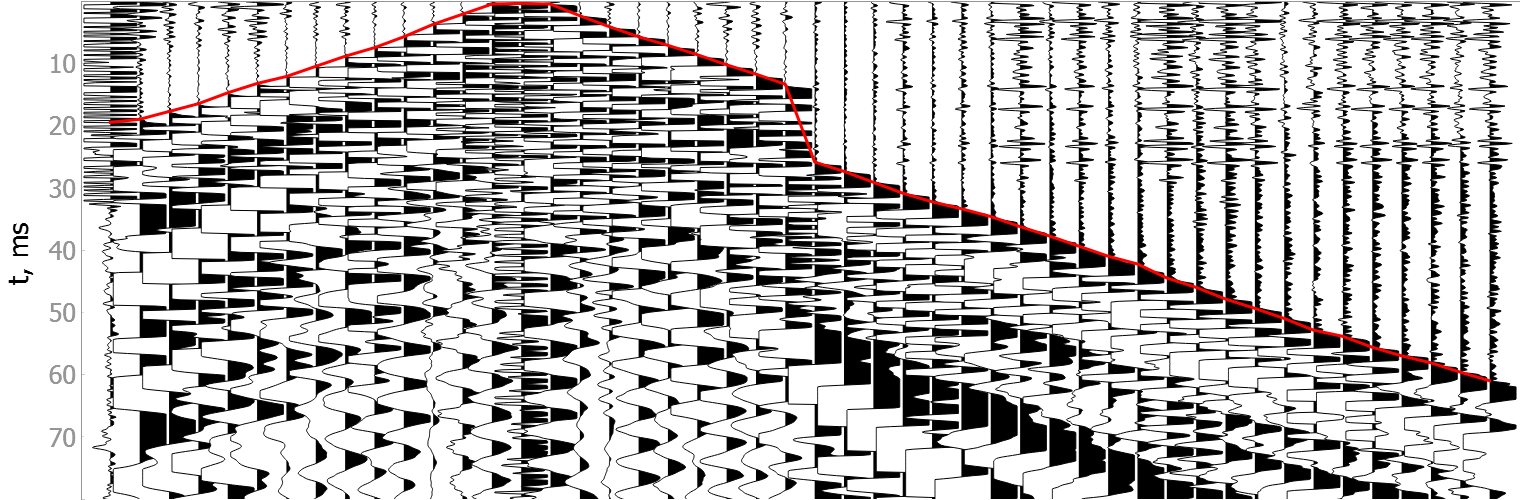

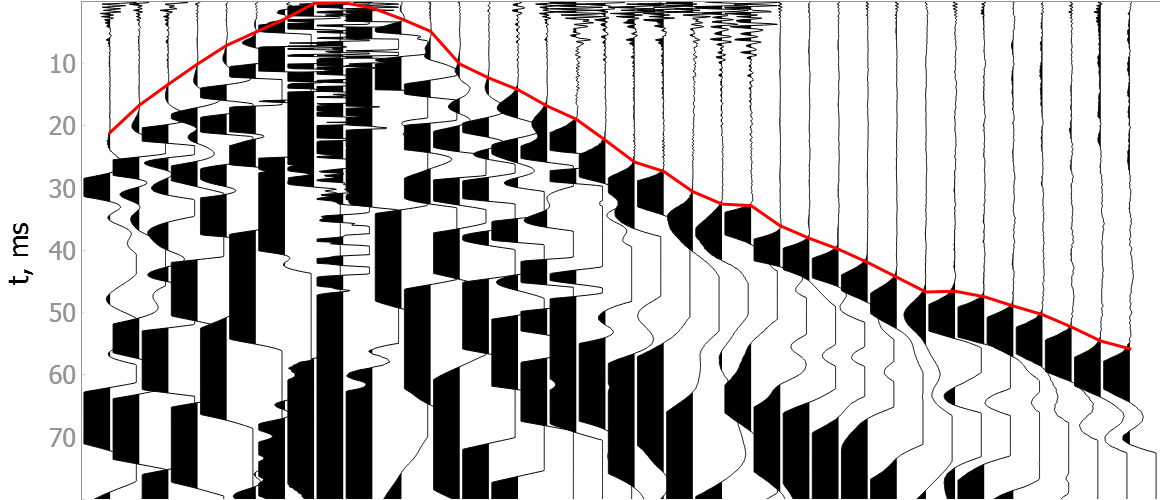

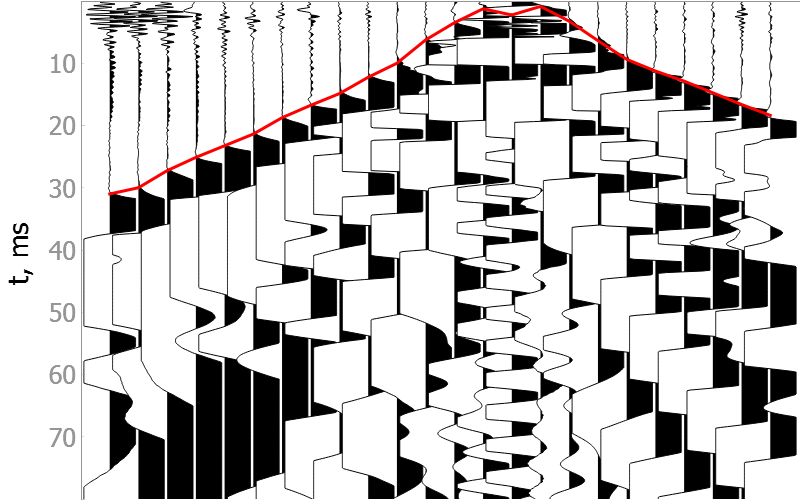

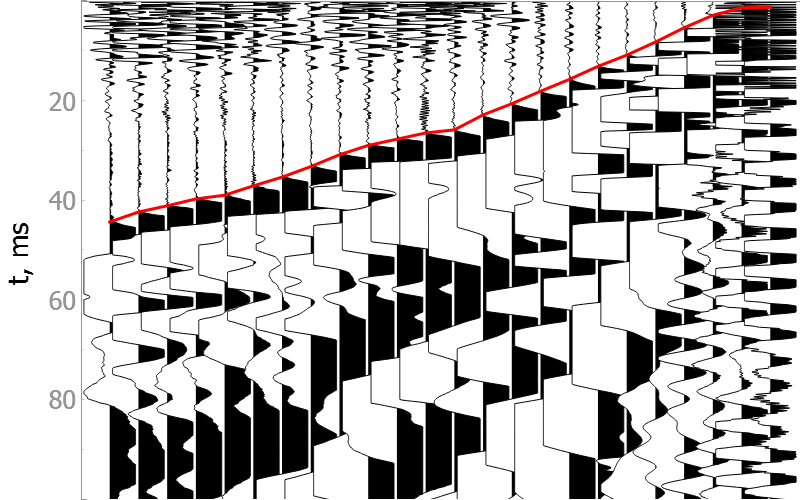

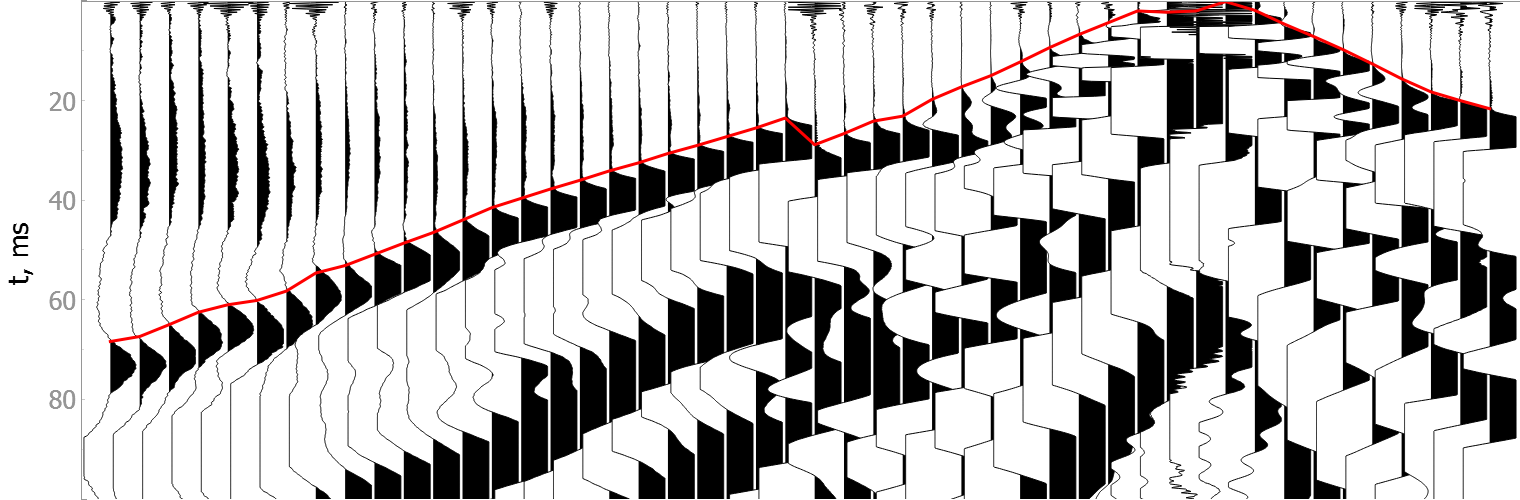

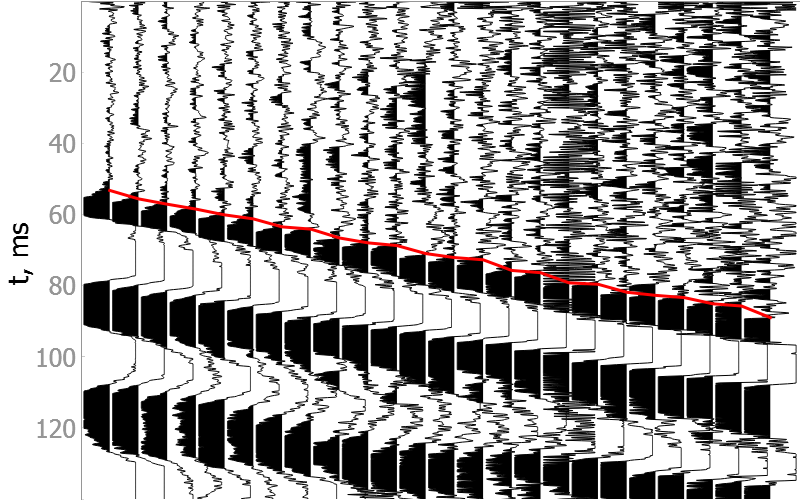

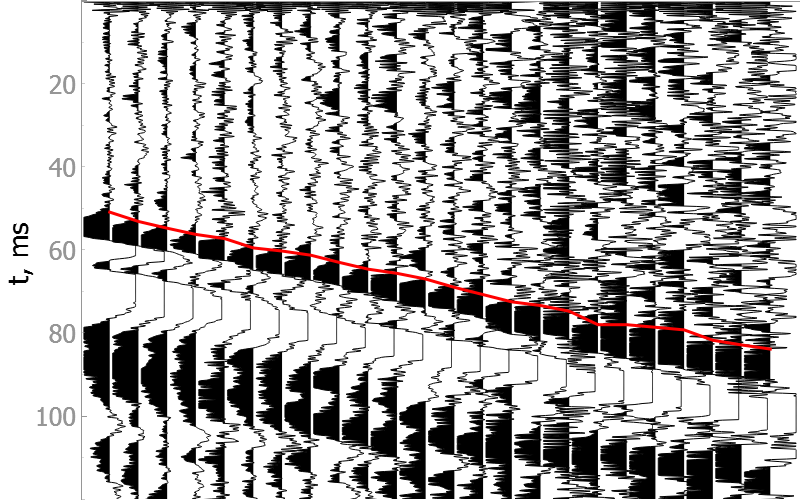

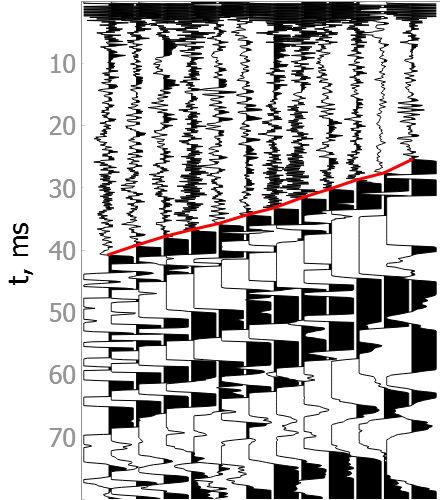

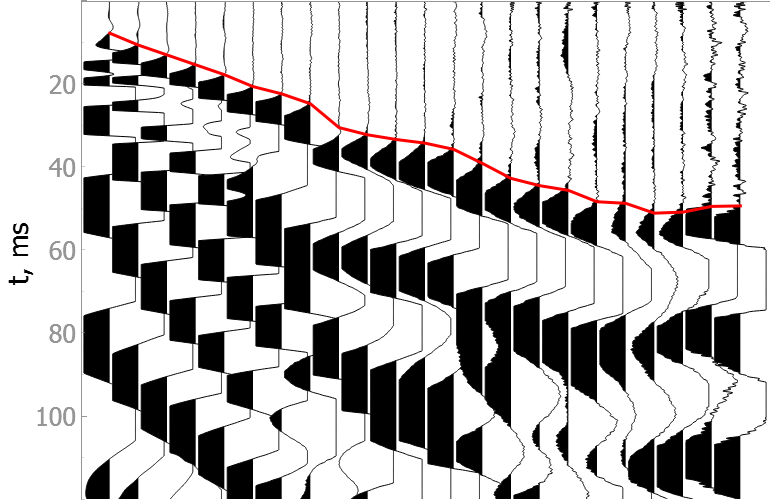

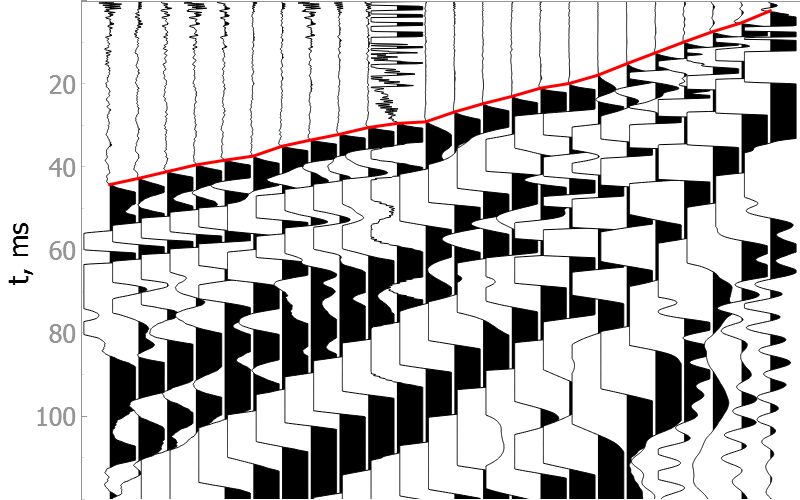

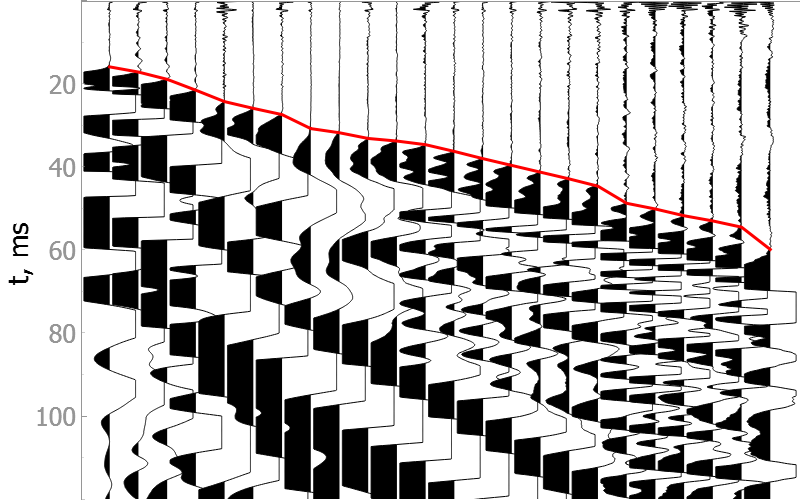

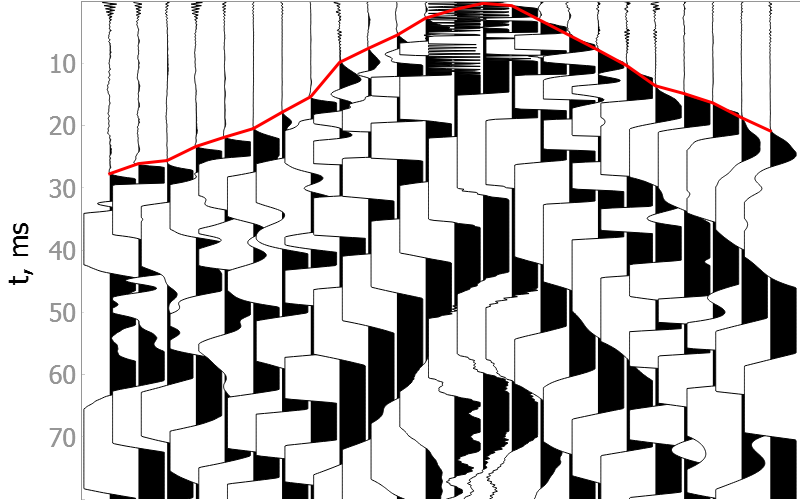

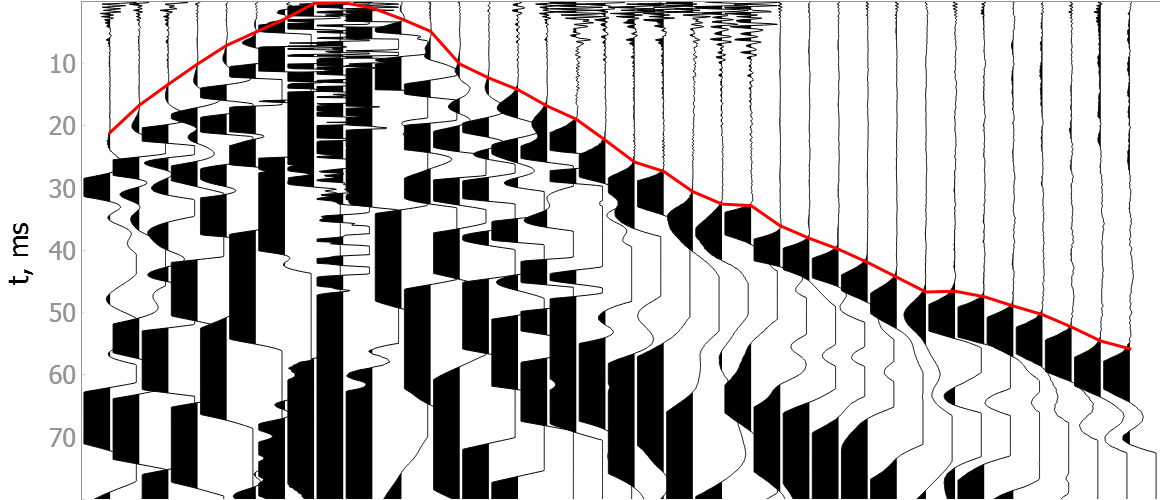

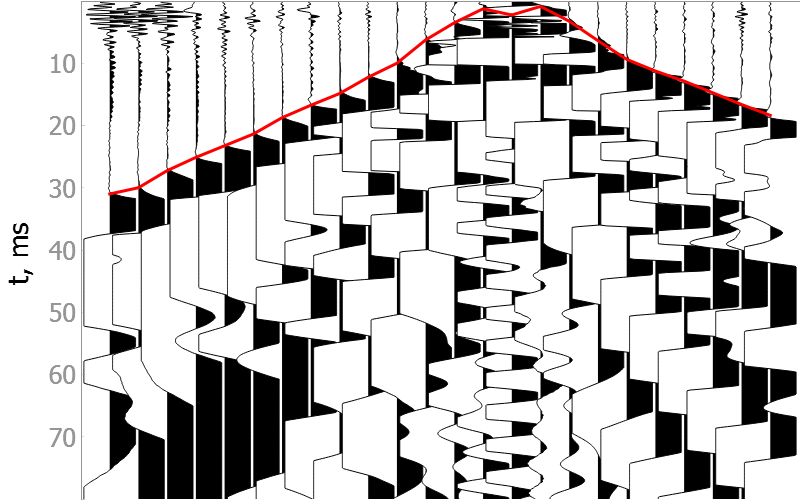

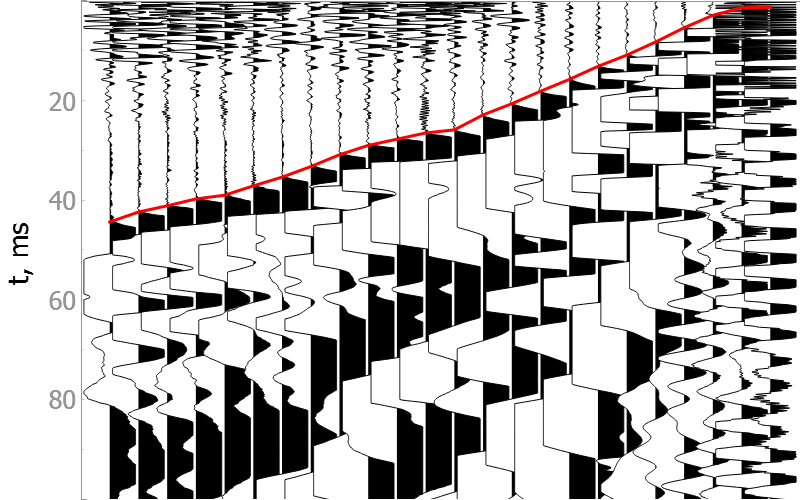

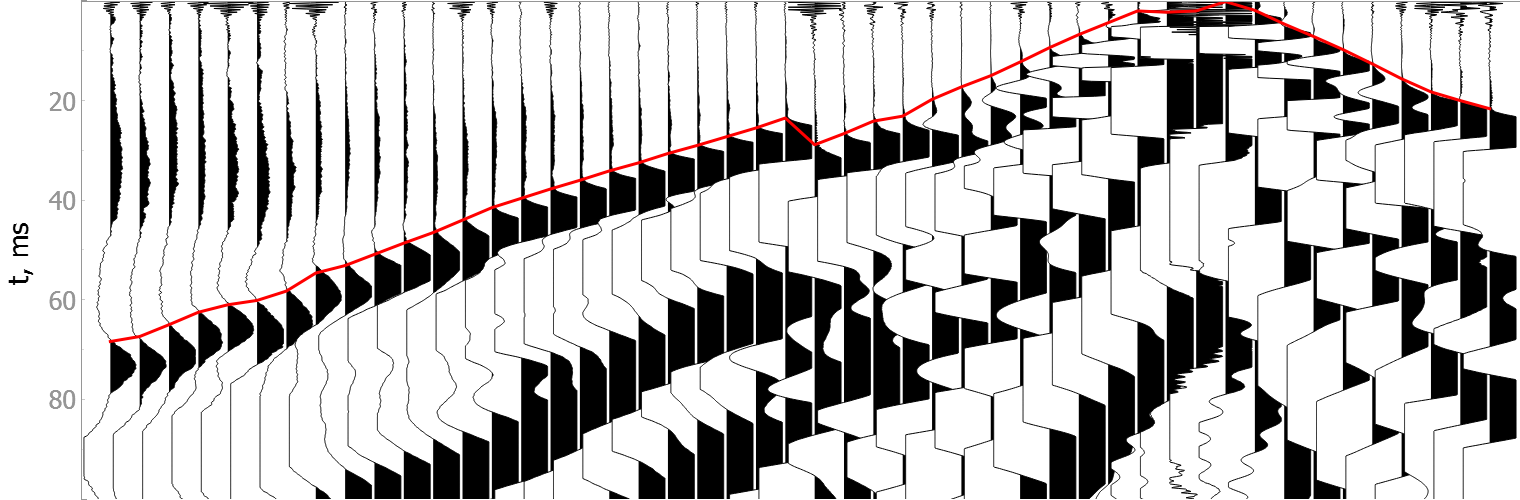

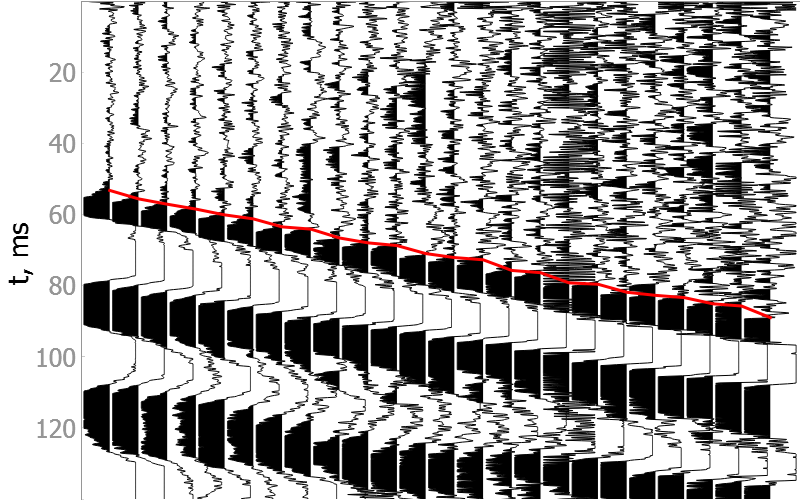

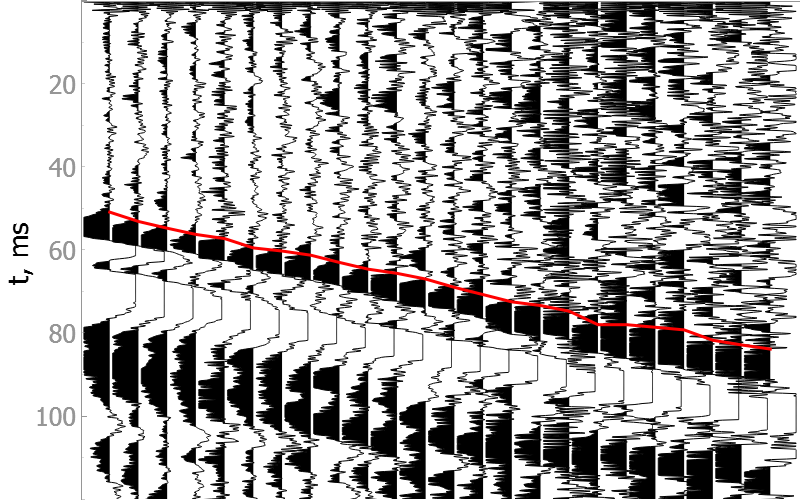

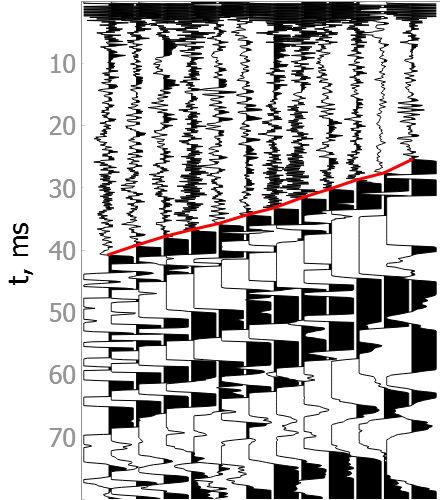

# Examples

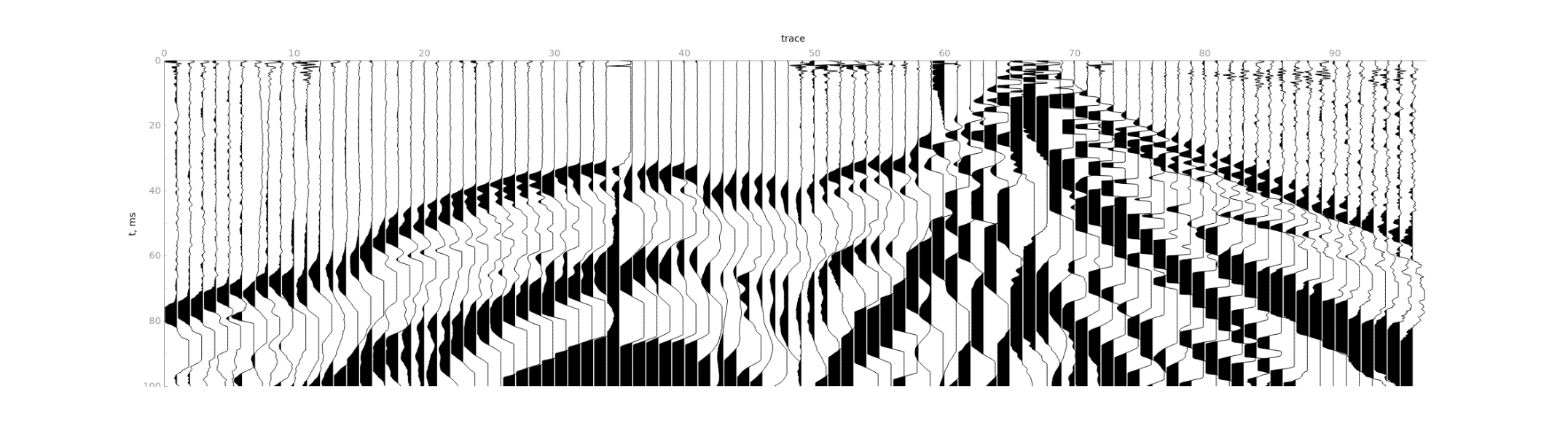

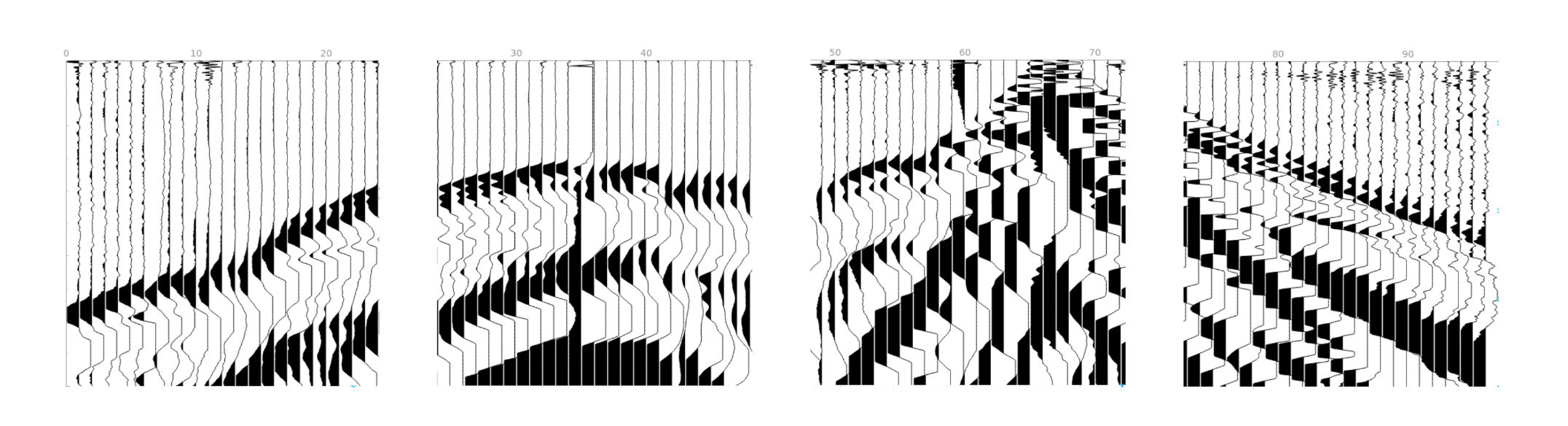

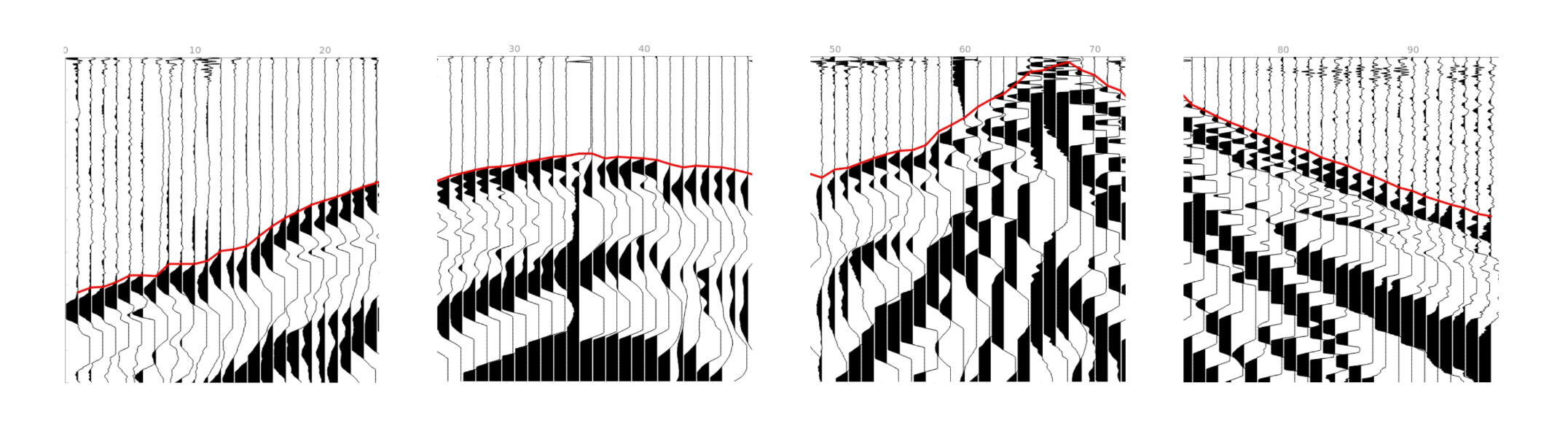

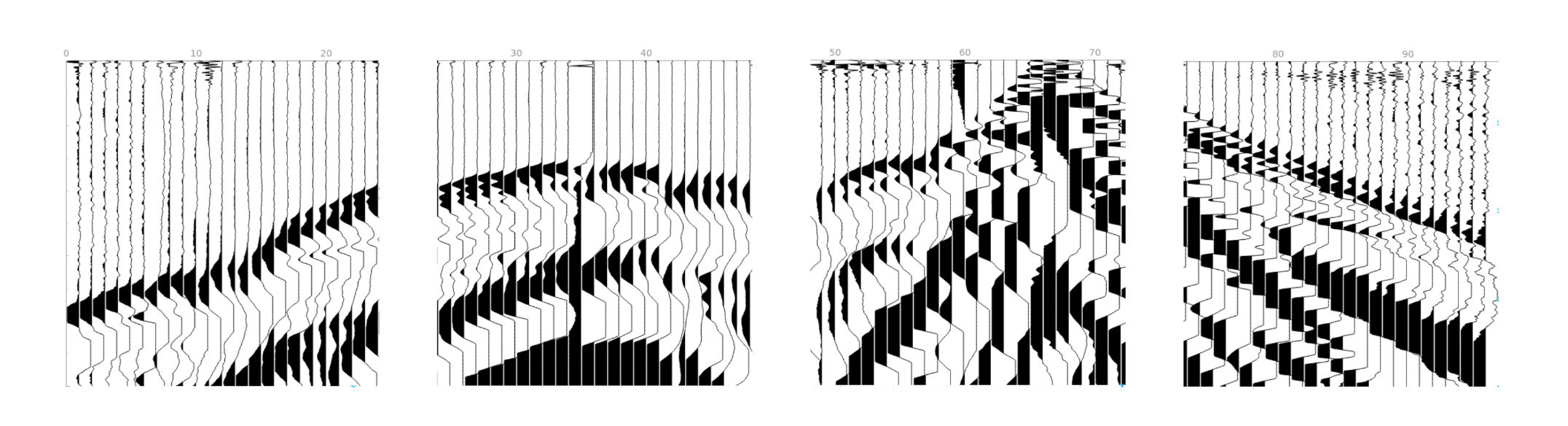

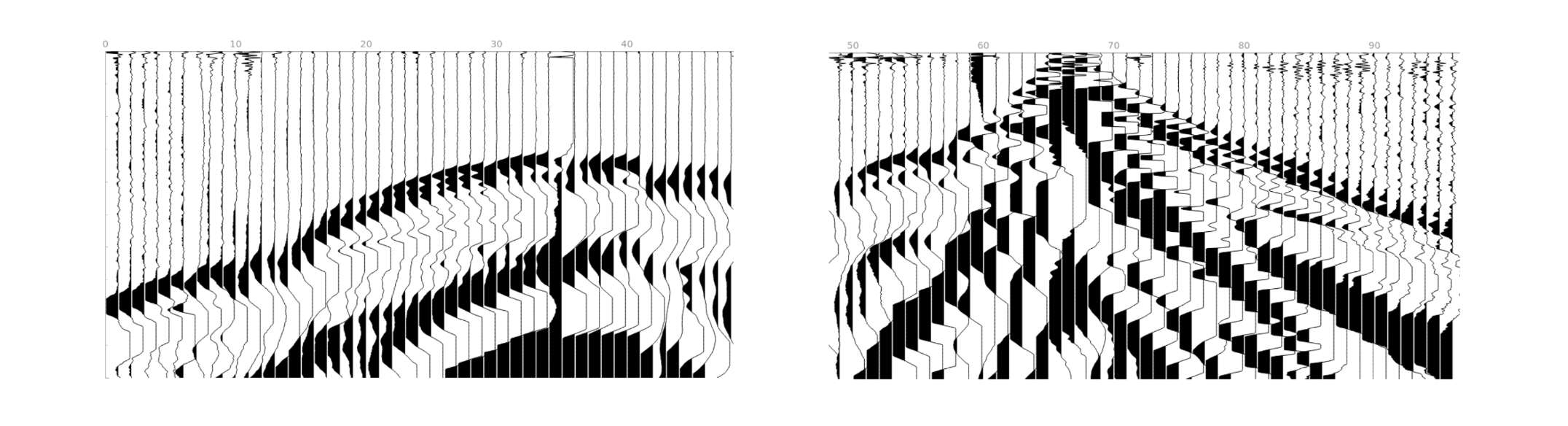

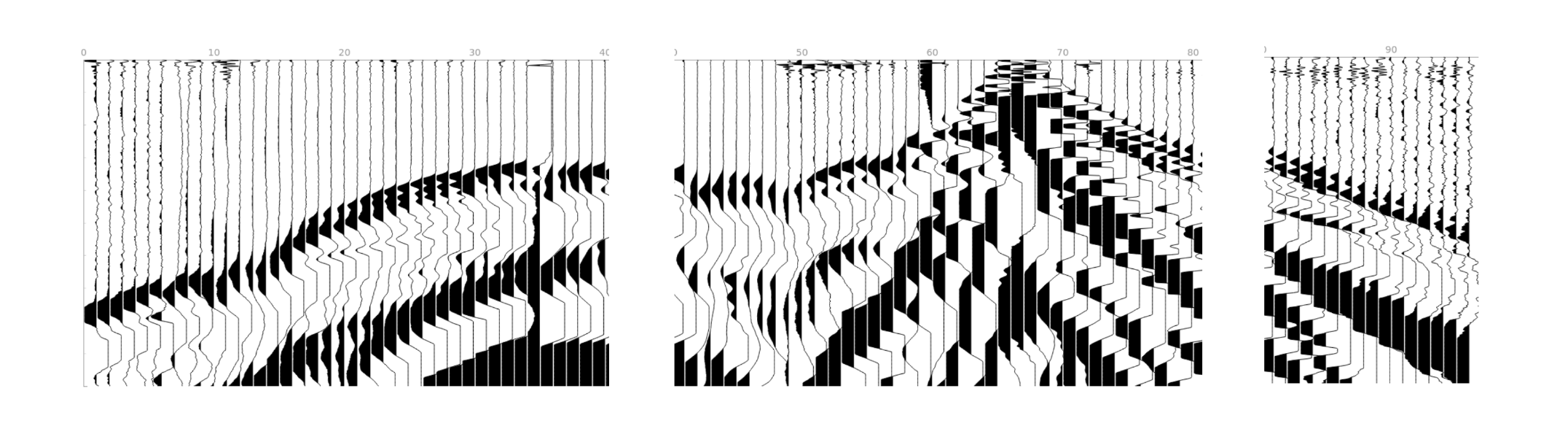

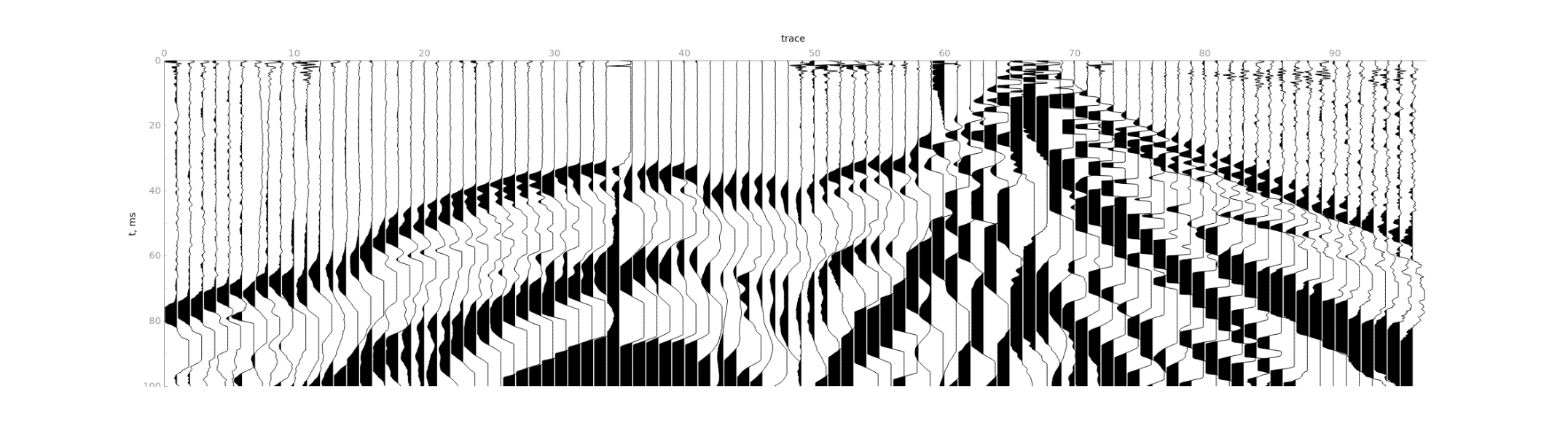

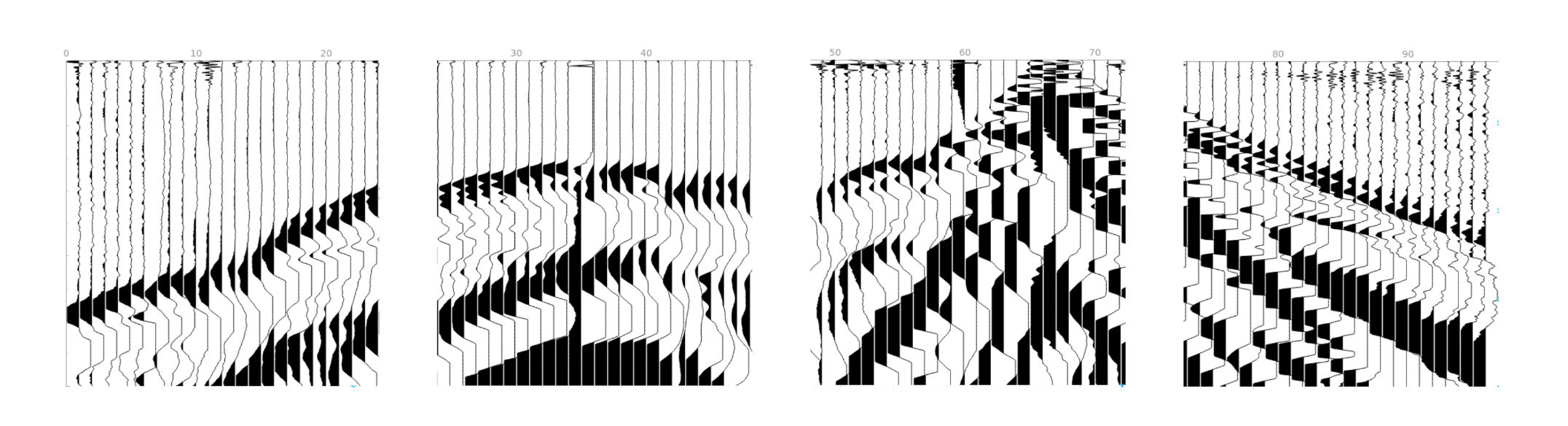

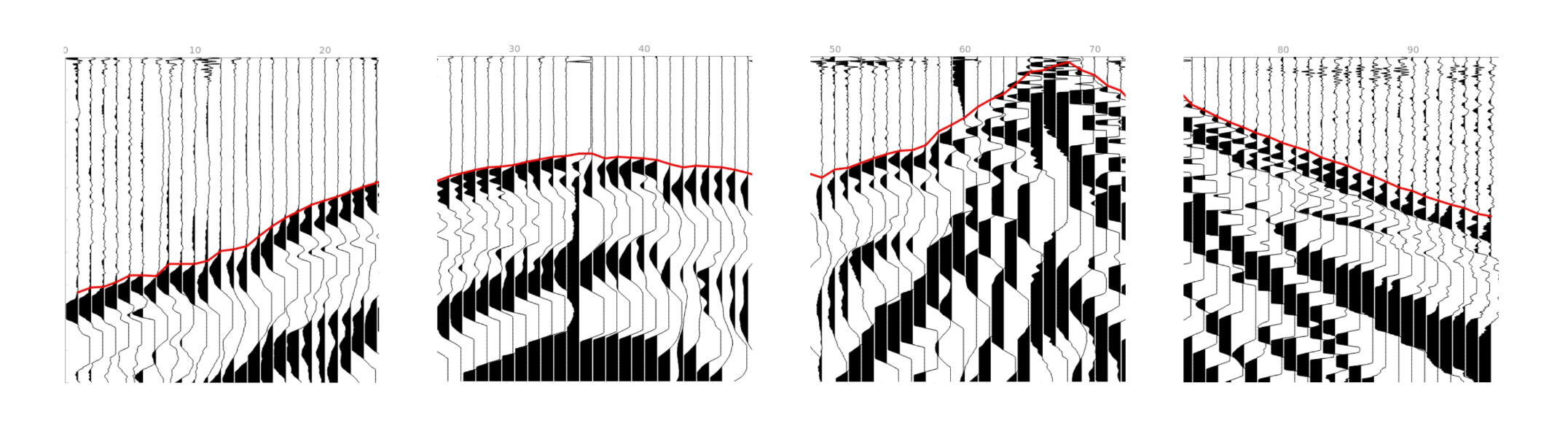

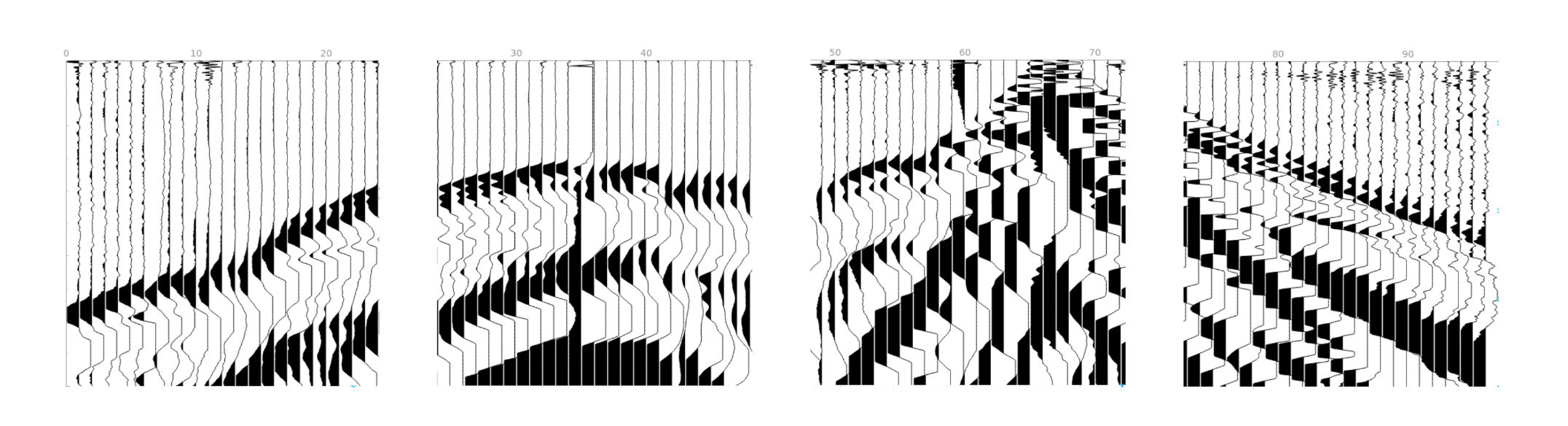

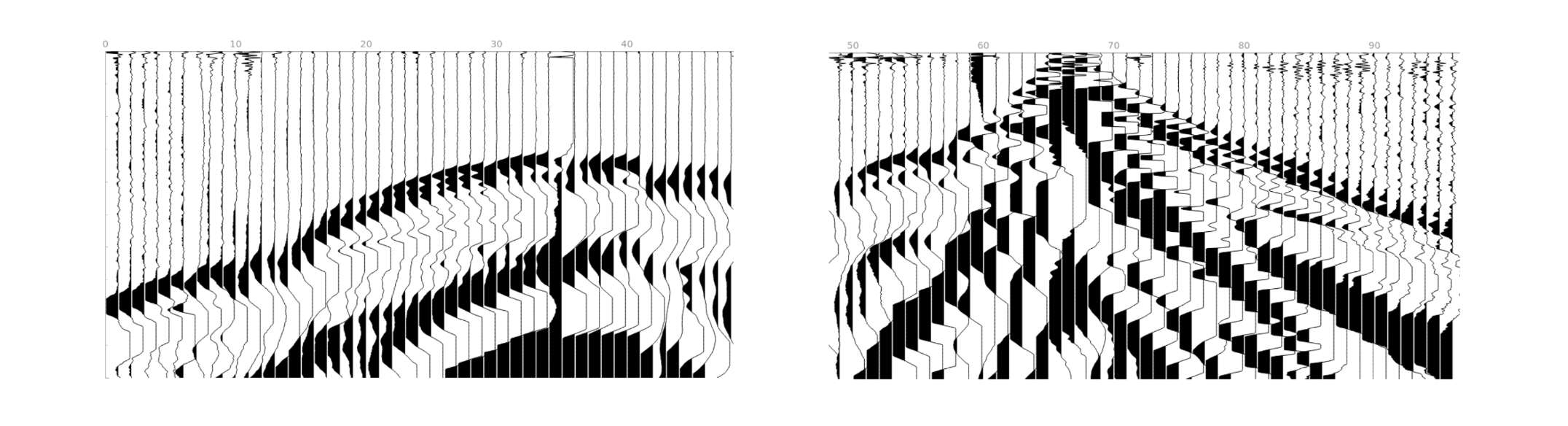

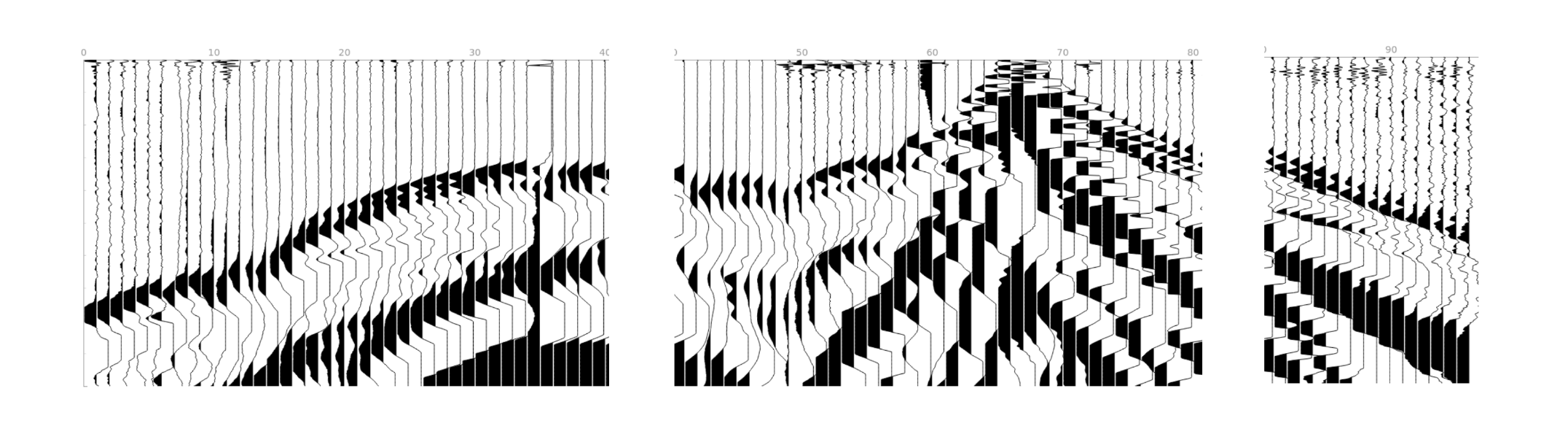

In the drop-down menus below you will find examples of our model picking on good-quality data as well as on noisy data.

The quality of the model is difficult to validate on noisy data, but these examples show its robustness to various types of noise.

<details>

<summary>Good quality data</summary>

</details>

<details>

<summary>Noisy data</summary>

</details>

# How was the model trained?

Model training is described in detail [here](https://github.com/DaloroAT/first_breaks_picking/tree/a45bb007140f6011cf04ebe27a86eea8deb5e6eb) in the previous version of the project README.

The latest model was trained similarly, but:

1) Real land seismic data is used instead of synthetic data.

2) The [UNet3+](https://arxiv.org/abs/2004.08790) model is used instead of the vanilla UNet.

# Installation

The library is available on [PyPI](https://pypi.org/project/first-breaks-picking/):

```shell
pip install -U first-breaks-picking
```

### GPU support

You can use a GPU (discrete, not integrated with the CPU) to significantly reduce picking time. Before you start, check [here](https://developer.nvidia.com/cuda-gpus) that your GPU is CUDA compatible.

Install the GPU-supported version of the library:

```shell
pip install -U first-breaks-picking-gpu
```

The following steps depend on the operating system and must be performed manually:

- Install the [latest NVIDIA drivers](https://www.nvidia.com/Download/index.aspx).

- Install the [CUDA toolkit](https://developer.nvidia.com/cuda-downloads).
  **The version must be 11.x, starting with 11.6. Version 12 may also work, but 11.x versions from 11.6 onward are recommended.**

- Install ZLib and cuDNN:
  [Windows](https://docs.nvidia.com/deeplearning/cudnn/install-guide/index.html#install-windows) and
  [Linux](https://docs.nvidia.com/deeplearning/cudnn/install-guide/index.html#install-linux).
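
To check whether the GPU setup is visible from Python, a quick sketch like the one below may help. It assumes (the README does not say so explicitly) that the picker runs on ONNX Runtime, as suggested by `PickerONNX` and the `.onnx` model file. `onnxruntime.get_available_providers()` is a real ONNX Runtime function; `cuda_is_available` is a hypothetical helper name. A positive result only shows that the installed ONNX Runtime build includes CUDA support, not that the driver, CUDA, and cuDNN load correctly.

```python
import importlib.util


def cuda_is_available() -> bool:
    """Return True if ONNX Runtime reports the CUDA execution provider.

    Assumption: the picker is backed by ONNX Runtime. If the package is not
    installed at all, CUDA is reported as unavailable.
    """
    if importlib.util.find_spec("onnxruntime") is None:
        return False
    import onnxruntime as ort

    return "CUDAExecutionProvider" in ort.get_available_providers()


if __name__ == "__main__":
    print("CUDA available:", cuda_is_available())
```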

### Compiled desktop application

Compiled **executable** files (`.exe` in `.zip` archives), as well as installers (`.msi`), can be downloaded from the [Releases](https://github.com/DaloroAT/first_breaks_picking/releases) section.

If you want to use your **dedicated** GPU, also follow the steps in the [GPU support](https://github.com/DaloroAT/first_breaks_picking#gpu-support) section to install the driver, CUDA, cuDNN, and ZLib.

You can run the desktop application on other operating systems (Linux, macOS) as well, but there it must be [run using the Python environment](https://github.com/DaloroAT/first_breaks_picking#launch-app).

### Extra data

- To pick first breaks, you need to [download the model](https://oml.daloroserver.com/download/seis/fb.onnx).

- If you have no seismic data, you can also [download a small SGY file](https://raw.githubusercontent.com/DaloroAT/first_breaks_picking/main/data/real_gather.sgy).

It's also possible to download them with Python using the following snippet:

[code-block-start]:downloading-extra

```python
from first_breaks.utils.utils import (download_demo_sgy,
                                      download_model_onnx)

sgy_filename = 'data.sgy'
model_filename = 'model.onnx'

download_demo_sgy(sgy_filename)
download_model_onnx(model_filename)
```

[code-block-end]:downloading-extra

# How to use it

The library can be used in Python, or you can use the desktop application.

## Python

The programmatic approach is more flexible for building your own picking scenarios and processing multiple files.

### Minimal example

The following snippet picks first breaks in the demo file. As a result, you get the image shown in the project preview.

[code-block-start]:e2e-example

```python
from first_breaks.utils.utils import download_demo_sgy
from first_breaks.sgy.reader import SGY
from first_breaks.picking.task import Task
from first_breaks.picking.picker_onnx import PickerONNX
from first_breaks.desktop.graph import export_image

sgy_filename = 'data.sgy'
download_demo_sgy(fname=sgy_filename)
sgy = SGY(sgy_filename)

task = Task(source=sgy,
            traces_per_gather=12,
            maximum_time=100,
            gain=2)

picker = PickerONNX()
task = picker.process_task(task)
task.picks.color = (255, 0, 0)

# create an image from the project preview
image_filename = 'project_preview.png'
export_image(task, image_filename,
             time_window=(0, 60),
             traces_window=(79.5, 90.5),
             show_processing_region=False,
             headers_total_pixels=80,
             height=500,
             width=700,
             hide_traces_axis=True
             )
```

[code-block-end]:e2e-example

For a better understanding of the steps taken, expand and read the next section.

### Detailed examples

<details>

<summary>Show examples</summary>

### Download demo SGY

Let's download the demo file. All the following examples assume that the file is downloaded and saved as `data.sgy`.

You can also use your own SGY file.

```python
from first_breaks.utils.utils import download_demo_sgy

sgy_filename = 'data.sgy'
download_demo_sgy(fname=sgy_filename)
```

### Create SGY

We provide several ways to create an `SGY` object: from a file, from `bytes`, or from a `numpy` array.

From file:

[code-block-start]:init-from-path

```python
from first_breaks.sgy.reader import SGY

sgy_filename = 'data.sgy'
sgy = SGY(sgy_filename)
```

[code-block-end]:init-from-path

From `bytes`:

[code-block-start]:init-from-bytes

```python
from first_breaks.sgy.reader import SGY

sgy_filename = 'data.sgy'

# read the raw file content and build SGY from it
with open(sgy_filename, 'rb') as fin:
    sgy_bytes = fin.read()

sgy = SGY(sgy_bytes)
```

[code-block-end]:init-from-bytes

To create it from a `numpy` array, the extra argument `dt_mcs` (the sampling interval in microseconds) is required:

[code-block-start]:init-from-np

```python
import numpy as np

from first_breaks.sgy.reader import SGY

num_samples = 1000
num_traces = 48
dt_mcs = 1e3

traces = np.random.random((num_samples, num_traces))
sgy = SGY(traces, dt_mcs=dt_mcs)
```

[code-block-end]:init-from-np

### Content of SGY

The created `SGY` lets you read traces, get observation parameters, and view headers (headers are empty if the object was created from `numpy`):

[code-block-start]:sgy-content

```python
from first_breaks.sgy.reader import SGY

sgy_filename = 'data.sgy'
sgy = SGY(sgy_filename)

# get all traces or specific traces limited by time
all_traces = sgy.read()
block_of_data = sgy.read_traces_by_ids(ids=[1, 2, 3, 10],
                                       min_sample=100,
                                       max_sample=500)

# number of traces, values are the same
print(sgy.num_traces, sgy.ntr)

# number of time samples, values are the same
print(sgy.num_samples, sgy.ns)

# = (ns, ntr)
print(sgy.shape)

# time discretization: dt and dt_mcs in mcs, dt_ms in ms, fs in Hertz
print(sgy.dt, sgy.dt_mcs, sgy.dt_ms, sgy.fs)

# dict with headers in the first 3600 bytes of the file
print(sgy.general_headers)

# pandas DataFrame with headers for each trace
print(sgy.traces_headers.head())
```

[code-block-end]:sgy-content
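
The time-discretization attributes printed above are different views of the same sampling interval. Assuming `mcs` stands for microseconds (consistent with `dt_ms` being milliseconds and `fs` being in Hertz), they relate as in the sketch below; `sampling_params` is a hypothetical helper, not part of the library.

```python
def sampling_params(dt_mcs: float) -> dict:
    """Derive equivalent sampling parameters from an interval in microseconds."""
    return {
        "dt_mcs": dt_mcs,          # sampling interval, microseconds
        "dt_ms": dt_mcs / 1_000,   # sampling interval, milliseconds
        "fs": 1_000_000 / dt_mcs,  # sampling frequency, Hz
    }


params = sampling_params(250)
print(params)  # {'dt_mcs': 250, 'dt_ms': 0.25, 'fs': 4000.0}
```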

### Create task for picking

Next, we create a task for picking and pass the picking parameters to it. The parameters have default values, but for the best quality they should be tuned to your specific data. You can use the desktop application to evaluate them.

A detailed description of the parameters can be found in the `Picking process` chapter.

[code-block-start]:create-task

```python
from first_breaks.sgy.reader import SGY
from first_breaks.picking.task import Task

sgy_filename = 'data.sgy'
sgy = SGY(sgy_filename)

task = Task(source=sgy,
            traces_per_gather=24,
            maximum_time=200)
```

[code-block-end]:create-task

### Create Picker

In this step, we instantiate the neural network for picking. If you downloaded the model as described in the `Installation` section, pass the path to it. Otherwise, leave the path empty and the model will be downloaded automatically.

GPU/CUDA can also be used to accelerate computation. By default, `cuda` is selected if you have completed all GPU-related steps in the `Installation` section; otherwise, `cpu` is used.

You can also set the `batch_size` parameter, which can further speed up computation on the GPU. However, this requires additional video memory (VRAM).

NOTE: On the CPU, increasing `batch_size` does not speed up computation at all, but it may require additional memory (RAM). So don't increase this parameter when using the CPU.

[code-block-start]:create-picker

```python
from first_breaks.picking.picker_onnx import PickerONNX

# the library will determine the best available device
picker_default = PickerONNX()

# create picker explicitly on CPU
picker_cpu = PickerONNX(device='cpu')

# create picker explicitly on GPU
picker_gpu = PickerONNX(device='cuda', batch_size=2)

# transfer model to another device
picker_cpu.change_settings(device='cuda', batch_size=3)
picker_gpu.change_settings(device='cpu', batch_size=1)
```

[code-block-end]:create-picker
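
Since the neural network processes gathers independently (see the `Picking process` chapter), `batch_size` can be thought of as the number of gathers sent to the model in one call. The sketch below only illustrates that grouping; it is an assumption about how batching works, and `make_batches` is a hypothetical helper, not the library's implementation.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def make_batches(gathers: Sequence[T], batch_size: int) -> List[List[T]]:
    """Group independent gathers into batches of at most `batch_size` items."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    return [list(gathers[i:i + batch_size])
            for i in range(0, len(gathers), batch_size)]


# 5 gathers with batch_size=2 -> 3 model calls instead of 5
batches = make_batches(["g1", "g2", "g3", "g4", "g5"], batch_size=2)
print(batches)  # [['g1', 'g2'], ['g3', 'g4'], ['g5']]
```

Fewer, larger calls are what can pay off on a GPU; on a CPU the total amount of work stays the same, which fits the note above that a larger `batch_size` does not speed up CPU picking.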

### Pick first breaks

Now, using all the created components, we can pick the first breaks and retrieve results.

[code-block-start]:pick-fb

```python
from first_breaks.picking.task import Task
from first_breaks.picking.picker_onnx import PickerONNX
from first_breaks.sgy.reader import SGY

sgy_filename = 'data.sgy'
sgy = SGY(sgy_filename)

task = Task(source=sgy,
            traces_per_gather=24,
            maximum_time=200)

picker = PickerONNX()
task = picker.process_task(task)

# picks available from attribute
picks = task.picks
# or by method
picks = task.get_result()

# print values and confidence of picks
print(picks.picks_in_mcs, picks.confidence)

# print picking parameters
print(picks.picking_parameters, task.picking_parameters)
```

[code-block-end]:pick-fb

### Picks

The picks are stored in the `Picks` class. In addition to the pick values, the class stores additional information (picking parameters, color, etc.). An instance of the class is returned as the picking result, but it can also be created manually.

[code-block-start]:picks

```python
from first_breaks.picking.picks import Picks

picks_in_samples = [1, 10, 20, 30, 50, 100]
dt_mcs = 250

picks = Picks(values=picks_in_samples, unit="mcs", dt_mcs=dt_mcs)

# get values
print(picks.picks_in_samples)
print(picks.picks_in_mcs)
print(picks.picks_in_ms)

# change color and width for visualisation
picks.color = (255, 0, 0)
picks.width = 5

# if picks are obtained by NN you can read extra attributes
print(picks.confidence)
print(picks.picking_parameters)
print(picks.created_by_nn)
```

[code-block-end]:picks
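
`Picks` exposes the same values in samples, microseconds, and milliseconds. A minimal sketch of such conversions, assuming time = sample index × `dt_mcs` with no offset (the library may use a different convention, for example a half-sample shift); `samples_to_times` is a hypothetical helper:

```python
from typing import List, Sequence, Tuple


def samples_to_times(samples: Sequence[float],
                     dt_mcs: float) -> Tuple[List[float], List[float]]:
    """Convert pick positions in samples to microseconds and milliseconds.

    Assumes time = sample * dt with no offset; check this against the library.
    """
    in_mcs = [s * dt_mcs for s in samples]
    in_ms = [t / 1_000 for t in in_mcs]
    return in_mcs, in_ms


in_mcs, in_ms = samples_to_times([1, 10, 20, 30, 50, 100], dt_mcs=250)
print(in_mcs)  # [250, 2500, 5000, 7500, 12500, 25000]
print(in_ms)   # [0.25, 2.5, 5.0, 7.5, 12.5, 25.0]
```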

### Visualizations

You can save the seismogram and picks as an image. We use a Qt backend for visualizations. Here we describe some usage scenarios.

For demonstration purposes, each scenario uses different named arguments, but in practice you can combine any of them. See the function arguments for more visualization options.

Plot `SGY` only:

[code-block-start]:plot-sgy

```python
from first_breaks.sgy.reader import SGY
from first_breaks.desktop.graph import export_image

sgy_filename = 'data.sgy'
image_filename = 'image.png'

sgy = SGY(sgy_filename)

export_image(sgy, image_filename,
             normalize=None,
             traces_window=(5, 10),
             time_window=(0, 200),
             height=300,
             width_per_trace=30)
```

[code-block-end]:plot-sgy

Plot `numpy` traces:

[code-block-start]:plot-np

```python
import numpy as np

from first_breaks.sgy.reader import SGY
from first_breaks.desktop.graph import export_image

image_filename = 'image.png'

num_traces = 48
num_samples = 1000
dt_mcs = 1e3

traces = np.random.random((num_samples, num_traces))

export_image(traces, image_filename,
             dt_mcs=dt_mcs,
             clip=0.5)

# or create SGY as discussed before
sgy = SGY(traces, dt_mcs=dt_mcs)

export_image(sgy, image_filename,
             gain=2)
```

[code-block-end]:plot-np

Plot `SGY` with custom picks:

[code-block-start]:plot-sgy-custom-picks

```python
import numpy as np

from first_breaks.picking.picks import Picks
from first_breaks.sgy.reader import SGY
from first_breaks.desktop.graph import export_image

sgy_filename = 'data.sgy'
image_filename = 'image.png'

sgy = SGY(sgy_filename)

picks_ms = np.random.uniform(low=0,
                             high=sgy.ns * sgy.dt_ms,
                             size=sgy.ntr)
picks = Picks(values=picks_ms, unit="ms", dt_mcs=sgy.dt_mcs, color=(0, 100, 100))

export_image(sgy, image_filename,
             picks=picks)
```

[code-block-end]:plot-sgy-custom-picks

Plot result of picking:

[code-block-start]:plot-sgy-real-picks

```python
from first_breaks.picking.task import Task
from first_breaks.picking.picker_onnx import PickerONNX
from first_breaks.desktop.graph import export_image
from first_breaks.sgy.reader import SGY

sgy_filename = 'data.sgy'
image_filename = 'image.png'

sgy = SGY(sgy_filename)
task = Task(source=sgy,
            traces_per_gather=24,
            maximum_time=200)

picker = PickerONNX()
task = picker.process_task(task)

export_image(task, image_filename,
             show_processing_region=False,
             fill_black='right',
             width=1000)
```

[code-block-end]:plot-sgy-real-picks

### *Limit processing region

Unfortunately, processing only part of a file is not currently supported natively. We will add this functionality soon!

However, you can use the following workaround:

[code-block-start]:pick-limited

```python
from first_breaks.sgy.reader import SGY

sgy_filename = 'data.sgy'
sgy = SGY(sgy_filename)

interesting_traces = sgy.read_traces_by_ids(ids=list(range(20, 40)),
                                            min_sample=100,
                                            max_sample=200)

# we create a new SGY based on the region of interest
sgy = SGY(interesting_traces, dt_mcs=sgy.dt_mcs)
```

[code-block-end]:pick-limited
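
Picks obtained on such a cropped `SGY` are relative to the cropped region. To place them back into the coordinates of the full file, the offsets used for cropping have to be added. A sketch, assuming picks are in samples and that the cropped time axis starts at `min_sample` of the original traces (the exact indexing convention of `read_traces_by_ids` is an assumption); `to_full_file` is a hypothetical helper:

```python
from typing import Dict, Sequence


def to_full_file(picks_in_region: Sequence[float],
                 trace_ids: Sequence[int],
                 min_sample: int) -> Dict[int, float]:
    """Map picks from a cropped region back to full-file trace ids and samples.

    picks_in_region[i] belongs to trace trace_ids[i]; its time axis is assumed
    to start at min_sample of the original traces.
    """
    if len(picks_in_region) != len(trace_ids):
        raise ValueError("one pick per selected trace is expected")
    return {tid: pick + min_sample for tid, pick in zip(trace_ids, picks_in_region)}


ids = list(range(20, 40))
region_picks = [5.0] * len(ids)  # placeholder values, not real picks
full = to_full_file(region_picks, ids, min_sample=100)
print(full[20], full[39])  # 105.0 105.0
```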

</details>

## Desktop application

***Application under development***

The desktop application lets you work interactively with one file at a time and offers better visualization performance. You can use the application as an SGY viewer, as well as to visually evaluate the optimal values of the picking parameters for your data.

### Launch app

Enter one of the following commands to launch the application:

```shell
first-breaks-picking app
```

or

```shell
first-breaks-picking desktop
```

### Select and view SGY file

Click button 2 to select an SGY file. Once the file has been read successfully, you can analyze it.

The following mouse interactions are available:

- Left button drag / Middle button drag: Pan the scene.

- Right button drag: Scales the scene. Dragging left/right scales horizontally; dragging up/down scales vertically.

- Right button click: Open dialog with extra options, such as limit by X/Y axes and export.

- Wheel spin: Zooms the scene in and out.

- Left click: You can manually change values of active picks.

Spectrum analysis:

- Hold down the **Shift** key and drag with the left mouse button to select an area: a window with its spectrum will appear. You can create as many windows as you like.

- Move existing windows by dragging them with the left mouse button.

- Delete a spectrum window by clicking on it and pressing the **Del** key.
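
The spectrum window shows how the energy of the selected traces is distributed over frequency. The app's exact computation is not documented here; the sketch below is a plain discrete Fourier transform amplitude spectrum of one trace segment in pure Python, assuming a sampling interval in microseconds. `amplitude_spectrum` is a hypothetical helper.

```python
import cmath
import math
from typing import List, Sequence, Tuple


def amplitude_spectrum(signal: Sequence[float],
                       dt_mcs: float) -> Tuple[List[float], List[float]]:
    """Return (frequencies in Hz, amplitudes) for the non-negative DFT bins."""
    n = len(signal)
    fs = 1_000_000 / dt_mcs  # sampling frequency, Hz
    freqs, amps = [], []
    for k in range(n // 2 + 1):
        # naive DFT coefficient for bin k (O(n^2) overall, fine for a sketch)
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(signal))
        freqs.append(k * fs / n)
        amps.append(abs(coeff) / n)
    return freqs, amps


# 50 Hz sine sampled every 1000 mcs (fs = 1000 Hz), 100 samples
trace = [math.sin(2 * math.pi * 50 * i / 1000) for i in range(100)]
freqs, amps = amplitude_spectrum(trace, dt_mcs=1000)
print(freqs[amps.index(max(amps))])  # 50.0
```

In practice a library FFT (for example NumPy's `rfft`) would replace the naive loop; the loop is used here only to keep the example dependency-free.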

### Load model

To use the picker in the desktop app, you have to download the model. See the `Installation` section for instructions on how to download it.

Click button 1 and select the model file.

After the model is loaded successfully, picking with the NN becomes available.

### Settings and Processing

Click button 3 to open a window with various settings and picking parameters:

- The **Processing** section contains parameters for drawing seismograms; these parameters are also used during picking. Changing them updates the rendering in real time.

- The **View** section allows you to change the axes' names, content, and orientation.

- The **NN Picking** section allows you to select additional parameters for picking the first arrivals and the device for computation. If you have a CUDA-compatible GPU and have installed the GPU-supported version of the library (see the `Installation` section), you can select the `CUDA/GPU` device to use GPU acceleration. It can drastically decrease computation time.

A detailed description of the parameters responsible for picking can be found in the `Picking process` chapter.

When you have chosen the optimal parameters, click the **Run picking** button. After some time, a line connecting the first arrivals will appear. You can interrupt the process by clicking the **Stop** button.

### Processing grid

Click button 4 to toggle the display of the processing grid. The horizontal line shows `Maximum time`, and the vertical lines are drawn at intervals equal to `Traces per gather`. The neural network processes the resulting blocks independently, as separate images.

### Picks manager

Click button 5 to open the picks manager.

When picking with the neural network, the picking result appears here. You can also add manual picks, load picks from headers, duplicate picks, aggregate several picks, or remove picks.

As control and navigation tools, you can choose whether to show picks (checkbox), select the active picks (radio button), and change their color (color label).

After selecting one of the picks, double-click it or click the button with the “save” icon to show the export options.

# Picking process

The neural network processes a file as a series of **images**. That is why **the traces should not be in random order**: we use information from adjacent traces.

To obtain the first breaks we do the following steps:

1) Read all traces in the file.

2) Limit time range by `Maximum time`.

3) Split the sequence of traces into independent gathers of lengths `Traces per gather` each.

4) Apply trace modifications at the gather level if necessary (`Gain`, `Clip`, etc.).

5) Calculate first breaks for individual gathers independently.

6) Join the first breaks of individual gathers.
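
The six steps can be sketched in pure Python as below. This illustrates the data flow only, under stated assumptions: traces are lists of samples, `Maximum time` is converted to a sample count by rounding down, the gain is a plain multiplication, the interpolation of a short last gather is omitted, and `pick_gather` is a hypothetical stand-in (a simple amplitude threshold) for the neural network, which is not reproduced here.

```python
from typing import List, Sequence

Trace = List[float]


def pick_gather(gather: Sequence[Trace], threshold: float) -> List[int]:
    """Hypothetical stand-in for the neural network.

    Returns, for each trace, the first sample whose absolute amplitude exceeds
    `threshold` (or the last sample if none does).
    """
    picks = []
    for trace in gather:
        first = next((i for i, x in enumerate(trace) if abs(x) > threshold),
                     len(trace) - 1)
        picks.append(first)
    return picks


def pick_file(traces: Sequence[Trace], dt_ms: float, maximum_time: float,
              traces_per_gather: int, gain: float = 1.0,
              threshold: float = 0.5) -> List[int]:
    """Illustrative data flow of the picking steps; not the library's code."""
    # 1) the traces are assumed to be already read into memory
    # 2) limit the time range; 0 means "no limit" (rounding down is assumed)
    if maximum_time > 0:
        n_keep = int(maximum_time / dt_ms) + 1
        traces = [list(t[:n_keep]) for t in traces]
    picks: List[int] = []
    # 3) split the traces into independent gathers
    for start in range(0, len(traces), traces_per_gather):
        gather = traces[start:start + traces_per_gather]
        # 4) gather-level modification, here a plain gain
        gather = [[x * gain for x in t] for t in gather]
        # 5) pick each gather independently and 6) join the results
        picks.extend(pick_gather(gather, threshold))
    return picks


# four synthetic traces with arrivals at samples 3, 4, 5 and 6
traces = [[0.0] * k + [1.0] * (20 - k) for k in (3, 4, 5, 6)]
print(pick_file(traces, dt_ms=1.0, maximum_time=10, traces_per_gather=2))
# [3, 4, 5, 6]
```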

To achieve the best result, you need to modify the picking parameters.

### Traces per gather

`Traces per gather` is the most important parameter for picking. It defines how the sequence of traces is split into individual gathers.

Suppose we need to process a file with 96 traces. Depending on the value of `Traces per gather`, we will process it as follows:

- `Traces per gather` = 24. We will process 4 gathers with 24 traces each.

- `Traces per gather` = 48. We will process 2 gathers with 48 traces each.

- `Traces per gather` = 40. We will process 2 gathers with 40 traces each and 1 gather with the remaining 16 traces. The last gather will be interpolated from 16 to 40 traces.
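
The splitting rule, including the short last gather, can be sketched as follows. The README states that the last gather is interpolated to `Traces per gather` traces but not how; linear interpolation along the trace axis is an assumption here, and both `gather_sizes` and `stretch` are hypothetical helpers.

```python
from typing import List, Sequence


def gather_sizes(num_traces: int, traces_per_gather: int) -> List[int]:
    """Sizes of the gathers a file is split into; the last one may be shorter."""
    full, rest = divmod(num_traces, traces_per_gather)
    return [traces_per_gather] * full + ([rest] if rest else [])


def stretch(values: Sequence[float], new_len: int) -> List[float]:
    """Linearly resample values along the trace axis to `new_len` points.

    `values` holds one number per trace (e.g. a single time sample of a gather).
    """
    old_len = len(values)
    if new_len == 1 or old_len == 1:
        return [float(values[0])] * new_len
    out = []
    for j in range(new_len):
        pos = j * (old_len - 1) / (new_len - 1)  # position on the original axis
        i = min(int(pos), old_len - 2)
        frac = pos - i
        out.append(values[i] * (1 - frac) + values[i + 1] * frac)
    return out


print(gather_sizes(96, 24))  # [24, 24, 24, 24]
print(gather_sizes(96, 48))  # [48, 48]
print(gather_sizes(96, 40))  # [40, 40, 16]
print(len(stretch(list(range(16)), 40)))  # 40
```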

### Maximum time

You can localize the area in which first breaks are searched for. Specify `Maximum time` if you have long records but the first breaks are located at the start of the traces. Keep it at `0` if you don't want to limit the traces.

### List of traces to inverse

Some receivers may have the wrong polarity, so you can specify which traces should be inverted. Note that inversion is applied at the gather level. For example, if you have 96 traces, `Traces per gather` = 48, and `List of traces to inverse` = (2, 30, 48), then traces (2, 30, 48, 50, 78, 96) will be inverted.

Notes:

- Trace indexing starts at 1.

- This option is not available in the desktop app.
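
Because the list is applied to every gather, an index `k` from the list affects trace `k + g * traces_per_gather` in gather `g`. The sketch below reproduces the example above with 1-based indices, as in the notes; `traces_to_invert` is a hypothetical helper, and skipping indices that fall beyond a shorter last gather is an assumption.

```python
from typing import Iterable, List


def traces_to_invert(num_traces: int, traces_per_gather: int,
                     inverse_list: Iterable[int]) -> List[int]:
    """1-based indices of all traces whose polarity is flipped."""
    inverse = tuple(inverse_list)  # allow any iterable, reused for every gather
    result = []
    for gather_start in range(0, num_traces, traces_per_gather):
        for k in inverse:
            idx = gather_start + k
            if 1 <= k <= traces_per_gather and idx <= num_traces:
                result.append(idx)
    return sorted(result)


print(traces_to_invert(96, 48, (2, 30, 48)))  # [2, 30, 48, 50, 78, 96]
```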

## Recommendations

You can receive predictions for any file with any parameters, but to get a better result, you should comply with the

following guidelines:

- Your file should contain one or more gathers. By a gather, we mean that traces within a single gather can be

geophysically interpreted. **The traces within the same gather should not be random**, since we are using information

about adjacent traces.

- All gathers in the file must contain the same number of traces.

- The number of traces in a gather must be equal to `Traces per gather` or divisible by it without a remainder. For example, if you have CSP gathers and the number of receivers is 48, then you can set the parameter value to 48, 24, or 12.

- We don't sort your file (CMP, CRP, CSP, etc.), so make sure the traces are already sorted before processing.

- You can process a file with independent seismograms obtained from different survey areas, under different conditions, etc., but the requirements listed above must still be met.
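The divisibility requirement can be checked before picking. The helpers below are a hypothetical sketch. `check_file_layout` assumes the gather size is known in advance. Its first test is only a necessary condition: it cannot detect gathers of unequal size whose total happens to be a multiple of the gather size.

```python
from typing import List


def valid_traces_per_gather(traces_in_gather: int) -> List[int]:
    """Values of `Traces per gather` that split a gather without a remainder."""
    return [n for n in range(traces_in_gather, 0, -1) if traces_in_gather % n == 0]


def check_file_layout(num_traces: int, traces_in_gather: int,
                      traces_per_gather: int) -> List[str]:
    """List violations of the recommendations above (empty list if none found)."""
    problems = []
    # necessary (not sufficient) condition for equally sized gathers
    if num_traces % traces_in_gather != 0:
        problems.append("total number of traces is not a multiple of the gather size")
    if traces_in_gather % traces_per_gather != 0:
        problems.append("gather size is not divisible by Traces per gather")
    return problems


print(valid_traces_per_gather(48))  # [48, 24, 16, 12, 8, 6, 4, 3, 2, 1]
print(check_file_layout(96, 48, 24))  # []
print(check_file_layout(96, 48, 40))  # ['gather size is not divisible by Traces per gather']
```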

# Acknowledgments

<a href="https://geodevice.co/"><img src="https://geodevice.co/local/templates/geodevice_15_07_2019/assets/images/logo_geodevice.png?1" style="width: 200px;" alt="Geodevice"></a>

We would like to thank [GEODEVICE](https://geodevice.co/) for providing field data from land and borehole seismic surveys with annotated first breaks for model training.

Raw data

{

"_id": null,

"home_page": null,

"name": "first-breaks-picking-gpu",

"maintainer": null,

"docs_url": null,

"requires_python": ">=3.8",

"maintainer_email": null,

"keywords": "seismic, first-breaks, computer-vision, deep-learning, segmentation, data-science",

"author": "Aleksei Tarasov",

"author_email": "Aleksei Tarasov <aleksei.v.tarasov@gmail.com>",

"download_url": "https://files.pythonhosted.org/packages/b8/70/2fc5086e2a0be9c50d4099727bfcebe05a1265cc5ab11208e698aaaed7fb/first_breaks_picking_gpu-0.7.4.tar.gz",

"platform": null,


"bugtrack_url": null,

"license": "Apache License Version 2.0, January 2004 http://www.apache.org/licenses/ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION 1. Definitions. \"License\" shall mean the terms and conditions for use, reproduction, and distribution as defined by Sections 1 through 9 of this document. \"Licensor\" shall mean the copyright owner or entity authorized by the copyright owner that is granting the License. \"Legal Entity\" shall mean the union of the acting entity and all other entities that control, are controlled by, or are under common control with that entity. For the purposes of this definition, \"control\" means (i) the power, direct or indirect, to cause the direction or management of such entity, whether by contract or otherwise, or (ii) ownership of fifty percent (50%) or more of the outstanding shares, or (iii) beneficial ownership of such entity. \"You\" (or \"Your\") shall mean an individual or Legal Entity exercising permissions granted by this License. \"Source\" form shall mean the preferred form for making modifications, including but not limited to software source code, documentation source, and configuration files. \"Object\" form shall mean any form resulting from mechanical transformation or translation of a Source form, including but not limited to compiled object code, generated documentation, and conversions to other media types. \"Work\" shall mean the work of authorship, whether in Source or Object form, made available under the License, as indicated by a copyright notice that is included in or attached to the work (an example is provided in the Appendix below). \"Derivative Works\" shall mean any work, whether in Source or Object form, that is based on (or derived from) the Work and for which the editorial revisions, annotations, elaborations, or other modifications represent, as a whole, an original work of authorship. 
For the purposes of this License, Derivative Works shall not include works that remain separable from, or merely link (or bind by name) to the interfaces of, the Work and Derivative Works thereof. \"Contribution\" shall mean any work of authorship, including the original version of the Work and any modifications or additions to that Work or Derivative Works thereof, that is intentionally submitted to Licensor for inclusion in the Work by the copyright owner or by an individual or Legal Entity authorized to submit on behalf of the copyright owner. For the purposes of this definition, \"submitted\" means any form of electronic, verbal, or written communication sent to the Licensor or its representatives, including but not limited to communication on electronic mailing lists, source code control systems, and issue tracking systems that are managed by, or on behalf of, the Licensor for the purpose of discussing and improving the Work, but excluding communication that is conspicuously marked or otherwise designated in writing by the copyright owner as \"Not a Contribution.\" \"Contributor\" shall mean Licensor and any individual or Legal Entity on behalf of whom a Contribution has been received by Licensor and subsequently incorporated within the Work. 2. Grant of Copyright License. Subject to the terms and conditions of this License, each Contributor hereby grants to You a perpetual, worldwide, non-exclusive, no-charge, royalty-free, irrevocable copyright license to reproduce, prepare Derivative Works of, publicly display, publicly perform, sublicense, and distribute the Work and such Derivative Works in Source or Object form. 3. Grant of Patent License. 
Subject to the terms and conditions of this License, each Contributor hereby grants to You a perpetual, worldwide, non-exclusive, no-charge, royalty-free, irrevocable (except as stated in this section) patent license to make, have made, use, offer to sell, sell, import, and otherwise transfer the Work, where such license applies only to those patent claims licensable by such Contributor that are necessarily infringed by their Contribution(s) alone or by combination of their Contribution(s) with the Work to which such Contribution(s) was submitted. If You institute patent litigation against any entity (including a cross-claim or counterclaim in a lawsuit) alleging that the Work or a Contribution incorporated within the Work constitutes direct or contributory patent infringement, then any patent licenses granted to You under this License for that Work shall terminate as of the date such litigation is filed. 4. Redistribution. You may reproduce and distribute copies of the Work or Derivative Works thereof in any medium, with or without modifications, and in Source or Object form, provided that You meet the following conditions: (a) You must give any other recipients of the Work or Derivative Works a copy of this License; and (b) You must cause any modified files to carry prominent notices stating that You changed the files; and (c) You must retain, in the Source form of any Derivative Works that You distribute, all copyright, patent, trademark, and attribution notices from the Source form of the Work, excluding those notices that do not pertain to any part of the Derivative Works; and (d) If the Work includes a \"NOTICE\" text file as part of its distribution, then any Derivative Works that You distribute must include a readable copy of the attribution notices contained within such NOTICE file, excluding those notices that do not pertain to any part of the Derivative Works, in at least one of the following places: within a NOTICE text file distributed as part of the 
Derivative Works; within the Source form or documentation, if provided along with the Derivative Works; or, within a display generated by the Derivative Works, if and wherever such third-party notices normally appear. The contents of the NOTICE file are for informational purposes only and do not modify the License. You may add Your own attribution notices within Derivative Works that You distribute, alongside or as an addendum to the NOTICE text from the Work, provided that such additional attribution notices cannot be construed as modifying the License. You may add Your own copyright statement to Your modifications and may provide additional or different license terms and conditions for use, reproduction, or distribution of Your modifications, or for any such Derivative Works as a whole, provided Your use, reproduction, and distribution of the Work otherwise complies with the conditions stated in this License. 5. Submission of Contributions. Unless You explicitly state otherwise, any Contribution intentionally submitted for inclusion in the Work by You to the Licensor shall be under the terms and conditions of this License, without any additional terms or conditions. Notwithstanding the above, nothing herein shall supersede or modify the terms of any separate license agreement you may have executed with Licensor regarding such Contributions. 6. Trademarks. This License does not grant permission to use the trade names, trademarks, service marks, or product names of the Licensor, except as required for reasonable and customary use in describing the origin of the Work and reproducing the content of the NOTICE file. 7. Disclaimer of Warranty. 
Unless required by applicable law or agreed to in writing, Licensor provides the Work (and each Contributor provides its Contributions) on an \"AS IS\" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied, including, without limitation, any warranties or conditions of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A PARTICULAR PURPOSE. You are solely responsible for determining the appropriateness of using or redistributing the Work and assume any risks associated with Your exercise of permissions under this License. 8. Limitation of Liability. In no event and under no legal theory, whether in tort (including negligence), contract, or otherwise, unless required by applicable law (such as deliberate and grossly negligent acts) or agreed to in writing, shall any Contributor be liable to You for damages, including any direct, indirect, special, incidental, or consequential damages of any character arising as a result of this License or out of the use or inability to use the Work (including but not limited to damages for loss of goodwill, work stoppage, computer failure or malfunction, or any and all other commercial damages or losses), even if such Contributor has been advised of the possibility of such damages. 9. Accepting Warranty or Additional Liability. While redistributing the Work or Derivative Works thereof, You may choose to offer, and charge a fee for, acceptance of support, warranty, indemnity, or other liability obligations and/or rights consistent with this License. However, in accepting such obligations, You may act only on Your own behalf and on Your sole responsibility, not on behalf of any other Contributor, and only if You agree to indemnify, defend, and hold each Contributor harmless for any liability incurred by, or claims asserted against, such Contributor by reason of your accepting any such warranty or additional liability. END OF TERMS AND CONDITIONS APPENDIX: How to apply the Apache License to your work. 
To apply the Apache License to your work, attach the following boilerplate notice, with the fields enclosed by brackets \"[]\" replaced with your own identifying information. (Don't include the brackets!) The text should be enclosed in the appropriate comment syntax for the file format. We also recommend that a file or class name and description of purpose be included on the same \"printed page\" as the copyright notice for easier identification within third-party archives. Copyright [yyyy] [name of copyright owner] Licensed under the Apache License, Version 2.0 (the \"License\"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an \"AS IS\" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License. ",

"summary": "Project is devoted to pick waves that are the first to be detected on a seismogram with neural network (CUDA accelerated)",
"version": "0.7.4",
"project_urls": {
    "Homepage": "https://github.com/DaloroAT/first_breaks_picking"
},
"split_keywords": [
    "seismic",
    " first-breaks",
    " computer-vision",
    " deep-learning",
    " segmentation",
    " data-science"
],
"urls": [
    {
        "comment_text": "",
        "digests": {
            "blake2b_256": "4f1cc94df062c2445a5f9422d7f6ec19a62f801b011222bfb5c085e916c70c21",
            "md5": "68041707ddce72615a8c36ffadb2e3f3",
            "sha256": "a764f26c605c320670f7de3c1bf0d25ab22c547b88444ccc9880ba57844a1fa9"
        },
        "downloads": -1,
        "filename": "first_breaks_picking_gpu-0.7.4-py3-none-any.whl",
        "has_sig": false,
        "md5_digest": "68041707ddce72615a8c36ffadb2e3f3",
        "packagetype": "bdist_wheel",
        "python_version": "py3",
        "requires_python": ">=3.8",
        "size": 86786,
        "upload_time": "2024-05-31T07:46:24",
        "upload_time_iso_8601": "2024-05-31T07:46:24.983478Z",
        "url": "https://files.pythonhosted.org/packages/4f/1c/c94df062c2445a5f9422d7f6ec19a62f801b011222bfb5c085e916c70c21/first_breaks_picking_gpu-0.7.4-py3-none-any.whl",
        "yanked": false,
        "yanked_reason": null
    },
    {
        "comment_text": "",
        "digests": {
            "blake2b_256": "b8702fc5086e2a0be9c50d4099727bfcebe05a1265cc5ab11208e698aaaed7fb",
            "md5": "8ae4d743cd3fedbdca57fd93e3c898d9",
            "sha256": "2364cc1d1360b7a9d766b901cf362c5614f4146ff01ec7737bf38e8b8730bccf"
        },
        "downloads": -1,
        "filename": "first_breaks_picking_gpu-0.7.4.tar.gz",
        "has_sig": false,
        "md5_digest": "8ae4d743cd3fedbdca57fd93e3c898d9",
        "packagetype": "sdist",
        "python_version": "source",
        "requires_python": ">=3.8",
        "size": 79992,
        "upload_time": "2024-05-31T07:46:26",
        "upload_time_iso_8601": "2024-05-31T07:46:26.728396Z",
        "url": "https://files.pythonhosted.org/packages/b8/70/2fc5086e2a0be9c50d4099727bfcebe05a1265cc5ab11208e698aaaed7fb/first_breaks_picking_gpu-0.7.4.tar.gz",
        "yanked": false,
        "yanked_reason": null
    }
],
"upload_time": "2024-05-31 07:46:26",
"github": true,
"gitlab": false,
"bitbucket": false,
"codeberg": false,
"github_user": "DaloroAT",
"github_project": "first_breaks_picking",
"travis_ci": false,
"coveralls": false,
"github_actions": true,
"lcname": "first-breaks-picking-gpu"
}