# GVISION 🚀

GVISION is an end-to-end automation platform for computer vision projects, providing seamless integration from data collection to model training and deployment. Whether you're a beginner or an expert, GVISION simplifies the entire process, allowing you to focus on building and deploying powerful models.

## Features ✨

- **Easy-to-Use Interface:** Intuitive UI design for effortless project management and model development.

- **No Coding Required:** Build and train models without writing any code.

- **Roboflow Integration:** Easily download datasets from Roboflow for your computer vision projects.

- **Multiple Tasks Supported:** Develop models for object detection, segmentation, classification, and pose estimation.

- **Ultralytics Model Training:** Train your custom models using Ultralytics YOLOv8.

- **Live Monitoring with TensorBoard:** Monitor model training and performance in real time using TensorFlow's TensorBoard integration.

- **Performance Monitoring:** View model performance and visualize results.

- **Quick Deployment:** Deploy trained models seamlessly for various applications.

- **Streamlit Deployment Demo:** Quickly deploy your trained models with Streamlit for interactive demos and visualization.

| Streamlit Deployment Features | Detection | Segmentation | Classification | Pose Estimation |
| --- | :---: | :---: | :---: | :---: |
| Real-Time (Live Predict) | ✅ | ✅ | ✅ | ✅ |
| Click & Predict | ✅ | ✅ | ✅ | ✅ |
| Upload Multiple Images & Predict | ✅ | ✅ | ✅ | ✅ |
| Upload Video & Predict | ✅ | ✅ | ✅ | ✅ |

# Getting Started 🌟

## ⚠️ **BEFORE INSTALLATION** ⚠️

**Before installing gvision, it's strongly recommended to create a new Python environment to avoid potential conflicts with your current environment.**

## Creating a New Conda Environment

To create a new conda environment, follow these steps:

1. **Install Conda**:

If you don't have conda installed, you can download and install it from the [Anaconda website](https://www.anaconda.com/products/distribution).

2. **Open an Anaconda Prompt**:

Open an Anaconda Prompt (or Anaconda Terminal) on your system.

3. **Create a New Environment**:

To create a new conda environment, use the following command. Replace `my_env_name` with your desired environment name.

- Supported Python versions: 3.8 and later (`>=3.8`).

```bash
conda create --name my_env_name python=3.8
```

4. **Activate the Environment**:

After creating the environment, activate it with the following command:

```bash
conda activate my_env_name
```

## OR

## Create a New Virtual Environment with `venv`

If you prefer using Python's built-in `venv` module, here's how to create a virtual environment:

1. **Check Your Python Installation**:

Ensure you have Python installed on your system. GVISION supports Python 3.8 and later (`>=3.8`). You can check your version by running:

```bash
python --version
```

2. **Create a Virtual Environment**:

Use the following command to create a new virtual environment. Replace `my_env_name` with your desired environment name.

```bash
python -m venv my_env_name
```

3. **Activate the Environment**:

After creating the virtual environment, activate it with the command for your operating system. On Windows, run the command below; on macOS or Linux, run `source my_env_name/bin/activate` instead.

```bash
my_env_name\Scripts\activate
```
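As a rough sketch of the checks in the steps above, the following Python snippet tests whether an interpreter meets the 3.8 minimum and builds the activation command for a given platform. The helper names `python_is_supported` and `activation_command` are illustrative and are not part of GVISION; the `bin/activate` path is the standard `venv` layout on macOS and Linux.

```python
import os
import sys
from pathlib import PurePosixPath, PureWindowsPath

# Minimum version taken from GVISION's stated requirement (Python 3.8+).
MIN_PYTHON = (3, 8)


def python_is_supported(version=None):
    """Return True if the (major, minor, ...) version is 3.8 or newer."""
    version = sys.version_info if version is None else version
    return tuple(version[:2]) >= MIN_PYTHON


def activation_command(env_dir, os_name=None):
    """Build the shell command that activates a venv created at env_dir."""
    os_name = os.name if os_name is None else os_name
    if os_name == "nt":  # Windows: the activation script lives in Scripts\
        return str(PureWindowsPath(env_dir) / "Scripts" / "activate")
    # macOS / Linux: the activation script lives in bin/ and must be sourced
    return "source " + str(PurePosixPath(env_dir) / "bin" / "activate")


if __name__ == "__main__":
    print("Python supported:", python_is_supported())
    print("Activate with:", activation_command("my_env_name"))
```

Passing `os_name` explicitly makes it possible to print the command for a platform other than the one the script runs on.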

# Installation 🛠️

1. **Install GVISION**:

Install GVISION with pip inside the environment you just activated:

```bash
pip install gvision
```

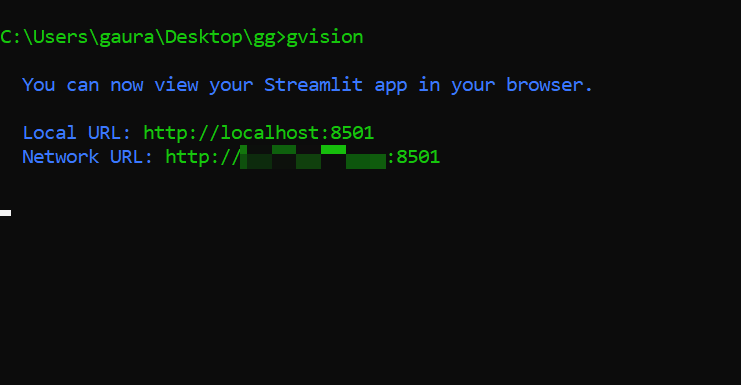

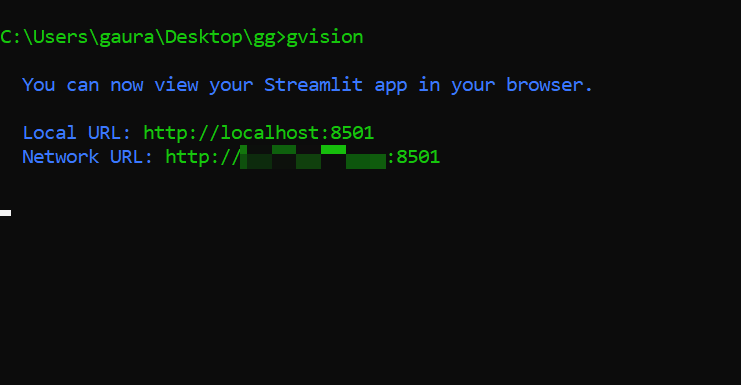
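To confirm that the install landed in the active environment, a small check along these lines may help. It relies only on the standard-library `importlib.metadata` module (available from Python 3.8); the function name `installed_version` is a hypothetical helper, not something GVISION provides.

```python
from importlib import metadata


def installed_version(distribution="gvision"):
    """Return the installed version string, or None if it is not installed."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version()
    if version is None:
        print("gvision is not installed in this environment")
    else:
        print(f"gvision {version} is installed")
```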

# Global CLI

2. **Run GVISION**: Launch the GVISION application from the command line.

```bash
gvision
```
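If the `gvision` command is reported as not found, the usual cause is that the environment it was installed into is not active. The sketch below uses the standard-library `shutil.which` to show where the command resolves on the current `PATH`; `find_cli` is an illustrative name.

```python
import shutil


def find_cli(command="gvision"):
    """Return the full path of the command on PATH, or None if it is missing."""
    return shutil.which(command)


if __name__ == "__main__":
    path = find_cli()
    if path is None:
        print("gvision not found on PATH; activate the environment and retry")
    else:
        print("gvision resolves to", path)
```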

## UI

3. **Import Your Data**: Use the Roboflow integration to import datasets and preprocess your data.

4. **Train Your Model**: Use Ultralytics to train your custom models.

5. **Deploy Your Model**: Showcase your trained models with the Streamlit deployment demo for interactive visualization.
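GVISION performs these steps through its UI, and this README does not show how it calls Ultralytics internally. For readers curious about what the training step corresponds to in code, here is a minimal sketch of direct Ultralytics YOLOv8 usage. The helper names, the default `epochs` and `imgsz` values, and the `data` argument are assumptions for illustration only; the checkpoint names follow the naming Ultralytics uses for its pretrained YOLOv8 weights.

```python
# Suffixes of the pretrained YOLOv8 checkpoints published by Ultralytics, per task.
TASK_SUFFIX = {
    "detect": "",
    "segment": "-seg",
    "classify": "-cls",
    "pose": "-pose",
}


def weights_for_task(task, size="n"):
    """Return the pretrained checkpoint name, e.g. 'yolov8n-seg.pt'."""
    if task not in TASK_SUFFIX:
        raise ValueError(f"unsupported task: {task!r}")
    return f"yolov8{size}{TASK_SUFFIX[task]}.pt"


def train(task, data, epochs=50, imgsz=640):
    """Fine-tune a pretrained YOLOv8 model (requires `pip install ultralytics`)."""
    # Imported lazily so weights_for_task works without ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(weights_for_task(task))
    # `data` is a dataset YAML for detect/segment/pose, or a dataset folder for classify.
    return model.train(data=data, epochs=epochs, imgsz=imgsz)


if __name__ == "__main__":
    for task in TASK_SUFFIX:
        print(task, "->", weights_for_task(task))
```

Calling `train("detect", "data.yaml")` would start a training run, where `data.yaml` stands in for the dataset file that a Roboflow export in YOLOv8 format provides.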

## Documentation 📚

For detailed instructions on how to use GVISION, check out the [Documentation](https://github.com/gaurang157/gvision#).

## License 📝

GVISION is licensed under the [MIT License](https://opensource.org/licenses/MIT).

## Contributing 🤝

We welcome contributions from the community! If you have any feature requests, bug reports, or ideas for improvement, please [open an issue](https://github.com/gaurang157/gvision/issues) or submit a [pull request](https://github.com/gaurang157/gvision/pulls).

## GVISION 1.0.4 Release Notes 🚀

I am thrilled to announce the official release of GVISION Version 1.0.4! This milestone marks a significant advancement, bringing a host of exciting enhancements to streamline your experience.

This release fixes a bug that occurred when switching to the deployment demo on macOS.

### Improved Features:

- **Real-Time Inference Results:** Experience enhanced real-time streaming predictions directly from deployment.

Version 1.0.4 represents a culmination of efforts to provide a seamless and efficient solution for your vision tasks. I'm excited to deliver these enhancements and look forward to your continued feedback.

## Upcoming in GVISION 1.1.0 🚀

Looking ahead, I'm thrilled to provide a glimpse of what's to come in GVISION Version 1.1.0. The next release will introduce a groundbreaking feature:

- **Custom Pre-Trained Model Transfer Learning:** With GVISION 1.1.0, you'll be able to use your custom pre-trained models for transfer learning, so you can further tailor and refine your models to suit your specific needs.

Stay tuned for updates as we gear up for the launch of GVISION 1.1.0!

## Support 💌

For any questions, feedback, or support requests, please contact us at gaurang.ingle@gmail.com.
