<div align="center">

<img src="https://raw.githubusercontent.com/hezarai/hezar/main/hezar.png"/>

</div>

<div align="center"> The all-in-one AI library for Persian </div> <br>

<div align="center">

[Downloads](https://pepy.tech/project/hezar)

<br>

[Hugging Face Hub](https://huggingface.co/hezarai)

[Telegram](https://t.me/hezarai)

[Daramet](https://daramet.com/hezarai)

</div>

**Hezar** (meaning **_thousand_** in Persian) is a multipurpose AI library built to make AI easy for the Persian community!

Hezar is a library that:

- brings together all the best works in AI for Persian

- makes using AI models as easy as a couple of lines of code

- seamlessly integrates with Hugging Face Hub for all of its models

- has a highly developer-friendly interface

- has a task-based model interface that is more convenient for general users

- is packed with additional tools like word embeddings, tokenizers, feature extractors, etc.

- comes with a lot of supplementary ML tools for deployment, benchmarking, optimization, etc.

- and more!

## Installation

Hezar is available on PyPI and can be installed with pip (**Python 3.10 and later**):

```

pip install hezar

```

Because Hezar is a collection of models and tools, it comes in several installation variants:

```

pip install "hezar[all]" # For a full installation

pip install "hezar[nlp]" # For NLP

pip install "hezar[vision]" # For computer vision models

pip install "hezar[audio]" # For audio and speech

pip install "hezar[embeddings]" # For word embedding models

```

You can also install the latest version from source:

```

git clone https://github.com/hezarai/hezar.git

pip install ./hezar

```

## Documentation

Learn more about Hezar on the [docs](https://hezarai.github.io/hezar/index.html) page, or jump straight to the key concepts:

- [Getting Started](https://hezarai.github.io/hezar/get_started/overview.html)

- [Quick Tour](https://hezarai.github.io/hezar/get_started/quick_tour.html)

- [Tutorials](https://hezarai.github.io/hezar/tutorial/models.html)

- [Developer Guides](https://hezarai.github.io/hezar/guide/hezar_architecture.html)

- [Contribution](https://hezarai.github.io/hezar/contributing.html)

- [Reference API](https://hezarai.github.io/hezar/source/index.html)

## Quick Tour

### Models

There are plenty of ready-to-use trained models for different tasks on the Hub!

**🤗Hugging Face Hub Page**: [https://huggingface.co/hezarai](https://huggingface.co/hezarai)

Let's walk you through some examples!

- **Text Classification (sentiment analysis, categorization, etc.)**

```python

from hezar.models import Model

example = ["هزار، کتابخانه‌ای کامل برای به کارگیری آسان هوش مصنوعی"]

model = Model.load("hezarai/bert-fa-sentiment-dksf")

outputs = model.predict(example)

print(outputs)

```

```

[[{'label': 'positive', 'score': 0.812910258769989}]]

```
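The output above is a nested list with one inner list of predictions per input text. As a minimal sketch, assuming exactly that structure, the best label for each input can be picked out as follows. The helper `top_labels` is hypothetical and not part of Hezar, and the sample data is copied from the output above rather than produced by a live model call.

```python
def top_labels(texts, outputs):
    """Pair each input text with its highest-scoring predicted label."""
    results = []
    for text, predictions in zip(texts, outputs):
        # Each inner list holds dicts with "label" and "score" keys
        best = max(predictions, key=lambda p: p["score"])
        results.append((text, best["label"], best["score"]))
    return results


# Sample data in the format shown above (not a live model call)
sample_texts = ["example text"]
sample_outputs = [[{"label": "positive", "score": 0.812910258769989}]]

for text, label, score in top_labels(sample_texts, sample_outputs):
    print(f"{label} ({score:.2f}): {text}")
```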

- **Sequence Labeling (POS, NER, etc.)**

```python

from hezar.models import Model

pos_model = Model.load("hezarai/bert-fa-pos-lscp-500k") # Part-of-speech

ner_model = Model.load("hezarai/bert-fa-ner-arman") # Named entity recognition

inputs = ["شرکت هوش مصنوعی هزار"]

pos_outputs = pos_model.predict(inputs)

ner_outputs = ner_model.predict(inputs)

print(f"POS: {pos_outputs}")

print(f"NER: {ner_outputs}")

```

```

POS: [[{'token': 'شرکت', 'label': 'Ne'}, {'token': 'هوش', 'label': 'Ne'}, {'token': 'مصنوعی', 'label': 'AJe'}, {'token': 'هزار', 'label': 'NUM'}]]

NER: [[{'token': 'شرکت', 'label': 'B-org'}, {'token': 'هوش', 'label': 'I-org'}, {'token': 'مصنوعی', 'label': 'I-org'}, {'token': 'هزار', 'label': 'I-org'}]]

```
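The NER output above tags each token separately with `B-` (begin) and `I-` (inside) prefixes. A minimal sketch for merging such tokens into whole entities is shown below. The helper `group_entities` is hypothetical and not part of Hezar, and it assumes that any label without a `B-` or `I-` prefix (such as the conventional `O` tag, which does not appear in the output above) marks a token outside any entity.

```python
def group_entities(tagged_tokens):
    """Merge B-/I- tagged tokens into (entity_text, entity_type) spans."""
    entities = []
    current_tokens, current_type = [], None
    for item in tagged_tokens:
        prefix, _, entity_type = item["label"].partition("-")
        if prefix == "B" or (prefix == "I" and entity_type != current_type):
            # A new entity starts: flush the previous one, if any
            if current_tokens:
                entities.append((" ".join(current_tokens), current_type))
            current_tokens, current_type = [item["token"]], entity_type
        elif prefix == "I":
            # Continuation of the entity that is currently open
            current_tokens.append(item["token"])
        else:
            # Assumed "outside" label: close the open entity, if any
            if current_tokens:
                entities.append((" ".join(current_tokens), current_type))
            current_tokens, current_type = [], None
    if current_tokens:
        entities.append((" ".join(current_tokens), current_type))
    return entities


# One sentence in the format of `ner_outputs[0]` (placeholder tokens)
sentence = [
    {"token": "Hezar", "label": "B-org"},
    {"token": "AI", "label": "I-org"},
    {"token": "in", "label": "O"},
    {"token": "Tehran", "label": "B-loc"},
]
print(group_entities(sentence))
```

Applied to the real output above, this would be called once per sentence, e.g. `group_entities(ner_outputs[0])`.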

- **Mask Filling**

```python

from hezar.models import Model

model = Model.load("hezarai/roberta-fa-mask-filling")

inputs = ["سلام بچه ها حالتون <mask>"]

outputs = model.predict(inputs, top_k=1)

print(outputs)

```

```

[[{'token': 'چطوره', 'sequence': 'سلام بچه ها حالتون چطوره', 'token_id': 34505, 'score': 0.2230483442544937}]]

```

- **Speech Recognition**

```python

from hezar.models import Model

model = Model.load("hezarai/whisper-small-fa")

transcripts = model.predict("examples/assets/speech_example.mp3")

print(transcripts)

```

```

[{'text': 'و این تنها محدود به محیط کار نیست'}]

```

- **Text Detection (Pre-OCR)**

```python

from hezar.models import Model

from hezar.utils import load_image, draw_boxes, show_image

model = Model.load("hezarai/CRAFT")

image = load_image("../assets/text_detection_example.png")

outputs = model.predict(image)

result_image = draw_boxes(image, outputs[0]["boxes"])

show_image(result_image, "result")

```

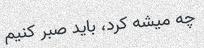

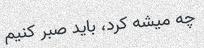

- **Image to Text (OCR)**

```python

from hezar.models import Model

# OCR with CRNN

model = Model.load("hezarai/crnn-fa-printed-96-long")

texts = model.predict("examples/assets/ocr_example.jpg")

print(f"CRNN Output: {texts}")

```

```

CRNN Output: [{'text': 'چه میشه کرد، باید صبر کنیم'}]

```

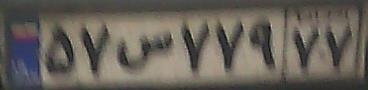

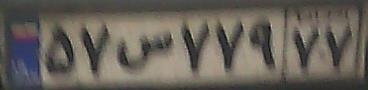

- **Image to Text (License Plate Recognition)**

```python

from hezar.models import Model

model = Model.load("hezarai/crnn-fa-license-plate-recognition-v2")

plate_text = model.predict("assets/license_plate_ocr_example.jpg")

print(plate_text) # Persian text of mixed numbers and characters might not show correctly in the console

```

```

[{'text': '۵۷س۷۷۹۷۷'}]

```
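Since mixed Persian digits and letters may render in an unexpected order in some consoles, it can help to convert the digits to ASCII before logging or comparing plate texts. The following is a minimal sketch using only the Python standard library; the helper `normalize_digits` is hypothetical and not part of Hezar. The plate string is written with Unicode escapes so that it is unaffected by bidirectional rendering.

```python
# Map Persian digits (U+06F0..U+06F9) to the ASCII digits 0-9
PERSIAN_DIGITS = "".join(chr(0x06F0 + i) for i in range(10))
PERSIAN_TO_ASCII = str.maketrans(PERSIAN_DIGITS, "0123456789")


def normalize_digits(text):
    """Replace Persian digits with ASCII digits, leaving letters untouched."""
    return text.translate(PERSIAN_TO_ASCII)


# The plate text from the output above, written as escapes
plate = "\u06f5\u06f7\u0633\u06f7\u06f7\u06f9\u06f7\u06f7"
print(normalize_digits(plate))
```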

- **Image to Text (Image Captioning)**

```python

from hezar.models import Model

model = Model.load("hezarai/vit-roberta-fa-image-captioning-flickr30k")

texts = model.predict("examples/assets/image_captioning_example.jpg")

print(texts)

```

```

[{'text': 'سگی با توپ تنیس در دهانش می دود.'}]

```

We are constantly adding and training new models, so this section should keep growing over time ;)

### Word Embeddings

- **FastText**

```python

from hezar.embeddings import Embedding

fasttext = Embedding.load("hezarai/fasttext-fa-300")

most_similar = fasttext.most_similar("هزار")

print(most_similar)

```

```

[{'score': 0.7579, 'word': 'میلیون'},

{'score': 0.6943, 'word': '21هزار'},

{'score': 0.6861, 'word': 'میلیارد'},

{'score': 0.6825, 'word': '26هزار'},

{'score': 0.6803, 'word': '٣هزار'}]

```

- **Word2Vec (Skip-gram)**

```python

from hezar.embeddings import Embedding

word2vec = Embedding.load("hezarai/word2vec-skipgram-fa-wikipedia")

most_similar = word2vec.most_similar("هزار")

print(most_similar)

```

```

[{'score': 0.7885, 'word': 'چهارهزار'},

{'score': 0.7788, 'word': '۱۰هزار'},

{'score': 0.7727, 'word': 'دویست'},

{'score': 0.7679, 'word': 'میلیون'},

{'score': 0.7602, 'word': 'پانصد'}]

```

- **Word2Vec (CBOW)**

```python

from hezar.embeddings import Embedding

word2vec = Embedding.load("hezarai/word2vec-cbow-fa-wikipedia")

most_similar = word2vec.most_similar("هزار")

print(most_similar)

```

```

[{'score': 0.7407, 'word': 'دویست'},

{'score': 0.7400, 'word': 'میلیون'},

{'score': 0.7326, 'word': 'صد'},

{'score': 0.7276, 'word': 'پانصد'},

{'score': 0.7011, 'word': 'سیصد'}]

```

For a full guide on the embeddings module, see the [embeddings tutorial](https://hezarai.github.io/hezar/tutorial/embeddings.html).
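Hezar's embeddings are built on Gensim, whose `most_similar` ranks words by cosine similarity, so the scores above can most likely be read that way. The sketch below illustrates the idea in plain Python under that assumption. The toy vectors are made up for illustration, and the functions are not Hezar's or Gensim's implementation.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(query, vectors, top_n=5):
    """Rank every other word by cosine similarity to the query word."""
    scores = [
        {"score": round(cosine_similarity(vectors[query], vec), 4), "word": word}
        for word, vec in vectors.items()
        if word != query
    ]
    return sorted(scores, key=lambda s: s["score"], reverse=True)[:top_n]


# Made-up 2-dimensional vectors; real embeddings have hundreds of dimensions
toy_vectors = {
    "thousand": [1.0, 0.0],
    "million": [1.0, 1.0],
    "cat": [0.0, 1.0],
}
print(most_similar("thousand", toy_vectors))
```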

### Datasets

You can load any of the datasets on the [Hub](https://huggingface.co/hezarai) as shown below:

```python

from hezar.data import Dataset

# The `preprocessor` depends on what you want to do exactly later on. Below are just examples.

sentiment_dataset = Dataset.load("hezarai/sentiment-dksf", preprocessor="hezarai/bert-base-fa") # A TextClassificationDataset instance

lscp_dataset = Dataset.load("hezarai/lscp-pos-500k", preprocessor="hezarai/bert-base-fa") # A SequenceLabelingDataset instance

xlsum_dataset = Dataset.load("hezarai/xlsum-fa", preprocessor="hezarai/t5-base-fa") # A TextSummarizationDataset instance

alpr_ocr_dataset = Dataset.load("hezarai/persian-license-plate-v1", preprocessor="hezarai/crnn-fa-printed-96-long") # An OCRDataset instance

flickr30k_dataset = Dataset.load("hezarai/flickr30k-fa", preprocessor="hezarai/vit-roberta-fa-base") # An ImageCaptioningDataset instance

commonvoice_dataset = Dataset.load("hezarai/common-voice-13-fa", preprocessor="hezarai/whisper-small-fa") # A SpeechRecognitionDataset instance

...

```

The returned dataset objects from `load()` are PyTorch Dataset wrappers for specific tasks and can be used by a data loader out-of-the-box!

You can also load Hezar's datasets using 🤗Datasets:

```python

from datasets import load_dataset

dataset = load_dataset("hezarai/sentiment-dksf")

```

For a full guide on Hezar's datasets, see the [datasets tutorial](https://hezarai.github.io/hezar/tutorial/datasets.html).

### Training

Hezar makes it super easy to train models using out-of-the-box models and datasets provided in the library.

```python

from hezar.models import BertSequenceLabeling, BertSequenceLabelingConfig

from hezar.data import Dataset

from hezar.trainer import Trainer, TrainerConfig

from hezar.preprocessors import Preprocessor

base_model_path = "hezarai/bert-base-fa"

dataset_path = "hezarai/lscp-pos-500k"

train_dataset = Dataset.load(dataset_path, split="train", tokenizer_path=base_model_path)

eval_dataset = Dataset.load(dataset_path, split="test", tokenizer_path=base_model_path)

model = BertSequenceLabeling(BertSequenceLabelingConfig(id2label=train_dataset.config.id2label))

preprocessor = Preprocessor.load(base_model_path)

train_config = TrainerConfig(
    output_dir="bert-fa-pos-lscp-500k",
    task="sequence_labeling",
    device="cuda",
    init_weights_from=base_model_path,
    batch_size=8,
    num_epochs=5,
    metrics=["seqeval"],
)

trainer = Trainer(
    config=train_config,
    model=model,
    train_dataset=train_dataset,
    eval_dataset=eval_dataset,
    data_collator=train_dataset.data_collator,
    preprocessor=preprocessor,
)

trainer.train()

trainer.push_to_hub("bert-fa-pos-lscp-500k") # push model, config, preprocessor, trainer files and configs

```

The Trainer can do much more than this. See the [training tutorial](https://hezarai.github.io/hezar/tutorial/training/index.html) for details.

## Offline Mode

Hezar hosts everything on [the Hugging Face Hub](https://huggingface.co/hezarai). When you use the `.load()` method for a model, dataset, etc., the asset is downloaded and saved in the cache (at `~/.cache/hezar`), so the next time you load the same asset, the cached version is used, which works even when offline. If you want to export assets more explicitly, you can use the `.save()` method to save anything to any local path.

```python

from hezar.models import Model

# Load the online model

model = Model.load("hezarai/bert-fa-ner-arman")

# Save the model locally

save_path = "./weights/bert-fa-ner-arman"

model.save(save_path) # The weights, config, preprocessors, etc. are saved at `./weights/bert-fa-ner-arman`

# Now you can load the saved model

local_model = Model.load(save_path)

```

Moreover, any class that has `.load()` and `.save()` can be treated the same way.

## Going Deeper

Hezar's primary focus is on providing ready-to-use models (implementations & pretrained weights) for different common tasks, not by reinventing the wheel, but by building on top of **[PyTorch](https://github.com/pytorch/pytorch), 🤗[Transformers](https://github.com/huggingface/transformers), 🤗[Tokenizers](https://github.com/huggingface/tokenizers), 🤗[Datasets](https://github.com/huggingface/datasets), [Scikit-learn](https://github.com/scikit-learn/scikit-learn), [Gensim](https://github.com/RaRe-Technologies/gensim),** etc. Besides, it's deeply integrated with the **🤗[Hugging Face Hub](https://github.com/huggingface/huggingface_hub)**, and almost any module (e.g., models, datasets, preprocessors, trainers) can be uploaded to or downloaded from the Hub!

More specifically, here's a simple summary of the core modules in Hezar:

- **Models**: Every model is a `hezar.models.Model` instance, which is in fact a PyTorch `nn.Module` wrapper with extra features for saving, loading, exporting, etc.

- **Datasets**: Every dataset is a `hezar.data.Dataset` instance, a task-specific PyTorch Dataset that can load its data files from the Hugging Face Hub.

- **Preprocessors**: All preprocessors are preferably backed by a robust library like Tokenizers, Pillow, etc.

- **Embeddings**: All embeddings are built on top of Gensim and can be loaded from the Hub and used in just 2 lines of code!

- **Trainer**: Trainer is the base class for training almost any model in Hezar, or even your own custom models backed by Hezar. The Trainer comes with a lot of features and is also exportable to the Hub!

- **Metrics**: Metrics are configurable and portable modules backed by Scikit-learn, seqeval, etc. that can be easily used in the trainers!

For more info, check the [tutorials](https://hezarai.github.io/hezar/tutorial/).

## Contribution

Maintaining Hezar is no cakewalk with just a few of us on board. The concept might not be groundbreaking, but putting it into action was a real challenge and that's why Hezar stands as the biggest Persian open source project of its kind!

Any contribution, big or small, would mean a lot to us. So, if you're interested, let's team up and make Hezar even better together! ❤️

Don't forget to check out our contribution guidelines in [CONTRIBUTING.md](CONTRIBUTING.md) before diving in. Your support is much appreciated!

## Contact

We strongly recommend submitting any issues or questions in the repository's issues or discussions sections, but if you need direct contact, here it is:

- [arxyzan@gmail.com](mailto:arxyzan@gmail.com)

- Telegram: [@arxyzan](https://t.me/arxyzan)

## Citation

If you find this project useful in your work or research, please cite it using this BibTeX entry:

```bibtex

@misc{hezar2023,
  title = {Hezar: The all-in-one AI library for Persian},
  author = {Aryan Shekarlaban and Pooya Mohammadi Kazaj},
  publisher = {GitHub},
  howpublished = {\url{https://github.com/hezarai/hezar}},
  year = {2023}
}

```

Raw data

{

"_id": null,

"home_page": "https://github.com/hezarai",

"name": "hezar",

"maintainer": "Aryan Shekarlaban",

"docs_url": null,

"requires_python": ">=3.10.0",

"maintainer_email": "arxyzan@gmail.com",

"keywords": "packaging, poetry",

"author": "Aryan Shekarlaban",

"author_email": "arxyzan@gmail.com",

"download_url": "https://files.pythonhosted.org/packages/83/2b/703bb0342dca83e8ac48dd444700d8a19bf5ef13db478cbe33640b465543/hezar-0.42.0.tar.gz",

"platform": null,

"bugtrack_url": null,

"license": "Apache-2.0",

"summary": "Hezar: The all-in-one AI library for Persian, supporting a wide variety of tasks and modalities!",

"version": "0.42.0",

"project_urls": {

"Documentation": "https://hezarai.github.io/hezar/",

"Homepage": "https://github.com/hezarai",

"Repository": "https://github.com/hezarai/hezar"

},

"split_keywords": [

"packaging",

" poetry"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "1333b613ae99a4e59ac2200aca085e03f95b66ec5cc45b9c9020921ed3669074",

"md5": "7e91beed7d3ee1aed5f5d925c7f8b673",

"sha256": "6e65c5689c7ad101d52a15061887fedd17db923fac4345c8e89f7d192c823d0b"

},

"downloads": -1,

"filename": "hezar-0.42.0-py3-none-any.whl",

"has_sig": false,

"md5_digest": "7e91beed7d3ee1aed5f5d925c7f8b673",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.10.0",

"size": 195244,

"upload_time": "2024-11-19T18:23:30",

"upload_time_iso_8601": "2024-11-19T18:23:30.132978Z",

"url": "https://files.pythonhosted.org/packages/13/33/b613ae99a4e59ac2200aca085e03f95b66ec5cc45b9c9020921ed3669074/hezar-0.42.0-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "832b703bb0342dca83e8ac48dd444700d8a19bf5ef13db478cbe33640b465543",

"md5": "468137864feff8f30f398a866aaf0d73",

"sha256": "3c8a659ed95c2543358150c26481fa67a7a41295790ed13dc58880b2f2f7a80d"

},

"downloads": -1,

"filename": "hezar-0.42.0.tar.gz",

"has_sig": false,

"md5_digest": "468137864feff8f30f398a866aaf0d73",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.10.0",

"size": 128703,

"upload_time": "2024-11-19T18:23:31",

"upload_time_iso_8601": "2024-11-19T18:23:31.962889Z",

"url": "https://files.pythonhosted.org/packages/83/2b/703bb0342dca83e8ac48dd444700d8a19bf5ef13db478cbe33640b465543/hezar-0.42.0.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-11-19 18:23:31",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "hezarai",

"github_project": "hezar",

"travis_ci": false,

"coveralls": false,

"github_actions": true,

"lcname": "hezar"

}