| Field | Value |
| ----------------------- | ----------------------------- |
| Name | humanlayer |
| Version | 0.7.4 |
| home_page | None |
| Summary | humanlayer |
| upload_time | 2025-01-22 04:08:34 |
| maintainer | None |
| docs_url | None |
| author | None |
| requires_python | <4.0,>=3.10 |
| license | None |
| keywords | |
| VCS | |
| bugtrack_url | |
| requirements | No requirements were recorded. |
| Travis-CI | No Travis. |
| coveralls test coverage | No coveralls. |


**HumanLayer**: A python toolkit to enable AI agents to communicate with humans in tool-based and asynchronous workflows. By incorporating humans-in-the-loop, agentic tools can be given access to much more powerful and meaningful tool calls and tasks.

Bring your LLM (OpenAI, Llama, Claude, etc) and Framework (LangChain, CrewAI, etc) and start giving your AI agents safe access to the world.

<div align="center">

<h3>

[Homepage](https://www.humanlayer.dev/) | [Get Started](https://humanlayer.dev/docs/quickstart-python) | [Discord](https://humanlayer.dev/discord)

</h3>

[](https://github.com/humanlayer/humanlayer)

[](https://opensource.org/licenses/Apache-2)

[](https://pypi.org/project/humanlayer/)

[](https://www.npmjs.com/package/humanlayer)

<img referrerpolicy="no-referrer-when-downgrade" src="https://static.scarf.sh/a.png?x-pxid=fcfc0926-d841-47fb-b8a6-6aba3a6c3228" />

</div>

## Table of contents

- [Getting Started](#getting-started)

- [Why HumanLayer?](#why-humanlayer)

- [Key Features](#key-features)

- [Examples](#examples)

- [Roadmap](#roadmap)

- [Contributing](#contributing)

- [License](#license)

## Getting Started

To get started, check out [Getting Started](https://humanlayer.dev/docs/quickstart-python), watch the [Getting Started Video](https://www.loom.com/share/7c65d48d18d1421a864a1591ff37e2bf), or jump straight into one of the [Examples](./examples/):

- 🦜⛓️ [LangChain](./examples/langchain/)

- 🚣 [CrewAI](./examples/crewai/)

- 🦾 [ControlFlow](./examples/controlflow/)

- 🧠 [Raw OpenAI Client](./examples/openai_client/)

<div align="center">

<a target="_blank" href="https://youtu.be/5sbN8rh_S5Q"><img width="60%" alt="video thumbnail showing editor" src="https://www.humanlayer.dev/video-thumb.png"></a>

</div>

## Example

HumanLayer supports both Python and TypeScript / JavaScript. For Python, install with pip:

```shell

pip install humanlayer

```

```python
from humanlayer import HumanLayer

hl = HumanLayer()


@hl.require_approval()
def send_email(to: str, subject: str, body: str):
    """Send an email to the customer"""
    ...


# made up function, use whatever
# tool-calling framework you prefer
run_llm_task(
    prompt="""Send an email welcoming the customer to
    the platform and encouraging them to invite a team member.""",
    tools=[send_email],
    llm="gpt-4o",
)
```

<div align="center"><img style="width: 400px" alt="A screenshot of slack showing a human replying to the bot" src="https://www.humanlayer.dev/hl-slack-oct11.png"></div>

For TypeScript, install with npm:

```shell
npm install humanlayer
```

You can find more Python and TypeScript examples in the [framework specific examples](./examples), or follow the [Getting Started Guides](https://humanlayer.dev/docs/frameworks) to get hands on.

#### Human as Tool

You can also use `hl.human_as_tool()` to bring a human into the loop for any reason. This can be useful for debugging, asking for advice, or just getting a human's opinion on something.

```python
# human_as_tool.py

from humanlayer import HumanLayer

hl = HumanLayer()
contact_a_human = hl.human_as_tool()


def send_email(to: str, subject: str, body: str):
    """Send an email to the customer"""
    ...


# made up method, use whatever
# framework you prefer
run_llm_task(
    prompt="""Send an email welcoming the customer to
    the platform and encouraging them to invite a team member.

    Contact a human for collaboration and feedback on your email
    draft
    """,
    tools=[send_email, contact_a_human],
    llm="gpt-4o",
)
```

See the [examples](./examples) for more advanced human as tool examples, and workflows that combine both concepts.
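To make the idea concrete, here is a minimal plain-Python sketch of what a "human as tool" callable could look like. It is not HumanLayer's implementation: `make_human_tool` and the `ask` transport are hypothetical names introduced for illustration, and the transport is replaced by a canned reply so the snippet runs without Slack or email.

```python
from typing import Callable


def make_human_tool(ask: Callable[[str], str]) -> Callable[[str], str]:
    """Build a tool the LLM can call to ask a human a question.

    `ask` is a hypothetical transport (Slack, email, a terminal prompt)
    that delivers the message and blocks until the human replies.
    """

    def contact_a_human(message: str) -> str:
        """Ask a human for help and return their reply."""
        reply = ask(message)
        # Give the LLM something explicit to read when the reply is blank.
        return reply.strip() or "(the human sent an empty reply)"

    return contact_a_human


# Stand-in transport for local experiments: a canned reply instead of Slack.
contact_a_human = make_human_tool(lambda message: "Looks good, but shorten the intro. ")
print(contact_a_human("Can you review this welcome email draft?"))
```

Because the tool is an ordinary function with a docstring, it can be passed in a `tools=[...]` list like any other tool.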

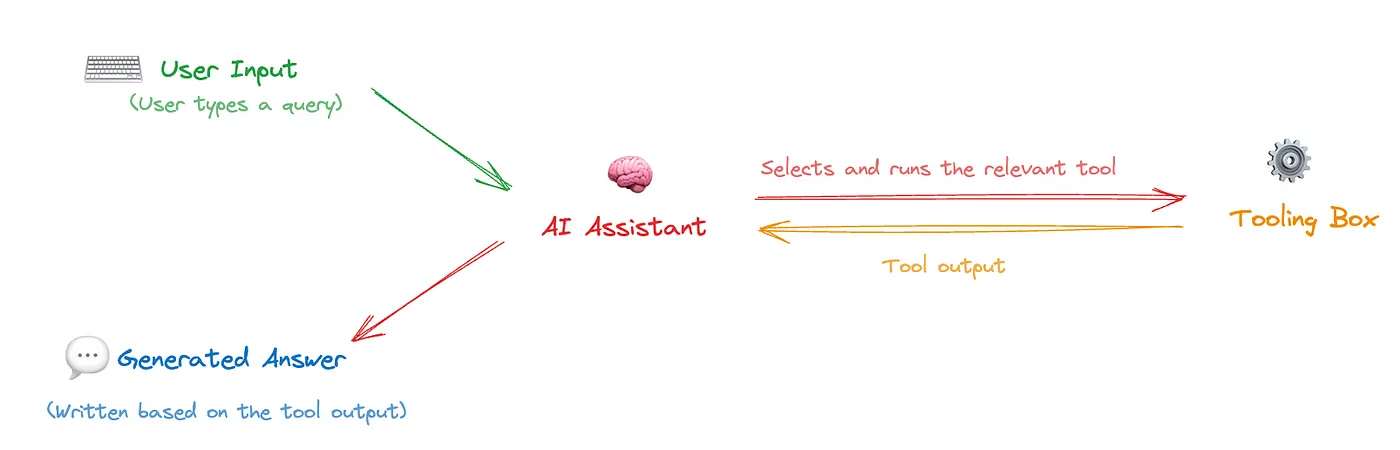

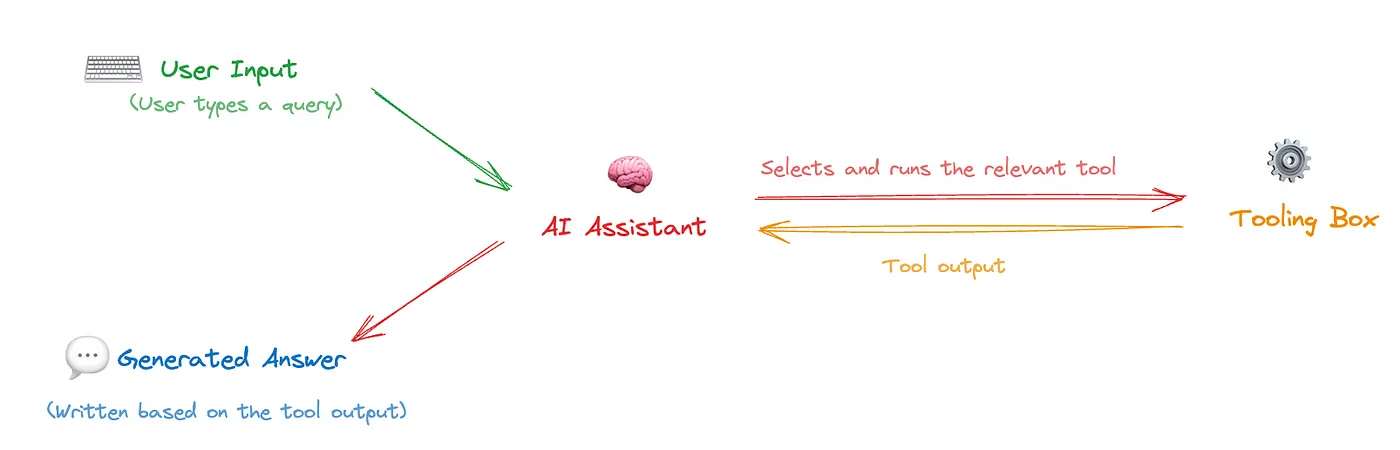

## Why HumanLayer?

Functions and tools are a key part of [Agentic Workflows](https://www.deeplearning.ai/the-batch/how-agents-can-improve-llm-performance). They enable LLMs to interact meaningfully with the outside world and automate broad scopes of impactful work. Correct and accurate function calling is essential for AI agents that do meaningful things like book appointments, interact with customers, manage billing information, write+execute code, and more.

[](https://louis-dupont.medium.com/transforming-software-interactions-with-tool-calling-and-llms-dc39185247e9)

_From https://louis-dupont.medium.com/transforming-software-interactions-with-tool-calling-and-llms-dc39185247e9_

**However**, the most useful functions we can give to an LLM are also the most risky. We can all imagine the value of an AI Database Administrator that constantly tunes and refactors our SQL database, but most teams wouldn't give an LLM access to run arbitrary SQL statements against a production database (heck, we mostly don't even let humans do that). That is:

<div align="center">

<h3><blockquote>Even with state-of-the-art agentic reasoning and prompt routing, LLMs are not sufficiently reliable to be given access to high-stakes functions without human oversight</blockquote></h3>

</div>

To better define what is meant by "high stakes", here are some examples at each level:

- **Low Stakes**: Read Access to public data (e.g. search Wikipedia, access public APIs and datasets)

- **Low Stakes**: Communicate with agent author (e.g. an engineer might empower an agent to send them a private Slack message with updates on progress)

- **Medium Stakes**: Read Access to Private Data (e.g. read emails, access calendars, query a CRM)

- **Medium Stakes**: Communicate with strict rules (e.g. sending based on a specific sequence of hard-coded email templates)

- **High Stakes**: Communicate on my Behalf or on behalf of my Company (e.g. send emails, post to Slack, publish social/blog content)

- **High Stakes**: Write Access to Private Data (e.g. update CRM records, modify feature toggles, update billing information)

<div align="center"><img style="width: 600px" alt="Image showing the levels of function stakes stacked on top of one another" src="./docs/images/function_stakes.png"></div>
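One way to act on these levels is to record a stakes value per tool and gate on a threshold. The sketch below is illustrative only: the `Stakes` enum, the tool names, and `needs_human_approval` are assumptions made up for this example and are not part of the HumanLayer SDK.

```python
from enum import IntEnum


class Stakes(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical tool names, one per category from the list above.
TOOL_STAKES = {
    "search_wikipedia": Stakes.LOW,
    "message_agent_author": Stakes.LOW,
    "read_crm": Stakes.MEDIUM,
    "send_templated_email": Stakes.MEDIUM,
    "send_email": Stakes.HIGH,
    "update_billing": Stakes.HIGH,
}


def needs_human_approval(tool_name: str, threshold: Stakes = Stakes.HIGH) -> bool:
    """Return True when a tool is at or above the approval threshold."""
    # Tools missing from the table are treated as high stakes, so
    # forgetting to classify a tool fails safe.
    return TOOL_STAKES.get(tool_name, Stakes.HIGH) >= threshold


print(needs_human_approval("search_wikipedia"))
print(needs_human_approval("update_billing"))
```

Lowering `threshold` to `Stakes.MEDIUM` would also put the private-data reads behind a human.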

The high stakes functions are the ones that are the most valuable and promise the most impact in automating away human workflows. But they are also the ones where "90% accuracy" is not acceptable. Reliability is further impacted by today's LLMs' tendency to hallucinate or craft low-quality text that is clearly AI generated. The sooner teams can get Agents reliably and safely calling these tools with high-quality inputs, the sooner they can reap massive benefits.

HumanLayer provides a set of tools to _deterministically_ guarantee human oversight of high stakes function calls. Even if the LLM makes a mistake or hallucinates, HumanLayer is baked into the tool/function itself, guaranteeing a human in the loop.
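The "baked into the function" idea can be sketched with an ordinary Python decorator. This is an assumption-laden illustration, not the SDK's code: `require_approval_sketch`, the `Approver` callback, and the denial message format are all hypothetical, and a real approver would contact a human over a channel such as Slack instead of applying a rule.

```python
import functools
from typing import Callable, Optional

# An approver receives the function name and its keyword arguments and
# returns None to approve, or a feedback string to deny.
Approver = Callable[[str, dict], Optional[str]]


def require_approval_sketch(approver: Approver):
    """Wrap a tool so it cannot run unless the approver says yes."""

    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(**kwargs):  # keyword-only calls, to keep the sketch short
            feedback = approver(fn.__name__, kwargs)
            if feedback is not None:
                # The wrapped function never runs; the LLM sees the feedback.
                return f"User denied {fn.__name__} with feedback: {feedback}"
            return fn(**kwargs)

        return wrapper

    return decorator


sent = []  # records which emails were actually "sent"


def deny_external(name: str, kwargs: dict) -> Optional[str]:
    # Stand-in for a human reviewer: only example.com recipients pass.
    if not kwargs["to"].endswith("@example.com"):
        return "only email addresses at example.com"
    return None


@require_approval_sketch(deny_external)
def send_email(to: str, subject: str, body: str) -> str:
    """Send an email to the customer"""
    sent.append(to)
    return f"sent to {to}"


print(send_email(to="a@example.com", subject="Hi", body="Welcome"))
print(send_email(to="b@other.org", subject="Hi", body="Welcome"))
```

The check lives in the wrapper rather than in the prompt, so no LLM output can skip it: a denied call returns feedback and the function body never executes.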

<div align="center"><img style="width: 400px" alt="HumanLayer @require_approval decorator wrapping the Communicate on my behalf function" src="./docs/images/humanlayer_require_approval.png"></div>

<div align="center">

<h3><blockquote>

HumanLayer provides a set of tools to *deterministically* guarantee human oversight of high stakes function calls

</blockquote></h3>

</div>

### The Future: Autonomous Agents and the "Outer Loop"

_Read More: [OpenAI's RealTime API is a step towards outer-loop agents](https://theouterloop.substack.com/p/openais-realtime-api-is-a-step-towards)_

Between `require_approval` and `human_as_tool`, HumanLayer is built to empower the next generation of AI agents, Autonomous Agents, but it's just one piece of the puzzle. To clarify "next generation", we can briefly summarize the history of LLM applications.

- **Gen 1**: Chat - human-initiated question / response interface

- **Gen 2**: Agentic Assistants - frameworks drive prompt routing, tool calling, chain of thought, and context window management to get much more reliability and functionality. Most workflows are initiated by humans in single-shot "here's a task, go do it" or rolling chat interfaces.

- **Gen 3**: Autonomous Agents - no longer human initiated, agents will live in the "outer loop" driving toward their goals using various tools and functions. Human/Agent communication is Agent-initiated rather than human-initiated.

Gen 3 autonomous agents will need ways to consult humans for input on various tasks. In order for these agents to perform actual useful work, they'll need human oversight for sensitive operations.

These agents will require ways to contact one or more humans across various channels including chat, email, sms, and more.

While early versions of these agents may technically be "human initiated" in that they get kicked off on a regular schedule by a cron job or similar, the best ones will be managing their own scheduling and costs. This will require toolkits for inspecting costs and something akin to `sleep_until`. They'll need to run in orchestration frameworks that can durably serialize and resume agent workflows across tool calls that might not return for hours or days. These frameworks will need to support context window management by a "manager LLM" and enable agents to fork sub-chains to handle specialized tasks and roles.
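A rough sketch of the "durably serialize and resume" requirement, under the assumption that a pending tool call can be stored as JSON: everything here (`PendingCall`, `checkpoint`, `resume`, the `wake_at` field standing in for `sleep_until`) is hypothetical and only illustrates the shape of the problem.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class PendingCall:
    call_id: str
    tool: str
    kwargs: dict
    wake_at: float  # Unix time before which the agent stays asleep
    response: Optional[str] = None  # filled in once a human answers


def checkpoint(call: PendingCall) -> str:
    """Serialize the pending call so the process can exit while waiting."""
    return json.dumps(asdict(call))


def resume(blob: str, now: float) -> Optional[PendingCall]:
    """Reload a checkpoint; return None if the agent should keep sleeping."""
    call = PendingCall(**json.loads(blob))
    if call.response is None and now < call.wake_at:
        return None
    return call


blob = checkpoint(
    PendingCall("c1", "send_email", {"to": "a@example.com"}, wake_at=1000.0)
)
print(resume(blob, now=500.0))        # too early and no answer yet
print(resume(blob, now=1500.0).tool)  # wake time reached
```

In practice the blob would go to a database or queue rather than a local variable, and a human response arriving early would set `response` and wake the workflow before `wake_at`.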

Example use cases for these outer loop agents include [the linkedin inbox assistant](./examples/langchain/04-human_as_tool_linkedin.py) and [the customer onboarding assistant](./examples/langchain/05-approvals_and_humans_composite.py), but that's really just scratching the surface.

## Key Features

- **Require Human Approval for Function Calls**: the `@hl.require_approval()` decorator blocks specific function calls until a human has been consulted - upon denial, feedback will be passed to the LLM

- **Human as Tool**: generic `hl.human_as_tool()` allows for contacting a human for answers, advice, or feedback

- **OmniChannel Contact**: Contact humans and collect responses across Slack, Email, Discord, and more

- **Granular Routing**: Route approvals to specific teams or individuals

- **Bring your own LLM + Framework**: Because HumanLayer is implemented at the tools layer, it supports any LLM and all major orchestration frameworks that support tool calling.

## Examples

You can test different real life examples of HumanLayer in the [examples folder](./examples/):

- 🦜⛓️ [LangChain Math](./examples/langchain/01-math_example.py)

- 🦜⛓️ [LangChain Human As Tool](./examples/langchain/03-human_as_tool.py)

- 🚣 [CrewAI Math](./examples/crewai/crewai_math.py)

- 🦾 [ControlFlow Math](./examples/controlflow/controlflow_math.py)

- 🧠 [Raw OpenAI Client](./examples/openai_client/01-math_example.py)

## Roadmap

| Feature | Status |
| ---------------------------------------------------------------------------------- | ------------------- |
| Require Approval | ⚙️ Beta |
| Human as Tool | ⚙️ Beta |
| CLI Approvals | ⚙️ Beta |
| CLI Human as Tool | ⚙️ Beta |
| Slack Approvals | ⚙️ Beta |
| Langchain Support | ⚙️ Beta |
| CrewAI Support | ⚙️ Beta |
| [GripTape Support](./examples/griptape) | ⚗️ Alpha |
| [GripTape Builtin Tools Support](./examples/griptape/02-decorate-existing-tool.py) | 🗓️ Planned |
| Controlflow Support | ⚗️ Alpha |
| Custom Response options | ⚗️ Alpha |
| Open Protocol for BYO server | 🗓️ Planned |
| Composite Contact Channels | 🚧 Work in progress |
| Async / Webhook support | 🗓️ Planned |
| SMS/RCS Approvals | 🗓️ Planned |
| Discord Approvals | 🗓️ Planned |
| Email Approvals | ⚙️ Beta |
| LlamaIndex Support | 🗓️ Planned |
| Haystack Support | 🗓️ Planned |

## Contributing

The HumanLayer SDK and docs are open-source and we welcome contributions in the form of issues, documentation, pull requests, and more. See [CONTRIBUTING.md](./CONTRIBUTING.md) for more details.

## Fun Stuff

[](https://star-history.com/#humanlayer/humanlayer&Date)

Shouts out to [@erquhart](https://github.com/erquhart) for this one

<div align="center">

<img width="360" src="https://github.com/user-attachments/assets/849a7149-daff-43a7-8ca9-427ccd0ae77c" />

</div>

## License

The HumanLayer SDK in this repo is licensed under the Apache 2 License.

Raw data

{

"_id": null,

"home_page": null,

"name": "humanlayer",

"maintainer": null,

"docs_url": null,

"requires_python": "<4.0,>=3.10",

"maintainer_email": null,

"keywords": null,

"author": null,

"author_email": "humanlayer authors <dexter@metalytics.dev>",

"download_url": "https://files.pythonhosted.org/packages/ab/83/4851aa14b0c8e3955ed3620f1b3de3b3c24bb4c61263fd1c76b2b517f0b9/humanlayer-0.7.4.tar.gz",

"platform": null,

"bugtrack_url": null,

"license": null,

"summary": "humanlayer",

"version": "0.7.4",

"project_urls": {

"repository": "https://github.com/humanlayer/humanlayer"

},

"split_keywords": [],

"urls": [

{

"comment_text": null,

"digests": {

"blake2b_256": "b12712777f10169602bb87563949e4808d71a81d58d3dc616a3047dc253f6711",

"md5": "053c8b21f3ab971004888bbd366ab85f",

"sha256": "30ee22fc52e644ff6e9b497172fcc8b4d70e6c9baef46e5f83d82ccef13dffba"

},

"downloads": -1,

"filename": "humanlayer-0.7.4-py3-none-any.whl",

"has_sig": false,

"md5_digest": "053c8b21f3ab971004888bbd366ab85f",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": "<4.0,>=3.10",

"size": 1173150,

"upload_time": "2025-01-22T04:08:32",

"upload_time_iso_8601": "2025-01-22T04:08:32.283592Z",

"url": "https://files.pythonhosted.org/packages/b1/27/12777f10169602bb87563949e4808d71a81d58d3dc616a3047dc253f6711/humanlayer-0.7.4-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": null,

"digests": {

"blake2b_256": "ab834851aa14b0c8e3955ed3620f1b3de3b3c24bb4c61263fd1c76b2b517f0b9",

"md5": "56c5cfc81f5f851a905ffe9938ff9080",

"sha256": "9186d0c5b8f0ade3342081aa820a76b6963f4e36c4c2643a8b11c7ad612f5ebb"

},

"downloads": -1,

"filename": "humanlayer-0.7.4.tar.gz",

"has_sig": false,

"md5_digest": "56c5cfc81f5f851a905ffe9938ff9080",

"packagetype": "sdist",

"python_version": "source",

"requires_python": "<4.0,>=3.10",

"size": 859970,

"upload_time": "2025-01-22T04:08:34",

"upload_time_iso_8601": "2025-01-22T04:08:34.675733Z",

"url": "https://files.pythonhosted.org/packages/ab/83/4851aa14b0c8e3955ed3620f1b3de3b3c24bb4c61263fd1c76b2b517f0b9/humanlayer-0.7.4.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2025-01-22 04:08:34",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "humanlayer",

"github_project": "humanlayer",

"travis_ci": false,

"coveralls": false,

"github_actions": true,

"lcname": "humanlayer"

}