| Name | image-ocr |
| --- | --- |
| Version | 0.0.4 |
| home_page | https://github.com/geo-tp/image-ocr |
| Summary | A packaged and flexible version of the CRAFT text detector and Keras CRNN recognition model. |
| upload_time | 2023-06-03 20:17:23 |
| author | Geo |
| requires_python | >=3.9 |
| license | MIT |
| requirements | No requirements were recorded. |

# image-ocr

## <b>NOTE: `image-ocr` is an updated version of `keras-ocr` that works with the latest versions of Python and TensorFlow.</b>

## <b>It works exactly the same as `keras-ocr`: just run `pip install image-ocr` and replace `import keras_ocr` with `import image_ocr` in your project.</b>

## <b>It supports the new Google Colaboratory Python 3.10 backend.</b>

## <b>Interactive examples</b>

### - [Detector Training](https://colab.research.google.com/drive/15maYyNZdqnLl_P_all2a-x9GF7Ug2tIJ?usp=sharing)

### - [Recognizer Training](https://colab.research.google.com/drive/1AcnHoeRycoqNuMNS0T146LH1MbtmgV_T?usp=sharing)

### - [Recognizer Training - Custom set](https://colab.research.google.com/drive/1OQZzcWespMTAyxguw6y95NcbFNCgK8qb?usp=sharing)

### - [Using](https://colab.research.google.com/drive/1eRf9CbhZ8fVakjYN4yCtTqB-MPWejVxN?usp=sharing)

## <b>Information</b>

This is a slightly polished and packaged version of the [Keras CRNN implementation](https://github.com/kurapan/CRNN) and the published [CRAFT text detection model](https://github.com/clovaai/CRAFT-pytorch). It provides a high-level API for training a text detection and OCR pipeline.

Please see [the documentation](https://keras-ocr.readthedocs.io/) for more examples, including for training a custom model.

## <b>Getting Started</b>

### <b>Installation</b>

`image-ocr` supports Python >= 3.9.

```bash
# To install from PyPI
pip install image-ocr
```

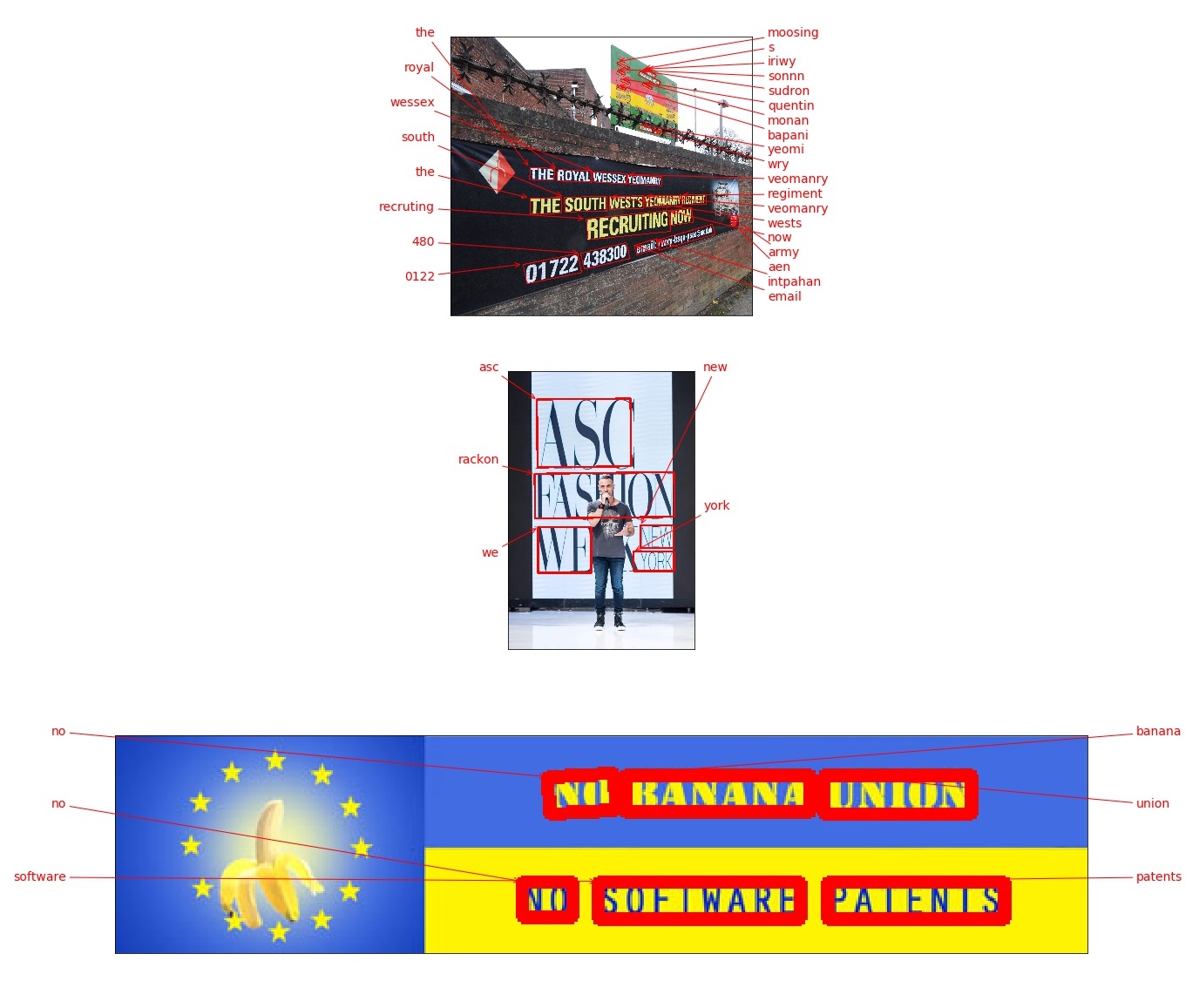

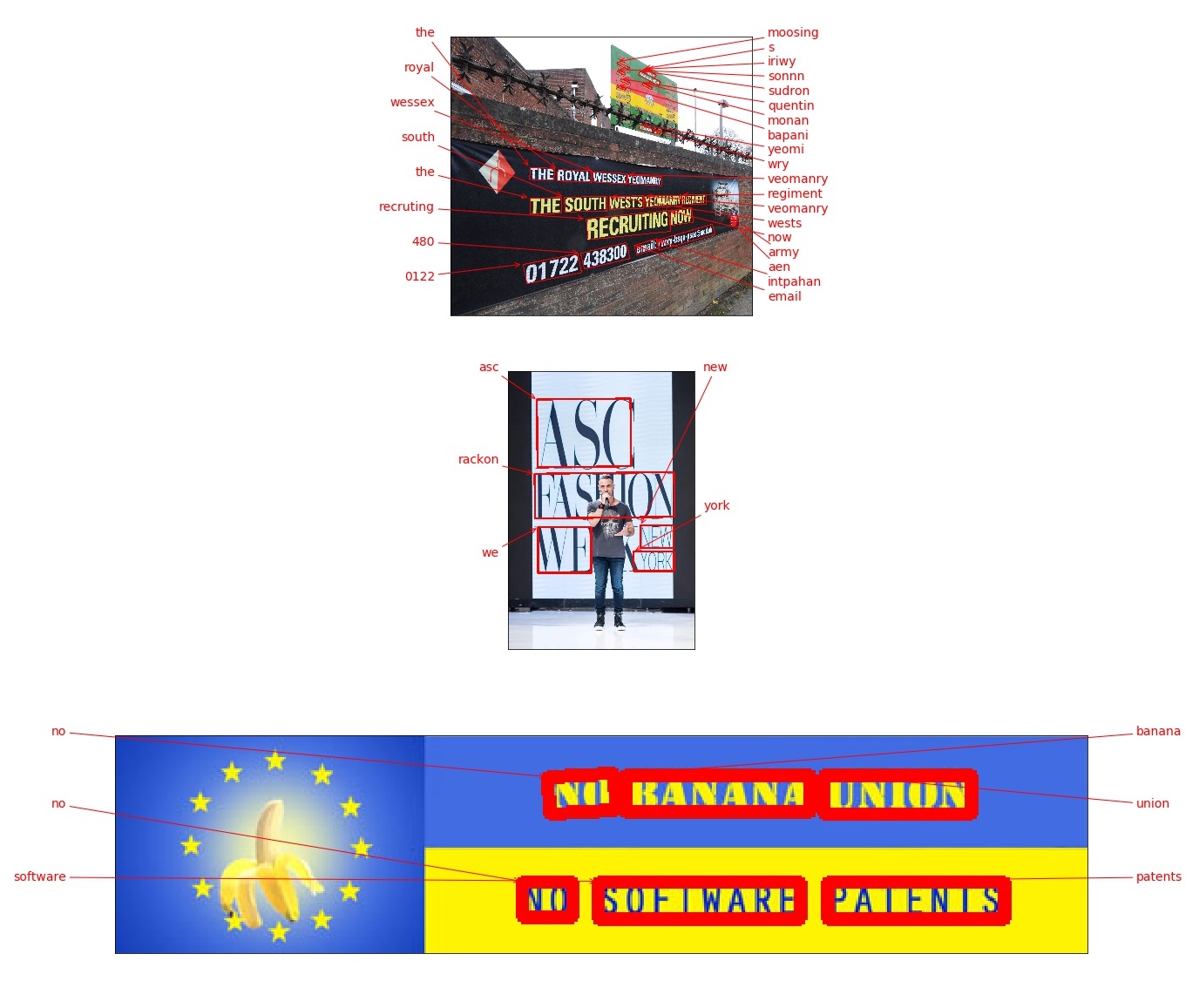

### <b>Using</b>

The package ships with an easy-to-use implementation of the CRAFT text detection model from [this repository](https://github.com/clovaai/CRAFT-pytorch) and the CRNN recognition model from [this repository](https://github.com/kurapan/CRNN).

Try [image-ocr on Colab](https://colab.research.google.com/drive/1eRf9CbhZ8fVakjYN4yCtTqB-MPWejVxN?usp=sharing)

```python
import matplotlib.pyplot as plt

import image_ocr

# image-ocr will automatically download pretrained
# weights for the detector and recognizer.
pipeline = image_ocr.pipeline.Pipeline()

# Get a set of three example images
images = [
    image_ocr.tools.read(url) for url in [
        'https://upload.wikimedia.org/wikipedia/commons/thumb/4/4b/Kali_Linux_2.0_wordmark.svg/langfr-420px-Kali_Linux_2.0_wordmark.svg.png',
        'https://upload.wikimedia.org/wikipedia/commons/thumb/e/ef/Enseigne_de_pharmacie_lumineuse.jpg/180px-Enseigne_de_pharmacie_lumineuse.jpg',
        'https://upload.wikimedia.org/wikipedia/commons/thumb/a/a6/Boutique_Christian_Lacroix.jpg/330px-Boutique_Christian_Lacroix.jpg',
    ]
]

# Each list of predictions in prediction_groups is a list of
# (word, box) tuples.
prediction_groups = pipeline.recognize(images)

# Plot the predictions, one image per row
fig, axs = plt.subplots(nrows=len(images), figsize=(20, 20))
for ax, image, predictions in zip(axs, images, prediction_groups):
    image_ocr.tools.drawAnnotations(image=image, predictions=predictions, ax=ax)

# Display the figure (needed when running outside a notebook)
plt.show()
```
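The `(word, box)` tuples in `prediction_groups` can be post-processed with plain Python, for example to rebuild text lines in reading order. The sketch below assumes each `box` is a sequence of four `(x, y)` corner points (as in `keras-ocr`, where boxes are `(4, 2)` NumPy arrays); the helpers `box_center` and `to_lines` and the `line_tolerance` parameter are hypothetical and not part of `image-ocr`.

```python
from typing import List, Sequence, Tuple

# Assumed format: a box is four (x, y) corner points, e.g. a (4, 2) array.
Point = Sequence[float]
Prediction = Tuple[str, Sequence[Point]]


def box_center(box: Sequence[Point]) -> Tuple[float, float]:
    """Return the (x, y) centre of a box's corner points."""
    xs = [float(point[0]) for point in box]
    ys = [float(point[1]) for point in box]
    return sum(xs) / len(xs), sum(ys) / len(ys)


def to_lines(predictions: List[Prediction], line_tolerance: float = 10.0) -> List[str]:
    """Group (word, box) predictions into text lines in reading order.

    A word joins the current line when its vertical centre is within
    `line_tolerance` pixels of the first word on that line.
    """
    # Visit words top-to-bottom by vertical centre.
    ordered = sorted(predictions, key=lambda pred: box_center(pred[1])[1])
    lines: List[List[Prediction]] = []
    for word, box in ordered:
        y = box_center(box)[1]
        if lines and abs(box_center(lines[-1][0][1])[1] - y) <= line_tolerance:
            lines[-1].append((word, box))
        else:
            lines.append([(word, box)])
    # Within each line, order words left-to-right by horizontal centre.
    return [
        " ".join(word for word, _ in sorted(line, key=lambda pred: box_center(pred[1])[0]))
        for line in lines
    ]


# Toy predictions standing in for one entry of prediction_groups.
predictions = [
    ("world", [(60, 0), (110, 0), (110, 20), (60, 20)]),
    ("hello", [(0, 2), (50, 2), (50, 22), (0, 22)]),
    ("second", [(0, 40), (70, 40), (70, 60), (0, 60)]),
]
print(to_lines(predictions))  # ['hello world', 'second']
```

With a real pipeline you would call something like `to_lines(prediction_groups[0])`. Anchoring each line on its first word keeps the logic simple; rotated or curved text would need a more robust grouping.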

## <b>Training</b>

Detector training example: [Detector Training Colab](https://colab.research.google.com/drive/15maYyNZdqnLl_P_all2a-x9GF7Ug2tIJ?usp=sharing)

Recognizer training example: [Recognizer Training Colab](https://colab.research.google.com/drive/1AcnHoeRycoqNuMNS0T146LH1MbtmgV_T?usp=sharing)

Recognizer training with custom assets: [Recognizer Training (Custom Set) Colab](https://colab.research.google.com/drive/1OQZzcWespMTAyxguw6y95NcbFNCgK8qb?usp=sharing)

## <b>Comparing image-ocr and other OCR approaches</b>

You may be wondering how the models in this package compare to existing cloud OCR APIs. We provide some metrics below, along with [the notebook](https://drive.google.com/file/d/1FMS3aUZnBU4Tc6bosBPnrjdMoSrjZXRp/view?usp=sharing) used to compute them on the first 1,000 images of the COCO-Text validation set. We limited the evaluation to 1,000 images because, at the time of writing, the Google Cloud free tier covered 1,000 calls a month. As always, caveats apply:

- No guarantees apply to these numbers; please compute your own metrics independently to verify them. As of this writing, they should be considered a very rough first draft. In particular, the cloud APIs have a variety of options that can improve their performance, and their responses can be parsed in different ways, so it is possible that I made some error in configuration or parsing. Please open an issue if you find a mistake!

- We ignore punctuation and letter case because the out-of-the-box recognizer in image-ocr (provided by [this independent repository](https://github.com/kurapan/CRNN)) does not support either. Note that both AWS Rekognition and Google Cloud Vision support punctuation as well as upper and lowercase characters.

- We ignore non-English text.

- We ignore illegible text.

| model | latency | precision | recall |
| --- | ------- | --------- | ------ |
| [AWS](https://github.com/geo-tp/image-ocr/releases/download/v0.8.4/aws_annotations.json) | 719ms | 0.45 | 0.48 |
| [GCP](https://github.com/geo-tp/image-ocr/releases/download/v0.8.4/google_annotations.json) | 388ms | 0.53 | 0.58 |
| [image-ocr](https://github.com/geo-tp/image-ocr/releases/download/v0.8.4/image_ocr_annotations_scale_2.json) (scale=2) | 417ms | 0.53 | 0.54 |
| [image-ocr](https://github.com/geo-tp/image-ocr/releases/download/v0.8.4/image_ocr_annotations_scale_3.json) (scale=3) | 699ms | 0.5 | 0.59 |

- Precision and recall were computed based on an intersection over union of 50% or higher and a text similarity to ground truth of 50% or higher.

- `keras-ocr` latency values were computed using a Tesla P4 GPU on Google Colab. `scale` refers to the argument provided to `image_ocr.pipeline.Pipeline()`, which determines the upscaling applied to the image prior to inference.

- Latency for the cloud providers was measured with sequential requests, so you can obtain significant speed improvements by making multiple simultaneous API requests.

- Each of the entries provides a link to the JSON file containing the annotations made on each pass. You can use these files with the notebook to compute metrics without having to make the API calls yourself (though you are encouraged to replicate the results independently)!
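The matching criterion above (intersection over union of at least 50% and text similarity of at least 50%) can be sketched in plain Python. This is not the benchmark notebook's code: it assumes axis-aligned `(x1, y1, x2, y2)` boxes, uses `difflib.SequenceMatcher.ratio()` as a stand-in for the notebook's text-similarity measure, lowercases text to mirror the case-insensitive comparison described earlier, and matches greedily so each ground-truth word is used at most once. The names `iou`, `text_similarity`, and `precision_recall` are hypothetical.

```python
from difflib import SequenceMatcher
from typing import List, Tuple

# Assumed format: axis-aligned box (x1, y1, x2, y2) with x1 < x2, y1 < y2.
Box = Tuple[float, float, float, float]
Annotation = Tuple[str, Box]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def text_similarity(a: str, b: str) -> float:
    """Case-insensitive similarity in [0, 1] (difflib stand-in)."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def precision_recall(
    predictions: List[Annotation],
    ground_truth: List[Annotation],
    iou_threshold: float = 0.5,
    text_threshold: float = 0.5,
) -> Tuple[float, float]:
    """Greedy one-to-one matching of predictions to ground truth."""
    unmatched = list(range(len(ground_truth)))
    true_positives = 0
    for word, box in predictions:
        for index in unmatched:
            gt_word, gt_box = ground_truth[index]
            if (
                iou(box, gt_box) >= iou_threshold
                and text_similarity(word, gt_word) >= text_threshold
            ):
                true_positives += 1
                unmatched.remove(index)  # safe: we break right after
                break
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall


gt = [("Pharmacie", (0, 0, 100, 20)), ("Lacroix", (0, 50, 80, 70))]
preds = [
    ("pharmacie", (2, 0, 100, 20)),   # case differs: still a match
    ("lacr0ix", (0, 50, 80, 70)),     # one wrong character: still similar enough
    ("noise", (200, 200, 220, 210)),  # no overlapping ground truth: false positive
]
print(precision_recall(preds, gt))  # (0.6666666666666666, 1.0)
```

Greedy matching in prediction order is the simplest choice; an optimal assignment (for example, Hungarian matching on IoU) can give slightly different counts when boxes overlap heavily.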

_Why not compare to Tesseract?_ In every configuration I tried, Tesseract did very poorly on this test. Tesseract performs best on scans of books, not on incidental scene text like that in this dataset.

## <b>Advanced Configuration</b>

By default, if a GPU is available, TensorFlow tries to grab almost all of the available video memory, which causes problems if you're running multiple models with TensorFlow and PyTorch. Setting any value for the environment variable `MEMORY_GROWTH` forces TensorFlow to dynamically allocate only as much GPU memory as is needed.

You can also limit the GPU memory available to each TensorFlow process by setting the environment variable `MEMORY_ALLOCATED` to a float: the ratio of VRAM to allocate relative to the total amount present.

To apply these changes, call `image_ocr.config.configure()` at the top of your file, right after you import `image_ocr`.
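The sketch below shows one way to read the two variables described above. It is a hypothetical, dependency-free helper (`gpu_memory_settings` is not part of `image-ocr` and does not touch TensorFlow), assuming `MEMORY_GROWTH` counts as enabled whenever it holds a non-empty value and `MEMORY_ALLOCATED` is a fraction of total VRAM; the real logic lives in `image_ocr.config.configure()`.

```python
import os
from typing import Mapping, Optional, Tuple


def gpu_memory_settings(
    total_vram_mb: int, env: Optional[Mapping[str, str]] = None
) -> Tuple[bool, Optional[int]]:
    """Return (memory_growth, memory_limit_mb) derived from environment variables.

    Hypothetical helper mirroring the behaviour described in the README.
    """
    env = os.environ if env is None else env
    # Any non-empty value enables on-demand GPU memory allocation.
    memory_growth = bool(env.get("MEMORY_GROWTH"))
    memory_limit_mb: Optional[int] = None
    ratio_text = env.get("MEMORY_ALLOCATED")
    if ratio_text:
        ratio = float(ratio_text)
        if not 0.0 < ratio <= 1.0:
            raise ValueError("MEMORY_ALLOCATED must be a fraction in (0, 1]")
        # Convert the fraction of total VRAM into an absolute limit in MB.
        memory_limit_mb = int(total_vram_mb * ratio)
    return memory_growth, memory_limit_mb


# Example: allow growth and cap this process at half of an 8 GB card.
print(gpu_memory_settings(8192, {"MEMORY_GROWTH": "1", "MEMORY_ALLOCATED": "0.5"}))
# (True, 4096)
```

In a real script, set the variables (for example `os.environ["MEMORY_GROWTH"] = "1"`) before calling `image_ocr.config.configure()`, since the configuration is read at that point.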

## <b>Contributing</b>

To work on the project, start by doing the following. These instructions probably do not yet work on Windows; if you are a Windows user with ideas for how to fix that, your help would be greatly appreciated (I don't have a Windows machine to test on at the moment).

```bash
# Install local dependencies for
# code completion, etc.
make init

# Build the Docker container to run
# tests and such.
make build
```

- You can start a JupyterLab server for experimentation with `make lab`.

- To run checks before committing code, you can use `make format-check type-check lint-check test`.

- To view the documentation, use `make docs`.

To implement new features, please first file an issue proposing your change for discussion.

To report problems, please file an issue with sample code, expected results, actual results, and a complete traceback.

## <b>Troubleshooting</b>

- _This package is installing `opencv-python-headless` but I would prefer a different `opencv` flavor._ This is due to [aleju/imgaug#473](https://github.com/aleju/imgaug/issues/473). You can uninstall the unwanted OpenCV flavor after installing `image-ocr`. We apologize for the inconvenience.
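Before uninstalling a flavor, it can help to see which OpenCV distributions are actually installed. The standard-library sketch below assumes the flavors are the usual PyPI distributions whose names start with `opencv` (for example `opencv-python` and `opencv-python-headless`); `installed_opencv_flavors` is a hypothetical helper name.

```python
from importlib.metadata import distributions
from typing import List


def installed_opencv_flavors() -> List[str]:
    """Return the sorted names of installed distributions starting with 'opencv'."""
    names = set()
    for dist in distributions():
        # .get() returns None instead of failing if the Name field is missing.
        name = dist.metadata.get("Name")
        if name and name.lower().startswith("opencv"):
            names.add(name)
    return sorted(names)


print(installed_opencv_flavors())
```

If more than one flavor is listed after installing `image-ocr`, you can then uninstall the one you don't want, as described above.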

Raw data

{

"_id": null,

"home_page": "https://github.com/geo-tp/image-ocr",

"name": "image-ocr",

"maintainer": "",

"docs_url": null,

"requires_python": ">=3.9",

"maintainer_email": "",

"keywords": "",

"author": "Geo",

"author_email": "geoffrey.menon38@gmail.com",

"download_url": "https://files.pythonhosted.org/packages/eb/cd/ae8259ae9fdbc364ee8f0983736a4dd81f17e758771e09d86a7ab86a4d5d/image-ocr-0.0.4.tar.gz",

"platform": null,


"bugtrack_url": null,

"license": "MIT",

"summary": "A packaged and flexible version of the CRAFT text detector and Keras CRNN recognition model.",

"version": "0.0.4",

"project_urls": {

"Homepage": "https://github.com/geo-tp/image-ocr",

"Repository": "https://github.com/geo-tp/image-ocr"

},

"split_keywords": [],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "29edd05f9c8b5cee7cda00e60765a85d2b77da4c7c5f818a7fa0478d39a28d7c",

"md5": "12ba95dc762497bacac2b945d8a4e678",

"sha256": "f4aa710f8a94392364f0449b12d7b401df3bc4a4a4b84663b64db3c354c39f0a"

},

"downloads": -1,

"filename": "image_ocr-0.0.4-py3-none-any.whl",

"has_sig": false,

"md5_digest": "12ba95dc762497bacac2b945d8a4e678",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.9",

"size": 42809,

"upload_time": "2023-06-03T20:17:25",

"upload_time_iso_8601": "2023-06-03T20:17:25.232118Z",

"url": "https://files.pythonhosted.org/packages/29/ed/d05f9c8b5cee7cda00e60765a85d2b77da4c7c5f818a7fa0478d39a28d7c/image_ocr-0.0.4-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "ebcdae8259ae9fdbc364ee8f0983736a4dd81f17e758771e09d86a7ab86a4d5d",

"md5": "ced728783efa3a910691e89c8dc21ac4",

"sha256": "15ddeddd4e7300c8f7845591b1a2674ed0779318da8703f6aa65335bb28e0834"

},

"downloads": -1,

"filename": "image-ocr-0.0.4.tar.gz",

"has_sig": false,

"md5_digest": "ced728783efa3a910691e89c8dc21ac4",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.9",

"size": 43046,

"upload_time": "2023-06-03T20:17:23",

"upload_time_iso_8601": "2023-06-03T20:17:23.404275Z",

"url": "https://files.pythonhosted.org/packages/eb/cd/ae8259ae9fdbc364ee8f0983736a4dd81f17e758771e09d86a7ab86a4d5d/image-ocr-0.0.4.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2023-06-03 20:17:23",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "geo-tp",

"github_project": "image-ocr",

"travis_ci": false,

"coveralls": false,

"github_actions": true,

"lcname": "image-ocr"

}