| Name | imml |

| Version | 0.2.0 |

| home_page | None |

| Summary | A python package for multi-modal learning with incomplete data |

| upload_time | 2025-11-03 19:24:17 |

| maintainer | None |

| docs_url | None |

| author | None |

| requires_python | >=3.10 |

| license | BSD 3-Clause License Copyright (c) 2025, Open source contributors. Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met: * Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer. * Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution. * Neither the name of the copyright holder nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission. THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE. |

| keywords | multi-modal learning, machine learning, incomplete data, missing data |

| VCS | |

| bugtrack_url | |

| requirements | No requirements were recorded. |

| Travis-CI | No Travis. |

| coveralls test coverage | |

[PyPI](https://pypi.org/project/imml/) |
[Documentation](https://imml.readthedocs.io) |
[CI tests](https://github.com/ocbe-uio/imml/actions/workflows/ci_test.yml) |
[CodeQL](https://github.com/ocbe-uio/imml/actions/workflows/github-code-scanning/codeql) |
[Pull requests](https://github.com/ocbe-uio/imml/pulls) |
[License](https://github.com/ocbe-uio/imml/blob/main/LICENSE)


<p align="center">

<img alt="iMML Logo" src="https://raw.githubusercontent.com/ocbe-uio/imml/refs/heads/main/docs/figures/logo_imml.png">

</p>

[**Overview**](#Overview) | [**Key features**](#Key-features) | [**Installation**](#installation) |

[**Usage**](#Usage) | [**Free software**](#Free-software) | [**Contribute**](#Contribute) | [**Help us**](#Help-us-grow)

Overview
====================

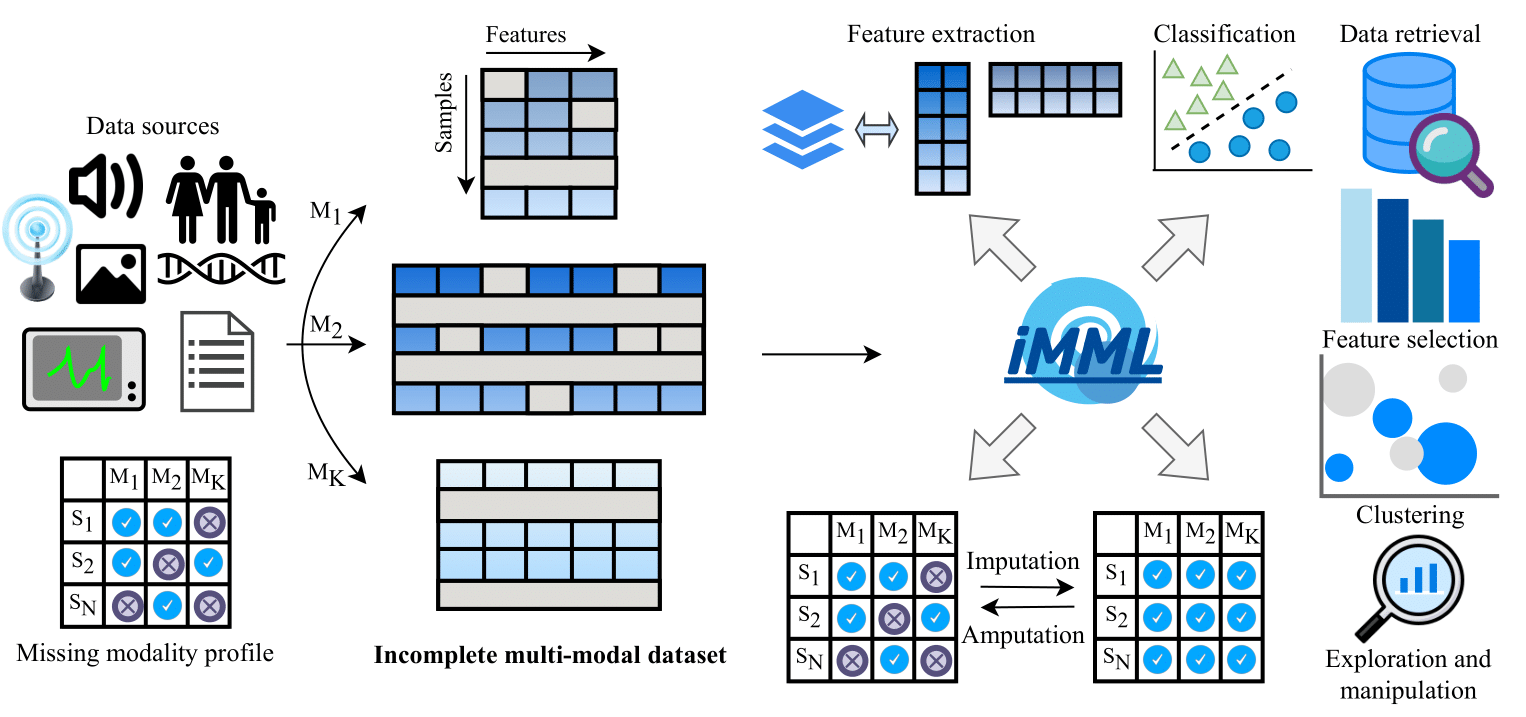

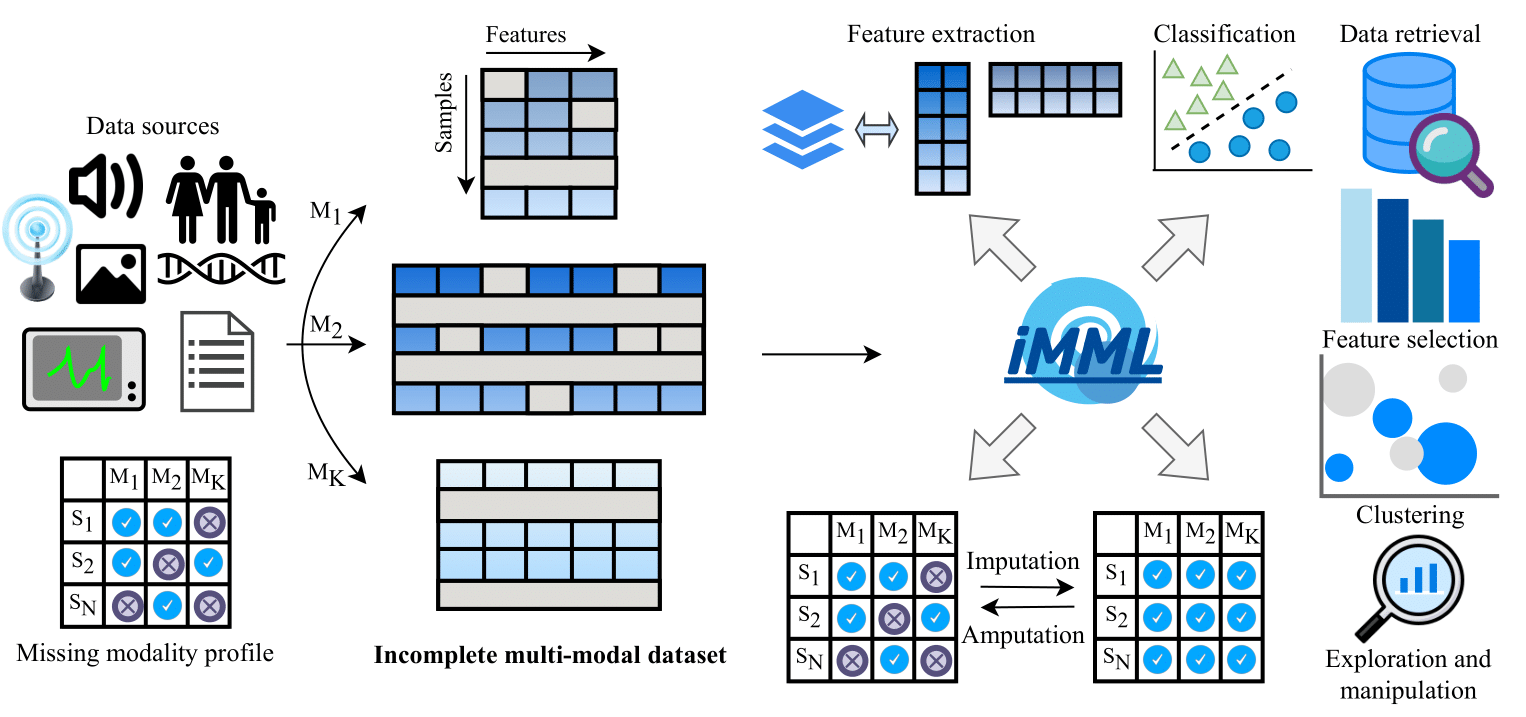

Multi-modal learning, where diverse data types are integrated and analyzed together, has emerged as a critical field in artificial intelligence. However, most algorithms assume fully observed data, an assumption that is often unrealistic in real-world scenarios. To address this gap, we have developed *iMML*, a Python package designed for multi-modal learning with incomplete data.

<p align="center"><strong>Overview of iMML for multi-modal learning with incomplete data.</strong></p>

Key features
------------

The key features of this package are:

- **Coverage**: More than 25 methods for integrating, processing, and analyzing incomplete multi-modal datasets, available through a single, user-friendly interface.
- **Comprehensive**: Designed to be compatible with widely used machine learning and data analysis tools, so it can be used with minimal programming effort.
- **Extensible**: A unified framework where researchers can contribute and integrate new approaches, serving as a community platform for hosting new methods.

Installation
--------------

Run the following command to install the most recent release of *iMML* using *pip*:

```bash
pip install imml
```

Or, if you prefer *uv*, use:

```bash
uv pip install imml
```

Some features of *iMML* rely on optional dependencies. To enable them, install the required packages as described in our documentation: https://imml.readthedocs.io/stable/main/installation.html.
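To confirm which release ended up in your environment, a minimal sketch using only the Python standard library is shown below. The helper name `installed_version` is ours and is not part of *iMML*; it returns `None` when the distribution is not installed.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(dist_name: str = "imml") -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


# Prints the installed iMML version string, or None if iMML is not installed
print(installed_version())
```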

Usage
--------

For this example, we generate a random multi-modal dataset, which we call ``Xs``:

```python
import numpy as np

# Three modalities, each with 10 samples and 5 features
Xs = [np.random.random((10, 5)) for _ in range(3)]  # or your multi-modal dataset
```
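In the example above, every modality describes the same 10 samples, so the arrays share their first dimension while the number of features could differ. The following sketch checks this alignment with plain NumPy before any model is fitted. It assumes that modalities are 2D arrays of shape (samples, features); `check_modalities` is a hypothetical helper written for illustration, not an *iMML* function.

```python
import numpy as np


def check_modalities(Xs):
    """Return (n_samples, [n_features per modality]) or raise ValueError."""
    arrays = [np.asarray(X) for X in Xs]
    if not arrays:
        raise ValueError("Xs must contain at least one modality")
    if any(X.ndim != 2 for X in arrays):
        raise ValueError("each modality must be a 2D array (samples x features)")
    # All modalities must agree on the number of rows (samples)
    n_samples = {X.shape[0] for X in arrays}
    if len(n_samples) != 1:
        raise ValueError(
            f"modalities disagree on the number of samples: {sorted(n_samples)}"
        )
    return n_samples.pop(), [X.shape[1] for X in arrays]


rng = np.random.default_rng(0)
Xs_demo = [rng.random((10, 5)) for _ in range(3)]
print(check_modalities(Xs_demo))  # (10, [5, 5, 5])
```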

You can use any other complete or incomplete multi-modal dataset. Once your dataset is ready, you can use the *iMML* library for a wide range of machine learning tasks, such as:

- Decompose a multi-modal dataset using ``MOFA`` to capture joint information.

```python
from imml.decomposition import MOFA

transformed_Xs = MOFA().fit_transform(Xs)
```

- Cluster samples from a multi-modal dataset using ``NEMO`` to find hidden groups.

```python
from imml.cluster import NEMO

labels = NEMO().fit_predict(Xs)
```

- Simulate incomplete multi-modal datasets for evaluation and testing purposes using ``Amputer``.

```python
from imml.ampute import Amputer

transformed_Xs = Amputer(p=0.8).fit_transform(Xs)
```
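To make concrete what an incomplete multi-modal dataset can look like, the sketch below removes whole modalities for randomly chosen samples using NumPy only. It is an illustration under our own assumptions (a missing modality is encoded as a row of `NaN`, and every sample keeps at least one modality) and does not describe how ``Amputer`` is implemented; `drop_modalities` and its parameters are hypothetical names.

```python
import numpy as np


def drop_modalities(Xs, p=0.5, seed=0):
    """Mask each modality with NaN for roughly a fraction p of the samples."""
    rng = np.random.default_rng(seed)
    n_samples, n_mods = Xs[0].shape[0], len(Xs)
    # observed[i, m] is True when sample i keeps modality m
    observed = rng.random((n_samples, n_mods)) >= p
    # Guarantee that every sample keeps at least one modality
    empty = ~observed.any(axis=1)
    keep = rng.integers(n_mods, size=n_samples)
    observed[empty, keep[empty]] = True
    incomplete = []
    for m, X in enumerate(Xs):
        X_new = np.array(X, dtype=float)  # copy so the input stays untouched
        X_new[~observed[:, m]] = np.nan
        incomplete.append(X_new)
    return incomplete, observed


rng = np.random.default_rng(1)
Xs_demo = [rng.random((10, 5)) for _ in range(3)]
incomplete_Xs, observed = drop_modalities(Xs_demo, p=0.5)
print(observed.sum(axis=1))  # number of modalities kept per sample
```

Returning the boolean `observed` mask alongside the data makes it easy to check afterwards which samples lost which modality.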

Free software
-------------

*iMML* is free software; you can redistribute it and/or modify it under the terms of the `BSD 3-Clause License`.

Contribute
------------

Our vision is to establish *iMML* as a leading and reliable library for multi-modal learning across research and applied settings. Our priorities are to broaden algorithmic coverage, improve performance and scalability, strengthen interoperability, and grow a healthy contributor community. We therefore welcome practitioners, researchers, and the open-source community to contribute to the *iMML* project and, in doing so, to help us extend and refine the library. Such a community-wide effort will make *iMML* more versatile, sustainable, powerful, and accessible to the machine learning community across many domains.

For the full contributing guide, please see:

- In-repo: https://github.com/ocbe-uio/imml/tree/main?tab=contributing-ov-file

- Documentation: https://imml.readthedocs.io/stable/development/contributing.html

Help us grow
------------

How you can help *iMML* grow:

- 🔥 Try it out and share your feedback.

- 🤝 Contribute if you are interested in building with us.

- 🗣️ Share this project with colleagues who deal with multi-modal data.

- 🌟 And of course… give the repo a star to support the project!

Raw data

{

"_id": null,

"home_page": null,

"name": "imml",

"maintainer": null,

"docs_url": null,

"requires_python": ">=3.10",

"maintainer_email": "Alberto L\u00f3pez <a.l.sanchez@medisin.uio.no>",

"keywords": "multi-modal learning, machine learning, incomplete data, missing data",

"author": null,

"author_email": "Alberto L\u00f3pez <a.l.sanchez@medisin.uio.no>",

"download_url": "https://files.pythonhosted.org/packages/1f/74/84526ef9f5e13c8f8c5e452249da4281374b08e201690d5115ee1b5193b4/imml-0.2.0.tar.gz",

"platform": null,

"bugtrack_url": null,

"license": "BSD 3-Clause License Copyright (c) 2025, Open source contributors. Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met: * Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer. * Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution. * Neither the name of the copyright holder nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission. THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS \"AS IS\" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.",

"summary": "A python package for multi-modal learning with incomplete data",

"version": "0.2.0",

"project_urls": {

"Changelog": "https://imml.readthedocs.io/stable/development/changelog.html",

"Documentation": "https://imml.readthedocs.io",

"Download": "https://pypi.org/project/imml/#files",

"Source": "https://github.com/ocbe-uio/imml",

"Tracker": "https://github.com/ocbe-uio/imml/issues"

},

"split_keywords": [

"multi-modal learning",

" machine learning",

" incomplete data",

" missing data"

],

"urls": [

{

"comment_text": null,

"digests": {

"blake2b_256": "03d1c2c1c037b64166a052f7fa1339bb422fd73e6edb06b2b2987c0bef7aa75f",

"md5": "68bbb5651f98df264bf8bbc69983e90c",

"sha256": "cdcf2ed941822acd281040904a904bd1bc3b211f424785b0c7c200c9c48b28cd"

},

"downloads": -1,

"filename": "imml-0.2.0-py3-none-any.whl",

"has_sig": false,

"md5_digest": "68bbb5651f98df264bf8bbc69983e90c",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.10",

"size": 414199,

"upload_time": "2025-11-03T19:24:14",

"upload_time_iso_8601": "2025-11-03T19:24:14.482741Z",

"url": "https://files.pythonhosted.org/packages/03/d1/c2c1c037b64166a052f7fa1339bb422fd73e6edb06b2b2987c0bef7aa75f/imml-0.2.0-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": null,

"digests": {

"blake2b_256": "1f7484526ef9f5e13c8f8c5e452249da4281374b08e201690d5115ee1b5193b4",

"md5": "0669531cb6d5ee510883afaa033bdf20",

"sha256": "ec28c5d5ed1640eafc95b6087a6a140f0d924824e1e98580e20ced142084c4ab"

},

"downloads": -1,

"filename": "imml-0.2.0.tar.gz",

"has_sig": false,

"md5_digest": "0669531cb6d5ee510883afaa033bdf20",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.10",

"size": 303316,

"upload_time": "2025-11-03T19:24:17",

"upload_time_iso_8601": "2025-11-03T19:24:17.501173Z",

"url": "https://files.pythonhosted.org/packages/1f/74/84526ef9f5e13c8f8c5e452249da4281374b08e201690d5115ee1b5193b4/imml-0.2.0.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2025-11-03 19:24:17",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "ocbe-uio",

"github_project": "imml",

"travis_ci": false,

"coveralls": true,

"github_actions": true,

"lcname": "imml"

}
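The `digests` entries in the raw metadata above can be used to check a downloaded file before installing it. The sketch below hashes a local file with the Python standard library and compares the result with an expected SHA-256 value. The function name `sha256_matches` and the local file path in the usage comment are illustrative assumptions.

```python
import hashlib
from pathlib import Path


def sha256_matches(path, expected_hex, chunk_size=1 << 20):
    """Return True if the file's SHA-256 digest equals expected_hex."""
    digest = hashlib.sha256()
    with Path(path).open("rb") as fh:
        # Read in chunks so large archives do not need to fit in memory
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected_hex.strip().lower()


# Usage (assumes the sdist was downloaded to the current directory):
# sha256_matches(
#     "imml-0.2.0.tar.gz",
#     "ec28c5d5ed1640eafc95b6087a6a140f0d924824e1e98580e20ced142084c4ab",
# )
```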