# Langcorn

LangCorn is an API server that enables you to serve LangChain models and pipelines with ease, leveraging the power of FastAPI for a robust and efficient experience.

<p>

<img alt="GitHub Contributors" src="https://img.shields.io/github/contributors/msoedov/langcorn" />

<img alt="GitHub Last Commit" src="https://img.shields.io/github/last-commit/msoedov/langcorn" />

<img alt="" src="https://img.shields.io/github/repo-size/msoedov/langcorn" />

<img alt="Downloads" src="https://static.pepy.tech/badge/langcorn" />

<img alt="GitHub Issues" src="https://img.shields.io/github/issues/msoedov/langcorn" />

<img alt="GitHub Pull Requests" src="https://img.shields.io/github/issues-pr/msoedov/langcorn" />

<img alt="Github License" src="https://img.shields.io/github/license/msoedov/langcorn" />

</p>

## Features

- Easy deployment of LangChain models and pipelines
- Ready-to-use auth functionality
- High-performance FastAPI framework for serving requests
- Scalable and robust solution for language processing applications
- Supports custom pipelines and processing
- Well-documented RESTful API endpoints
- Asynchronous processing for faster response times

## 📦 Installation

To get started with LangCorn, simply install the package using pip:

```shell
pip install langcorn
```

## ⛓️ Quick Start

Example LLM chain, `examples/ex1.py`:

```python
import os

from langchain import LLMMathChain, OpenAI

os.environ["OPENAI_API_KEY"] = os.environ.get("OPENAI_API_KEY", "sk-********")

llm = OpenAI(temperature=0)
chain = LLMMathChain(llm=llm, verbose=True)
```

Run your LangCorn FastAPI server:

```shell
langcorn server examples.ex1:chain

[INFO] 2023-04-18 14:34:56.32 | api:create_service:75 | Creating service
[INFO] 2023-04-18 14:34:57.51 | api:create_service:85 | lang_app='examples.ex1:chain':LLMChain(['product'])
[INFO] 2023-04-18 14:34:57.51 | api:create_service:104 | Serving
[INFO] 2023-04-18 14:34:57.51 | api:create_service:106 | Endpoint: /docs
[INFO] 2023-04-18 14:34:57.51 | api:create_service:106 | Endpoint: /examples.ex1/run
INFO: Started server process [27843]
INFO: Waiting for application startup.
INFO: Application startup complete.
INFO: Uvicorn running on http://127.0.0.1:8718 (Press CTRL+C to quit)
```

Alternatively, run it as a Python module:

```shell
python -m langcorn server examples.ex1:chain
```

To serve multiple chains from one server:

```shell
python -m langcorn server examples.ex1:chain examples.ex2:chain

[INFO] 2023-04-18 14:35:21.11 | api:create_service:75 | Creating service
[INFO] 2023-04-18 14:35:21.82 | api:create_service:85 | lang_app='examples.ex1:chain':LLMChain(['product'])
[INFO] 2023-04-18 14:35:21.82 | api:create_service:85 | lang_app='examples.ex2:chain':SimpleSequentialChain(['input'])
[INFO] 2023-04-18 14:35:21.82 | api:create_service:104 | Serving
[INFO] 2023-04-18 14:35:21.82 | api:create_service:106 | Endpoint: /docs
[INFO] 2023-04-18 14:35:21.82 | api:create_service:106 | Endpoint: /examples.ex1/run
[INFO] 2023-04-18 14:35:21.82 | api:create_service:106 | Endpoint: /examples.ex2/run
INFO: Started server process [27863]
INFO: Waiting for application startup.
INFO: Application startup complete.
INFO: Uvicorn running on http://127.0.0.1:8718 (Press CTRL+C to quit)
```

To embed LangCorn in your own code, import the necessary packages and create your FastAPI app (for example, in `main.py`):

```python
from fastapi import FastAPI
from langcorn import create_service

app: FastAPI = create_service("examples.ex1:chain")
```

To serve multiple chains:

```python
from fastapi import FastAPI
from langcorn import create_service

app: FastAPI = create_service("examples.ex2:chain", "examples.ex1:chain")
```

or

```python
from fastapi import FastAPI
from langcorn import create_service

app: FastAPI = create_service(
    "examples.ex1:chain",
    "examples.ex2:chain",
    "examples.ex3:chain",
    "examples.ex4:sequential_chain",
    "examples.ex5:conversation",
    "examples.ex6:conversation_with_summary",
    "examples.ex7_agent:agent",
)
```
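Judging from the startup logs above, each `module:attribute` spec appears to be served at `/<module>/run`, with the attribute name dropped from the route. The sketch below reproduces that mapping so you can predict endpoint paths before starting the server. It is inferred from the log output, not taken from LangCorn's source, and `endpoint_for` is a hypothetical helper.

```python
def endpoint_for(spec: str) -> str:
    """Map a "module.path:attribute" spec to its assumed /<module>/run route.

    Inferred from LangCorn's startup logs; not part of LangCorn's API.
    """
    module, sep, attr = spec.partition(":")
    if not sep or not module or not attr:
        raise ValueError(f"expected 'module:attribute', got {spec!r}")
    # The attribute (chain, agent, run, ...) does not appear in the route.
    return f"/{module}/run"


for spec in ["examples.ex1:chain", "examples.ex2:chain", "examples.ex7_agent:agent"]:
    print(spec, "->", endpoint_for(spec))
```

Note that two specs from the same module (for example `examples.ex1:a` and `examples.ex1:b`) would map to the same route under this assumption.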

Then serve the app with Uvicorn:

```shell
uvicorn main:app --host 0.0.0.0 --port 8000
```

Now, your LangChain models and pipelines are accessible via the LangCorn API server.
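Any HTTP client can then call the `run` endpoints. A minimal stdlib-only sketch, assuming the `/<module>/run` route shown in the startup logs and a JSON body keyed by the chain's input variables (`input` for `examples.ex2`, per its log line). `build_run_request` is a hypothetical helper, not part of LangCorn, and the input value is made up.

```python
import json
import urllib.request
from typing import Optional


def build_run_request(
    base_url: str,
    module: str,
    payload: dict,
    headers: Optional[dict] = None,
) -> urllib.request.Request:
    """Build (but do not send) a POST request for an assumed /<module>/run endpoint."""
    all_headers = {"Content-Type": "application/json"}
    all_headers.update(headers or {})  # e.g. per-request LLM headers
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/{module}/run",
        data=json.dumps(payload).encode("utf-8"),
        headers=all_headers,
        method="POST",
    )


# Port 8000 matches the uvicorn command above.
req = build_run_request("http://127.0.0.1:8000", "examples.ex2", {"input": "colorful socks"})

# To actually call a running server:
# with urllib.request.urlopen(req) as resp:
#     result = json.load(resp)  # includes "output" and "error" fields
```

Building the request separately from sending it keeps the helper easy to inspect and test without a running server.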

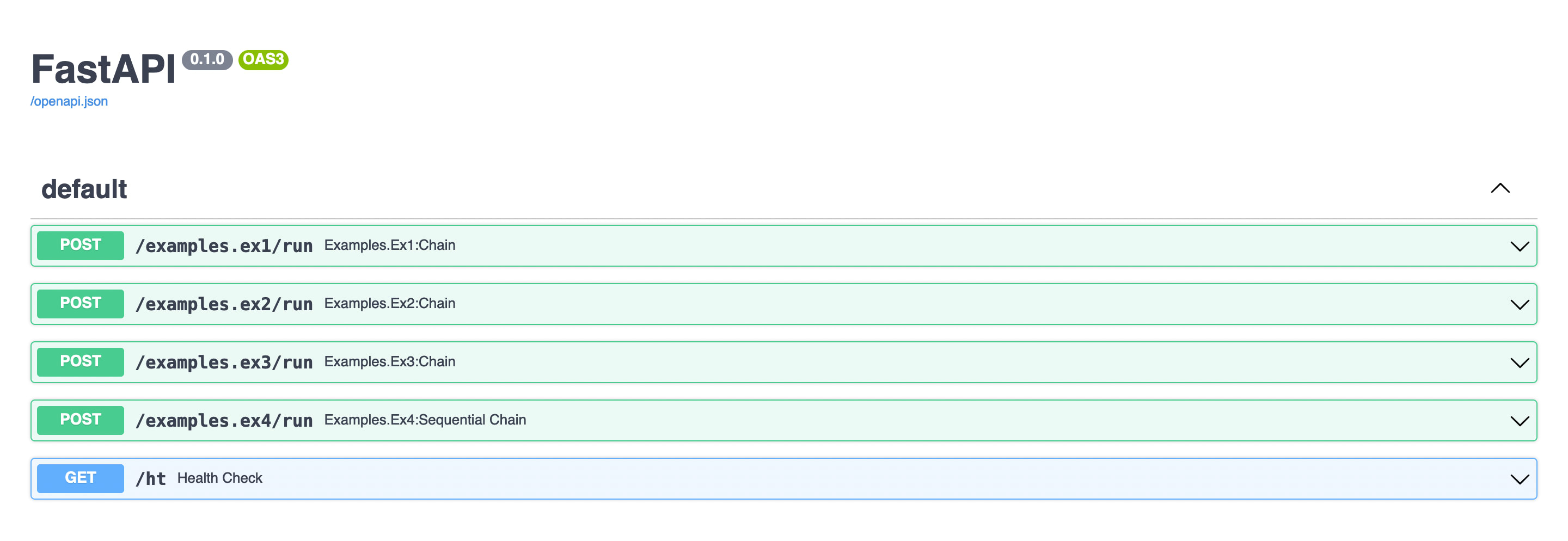

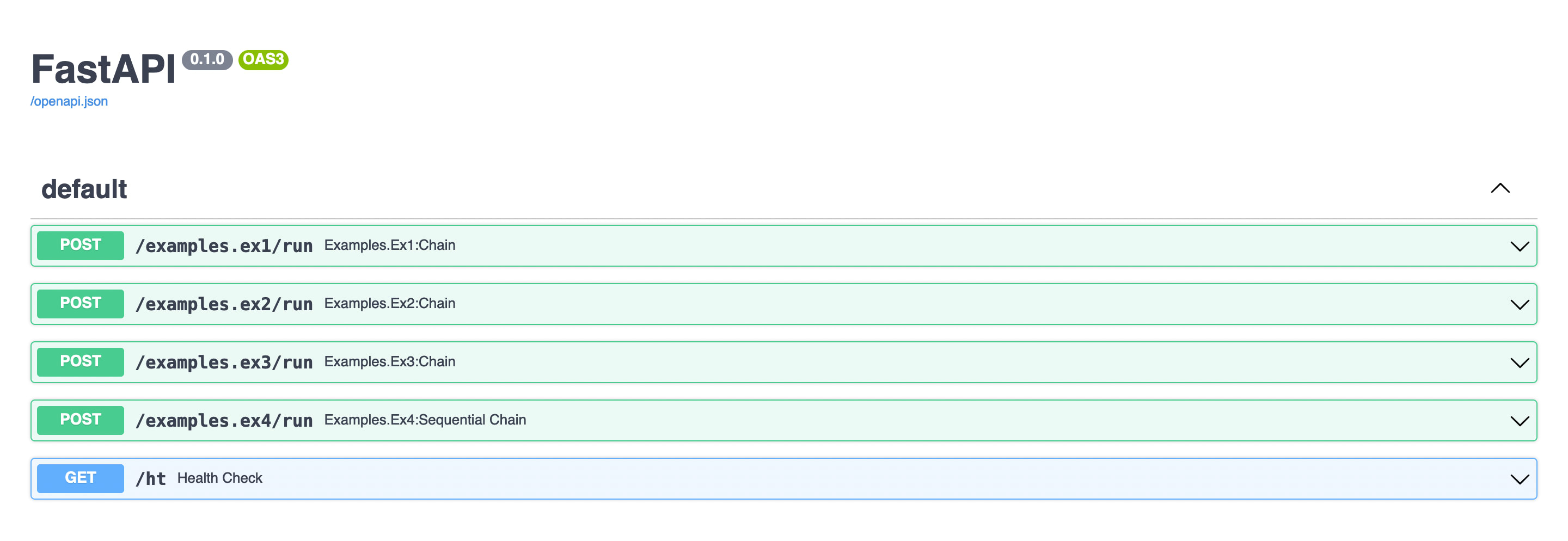

## Docs

FastAPI automatically serves interactive API documentation at `/docs`.

A [live example](https://langcorn-ift9ub8zg-msoedov.vercel.app/docs#/) is hosted on Vercel.

## Auth

You can add static API token authentication by specifying `auth_token`:

```shell
python -m langcorn server examples.ex1:chain examples.ex2:chain --auth_token=api-secret-value
```

or

```python
app: FastAPI = create_service("examples.ex1:chain", auth_token="api-secret-value")
```
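The header scheme LangCorn uses to check `auth_token` is not documented here. The sketch below only illustrates the general idea of a static-token check, assuming a hypothetical `Authorization: Bearer <token>` header; `is_authorized` is not LangCorn's implementation. It uses `hmac.compare_digest` so the comparison does not leak, through timing, how many characters matched.

```python
import hmac
from typing import Mapping


def is_authorized(headers: Mapping[str, str], auth_token: str) -> bool:
    """Return True if the request carries the expected static token.

    Assumes a hypothetical "Authorization: Bearer <token>" scheme;
    LangCorn's actual header may differ.
    """
    # HTTP header names are case-insensitive, so normalize before lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    scheme, _, token = normalized.get("authorization", "").partition(" ")
    if scheme.lower() != "bearer" or not token:
        return False
    # Constant-time comparison of the presented token and the configured one.
    return hmac.compare_digest(token.encode(), auth_token.encode())
```

Whatever the header, keep the token out of source control, for example by reading it from an environment variable.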

## Custom API keys

To use your own LLM API key for a request, pass it in the `X-LLM-API-KEY` header:

```shell
POST http://0.0.0.0:3000/examples.ex6/run
X-LLM-API-KEY: sk-******
Content-Type: application/json
```

## Handling memory

For chains with memory, send the previous conversation in the `memory` field of the request body, together with the new `input`:

```json
{
  "history": "string",
  "input": "What is brain?",
  "memory": [
    {
      "type": "human",
      "data": {
        "content": "What is memory?",
        "additional_kwargs": {}
      }
    },
    {
      "type": "ai",
      "data": {
        "content": " Memory is the ability of the brain to store, retain, and recall information. It is the capacity to remember past experiences, facts, and events. It is also the ability to learn and remember new information.",
        "additional_kwargs": {}
      }
    }
  ]
}
```

Response (the returned `memory` now includes the latest exchange, and `error` is empty on success):

```json
{
  "output": " The brain is an organ in the human body that is responsible for controlling thought, memory, emotion, and behavior. It is composed of billions of neurons that communicate with each other through electrical and chemical signals. It is the most complex organ in the body and is responsible for all of our conscious and unconscious actions.",
  "error": "",
  "memory": [
    {
      "type": "human",
      "data": {
        "content": "What is memory?",
        "additional_kwargs": {}
      }
    },
    {
      "type": "ai",
      "data": {
        "content": " Memory is the ability of the brain to store, retain, and recall information. It is the capacity to remember past experiences, facts, and events. It is also the ability to learn and remember new information.",
        "additional_kwargs": {}
      }
    },
    {
      "type": "human",
      "data": {
        "content": "What is brain?",
        "additional_kwargs": {}
      }
    },
    {
      "type": "ai",
      "data": {
        "content": " The brain is an organ in the human body that is responsible for controlling thought, memory, emotion, and behavior. It is composed of billions of neurons that communicate with each other through electrical and chemical signals. It is the most complex organ in the body and is responsible for all of our conscious and unconscious actions.",
        "additional_kwargs": {}
      }
    }
  ]
}
```
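In the request/response pair above, the conversation travels with each call: the response's `memory` already contains the new human and AI turns. A client can therefore continue a conversation by sending that `memory` back with the next `input`. A minimal sketch, assuming only the `input`, `memory`, and `error` fields shown above; `next_request_body` is a hypothetical helper, and the placeholder `history` field is omitted.

```python
from typing import Any, Dict


def next_request_body(previous_response: Dict[str, Any], new_input: str) -> Dict[str, Any]:
    """Build the next request body by echoing back the memory the server returned."""
    if previous_response.get("error"):
        raise RuntimeError(f"previous call failed: {previous_response['error']}")
    return {
        "input": new_input,
        # Copy the list so later edits to the body don't mutate the old response.
        "memory": list(previous_response.get("memory", [])),
    }


# Shortened version of the response above.
previous = {
    "output": " The brain is an organ in the human body ...",
    "error": "",
    "memory": [
        {"type": "human", "data": {"content": "What is brain?", "additional_kwargs": {}}},
        {"type": "ai", "data": {"content": " The brain is an organ ...", "additional_kwargs": {}}},
    ],
}
body = next_request_body(previous, "How many neurons does it have?")
```

Because the whole history is resent on every call, request size grows with the conversation; summarizing memory (as in `examples.ex6:conversation_with_summary`) is one way to bound it.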

## LLM kwargs

To override the default LLM parameters for a single request, pass them as headers:

```shell
POST http://0.0.0.0:3000/examples.ex1/run
X-LLM-API-KEY: sk-******
X-LLM-TEMPERATURE: 0.7
X-MAX-TOKENS: 256
X-MODEL-NAME: gpt5
Content-Type: application/json
```
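When calling the API from Python, these override headers can be assembled from ordinary values. A small sketch using the header names listed in this section; `llm_override_headers` is a hypothetical helper, and values left as `None` are simply not sent, so the server's defaults apply.

```python
from typing import Dict, Optional


def llm_override_headers(
    api_key: Optional[str] = None,
    temperature: Optional[float] = None,
    max_tokens: Optional[int] = None,
    model_name: Optional[str] = None,
) -> Dict[str, str]:
    """Build per-request LLM override headers; unset (None) values are omitted."""
    candidates = {
        "X-LLM-API-KEY": api_key,
        "X-LLM-TEMPERATURE": temperature,
        "X-MAX-TOKENS": max_tokens,
        "X-MODEL-NAME": model_name,
    }
    # HTTP header values are strings, so numbers are converted with str().
    headers = {name: str(value) for name, value in candidates.items() if value is not None}
    headers["Content-Type"] = "application/json"
    return headers


headers = llm_override_headers(temperature=0.7, max_tokens=256)
```

The check is `is not None` rather than truthiness so that a deliberate `temperature=0` is still sent.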

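## Custom run function

Besides chains, you can serve a plain Python function. See `examples/ex12.py`:

```python
from fastapi import FastAPI
from langchain.chains import LLMChain
from langcorn import create_service

# llm, prompt, parser and the Joke model are defined earlier in examples/ex12.py (not shown).
chain = LLMChain(llm=llm, prompt=prompt, verbose=True)


# Run the chain, specifying only the input variable, and parse the raw text into a Joke.
def run(query: str) -> Joke:
    output = chain.run(query)
    return parser.parse(output)


app: FastAPI = create_service("examples.ex12:run")
```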
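The pattern above wraps a chain in a typed function so that the endpoint returns structured data instead of raw text. A self-contained sketch of the same idea, with the LLM call replaced by a hypothetical `fake_llm` stub and `Joke` modeled as a plain dataclass; LangChain's own output parsers are not used here, and `parse_joke` is likewise hypothetical.

```python
import json
from dataclasses import dataclass


@dataclass
class Joke:
    setup: str
    punchline: str


def fake_llm(query: str) -> str:
    """Hypothetical stand-in for chain.run(): returns JSON text, as a prompted LLM might."""
    return json.dumps(
        {"setup": f"Why did the {query} cross the road?", "punchline": "To get to the other side."}
    )


def parse_joke(text: str) -> Joke:
    """Parse the model's JSON output into a Joke; raises KeyError on missing fields."""
    data = json.loads(text)
    return Joke(setup=data["setup"], punchline=data["punchline"])


def run(query: str) -> Joke:
    # Same shape as the ex12 example: call the model, then parse its raw text.
    return parse_joke(fake_llm(query))


print(run("chicken"))
# Joke(setup='Why did the chicken cross the road?', punchline='To get to the other side.')
```

Keeping parsing in its own function makes malformed model output fail loudly in one place instead of leaking raw text to API clients.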

## Documentation

For more detailed information on how to use LangCorn, including advanced features and customization options, see the [GitHub repository](https://github.com/msoedov/langcorn).

## 👋 Contributing

Contributions to LangCorn are welcome! If you'd like to contribute, please follow these steps:

- Fork the repository on GitHub
- Create a new branch for your changes
- Commit your changes to the new branch
- Push your changes to the forked repository
- Open a pull request to the main LangCorn repository

Before contributing, please read the contributing guidelines.

## License

LangCorn is released under the MIT License.

Raw data

```json
{
  "_id": null,
  "home_page": "https://github.com/msoedov/langcorn",
  "name": "langcorn",
  "maintainer": "Alexander Miasoiedov",
  "docs_url": null,
  "requires_python": ">=3.9,<4.0",
  "maintainer_email": "msoedov@gmail.com",
  "keywords": "nlp,langchain,openai,gpt,fastapi,llm,llmops",
  "author": "Alexander Miasoiedov",
  "author_email": "msoedov@gmail.com",
  "download_url": "https://files.pythonhosted.org/packages/73/8f/7d045f1acf8aff76645a452acdcfd1e83d8e58e3fd7d9be0e0a7d42696e2/langcorn-0.0.21.tar.gz",
  "platform": null,
  "bugtrack_url": null,
  "license": "MIT",
  "summary": "A Python package creating rest api interface for LangChain",
  "version": "0.0.21",
  "project_urls": {
    "Homepage": "https://github.com/msoedov/langcorn",
    "Repository": "https://github.com/msoedov/langcorn"
  },
  "split_keywords": ["nlp", "langchain", "openai", "gpt", "fastapi", "llm", "llmops"],
  "urls": [
    {
      "comment_text": "",
      "digests": {
        "blake2b_256": "738f7d045f1acf8aff76645a452acdcfd1e83d8e58e3fd7d9be0e0a7d42696e2",
        "md5": "2def1d7e955d1380f8c06f4c47058961",
        "sha256": "ecdcbba7aaed1af62770ac994059945eeeae7ec443fa88f2bf3d689dce7f7642"
      },
      "downloads": -1,
      "filename": "langcorn-0.0.21.tar.gz",
      "has_sig": false,
      "md5_digest": "2def1d7e955d1380f8c06f4c47058961",
      "packagetype": "sdist",
      "python_version": "source",
      "requires_python": ">=3.9,<4.0",
      "size": 9941,
      "upload_time": "2023-10-31T15:33:46",
      "upload_time_iso_8601": "2023-10-31T15:33:46.995056Z",
      "url": "https://files.pythonhosted.org/packages/73/8f/7d045f1acf8aff76645a452acdcfd1e83d8e58e3fd7d9be0e0a7d42696e2/langcorn-0.0.21.tar.gz",
      "yanked": false,
      "yanked_reason": null
    }
  ],
  "upload_time": "2023-10-31 15:33:46",
  "github": true,
  "gitlab": false,
  "bitbucket": false,
  "codeberg": false,
  "github_user": "msoedov",
  "github_project": "langcorn",
  "travis_ci": false,
  "coveralls": false,
  "github_actions": true,
  "requirements": [],
  "lcname": "langcorn"
}
```