# lcurvetools

Simple Python tools for plotting learning curves of a neural network model trained with the keras or scikit-learn framework.

**NOTE:** All of the plotting examples below are for [interactive Python mode](https://matplotlib.org/stable/users/explain/figure/interactive.html#interactive-mode) in Jupyter-like environments. If you are in non-interactive mode you may need to explicitly call [matplotlib.pyplot.show](https://matplotlib.org/stable/api/_as_gen/matplotlib.pyplot.show.html) to display the window with built plots on your screen.

## The `lcurves_by_history` function to plot learning curves by the `history` attribute of the keras `History` object

Neural network model training with keras is performed using the [fit](https://keras.io/api/models/model_training_apis/#fit-method) method. The method returns a `History` object whose `history` attribute is a dictionary. Its keys hold the training and validation values of losses and metrics, as well as learning rate values, at successive epochs. The `lcurves_by_history` function uses the `History.history` dictionary to plot the learning curves, i.e. the above values as functions of the epoch index.
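As a rough illustration of the dictionary described above, the sketch below builds a stand-in for `History.history` with made-up numbers and splits its keys into loss, metric and learning rate groups. The helper `group_history_keys` and its name-based rule are assumptions for illustration only; the rule that lcurvetools actually applies may differ.

```python
# Illustrative stand-in for `model.fit(...).history`:
# one list per key, one value per epoch. The numbers are made up.
history = {
    "loss": [0.92, 0.61, 0.48],
    "accuracy": [0.65, 0.78, 0.84],
    "val_loss": [0.88, 0.66, 0.59],
    "val_accuracy": [0.67, 0.75, 0.79],
    "lr": [0.001, 0.001, 0.0005],
}


def group_history_keys(history):
    """Hypothetical helper: split keys into loss, metric and learning rate groups.

    The rule used here (key equals 'lr' / key contains 'loss') is an
    assumption for illustration, not necessarily what lcurvetools does.
    """
    groups = {"loss": [], "metric": [], "lr": []}
    for key in history:
        if key == "lr":
            groups["lr"].append(key)
        elif "loss" in key:
            groups["loss"].append(key)
        else:
            groups["metric"].append(key)
    return groups


groups = group_history_keys(history)
print(groups)
```

Each group would correspond to one subplot of the output figure.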

### Usage scheme

- Import the `keras` module and the `lcurves_by_history` function:

```python
import keras
from lcurvetools import lcurves_by_history
```

- [Create](https://keras.io/api/models/), [compile](https://keras.io/api/models/model_training_apis/#compile-method) and [fit](https://keras.io/api/models/model_training_apis/#fit-method) the keras model:

```python
model = keras.Model(...)  # or keras.Sequential(...)
model.compile(...)
hist = model.fit(...)
```

- Use the `hist.history` dictionary to plot the learning curves, i.e. the values of all keys in the dictionary against the epoch index, with automatic recognition of loss, metric and learning rate keys:

```python
lcurves_by_history(hist.history);
```

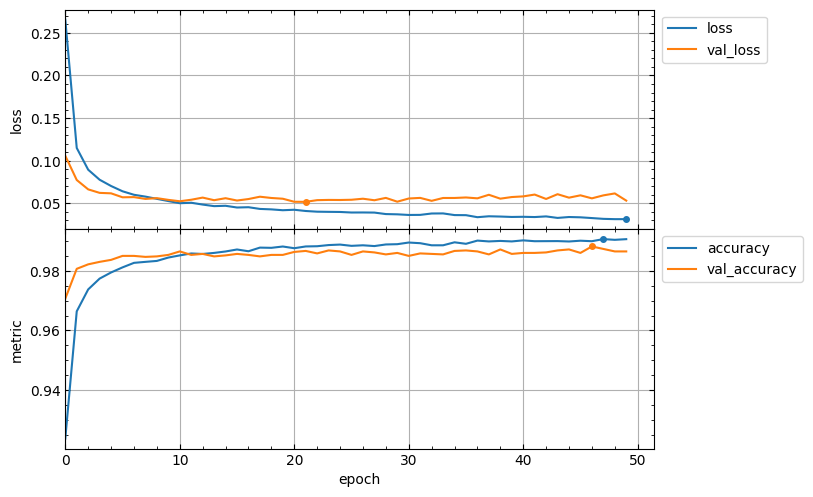

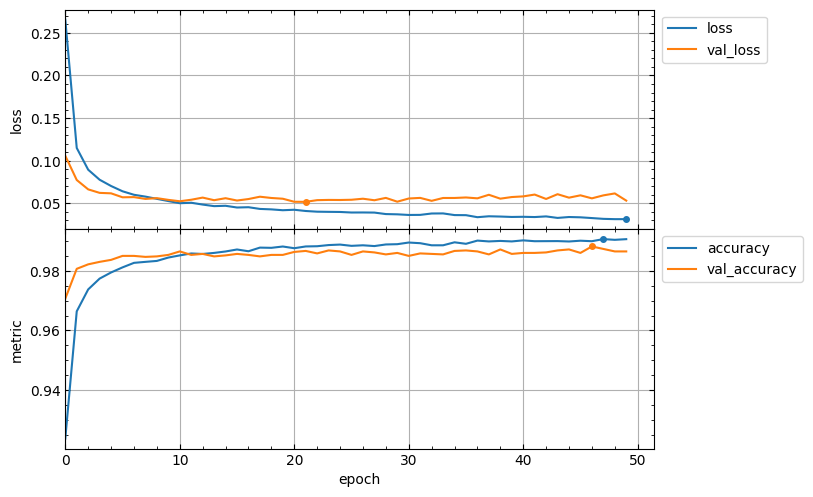

### Typical appearance of the output figure

The appearance of the output figure depends on the list of keys in the `hist.history` dictionary, which is determined by the parameters of the `compile` and `fit` methods of the model. For example, for a typical usage of these methods, the list of keys would be `['loss', 'accuracy', 'val_loss', 'val_accuracy']` and the output figure will contain 2 subplots with loss and metrics vertical axes and might look like this:

```python
model.compile(loss="categorical_crossentropy", metrics=["accuracy"])
hist = model.fit(x_train, y_train, validation_split=0.1, epochs=50)
lcurves_by_history(hist.history);
```

**Note:** minimum values of loss curves and the maximum values of metric curves are marked by points.

Of course, if the `metrics` parameter of the `compile` method is not specified, then the output figure will not contain a metric subplot.
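The marked points mentioned in the note above are simply the extrema of each curve. A minimal sketch of how such a point could be located in a plain list of per-epoch values follows; `best_epoch` is a hypothetical helper, not part of lcurvetools, and the values are made up.

```python
def best_epoch(values, mode):
    """Return (epoch_index, value) of the minimum ('min') or maximum ('max')."""
    if mode not in ("min", "max"):
        raise ValueError("mode must be 'min' or 'max'")
    pick = min if mode == "min" else max
    # Pick the index whose value is the extremum; ties resolve to the first epoch.
    index = pick(range(len(values)), key=values.__getitem__)
    return index, values[index]


val_loss = [0.88, 0.66, 0.59, 0.63]
val_accuracy = [0.67, 0.75, 0.79, 0.78]

loss_marker = best_epoch(val_loss, "min")      # lowest validation loss
acc_marker = best_epoch(val_accuracy, "max")   # highest validation accuracy
print(loss_marker, acc_marker)
```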

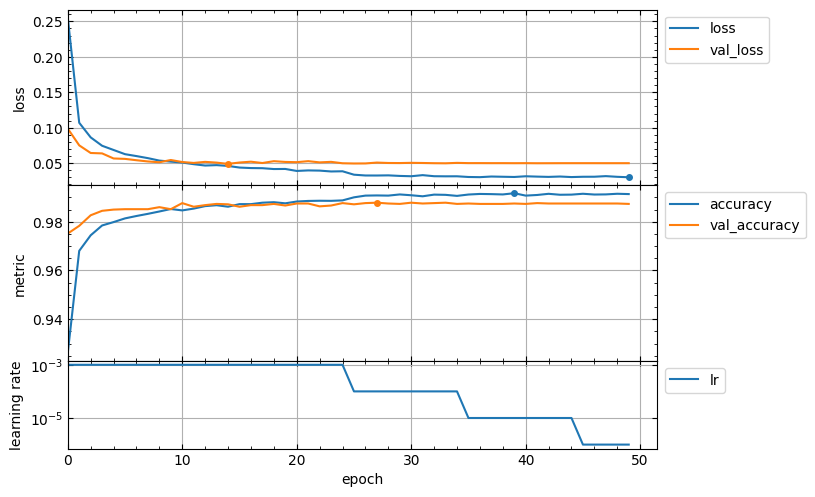

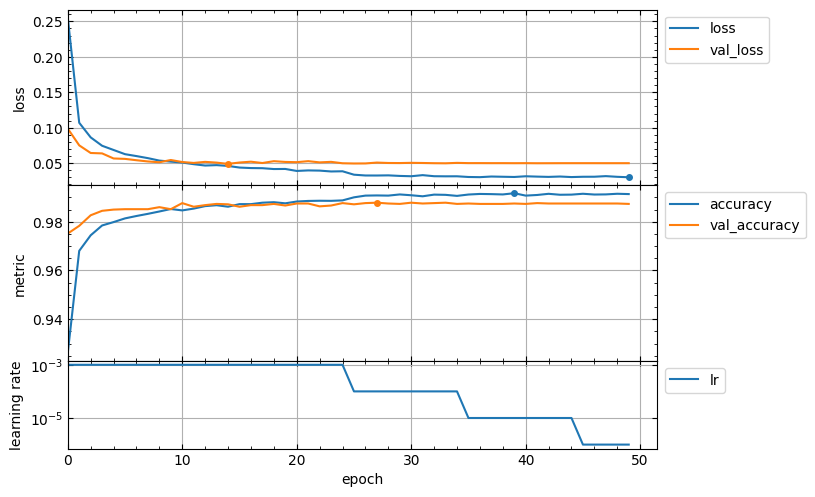

Using callbacks with the `fit` method can add new keys to the `hist.history` dictionary. For example, the [ReduceLROnPlateau](https://keras.io/api/callbacks/reduce_lr_on_plateau/) callback adds the `lr` key with learning rate values for successive epochs. In this case the output figure will contain an additional subplot with a learning rate vertical axis on a logarithmic scale and might look like this:

```python
hist = model.fit(x_train, y_train, validation_split=0.1, epochs=50,
    callbacks=keras.callbacks.ReduceLROnPlateau(),
)
lcurves_by_history(hist.history);
```

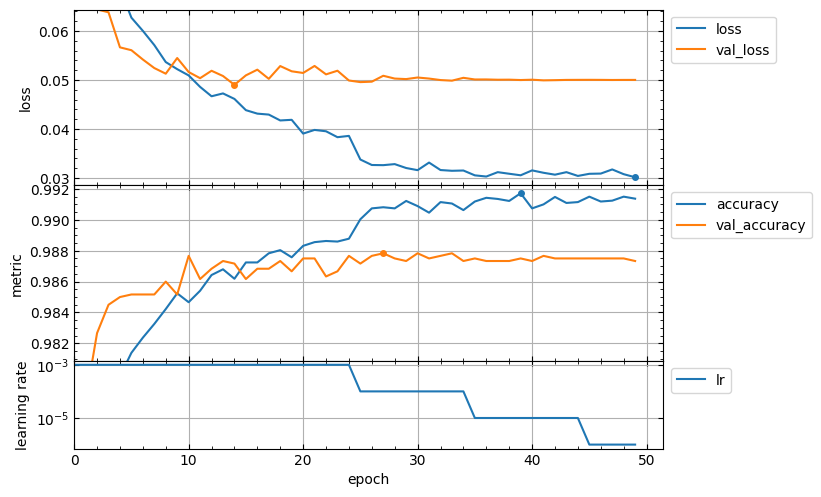

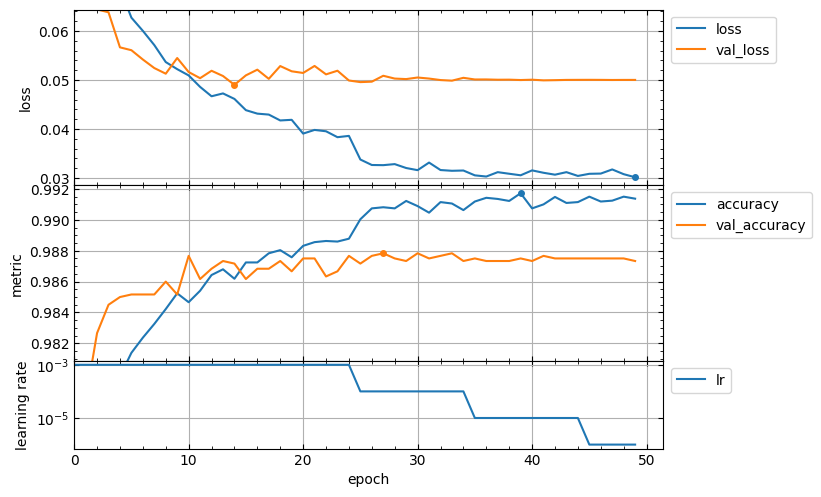

### Customizing appearance of the output figure

The `lcurves_by_history` function has optional parameters to customize the appearance of the output figure. For example, the `epoch_range_to_scale` option lets you specify the epoch index range within which the subplots of the losses and metrics are scaled.

- If `epoch_range_to_scale` is a list or a tuple of two int values, then they specify the epoch index limits of the scaling range in the form `[start, stop)`, i.e. as for `slice` and `range` objects.

- If `epoch_range_to_scale` is an int value, then it specifies the lower epoch index `start` of the scaling range, and the losses and metrics subplots are scaled by epochs with indices from `start` to the last.

So, you can exclude the first 5 epochs from the scaling range as follows:

```python
lcurves_by_history(hist.history, epoch_range_to_scale=5);
```
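To make the two accepted forms of `epoch_range_to_scale` concrete, the sketch below converts the option into a `slice` and computes vertical axis limits only from the values inside that range. The helpers `scaling_slice` and `y_limits` are hypothetical and not part of lcurvetools; how the library actually derives its axis limits may differ.

```python
def scaling_slice(epoch_range_to_scale, n_epochs):
    """Hypothetical helper: turn the option value into a slice of epoch indices."""
    if isinstance(epoch_range_to_scale, int):
        # A single int is the lower bound; the range runs to the last epoch.
        start, stop = epoch_range_to_scale, n_epochs
    else:
        # A two-element list or tuple is a half-open range [start, stop).
        start, stop = epoch_range_to_scale
    return slice(start, stop)


def y_limits(values, epoch_range_to_scale):
    """Minimum and maximum of the values that fall inside the scaling range."""
    window = values[scaling_slice(epoch_range_to_scale, len(values))]
    return min(window), max(window)


loss = [2.5, 1.4, 0.9, 0.7, 0.6, 0.55, 0.52, 0.5]  # made-up values

full = y_limits(loss, 0)       # all epochs
tail = y_limits(loss, 5)       # epochs 5, 6, 7
mid = y_limits(loss, (2, 5))   # epochs 2, 3, 4
print(full, tail, mid)
```

Excluding the first epochs keeps the large initial loss values from compressing the rest of the curve into a narrow band.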

For a description of other optional parameters of the `lcurves_by_history` function to customize the appearance of the output figure, see its docstring.

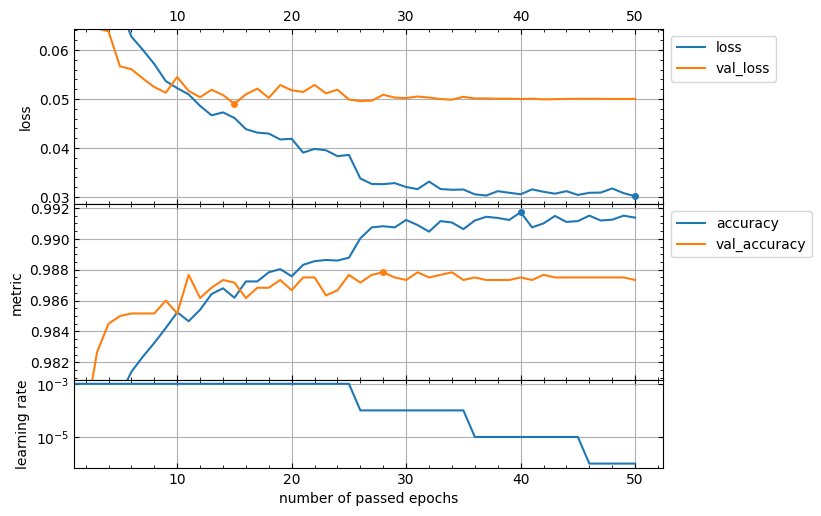

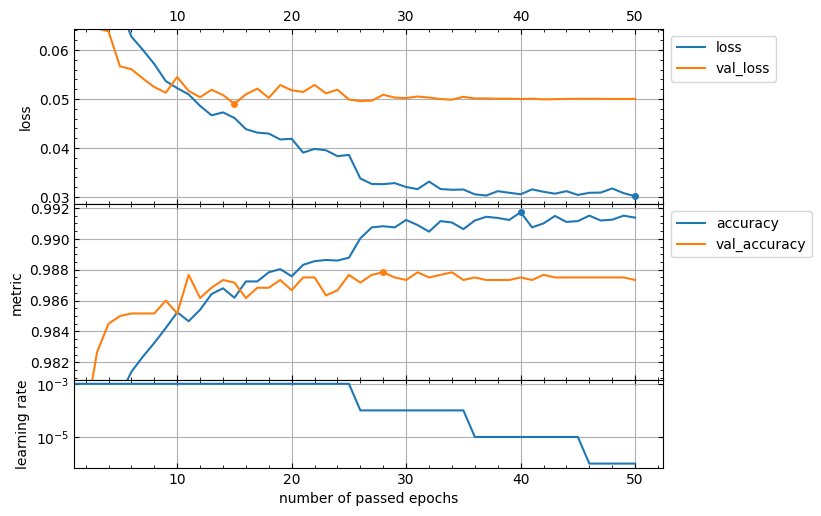

The `lcurves_by_history` function returns a numpy array or a list of the [`matplotlib.axes.Axes`](https://matplotlib.org/stable/api/_as_gen/matplotlib.axes.Axes.html) objects corresponding to the built subplots from top to bottom. So, you can use the methods of these objects to customize the appearance of the output figure.

```python
axs = lcurves_by_history(hist.history, initial_epoch=1, epoch_range_to_scale=6)
axs[0].tick_params(axis="x", labeltop=True)
axs[-1].set_xlabel('number of passed epochs')
axs[-1].legend().remove()
```

## The `history_concatenate` function to concatenate two `History.history` dictionaries

This function is useful for combining histories of model fitting with two or more runs into a single history to plot full learning curves.

### Usage scheme

- Import the `keras` module and the `history_concatenate` and `lcurves_by_history` functions:

```python
import keras
from lcurvetools import history_concatenate, lcurves_by_history
```

- [Create](https://keras.io/api/models/), [compile](https://keras.io/api/models/model_training_apis/#compile-method) and [fit](https://keras.io/api/models/model_training_apis/#fit-method) the keras model:

```python
model = keras.Model(...)  # or keras.Sequential(...)
model.compile(...)
hist1 = model.fit(...)
```

- Compile as needed and fit using possibly other parameter values:

```python
model.compile(...)  # optional
hist2 = model.fit(...)
```

- Concatenate the `.history` dictionaries into one:

```python
full_history = history_concatenate(hist1.history, hist2.history)
```

- Use `full_history` dictionary to plot full learning curves:

```python
lcurves_by_history(full_history);
```
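Conceptually, concatenating two histories means appending the per-epoch lists of the second run to those of the first. The sketch below shows that idea on plain dictionaries with made-up values. `concat_histories` is a hypothetical stand-in, not the implementation of `history_concatenate`, and it assumes both runs recorded the same keys.

```python
def concat_histories(first, second):
    """Hypothetical stand-in: append the per-epoch lists of `second` to `first`.

    This sketch requires both dictionaries to have the same keys; how the
    real `history_concatenate` treats mismatched keys is not covered here.
    """
    if first.keys() != second.keys():
        raise ValueError("this sketch expects both histories to share the same keys")
    # Build new lists so that neither input dictionary is modified.
    return {key: list(first[key]) + list(second[key]) for key in first}


run1 = {"loss": [0.9, 0.6], "val_loss": [0.95, 0.7]}
run2 = {"loss": [0.5, 0.45, 0.4], "val_loss": [0.65, 0.6, 0.62]}

full = concat_histories(run1, run2)
print(full["loss"])
```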

## The `lcurves_by_MLP_estimator` function to plot learning curves of the scikit-learn MLP estimator

The scikit-learn library provides two classes for building multi-layer perceptron (MLP) models for classification and regression: [`MLPClassifier`](https://scikit-learn.org/stable/modules/generated/sklearn.neural_network.MLPClassifier.html) and [`MLPRegressor`](https://scikit-learn.org/stable/modules/generated/sklearn.neural_network.MLPRegressor.html). When these MLP estimators are created with `early_stopping=True` and fitted, the `MLPClassifier` and `MLPRegressor` objects have the `loss_curve_` and `validation_scores_` attributes, which hold the training loss and validation score values at successive epochs. The `lcurves_by_MLP_estimator` function uses the `loss_curve_` and `validation_scores_` attributes to plot the learning curves, i.e. the above values as functions of the epoch index.

### Usage scheme

- Import the `MLPClassifier` (or `MLPRegressor`) class and the `lcurves_by_MLP_estimator` function:

```python
from sklearn.neural_network import MLPClassifier
from lcurvetools import lcurves_by_MLP_estimator
```

- [Create](https://scikit-learn.org/stable/modules/generated/sklearn.neural_network.MLPClassifier.html#sklearn.neural_network.MLPClassifier) and [fit](https://scikit-learn.org/stable/modules/generated/sklearn.neural_network.MLPClassifier.html#sklearn.neural_network.MLPClassifier.fit) the scikit-learn MLP estimator:

```python
clf = MLPClassifier(..., early_stopping=True)
clf.fit(...)
```

- Use `clf` object with `loss_curve_` and `validation_scores_` attributes to plot the learning curves as the dependences of loss and validation score values on epoch index:

```python
lcurves_by_MLP_estimator(clf)
```
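The two attributes used above are plain per-epoch sequences. The sketch below gathers them into a dictionary, using a `SimpleNamespace` with made-up values as a stand-in for a fitted estimator so that it runs without scikit-learn. `mlp_curves` is a hypothetical helper, not part of lcurvetools.

```python
from types import SimpleNamespace

# Stand-in for a fitted MLPClassifier(early_stopping=True); values are made up.
clf_like = SimpleNamespace(
    loss_curve_=[0.9, 0.6, 0.5, 0.45],
    validation_scores_=[0.70, 0.78, 0.81, 0.80],
)


def mlp_curves(estimator):
    """Hypothetical helper: collect the per-epoch curves into a dictionary."""
    scores = getattr(estimator, "validation_scores_", None)
    if scores is None:
        # Validation scores are only recorded when early stopping is enabled.
        raise ValueError("fit the estimator with early_stopping=True")
    return {
        "loss": list(estimator.loss_curve_),
        "validation_score": list(scores),
    }


curves = mlp_curves(clf_like)
print(curves)
```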

### Typical appearance of the output figure

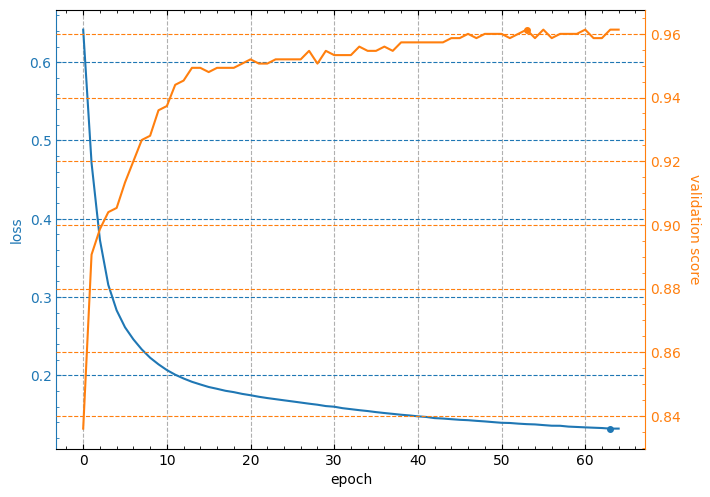

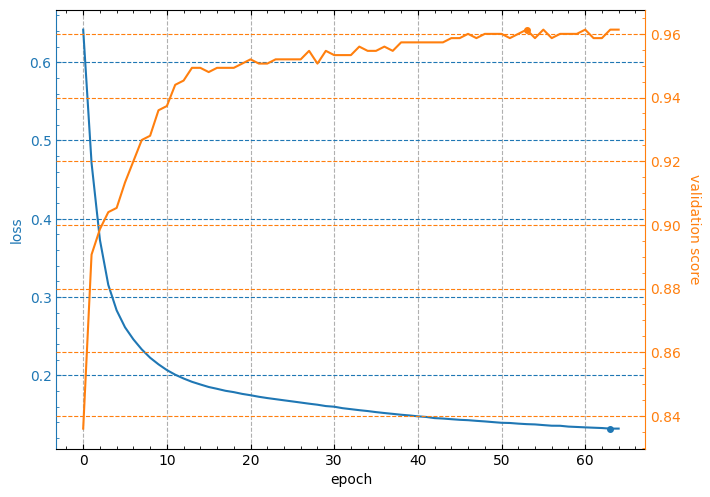

The `lcurves_by_MLP_estimator` function with default value of the parameter `on_separate_subplots=False` shows the learning curves of loss and validation score on one plot with two vertical axes scaled independently. Loss values are plotted on the left axis and validation score values are plotted on the right axis. The output figure might look like this:

**Note:** the minimum value of loss curve and the maximum value of validation score curve are marked by points.

### Customizing appearance of the output figure

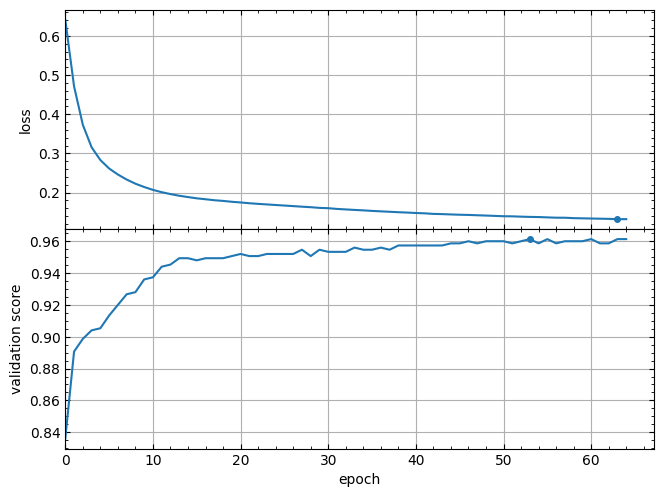

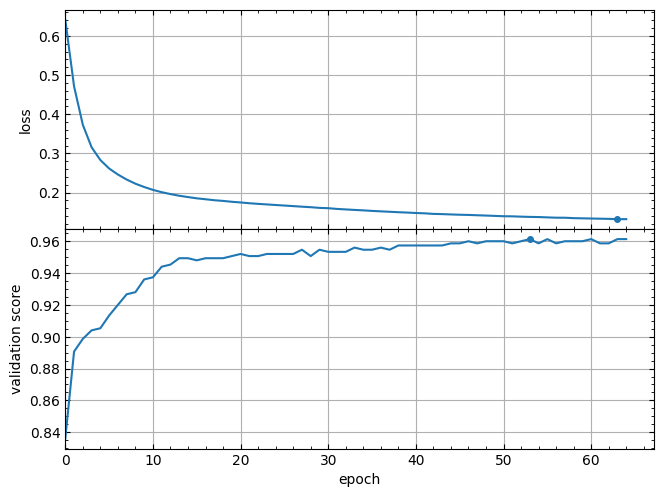

The `lcurves_by_MLP_estimator` function has optional parameters to customize the appearance of the output figure. For example, the `lcurves_by_MLP_estimator` function with `on_separate_subplots=True` shows the learning curves of loss and validation score on two separate subplots:

```python
lcurves_by_MLP_estimator(clf, on_separate_subplots=True)
```

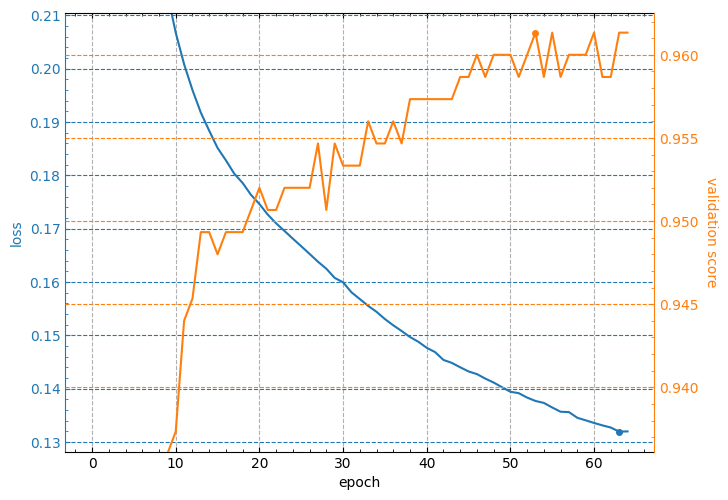

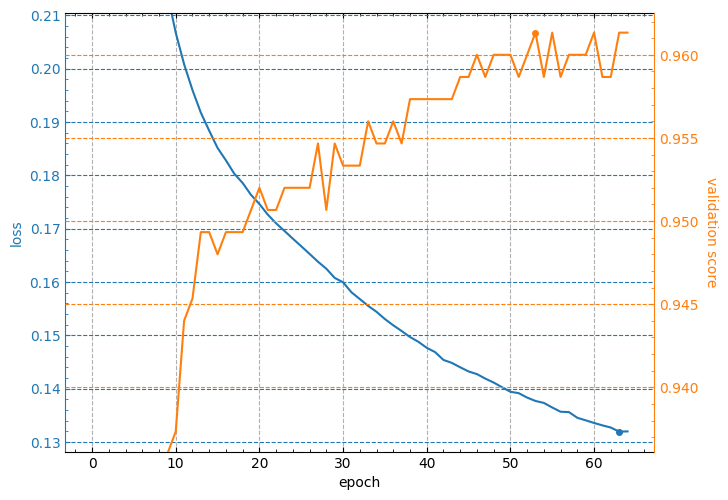

The `epoch_range_to_scale` option lets you specify the epoch index range within which the subplots of the losses and metrics are scaled (see details about this option in the docstring of the `lcurves_by_MLP_estimator` function).

```python
lcurves_by_MLP_estimator(clf, epoch_range_to_scale=10);
```

For a description of other optional parameters of the `lcurves_by_MLP_estimator` function to customize the appearance of the output figure, see its docstring.

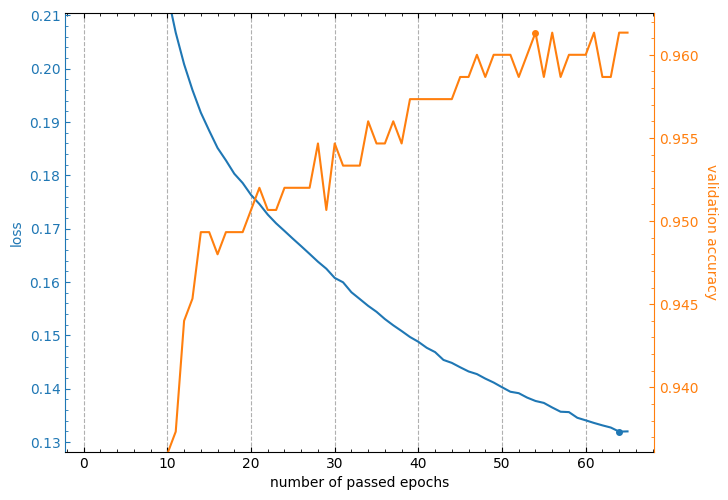

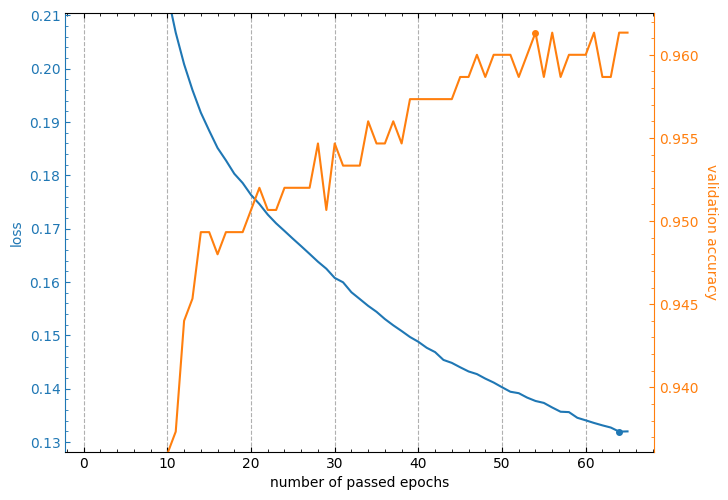

The `lcurves_by_MLP_estimator` function returns a numpy array or a list of the [`matplotlib.axes.Axes`](https://matplotlib.org/stable/api/_as_gen/matplotlib.axes.Axes.html) objects of an output figure (see additional details in the `Returns` section of the `lcurves_by_MLP_estimator` function docstring). So, you can use the methods of these objects to customize the appearance of the output figure.

```python
axs = lcurves_by_MLP_estimator(clf, initial_epoch=1, epoch_range_to_scale=11);
axs[0].grid(axis='y', visible=False)
axs[1].grid(axis='y', visible=False)
axs[0].set_xlabel('number of passed epochs')
axs[1].set_ylabel('validation accuracy');
```

# CHANGELOG

## Version 1.0.0

Initial release.

Raw data

{

"_id": null,

"home_page": "https://github.com/kamua/lcurvetools",

"name": "lcurvetools",

"maintainer": "",

"docs_url": null,

"requires_python": "",

"maintainer_email": "",

"keywords": "learning curve,keras history,loss_curve,validation_score",

"author": "Andriy Konovalov",

"author_email": "kandriy74@gmail.com",

"download_url": "https://files.pythonhosted.org/packages/21/78/c06582befc46785e0d8812b347b9f1dc94c21325ead9afedf8f01ea13197/lcurvetools-1.0.0.tar.gz",

"platform": null,


"bugtrack_url": null,

"license": "BSD 3-Clause License",

"summary": "Simple tools to plot learning curves of neural network models created by scikit-learn or keras framework.",

"version": "1.0.0",

"project_urls": {

"Homepage": "https://github.com/kamua/lcurvetools"

},

"split_keywords": [

"learning curve",

"keras history",

"loss_curve",

"validation_score"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "518d2dca13095436c958b95f44cbf8475fc70a75152f984fb610425775fa152d",

"md5": "c780369f79274ed3cdec1e6e45c2fb41",

"sha256": "963236bf42c24d8de21908c4aba89ccec52fa97efab9ecc2ab5a692fde134834"

},

"downloads": -1,

"filename": "lcurvetools-1.0.0-py3-none-any.whl",

"has_sig": false,

"md5_digest": "c780369f79274ed3cdec1e6e45c2fb41",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": null,

"size": 10894,

"upload_time": "2024-01-23T00:19:01",

"upload_time_iso_8601": "2024-01-23T00:19:01.158577Z",

"url": "https://files.pythonhosted.org/packages/51/8d/2dca13095436c958b95f44cbf8475fc70a75152f984fb610425775fa152d/lcurvetools-1.0.0-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "2178c06582befc46785e0d8812b347b9f1dc94c21325ead9afedf8f01ea13197",

"md5": "e5ee30483dff8873f79901a96060776d",

"sha256": "ed1a976d9204ec5d7c47c569bbc6793b6143ba3d60c25efd6a8f6e5e3bc60fdf"

},

"downloads": -1,

"filename": "lcurvetools-1.0.0.tar.gz",

"has_sig": false,

"md5_digest": "e5ee30483dff8873f79901a96060776d",

"packagetype": "sdist",

"python_version": "source",

"requires_python": null,

"size": 12893,

"upload_time": "2024-01-23T00:19:03",

"upload_time_iso_8601": "2024-01-23T00:19:03.322553Z",

"url": "https://files.pythonhosted.org/packages/21/78/c06582befc46785e0d8812b347b9f1dc94c21325ead9afedf8f01ea13197/lcurvetools-1.0.0.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-01-23 00:19:03",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "kamua",

"github_project": "lcurvetools",

"travis_ci": false,

"coveralls": false,

"github_actions": false,

"lcname": "lcurvetools"

}