# 🤥 LIAH - a Lie-in-haystack

As LLM context lengths grow, it becomes increasingly difficult to test
whether fine-tuned models attend to all depths of the context.

The needle-in-a-haystack test is a popular approach. However, an LLM can also answer
a question about the needle from what it already knows instead of retrieving the needle
from the context. Tests have shown that a "lie" works well in this setting 😊

[Lost in the Middle - Paper](https://arxiv.org/abs/2307.03172)

lie: **"Picasso painted the Mona Lisa"**

retrieve: **"Who painted the Mona Lisa?"**
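
To make the idea concrete, here is a minimal sketch of placing a lie at a chosen depth of a haystack and checking whether a response repeats it. It is not Liah's implementation: `insert_lie`, `build_prompt`, `retrieved_lie`, the filler sentences, and the prompt wording are all hypothetical choices made for illustration.

```python
# Illustrative sketch only; these helpers are assumptions, not part of the liah package.


def insert_lie(haystack: str, lie: str, depth: float) -> str:
    """Insert `lie` at a relative depth (0.0 = start, 1.0 = end) of `haystack`.

    The position is snapped to a sentence boundary so the lie stays intact.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    sentences = [s.strip() for s in haystack.split(".") if s.strip()]
    position = round(depth * len(sentences))
    sentences.insert(position, lie.rstrip("."))
    return ". ".join(sentences) + "."


def build_prompt(context: str, question: str) -> str:
    """Ask the retrieval question after the context (wording is an assumption)."""
    return f"{context}\n\nAnswer using only the text above.\n{question}"


def retrieved_lie(response: str, lie_answer: str = "Picasso") -> bool:
    """The test passes when the response repeats the lie from the context."""
    return lie_answer.lower() in response.lower()


haystack = "The sky is blue. Water is wet. Grass is green. Snow is cold."
context = insert_lie(haystack, "Picasso painted the Mona Lisa", depth=0.5)
prompt = build_prompt(context, "Who painted the Mona Lisa?")
print(prompt)
```

A model that answers "Leonardo da Vinci" here is relying on prior knowledge, while one that answers "Picasso" has read the context at that depth. The simple substring check is a simplification chosen for this sketch.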

## Installation

    pip install liah

## Example Usage

    # Set OPENAI_API_KEY in your environment if you need
    # OpenAI models for the final evaluation.
    from liah import Liah
    from vllm import LLM, SamplingParams

    # Create a sampling params object.
    sampling_params = SamplingParams(temperature=0.8, top_p=0.95, max_tokens=4096)
    llm = LLM(model="meta-llama/Llama-2-70b-hf", tensor_parallel_size=4, max_model_len=1500)  # needs 4 A100s (40 GB)

    # Create Liah
    liah = Liah(max_context_length=2000)

    # Get samples at different depths and context lengths
    for i, sample in enumerate(liah.getSample()):
        # Test the sample text with your model
        output = llm.generate([sample["prompt"]], sampling_params)[0]
        # Update Liah with the response
        liah.update(sample, output.outputs[0].text)

    # Path to the plot file produced by Liah
    plotFilePath = liah.evaluate()
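
For readers who want a feel for what a depth versus context-length result looks like, the sketch below tabulates pass rates from a list of test outcomes. It is a standalone illustration, not how `liah.evaluate()` works internally: the record layout `(context_length, depth_percent, passed)`, the sample values, and the helper names `pass_rates` and `format_grid` are all assumptions.

```python
# Illustrative sketch only; the record format and helpers are hypothetical.
from collections import defaultdict


def pass_rates(records):
    """Map each (context_length, depth_percent) cell to its fraction of passed tests."""
    totals = defaultdict(lambda: [0, 0])  # cell -> [passes, trials]
    for context_length, depth, passed in records:
        cell = totals[(context_length, depth)]
        cell[0] += int(passed)
        cell[1] += 1
    return {key: passes / trials for key, (passes, trials) in totals.items()}


def format_grid(rates):
    """Render the pass rates as a text grid: rows are depths, columns are context lengths."""
    lengths = sorted({length for length, _ in rates})
    depths = sorted({depth for _, depth in rates})
    rows = ["depth%".ljust(8) + "".join(str(n).rjust(8) for n in lengths)]
    for depth in depths:
        cells = "".join(
            (f"{rates[(n, depth)]:.2f}" if (n, depth) in rates else "-").rjust(8)
            for n in lengths
        )
        rows.append(str(depth).ljust(8) + cells)
    return "\n".join(rows)


# Made-up outcomes purely for demonstration.
records = [
    (1000, 0, True), (1000, 50, False), (1000, 100, True),
    (2000, 0, True), (2000, 50, False), (2000, 50, True), (2000, 100, True),
]
rates = pass_rates(records)
print(format_grid(rates))
```

Low values in the middle rows would indicate that the model misses lies placed mid-context, which is the pattern described in the Lost in the Middle paper linked above.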

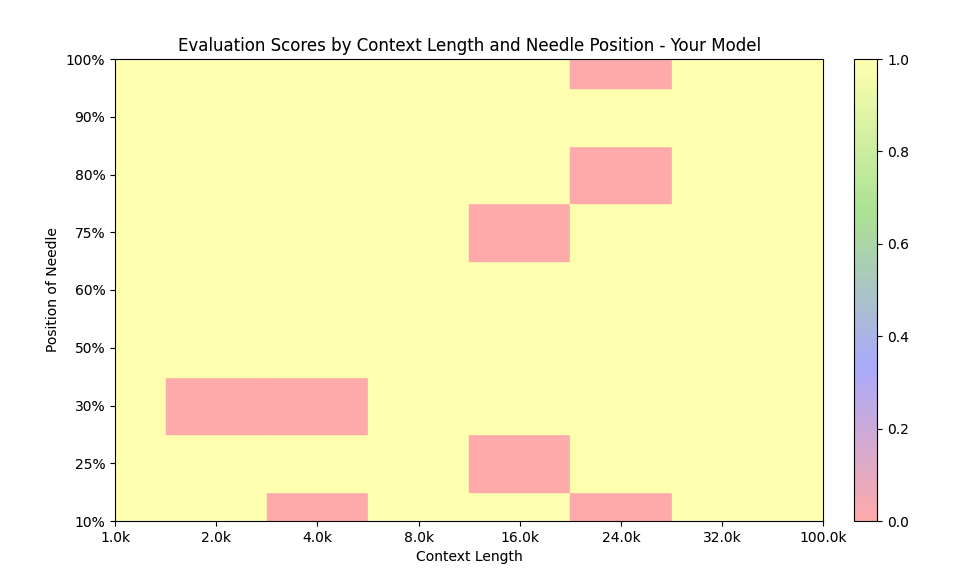

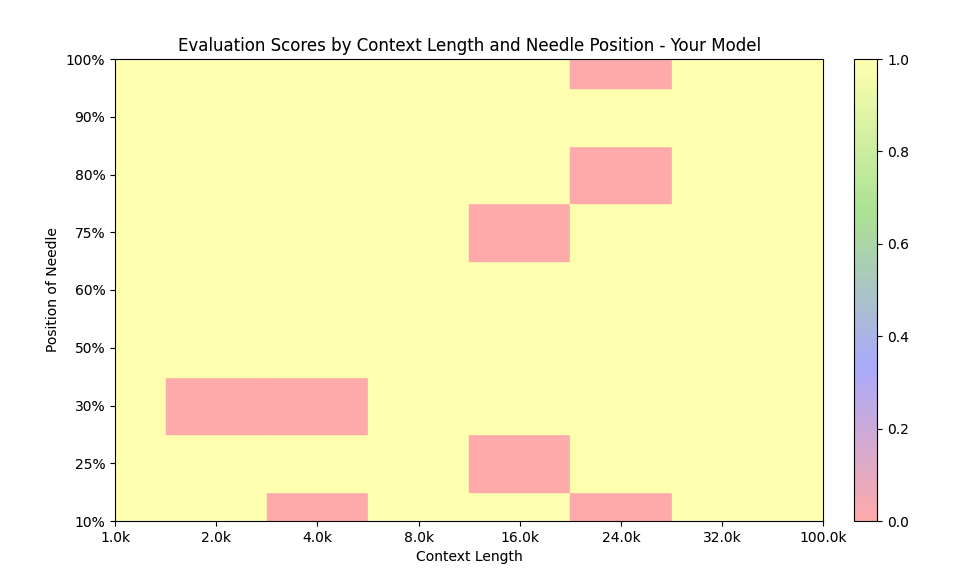

## Sample plot

## Contribute

    pip install pre-commit

Then, in the repository (just once):

    pre-commit install

## Before commit (optional)

    pre-commit run --all-files

Raw data

    {
        "_id": null,
        "home_page": "https://github.com/melvinebenezer/Liah-Lie_in_a_haystack",
        "name": "liah",
        "maintainer": null,
        "docs_url": null,
        "requires_python": ">=3.6",
        "maintainer_email": null,
        "keywords": "llm, needle in a haystack",
        "author": "James Melvin Priyarajan",
        "author_email": "melvinebenezer@gmail.com",
        "download_url": "https://files.pythonhosted.org/packages/31/15/18a7d169bb62d8614c554157ba8a180f2bafe9aa05a576b5a88eb76a4f23/liah-0.1.6.tar.gz",
        "platform": null,
        "bugtrack_url": null,
        "license": "MIT",
        "summary": "Insert a Lie in a Haystack and evaluate the model's ability to detect it.",
        "version": "0.1.6",
        "project_urls": {
            "Homepage": "https://github.com/melvinebenezer/Liah-Lie_in_a_haystack"
        },
        "split_keywords": [
            "llm",
            " needle in a haystack"
        ],
        "urls": [
            {
                "comment_text": "",
                "digests": {
                    "blake2b_256": "990162b60bafd08cdd256453c82820a37617481011a820f82aad5479a32ba01a",
                    "md5": "c80cb8d8e82addec4e0f856be4c0e4e6",
                    "sha256": "80b87022b4d4f0ee8f254909dbf9bd17fc9fcf33b66ab41b46572f2e61b49d84"
                },
                "downloads": -1,
                "filename": "liah-0.1.6-py3-none-any.whl",
                "has_sig": false,
                "md5_digest": "c80cb8d8e82addec4e0f856be4c0e4e6",
                "packagetype": "bdist_wheel",
                "python_version": "py3",
                "requires_python": ">=3.6",
                "size": 253582,
                "upload_time": "2024-04-15T09:27:03",
                "upload_time_iso_8601": "2024-04-15T09:27:03.898272Z",
                "url": "https://files.pythonhosted.org/packages/99/01/62b60bafd08cdd256453c82820a37617481011a820f82aad5479a32ba01a/liah-0.1.6-py3-none-any.whl",
                "yanked": false,
                "yanked_reason": null
            },
            {
                "comment_text": "",
                "digests": {
                    "blake2b_256": "311518a7d169bb62d8614c554157ba8a180f2bafe9aa05a576b5a88eb76a4f23",
                    "md5": "891459d07f94f86f704f9db494a1b58e",
                    "sha256": "d337c5c9c050aabca0da1473afede741de5fccc6f4a7ccba81e730205f2ca488"
                },
                "downloads": -1,
                "filename": "liah-0.1.6.tar.gz",
                "has_sig": false,
                "md5_digest": "891459d07f94f86f704f9db494a1b58e",
                "packagetype": "sdist",
                "python_version": "source",
                "requires_python": ">=3.6",
                "size": 250998,
                "upload_time": "2024-04-15T09:27:08",
                "upload_time_iso_8601": "2024-04-15T09:27:08.498415Z",
                "url": "https://files.pythonhosted.org/packages/31/15/18a7d169bb62d8614c554157ba8a180f2bafe9aa05a576b5a88eb76a4f23/liah-0.1.6.tar.gz",
                "yanked": false,
                "yanked_reason": null
            }
        ],
        "upload_time": "2024-04-15 09:27:08",
        "github": true,
        "gitlab": false,
        "bitbucket": false,
        "codeberg": false,
        "github_user": "melvinebenezer",
        "github_project": "Liah-Lie_in_a_haystack",
        "travis_ci": false,
        "coveralls": false,
        "github_actions": true,
        "requirements": [
            {
                "name": "openai",
                "specs": [
                    [
                        ">=",
                        "1.16.1"
                    ]
                ]
            },
            {
                "name": "matplotlib",
                "specs": []
            },
            {
                "name": "tiktoken",
                "specs": []
            },
            {
                "name": "tqdm",
                "specs": []
            }
        ],
        "lcname": "liah"
    }