| Name | llm-council |
|---|---|
| Version | 0.1.3 |
| home_page | None |
| Summary | Use LLM to generate and execute commands in your shell |
| upload_time | 2025-02-01 00:55:27 |
| maintainer | None |
| docs_url | None |
| author | Simon Willison |
| requires_python | None |
| license | MIT |
| keywords | |
| VCS | |
| bugtrack_url | |
| requirements | No requirements were recorded. |
| Travis-CI | No Travis. |
| coveralls test coverage | No coveralls. |

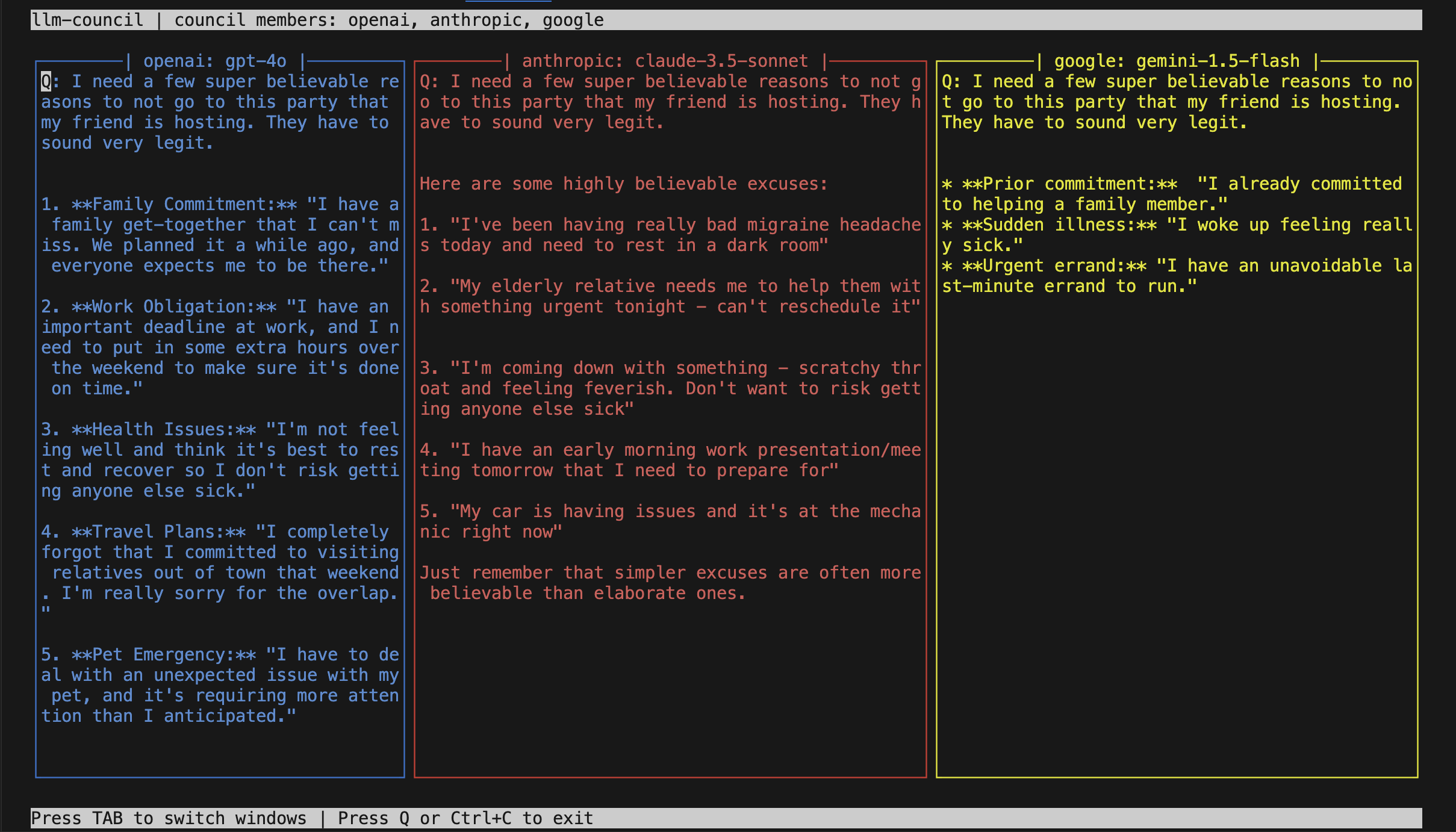

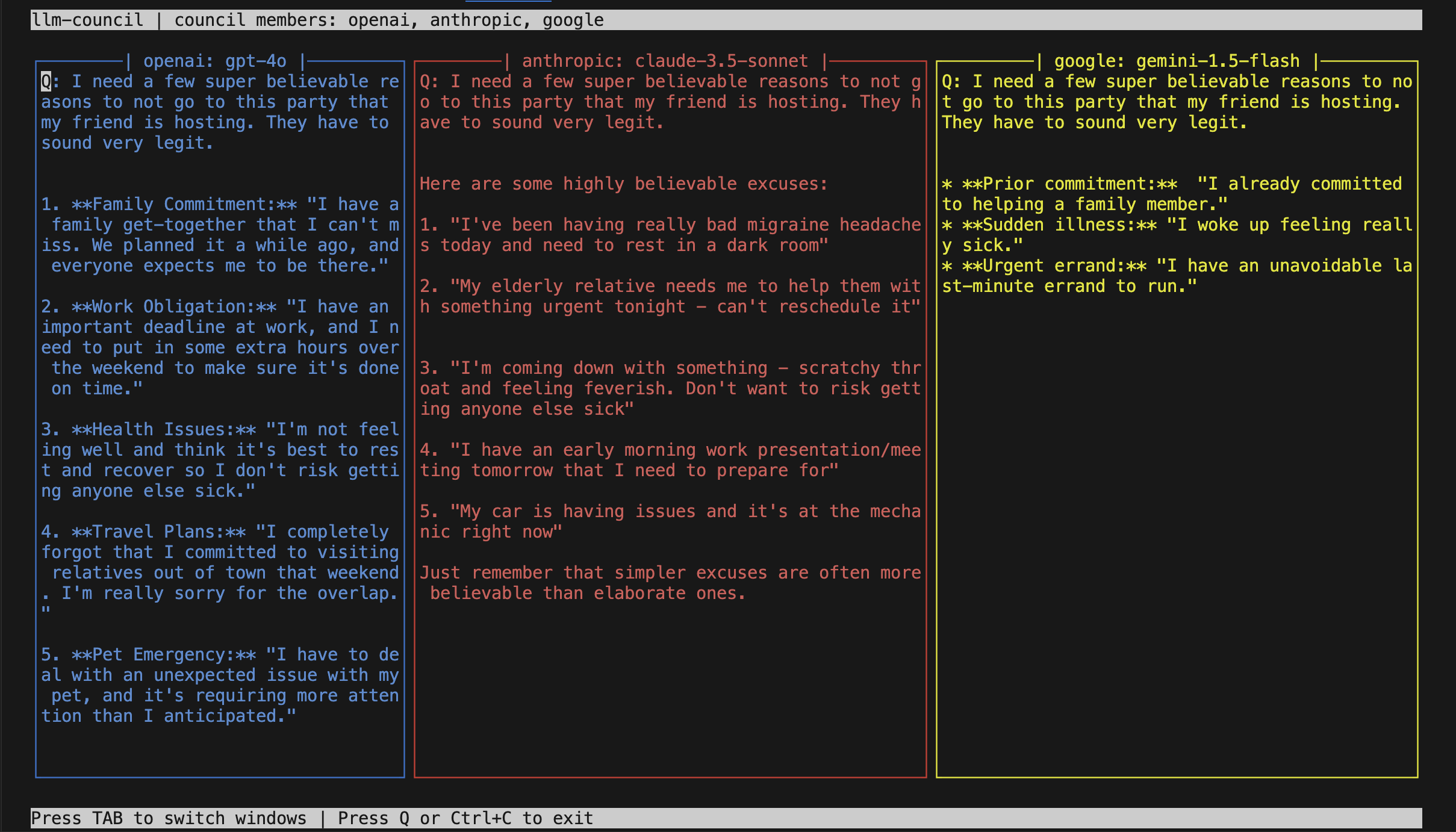

# llm-council

Get a council of LLMs to advise you!

## Installation

This plugin should be installed in the same environment as [LLM](https://llm.datasette.io/).

```bash
llm install llm-council
```

### Supported models/providers

The models are currently fixed:

- `openai`: `gpt-4o`

- `anthropic`: `claude-3.5-sonnet`

- `google`: `gemini-1.5-flash-latest`

The necessary LLM plugins are already installed, but you still need to set an API key for each provider:

```bash
llm keys set openai
llm keys set claude
llm keys set gemini
```
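To confirm the keys are in place before convening the council, you can compare the output of `llm keys list` against the three key names above. The `check_council_keys` function below is a hypothetical helper, not part of llm-council, and it assumes `llm keys list` prints one stored key name per line.

```shell
# Hypothetical helper (not part of llm-council): check that the keys set
# above are stored. Assumes `llm keys list` prints one key name per line.
check_council_keys() {
  stored=$(llm keys list) || return 1
  for key in openai claude gemini; do
    # -x matches whole lines only, so "openai" does not match "openai-old"
    if ! printf '%s\n' "$stored" | grep -qx "$key"; then
      echo "missing key: $key" >&2
      return 1
    fi
  done
  echo "all council keys are set"
}
```

Run `check_council_keys` after the `llm keys set` commands; it prints the name of the first missing key and returns a non-zero status if one is absent.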

## Usage

I usually run every query on several LLMs just to compare what they have to say, and I love the `llm` library. With this plugin you can assemble your own council of advisors by running `llm council`:

```bash
llm council "What's the California traffic law around double white lines?"
```

By default, it queries `openai` and `anthropic`. To choose the providers yourself, pass `-p` once per provider:

```bash
llm council -p openai -p anthropic 'tell me a joke'
```

Press `Q` or `Ctrl+C` to exit.
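Because `-p` can be repeated, the same pattern extends to all three supported providers. The `council_all` wrapper below is a hypothetical convenience function you could add to your shell profile; it assumes `google` is the provider name `-p` accepts for Gemini, as listed under supported models.

```shell
# Hypothetical wrapper: ask every supported provider at once.
# Assumes -p accepts `google` for gemini-1.5-flash-latest.
council_all() {
  llm council -p openai -p anthropic -p google "$@"
}
```

Usage: `council_all 'tell me a joke'`. Any extra arguments are passed through to `llm council` unchanged.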

## The system prompt

By default, the tool uses this system prompt:

> Keep your answers brief and to the point.

To override it, pass your own prompt with the `--system` option.
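A minimal sketch of a one-off override, wrapped in a hypothetical `council_with_system` function so the prompt comes first and the question second. It assumes `--system` takes the replacement prompt text as its value; the call it makes is equivalent to running `llm council --system 'Answer in a single sentence.' 'tell me a joke'` directly.

```shell
# Hypothetical wrapper: replace the default "brief" system prompt for one
# query. Assumes --system takes the new prompt text as its value.
council_with_system() {
  system_prompt=$1   # first argument: the replacement system prompt
  shift              # remaining arguments: the question and any flags
  llm council --system "$system_prompt" "$@"
}
```

Usage: `council_with_system 'Answer in a single sentence.' 'tell me a joke'`.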

## Development

To set up this plugin locally, first check out the code, then create a new virtual environment:

```bash
git clone https://github.com/nuwandavek/llm-council
cd llm-council
uv venv
source .venv/bin/activate

uv pip install -r pyproject.toml
```

Then install the plugin in editable mode:

```bash
llm install -e .
```
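After the editable install, `llm plugins` lists the installed plugins as JSON, so a quick check that `llm-council` is among them might look like this. The `council_installed` name is hypothetical, and the check assumes the JSON output contains a `"name": "llm-council"` entry with a single space after the colon.

```shell
# Hypothetical check: succeed only if llm-council appears in `llm plugins`.
# Assumes the JSON output uses `"name": "<plugin>"` formatting.
council_installed() {
  llm plugins | grep -q '"name": "llm-council"'
}
```

Usage: `council_installed && echo "llm-council is installed"`.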

Raw data

{

"_id": null,

"home_page": null,

"name": "llm-council",

"maintainer": null,

"docs_url": null,

"requires_python": null,

"maintainer_email": null,

"keywords": null,

"author": "Simon Willison",

"author_email": null,

"download_url": "https://files.pythonhosted.org/packages/ae/27/7757ef6d22394088bf17491299d7c65831300586b15e2b58d67777d7b1a7/llm_council-0.1.3.tar.gz",

"platform": null,


"bugtrack_url": null,

"license": "MIT",

"summary": "Use LLM to generate and execute commands in your shell",

"version": "0.1.3",

"project_urls": {

"homepage": "https://github.com/nuwandavek/llm-council"

},

"split_keywords": [],

"urls": [

{

"comment_text": null,

"digests": {

"blake2b_256": "e8ef830875ffab11f2e9e85d4cc24f94d4642cc4d50dd26a906a6a68c6037b13",

"md5": "d8942d7b1537cfca9ef168dcf89ccf74",

"sha256": "80779eff9251cbef8f133021a6fd14846b518c77978559f26553f2cc3fd6c504"

},

"downloads": -1,

"filename": "llm_council-0.1.3-py3-none-any.whl",

"has_sig": false,

"md5_digest": "d8942d7b1537cfca9ef168dcf89ccf74",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": null,

"size": 4468,

"upload_time": "2025-02-01T00:55:26",

"upload_time_iso_8601": "2025-02-01T00:55:26.205707Z",

"url": "https://files.pythonhosted.org/packages/e8/ef/830875ffab11f2e9e85d4cc24f94d4642cc4d50dd26a906a6a68c6037b13/llm_council-0.1.3-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": null,

"digests": {

"blake2b_256": "ae277757ef6d22394088bf17491299d7c65831300586b15e2b58d67777d7b1a7",

"md5": "fda63b9daca5e8e27c9d7007c841d43b",

"sha256": "ac19699927432cdc297ada10f124082a3b45a5660aa7b6aa4409d393100406cd"

},

"downloads": -1,

"filename": "llm_council-0.1.3.tar.gz",

"has_sig": false,

"md5_digest": "fda63b9daca5e8e27c9d7007c841d43b",

"packagetype": "sdist",

"python_version": "source",

"requires_python": null,

"size": 3964,

"upload_time": "2025-02-01T00:55:27",

"upload_time_iso_8601": "2025-02-01T00:55:27.756487Z",

"url": "https://files.pythonhosted.org/packages/ae/27/7757ef6d22394088bf17491299d7c65831300586b15e2b58d67777d7b1a7/llm_council-0.1.3.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2025-02-01 00:55:27",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "nuwandavek",

"github_project": "llm-council",

"travis_ci": false,

"coveralls": false,

"github_actions": false,

"lcname": "llm-council"

}