# llmgraph

Create knowledge graphs with LLMs.

llmgraph enables you to create knowledge graphs in [GraphML](http://graphml.graphdrawing.org/), [GEXF](https://gexf.net/), and HTML formats (generated via [pyvis](https://github.com/WestHealth/pyvis)) from a given source entity Wikipedia page. The knowledge graphs are generated by extracting world knowledge from ChatGPT or other large language models (LLMs) as supported by [LiteLLM](https://github.com/BerriAI/litellm).

For background on knowledge graphs, see this [YouTube overview by Computerphile](https://www.youtube.com/watch?v=PZBm7M0HGzw).

## Features

- Create knowledge graphs, given a source entity.

- Uses ChatGPT (or another specified LLM) to extract world knowledge.

- Generate knowledge graphs in HTML, GraphML, and GEXF formats.

- Many entity types and relationships supported by [customised prompts](https://github.com/dylanhogg/llmgraph/blob/main/llmgraph/prompts.yaml).

- Cache support to iteratively grow a knowledge graph, efficiently.

- Outputs `total tokens` used to understand LLM costs (even though a default run is only about 1 cent).

- Customisable model (default is OpenAI `gpt-4o-mini` for speed and cost).
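Because GraphML is plain XML, a generated graph can be inspected with nothing more than the Python standard library. The sketch below is illustrative only: the sample document and the `parse_graphml` helper are hypothetical, and the files llmgraph actually writes will likely carry additional attributes.

```python
import xml.etree.ElementTree as ET

# Hypothetical miniature GraphML document; real llmgraph output will differ.
SAMPLE_GRAPHML = """<graphml xmlns="http://graphml.graphdrawing.org/xmlns">
  <graph edgedefault="directed">
    <node id="Artificial intelligence"/>
    <node id="Machine learning"/>
    <node id="Deep learning"/>
    <edge source="Artificial intelligence" target="Machine learning"/>
    <edge source="Machine learning" target="Deep learning"/>
  </graph>
</graphml>"""

# GraphML elements live in this XML namespace.
NS = {"g": "http://graphml.graphdrawing.org/xmlns"}


def parse_graphml(text):
    """Return (node_ids, edges) from a GraphML string, in document order."""
    root = ET.fromstring(text)
    nodes = [node.get("id") for node in root.findall(".//g:node", NS)]
    edges = [
        (edge.get("source"), edge.get("target"))
        for edge in root.findall(".//g:edge", NS)
    ]
    return nodes, edges


nodes, edges = parse_graphml(SAMPLE_GRAPHML)
print(f"{len(nodes)} nodes, {len(edges)} edges")
```

To read a real file, pass its contents in, for example `parse_graphml(Path("some_output.graphml").read_text())`, where the file name is a placeholder.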

## Installation

You can install llmgraph using pip, ideally into a Python [virtual environment](https://realpython.com/python-virtual-environments-a-primer/#create-it):

```bash
pip install llmgraph
```

Alternatively, check out [an example notebook](https://github.com/dylanhogg/llmgraph/blob/main/notebooks/llmgraph_example.ipynb) that uses llmgraph and can be run directly in Google Colab.

[Open the example notebook in Google Colab](https://colab.research.google.com/github/dylanhogg/llmgraph/blob/master/notebooks/llmgraph_example.ipynb)

## Example Output

In addition to GraphML and GEXF formats, an HTML [pyvis](https://github.com/WestHealth/pyvis) physics enabled graph can be viewed:

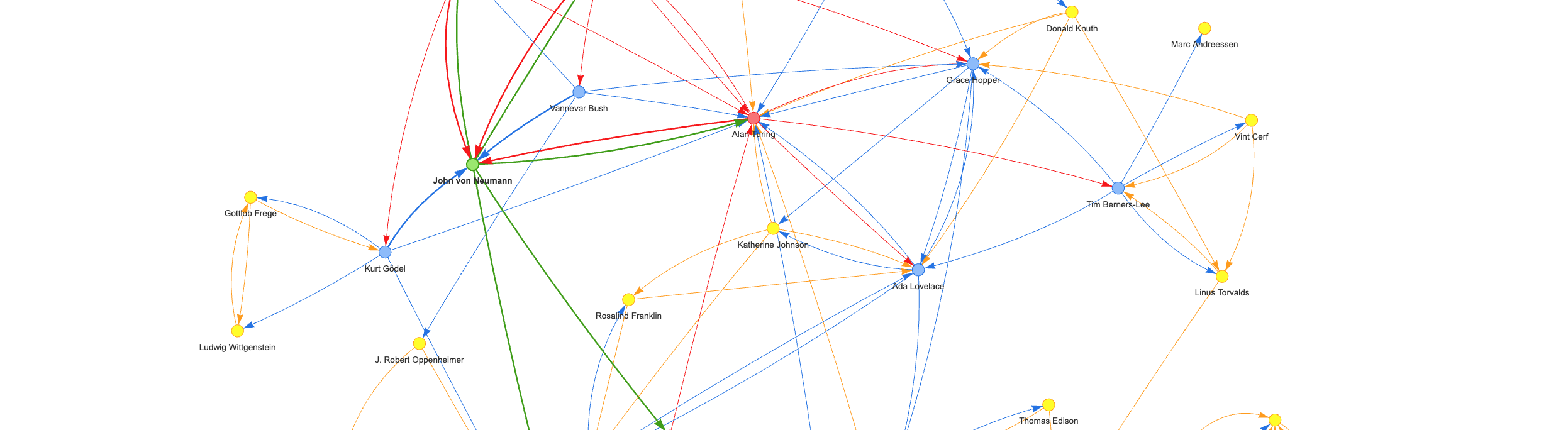

### Artificial Intelligence example

<sub>Generate above machine-learning graph:<br />`llmgraph machine-learning "https://en.wikipedia.org/wiki/Artificial_intelligence" --levels 4`

<br />View entire graph: <a target="_blank" href="https://blog.infocruncher.com/html/llmgraph/machine-learning_artificial-intelligence_v1.0.0_level4_fully_connected.html">machine-learning_artificial-intelligence_v1.0.0_level4_fully_connected.html</a></sub>

## llmgraph Usage

### Example Usage

The example above was generated with the following command, which requires an `entity_type` and a quoted `entity_wikipedia` source URL:

```bash
llmgraph machine-learning "https://en.wikipedia.org/wiki/Artificial_intelligence" --levels 3
```

This example creates a 3 level graph, based on the given start node `Artificial Intelligence`.

By default OpenAI is used, and you will need to set the `OPENAI_API_KEY` environment variable before running. See the [OpenAI docs](https://platform.openai.com/docs/quickstart/step-2-setup-your-api-key) for more info. The `total tokens used` is output as the run progresses. For reference, this 3 level example used a total of 7,650 gpt-4o-mini tokens, which is approximately 1.5 cents as of Oct 2023.

You can also specify a different LLM provider, including running with a local [ollama](https://github.com/jmorganca/ollama) model. You should be able to specify anything supported by [LiteLLM](https://github.com/BerriAI/litellm) as described here: https://docs.litellm.ai/docs/providers. Note that the prompts to extract related entities were tested with OpenAI and may not work as well with other models.

Local [ollama/llama2](https://ollama.ai/library/llama2) model example:

```bash
llmgraph machine-learning "https://en.wikipedia.org/wiki/Artificial_intelligence" --levels 3 --llm-model ollama/llama2 --llm-base-url http://localhost:<your_port>
```

The `entity_type` sets the LLM prompt used to find related entities to include in the graph. The full list can be seen in [prompts.yaml](https://github.com/dylanhogg/llmgraph/blob/main/llmgraph/prompts.yaml) and includes the following entity types:

- `automobile`

- `book`

- `computer-game`

- `concepts-general`

- `concepts-science`

- `creative-general`

- `documentary`

- `food`

- `machine-learning`

- `movie`

- `music`

- `people-historical`

- `podcast`

- `software-engineering`

- `tv`

### Required Arguments

- `entity_type` (TEXT): Entity type (e.g. movie)

- `entity_wikipedia` (TEXT): Full Wikipedia link to the root entity

### Optional Arguments

- `--entity-root` (TEXT): Optional root entity name override if different from the Wikipedia page title [default: None]

- `--levels` (INTEGER): Number of levels deep to construct from the central root entity [default: 2]

- `--max-sum-total-tokens` (INTEGER): Maximum sum of tokens for graph generation [default: 200000]

- `--output-folder` (TEXT): Folder location to write outputs [default: ./_output/]

- `--llm-model` (TEXT): The model name [default: gpt-4o-mini]

- `--llm-temp` (FLOAT): LLM temperature value [default: 0.0]

- `--llm-base-url` (TEXT): LLM will use custom base URL instead of the automatic one [default: None]

- `--version`: Display llmgraph version and exit.

- `--help`: Show this message and exit.
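If you want to drive the CLI from a script, the arguments above map naturally onto an argument list. This is a minimal sketch, not part of llmgraph itself: `build_llmgraph_command` is a hypothetical helper, and it only uses the flags documented in this section.

```python
import shlex


def build_llmgraph_command(
    entity_type,
    entity_wikipedia,
    levels=2,
    llm_model=None,
    llm_base_url=None,
    output_folder=None,
):
    """Assemble an llmgraph argument list from the documented CLI options."""
    cmd = ["llmgraph", entity_type, entity_wikipedia, "--levels", str(levels)]
    # Optional flags are only added when a value is supplied,
    # so the CLI's own defaults apply otherwise.
    if llm_model is not None:
        cmd += ["--llm-model", llm_model]
    if llm_base_url is not None:
        cmd += ["--llm-base-url", llm_base_url]
    if output_folder is not None:
        cmd += ["--output-folder", output_folder]
    return cmd


cmd = build_llmgraph_command(
    "movie", "https://en.wikipedia.org/wiki/Inception", levels=3
)
print(shlex.join(cmd))

# To actually run it (requires llmgraph installed and, for the default
# OpenAI model, OPENAI_API_KEY set in the environment):
#   import subprocess
#   subprocess.run(cmd, check=True)
```

Passing a list rather than a single shell string avoids quoting problems with the Wikipedia URL.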

## More Examples of HTML Output

Here are some more examples of the HTML graph output for different entity types and root entities (with commands to generate and links to view full interactive graphs).

Install llmgraph to create your own knowledge graphs! Feel free to share interesting results in the [issue section](https://github.com/dylanhogg/llmgraph/issues/new) above with a documentation label :)

### Knowledge graph concept example

<sub>Command to generate above concepts-general graph:<br />`llmgraph concepts-general "https://en.wikipedia.org/wiki/Knowledge_graph" --levels 4`

<br />View entire graph: <a target="_blank" href="https://blog.infocruncher.com/html/llmgraph/concepts-general_knowledge-graph_v1.0.0_level4_fully_connected.html">concepts-general_knowledge-graph_v1.0.0_level4_fully_connected.html</a></sub>

### Inception movie example

<sub>Command to generate above movie graph:<br />`llmgraph movie "https://en.wikipedia.org/wiki/Inception" --levels 4`

<br />View entire graph: <a target="_blank" href="https://blog.infocruncher.com/html/llmgraph/movie_inception_v1.0.0_level4_fully_connected.html">movie_inception_v1.0.0_level4_fully_connected.html</a></sub>

### OpenAI company example

<sub>Command to generate above company graph:<br />`llmgraph company "https://en.wikipedia.org/wiki/OpenAI" --levels 4`

<br />View entire graph: <a target="_blank" href="https://blog.infocruncher.com/html/llmgraph/company_openai_v1.0.0_level4_fully_connected.html">company_openai_v1.0.0_level4_fully_connected.html</a></sub>

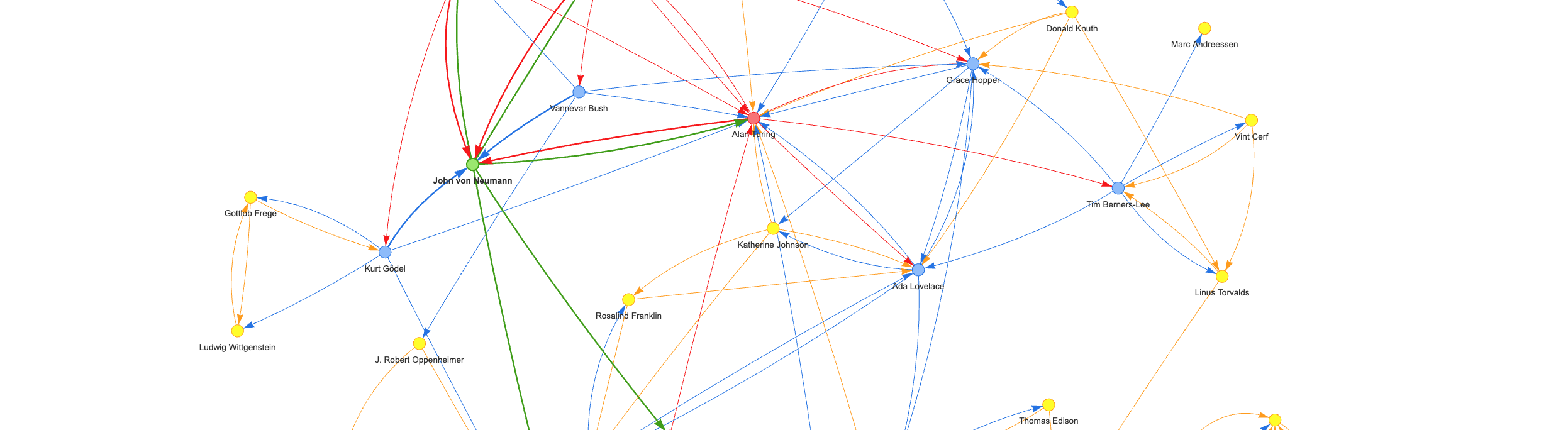

### John von Neumann people example

<sub>Command to generate above people-historical graph:<br />`llmgraph people-historical "https://en.wikipedia.org/wiki/John_von_Neumann" --levels 4`

<br />View entire graph: <a target="_blank" href="https://blog.infocruncher.com/html/llmgraph/people-historical_john-von-neumann_v1.0.0_level4_fully_connected.html">people-historical_john-von-neumann_v1.0.0_level4_fully_connected.html</a></sub>

## Example of Prompt Used to Generate Graph

Here is an example of the prompt template, with placeholders, used to generate related entities from a given source entity. This is applied recursively to create a knowledge graph, merging duplicated nodes as required.

```
You are knowledgeable about {knowledgeable_about}.
List, in json array format, the top {top_n} {entities} most like '{{entity_root}}'
with Wikipedia link, reasons for similarity, similarity on scale of 0 to 1.
Format your response in json array format as an array with column names: 'name', 'wikipedia_link', 'reason_for_similarity', and 'similarity'.
Example response: {{{{"name": "Example {entity}","wikipedia_link": "https://en.wikipedia.org/wiki/Example_{entity_underscored}","reason_for_similarity": "Reason for similarity","similarity": 0.5}}}}
```

It works well on the primary tested LLM, OpenAI gpt-4o-mini. Results with Llama2 are OK, but not as good. The prompt source of truth and additional details can be seen in [prompts.yaml](https://github.com/dylanhogg/llmgraph/blob/main/llmgraph/prompts.yaml).
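The recursive expansion with merging of duplicated nodes can be sketched as a level-by-level traversal. This is an illustration under stated assumptions, not llmgraph's actual implementation: `build_graph` and `FAKE_RELATED` are hypothetical, the lookup table stands in for the LLM call, and here `levels` is taken to mean the number of expansion rounds from the root.

```python
# Hypothetical stand-in for LLM responses: entity -> related entities.
FAKE_RELATED = {
    "A": ["B", "C"],
    "B": ["A", "C"],
    "C": ["D"],
}


def find_related(entity):
    """Stand-in for the LLM query; a real run would prompt the model instead."""
    return FAKE_RELATED.get(entity, [])


def build_graph(root, levels, related_fn):
    """Expand outward from root for `levels` rounds, merging repeated nodes."""
    nodes = {root}
    edges = set()
    frontier = [root]
    for _ in range(levels):
        next_frontier = []
        for entity in frontier:
            for related in related_fn(entity):
                edges.add((entity, related))
                # A node seen before is merged: it gains an edge
                # but is not expanded a second time.
                if related not in nodes:
                    nodes.add(related)
                    next_frontier.append(related)
        frontier = next_frontier
    return nodes, edges


nodes, edges = build_graph("A", levels=2, related_fn=find_related)
print(sorted(nodes))
print(sorted(edges))
```

Tracking the set of seen nodes is what keeps the graph from growing without bound when entities refer back to each other.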

Each entity type has custom placeholders, for example `concepts-general` and `documentary`:

```
concepts-general:
  system: You are a highly knowledgeable ontologist and creator of knowledge graphs.
  knowledgeable_about: many concepts and ontologies.
  entities: concepts
  entity: concept name
  top_n: 5

documentary:
  system: You are knowledgeable about documentaries of all types, and genres.
  knowledgeable_about: documentaries of all types, and genres
  entities: Documentaries
  entity: Documentary
  top_n: 5
```
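The mix of single and doubled braces in the template suggests it is filled in two passes: once with the per-entity-type placeholders, and once with the entity being expanded. The sketch below assumes plain `str.format` is used for both passes and that `entity_underscored` is derived by replacing spaces with underscores; neither detail is confirmed here, so check prompts.yaml and the source for the real behaviour.

```python
TEMPLATE = (
    "You are knowledgeable about {knowledgeable_about}.\n"
    "List, in json array format, the top {top_n} {entities} most like '{{entity_root}}'\n"
    "with Wikipedia link, reasons for similarity, similarity on scale of 0 to 1.\n"
    "Format your response in json array format as an array with column names: "
    "'name', 'wikipedia_link', 'reason_for_similarity', and 'similarity'.\n"
    'Example response: {{{{"name": "Example {entity}",'
    '"wikipedia_link": "https://en.wikipedia.org/wiki/Example_{entity_underscored}",'
    '"reason_for_similarity": "Reason for similarity","similarity": 0.5}}}}'
)

# Placeholder values copied from the `documentary` entry above.
documentary = {
    "knowledgeable_about": "documentaries of all types, and genres",
    "entities": "Documentaries",
    "entity": "Documentary",
    "top_n": 5,
}

# Pass 1: fill the entity-type placeholders. Doubled braces collapse by one
# level, so '{{entity_root}}' becomes '{entity_root}' and '{{{{' becomes '{{'.
per_type_prompt = TEMPLATE.format(
    entity_underscored=documentary["entity"].replace(" ", "_"),  # assumed derivation
    **documentary,
)

# Pass 2: fill in the entity to expand; the remaining '{{' / '}}' become
# the literal braces of the JSON example.
final_prompt = per_type_prompt.format(entity_root="Inception")
print(final_prompt)
```

The two-pass design means the per-type prompt can be prepared once and reused for every entity expanded in a run.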

## Cached LLM API calls

Each call to the LLM API (and Wikipedia) is cached locally in a `.joblib_cache` folder. This allows an interrupted run to be resumed without duplicating identical calls. It also allows a re-run with a higher `--levels` option to re-use results from the lower level run (assuming the same entity type and source).
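The idea behind this kind of cache can be shown with a small standard-library sketch. It is not the joblib mechanism llmgraph uses: `disk_cached` and `fake_llm_call` are hypothetical names, and the cache here is a temporary folder of JSON files keyed by a hash of the function name and arguments.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def disk_cached(cache_dir):
    """Decorator factory: persist JSON-serialisable results on disk, keyed by arguments."""
    cache_path = Path(cache_dir)
    cache_path.mkdir(parents=True, exist_ok=True)

    def decorator(func):
        def wrapper(*args):
            # Identical calls hash to the same key, so they hit the same file.
            key_source = json.dumps([func.__name__, list(args)])
            key = hashlib.sha256(key_source.encode("utf-8")).hexdigest()
            entry = cache_path / f"{key}.json"
            if entry.exists():
                return json.loads(entry.read_text(encoding="utf-8"))
            result = func(*args)
            entry.write_text(json.dumps(result), encoding="utf-8")
            return result

        return wrapper

    return decorator


calls = []  # records every time the underlying function really runs


@disk_cached(tempfile.mkdtemp())
def fake_llm_call(entity):
    """Stand-in for an expensive LLM request."""
    calls.append(entity)
    return [f"{entity} neighbour 1", f"{entity} neighbour 2"]


first = fake_llm_call("Inception")
second = fake_llm_call("Inception")  # served from disk, no second call
print(len(calls))
```

Because results are keyed by the call's inputs, a deeper re-run repeats the same requests for the shallow levels and gets them back from disk, paying only for the new ones.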

## Future Improvements

- Contrast graph output from different LLM models (e.g. [Llama2](https://huggingface.co/docs/transformers/model_doc/llama2) vs [Mistral](https://huggingface.co/docs/transformers/model_doc/mistral) vs [ChatGPT-4](https://openai.com/chatgpt))

- Investigate the hypothesis that this approach provides insight into how an LLM views the world.

- Include more examples in this documentation and make examples available for easy browsing.

- Instructions for running locally and adding a custom `entity_type` prompt.

- Better pyvis HTML output, in particular including reasons for entity relationships in the UI and arguments for pixel size etc.

- Parallelise API calls and result processing.

- Remove dependency on Wikipedia entities as a source.

- Contrast results from llmgraph with other non-LLM graph construction, e.g. using Wikipedia page links or [direct article embeddings](https://txt.cohere.com/embedding-archives-wikipedia/).

## Contributing

Contributions to llmgraph are welcome. Please follow these steps:

1. Fork the repository.

2. Create a new branch for your feature or bug fix.

3. Make your changes and commit them.

4. Create a pull request with a description of your changes.

## Thanks 🙏

Thanks to @breitburg for implementing the LiteLLM updates.

## References

- https://arxiv.org/abs/2211.10511 - Knowledge Graph Generation From Text

- https://arxiv.org/abs/2310.04562 - Towards Foundation Models for Knowledge Graph Reasoning

- https://arxiv.org/abs/2206.14268 - BertNet: Harvesting Knowledge Graphs with Arbitrary Relations from Pretrained Language Models

- https://arxiv.org/abs/2312.02783 - Large Language Models on Graphs: A Comprehensive Survey

- https://github.com/aws/graph-notebook - Graph Notebook: easily query and visualize graphs

- https://github.com/KiddoZhu/NBFNet-PyG - PyG re-implementation of Neural Bellman-Ford Networks

- https://caminao.blog/knowledge-management-booklet/a-hitchhikers-guide-to-knowledge-galaxies/ - A Hitchhiker’s Guide to Knowledge Galaxies

- https://github.com/PeterGriffinJin/Awesome-Language-Model-on-Graphs - A curated list of papers and resources based on "Large Language Models on Graphs: A Comprehensive Survey".

Raw data

{
  "_id": null,
  "home_page": "https://github.com/dylanhogg/llmgraph",
  "name": "llmgraph",
  "maintainer": null,
  "docs_url": null,
  "requires_python": "<4.0,>=3.10",
  "maintainer_email": null,
  "keywords": "Knowledge graph, LLM",
  "author": "Dylan Hogg",
  "author_email": "dylanhogg@gmail.com",
  "download_url": "https://files.pythonhosted.org/packages/d1/a9/381209e400c15805a695ba7948712234ebd50a96acfd3b36a24bf0d569bb/llmgraph-1.3.2.tar.gz",
  "platform": null,
  "bugtrack_url": null,
  "license": "MIT",
  "summary": "Create knowledge graphs with LLMs",
  "version": "1.3.2",
  "project_urls": {
    "Homepage": "https://github.com/dylanhogg/llmgraph",
    "Repository": "https://github.com/dylanhogg/llmgraph"
  },
  "split_keywords": [
    "knowledge graph",
    " llm"
  ],
  "urls": [
    {
      "comment_text": "",
      "digests": {
        "blake2b_256": "7d3fd9b9bdd651b1a5d128e97934c0f4f6858f0b1df38cbac2984dd59f54c03f",
        "md5": "13fcdd349f319944f037dd6690d30611",
        "sha256": "117214ef67ac12c831b4c5f0b36109817662175ee0fc0fd949a23a005f498115"
      },
      "downloads": -1,
      "filename": "llmgraph-1.3.2-py3-none-any.whl",
      "has_sig": false,
      "md5_digest": "13fcdd349f319944f037dd6690d30611",
      "packagetype": "bdist_wheel",
      "python_version": "py3",
      "requires_python": "<4.0,>=3.10",
      "size": 19884,
      "upload_time": "2025-02-12T01:40:10",
      "upload_time_iso_8601": "2025-02-12T01:40:10.380963Z",
      "url": "https://files.pythonhosted.org/packages/7d/3f/d9b9bdd651b1a5d128e97934c0f4f6858f0b1df38cbac2984dd59f54c03f/llmgraph-1.3.2-py3-none-any.whl",
      "yanked": false,
      "yanked_reason": null
    },
    {
      "comment_text": "",
      "digests": {
        "blake2b_256": "d1a9381209e400c15805a695ba7948712234ebd50a96acfd3b36a24bf0d569bb",
        "md5": "52164d5156d19e1c7d42925d348a5dc0",
        "sha256": "51b82f790dba5df832e67698db76b6515a058d9a5a736812900a0b04c76b054b"
      },
      "downloads": -1,
      "filename": "llmgraph-1.3.2.tar.gz",
      "has_sig": false,
      "md5_digest": "52164d5156d19e1c7d42925d348a5dc0",
      "packagetype": "sdist",
      "python_version": "source",
      "requires_python": "<4.0,>=3.10",
      "size": 20285,
      "upload_time": "2025-02-12T01:40:12",
      "upload_time_iso_8601": "2025-02-12T01:40:12.362292Z",
      "url": "https://files.pythonhosted.org/packages/d1/a9/381209e400c15805a695ba7948712234ebd50a96acfd3b36a24bf0d569bb/llmgraph-1.3.2.tar.gz",
      "yanked": false,
      "yanked_reason": null
    }
  ],
  "upload_time": "2025-02-12 01:40:12",
  "github": true,
  "gitlab": false,
  "bitbucket": false,
  "codeberg": false,
  "github_user": "dylanhogg",
  "github_project": "llmgraph",
  "travis_ci": false,
  "coveralls": false,
  "github_actions": true,
  "requirements": [],
  "lcname": "llmgraph"
}