| Field | Value |
| --- | --- |
| Name | mmreact |
| Version | 0.0.1.dev0 |
| home_page | |
| Summary | MM-REACT: Prompting ChatGPT for Multimodal Reasoning and Action |
| upload_time | 2023-03-22 06:34:11 |
| maintainer | |
| docs_url | None |
| author | Shadow Walker |
| requires_python | |
| license | |
| keywords | mmreact |
| VCS | |
| bugtrack_url | |
| requirements | No requirements were recorded. |
| Travis-CI | No Travis. |
| coveralls test coverage | No coveralls. |

# MM-REACT: Prompting ChatGPT for Multimodal Reasoning and Action

## :fire: News

* **[2023.03.21]** We build MM-REACT, a system paradigm that integrates ChatGPT with a pool of vision experts to achieve multimodal reasoning and action.

* **[2023.03.21]** Feel free to explore various demo videos on our [website](https://multimodal-react.github.io/)!

* **[2023.03.21]** Try our [live demo](https://huggingface.co/spaces/microsoft-cognitive-service/mm-react)!

## :notes: Introduction

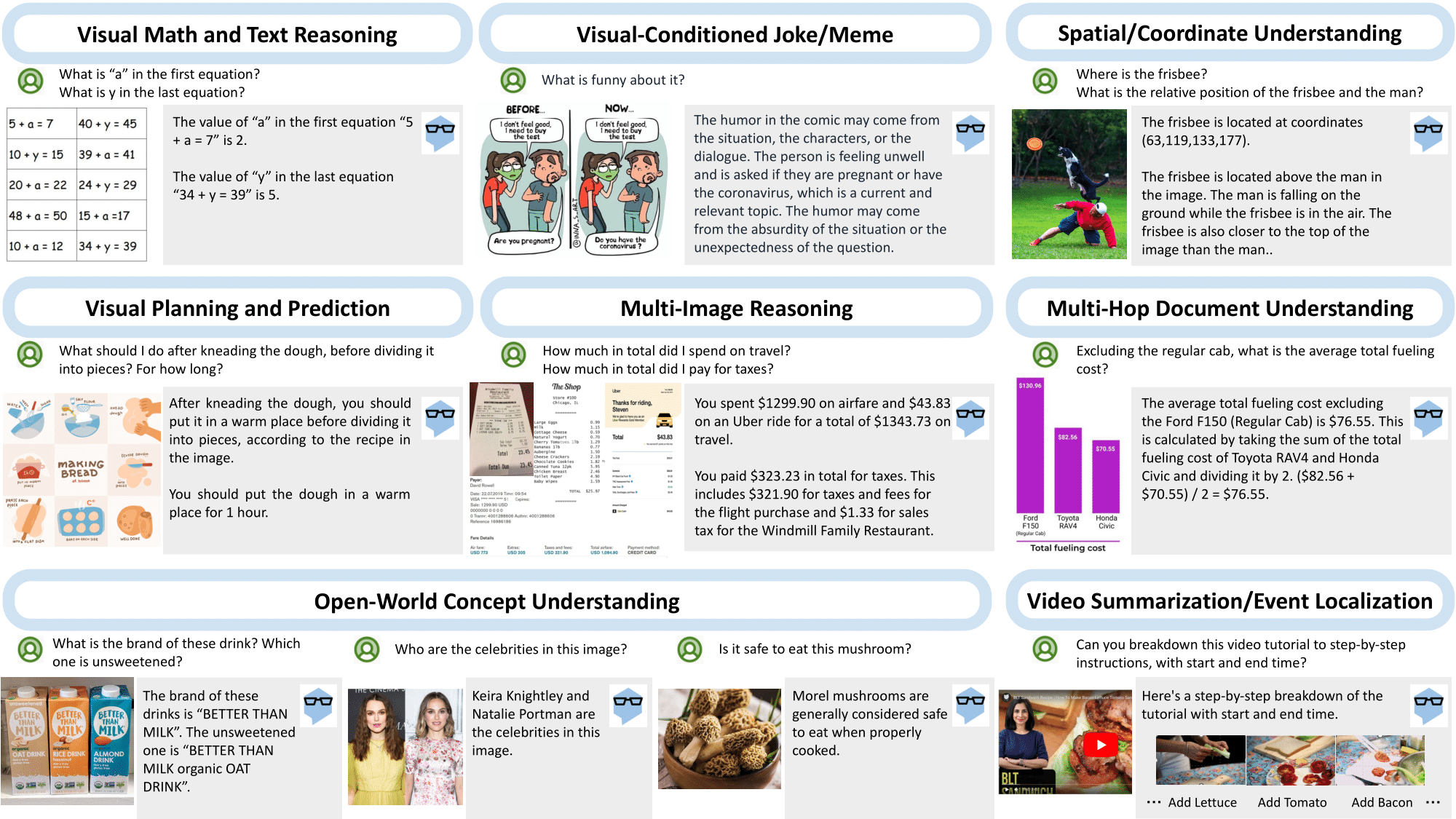

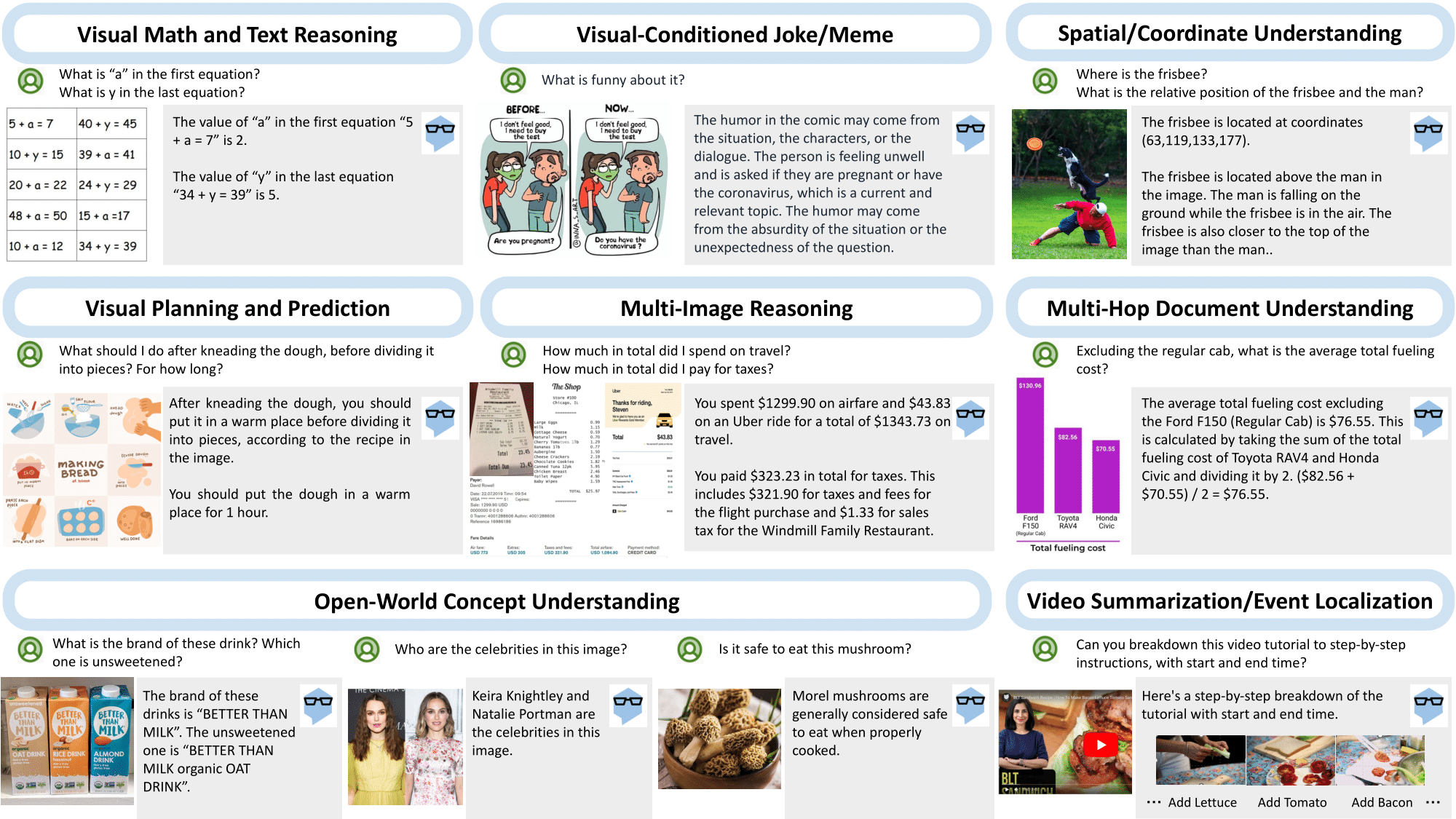

MM-REACT allocates specialized vision experts with ChatGPT to solve challenging visual understanding tasks through multimodal reasoning and action.

### MM-ReAct Design

* To enable the image as input, we simply use the file path as the input to ChatGPT. The file path functions as a placeholder, allowing ChatGPT to treat it as a black box.

* Whenever a specific property, such as celebrity names or box coordinates, is required, ChatGPT is expected to seek help from a specific vision expert to identify the desired information.

* The expert output is serialized as text and combined with the input to further activate ChatGPT.

* If no external experts are needed, we directly return the response to the user.
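The four design points above can be read as a small dispatch loop. The following is a minimal sketch under stated assumptions, not the MM-REACT implementation: `fake_llm`, `celebrity_expert`, the `EXPERTS` registry, and the `EXPERT: <name> <path>` reply convention are all hypothetical stand-ins for ChatGPT and the real vision experts.

```python
from typing import Callable, Dict


def celebrity_expert(image_path: str) -> str:
    """Hypothetical vision expert: takes a file path, returns findings as text."""
    return f"celebrity expert saw 'Example Person' in {image_path}"


# Hypothetical registry mapping expert names to callables.
EXPERTS: Dict[str, Callable[[str], str]] = {"celebrity": celebrity_expert}


def fake_llm(prompt: str) -> str:
    """Stand-in for ChatGPT: asks for an expert once, then answers."""
    if "Observation:" not in prompt:
        # The file path is only a placeholder; the model never opens the image.
        path = prompt.split()[-1]
        return f"EXPERT: celebrity {path}"
    return "The image shows Example Person."


def run(user_input: str, llm: Callable[[str], str] = fake_llm, max_steps: int = 5) -> str:
    prompt = user_input
    for _ in range(max_steps):
        reply = llm(prompt)
        if not reply.startswith("EXPERT:"):
            # No expert needed: return the response directly to the user.
            return reply
        _, name, path = reply.split(maxsplit=2)
        # Serialize the expert output as text and append it to the input.
        observation = EXPERTS[name](path)
        prompt = f"{prompt}\nObservation: {observation}"
    raise RuntimeError("no final answer within max_steps")


answer = run("Who is in this image? images/photo.jpg")
print(answer)
```

In this sketch the model sees only the path string and the text observations, which is the "black box" treatment of the image described above.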

## Getting Started

MM-REACT code is based on langchain.

Please refer to [langchain](https://github.com/hwchase17/langchain) for [instructions on installation](https://github.com/hwchase17/langchain#quick-install) and [documentation](https://github.com/hwchase17/langchain#-documentation).

### Additional packages needed for MM-REACT

```bash

# PIL is distributed on PyPI under the name Pillow
pip install Pillow imagesize

```

### Here is the list of resources you need to set up in Azure, with their environment variables

1. Computer Vision service, for Tags, Objects, Faces and Celebrity.

```bash

export IMUN_URL="https://yourazureendpoint.cognitiveservices.azure.com/vision/v3.2/analyze"

export IMUN_PARAMS="visualFeatures=Tags,Objects,Faces"

export IMUN_CELEB_URL="https://yourazureendpoint.cognitiveservices.azure.com/vision/v3.2/models/celebrities/analyze"

export IMUN_CELEB_PARAMS=""

export IMUN_SUBSCRIPTION_KEY=

```

2. Computer Vision service for dense captioning, with a potentially different subscription key (e.g. the westus region supports this).

```bash

export IMUN_URL2="https://yourazureendpoint.cognitiveservices.azure.com/computervision/imageanalysis:analyze"

export IMUN_PARAMS2="api-version=2023-02-01-preview&model-version=latest&features=denseCaptions"

export IMUN_SUBSCRIPTION_KEY2=

```

3. Form Recognizer (OCR) prebuilt services

```bash

export IMUN_OCR_READ_URL="https://yourazureendpoint.cognitiveservices.azure.com/formrecognizer/documentModels/prebuilt-read:analyze"

export IMUN_OCR_RECEIPT_URL="https://yourazureendpoint.cognitiveservices.azure.com/formrecognizer/documentModels/prebuilt-receipt:analyze"

export IMUN_OCR_BC_URL="https://yourazureendpoint.cognitiveservices.azure.com/formrecognizer/documentModels/prebuilt-businessCard:analyze"

export IMUN_OCR_LAYOUT_URL="https://yourazureendpoint.cognitiveservices.azure.com/formrecognizer/documentModels/prebuilt-layout:analyze"

export IMUN_OCR_PARAMS="api-version=2022-08-31"

export IMUN_OCR_SUBSCRIPTION_KEY=

```

4. Bing search service

```bash

export BING_SEARCH_URL="https://api.bing.microsoft.com/v7.0/search"

export BING_SUBSCRIPTION_KEY=

```

5. Bing visual search service (priced separately)

```bash

export BING_VIS_SEARCH_URL="https://api.bing.microsoft.com/v7.0/images/visualsearch"

export BING_SUBSCRIPTION_KEY_VIS=

```

6. Azure OpenAI service

```bash

export OPENAI_API_TYPE=azure

export OPENAI_API_VERSION=2022-12-01

export OPENAI_API_BASE=https://yourazureendpoint.openai.azure.com/

export OPENAI_API_KEY=

```

Note: At the time of writing, we use and test against a private endpoint. The public endpoint has since been released, and we plan to add support for it later.

7. Photo editing local service

```bash

export PHOTO_EDIT_ENDPOINT_URL="http://127.0.0.1:123/"

export PHOTO_EDIT_ENDPOINT_URL_SHORT=127.0.0.1

```
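With this many variables it is easy to miss one. The following is a minimal sketch of a pre-flight check, assuming the variable names listed in the seven steps above; the `missing_vars` helper is hypothetical and not part of MM-REACT. `IMUN_CELEB_PARAMS` is exported as an empty string above, so the sketch treats it as optional.

```python
import os
from typing import List, Mapping

# Variable names copied from the seven setup steps above.
REQUIRED_VARS = [
    "IMUN_URL", "IMUN_PARAMS", "IMUN_CELEB_URL", "IMUN_SUBSCRIPTION_KEY",
    "IMUN_URL2", "IMUN_PARAMS2", "IMUN_SUBSCRIPTION_KEY2",
    "IMUN_OCR_READ_URL", "IMUN_OCR_RECEIPT_URL", "IMUN_OCR_BC_URL",
    "IMUN_OCR_LAYOUT_URL", "IMUN_OCR_PARAMS", "IMUN_OCR_SUBSCRIPTION_KEY",
    "BING_SEARCH_URL", "BING_SUBSCRIPTION_KEY",
    "BING_VIS_SEARCH_URL", "BING_SUBSCRIPTION_KEY_VIS",
    "OPENAI_API_TYPE", "OPENAI_API_VERSION", "OPENAI_API_BASE", "OPENAI_API_KEY",
    "PHOTO_EDIT_ENDPOINT_URL", "PHOTO_EDIT_ENDPOINT_URL_SHORT",
]

# Allowed to be empty (assumption based on the empty export above).
OPTIONAL_VARS = ["IMUN_CELEB_PARAMS"]


def missing_vars(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return the required variable names that are unset or blank in env."""
    return [name for name in REQUIRED_VARS if not env.get(name, "").strip()]


if __name__ == "__main__":
    missing = missing_vars()
    if missing:
        print("Missing environment variables:", ", ".join(missing))
    else:
        print("All required environment variables are set.")
```

Running the script in the shell where the `export` lines were executed prints the names that still need values.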

### Sample code to run conversational-assistant agent against an image

[conversational-assistant sample](sample.py)
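Before running the sample, it can help to see how the environment variables from the setup list combine into an authenticated request. The following is a minimal sketch, not the code MM-REACT uses: the `SERVICES` table and `build_request` helper are hypothetical, and it assumes the image is sent as raw bytes with the `Ocp-Apim-Subscription-Key` header that Azure Cognitive Services accept. The README does not state whether MM-REACT sends bytes or an image URL. The request is only built here, never sent.

```python
import os
import urllib.request
from typing import Mapping

# Hypothetical table: service name -> (URL variable, params variable, key variable).
SERVICES = {
    "tags": ("IMUN_URL", "IMUN_PARAMS", "IMUN_SUBSCRIPTION_KEY"),
    "dense_captions": ("IMUN_URL2", "IMUN_PARAMS2", "IMUN_SUBSCRIPTION_KEY2"),
    "ocr_read": ("IMUN_OCR_READ_URL", "IMUN_OCR_PARAMS", "IMUN_OCR_SUBSCRIPTION_KEY"),
}


def build_request(
    service: str, image_bytes: bytes, env: Mapping[str, str] = os.environ
) -> urllib.request.Request:
    """Combine endpoint URL, query parameters, and key into a POST request."""
    url_var, params_var, key_var = SERVICES[service]
    params = env.get(params_var, "")
    full_url = f"{env[url_var]}?{params}" if params else env[url_var]
    return urllib.request.Request(
        full_url,
        data=image_bytes,
        headers={
            "Ocp-Apim-Subscription-Key": env[key_var],
            "Content-Type": "application/octet-stream",
        },
        method="POST",
    )


# Placeholder values mirroring the exports above; the key is not a real key.
demo_env = {
    "IMUN_URL": "https://yourazureendpoint.cognitiveservices.azure.com/vision/v3.2/analyze",
    "IMUN_PARAMS": "visualFeatures=Tags,Objects,Faces",
    "IMUN_SUBSCRIPTION_KEY": "placeholder-key",
}
req = build_request("tags", b"fake image bytes", demo_env)
print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen` would send it. Note that the Form Recognizer `:analyze` endpoints in the 2022-08-31 API are asynchronous: the initial response carries an `Operation-Location` header to poll for the result, which this sketch does not handle.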

## Acknowledgement

We are highly inspired by [langchain](https://github.com/hwchase17/langchain).

## Citation

```

@article{yang2023mmreact,

author = {Zhengyuan Yang* and Linjie Li* and Jianfeng Wang* and Kevin Lin* and Ehsan Azarnasab* and Faisal Ahmed* and Zicheng Liu and Ce Liu and Michael Zeng and Lijuan Wang^},

title = {MM-REACT: Prompting ChatGPT for Multimodal Reasoning and Action},

publisher = {arXiv},

year = {2023},

}

```

## Contributing

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/). For more information see the [Code of Conduct FAQ](https://opensource.microsoft.com/codeofconduct/faq/) or contact [opencode@microsoft.com](mailto:opencode@microsoft.com) with any additional questions or comments.

## Trademarks

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow [Microsoft's Trademark & Brand Guidelines](https://www.microsoft.com/en-us/legal/intellectualproperty/trademarks/usage/general). Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos is subject to those third parties' policies.

Raw data

{

"_id": null,

"home_page": "",

"name": "mmreact",

"maintainer": "",

"docs_url": null,

"requires_python": "",

"maintainer_email": "",

"keywords": "mmreact",

"author": "Shadow Walker",

"author_email": "",

"download_url": "",

"platform": null,
"bugtrack_url": null,

"license": "",

"summary": "MM-REACT: Prompting ChatGPT for Multimodal Reasoning and Action",

"version": "0.0.1.dev0",

"split_keywords": [

"mmreact"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "54d7f7a0b68670a49cbe5bffccadcbdb2ba3db861b30580c157ec4064aac5027",

"md5": "9658ce5e0c6b95b0378b90bc7a67741b",

"sha256": "48d83b6115a1dc255f9055c9816bc412cedf079554dbc7d12d0c622a2fbc7002"

},

"downloads": -1,

"filename": "mmreact-0.0.1.dev0-py3-none-any.whl",

"has_sig": false,

"md5_digest": "9658ce5e0c6b95b0378b90bc7a67741b",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": null,

"size": 3748,

"upload_time": "2023-03-22T06:34:11",

"upload_time_iso_8601": "2023-03-22T06:34:11.903209Z",

"url": "https://files.pythonhosted.org/packages/54/d7/f7a0b68670a49cbe5bffccadcbdb2ba3db861b30580c157ec4064aac5027/mmreact-0.0.1.dev0-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2023-03-22 06:34:11",

"github": false,

"gitlab": false,

"bitbucket": false,

"lcname": "mmreact"

}