<div align="center">

<h1>multimodal-maestro</h1>

<br>

[PyPI](https://badge.fury.io/py/multimodal-maestro)
[license](https://github.com/roboflow/multimodal-maestro/blob/main/LICENSE)
[Hugging Face space](https://huggingface.co/spaces/Roboflow/SoM)
[Colab](https://colab.research.google.com/github/roboflow/multimodal-maestro/blob/main/cookbooks/multimodal_maestro_gpt_4_vision.ipynb)

</div>

## 👋 hello

Multimodal-Maestro gives you more control over large multimodal models to get the outputs you want. With more effective prompting tactics, you can get multimodal models to do tasks you didn't know (or think!) were possible. Curious how it works? Try our Hugging Face [space](https://huggingface.co/spaces/Roboflow/SoM)!

🚧 The project is still under construction and the API is subject to change.

## 💻 install

Pip install the `multimodal-maestro` package in a [**3.11>=Python>=3.8**](https://www.python.org/) environment.

```bash
pip install multimodal-maestro
```
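The supported range means Python 3.8 up to, but not including, 3.12 (the package metadata declares `>=3.8,<3.12.0`). A minimal sketch for checking an interpreter before installing; `is_supported_python` is a hypothetical helper for illustration, not part of the package:

```python
import sys


def is_supported_python(version_info=sys.version_info) -> bool:
    """Return True if (major, minor) satisfies 3.8 <= version < 3.12.

    Hypothetical helper; multimodal-maestro does not ship it.
    """
    major_minor = tuple(version_info[:2])
    return (3, 8) <= major_minor < (3, 12)


if __name__ == "__main__":
    print(f"Python {sys.version.split()[0]} supported: {is_supported_python()}")
```

Comparing `(major, minor)` tuples keeps the exclusive upper bound explicit, so 3.11.x passes and 3.12.0 does not.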

## 🚀 examples

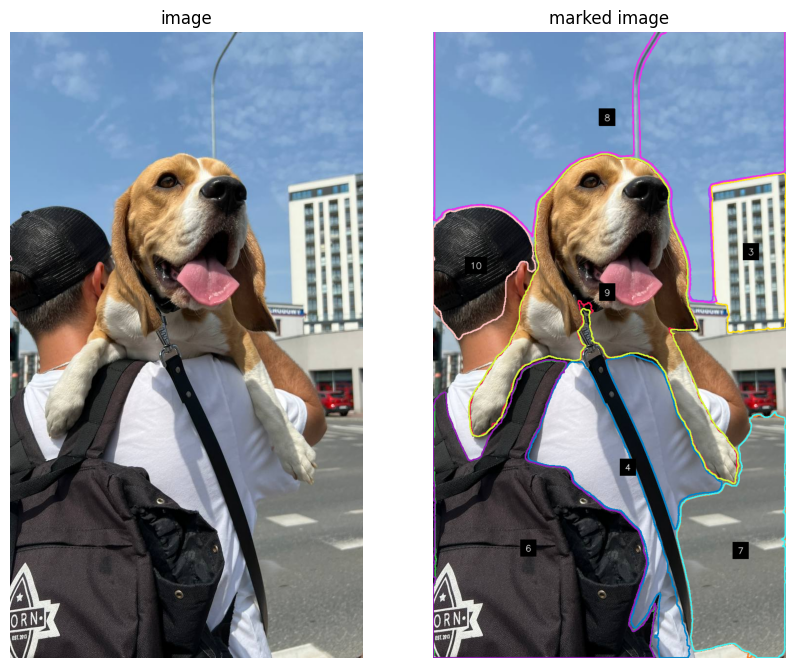

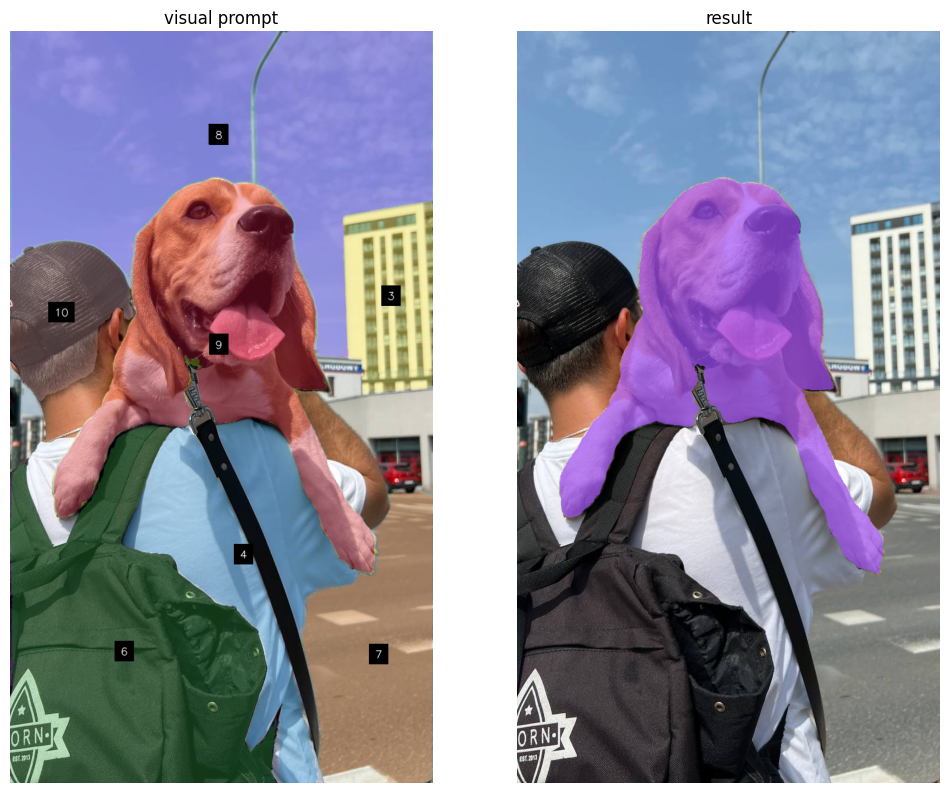

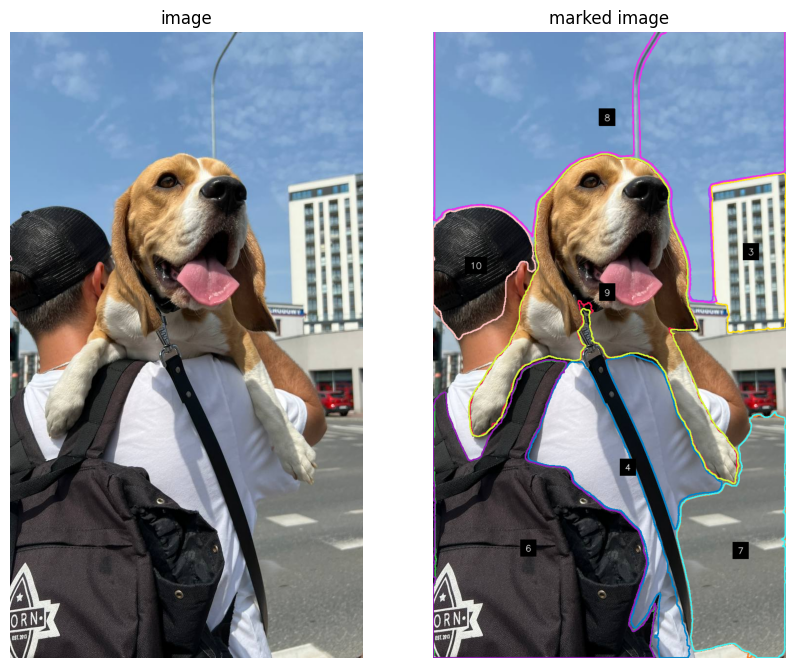

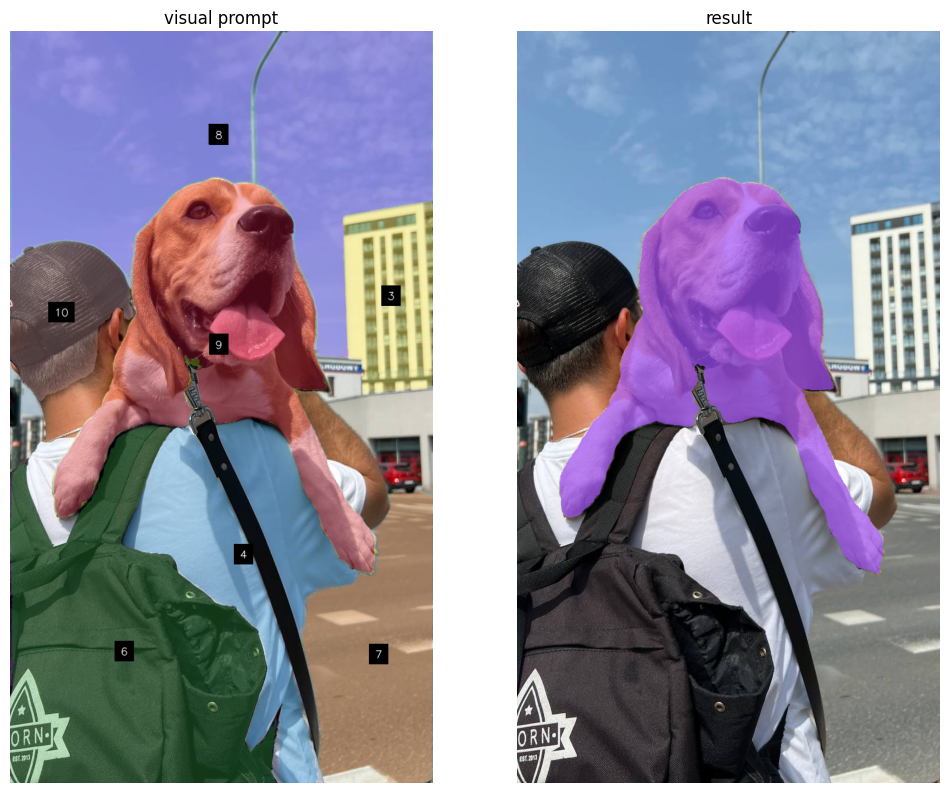

### GPT-4 Vision

```
Find dog.

>>> The dog is prominently featured in the center of the image with the label [9].
```
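With Set-of-Mark prompting, the model refers to image regions by the numeric marks drawn on them, as in the `[9]` above. A minimal sketch for pulling those mark IDs out of a response, assuming the model always cites marks as bracketed integers; `parse_mark_ids` is a hypothetical helper, not the package's API:

```python
import re
from typing import List

# Assumption: the model cites marks as bracketed integers such as "[9]".
MARK_PATTERN = re.compile(r"\[(\d+)\]")


def parse_mark_ids(text: str) -> List[int]:
    """Return unique mark IDs in order of first appearance."""
    seen: List[int] = []
    for match in MARK_PATTERN.findall(text):
        mark_id = int(match)
        if mark_id not in seen:
            seen.append(mark_id)
    return seen


response = "The dog is prominently featured in the center of the image with the label [9]."
print(parse_mark_ids(response))  # [9]
```

A response that mentions several regions, such as "[3] and [12]", would yield `[3, 12]`.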

<details close>

<summary>👉 read more</summary>

<br>

- **load image**

```python
import cv2

# cv2.imread returns a BGR numpy array, or None if the file cannot be read
image = cv2.imread("...")
```

- **create and refine marks**

```python
import multimodalmaestro as mm

# generate marks with Segment Anything; device='cuda' expects a CUDA-capable GPU
generator = mm.SegmentAnythingMarkGenerator(device='cuda')
marks = generator.generate(image=image)
marks = mm.refine_marks(marks=marks)
```

- **visualize marks**

```python
# render the marks on top of the image
mark_visualizer = mm.MarkVisualizer()
marked_image = mark_visualizer.visualize(image=image, marks=marks)
```

- **prompt**

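```python
import os

# assumption: the OpenAI API key is stored in the OPENAI_API_KEY environment variable
api_key = os.environ["OPENAI_API_KEY"]
prompt = "Find dog."
response = mm.prompt_image(api_key=api_key, image=marked_image, prompt=prompt)
```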

```
>>> "The dog is prominently featured in the center of the image with the label [9]."
```

- **extract related marks**

```python
# collect the masks of the marks referenced in the response
masks = mm.extract_relevant_masks(text=response, detections=marks)
```

```
>>> {'6': array([
...     [False, False, False, ..., False, False, False],
...     [False, False, False, ..., False, False, False],
...     [False, False, False, ..., False, False, False],
...     ...,
...     [ True,  True,  True, ..., False, False, False],
...     [ True,  True,  True, ..., False, False, False],
...     [ True,  True,  True, ..., False, False, False]])
... }
```
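The result maps a mark label (as a string) to that mark's boolean mask. A rough sketch of how such a lookup could work, assuming the masks are stored as an `(N, H, W)` boolean array and the mark drawn as `i` corresponds to `masks[i]`; `select_masks` is hypothetical and may differ from what `mm.extract_relevant_masks` actually does:

```python
import re
from typing import Dict

import numpy as np


def select_masks(text: str, masks: np.ndarray) -> Dict[str, np.ndarray]:
    """Map each mark ID cited in `text` (e.g. "[9]") to its mask.

    Assumes `masks` has shape (N, H, W) and mark i is masks[i];
    IDs outside 0..N-1 are ignored.
    """
    selected: Dict[str, np.ndarray] = {}
    for match in re.findall(r"\[(\d+)\]", text):
        mark_id = int(match)
        if 0 <= mark_id < len(masks):
            selected[str(mark_id)] = masks[mark_id]
    return selected


# Toy example: ten empty 4x4 masks, with mark 9 covering the top-left corner.
masks = np.zeros((10, 4, 4), dtype=bool)
masks[9, :2, :2] = True
result = select_masks("The dog is in the center of the image with the label [9].", masks)
print(list(result.keys()))  # ['9']
```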

</details>
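
The visualize marks step draws a number on each mask. One simple way to choose where that number goes is the mask's centroid. This is a sketch under that assumption, not necessarily how `mm.MarkVisualizer` places labels, and a centroid can fall outside a non-convex mask:

```python
from typing import List, Optional, Tuple

import numpy as np


def mask_centroids(masks: np.ndarray) -> List[Optional[Tuple[int, int]]]:
    """Return an (x, y) pixel anchor per mask in an (N, H, W) boolean array.

    Empty masks get None, since there is nothing to label.
    """
    anchors: List[Optional[Tuple[int, int]]] = []
    for mask in masks:
        ys, xs = np.nonzero(mask)
        if len(xs) == 0:
            anchors.append(None)
            continue
        # Round the mean coordinate to the nearest pixel.
        anchors.append((int(round(xs.mean())), int(round(ys.mean()))))
    return anchors


masks = np.zeros((2, 5, 5), dtype=bool)
masks[0, 1:4, 1:4] = True  # 3x3 square centered at (2, 2); mask 1 stays empty
print(mask_centroids(masks))  # [(2, 2), None]
```

Each anchor could then be passed, with a small offset since `org` is the text's bottom-left corner, as the `org` argument of OpenCV's `cv2.putText`.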

## 🚧 roadmap

- [ ] Documentation page.
- [ ] Segment Anything guided marks generation.
- [ ] Non-Max Suppression marks refinement.
- [ ] LLaVA demo.
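Non-Max Suppression refinement is listed as future work. As a rough illustration of the general technique, not the planned implementation, here is greedy NMS over boolean masks, assuming each mask comes with a confidence score and using mask IoU with an illustrative default threshold of 0.5:

```python
from typing import List

import numpy as np


def mask_iou(a: np.ndarray, b: np.ndarray) -> float:
    """Intersection over union of two boolean masks of equal shape."""
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 0.0
    return float(np.logical_and(a, b).sum() / union)


def mask_nms(masks: np.ndarray, scores: np.ndarray, iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: visit masks from highest to lowest score and keep a mask
    only if its IoU with every already-kept mask is <= iou_threshold.
    Returns the kept indices."""
    order = np.argsort(-scores)  # highest score first
    keep: List[int] = []
    for idx in order:
        if all(mask_iou(masks[idx], masks[k]) <= iou_threshold for k in keep):
            keep.append(int(idx))
    return keep


masks = np.zeros((3, 4, 4), dtype=bool)
masks[0, :2, :2] = True  # 4 pixels
masks[1, :2, :3] = True  # 6 pixels, IoU with mask 0 = 4/6
masks[2, 2:, 2:] = True  # disjoint from both
scores = np.array([0.9, 0.8, 0.7])
print(mask_nms(masks, scores))  # [0, 2]
```

Mask 1 is dropped because it overlaps the higher-scoring mask 0 with IoU of about 0.67; raising the threshold to 0.7 would keep all three.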

## 💜 acknowledgement

- [Set-of-Mark Prompting Unleashes Extraordinary Visual Grounding in GPT-4V](https://arxiv.org/abs/2310.11441) by Jianwei Yang, Hao Zhang, Feng Li, Xueyan Zou, Chunyuan Li, Jianfeng Gao.

## 🦸 contribution

We would love your help in making this repository even better! If you notice a bug or have a suggestion for improvement, feel free to open an [issue](https://github.com/roboflow/set-of-mark/issues) or submit a [pull request](https://github.com/roboflow/set-of-mark/pulls).

Package metadata (from PyPI):

- version: 0.1.0
- summary: Visual Prompting for Large Multimodal Models (LMMs)
- license: MIT
- author and maintainer: Piotr Skalski (piotr.skalski92@gmail.com)
- requires Python: >=3.8,<3.12.0
- uploaded: 2023-11-29, as a wheel (`multimodal_maestro-0.1.0-py3-none-any.whl`) and a source distribution (`multimodal_maestro-0.1.0.tar.gz`)
- homepage and repository: https://github.com/roboflow/set-of-mark