| Field | Value |
|---|---|
| Name | nosmpl |
| Version | 0.1.4 |
| home_page | https://github.com/jinfagang/nosmpl |
| Summary | NoSMPL: Optimized common used SMPL operation. |
| upload_time | 2023-04-05 13:47:59 |
| maintainer | |
| docs_url | None |
| author | Lucas Jin |
| requires_python | |
| license | |
| keywords | |
| VCS | |
| bugtrack_url | |
| requirements | No requirements were recorded. |
| Travis-CI | No Travis. |
| coveralls test coverage | No coveralls. |

# NoSMPL

An enhanced and accelerated implementation of the SMPL operations commonly used in 3D human mesh generation. It takes poses, shapes, and camera translation (`cam_trans`) as inputs and outputs high-dimensional 3D mesh vertices.

However, the SMPL code and models out there are messy: much of the code is computation-heavy, and some of it cannot be easily deployed or accelerated. That is why we built `nosmpl`. It provides:

- a build on smplx, but with ONNX support;
- inference via ONNX;
- demonstrations of several usage scenarios that run inference with `nosmpl` using only an ONNX model, without any SMPL model files.

This package provides:

- [ ] Highly optimized PyTorch acceleration with FP16 inference enabled;
- [x] ONNX export and inference via ONNX Runtime (ort), so that the model could potentially be deployed with TensorRT, or with OpenVINO on CPU;
- [x] Support for STAR, the next generation of SMPL;
- [x] Built-in support for commonly used geometry operations, without depending on torchgeometry or kornia.

The STAR model can be downloaded from: https://star.is.tue.mpg.de

## SMPL ONNX Model Downloads

I have exported two models, `SMPL-H` and `SMPL`, which cover most usage scenarios:

- `smpl`: [link](https://github.com/jinfagang/nosmpl/releases/download/v1.1/smpl.onnx)

- `smpl-h`: [link](https://github.com/jinfagang/nosmpl/releases/download/v1.0/smplh_sim_w_orien.onnx)

They can also be found on the GitHub releases page.

For usage, see examples such as `examples/demo_smplh_onnx.py`.
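If you prefer to fetch a model programmatically, here is a minimal sketch using only the Python standard library. The helper name `download_if_missing` and the local destination path are illustrative assumptions, not part of `nosmpl`; the URL is the `smpl` release link listed above.

```python
import shutil
import urllib.request
from pathlib import Path

# Release URL from the download list above.
SMPL_ONNX_URL = "https://github.com/jinfagang/nosmpl/releases/download/v1.1/smpl.onnx"


def download_if_missing(url: str, dest: str) -> Path:
    """Download `url` to `dest` unless the file already exists (hypothetical helper)."""
    path = Path(dest)
    if path.exists():
        return path  # reuse a previously downloaded model
    path.parent.mkdir(parents=True, exist_ok=True)
    tmp = path.with_name(path.name + ".part")
    # Stream into a temporary file first, so an interrupted download
    # never leaves a truncated .onnx file at the final path.
    with urllib.request.urlopen(url) as resp, open(tmp, "wb") as out:
        shutil.copyfileobj(resp, out)
    tmp.replace(path)
    return path
```

For example, `download_if_missing(SMPL_ONNX_URL, "models/smpl.onnx")` returns the local path, downloading only on the first call.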

## Quick Start

Now you can use `nosmpl` to visualize SMPL with just a few lines of code, without **downloading any SMPL file**:

```python
from nosmpl.smpl_onnx import SMPLOnnxRuntime
import numpy as np

smpl = SMPLOnnxRuntime()

body = np.random.randn(1, 23, 3).astype(np.float32)
global_orient = np.random.randn(1, 1, 3).astype(np.float32)
outputs = smpl.forward(body, global_orient)
print(outputs)
# you can visualize the verts with Open3D now.
```

So, for a 3D pose predicted by a model such as SPIN, HMR, or PARE, grab your model's output and pass it through this `nosmpl` function to get your SMPL vertices!
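Some regressors output a flat 72-dimensional axis-angle pose (24 joints × 3) with the root (global) orientation first. The sketch below assumes that layout and reshapes it into the `(1, 23, 3)` body and `(1, 1, 3)` global-orientation arrays used in the Quick Start. The function name `split_smpl_pose` is hypothetical, and models that predict rotation matrices instead would need a conversion to axis-angle first.

```python
import numpy as np


def split_smpl_pose(pose72):
    """Split a (72,) or (B, 72) axis-angle pose into (body, global_orient).

    Assumes the standard SMPL joint order, where joint 0 is the root.
    """
    pose = np.asarray(pose72, dtype=np.float32).reshape(-1, 24, 3)
    # Make contiguous copies so the arrays can be fed to a runtime directly.
    global_orient = np.ascontiguousarray(pose[:, :1, :])  # (B, 1, 3): root rotation
    body = np.ascontiguousarray(pose[:, 1:, :])           # (B, 23, 3): other joints
    return body, global_orient
```

Usage: `body, global_orient = split_smpl_pose(pred_pose)`, then `smpl.forward(body, global_orient)` as in the Quick Start.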

## Updates

- **`2023.02.28`**: An SMPL-H ONNX model has been released! You can now use ONNX Runtime to get a 3D SMPL mesh from a pose!

- **`2022.05.16`**: `human_prior` is now included in `nosmpl`, so you no longer need to install that library, or torchgeometry either:

```python
from nosmpl.vpose.tools.model_loader import load_vposer

VPOSER_PATH = "path/to/vposer"  # placeholder: location of your VPoser snapshot
vposer, _ = load_vposer(VPOSER_PATH, vp_model="snapshot")
```

Then you can load VPoser and use it.

- **`2022.05.10`**: Added a BVH reader; you can now read and write BVH files:

```python
from nosmpl.parsers import bvh_io
import sys

animation = bvh_io.load(sys.argv[1])
print(animation.names)
print(animation.frametime)
print(animation.parent)
print(animation.offsets)
print(animation.shape)
```
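For readers new to the format: a BVH file has a `HIERARCHY` section that declares joints (`ROOT`/`JOINT`, each with an `OFFSET` and `CHANNELS`), followed by a `MOTION` section with `Frames:` and `Frame Time:`. The sketch below is not `bvh_io`; it is a hypothetical, stdlib-only illustration of extracting joint names, parent indices, and frame time, roughly the kind of fields printed above. It assumes braces sit on their own lines, as in typical BVH exports.

```python
def parse_bvh_header(text):
    """Extract joint names, parent indices, frame count and frame time from BVH text."""
    names, parents = [], []
    stack = []        # indices of currently open joint blocks; None marks an End Site
    pending = None    # what the next "{" opens
    num_frames, frame_time = None, None
    for raw in text.splitlines():
        tokens = raw.split()
        if not tokens:
            continue
        key = tokens[0]
        if key in ("ROOT", "JOINT"):
            # The enclosing open block is this joint's parent (-1 for the root).
            parents.append(stack[-1] if stack else -1)
            names.append(tokens[1])
            pending = len(names) - 1
        elif key == "End":
            pending = None  # "End Site" blocks are leaf markers, not joints
        elif key == "{":
            stack.append(pending)
        elif key == "}":
            stack.pop()
        elif key == "Frames:":
            num_frames = int(tokens[1])
        elif key == "Frame" and len(tokens) > 2 and tokens[1] == "Time:":
            frame_time = float(tokens[2])
    return {"names": names, "parents": parents,
            "frames": num_frames, "frametime": frame_time}
```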

- **`2022.05.07`**: Added visualization for Human3.6M ground truth; you can now visualize H36M data like this:

```python
import nosmpl.datasets.h36m_data_utils as data_utils
from nosmpl.datasets.h36m_vis import h36m_vis_on_gt_file
import sys

if __name__ == "__main__":
    # Path to an H36M txt annotation file, passed on the command line.
    h36m_vis_on_gt_file(sys.argv[1])
```

Just pass an H36M txt annotation file and you will see the animation. You can also use `from nosmpl.datasets.h36m_vis import h36m_load_gt_3d_data` to load the 3D data in 3D space.

- **`2022.03.03`**: I added some `box_transform` code to `nosmpl`, so we can now get box scale information when mapping 3D vertices predicted on a cropped image back to the original image. This is helpful when projecting 3D vertices back onto the original image with `realrender`.

The usage looks like this:

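```python
from nosmpl.box_trans import get_box_scale_info, convert_vertices_to_ori_img

# img: the original image; bboxes: the bounding box(es) used for cropping;
# frame_verts, s, t: predicted vertices and camera scale/translation
# (assumed to come from your own pipeline).
box_scale_o2n, box_topleft, _ = get_box_scale_info(img, bboxes)
frame_verts = convert_vertices_to_ori_img(
    frame_verts, s, t, box_scale_o2n, box_topleft
)
```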
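Conceptually, mapping a 2D point from a resized crop back to the original image is an inverse scale followed by a translation. The sketch below illustrates that general idea and is an assumption, not the exact math in `nosmpl.box_trans`: it assumes the crop was taken at `box_topleft` in the original image and then resized by the factor `box_scale_o2n` (original to new), so the inverse divides by the scale and adds the offset. The helper name is hypothetical.

```python
def crop_to_original(points_xy, box_scale_o2n, box_topleft):
    """Map (x, y) pixel coordinates in a resized crop back to the original image.

    Hypothetical helper; assumes crop_xy = (orig_xy - box_topleft) * box_scale_o2n.
    """
    tx, ty = box_topleft
    return [(x / box_scale_o2n + tx, y / box_scale_o2n + ty) for x, y in points_xy]
```

For instance, with a crop starting at `(100, 50)` and upscaled by `2.0`, the crop pixel `(224, 112)` maps back to `(212.0, 106.0)`.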

- **`2022.03.05`**: More to come.

## Features

The most exciting feature of `nosmpl` is that **you no longer need to download any SMPL files**: just download my exported `SMPL.onnx` or `SMPLH.onnx`, and you can generate a mesh with NumPy!

`nosmpl` also provides a script to visualize it:

```python
import onnxruntime as rt
import torch
import numpy as np
from nosmpl.vis.vis_o3d import vis_mesh_o3d


def gen():
    sess = rt.InferenceSession("smplh_sim.onnx")

    for i in range(5):
        body_pose = (
            torch.randn([1, 63], dtype=torch.float32).clamp(0, 0.4).cpu().numpy()
        )
        left_hand_pose = (
            torch.randn([1, 6], dtype=torch.float32).clamp(0, 0.4).cpu().numpy()
        )
        right_hand_pose = (
            torch.randn([1, 6], dtype=torch.float32).clamp(0, 0.4).cpu().numpy()
        )

        outputs = sess.run(
            None, {"body": body_pose, "lhand": left_hand_pose, "rhand": right_hand_pose}
        )

        vertices, joints, faces = outputs
        vertices = vertices[0].squeeze()
        joints = joints[0].squeeze()

        faces = faces.astype(np.int32)
        vis_mesh_o3d(vertices, faces)


if __name__ == "__main__":
    gen()
```

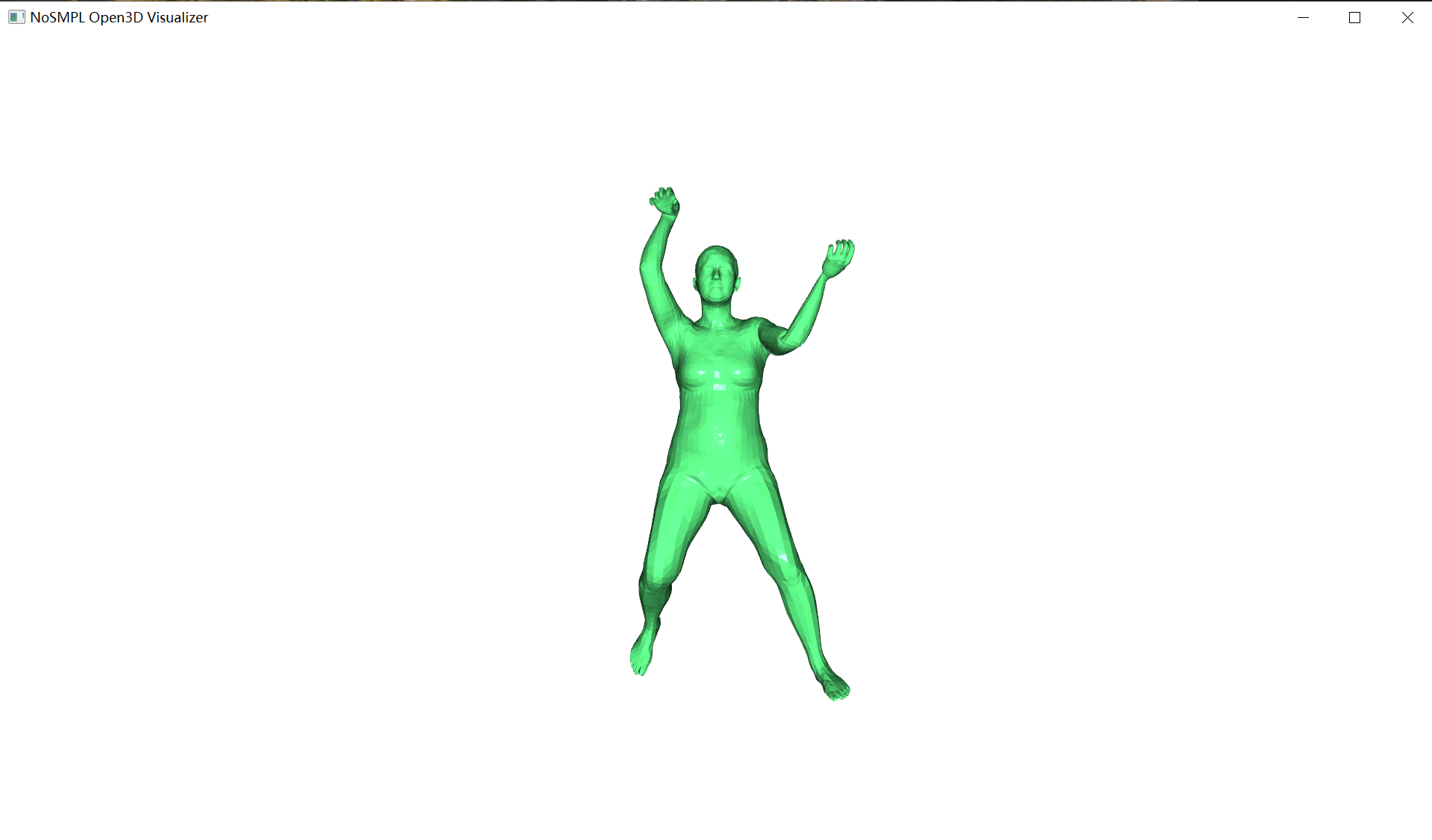

You will see a mesh with your pose, generated:

As you can see, we are using a single ONNX model, by some randome poses, you can generated a visualized mesh.

**This is useful for checking whether your predicted pose is correct!**
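If Open3D is not available (for example on a headless server), one alternative is to write the mesh to a Wavefront OBJ file and open it in any 3D viewer, such as Blender. This is a stdlib-only sketch, not part of `nosmpl`; `vertices` and `faces` are assumed to be the `(N, 3)` vertex array and zero-based `(F, 3)` face array produced in the script above, and `write_obj` is a hypothetical helper name.

```python
def write_obj(path, vertices, faces):
    """Write a triangle mesh to a Wavefront OBJ file.

    vertices: iterable of (x, y, z); faces: iterable of zero-based (i, j, k).
    """
    with open(path, "w") as f:
        for x, y, z in vertices:
            f.write(f"v {float(x):.6f} {float(y):.6f} {float(z):.6f}\n")
        for i, j, k in faces:
            # OBJ vertex indices are 1-based, whereas the face array is 0-based.
            f.write(f"f {int(i) + 1} {int(j) + 1} {int(k) + 1}\n")
```

Usage: call `write_obj("mesh.obj", vertices, faces)` in place of `vis_mesh_o3d(vertices, faces)`.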

If you use this in your project, it can significantly reduce the amount of SMPL-related code you need to maintain. If it helps, please consider citing `nosmpl` in your project!

For more details, join our Metaverse WeChat group for discussion! QQ join link:

## Examples

An example of calling `nosmpl`:

```python
from nosmpl.smpl import SMPL

# smplModelPath: path to your SMPL model file; device: e.g. "cuda" or "cpu".
smpl = SMPL(
    smplModelPath,
    extra_regressor="extra_data/body_module/data_from_spin/J_regressor_extra.npy",
).to(device)

# get your betas and rotmat
pred_vertices, pred_joints_3d, faces = smpl(pred_betas, pred_rotmat)

# note that we return the faces of the SMPL model; you can use them for visualization
# joints3d will include extra joints if you pass an extra_regressor, as in SPIN or VIBE
```

The inputs and outputs of the ONNX model look like this:

```
basicModel_neutral_lbs_10_207_0_v1.0.0.onnx Detail
╭────────┬───────────────┬──────────────┬─────────╮
│ Name   │ Shape         │ Input/Output │ Dtype   │
├────────┼───────────────┼──────────────┼─────────┤
│ 0      │ [1, 10]       │ input        │ float32 │
│ 1      │ [1, 24, 3, 3] │ input        │ float32 │
│ verts  │ [-1, -1, -1]  │ output       │ float32 │
│ joints │ [-1, -1, -1]  │ output       │ float32 │
│ faces  │ [13776, 3]    │ output       │ int32   │
╰────────┴───────────────┴──────────────┴─────────╯
Table generated by onnxexplorer
```
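Input `1` above has shape `[1, 24, 3, 3]`, i.e. one rotation matrix per SMPL joint, while many pipelines store poses as 24 axis-angle vectors. The standard Rodrigues formula converts between them: for an axis-angle vector with norm θ and unit axis k, R = I + sin(θ) K + (1 − cos(θ)) K², where K is the skew-symmetric cross-product matrix of k. Below is a stdlib-only sketch of that formula for a single joint; it is not the `nosmpl` implementation.

```python
import math


def axis_angle_to_rotmat(aa):
    """Rodrigues' formula: axis-angle (3,) -> 3x3 rotation matrix as nested lists."""
    x, y, z = aa
    theta = math.sqrt(x * x + y * y + z * z)
    if theta < 1e-8:
        # Near-zero rotation: return the identity to avoid dividing by ~0.
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = x / theta, y / theta, z / theta  # unit rotation axis
    c, s = math.cos(theta), math.sin(theta)
    t = 1.0 - c
    # Expanded form of R = I + sin(theta) K + (1 - cos(theta)) K^2.
    return [
        [c + kx * kx * t, kx * ky * t - kz * s, kx * kz * t + ky * s],
        [ky * kx * t + kz * s, c + ky * ky * t, ky * kz * t - kx * s],
        [kz * kx * t - ky * s, kz * ky * t + kx * s, c + kz * kz * t],
    ]
```

Applying it to each of the 24 joints, e.g. `[axis_angle_to_rotmat(pose[3 * j:3 * j + 3]) for j in range(24)]`, and wrapping the result in a float32 array of shape `(1, 24, 3, 3)` gives the layout of input `1`.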

## Notes

1. About quaternions

The `aa2quat` function returns quaternions in `wxyz` order by default. This is **different** from SciPy, which uses scalar-last `xyzw` order by default. The `wxyz` order is consistent with most 3D software, such as Blender or UE.
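To make the ordering difference concrete, here is a stdlib-only sketch (not `aa2quat` itself) that converts an axis-angle vector to a unit quaternion in `wxyz` order and then reorders it into the scalar-last `xyzw` layout that SciPy's `Rotation.from_quat` and `as_quat` use by default. Both function names are hypothetical.

```python
import math


def axis_angle_to_quat_wxyz(aa):
    """Axis-angle (3,) -> unit quaternion in (w, x, y, z) order."""
    x, y, z = aa
    theta = math.sqrt(x * x + y * y + z * z)
    if theta < 1e-8:
        return (1.0, 0.0, 0.0, 0.0)  # identity rotation
    # sin(theta / 2) times the unit axis; dividing by theta normalizes the axis.
    s = math.sin(theta / 2) / theta
    return (math.cos(theta / 2), x * s, y * s, z * s)


def wxyz_to_xyzw(q):
    """Reorder a scalar-first quaternion to scalar-last (SciPy's default layout)."""
    w, x, y, z = q
    return (x, y, z, w)
```

Forgetting this reordering when passing `wxyz` quaternions to a scalar-last API silently produces a different rotation, so it is worth converting explicitly at library boundaries.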

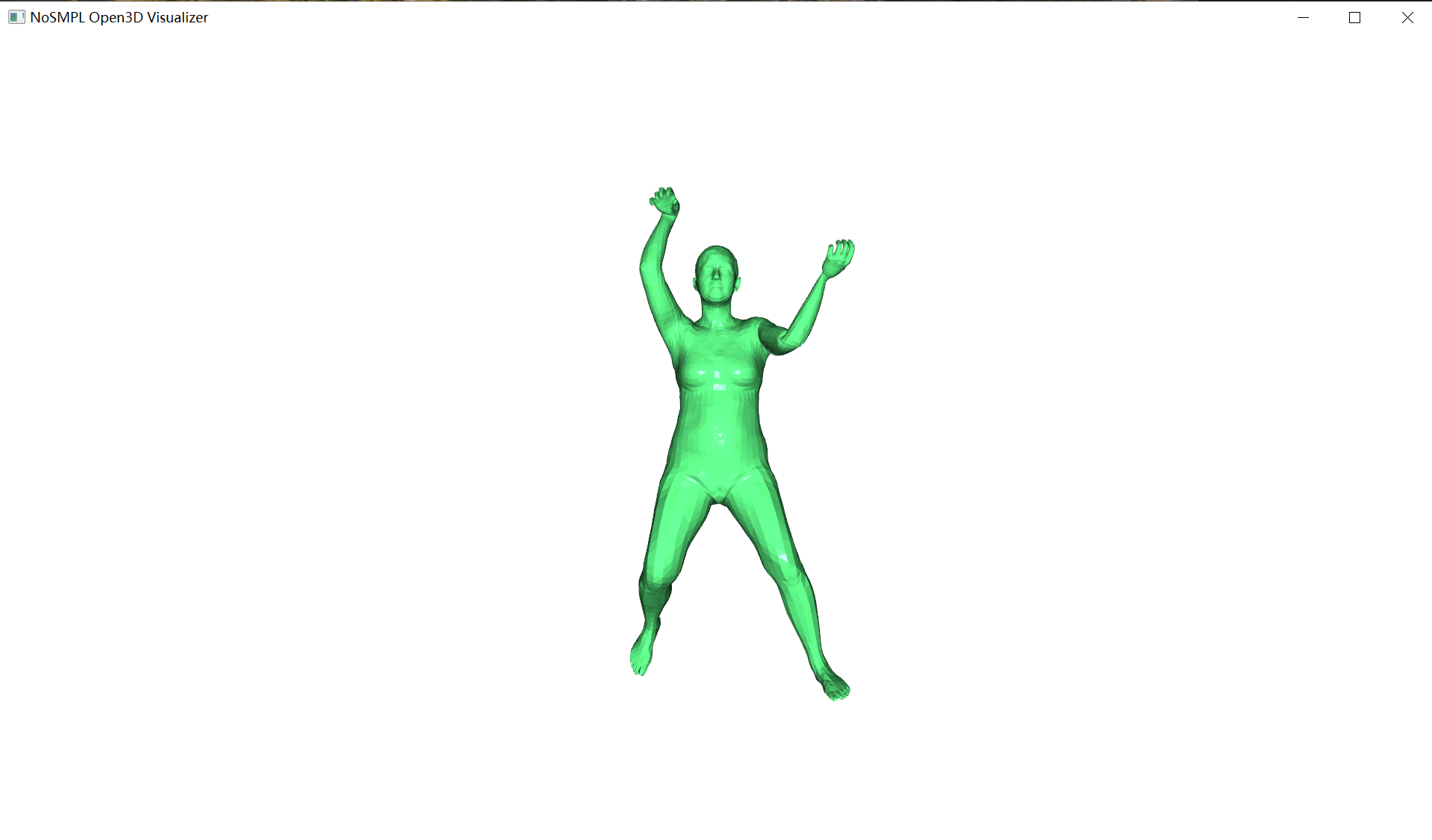

## Results

Some pipelines build with `nosmpl` support.

## Copyrights

Copyright (C) 2020 Max-Planck-Gesellschaft zur Förderung der Wissenschaften e.V. (MPG) and Lucas Jin.

Raw data

```json
{
  "_id": null,
  "home_page": "https://github.com/jinfagang/nosmpl",
  "name": "nosmpl",
  "maintainer": "",
  "docs_url": null,
  "requires_python": "",
  "maintainer_email": "",
  "keywords": "",
  "author": "Lucas Jin",
  "author_email": "11@qq.com",
  "download_url": "https://files.pythonhosted.org/packages/af/5a/b036ef277548d5a7ca5b693b0f8ad6e362c02d93c346f8dc0039a18039f8/nosmpl-0.1.4.tar.gz",
  "platform": null,
  "bugtrack_url": null,
  "license": "",
  "summary": "NoSMPL: Optimized common used SMPL operation.",
  "version": "0.1.4",
  "split_keywords": [],
  "urls": [
    {
      "comment_text": "",
      "digests": {
        "blake2b_256": "af5ab036ef277548d5a7ca5b693b0f8ad6e362c02d93c346f8dc0039a18039f8",
        "md5": "f71a2c48f9170616e33af25a9958c5f4",
        "sha256": "3c2d6e4d57056f5c63975d8835b63355e93bebb08a4bf09166d4a383ac9a9105"
      },
      "downloads": -1,
      "filename": "nosmpl-0.1.4.tar.gz",
      "has_sig": false,
      "md5_digest": "f71a2c48f9170616e33af25a9958c5f4",
      "packagetype": "sdist",
      "python_version": "source",
      "requires_python": null,
      "size": 67746,
      "upload_time": "2023-04-05T13:47:59",
      "upload_time_iso_8601": "2023-04-05T13:47:59.260209Z",
      "url": "https://files.pythonhosted.org/packages/af/5a/b036ef277548d5a7ca5b693b0f8ad6e362c02d93c346f8dc0039a18039f8/nosmpl-0.1.4.tar.gz",
      "yanked": false,
      "yanked_reason": null
    }
  ],
  "upload_time": "2023-04-05 13:47:59",
  "github": true,
  "gitlab": false,
  "bitbucket": false,
  "github_user": "jinfagang",
  "github_project": "nosmpl",
  "travis_ci": false,
  "coveralls": false,
  "github_actions": false,
  "lcname": "nosmpl"
}
```