| Field | Value |
|---|---|
| Name | pluk |
| Version | 0.6.1 |
| home_page | None |
| Summary | Symbol lookup / search engine CLI |
| upload_time | 2025-09-08 08:24:07 |
| maintainer | None |
| docs_url | None |
| author | None |
| requires_python | >=3.11 |
| license | None |
| keywords | symbol, search, cli, index, lookup |
| requirements | No requirements were recorded. |

# Pluk

Git-commit–aware symbol lookup & impact analysis engine

---

## What is a "symbol"?

In Pluk, a **symbol** is any named entity in your codebase that can be referenced, defined, or affected by changes: functions, classes, methods, variables, and other identifiers in your source code. Pluk tracks symbols across commits and repositories to answer queries such as "go to definition", "find all references", and "impact analysis".
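To make the notion concrete, here is a minimal sketch of how a symbol record might be modeled. The `Symbol` class, its fields, and the `kind` values are hypothetical and not Pluk's actual schema; the `file:line@commit` rendering simply mirrors the output format that `pluk define` is described as showing below.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Symbol:
    """Hypothetical symbol record; Pluk's real schema may differ."""

    name: str    # identifier as written in source, e.g. "my_function"
    kind: str    # e.g. "function", "class", "method", "variable"
    file: str    # path relative to the repository root
    line: int    # 1-based line of the definition
    commit: str  # commit SHA the record was indexed at

    def location(self) -> str:
        # Same shape as the `file:line@commit` output of `pluk define`
        return f"{self.file}:{self.line}@{self.commit}"


sym = Symbol("my_function", "function", "src/app/util.py", 42, "abc123")
print(sym.location())  # src/app/util.py:42@abc123
```

Keying each record to a commit lets the same name carry different definitions in different commits, which is what commit-aware queries need; `frozen=True` also makes records hashable, so they can be used in sets and as dict keys.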

Pluk gives developers “go-to-definition”, “find-all-references”, and “blast-radius” impact queries across one or more Git repositories. Heavy lifting (indexing, querying, storage) runs in Docker containers; a lightweight host shim (`pluk`) boots the stack and delegates commands into a thin CLI container (`plukd`) that talks to an internal API.
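The split between host shim and CLI container can be pictured as a routing decision: lifecycle commands run on the host, and everything else is forwarded into the `cli` service with `docker compose exec`. This is a hypothetical sketch; the `route` helper and the exact command line are assumptions, although `docker compose -f FILE exec -T SERVICE CMD...` is standard Compose v2 syntax (`-T` disables pseudo-TTY allocation). It also ignores the extra host-side work `pluk init` does before forwarding.

```python
from pathlib import Path

# Commands the shim handles itself (per this README); all others go to plukd.
HOST_COMMANDS = {"start", "status", "cleanup"}
COMPOSE_FILE = Path.home() / ".pluk" / "docker-compose.yml"


def route(argv: list[str], compose_file: Path = COMPOSE_FILE) -> tuple[str, list[str]]:
    """Return ("host", argv) or ("exec", docker_command) for a pluk invocation.

    Hypothetical sketch: it only builds the command, it does not run it.
    """
    if not argv:
        raise ValueError("no command given")
    if argv[0] in HOST_COMMANDS:
        return "host", argv
    # Forward into the idle `cli` container; -T avoids allocating a TTY.
    cmd = ["docker", "compose", "-f", str(compose_file),
           "exec", "-T", "cli", "plukd", *argv]
    return "exec", cmd


print(route(["status"]))
print(route(["search", "MyClass"], Path("/tmp/dc.yml")))
```

Passing the returned list to `subprocess.run(cmd, check=True)` would execute it.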

---

## Key Features

- **Fuzzy symbol search** (`pluk search`) for finding symbols in the current commit

- **Definition lookup** (`pluk define`)

- **Impact analysis** (`pluk impact`) to trace downstream dependents

- **Commit-aware indexing** (`pluk diff`) across Git history

- **Containerized backend**: PostgreSQL (graph) + Redis (broker/cache)

- **Strict lifecycle**: run `pluk start` on the host before any containerized command; service management (`start`, `status`, `cleanup`) is handled by the host shim.

- **Host controls**: `pluk status` to check, `pluk cleanup` to stop services
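As a rough illustration of fuzzy symbol lookup, the sketch below ranks candidate names with the standard library's `difflib.get_close_matches`. This is a stand-in chosen for brevity; the README does not say which matching algorithm Pluk uses, and the `fuzzy_search` helper is hypothetical.

```python
import difflib
from collections import defaultdict


def fuzzy_search(query: str, names: list[str], n: int = 5, cutoff: float = 0.6) -> list[str]:
    """Return up to n symbol names similar to query, best match first (case-insensitive)."""
    # Group original names by lowercase form so case differences don't lower the score.
    by_lower: dict[str, list[str]] = defaultdict(list)
    for name in names:
        by_lower[name.lower()].append(name)
    matches = difflib.get_close_matches(query.lower(), list(by_lower), n=n, cutoff=cutoff)
    # Expand each matched lowercase key back to its original spellings.
    return [orig for key in matches for orig in by_lower[key]][:n]


symbols = ["MyClass", "my_function", "computeFoo", "MyClassFactory"]
print(fuzzy_search("myclas", symbols))
```

`cutoff` is a similarity threshold between 0 and 1: raising it returns fewer, closer matches.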

---

## Quickstart

1. **Install**

```bash

pip install pluk

```

2. **Start services (required)**

```bash

pluk start

```

This creates or updates `~/.pluk/docker-compose.yml`, **pulls the latest images**, and brings up `postgres`, `redis`, `api` (FastAPI), `worker` (Celery), and `cli` (an idle exec target). The API stays **internal** to the Docker network. Note: the service lifecycle commands (`start`, `status`, `cleanup`) are implemented in the host shim, so run them with `pluk` in your host shell.
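Conceptually, `pluk start` amounts to writing the Compose file, pulling images, and starting the stack in the background. The sketch below only builds the command lines; `start_commands` and `write_compose` are hypothetical helpers and the real shim may pass different flags, but `docker compose -f FILE pull` and `docker compose -f FILE up -d` are standard Compose v2 commands.

```python
from pathlib import Path


def write_compose(compose_file: Path, content: str) -> None:
    """Create ~/.pluk if needed, then create or overwrite the Compose file."""
    compose_file.parent.mkdir(parents=True, exist_ok=True)
    compose_file.write_text(content, encoding="utf-8")


def start_commands(compose_file: Path) -> list[list[str]]:
    """Hypothetical sketch of the commands behind `pluk start`."""
    base = ["docker", "compose", "-f", str(compose_file)]
    return [
        base + ["pull"],      # fetch the latest images
        base + ["up", "-d"],  # create/start all services, detached
    ]


for cmd in start_commands(Path("/tmp/pluk/docker-compose.yml")):
    print(" ".join(cmd))
```

To actually run them, pass each list to `subprocess.run(cmd, check=True)` in order.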

3. **Index and query**

```bash
pluk init /path/to/repo          # queue a full index (the host shim extracts the repo's origin URL and commit and forwards them to the containerized CLI)
pluk search MyClass              # fuzzy lookup; symbol matches branch-wide
pluk define my_function          # show definition (file:line@commit)
pluk impact computeFoo           # direct dependents; blast radius
pluk diff symbol abc123 def456   # symbol changes between commits abc123 → def456, local to the current branch
```
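The difference between "direct dependents" and "blast radius" can be illustrated with a reverse dependency graph: direct dependents are one hop away, and the blast radius is everything reachable by following dependents transitively. The graph contents and the `direct_dependents` / `blast_radius` helpers are hypothetical; the README does not describe how Pluk stores or traverses its graph in PostgreSQL.

```python
from collections import deque

# Hypothetical reverse-dependency map: symbol -> symbols that reference it.
DEPENDENTS: dict[str, set[str]] = {
    "computeFoo": {"renderReport", "exportCsv"},
    "renderReport": {"handleRequest"},
    "exportCsv": {"handleRequest", "cronJob"},
    "handleRequest": set(),
    "cronJob": set(),
}


def direct_dependents(symbol: str, graph: dict[str, set[str]]) -> set[str]:
    return set(graph.get(symbol, ()))


def blast_radius(symbol: str, graph: dict[str, set[str]]) -> dict[str, int]:
    """Breadth-first search over dependents; maps each affected symbol to its hop distance."""
    distance: dict[str, int] = {}
    seen = {symbol}
    queue = deque([(symbol, 0)])
    while queue:
        current, hops = queue.popleft()
        for dep in graph.get(current, ()):
            if dep not in seen:  # also guards against dependency cycles
                seen.add(dep)
                distance[dep] = hops + 1
                queue.append((dep, hops + 1))
    return distance


print(sorted(direct_dependents("computeFoo", DEPENDENTS)))  # ['exportCsv', 'renderReport']
print(dict(sorted(blast_radius("computeFoo", DEPENDENTS).items())))
# {'cronJob': 2, 'exportCsv': 1, 'handleRequest': 2, 'renderReport': 1}
```

Breadth-first order means each symbol is reported with its shortest distance from the changed symbol, a simple proxy for how directly it is affected.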

Important: the repository you index must be public (or otherwise directly reachable by the worker container). The worker clones repositories inside the container environment using the repository URL; private repositories that require credentials are not supported by the host shim workflow.
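For `pluk init`, the host shim is described as extracting the repository's origin URL and current commit. One plausible way to do that with plain Git is sketched below (`git remote get-url origin` and `git rev-parse HEAD` are standard Git commands; the helper names are hypothetical). Because the worker clones from the URL without credentials, the sketch also flags SSH-style remotes, which normally need a key the worker would not have. Treat that heuristic as an assumption, not documented Pluk behavior; an HTTPS URL can still point to a private repository, so it is only a first check.

```python
import subprocess
from urllib.parse import urlparse


def origin_and_commit(repo_path: str) -> tuple[str, str]:
    """Return (origin URL, HEAD commit SHA) for a local Git checkout."""

    def git(*args: str) -> str:
        result = subprocess.run(
            ["git", "-C", repo_path, *args],
            check=True, capture_output=True, text=True,
        )
        return result.stdout.strip()

    return git("remote", "get-url", "origin"), git("rev-parse", "HEAD")


def likely_needs_credentials(url: str) -> bool:
    """Heuristic: SSH remotes (scp-style or ssh://) usually require a key."""
    return url.startswith("git@") or urlparse(url).scheme == "ssh"


print(likely_needs_credentials("https://github.com/owner/repo.git"))  # False
print(likely_needs_credentials("git@github.com:owner/repo.git"))      # True
```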

**Note:** CLI commands that poll for job status (such as `pluk init`) display output in real time because Python output is unbuffered in the CLI container.
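A polling loop only shows progress live if each line reaches the terminal immediately. The sketch below uses `print(..., flush=True)`; running Python with `-u` or setting `PYTHONUNBUFFERED=1` (standard CPython options) achieves the same for a whole process. The `poll_job` function, the Celery-style state names, and the injected `get_status` callable are hypothetical stand-ins for whatever job-status call the CLI actually makes.

```python
import time
from typing import Callable


def poll_job(get_status: Callable[[], str], interval: float = 1.0, timeout: float = 600.0) -> str:
    """Poll until the job reaches a terminal state, printing each status change immediately."""
    deadline = time.monotonic() + timeout
    last = None
    while True:
        status = get_status()
        if status != last:
            # flush=True pushes the line out now, even when stdout is a pipe (e.g. under docker exec)
            print(f"job status: {status}", flush=True)
            last = status
        if status in {"SUCCESS", "FAILURE"}:  # assumed terminal states
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"job still {status!r} after {timeout} s")
        time.sleep(interval)


# Usage with a fake status source instead of a real API call:
statuses = iter(["PENDING", "STARTED", "STARTED", "SUCCESS"])
print(poll_job(lambda: next(statuses), interval=0.01))
```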

4. **Check / stop (host-side)**

```bash
pluk status    # tells you whether services are running
pluk cleanup   # stops services (containers are kept, so restarts are fast)
```
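A status check like `pluk status` could ask Compose which services are running and compare the result against the expected set. `docker compose ps --services --filter status=running` is a standard Compose v2 invocation; the `running_services` and `missing_services` helpers and the expected-service set (taken from the services listed in step 2) are illustrative assumptions, not the shim's actual code.

```python
import subprocess
from pathlib import Path

EXPECTED = {"postgres", "redis", "api", "worker", "cli"}


def running_services(compose_file: Path) -> set[str]:
    """Names of services Compose reports as running."""
    out = subprocess.run(
        ["docker", "compose", "-f", str(compose_file), "ps",
         "--services", "--filter", "status=running"],
        check=True, capture_output=True, text=True,
    ).stdout
    return {line.strip() for line in out.splitlines() if line.strip()}


def missing_services(running: set[str], expected: set[str] = EXPECTED) -> set[str]:
    """Services that should be up but are not."""
    return expected - running


print(sorted(missing_services({"postgres", "redis", "api"})))  # ['cli', 'worker']
```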

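For a full teardown (removing containers and the network), use the command below; the `-v` flag also deletes the named volumes declared in the Compose file, so any data stored in them is lost: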

```bash

docker compose -f ~/.pluk/docker-compose.yml down -v

```

---

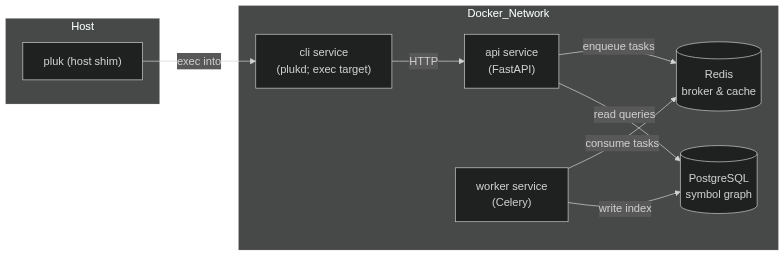

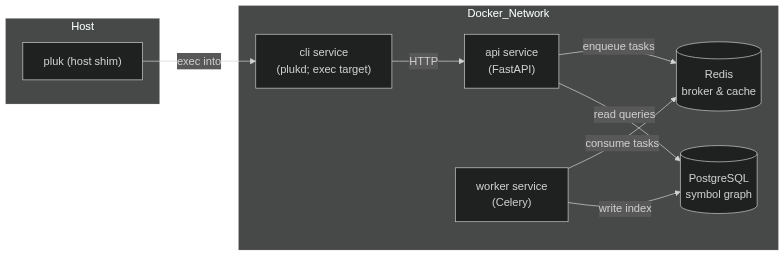

## Data Flow

[Data flow diagram (Mermaid Live Editor)](https://mermaid.live/edit#pako:eNp9UtGO2jAQ_BVrHyqQAiKBhCSVKrWg6irRit6dVKmkqkyyl0Qkdmo7BUr4967D0eNe-rT27OzsztonSGWGEMNTJfdpwZVhq_tEMKbbba54U7A7qY0FGHsoynqTQFO1OzYoCGaakGECP2weRZaIV5VLme5Q_fyCZi_V7qKxWH0iibQqmUb1u0wxEQMrmL1leMCUGa5yNFdNxt6vLZ83t_yPXBvCX0jfSB4V8fb94Ya6wArV8YV5j1mpN4M-JGKrpKW_YSlPCxw-c5YfNoM1ucsVPnxdJUIf662sWO9p-Nqq3Qgbjd51_eylMLKzDm2KQp-5e3xcd9aGBSlc6OJXiy2SW73THXse52qkp6RS6Lb-L2WvSoPUNcNDR1PfNlDIM0Y9VIna5sCBXJUZxEa16ECNqub2CidblYApsMYEYjpmnN4KEnGmmoaL71LW1zIl27yA-IlXmm5tk3GDy5LTZup_qKLloFrIVhiIg8j3ehWIT3CAeOSF_tibzqahO43mURDNZw4cIXa9YBzOCJyEfjQJvDA4O_Cn7-yOPd-fBG7ou-48CN25A7QKI9Xny7_tv-_5L0GP5fk)

**How it works**

- **Host shim (`pluk`)** writes the Compose file, **pulls images**, and runs `docker compose up`.

- **CLI container (`plukd`)** is the exec target; it calls the API at `http://api:8000`.

- **API (FastAPI)** serves read endpoints (`/search`, `/define`, `/impact`, `/diff`) and enqueues write jobs (`/reindex`) to **Redis**.

- **Worker (Celery)** consumes jobs from **Redis**, clones/pulls repos into a volume (`/var/pluk/repos`), parses deltas, and writes to **Postgres**.

- Reads never block on indexing; write progress can be polled via job status endpoints (planned).

---

## Architecture (current)

- **Single image, multiple roles**: Compose selects a per-service `command`:
  - `api` → `uvicorn pluk.api:app --host 0.0.0.0 --port 8000`
  - `worker` → `celery -A pluk.worker worker -l info`
  - `cli` → `sleep infinity` (keeps the container up for `docker compose exec`)

- **Internal networking**: API is _not_ exposed to the host; CLI calls it over Docker DNS (`PLUK_API_URL=http://api:8000`).

- **Config**: `PLUK_DATABASE_URL`, `PLUK_REDIS_URL` injected via Compose; worker uses `PLUK_REPOS_DIR=/var/pluk/repos`.

- **Images**: by default the shim uses `jorstors/pluk:latest`, `postgres:16-alpine`, and `redis-alpine`
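Reading the injected configuration might look like the sketch below. The variable names come from this README; the `Settings` class and `from_env` are hypothetical. Only `PLUK_API_URL=http://api:8000` and `PLUK_REPOS_DIR=/var/pluk/repos` are documented values, so the database and Redis URLs are treated as required rather than guessed.

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    database_url: str
    redis_url: str
    repos_dir: str
    api_url: str

    @classmethod
    def from_env(cls, env: Mapping[str, str] = os.environ) -> "Settings":
        missing = [k for k in ("PLUK_DATABASE_URL", "PLUK_REDIS_URL") if not env.get(k)]
        if missing:
            raise RuntimeError(f"missing required settings: {', '.join(missing)}")
        return cls(
            database_url=env["PLUK_DATABASE_URL"],
            redis_url=env["PLUK_REDIS_URL"],
            # Defaults mirror the values stated in this README
            repos_dir=env.get("PLUK_REPOS_DIR", "/var/pluk/repos"),
            api_url=env.get("PLUK_API_URL", "http://api:8000"),
        )


# Example values below are made up for illustration.
settings = Settings.from_env({
    "PLUK_DATABASE_URL": "postgresql://user:pass@postgres:5432/pluk",
    "PLUK_REDIS_URL": "redis://redis:6379/0",
})
print(settings.api_url, settings.repos_dir)
```

Failing fast on missing URLs surfaces a misconfigured Compose file at startup instead of on the first query.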

---

## Development

- **Project layout** (`src/pluk`):
  - `shim.py`: host shim entrypoint (`pluk`)
  - `cli.py`: container CLI (`plukd`)
  - `api.py`: FastAPI app (internal API)
  - `worker.py`: Celery app & tasks

- **Entry points** (`pyproject.toml`):

```toml
[project.scripts]
pluk = "pluk.shim:main"
plukd = "pluk.cli:main"
```

---

## Testing

```bash

pytest

```

Integration tests require a running Docker daemon and services started with `pluk start`.
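To keep `pytest` usable on machines without Docker, integration tests can be skipped when the daemon is unreachable. The helper below is a hypothetical sketch using only the standard library: `shutil.which` locates the `docker` binary, and `docker info` exits non-zero when it cannot reach a daemon. It does not check that the Pluk services themselves are up.

```python
import shutil
import subprocess


def docker_available(timeout: float = 10.0) -> bool:
    """True if the docker CLI exists and can talk to a running daemon."""
    if shutil.which("docker") is None:
        return False
    try:
        result = subprocess.run(["docker", "info"], capture_output=True, timeout=timeout)
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0


# With pytest installed, a skip marker could be built like this:
# requires_docker = pytest.mark.skipif(not docker_available(), reason="Docker daemon not running")
print(docker_available())
```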

---

## License

MIT License

Raw data

```json
{
  "_id": null,
  "home_page": null,
  "name": "pluk",
  "maintainer": null,
  "docs_url": null,
  "requires_python": ">=3.11",
  "maintainer_email": null,
  "keywords": "symbol, search, cli, index, lookup",
  "author": null,
  "author_email": "Justus Jones <Justus1274@gmail.com>",
  "download_url": "https://files.pythonhosted.org/packages/bc/49/c8dc337199771fafb191df97e08785bd43b0b5b584f23c3fb5947b2ab4b8/pluk-0.6.1.tar.gz",
  "platform": null,
  "bugtrack_url": null,
  "license": null,
  "summary": "Symbol lookup / search engine CLI",
  "version": "0.6.1",
  "project_urls": null,
  "split_keywords": [
    "symbol",
    " search",
    " cli",
    " index",
    " lookup"
  ],
  "urls": [
    {
      "comment_text": null,
      "digests": {
        "blake2b_256": "23459fab788b8972ab797259dc74d0bbd52cd1e7eea699e405ab4ce8626db1ba",
        "md5": "8cc1e956968a8e4f087777d9b31d4b1f",
        "sha256": "9a645903965bc55cd4da84bb959244dc7f3c0e762b7739b7518f77d52c34fe39"
      },
      "downloads": -1,
      "filename": "pluk-0.6.1-py3-none-any.whl",
      "has_sig": false,
      "md5_digest": "8cc1e956968a8e4f087777d9b31d4b1f",
      "packagetype": "bdist_wheel",
      "python_version": "py3",
      "requires_python": ">=3.11",
      "size": 20837,
      "upload_time": "2025-09-08T08:24:04",
      "upload_time_iso_8601": "2025-09-08T08:24:04.879799Z",
      "url": "https://files.pythonhosted.org/packages/23/45/9fab788b8972ab797259dc74d0bbd52cd1e7eea699e405ab4ce8626db1ba/pluk-0.6.1-py3-none-any.whl",
      "yanked": false,
      "yanked_reason": null
    },
    {
      "comment_text": null,
      "digests": {
        "blake2b_256": "bc49c8dc337199771fafb191df97e08785bd43b0b5b584f23c3fb5947b2ab4b8",
        "md5": "858c153039327e219965ce27155f2312",
        "sha256": "c6eef48680ee4d746bb8f6589536aaf40ddb2941ca75aa6a215ccd010d6d9300"
      },
      "downloads": -1,
      "filename": "pluk-0.6.1.tar.gz",
      "has_sig": false,
      "md5_digest": "858c153039327e219965ce27155f2312",
      "packagetype": "sdist",
      "python_version": "source",
      "requires_python": ">=3.11",
      "size": 23543,
      "upload_time": "2025-09-08T08:24:07",
      "upload_time_iso_8601": "2025-09-08T08:24:07.634716Z",
      "url": "https://files.pythonhosted.org/packages/bc/49/c8dc337199771fafb191df97e08785bd43b0b5b584f23c3fb5947b2ab4b8/pluk-0.6.1.tar.gz",
      "yanked": false,
      "yanked_reason": null
    }
  ],
  "upload_time": "2025-09-08 08:24:07",
  "github": false,
  "gitlab": false,
  "bitbucket": false,
  "codeberg": false,
  "lcname": "pluk"
}
```