# py-netty :rocket:

An event-driven TCP networking framework.

Ideas and concepts under the hood are built upon those of [Netty](https://netty.io/), especially the IO and executor model.

APIs are intuitive to use if you are a Netty alcoholic.

## Features

- callback-based application invocation
- non-blocking IO
- recv/write is performed only in the IO thread
- adaptive read buffer
- low/high water marks to indicate writability (defaults: low water mark 32K, high water mark 64K)
- all platforms supported (Linux: epoll, macOS: kqueue, Windows: select)
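The water-mark rule in the list above can be illustrated with a small standalone sketch. It is not py-netty's internal implementation: the class name `WritabilityTracker` and its methods are hypothetical, and only the 32K/64K defaults and the "above high / below low" rule come from this README.

```python
class WritabilityTracker:
    """Hypothetical helper: tracks pending outbound bytes against water marks."""

    def __init__(self, low=32 * 1024, high=64 * 1024):
        if not 0 <= low <= high:
            raise ValueError("expected 0 <= low <= high")
        self.low = low
        self.high = high
        self.pending = 0
        self.writable = True

    def on_enqueue(self, nbytes):
        """Record queued bytes; return True if writability just flipped."""
        self.pending += nbytes
        if self.writable and self.pending > self.high:
            self.writable = False
            return True
        return False

    def on_flush(self, nbytes):
        """Record flushed bytes; return True if writability just flipped."""
        self.pending = max(0, self.pending - nbytes)
        if not self.writable and self.pending < self.low:
            self.writable = True
            return True
        return False
```

Using two thresholds instead of one avoids flapping: once a channel becomes unwritable it stays that way until the backlog has drained below the low mark, not merely below the high mark.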

## Installation

```bash
pip install py-netty
```

## Getting Started

Start an echo server:

```python
from py_netty import ServerBootstrap

# Blocks until the server channel is closed.
ServerBootstrap().bind(address='0.0.0.0', port=8080).close_future().sync()
```
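To check that the echo server responds, a plain standard-library client is enough. The following is a minimal sketch that does not use py-netty at all; the helper name `echo_roundtrip` and its `timeout` parameter are made up for illustration.

```python
import socket


def echo_roundtrip(host, port, payload, timeout=5.0):
    """Send payload and read back the same number of bytes (or until EOF)."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(payload)
        received = bytearray()
        while len(received) < len(payload):
            chunk = sock.recv(4096)
            if not chunk:  # peer closed the connection early
                break
            received.extend(chunk)
    return bytes(received)
```

With the server above running, `echo_roundtrip('127.0.0.1', 8080, b'hello')` should return the bytes that were sent.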

Start an echo server (TLS):

```python
from py_netty import ServerBootstrap

# certfile and keyfile are placeholders for your own certificate and private key.
ServerBootstrap(
    certfile='/path/to/cert/file',
    keyfile='/path/to/key/file'
).bind(address='0.0.0.0', port=9443).close_future().sync()
```

As TCP client:

```python
from py_netty import Bootstrap, ChannelHandlerAdapter


class HttpHandler(ChannelHandlerAdapter):
    def channel_read(self, ctx, buffer):
        # Print each chunk of the HTTP response as it arrives.
        print(buffer.decode('utf-8'))


remote_address, remote_port = 'www.google.com', 80
b = Bootstrap(handler_initializer=HttpHandler)
channel = b.connect(remote_address, remote_port).sync().channel()
request = f'GET / HTTP/1.1\r\nHost: {remote_address}\r\n\r\n'
channel.write(request.encode('utf-8'))
input()  # pause
channel.close()
```

As TCP client (TLS):

```python
from py_netty import Bootstrap, ChannelHandlerAdapter


class HttpHandler(ChannelHandlerAdapter):
    def channel_read(self, ctx, buffer):
        # Print each chunk of the decrypted HTTP response as it arrives.
        print(buffer.decode('utf-8'))


remote_address, remote_port = 'www.google.com', 443
b = Bootstrap(handler_initializer=HttpHandler, tls=True, verify=True)
channel = b.connect(remote_address, remote_port).sync().channel()
request = f'GET / HTTP/1.1\r\nHost: {remote_address}\r\n\r\n'
channel.write(request.encode('utf-8'))
input()  # pause
channel.close()
```

TCP port forwarding:

```python
from py_netty import ServerBootstrap, Bootstrap, ChannelHandlerAdapter, EventLoopGroup


class ProxyChannelHandler(ChannelHandlerAdapter):

    def __init__(self, remote_host, remote_port, client_eventloop_group):
        self._remote_host = remote_host
        self._remote_port = remote_port
        self._client_eventloop_group = client_eventloop_group
        self._client = None

    def _client_channel(self, ctx0):

        class __ChannelHandler(ChannelHandlerAdapter):
            def channel_read(self, ctx, bytebuf):
                # Relay data from the proxied server back to the original client.
                ctx0.write(bytebuf)

            def channel_inactive(self, ctx):
                ctx0.close()

        # Lazily connect to the proxied server on first use.
        if self._client is None:
            self._client = Bootstrap(
                eventloop_group=self._client_eventloop_group,
                handler_initializer=__ChannelHandler
            ).connect(self._remote_host, self._remote_port).sync().channel()
        return self._client

    def exception_caught(self, ctx, exception):
        ctx.close()

    def channel_read(self, ctx, bytebuf):
        # Forward data from the client to the proxied server.
        self._client_channel(ctx).write(bytebuf)

    def channel_inactive(self, ctx):
        if self._client:
            self._client.close()


proxied_server, proxied_port = 'www.google.com', 443
client_eventloop_group = EventLoopGroup(1, 'ClientEventloopGroup')
sb = ServerBootstrap(
    parant_group=EventLoopGroup(1, 'Acceptor'),
    child_group=EventLoopGroup(1, 'Worker'),
    child_handler_initializer=lambda: ProxyChannelHandler(proxied_server, proxied_port, client_eventloop_group)
)
sb.bind(port=8443).close_future().sync()
```

## Event-driven callbacks

Create a handler with callbacks for the events you are interested in:

```python
import socket
from typing import Union

from py_netty import ChannelHandlerAdapter


class MyChannelHandler(ChannelHandlerAdapter):
    def channel_active(self, ctx: 'ChannelHandlerContext') -> None:
        # invoked when the channel is active (TCP connection ready)
        pass

    def channel_read(self, ctx: 'ChannelHandlerContext', msg: Union[bytes, socket.socket]) -> None:
        # invoked when there is data ready to process
        pass

    def channel_inactive(self, ctx: 'ChannelHandlerContext') -> None:
        # invoked when the channel is inactive (TCP connection is broken)
        pass

    def channel_registered(self, ctx: 'ChannelHandlerContext') -> None:
        # invoked when the channel is registered with an eventloop
        pass

    def channel_unregistered(self, ctx: 'ChannelHandlerContext') -> None:
        # invoked when the channel is unregistered from an eventloop
        pass

    def channel_handshake_complete(self, ctx: 'ChannelHandlerContext') -> None:
        # invoked when the SSL handshake is complete; this only applies to the client side
        pass

    def channel_writability_changed(self, ctx: 'ChannelHandlerContext') -> None:
        # invoked when pending data > high water mark or < low water mark
        pass

    def exception_caught(self, ctx: 'ChannelHandlerContext', exception: Exception) -> None:
        # invoked when any exception is raised during processing
        pass
```
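Only the callbacks you care about need to be overridden. As a small sketch, the handler below counts the bytes delivered to `channel_read` and records when the connection goes away. The class name `ByteCounterHandler` and its attributes are made up for illustration, and the fallback base class is an assumption that only exists so the snippet also runs where py-netty is not installed.

```python
try:
    from py_netty import ChannelHandlerAdapter
except ImportError:
    # Stand-in base class (assumption) so the sketch runs without py-netty.
    class ChannelHandlerAdapter:
        pass


class ByteCounterHandler(ChannelHandlerAdapter):
    """Counts the bytes seen on one connection."""

    def __init__(self):
        self.total_bytes = 0
        self.closed = False

    def channel_read(self, ctx, msg):
        # msg may also be a socket object, so count only byte payloads.
        if isinstance(msg, (bytes, bytearray)):
            self.total_bytes += len(msg)

    def channel_inactive(self, ctx):
        self.closed = True
```

Such a handler would be passed as `handler_initializer=ByteCounterHandler`, in the same way as `HttpHandler` in the client examples.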

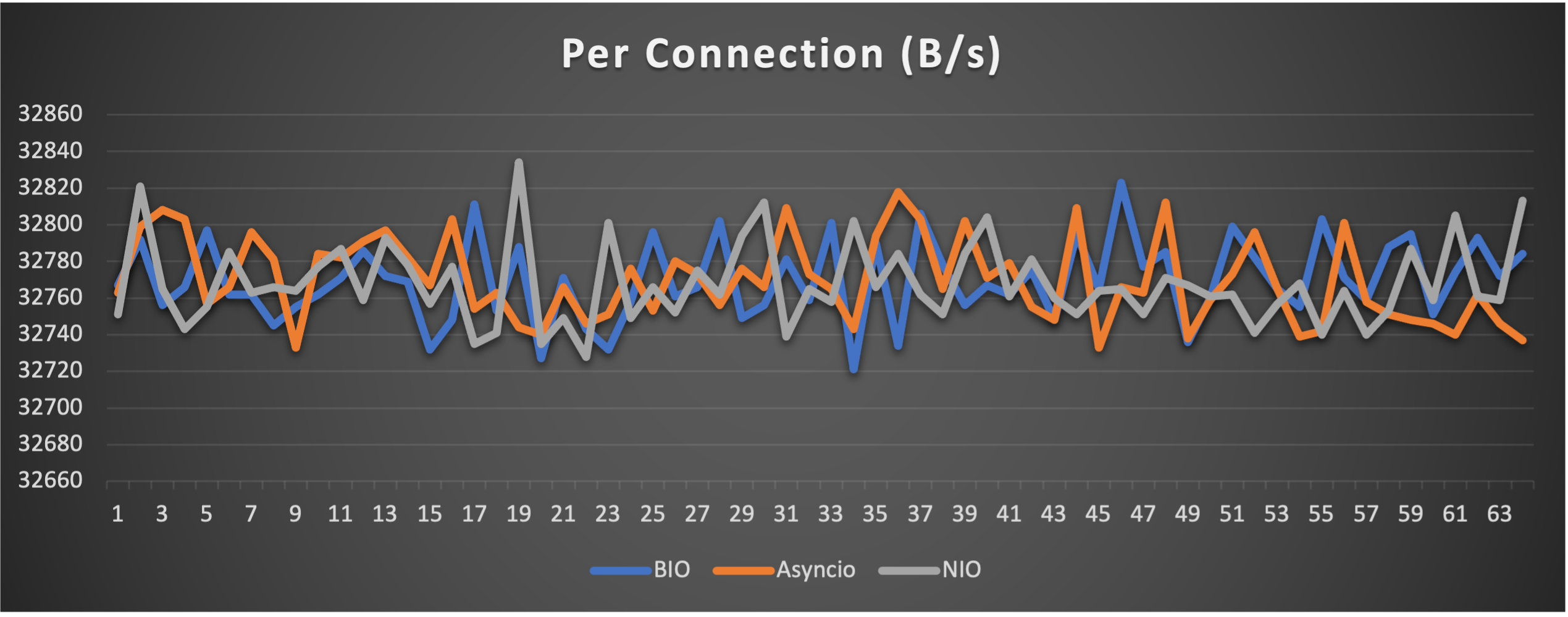

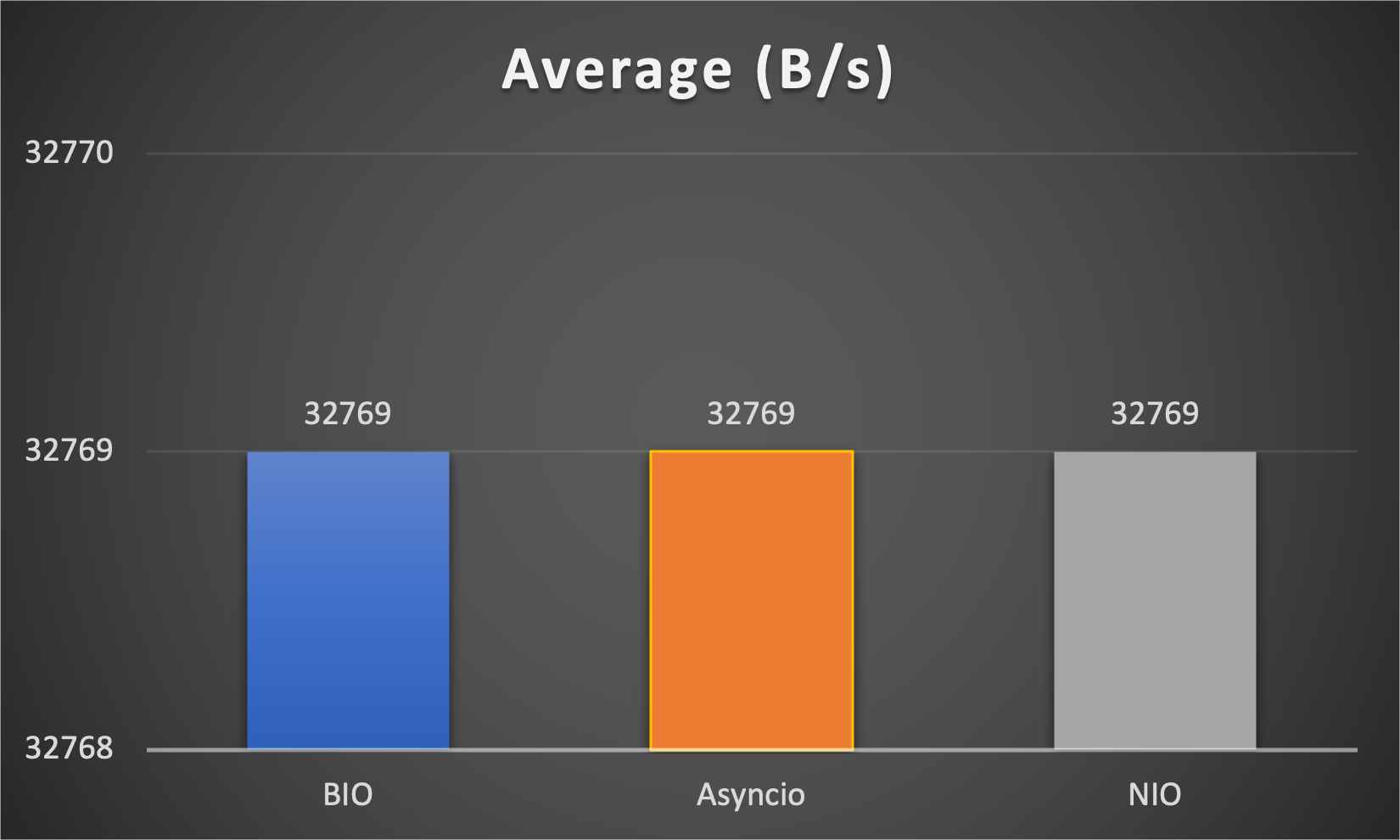

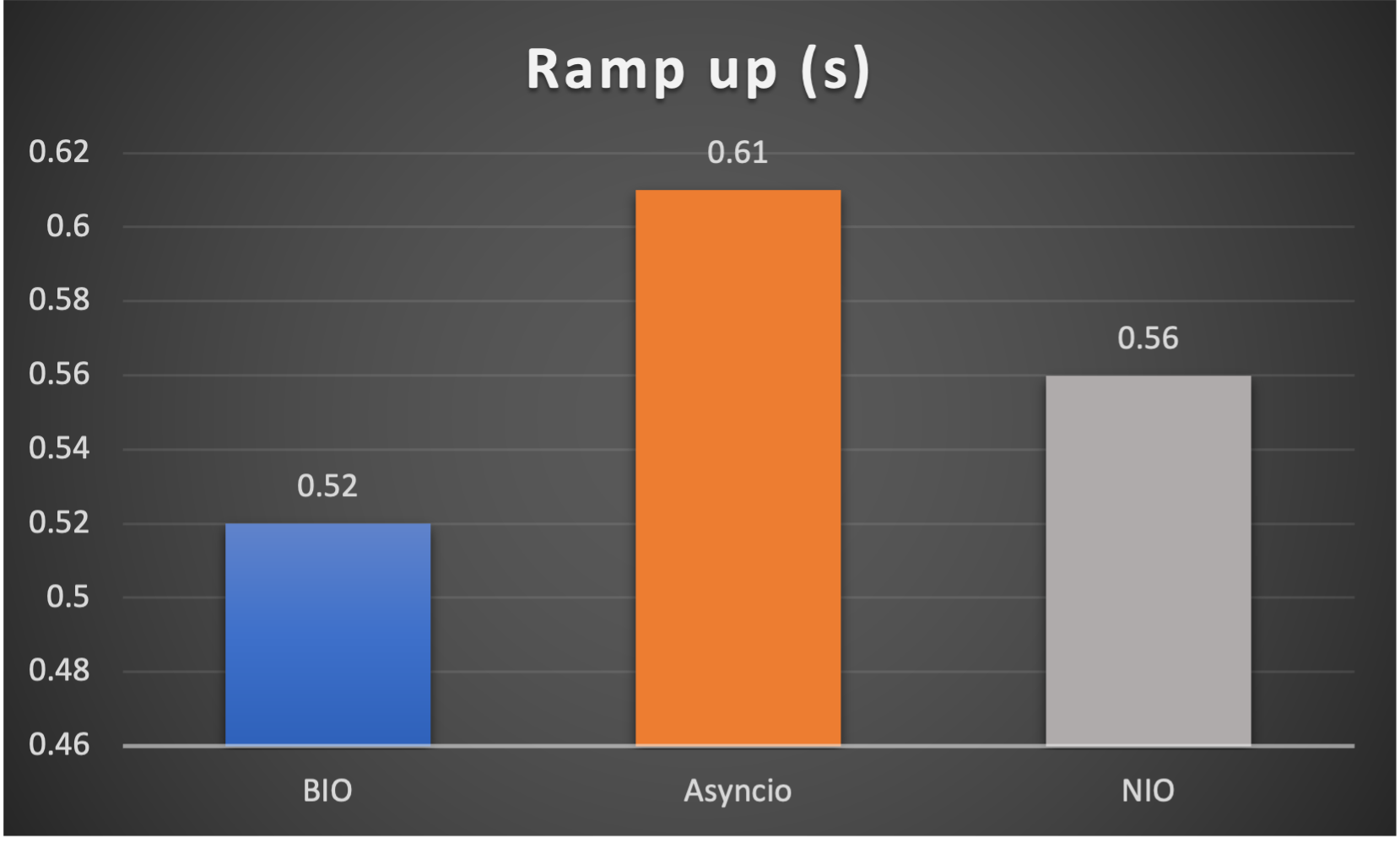

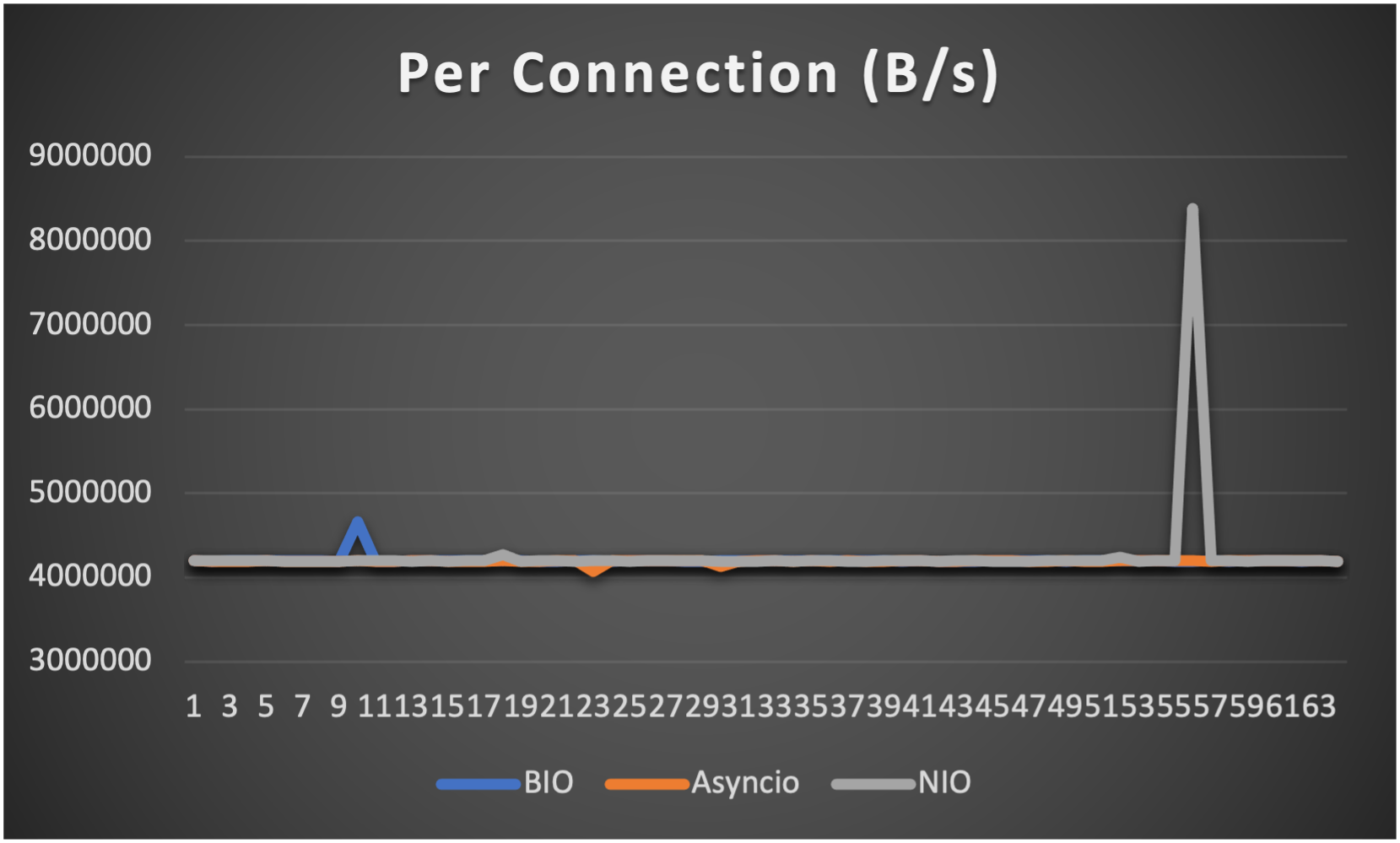

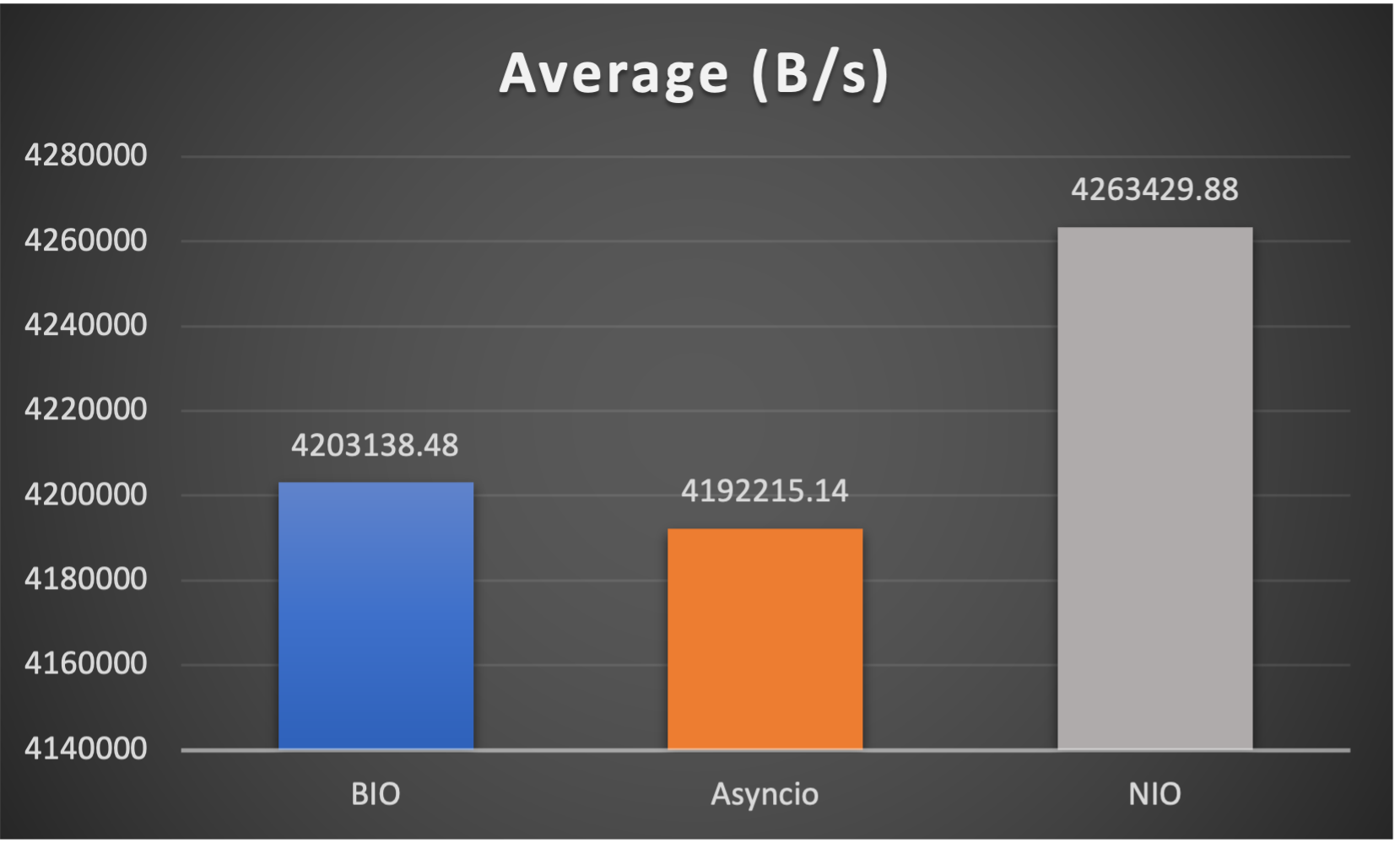

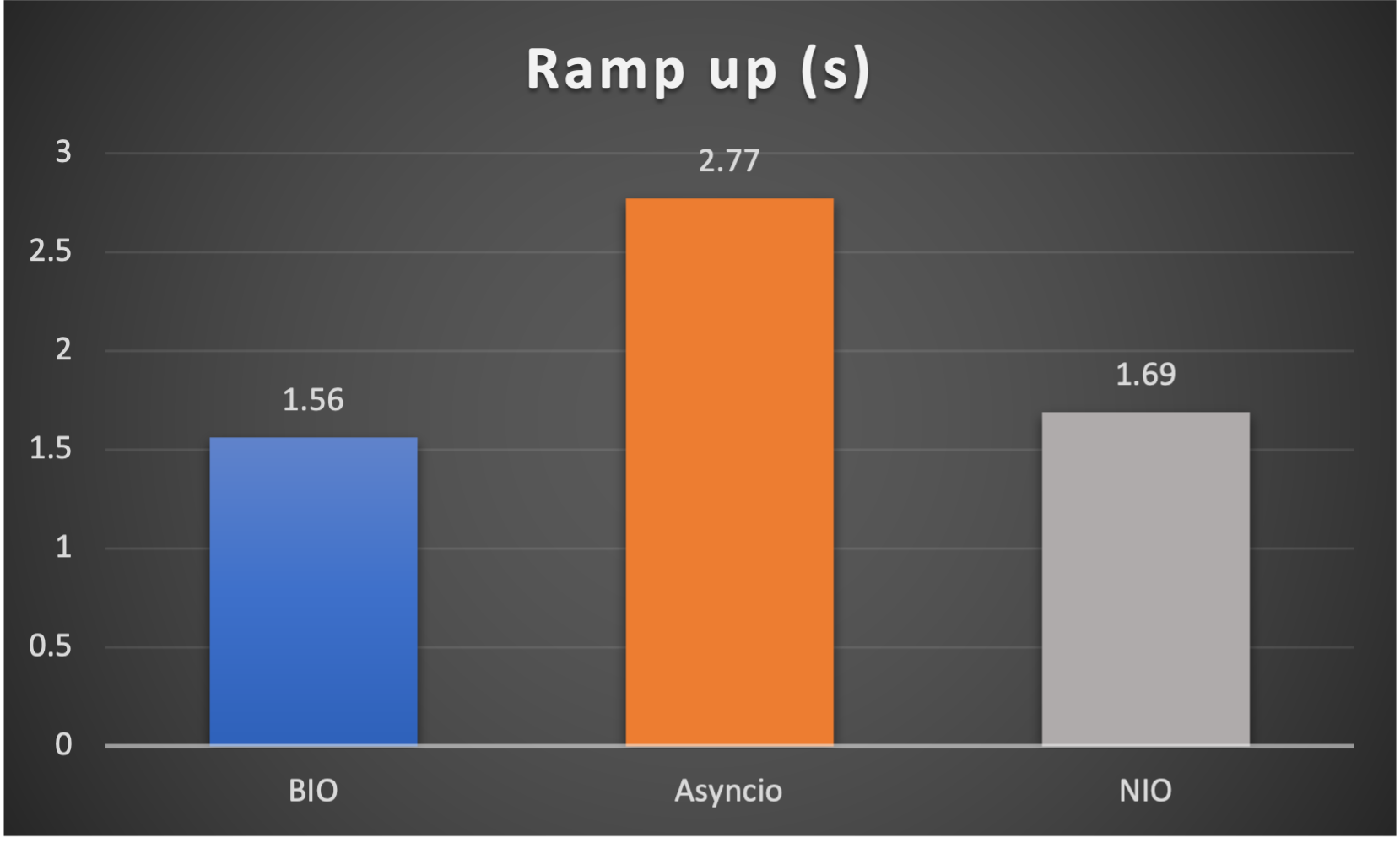

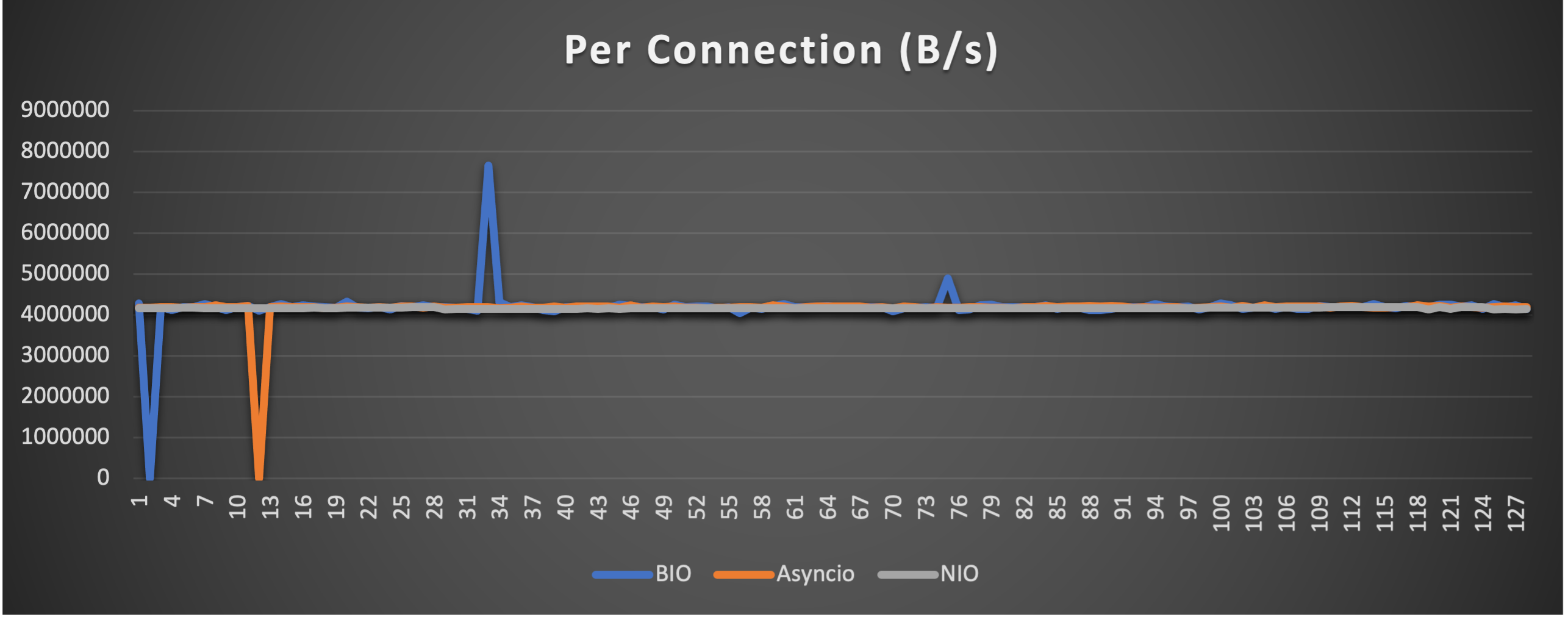

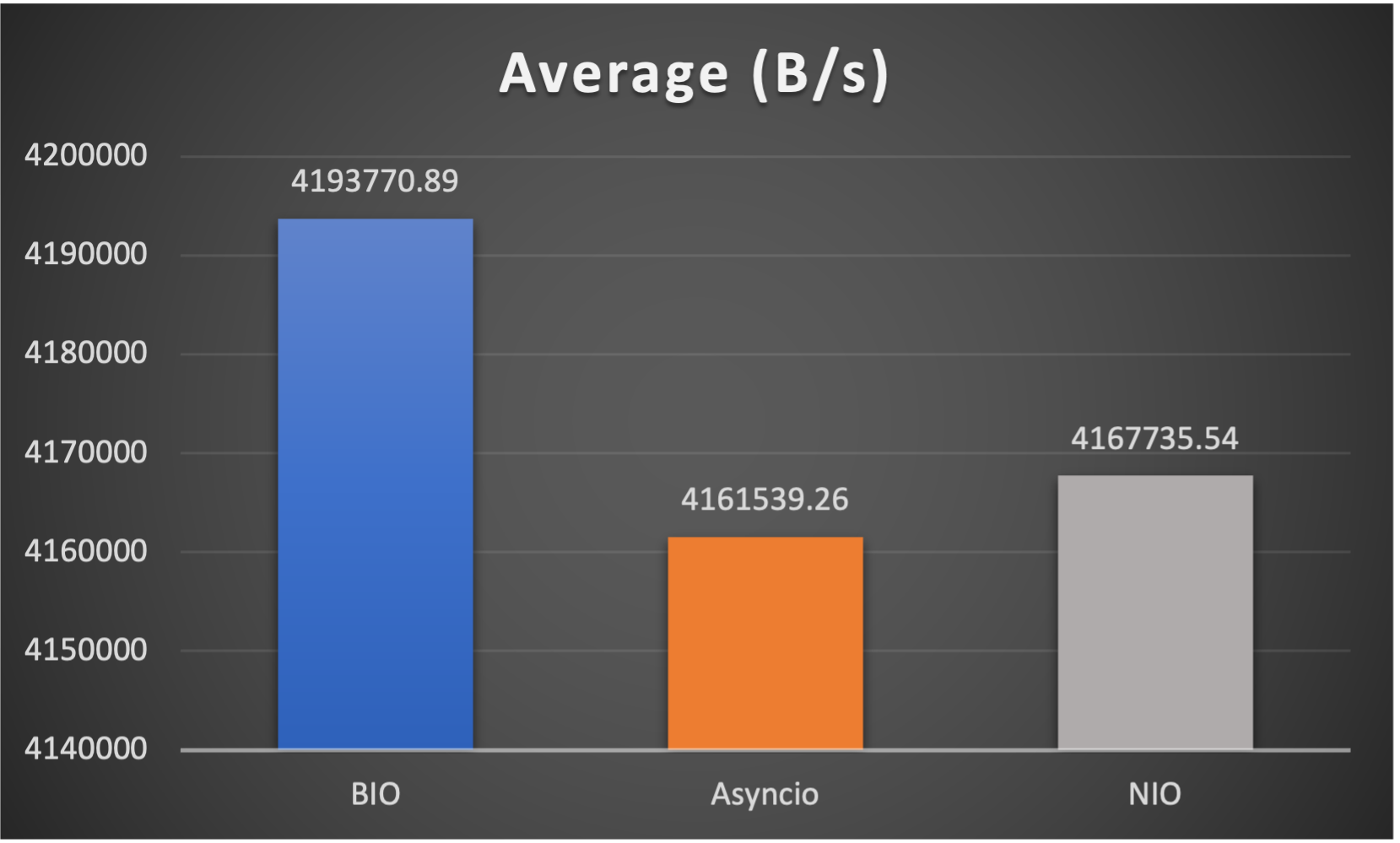

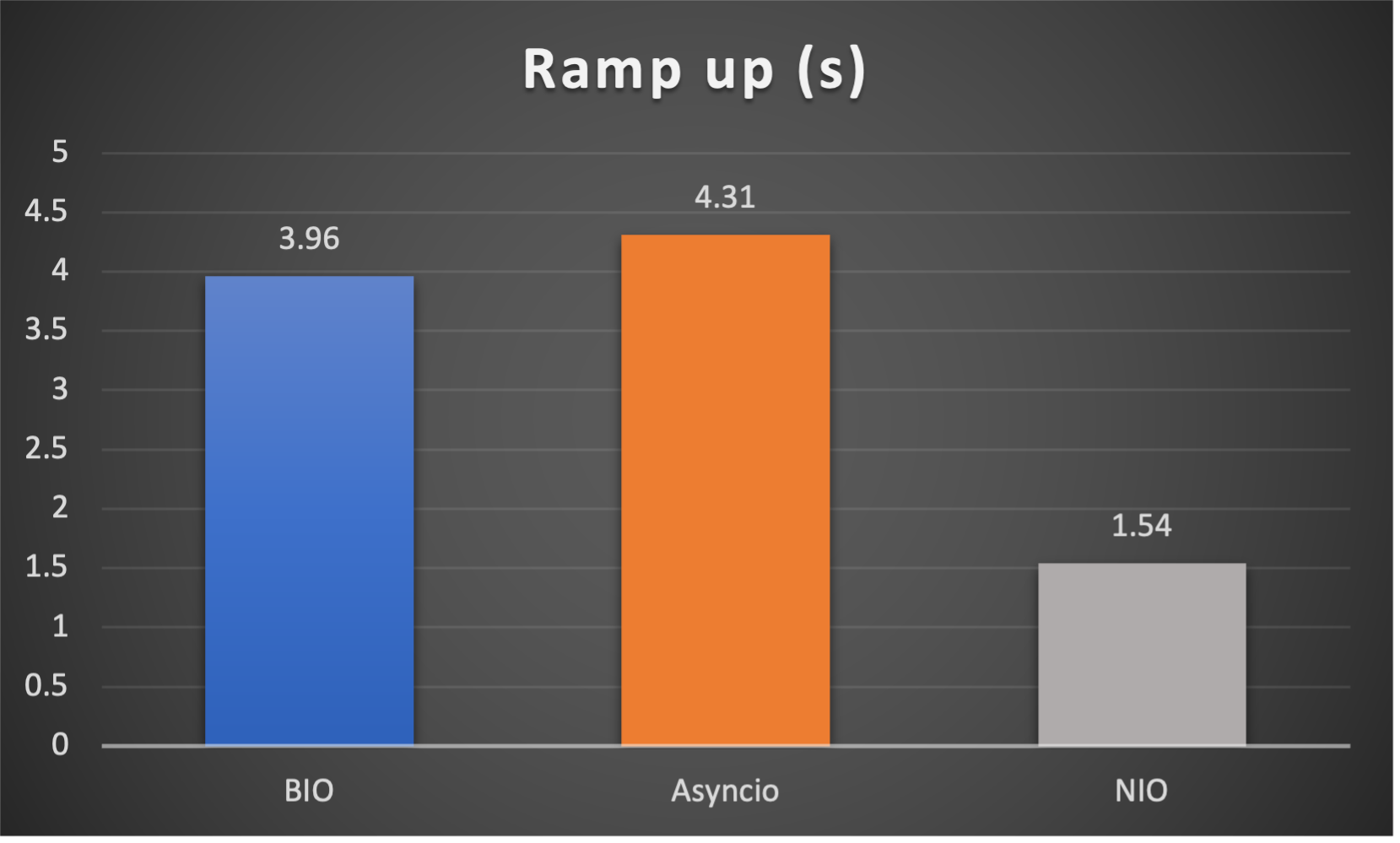

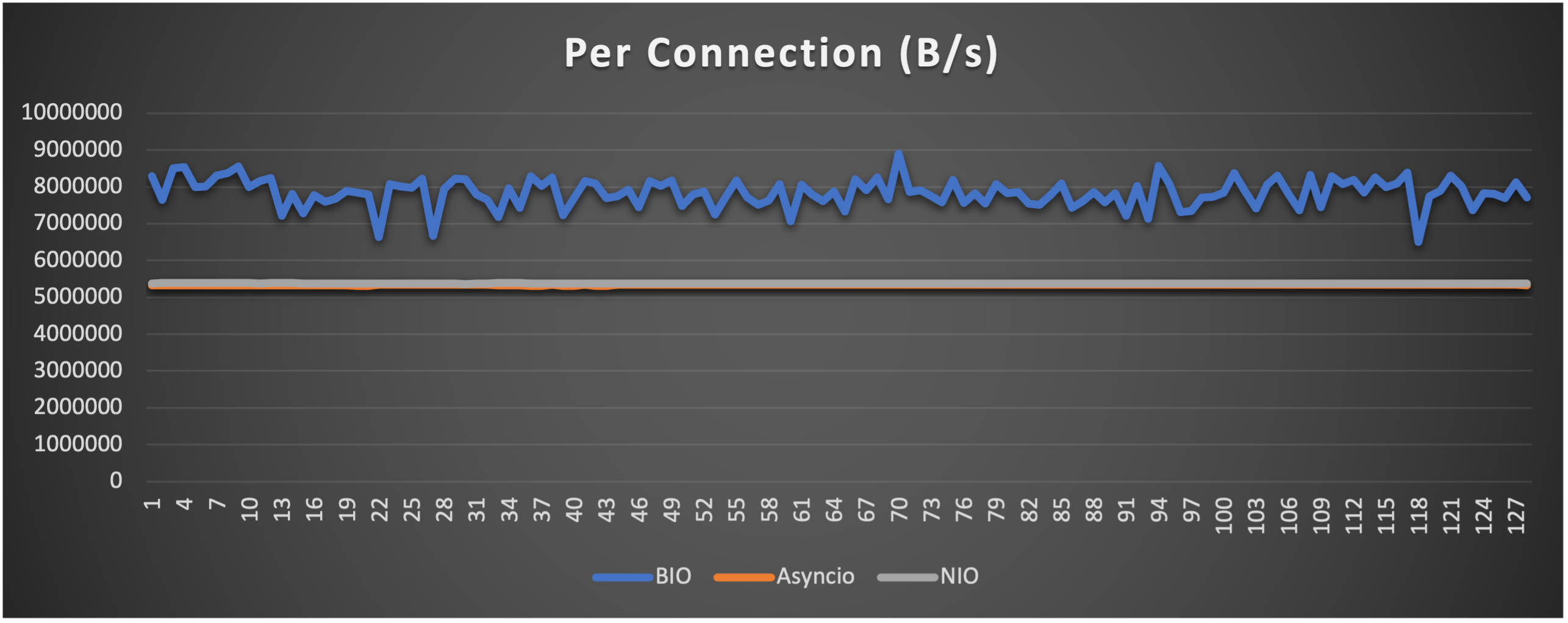

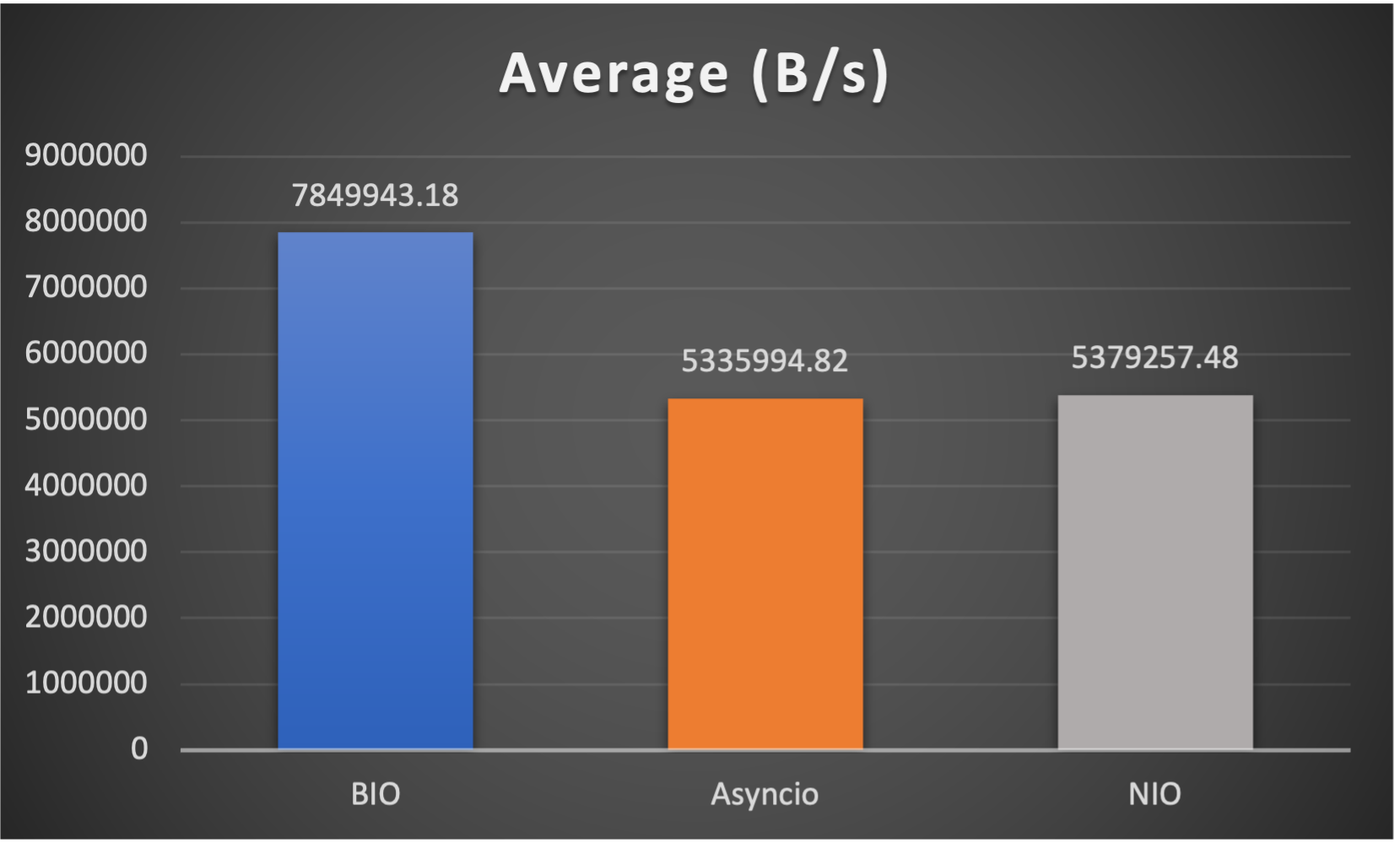

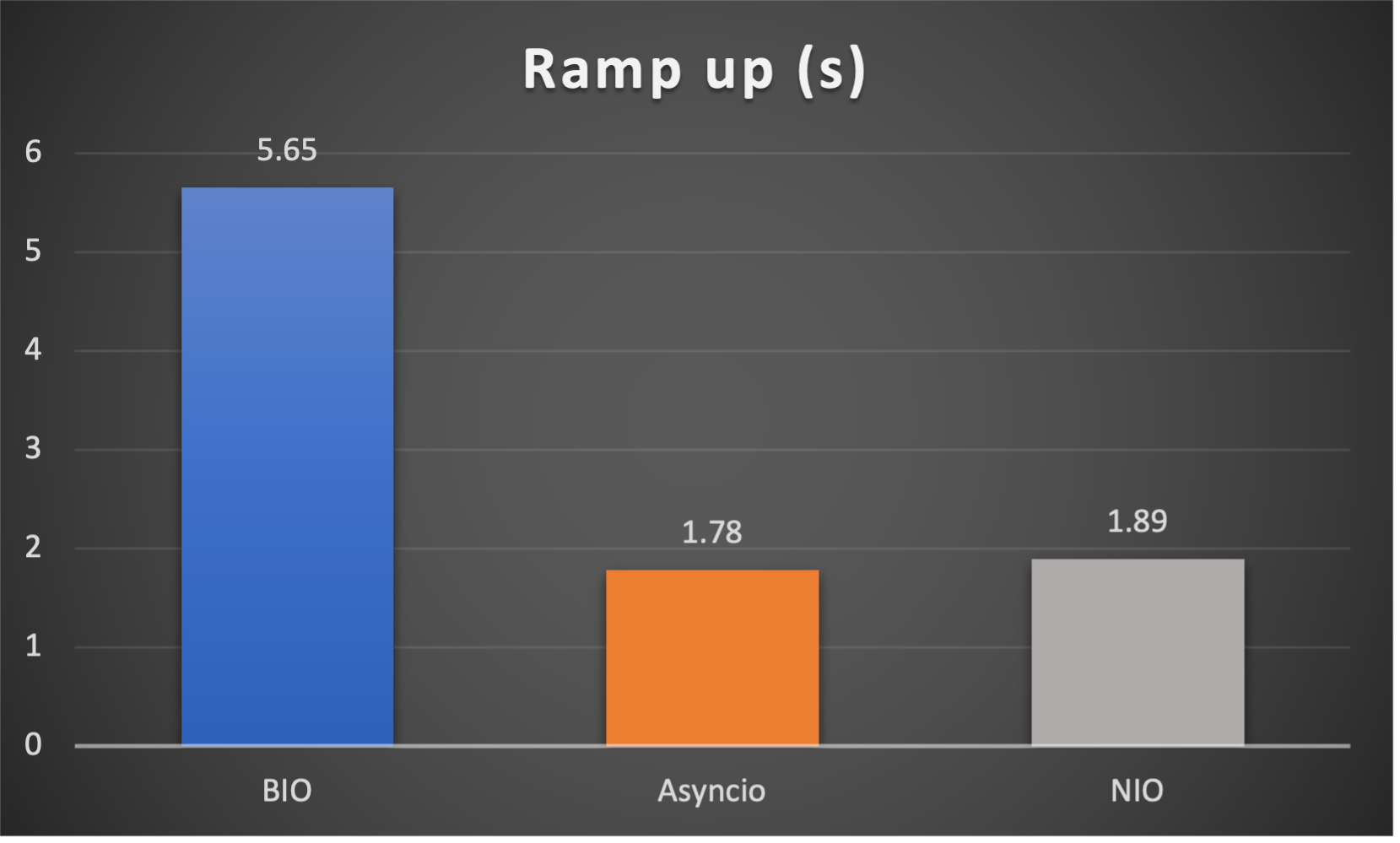

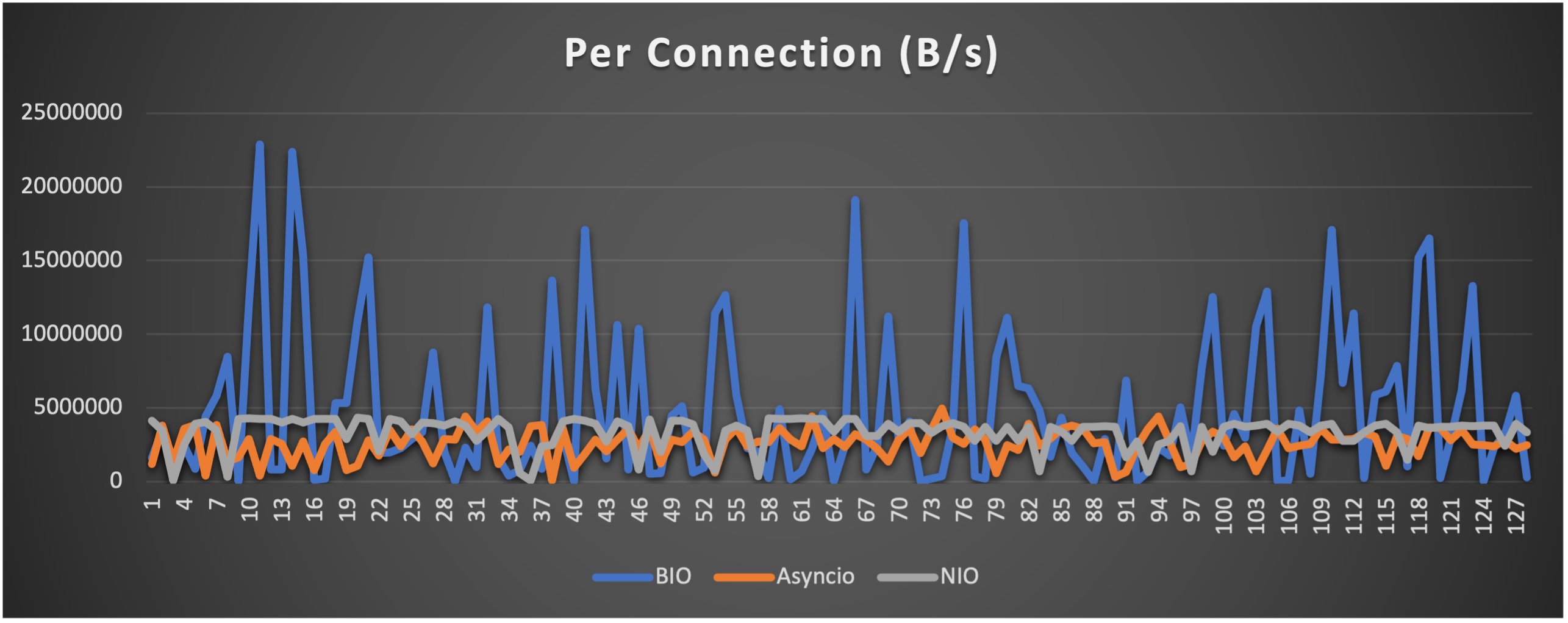

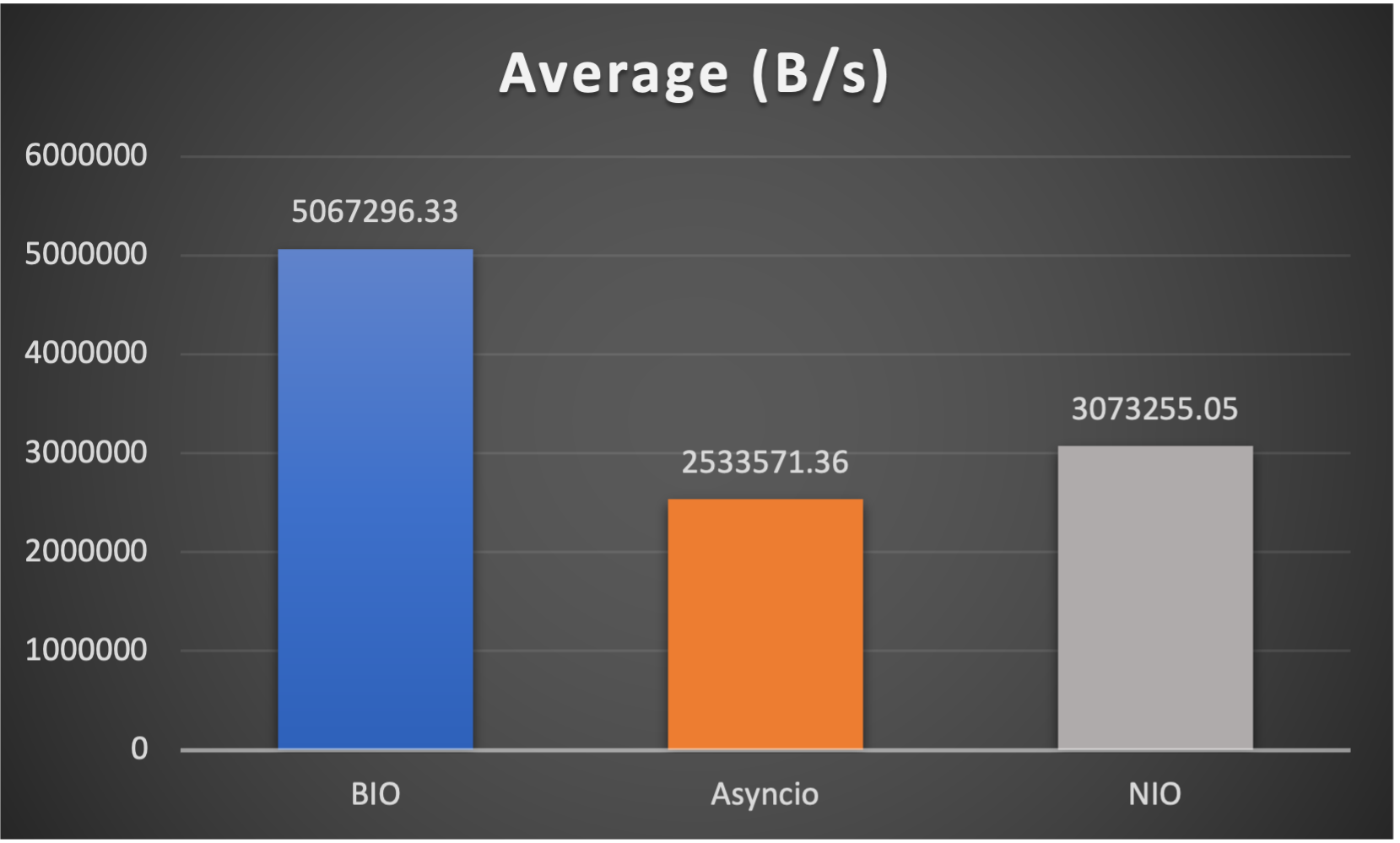

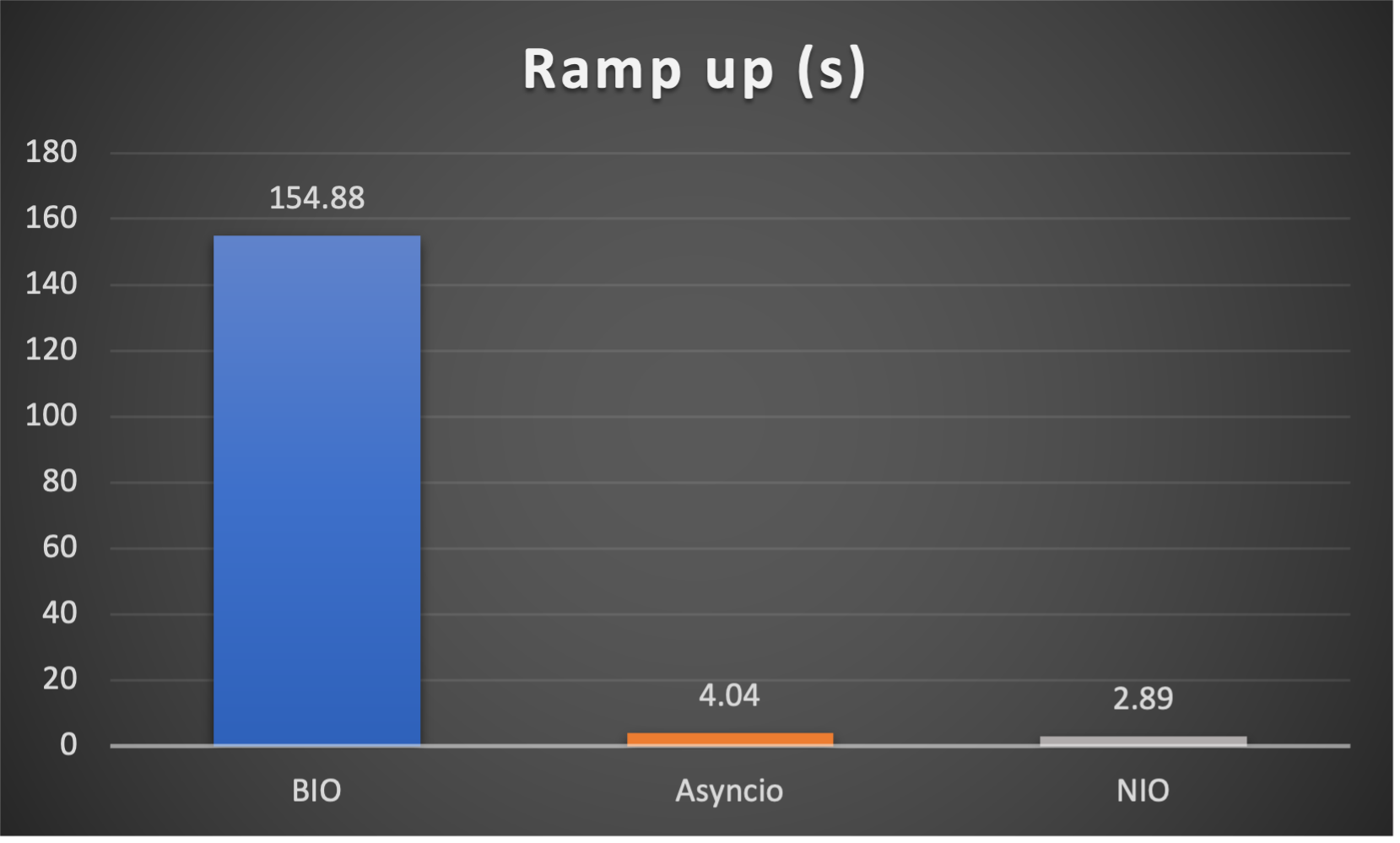

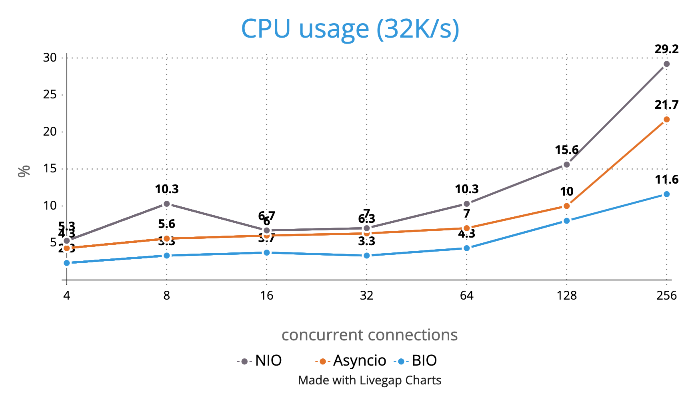

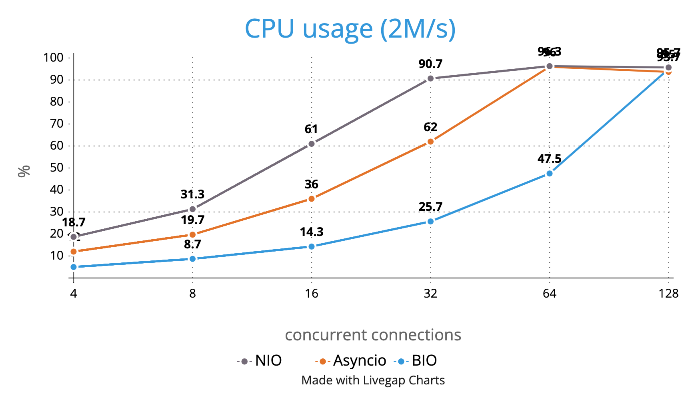

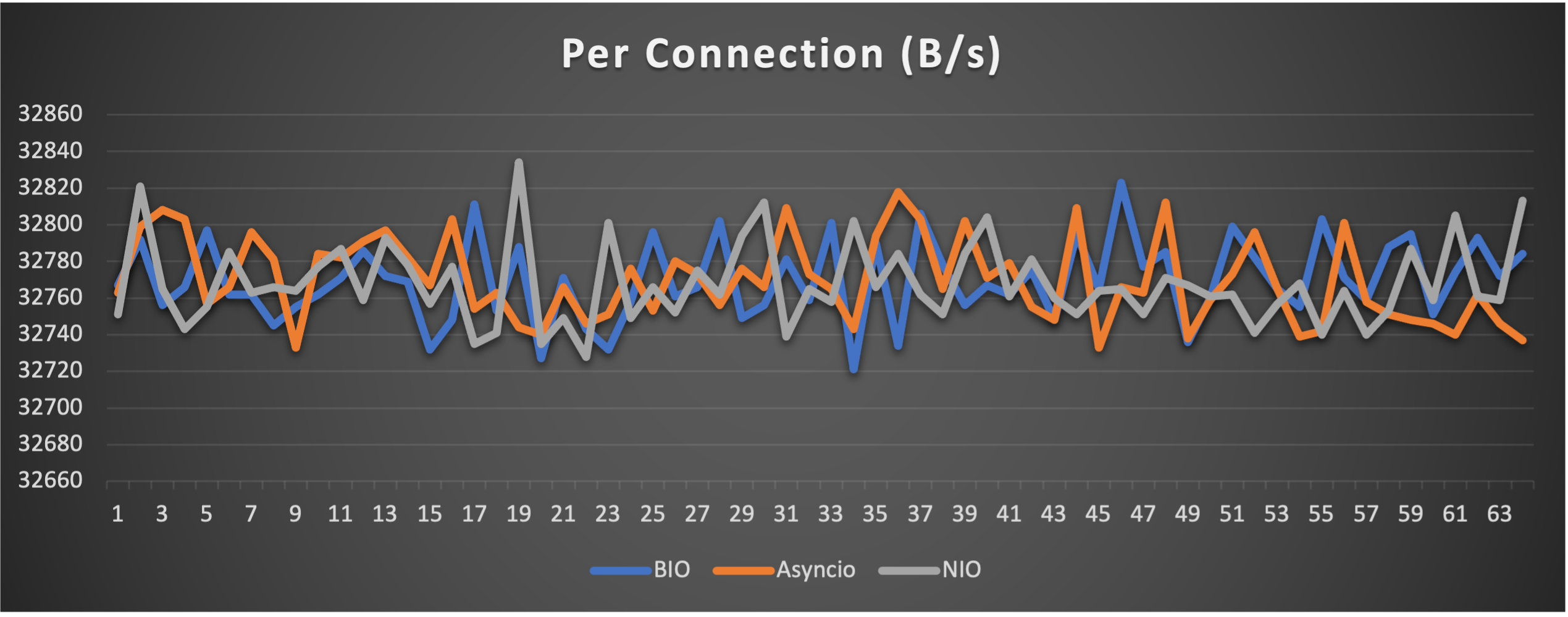

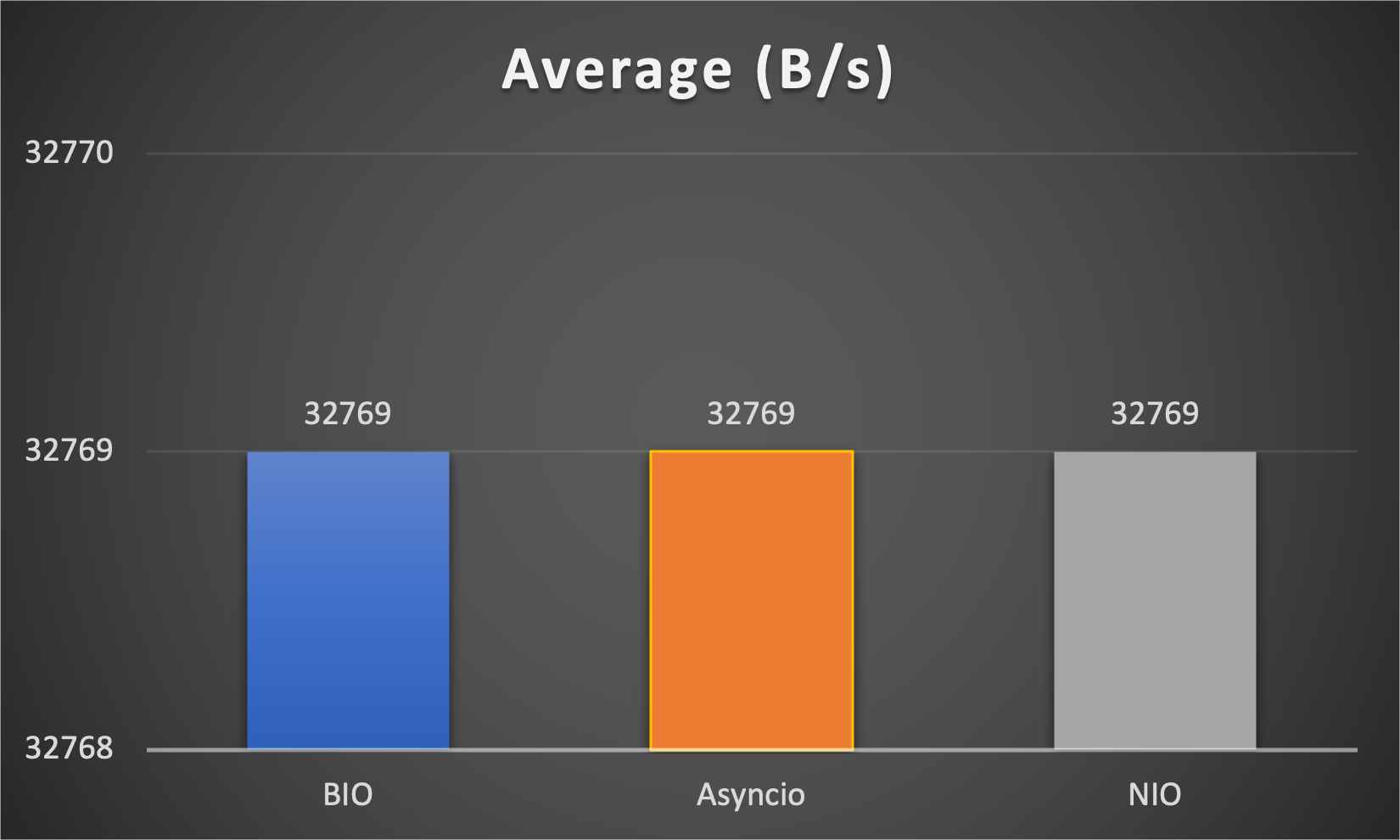

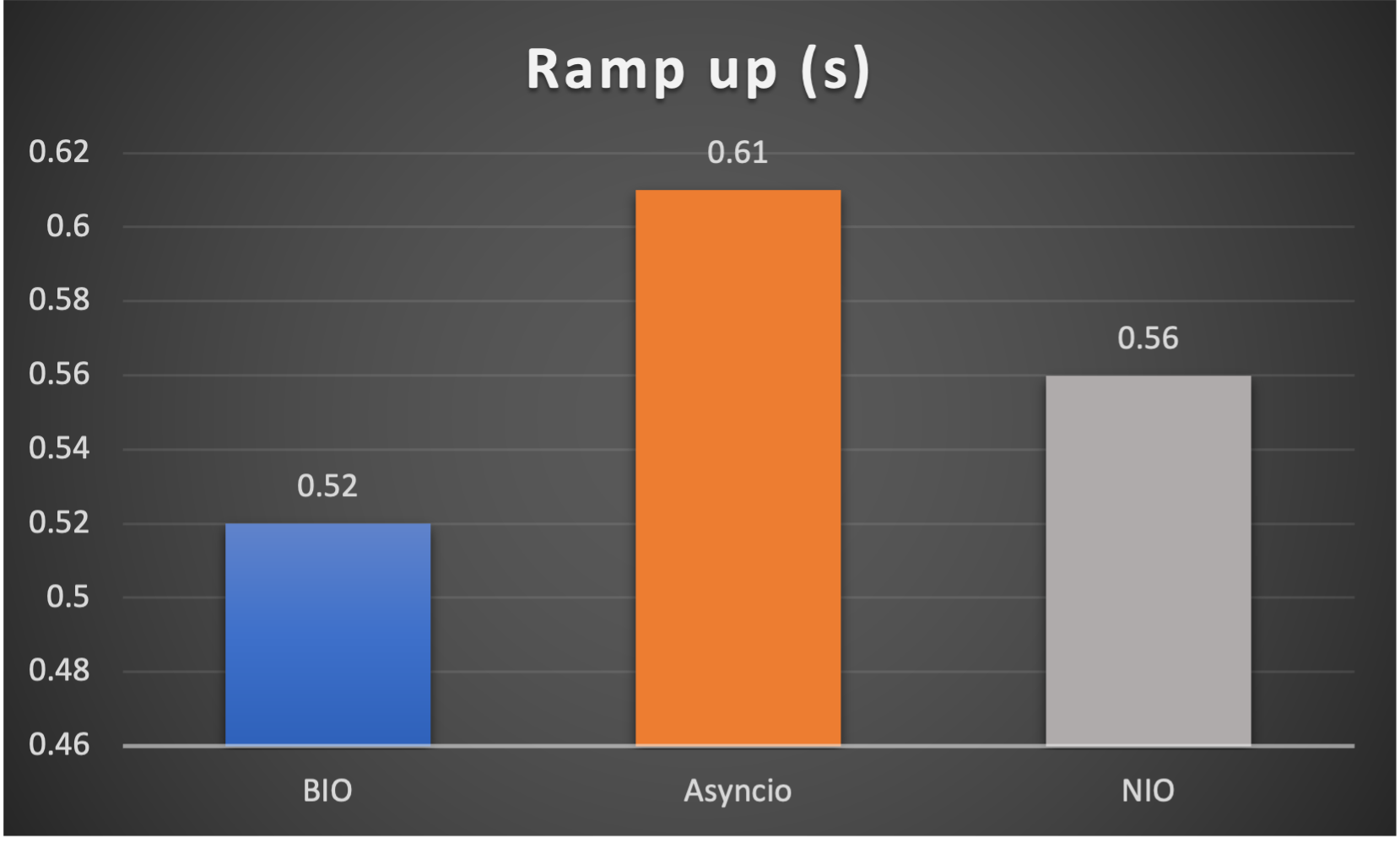

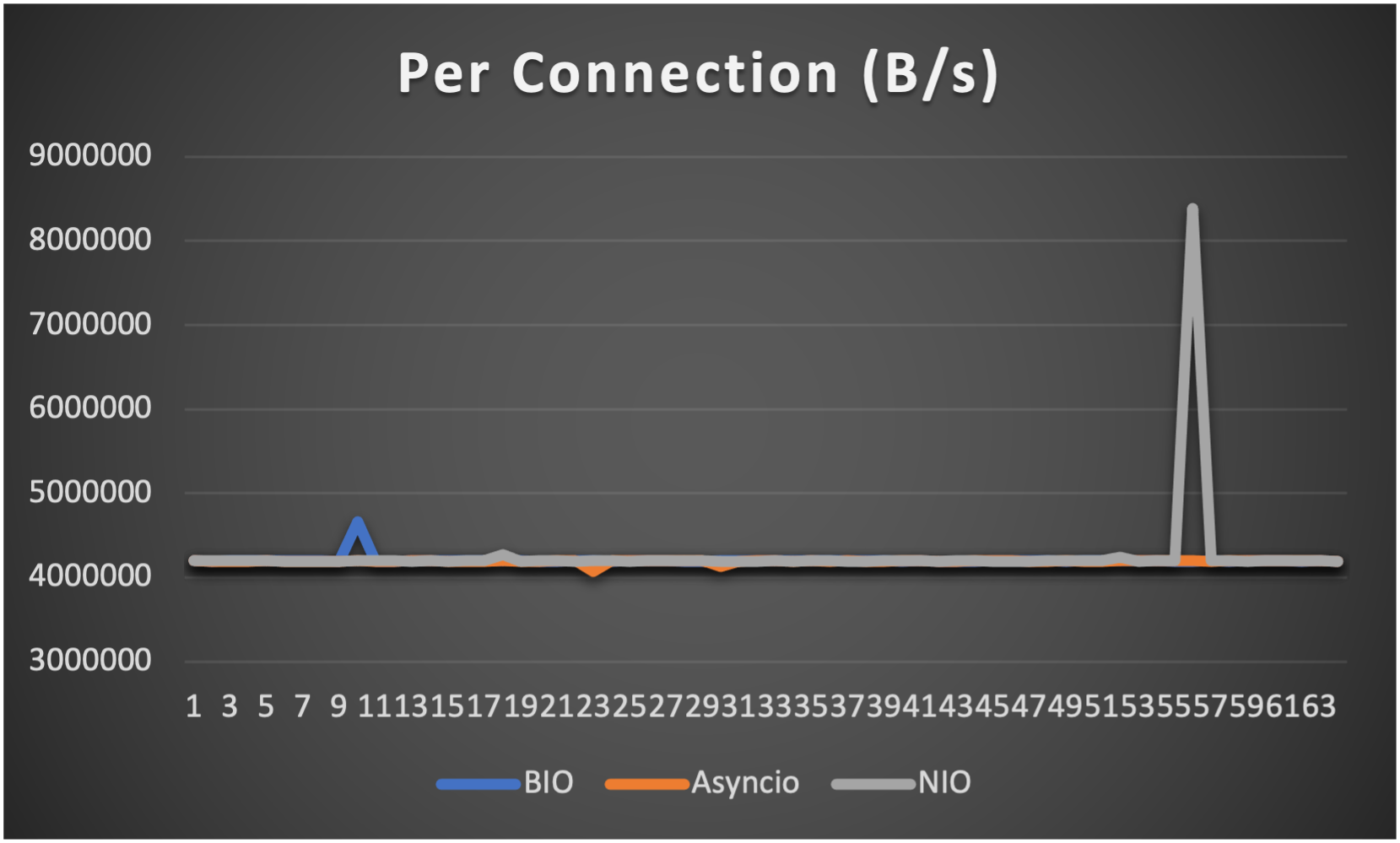

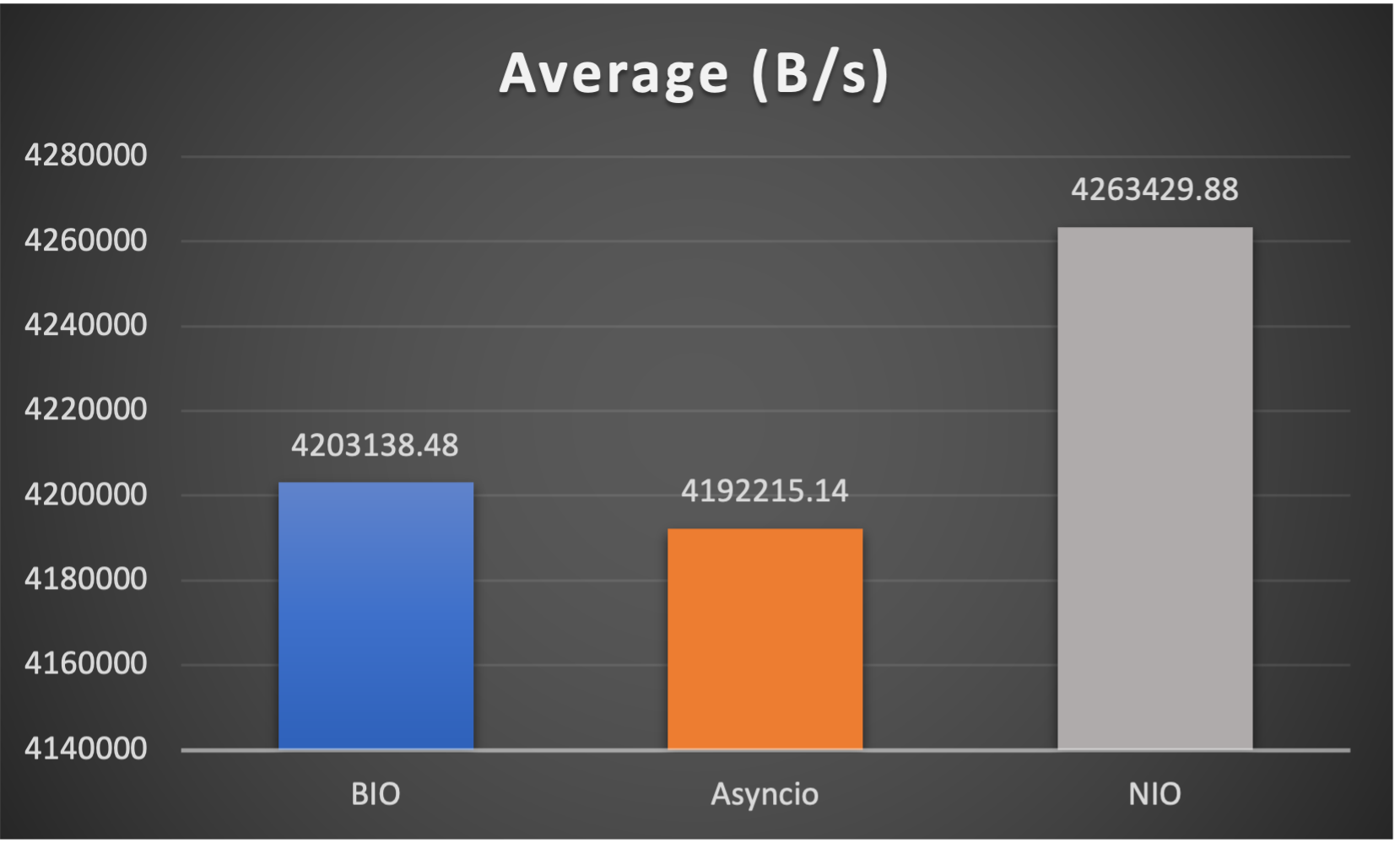

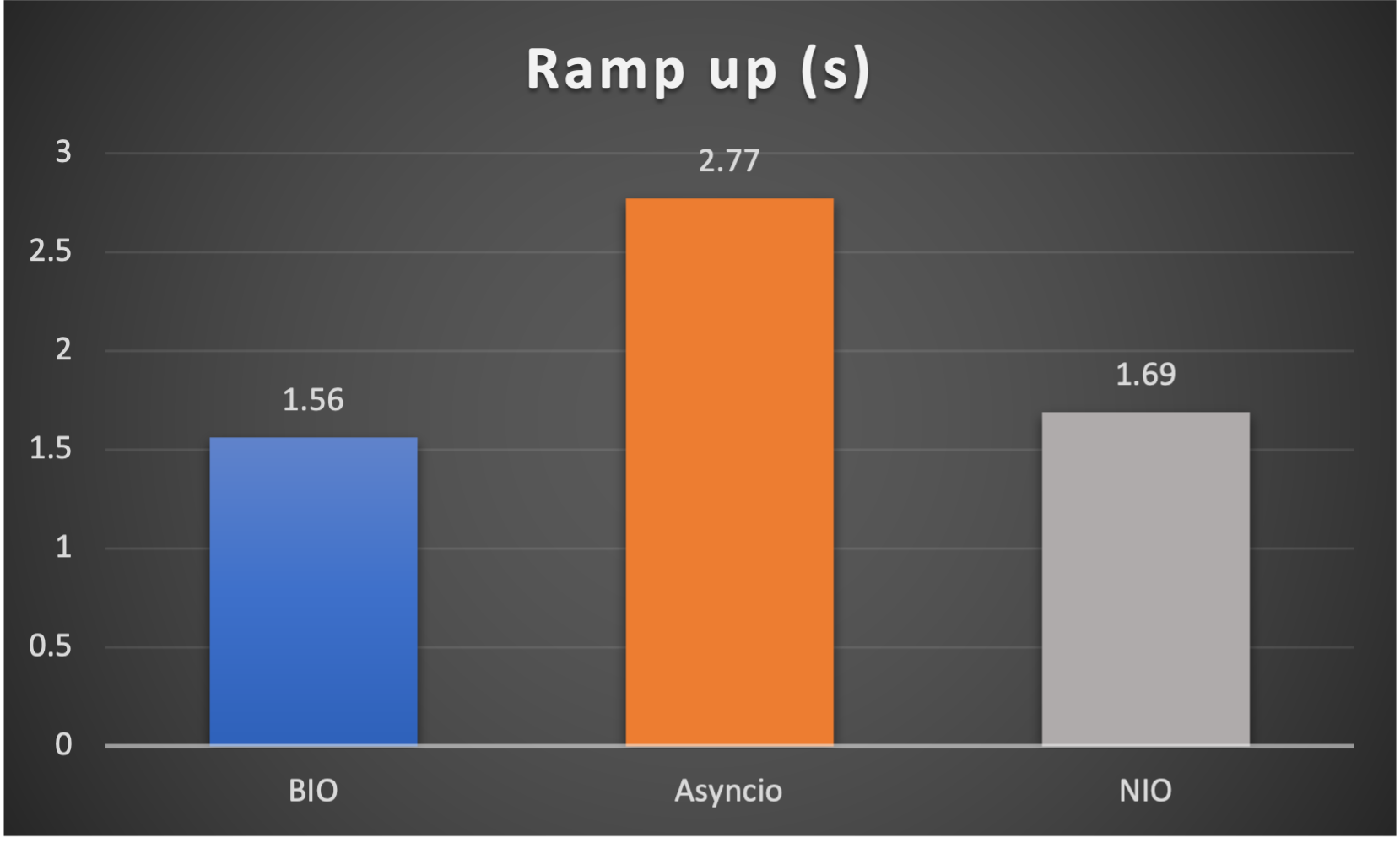

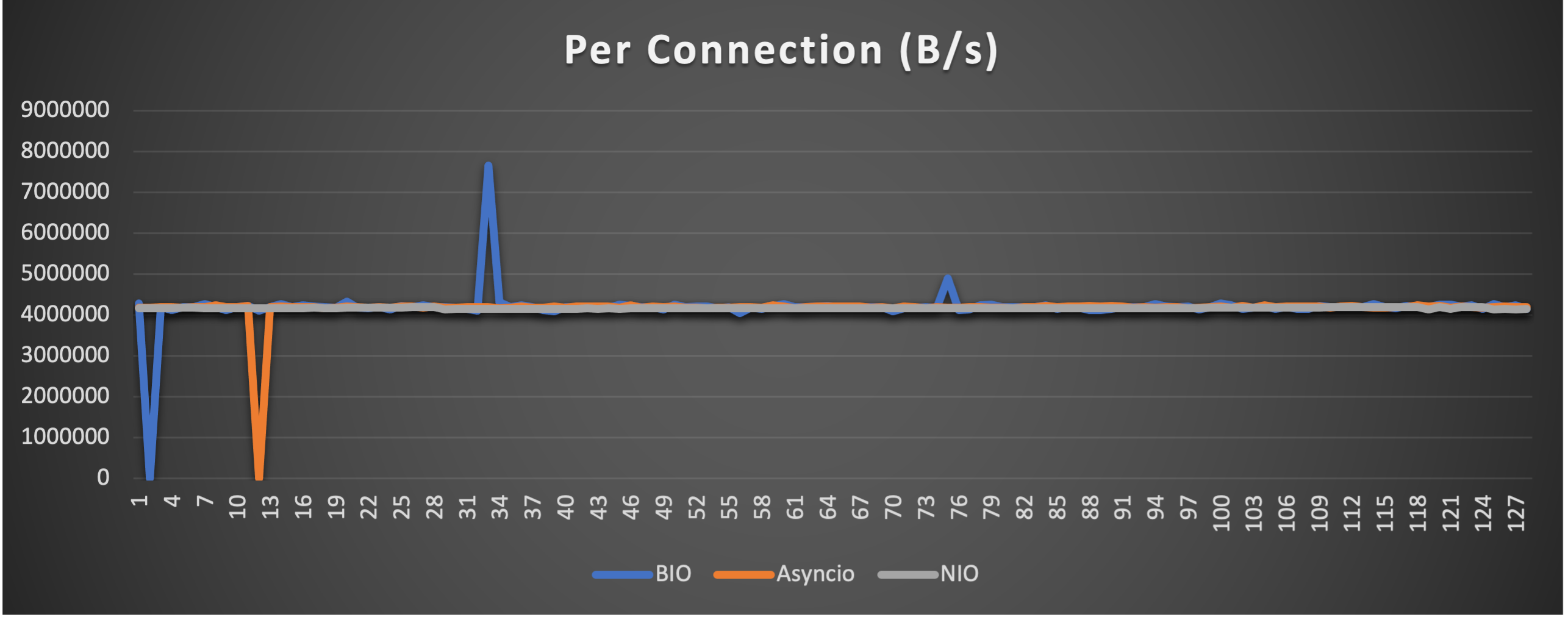

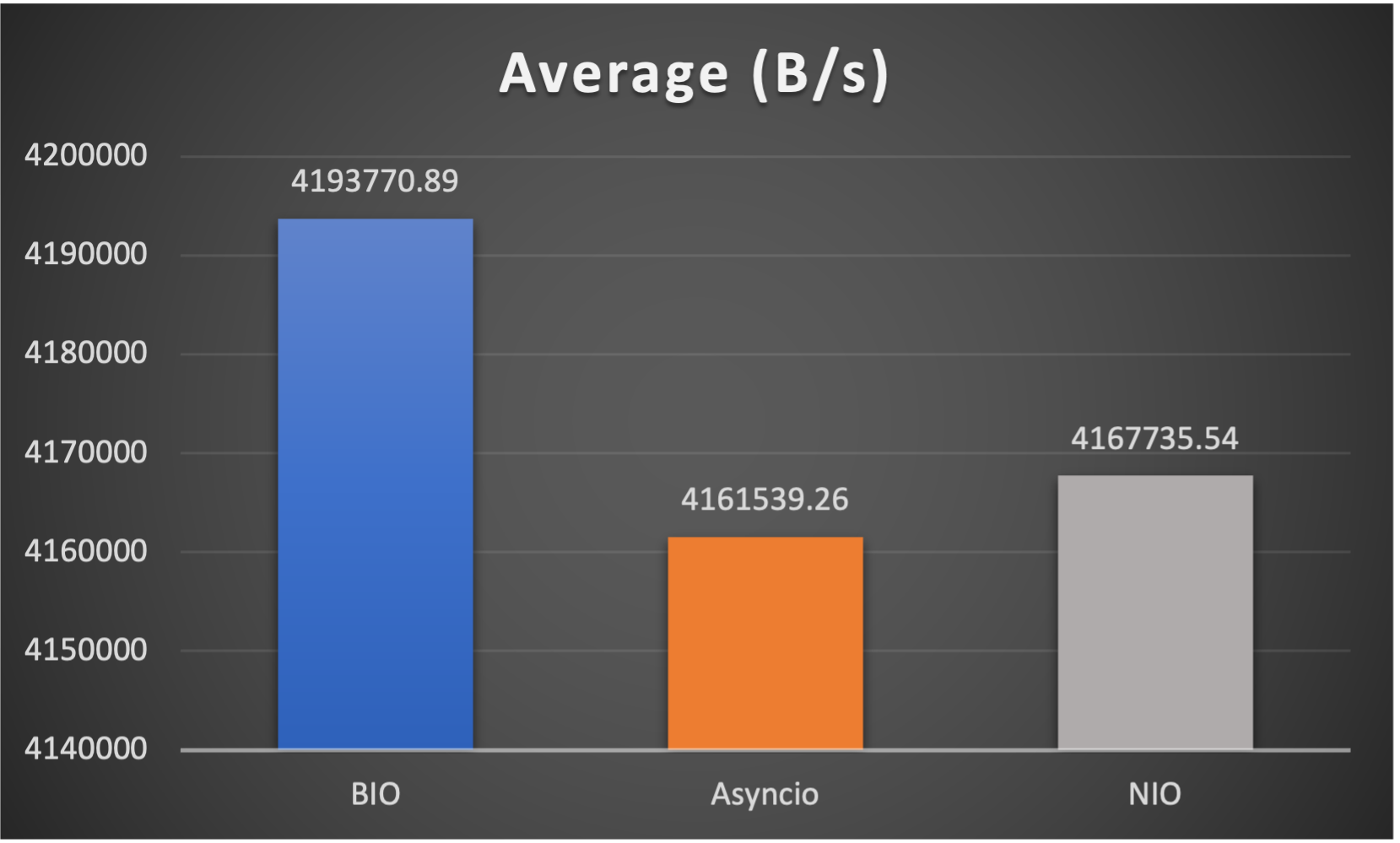

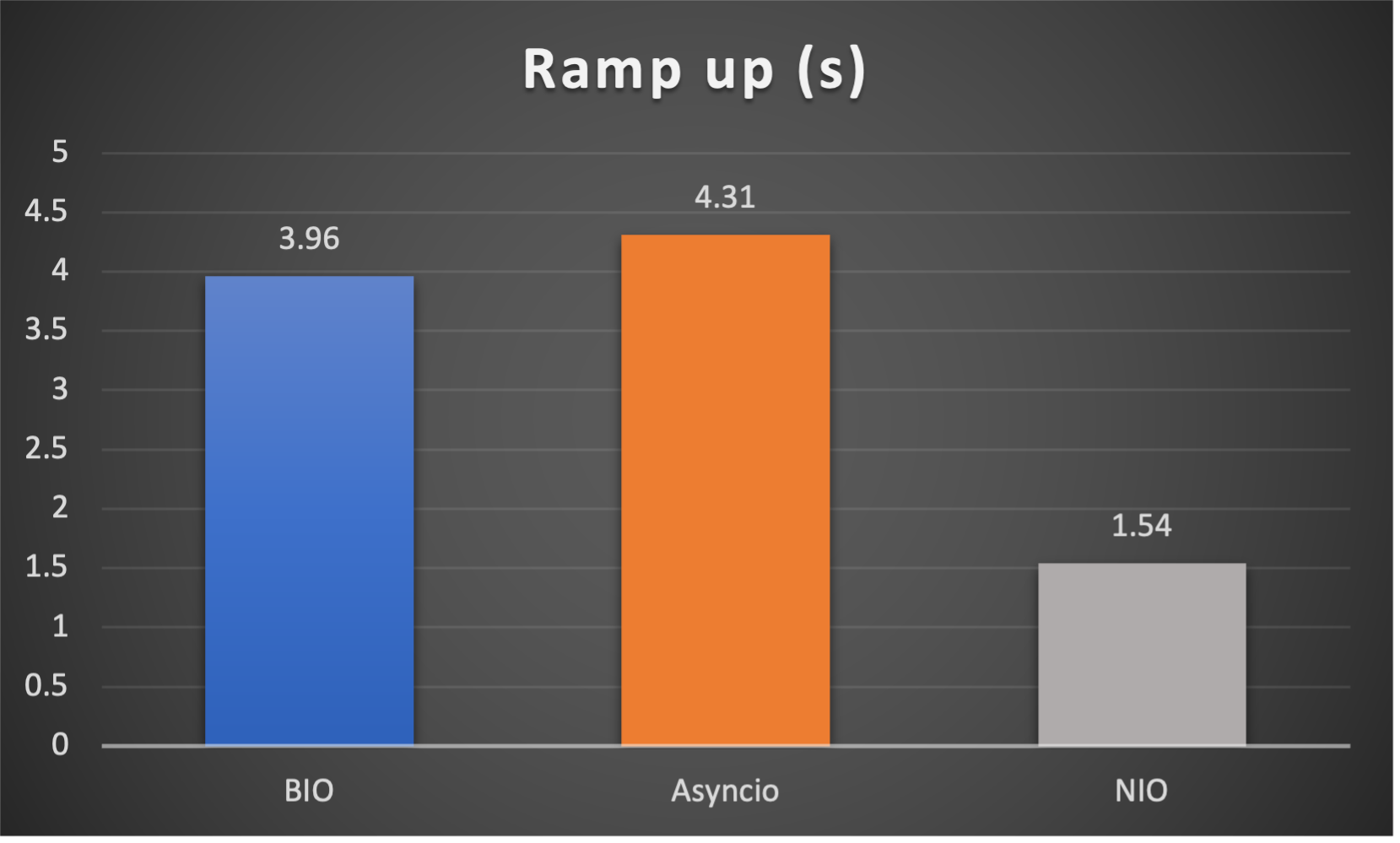

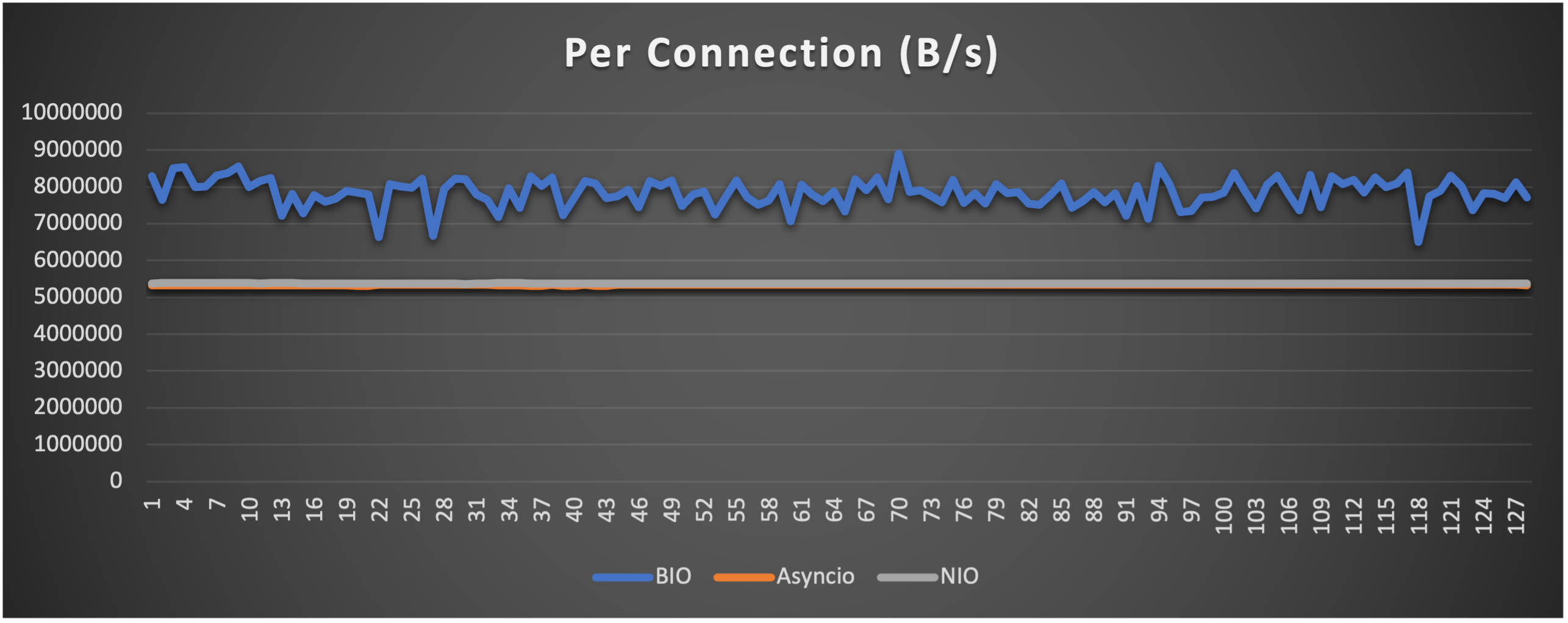

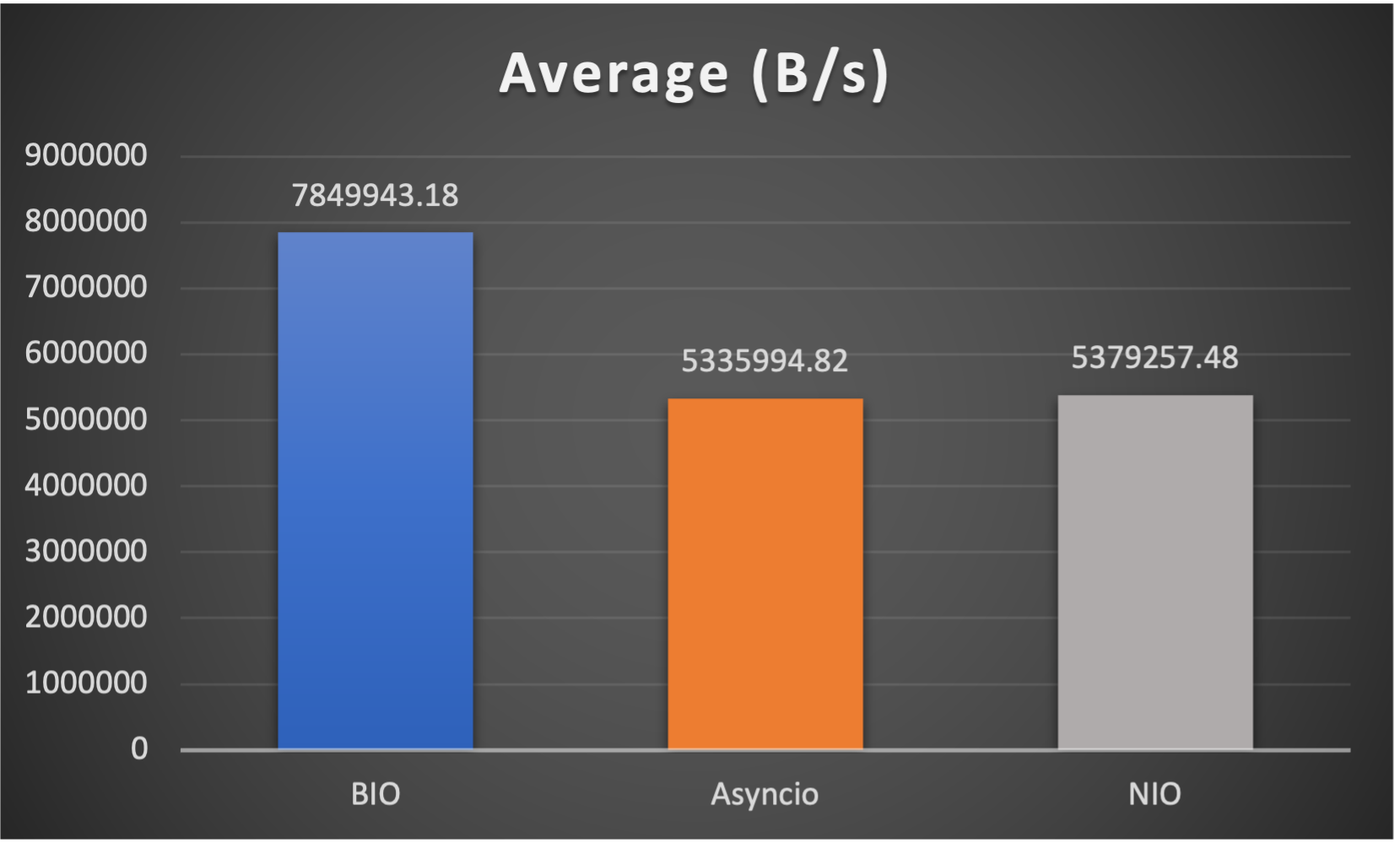

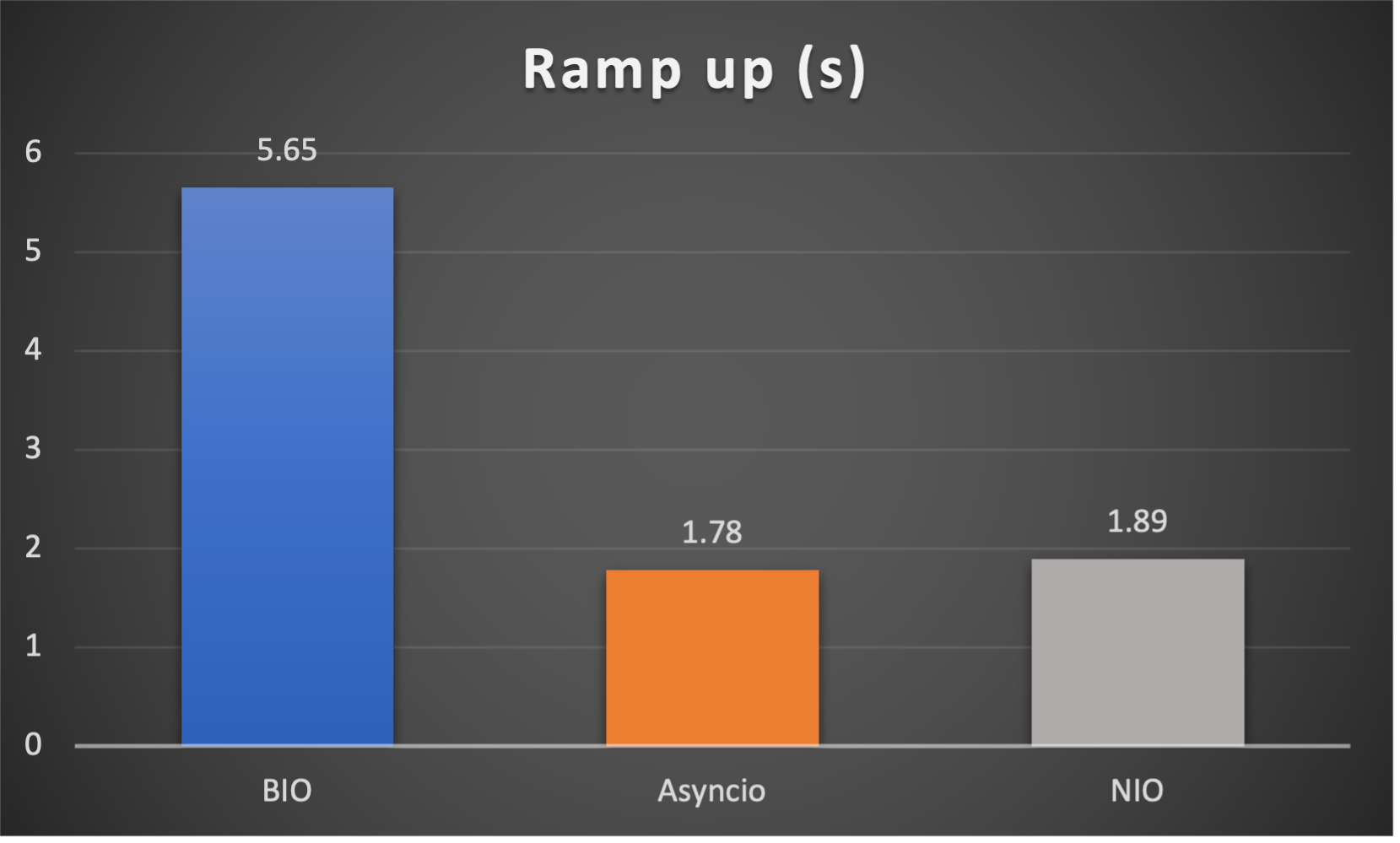

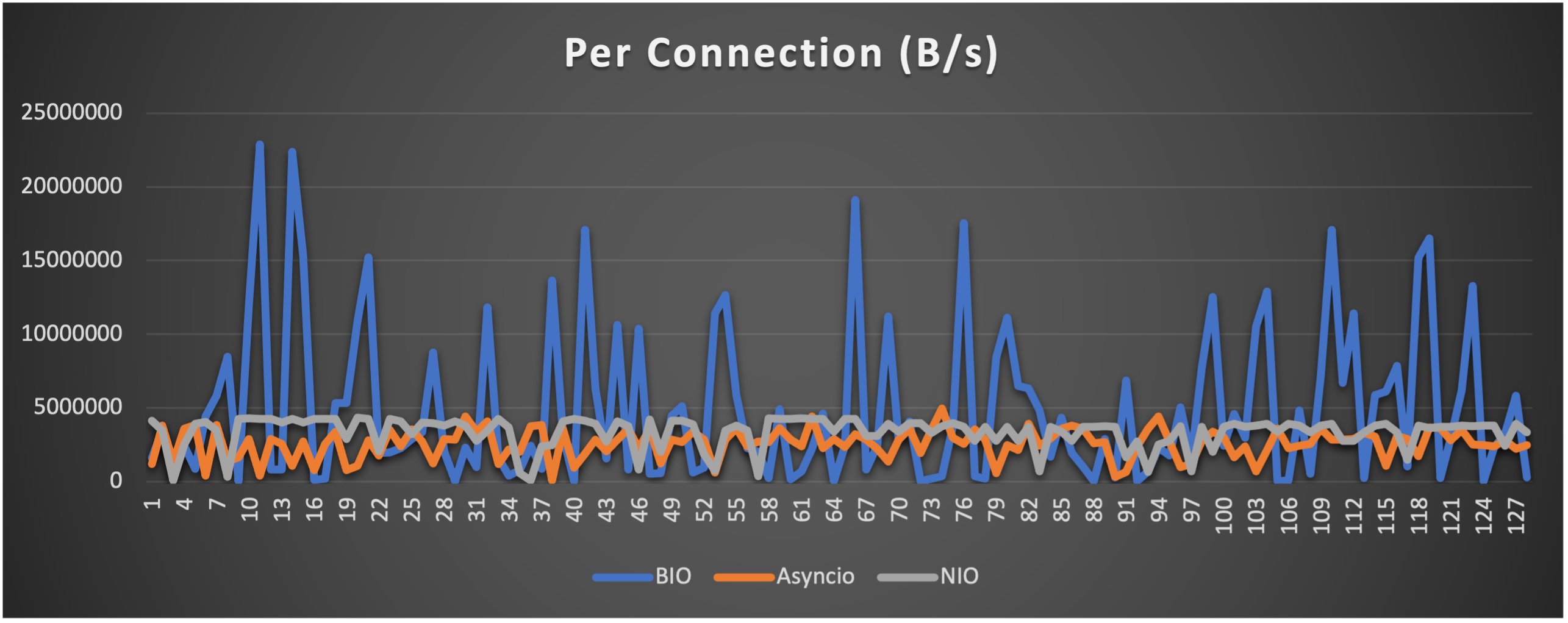

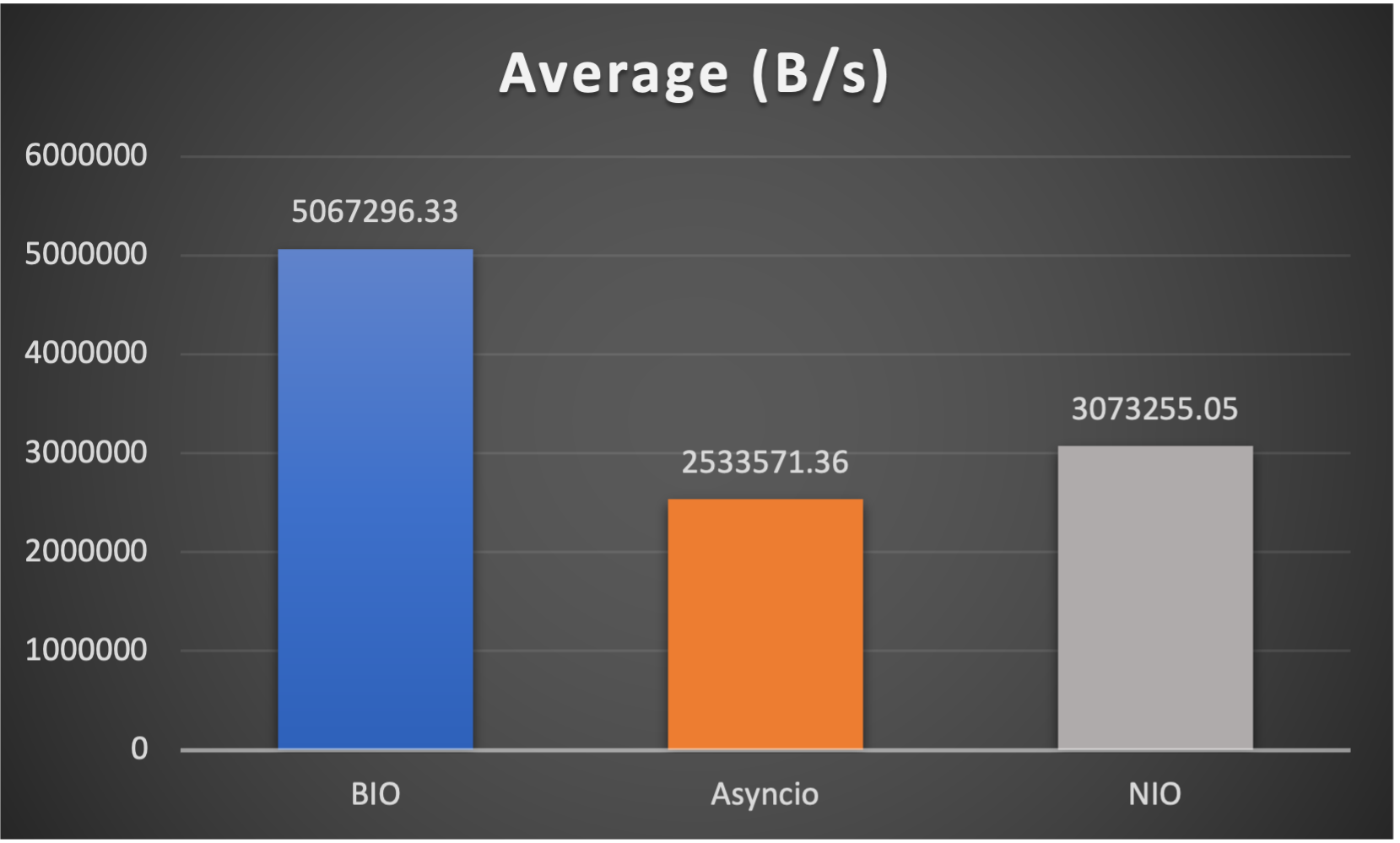

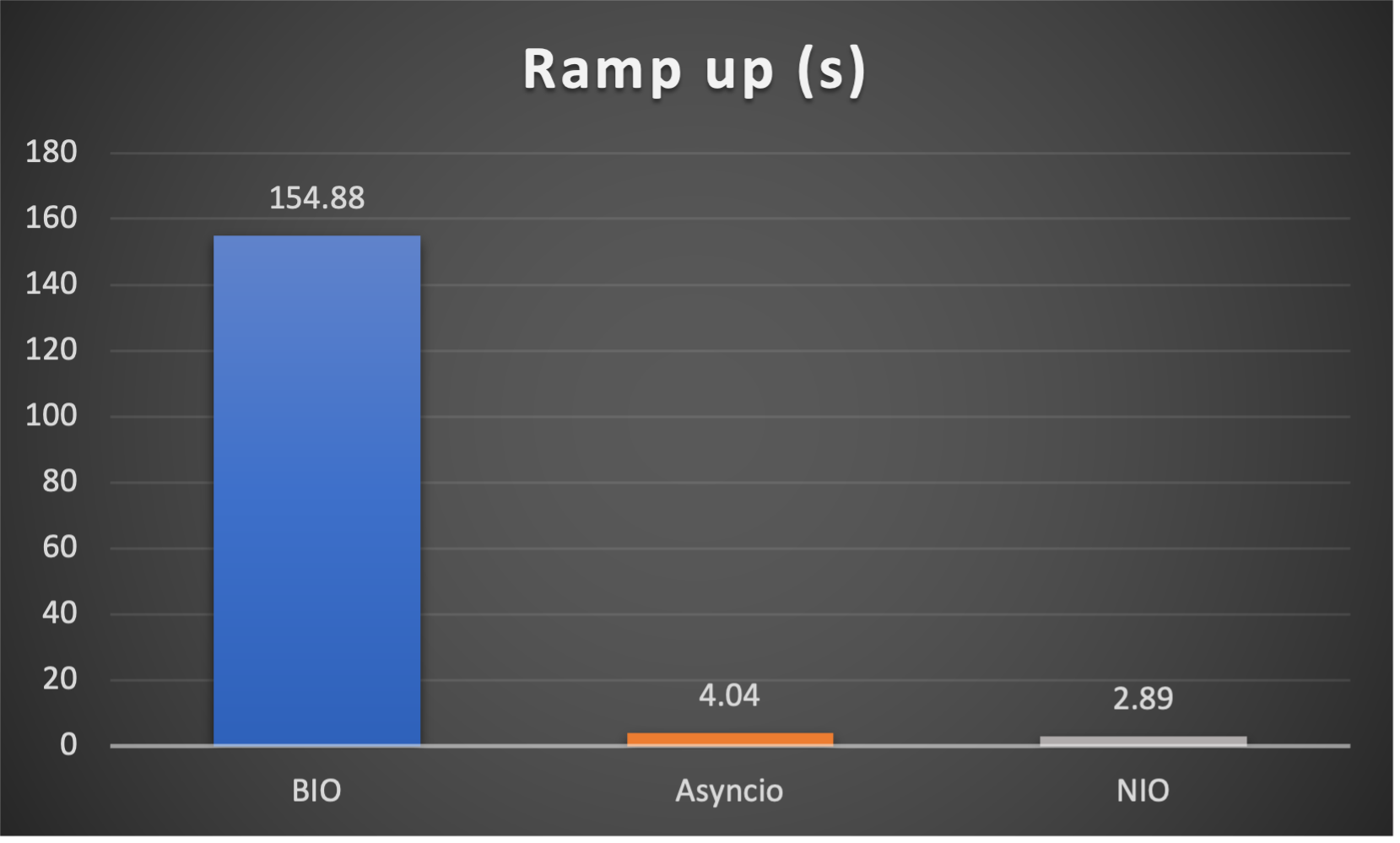

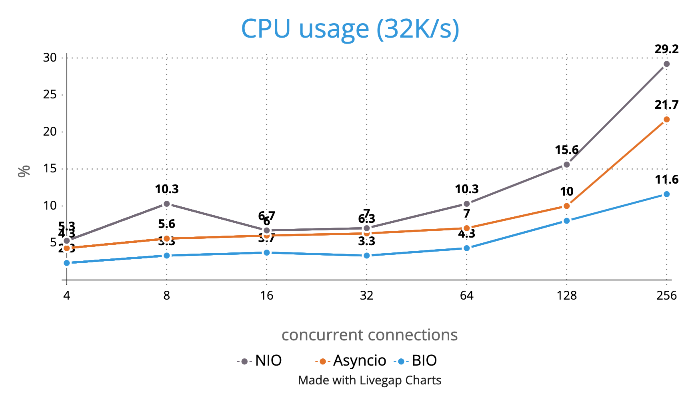

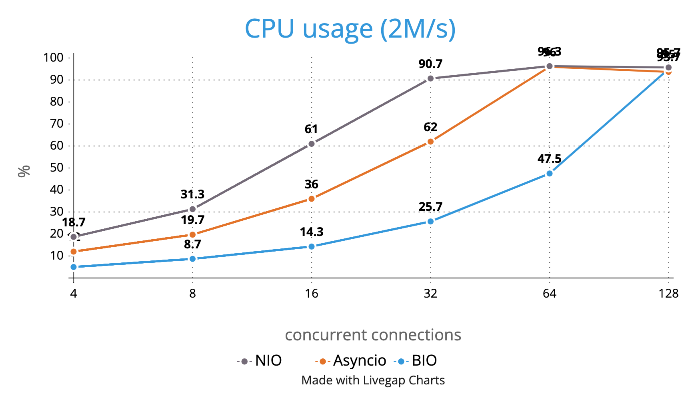

## Benchmark

The test uses an echo client/server setup on a 1-core 2.0 GHz Intel(R) Xeon(R) Platinum 8452Y machine with 4 GB of memory, running Ubuntu 22.04.

(See `bm_echo_server.py` for details.)

Three methods are tested:

1. BIO (traditional thread-based blocking IO)
2. Asyncio (Python built-in async IO)
3. NIO (py-netty with 1 eventloop)

Three metrics are collected:

1. Throughput (of each connection) to indicate overall stability
2. Average throughput (of all connections) to indicate overall performance
3. Ramp-up time (seconds elapsed after all connections are established) to indicate responsiveness
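The first two metrics can be derived from per-connection byte counts and durations. The sketch below is not taken from `bm_echo_server.py`: the function name `throughput_stats`, the sample format, and the use of the standard deviation as a stability indicator are all assumptions made for illustration.

```python
from statistics import mean, pstdev


def throughput_stats(samples):
    """samples: iterable of (bytes_transferred, elapsed_seconds), one per connection."""
    per_connection = [nbytes / seconds for nbytes, seconds in samples if seconds > 0]
    if not per_connection:
        raise ValueError("no usable samples")
    return {
        "per_connection": per_connection,   # bytes/s of each connection
        "average": mean(per_connection),    # overall performance
        "spread": pstdev(per_connection),   # lower spread suggests more stable throughput
    }
```

For example, two connections that each ran for one second and moved 1000 and 3000 bytes give an average of 2000 bytes/s.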

### Case 1: Concurrent 64 connections with 32K/s

### Case 2: Concurrent 64 connections with 4M/s

### Case 3: Concurrent 128 connections with 4M/s

### Case 4: Concurrent 128 connections with 8M/s

### Case 5: Concurrent 256 connections with 8M/s

### CPU Usage

## Caveats

- No pipeline, supports only one handler FOR NOW

- No batteries-included codecs FOR NOW

- No pool or refcnt for bytes buffer, bytes objects are created and consumed at your disposal
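Because there are no built-in codecs, message framing is left to the handler. A minimal sketch of newline-delimited framing follows; `LineFramer` is a hypothetical helper that is not part of py-netty, and it assumes the handler receives arbitrary chunks of `bytes` in `channel_read`.

```python
class LineFramer:
    """Hypothetical helper: reassembles delimiter-terminated frames from byte chunks."""

    def __init__(self, delimiter=b"\n"):
        self._delimiter = delimiter
        self._buffer = bytearray()

    def feed(self, data):
        """Append a chunk and return the list of complete frames found so far."""
        self._buffer.extend(data)
        # Everything before the last delimiter is complete; the tail is kept.
        *frames, rest = bytes(self._buffer).split(self._delimiter)
        self._buffer = bytearray(rest)
        return frames
```

A handler could keep one `LineFramer` per connection and call `feed(buffer)` inside `channel_read`, processing each returned frame in turn.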

Raw data

{

"_id": null,

"home_page": "https://github.com/ruanhao/py-netty",

"name": "py-netty",

"maintainer": null,

"docs_url": null,

"requires_python": "<4,>=3.7",

"maintainer_email": null,

"keywords": "network, tcp, non-blocking, epoll, nio, netty",

"author": "Hao Ruan",

"author_email": "ruanhao1116@gmail.com",

"download_url": "https://files.pythonhosted.org/packages/20/17/8c2bec14398c2ad3cf92e1d265e9f8866efb13333d6ef8ec92c22280aee9/py-netty-1.0.6.tar.gz",

"platform": null,

"bugtrack_url": null,

"license": "MIT",

"summary": "TCP framework in flavor of Netty",

"version": "1.0.6",

"project_urls": {

"Homepage": "https://github.com/ruanhao/py-netty"

},

"split_keywords": [

"network",

" tcp",

" non-blocking",

" epoll",

" nio",

" netty"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "aa9a47aebe4b610cd368ab580ad387a4e24976a5d9388e3c7046c01b874c8883",

"md5": "23f52a17822fac0d20c1449ec5fe90e1",

"sha256": "2fe4c87db2f7921eb1a1a017dd240e13c4cb0d440f80b631e89f012e0428b413"

},

"downloads": -1,

"filename": "py_netty-1.0.6-py3-none-any.whl",

"has_sig": false,

"md5_digest": "23f52a17822fac0d20c1449ec5fe90e1",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": "<4,>=3.7",

"size": 17884,

"upload_time": "2024-07-29T13:19:13",

"upload_time_iso_8601": "2024-07-29T13:19:13.681111Z",

"url": "https://files.pythonhosted.org/packages/aa/9a/47aebe4b610cd368ab580ad387a4e24976a5d9388e3c7046c01b874c8883/py_netty-1.0.6-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "20178c2bec14398c2ad3cf92e1d265e9f8866efb13333d6ef8ec92c22280aee9",

"md5": "5fb30fa32080fed120d57b96a008b3ce",

"sha256": "5972fcee30eff9725679c393bbe6c172932a5dfaa559ddab8eb987e2b358d84c"

},

"downloads": -1,

"filename": "py-netty-1.0.6.tar.gz",

"has_sig": false,

"md5_digest": "5fb30fa32080fed120d57b96a008b3ce",

"packagetype": "sdist",

"python_version": "source",

"requires_python": "<4,>=3.7",

"size": 17873,

"upload_time": "2024-07-29T13:19:15",

"upload_time_iso_8601": "2024-07-29T13:19:15.320165Z",

"url": "https://files.pythonhosted.org/packages/20/17/8c2bec14398c2ad3cf92e1d265e9f8866efb13333d6ef8ec92c22280aee9/py-netty-1.0.6.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-07-29 13:19:15",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "ruanhao",

"github_project": "py-netty",

"travis_ci": false,

"coveralls": false,

"github_actions": false,

"lcname": "py-netty"

}