| Field | Value |
| --- | --- |
| Name | pylangdb |
| Version | 0.3.6 |
| home_page | None |
| Summary | Comprehensive LangDB integration package with enhanced agent support, LLM integration, and distributed tracing. |
| upload_time | 2025-07-11 04:53:18 |
| maintainer | None |
| docs_url | None |
| author | None |
| requires_python | >=3.10 |
| license | None |
| requirements | No requirements were recorded. |

# LangDB - Multi-Framework AI Agent Tracing and Client


## Key Features

LangDB exposes **two complementary capabilities**:

1. **Chat Completions Client** – Call LLMs using the `LangDb` Python client. This works as a drop-in replacement for `openai.ChatCompletion` while adding automatic usage, cost and latency reporting.

2. **Agent Tracing** – Instrument your existing AI framework (ADK, LangChain, CrewAI, etc.) with a single `init()` call. All calls are routed through the LangDB collector and enriched with framework-specific metadata, which is then visible on the LangDB dashboard.

---

## ⚡ Quick Start (Chat Completions)

```bash

pip install pylangdb[client]

```

```python
from pylangdb.client import LangDb

# Initialize LangDB client
client = LangDb(api_key="your_api_key", project_id="your_project_id")

# Simple chat completion
resp = client.chat.completions.create(
    model="openai/gpt-4o-mini",
    messages=[{"role": "user", "content": "Hello!"}]
)
print(resp.choices[0].message.content)
```

---

## 🚀 Agent Tracing Quick Start

```bash

# Install the package with Google ADK support

pip install pylangdb[adk]

```

```python
# Import and initialize LangDB tracing
# First initialize LangDB before defining any agents
from pylangdb.adk import init
init()

import datetime
from zoneinfo import ZoneInfo
from google.adk.agents import Agent

def get_weather(city: str) -> dict:
    if city.lower() != "new york":
        return {"status": "error", "error_message": f"Weather information for '{city}' is not available."}
    return {"status": "success", "report": "The weather in New York is sunny with a temperature of 25 degrees Celsius (77 degrees Fahrenheit)."}

def get_current_time(city: str) -> dict:
    if city.lower() != "new york":
        return {"status": "error", "error_message": f"Sorry, I don't have timezone information for {city}."}
    tz = ZoneInfo("America/New_York")
    now = datetime.datetime.now(tz)
    return {"status": "success", "report": f'The current time in {city} is {now.strftime("%Y-%m-%d %H:%M:%S %Z%z")}'}

root_agent = Agent(
    name="weather_time_agent",
    model="gemini-2.0-flash",
    description="Agent to answer questions about the time and weather in a city.",
    instruction="You are a helpful agent who can answer user questions about the time and weather in a city.",
    tools=[get_weather, get_current_time],
)
```

> **Note:** Always initialize LangDB **before** importing any framework-specific classes to ensure proper instrumentation.

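One general Python reason why import order can matter is sketched below. It is not a description of pylangdb internals: `from module import name` copies the current binding, so a name imported before a patch is applied keeps pointing at the unpatched object. The module `fake_framework` and its `call_llm` function are hypothetical stand-ins created only for this illustration.

```python
import sys
import types

# Build a throwaway module standing in for a framework (hypothetical name).
fake_framework = types.ModuleType("fake_framework")
fake_framework.call_llm = lambda prompt: f"response to {prompt!r}"
sys.modules["fake_framework"] = fake_framework

# Imported BEFORE patching: this name is bound to the original function.
from fake_framework import call_llm as early_call_llm

traced_prompts = []

def patch_framework():
    """Replace call_llm on the module with a wrapper that records prompts."""
    original = fake_framework.call_llm

    def traced(prompt):
        traced_prompts.append(prompt)
        return original(prompt)

    fake_framework.call_llm = traced

patch_framework()

# Imported AFTER patching: this name is bound to the traced wrapper.
from fake_framework import call_llm as late_call_llm

early_call_llm("first")   # bypasses the wrapper, nothing is recorded
late_call_llm("second")   # goes through the wrapper
print(traced_prompts)
```

Only the call made through the late import is recorded, which is why calling `init()` first is the safer habit.
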
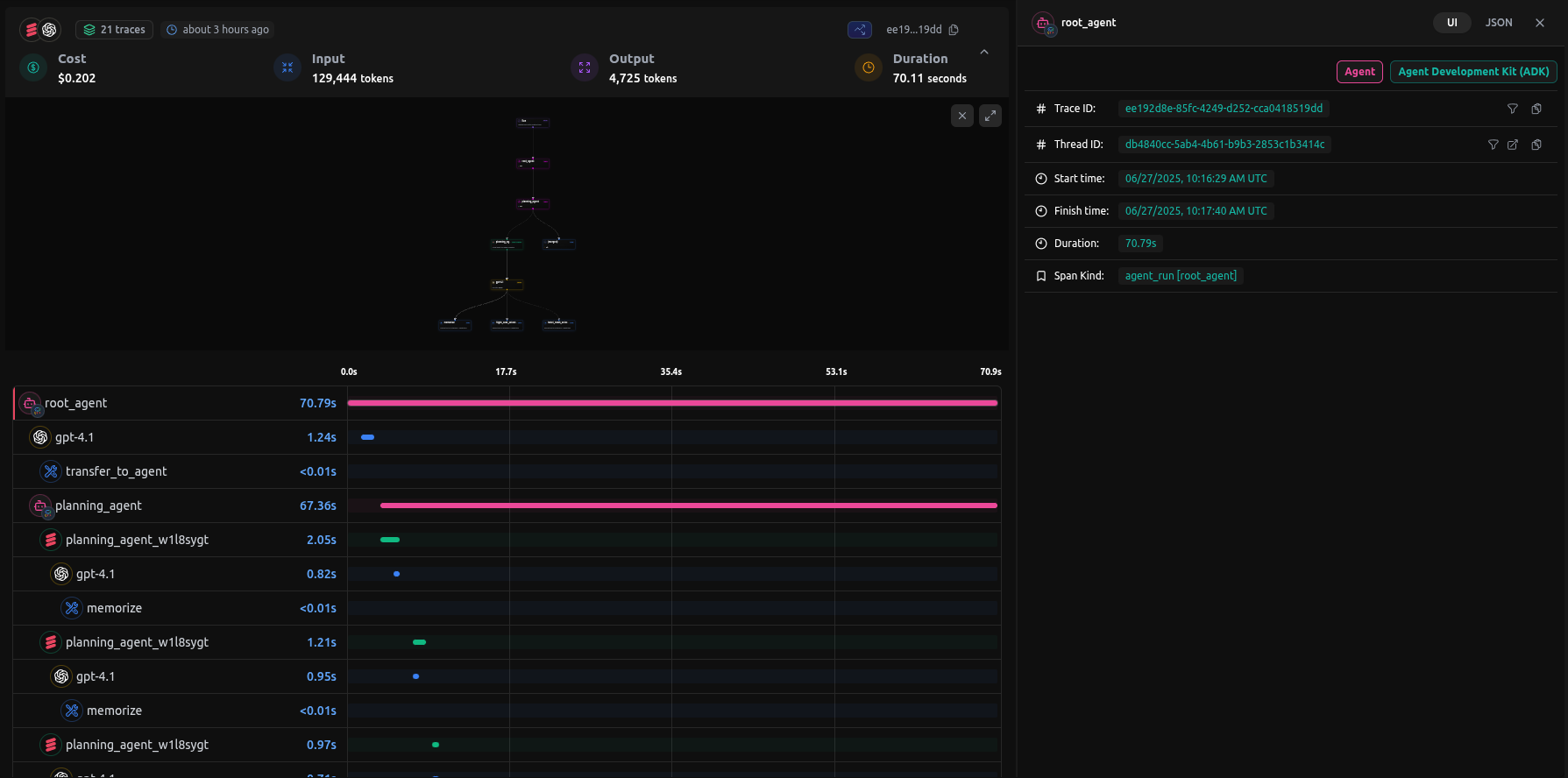

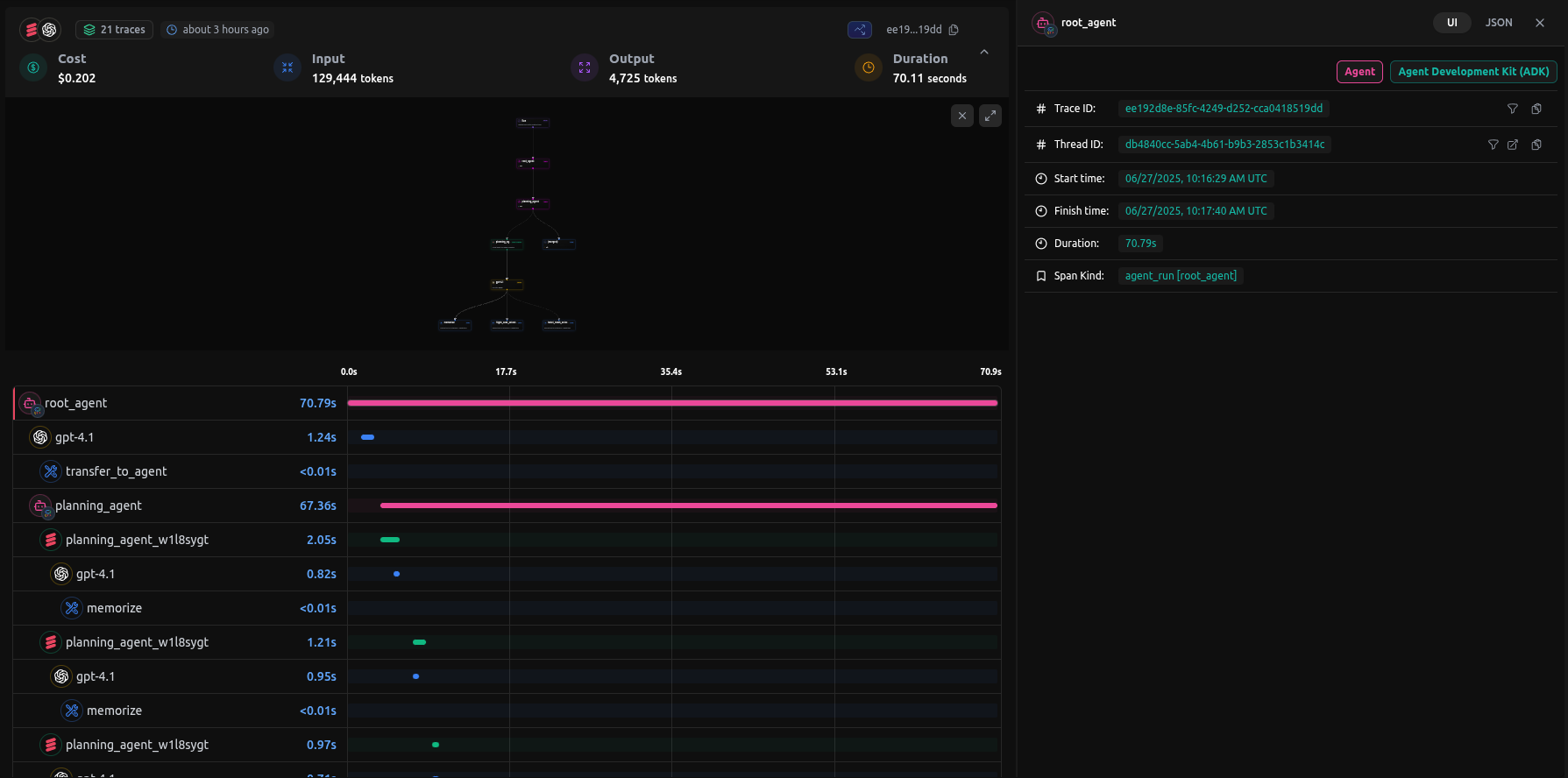

#### Example Trace Screenshot

## 🛠️ Supported Frameworks (Tracing)

| Framework | Installation | Import Pattern | Key Features |
| --- | --- | --- | --- |
| Google ADK | `pip install pylangdb[adk]` | `from pylangdb.adk import init` | Automatic sub-agent discovery |
| OpenAI | `pip install pylangdb[openai]` | `from pylangdb.openai import init` | Custom model provider support |
| LangChain | `pip install pylangdb[langchain]` | `from pylangdb.langchain import init` | Automatic chain tracing |
| CrewAI | `pip install pylangdb[crewai]` | `from pylangdb.crewai import init` | Multi-agent crew tracing |
| Agno | `pip install pylangdb[agno]` | `from pylangdb.agno import init` | Tool usage tracing, model interactions |

## 🔧 How It Works

LangDB uses intelligent monkey patching to instrument your AI frameworks at runtime:

<details>

<summary><b>👉 Click to see technical details for each framework</b></summary>

### Google ADK

- Patches `Agent.__init__` to inject callbacks

- Tracks agent hierarchies and tool usage

- Maintains thread context across invocations

### OpenAI

- Intercepts HTTP requests via `AsyncOpenAI.post`

- Propagates trace context via headers

- Correlates spans across agent interactions

### LangChain

- Modifies `httpx.Client.send` for request tracing

- Automatically tracks chains and agents

- Injects trace headers into all requests

### CrewAI

- Intercepts `litellm.completion` for LLM calls

- Tracks crew members and task delegation

- Propagates context through LiteLLM headers

### Agno

- Patches `LangDB.invoke` and client parameters

- Traces workflows and model interactions

- Maintains consistent session context

</details>

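The idea of patching a constructor to inject callbacks can be sketched in plain Python. This is a minimal illustration under stated assumptions, not pylangdb's actual code: the `Agent` class, `tracing_callback`, and `instrument` below are hypothetical names, and the real ADK callback signature differs.

```python
import functools

class Agent:
    """Hypothetical stand-in for a framework agent class."""
    def __init__(self, name, before_agent_callback=None):
        self.name = name
        self.before_agent_callback = before_agent_callback

events = []

def tracing_callback(agent_name):
    """Hypothetical tracing hook: records that an agent started."""
    events.append(f"start:{agent_name}")

def instrument(cls):
    """Wrap cls.__init__ so every new instance gets a tracing callback."""
    original_init = cls.__init__

    @functools.wraps(original_init)
    def patched_init(self, *args, **kwargs):
        original_init(self, *args, **kwargs)
        user_callback = self.before_agent_callback

        def combined(agent_name):
            tracing_callback(agent_name)      # tracing runs first
            if user_callback is not None:     # then the user's own callback
                user_callback(agent_name)

        self.before_agent_callback = combined

    cls.__init__ = patched_init

instrument(Agent)

agent = Agent(
    "weather_time_agent",
    before_agent_callback=lambda name: events.append(f"user:{name}"),
)
agent.before_agent_callback(agent.name)
print(events)
```

Wrapping rather than replacing the user's callback keeps existing behavior intact while adding the tracing hook.
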
## 📦 Installation

```bash

# For client library functionality (chat completions, analytics, etc.)

pip install pylangdb[client]

# For framework tracing - install specific framework extras

pip install pylangdb[adk] # Google ADK tracing

pip install pylangdb[openai] # OpenAI agents tracing

pip install pylangdb[langchain] # LangChain tracing

pip install pylangdb[crewai] # CrewAI tracing

pip install pylangdb[agno] # Agno tracing

```

## 🔑 Configuration

Set your credentials (or pass them directly to the `init()` function):

```bash

export LANGDB_API_KEY="your-api-key"

export LANGDB_PROJECT_ID="your-project-id"

```
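
Before starting an application it can help to fail fast when a credential is missing. The helper below is a small sketch using only the standard library; `load_langdb_credentials` is a hypothetical name and is not part of pylangdb.

```python
import os

def load_langdb_credentials(env=os.environ):
    """Return (api_key, project_id), or raise if either variable is unset or empty."""
    required = ("LANGDB_API_KEY", "LANGDB_PROJECT_ID")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return env["LANGDB_API_KEY"], env["LANGDB_PROJECT_ID"]

# Example with an explicit mapping instead of the real environment
api_key, project_id = load_langdb_credentials(
    {"LANGDB_API_KEY": "your-api-key", "LANGDB_PROJECT_ID": "your-project-id"}
)
print(project_id)
```

The returned values can then be passed to `init()` or to the client constructor.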

## Client Usage (Chat Completions)

### Initialize LangDb Client

```python

from pylangdb import LangDb

# Initialize with API key and project ID

client = LangDb(api_key="your_api_key", project_id="your_project_id")

```

### Chat Completions

```python
messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Say hello!"}
]

response = client.completion(
    model="gemini-1.5-pro-latest",
    messages=messages,
    temperature=0.7,
    max_tokens=100
)
```

### Thread Operations

#### Get Messages

Retrieve messages from a specific thread:

```python
messages = client.get_messages(thread_id="your_thread_id")

# Access message details
for message in messages:
    print(f"Type: {message.type}")
    print(f"Content: {message.content}")
    if message.tool_calls:
        for tool_call in message.tool_calls:
            print(f"Tool: {tool_call.function.name}")
```

#### Get Thread Cost

Get cost and token usage information for a thread:

```python

usage = client.get_usage(thread_id="your_thread_id")

print(f"Total cost: ${usage.total_cost:.4f}")

print(f"Input tokens: {usage.total_input_tokens}")

print(f"Output tokens: {usage.total_output_tokens}")

```

### Analytics

Get analytics data for specific tags:

```python
# Get raw analytics data
analytics = client.get_analytics(
    tags="model1,model2",
    start_time_us=None,  # Optional: defaults to 24 hours ago
    end_time_us=None     # Optional: defaults to current time
)

# Get analytics as a pandas DataFrame
df = client.get_analytics_dataframe(
    tags="model1,model2",
    start_time_us=None,
    end_time_us=None
)
```
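
To query a window other than the default, explicit timestamps are needed. The sketch below assumes the `_us` suffix means integer microseconds since the Unix epoch (UTC); that reading is an assumption, not something the text above confirms. The helper names `to_epoch_us` and `last_hours_window_us` are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional, Tuple

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

def to_epoch_us(moment: datetime) -> int:
    """Convert a timezone-aware datetime to integer microseconds since the Unix epoch."""
    if moment.tzinfo is None:
        raise ValueError("moment must be timezone-aware")
    # Integer division of timedeltas avoids float rounding errors.
    return (moment - EPOCH) // timedelta(microseconds=1)

def last_hours_window_us(hours: int, now: Optional[datetime] = None) -> Tuple[int, int]:
    """Return (start_us, end_us) covering the last `hours` hours."""
    end = now or datetime.now(timezone.utc)
    return to_epoch_us(end - timedelta(hours=hours)), to_epoch_us(end)

start_us, end_us = last_hours_window_us(24 * 7)  # the last seven days
print(start_us, end_us)
```

Under that assumption, the two values could be passed as `start_time_us` and `end_time_us`.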

### Evaluate Multiple Threads

```python

df = client.create_evaluation_df(thread_ids=["thread1", "thread2"])

print(df.head())

```

### List Available Models

```python

models = client.list_models()

print(models)

```

## 🧩 Framework-Specific Examples (Tracing)

### Google ADK

```python
from pylangdb.adk import init

# Patch Google ADK for tracing
init()

# Import your agents after initializing tracing
from google.adk.agents import Agent
from travel_concierge.sub_agents.booking.agent import booking_agent
from travel_concierge.sub_agents.in_trip.agent import in_trip_agent
from travel_concierge.sub_agents.inspiration.agent import inspiration_agent
from travel_concierge.sub_agents.planning.agent import planning_agent
from travel_concierge.sub_agents.post_trip.agent import post_trip_agent
from travel_concierge.sub_agents.pre_trip.agent import pre_trip_agent
from travel_concierge.tools.memory import _load_precreated_itinerary

root_agent = Agent(
    model="openai/gpt-4.1",
    name="root_agent",
    description="A Travel Concierge using the services of multiple sub-agents",
    instruction="Instruct the travel concierge to plan a trip for the user.",
    sub_agents=[
        inspiration_agent,
        planning_agent,
        booking_agent,
        pre_trip_agent,
        in_trip_agent,
        post_trip_agent,
    ],
    before_agent_callback=_load_precreated_itinerary,
)
```

### OpenAI

```python
import asyncio
import os
import uuid

# Import LangDB tracing
from pylangdb.openai import init

# Initialize tracing
init()

# Import agent components
from agents import (
    Agent,
    Runner,
    set_default_openai_client,
    RunConfig,
    ModelProvider,
    Model,
    OpenAIChatCompletionsModel,
)

# Configure OpenAI client with environment variables
from openai import AsyncOpenAI

client = AsyncOpenAI(
    api_key=os.environ.get("LANGDB_API_KEY"),
    base_url=os.environ.get("LANGDB_API_BASE_URL"),
    default_headers={
        "x-project-id": os.environ.get("LANGDB_PROJECT_ID")
    }
)
set_default_openai_client(client)

# Create a custom model provider
class CustomModelProvider(ModelProvider):
    def get_model(self, model_name: str | None) -> Model:
        return OpenAIChatCompletionsModel(model=model_name, openai_client=client)

CUSTOM_MODEL_PROVIDER = CustomModelProvider()

agent = Agent(
    name="Math Tutor",
    model="gpt-4.1",
    instructions="You are a math tutor who can help students with their math homework.",
)

group_id = str(uuid.uuid4())

# Use the model provider with a unique group_id for tracing
async def run_agent():
    response = await Runner.run(
        agent,
        input="Hello World",
        run_config=RunConfig(
            model_provider=CUSTOM_MODEL_PROVIDER,  # Inject custom model provider
            group_id=group_id  # Link all steps to the same trace
        )
    )
    print(response.final_output)

# Run the async function with asyncio
asyncio.run(run_agent())
```

### LangChain

```python
import os
from pylangdb.langchain import init

init()

# Get environment variables for configuration
api_base = os.getenv("LANGDB_API_BASE_URL")
api_key = os.getenv("LANGDB_API_KEY")
if not api_key:
    raise ValueError("Please set the LANGDB_API_KEY environment variable")

project_id = os.getenv("LANGDB_PROJECT_ID")

# Default headers for API requests
default_headers: dict[str, str] = {
    "x-project-id": project_id
}

# Your existing LangChain code works with proper configuration
from langchain.chat_models import ChatOpenAI
from langchain.schema import HumanMessage

# Initialize OpenAI LLM with proper configuration
llm = ChatOpenAI(
    model_name="gpt-4",
    temperature=0.3,
    openai_api_base=api_base,
    openai_api_key=api_key,
    default_headers=default_headers,
)
result = llm.invoke([HumanMessage(content="Hello, LangChain!")])
```

### CrewAI

```python
import os
from dotenv import load_dotenv

load_dotenv()

# Import and initialize LangDB tracing
from pylangdb.crewai import init

# Initialize tracing before importing or creating any agents
init()

# Import CrewAI only after tracing is initialized
from crewai import Agent, Task, Crew, LLM

# Initialize API credentials
api_key = os.environ.get("LANGDB_API_KEY")
api_base = os.environ.get("LANGDB_API_BASE_URL")
project_id = os.environ.get("LANGDB_PROJECT_ID")

# Create LLM with proper headers
llm = LLM(
    model="gpt-4",
    api_key=api_key,
    base_url=api_base,
    extra_headers={
        "x-project-id": project_id
    }
)

# Create and use your CrewAI components as usual
# They will be automatically traced by LangDB
researcher = Agent(
    role="researcher",
    goal="Research the topic thoroughly",
    backstory="You are an expert researcher",
    llm=llm,
    verbose=True
)

task = Task(
    description="Research the given topic",
    expected_output="A short summary of the key findings",  # CrewAI tasks need an expected output
    agent=researcher
)

crew = Crew(agents=[researcher], tasks=[task])
result = crew.kickoff()
```

### Agno

```python
import os
from agno.agent import Agent
from agno.tools.duckduckgo import DuckDuckGoTools

# Import and initialize LangDB tracing
from pylangdb.agno import init
init()

# Import LangDB model after initializing tracing
from agno.models.langdb import LangDB

# Create agent with LangDB model
agent = Agent(
    name="Web Agent",
    role="Search the web for information",
    model=LangDB(
        id="openai/gpt-4",
        base_url=os.getenv("LANGDB_API_BASE_URL") + '/' + os.getenv("LANGDB_PROJECT_ID") + '/v1',
        api_key=os.getenv("LANGDB_API_KEY"),
        project_id=os.getenv("LANGDB_PROJECT_ID"),
    ),
    tools=[DuckDuckGoTools()],
    instructions="Answer questions using web search",
    show_tool_calls=True,
    markdown=True,
)

# Use the agent
response = agent.run("What is LangDB?")
```

## ⚙️ Advanced Configuration

### Environment Variables

| Variable | Description | Default |
|----------|-------------|---------|
| `LANGDB_API_KEY` | Your LangDB API key | Required |
| `LANGDB_PROJECT_ID` | Your LangDB project ID | Required |
| `LANGDB_API_BASE_URL` | LangDB API base URL | `https://api.us-east-1.langdb.ai` |
| `LANGDB_TRACING_BASE_URL` | Tracing collector endpoint | `https://api.us-east-1.langdb.ai:4317` |
| `LANGDB_TRACING` | Enable/disable tracing | `true` |
| `LANGDB_TRACING_EXPORTERS` | Comma-separated list of exporters | `otlp`, `console` |
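
For illustration, the tracing-related variables could be resolved as in the sketch below. It is not pylangdb's own parsing logic: `TracingSettings` and `read_tracing_settings` are hypothetical names, the set of strings treated as "disabled" is an assumption, and the exporter default is read from the table as `otlp,console`.

```python
import os
from dataclasses import dataclass
from typing import Tuple

@dataclass(frozen=True)
class TracingSettings:
    enabled: bool
    exporters: Tuple[str, ...]
    collector_endpoint: str

def read_tracing_settings(env=os.environ) -> TracingSettings:
    """Resolve tracing settings from a mapping, falling back to the table defaults."""
    # Assumption: these strings disable tracing; anything else enables it.
    flag = env.get("LANGDB_TRACING", "true").strip().lower()
    enabled = flag not in ("false", "0", "no", "off")

    # Split the comma-separated exporter list and drop empty entries.
    raw = env.get("LANGDB_TRACING_EXPORTERS", "otlp,console")
    exporters = tuple(part.strip() for part in raw.split(",") if part.strip())

    endpoint = env.get("LANGDB_TRACING_BASE_URL", "https://api.us-east-1.langdb.ai:4317")
    return TracingSettings(enabled, exporters, endpoint)

settings = read_tracing_settings({"LANGDB_TRACING_EXPORTERS": "console"})
print(settings)
```

Passing a plain dictionary instead of `os.environ` keeps the function easy to test.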

### Custom Configuration

All `init()` functions accept the same optional parameters:

```python
from pylangdb.openai import init

init(
    collector_endpoint='https://api.us-east-1.langdb.ai:4317',
    api_key="langdb-api-key",
    project_id="langdb-project-id"
)
```

## 🔬 Technical Details

### Session and Thread Management

- **Thread ID**: Maintains consistent session identifiers across agent calls

- **Run ID**: Unique identifier for each execution trace

- **Invocation Tracking**: Tracks the sequence of agent invocations

- **State Persistence**: Maintains context across callbacks and sub-agent interactions
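
The distinction between a stable thread ID and a fresh run ID per execution can be sketched with `contextvars` from the standard library. This is an illustrative model only; how pylangdb stores these identifiers is not stated here, and `current_thread_id` and `start_run` are hypothetical helpers.

```python
import contextvars
import uuid

# Holds the session-level identifier for the current execution context.
_thread_id = contextvars.ContextVar("thread_id", default=None)

def current_thread_id() -> str:
    """Return the thread ID for this context, creating it on first use."""
    thread_id = _thread_id.get()
    if thread_id is None:
        thread_id = str(uuid.uuid4())
        _thread_id.set(thread_id)
    return thread_id

def start_run() -> dict:
    """Begin one execution: same thread ID as before, brand-new run ID."""
    return {"thread_id": current_thread_id(), "run_id": str(uuid.uuid4())}

first = start_run()
second = start_run()
print(first["thread_id"] == second["thread_id"])  # same session
print(first["run_id"] == second["run_id"])        # different executions
```

A context variable, unlike a module-level global, keeps separate values for concurrently running asyncio tasks.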

### Distributed Tracing

- **OpenTelemetry Integration**: Uses OpenTelemetry for standardized tracing

- **Attribute Propagation**: Automatically propagates LangDB-specific attributes

- **Span Correlation**: Links related spans across different agents and frameworks

- **Custom Exporters**: Supports multiple export formats (OTLP, Console)
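
Header-based context propagation can be illustrated with the W3C Trace Context `traceparent` header, which is OpenTelemetry's default propagation format. Whether LangDB uses exactly this header is an assumption; the functions below are a hypothetical standard-library sketch, not the OpenTelemetry API.

```python
import secrets

def new_traceparent() -> str:
    """Create a traceparent value: version-traceid-spanid-flags."""
    trace_id = secrets.token_hex(16)  # 16 random bytes -> 32 hex characters
    span_id = secrets.token_hex(8)    # 8 random bytes -> 16 hex characters
    return f"00-{trace_id}-{span_id}-01"  # 01 = sampled

def child_traceparent(parent: str) -> str:
    """Keep the trace ID, issue a new span ID for the downstream call."""
    version, trace_id, _parent_span_id, flags = parent.split("-")
    return f"{version}-{trace_id}-{secrets.token_hex(8)}-{flags}"

def inject(headers: dict, traceparent: str) -> dict:
    """Return a copy of the headers with the trace context added."""
    return {**headers, "traceparent": traceparent}

root = new_traceparent()
outgoing = inject({"x-project-id": "your-project-id"}, child_traceparent(root))
print(outgoing)
```

Because every hop reuses the same trace ID, spans emitted by different agents can be linked into one trace.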

## API Reference

### Initialization Functions

Each framework has a simple `init()` function that handles all necessary setup:

- `pylangdb.adk.init()`: Patches Google ADK Agent class with LangDB callbacks

- `pylangdb.openai.init()`: Initializes OpenAI agents tracing

- `pylangdb.langchain.init()`: Initializes LangChain tracing

- `pylangdb.crewai.init()`: Initializes CrewAI tracing

- `pylangdb.agno.init()`: Initializes Agno tracing

All `init()` functions accept optional parameters for custom configuration: `collector_endpoint`, `api_key`, and `project_id`.

## 🛟 Troubleshooting

### Common Issues

1. **Missing API Key**: Ensure `LANGDB_API_KEY` and `LANGDB_PROJECT_ID` are set

2. **Tracing Not Working**: Check that initialization functions are called before creating agents

3. **Network Issues**: Verify collector endpoint is accessible

4. **Framework Conflicts**: Initialize LangDB integration before other instrumentation
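
For the network check in item 3, a plain TCP connection attempt is often enough to tell whether the collector host and port are reachable. The sketch below uses only the standard library; `endpoint_host_port` and `can_connect` are hypothetical helpers, and a successful connection does not prove that the credentials are valid.

```python
import socket
from urllib.parse import urlsplit

def endpoint_host_port(endpoint: str):
    """Split an endpoint URL such as https://host:4317 into (host, port)."""
    parts = urlsplit(endpoint)
    if parts.hostname is None or parts.port is None:
        raise ValueError(f"endpoint must include a host and a port: {endpoint!r}")
    return parts.hostname, parts.port

def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False

host, port = endpoint_host_port("https://api.us-east-1.langdb.ai:4317")
print(host, port)
# To run the actual check: can_connect(host, port)
```
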

### Debug Mode

Enable console output for debugging:

```bash

export LANGDB_TRACING_EXPORTERS="otlp,console"

```

Disable tracing entirely:

```bash

export LANGDB_TRACING="false"

```

## Development

### Setting up the environment

1. Clone the repository

2. Create a `.env` file with your credentials:

```bash

LANGDB_API_KEY="your_api_key"

LANGDB_PROJECT_ID="your_project_id"

```

### Running Tests

```bash

python -m unittest tests/client.py -v

```

## Publishing

```bash

poetry build

poetry publish

```

## 📋 Requirements

- Python >= 3.10

- Framework-specific dependencies (installed automatically)

- OpenTelemetry libraries (installed automatically)

## 📄 License

This project is licensed under the MIT License - see the [LICENSE](LICENSE) file for details.

## 🤝 Support

- **GitHub Issues**: [Report bugs and feature requests](https://github.com/langdb/pylangdb/issues)

- **Documentation**: [LangDB Documentation](https://docs.langdb.ai)

Raw data

{

"_id": null,

"home_page": null,

"name": "pylangdb",

"maintainer": null,

"docs_url": null,

"requires_python": ">=3.10",

"maintainer_email": null,

"keywords": null,

"author": null,

"author_email": null,

"download_url": "https://files.pythonhosted.org/packages/dd/9d/a97bc244ba95185d1e682485443d1d5e6b23caa45a8e9191a7fff78be61f/pylangdb-0.3.6.tar.gz",

"platform": null,

"bugtrack_url": null,

"license": null,

"summary": "Comprehensive LangDB integration package with enhanced agent support, LLM integration, and distributed tracing.",

"version": "0.3.6",

"project_urls": null,

"split_keywords": [],

"urls": [

{

"comment_text": null,

"digests": {

"blake2b_256": "7b62538fedb5fbb96712f07edb7b504cb02e4110525845ac66896b612032d9e9",

"md5": "1e727698730517a51c74c53659d7e4fd",

"sha256": "db5c2eaa3c5b211b65c5e1d530557817049ebdc63b4315f0d778592026f4bc41"

},

"downloads": -1,

"filename": "pylangdb-0.3.6-py3-none-any.whl",

"has_sig": false,

"md5_digest": "1e727698730517a51c74c53659d7e4fd",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.10",

"size": 23297,

"upload_time": "2025-07-11T04:53:15",

"upload_time_iso_8601": "2025-07-11T04:53:15.967026Z",

"url": "https://files.pythonhosted.org/packages/7b/62/538fedb5fbb96712f07edb7b504cb02e4110525845ac66896b612032d9e9/pylangdb-0.3.6-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": null,

"digests": {

"blake2b_256": "dd9da97bc244ba95185d1e682485443d1d5e6b23caa45a8e9191a7fff78be61f",

"md5": "09700c1bf9950a65ae3745eca776c285",

"sha256": "47d265c60e3d6f6d7d26c1cf38266145c157cf572251c1eb02d5402ee2bbb9f7"

},

"downloads": -1,

"filename": "pylangdb-0.3.6.tar.gz",

"has_sig": false,

"md5_digest": "09700c1bf9950a65ae3745eca776c285",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.10",

"size": 25901,

"upload_time": "2025-07-11T04:53:18",

"upload_time_iso_8601": "2025-07-11T04:53:18.534526Z",

"url": "https://files.pythonhosted.org/packages/dd/9d/a97bc244ba95185d1e682485443d1d5e6b23caa45a8e9191a7fff78be61f/pylangdb-0.3.6.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2025-07-11 04:53:18",

"github": false,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"lcname": "pylangdb"

}