## Quick Topic Mining Toolkit (QTMT)

The `quick-topic` toolkit allows us to quickly evaluate topic models in various methods.

### Features

1. Topic Prevalence Trends Analysis

2. Topic Interaction Strength Analysis

3. Topic Transition Analysis

4. Topic Trends of Numbers of Document Containing Keywords

5. Topic Trends Correlation Analysis

6. Topic Similarity between Trends

7. Summarize Sentence Numbers By Keywords

8. Topic visualization

* This version supports topic modeling from both English and Chinese text.

### Basic Usage

Example: generate topic models for each category in the dataset files

```python

from quick_topic.topic_modeling.lda import build_lda_models

# step 1: data file

meta_csv_file="datasets/list_country.csv"

raw_text_folder="datasets/raw_text"

# term files used for word segementation

list_term_file = [

"../datasets/keywords/countries.csv",

"../datasets/keywords/leaders_unique_names.csv",

"../datasets/keywords/carbon2.csv"

]

# removed words

stop_words_path = "../datasets/stopwords/hit_stopwords.txt"

# run shell

list_category = build_lda_models(

meta_csv_file=meta_csv_file,

raw_text_folder=raw_text_folder,

output_folder="results/topic_modeling",

list_term_file=list_term_file,

stopwords_path=stop_words_path,

prefix_filename="text_",

num_topics=6

)

```

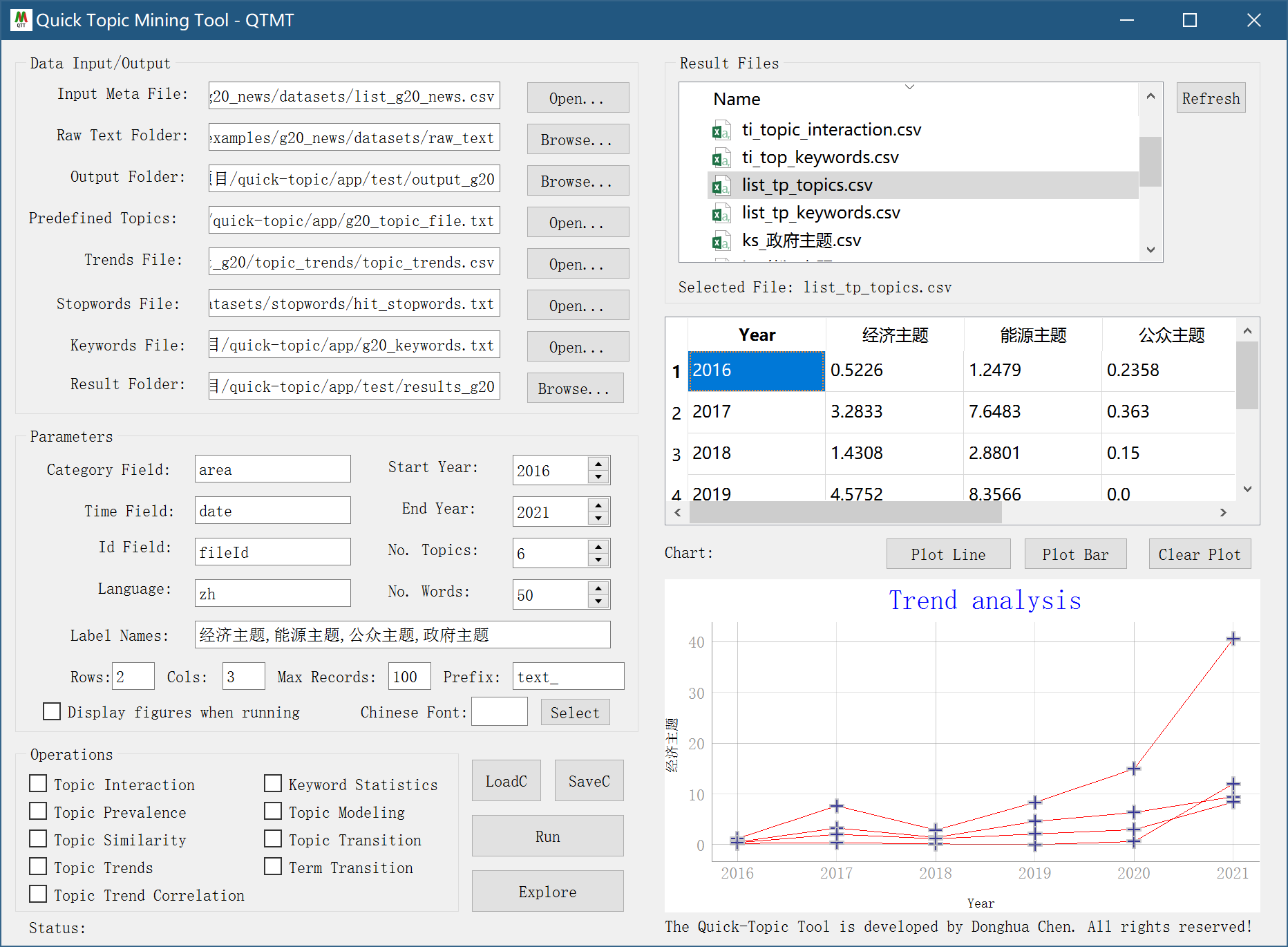

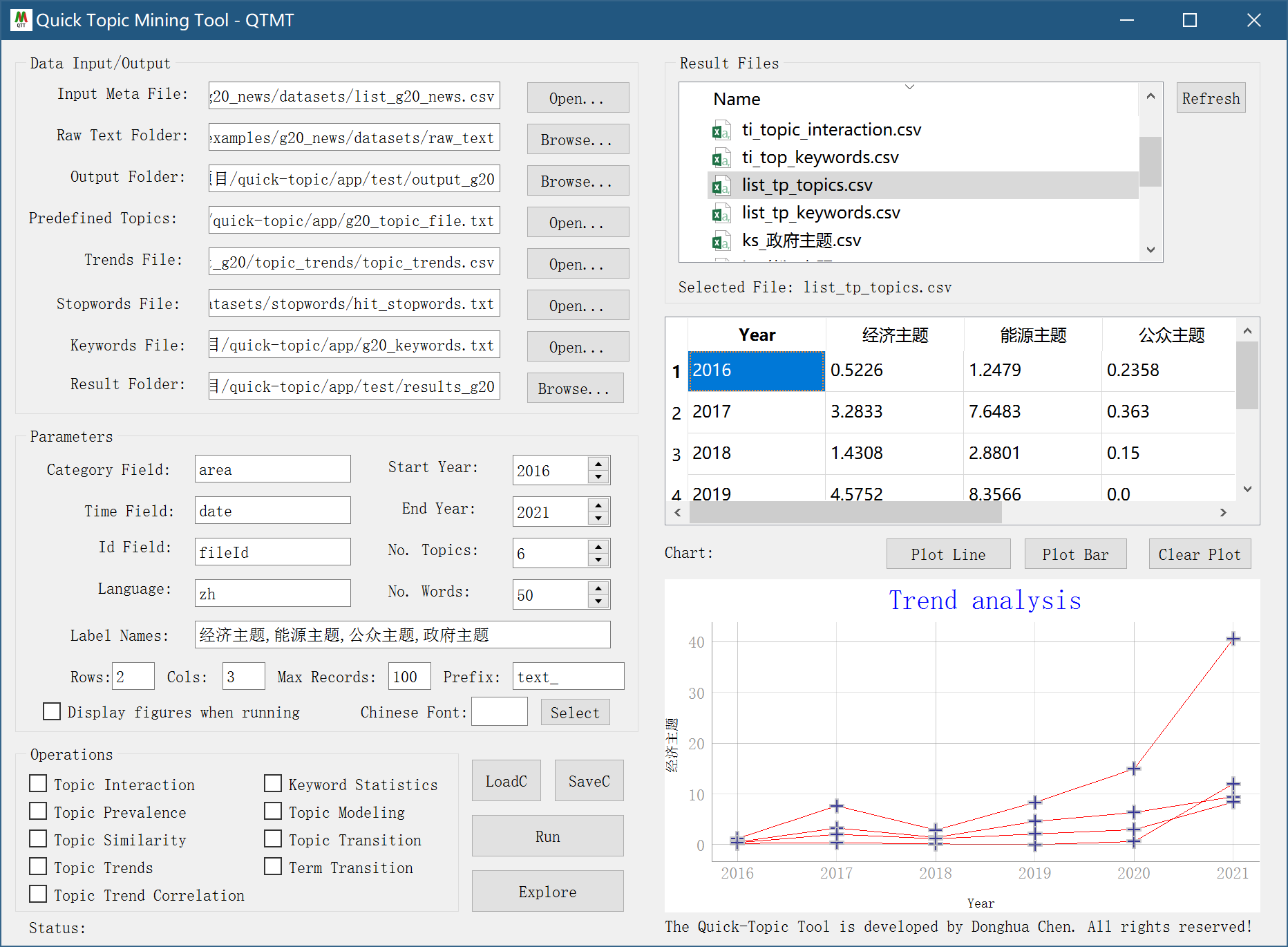

## GUI

After installing the `quick-topic` package, run `qtmt` in the Terminal to call the GUI application.

```

$ qtmt

```

### Advanced Usage

Example 1: Topic Prevalence over Time

```python

from quick_topic.topic_prevalence.main import *

# data file: a csv file; a folder with txt files named the same as the ID field in the csv file

meta_csv_file = "datasets/list_country.csv"

text_root = r"datasets/raw_text"

# word segmentation data files

list_keywords_path = [

"../datasets/keywords/countries.csv",

"../datasets/keywords/leaders_unique_names.csv",

"../datasets/keywords/carbon2.csv"

]

# remove keywords

stop_words_path = "../datasets/stopwords/hit_stopwords.txt"

# date range for analysis

start_year=2010

end_year=2021

# used topics

label_names = ['经济主题', '能源主题', '公众主题', '政府主题']

topic_economics = ['投资', '融资', '经济', '租金', '政府', '就业', '岗位', '工作', '职业', '技能']

topic_energy = ['绿色', '排放', '氢能', '生物能', '天然气', '风能', '石油', '煤炭', '电力', '能源', '消耗', '矿产', '燃料', '电网', '发电']

topic_people = ['健康', '空气污染', '家庭', '能源支出', '行为', '价格', '空气排放物', '死亡', '烹饪', '支出', '可再生', '液化石油气', '污染物', '回收',

'收入', '公民', '民众']

topic_government = ['安全', '能源安全', '石油安全', '天然气安全', '电力安全', '基础设施', '零售业', '国际合作', '税收', '电网', '出口', '输电', '电网扩建',

'政府', '规模经济']

list_topics = [

topic_economics,

topic_energy,

topic_people,

topic_government

]

# run-all

run_topic_prevalence(

meta_csv_file=meta_csv_file,

raw_text_folder=text_root,

save_root_folder="results/topic_prevalence",

list_keywords_path=list_keywords_path,

stop_words_path=stop_words_path,

start_year=start_year,

end_year=end_year,

label_names=label_names,

list_topics=list_topics,

tag_field="area",

time_field="date",

id_field="fileId",

prefix_filename="text_",

)

```

Example 2: Estimate the strength of topic interaction (shared keywords) from different topics

```python

from quick_topic.topic_interaction.main import *

# step 1: data file

meta_csv_file = "datasets/list_country.csv"

text_root = r"datasets/raw_text"

# step2: jieba cut words file

list_keywords_path = [

"../datasets/keywords/countries.csv",

"../datasets/keywords/leaders_unique_names.csv",

"../datasets/keywords/carbon2.csv"

]

# remove files

stopwords_path = "../datasets/stopwords/hit_stopwords.txt"

# set predefined topic labels

label_names = ['经济主题', '能源主题', '公众主题', '政府主题']

# set keywords for each topic

topic_economics = ['投资', '融资', '经济', '租金', '政府', '就业', '岗位', '工作', '职业', '技能']

topic_energy = ['绿色', '排放', '氢能', '生物能', '天然气', '风能', '石油', '煤炭', '电力', '能源', '消耗', '矿产', '燃料', '电网', '发电']

topic_people = ['健康', '空气污染', '家庭', '能源支出', '行为', '价格', '空气排放物', '死亡', '烹饪', '支出', '可再生', '液化石油气', '污染物', '回收',

'收入', '公民', '民众']

topic_government = ['安全', '能源安全', '石油安全', '天然气安全', '电力安全', '基础设施', '零售业', '国际合作', '税收', '电网', '出口', '输电', '电网扩建',

'政府', '规模经济']

# a list of topics above

list_topics = [

topic_economics,

topic_energy,

topic_people,

topic_government

]

# if any keyword is the below one, then the keyword is removed from our consideration

filter_words = ['中国', '国家', '工作', '领域', '社会', '发展', '目标', '全国', '方式', '技术', '产业', '全球', '生活', '行动', '服务', '君联',

'研究', '利用', '意见']

# dictionaries

list_country=[

'巴西','印度','俄罗斯','南非'

]

# run shell

run_topic_interaction(

meta_csv_file=meta_csv_file,

raw_text_folder=text_root,

output_folder="results/topic_interaction/divided",

list_category=list_country, # a dictionary where each record contain a group of keywords

stopwords_path=stopwords_path,

weights_folder='results/topic_interaction/weights',

list_keywords_path=list_keywords_path,

label_names=label_names,

list_topics=list_topics,

filter_words=filter_words,

# set field names

tag_field="area",

keyword_field="", # ignore if keyword from csv exists in the text

time_field="date",

id_field="fileId",

prefix_filename="text_",

)

```

Example 3: Divide datasets by year or year-month

By year:

```python

from quick_topic.topic_transition.divide_by_year import *

divide_by_year(

meta_csv_file="../datasets/list_g20_news_all_clean.csv",

raw_text_folder=r"datasets\g20_news_processed",

output_folder="results/test1/divided_by_year",

start_year=2000,

end_year=2021,

)

```

By year-month:

```python

from quick_topic.topic_transition.divide_by_year_month import *

divide_by_year_month(

meta_csv_file="../datasets/list_g20_news_all_clean.csv",

raw_text_folder=r"datasets\g20_news_processed",

output_folder="results/test1/divided_by_year_month",

start_year=2000,

end_year=2021

)

```

Example 4: Show topic transition by year

```python

from quick_topic.topic_transition.transition_by_year_month_topic import *

label="经济"

keywords=['投资','融资','经济','租金','政府', '就业','岗位','工作','职业','技能']

show_transition_by_year_month_topic(

root_path="results/test1/divided_by_year_month",

label=label,

keywords=keywords,

start_year=2000,

end_year=2021

)

```

Example 5: Show keyword-based topic transition by year-month for keywords in addition to mean lines

```python

from quick_topic.topic_transition.transition_by_year_month_term import *

root_path = "results/news_by_year_month"

select_keywords = ['燃煤', '储能', '电动汽车', '氢能', '脱碳', '风电', '水电', '天然气', '光伏', '可再生', '清洁能源', '核电']

list_all_range = [

[[2010, 2015], [2016, 2021]],

[[2011, 2017], [2018, 2021]],

[[2009, 2017], [2018, 2021]],

[[2011, 2016], [2017, 2021]],

[[2017, 2018], [2019, 2021]],

[[2009, 2014], [2015, 2021]],

[[2009, 2014], [2015, 2021]],

[[2009, 2015], [2016, 2021]],

[[2008, 2011], [2012, 2015], [2016, 2021]],

[[2011, 2016], [2017, 2021]],

[[2009, 2012], [2013, 2016], [2017, 2021]],

[[2009, 2015], [2016, 2021]]

]

output_figure_folder="results/figures"

show_transition_by_year_month_term(

root_path="results/test1/divided_by_year_month",

select_keywords=select_keywords,

list_all_range=list_all_range,

output_figure_folder=output_figure_folder,

start_year=2000,

end_year=2021

)

```

Example 6: Get time trends of numbers of documents containing topic keywords with full text.

```python

from quick_topic.topic_trends.trends_by_year_month_fulltext import *

# define a group of topics with keywords, each topic has a label

label_names=['经济','能源','公民','政府']

keywords_economics = ['投资', '融资', '经济', '租金', '政府', '就业', '岗位', '工作', '职业', '技能']

keywords_energy = ['绿色', '排放', '氢能', '生物能', '天然气', '风能', '石油', '煤炭', '电力', '能源', '消耗', '矿产', '燃料', '电网', '发电']

keywords_people = ['健康', '空气污染', '家庭', '能源支出', '行为', '价格', '空气排放物', '死亡', '烹饪', '支出', '可再生', '液化石油气', '污染物', '回收',

'收入', '公民', '民众']

keywords_government = ['安全', '能源安全', '石油安全', '天然气安全', '电力安全', '基础设施', '零售业', '国际合作', '税收', '电网', '出口', '输电', '电网扩建',

'政府', '规模经济']

list_topics = [

keywords_economics,

keywords_energy,

keywords_people,

keywords_government

]

# call function to show trends of number of documents containing topic keywords each year-month

show_year_month_trends_with_fulltext(

meta_csv_file="datasets/list_country.csv",

list_topics=list_topics,

label_names=label_names,

save_result_path="results/topic_trends/trends_fulltext.csv",

minimum_year=2010,

raw_text_path=r"datasets/raw_text",

id_field='fileId',

time_field='date',

prefix_filename="text_"

)

```

Example 7: Estimate the correlation between two trends

```python

from quick_topic.topic_trends_correlation.topic_trends_correlation_two import *

trends_file="results/topic_trends/trends_fulltext.csv"

label_names=['经济','能源','公民','政府']

list_result=[]

list_line=[]

for i in range(0,len(label_names)-1):

for j in range(i+1,len(label_names)):

label1=label_names[i]

label2=label_names[j]

result=estimate_topic_trends_correlation_single_file(

trend_file=trends_file,

selected_field1=label1,

selected_field2=label2,

start_year=2010,

end_year=2021,

show_figure=False,

time_field='Time'

)

list_result=[]

line=f"({label1},{label2})\t{result['pearson'][0]}\t{result['pearson'][1]}"

list_line.append(line)

print()

print("Correlation analysis resutls:")

print("Pair\tPearson-Stat\tP-value")

for line in list_line:

print(line)

```

Example 8: Estimate topic similarity between two groups of LDA topics

```python

from quick_topic.topic_modeling.lda import build_lda_models

from quick_topic.topic_similarity.topic_similarity_by_category import *

# Step 1: build topic models

meta_csv_file="datasets/list_country.csv"

raw_text_folder="datasets/raw_text"

list_term_file = [

"../datasets/keywords/countries.csv",

"../datasets/keywords/leaders_unique_names.csv",

"../datasets/keywords/carbon2.csv"

]

stop_words_path = "../datasets/stopwords/hit_stopwords.txt"

list_category = build_lda_models(

meta_csv_file=meta_csv_file,

raw_text_folder=raw_text_folder,

output_folder="results/topic_similarity_two/topics",

list_term_file=list_term_file,

stopwords_path=stop_words_path,

prefix_filename="text_",

num_topics=6,

num_words=50

)

# Step 2: estimate similarity

output_folder = "results/topic_similarity_two/topics"

keywords_file="../datasets/keywords/carbon2.csv"

estimate_topic_similarity(

list_topic=list_category,

topic_folder=output_folder,

list_keywords_file=keywords_file,

)

```

Example 9: Stat sentence numbers by keywords

```python

from quick_topic.topic_stat.stat_by_keyword import *

meta_csv_file='datasets/list_country.csv'

raw_text_folder="datasets/raw_text"

keywords_energy = ['煤炭', '天然气', '石油', '生物', '太阳能', '风能', '氢能', '水力', '核能']

stat_sentence_by_keywords(

meta_csv_file=meta_csv_file,

keywords=keywords_energy,

id_field="fileId",

raw_text_folder=raw_text_folder,

contains_keyword_in_sentence='',

prefix_file_name='text_'

)

```

Example 9: English topic modeling

```python

from quick_topic.topic_visualization.topic_modeling_pipeline import *

## data

meta_csv_file = "datasets_en/list_blog_meta.csv"

raw_text_folder = f"datasets_en/raw_text"

stopwords_path = ""

## parameters

chinese_font_file = ""

num_topics=8

num_words=20

n_rows=2

n_cols=4

max_records=-1

## output

result_output_folder=f"results/en_topic{num_topics}"

if not os.path.exists(result_output_folder):

os.mkdir(result_output_folder)

run_topic_modeling_pipeline(

meta_csv_file=meta_csv_file,

raw_text_folder=raw_text_folder,

stopwords_path=stopwords_path,

top_record_num=max_records,

chinese_font_file="",

num_topics=num_topics,

num_words=num_words,

n_rows=n_rows,n_cols=n_cols,

result_output_folder = result_output_folder,

load_existing_models=False,

lang='en',

prefix_filename=''

)

```

## License

The `quick-topic` toolkit is provided by [Donghua Chen](https://github.com/dhchenx) with MIT License.

Raw data

{

"_id": null,

"home_page": "https://github.com/dhchenx/quick-topic",

"name": "quick-topic",

"maintainer": "",

"docs_url": null,

"requires_python": ">=3.6, <4",

"maintainer_email": "",

"keywords": "topic mining,text analysis",

"author": "Donghua Chen",

"author_email": "douglaschan@126.com",

"download_url": "https://files.pythonhosted.org/packages/20/54/ce0c4eb13ce6a95c50f6605bfdc7ea799042b37abb39ec10972a3b32c071/quick-topic-1.0.2.tar.gz",

"platform": null,

"description": "## Quick Topic Mining Toolkit (QTMT)\r\nThe `quick-topic` toolkit allows us to quickly evaluate topic models in various methods.\r\n\r\n### Features\r\n\r\n1. Topic Prevalence Trends Analysis\r\n2. Topic Interaction Strength Analysis\r\n3. Topic Transition Analysis\r\n4. Topic Trends of Numbers of Document Containing Keywords\r\n5. Topic Trends Correlation Analysis\r\n6. Topic Similarity between Trends\r\n7. Summarize Sentence Numbers By Keywords\r\n8. Topic visualization\r\n\r\n* This version supports topic modeling from both English and Chinese text.\r\n\r\n### Basic Usage\r\n\r\nExample: generate topic models for each category in the dataset files\r\n\r\n```python\r\nfrom quick_topic.topic_modeling.lda import build_lda_models\r\n# step 1: data file\r\nmeta_csv_file=\"datasets/list_country.csv\"\r\nraw_text_folder=\"datasets/raw_text\"\r\n# term files used for word segementation\r\nlist_term_file = [\r\n \"../datasets/keywords/countries.csv\",\r\n \"../datasets/keywords/leaders_unique_names.csv\",\r\n \"../datasets/keywords/carbon2.csv\"\r\n ]\r\n# removed words\r\nstop_words_path = \"../datasets/stopwords/hit_stopwords.txt\"\r\n# run shell\r\nlist_category = build_lda_models(\r\n meta_csv_file=meta_csv_file,\r\n raw_text_folder=raw_text_folder,\r\n output_folder=\"results/topic_modeling\",\r\n list_term_file=list_term_file,\r\n stopwords_path=stop_words_path,\r\n prefix_filename=\"text_\",\r\n num_topics=6\r\n)\r\n```\r\n\r\n## GUI\r\nAfter installing the `quick-topic` package, run `qtmt` in the Terminal to call the GUI application.\r\n\r\n```\r\n$ qtmt\r\n```\r\n\r\n\r\n\r\n\r\n### Advanced Usage\r\n\r\nExample 1: Topic Prevalence over Time\r\n\r\n```python\r\nfrom quick_topic.topic_prevalence.main import *\r\n# data file: a csv file; a folder with txt files named the same as the ID field in the csv file\r\nmeta_csv_file = \"datasets/list_country.csv\"\r\ntext_root = r\"datasets/raw_text\"\r\n# word segmentation data files\r\nlist_keywords_path = [\r\n \"../datasets/keywords/countries.csv\",\r\n \"../datasets/keywords/leaders_unique_names.csv\",\r\n \"../datasets/keywords/carbon2.csv\"\r\n ]\r\n# remove keywords\r\nstop_words_path = \"../datasets/stopwords/hit_stopwords.txt\"\r\n# date range for analysis\r\nstart_year=2010\r\nend_year=2021\r\n# used topics\r\nlabel_names = ['\u7ecf\u6d4e\u4e3b\u9898', '\u80fd\u6e90\u4e3b\u9898', '\u516c\u4f17\u4e3b\u9898', '\u653f\u5e9c\u4e3b\u9898']\r\ntopic_economics = ['\u6295\u8d44', '\u878d\u8d44', '\u7ecf\u6d4e', '\u79df\u91d1', '\u653f\u5e9c', '\u5c31\u4e1a', '\u5c97\u4f4d', '\u5de5\u4f5c', '\u804c\u4e1a', '\u6280\u80fd']\r\ntopic_energy = ['\u7eff\u8272', '\u6392\u653e', '\u6c22\u80fd', '\u751f\u7269\u80fd', '\u5929\u7136\u6c14', '\u98ce\u80fd', '\u77f3\u6cb9', '\u7164\u70ad', '\u7535\u529b', '\u80fd\u6e90', '\u6d88\u8017', '\u77ff\u4ea7', '\u71c3\u6599', '\u7535\u7f51', '\u53d1\u7535']\r\ntopic_people = ['\u5065\u5eb7', '\u7a7a\u6c14\u6c61\u67d3', '\u5bb6\u5ead', '\u80fd\u6e90\u652f\u51fa', '\u884c\u4e3a', '\u4ef7\u683c', '\u7a7a\u6c14\u6392\u653e\u7269', '\u6b7b\u4ea1', '\u70f9\u996a', '\u652f\u51fa', '\u53ef\u518d\u751f', '\u6db2\u5316\u77f3\u6cb9\u6c14', '\u6c61\u67d3\u7269', '\u56de\u6536',\r\n '\u6536\u5165', '\u516c\u6c11', '\u6c11\u4f17']\r\ntopic_government = ['\u5b89\u5168', '\u80fd\u6e90\u5b89\u5168', '\u77f3\u6cb9\u5b89\u5168', '\u5929\u7136\u6c14\u5b89\u5168', '\u7535\u529b\u5b89\u5168', '\u57fa\u7840\u8bbe\u65bd', '\u96f6\u552e\u4e1a', '\u56fd\u9645\u5408\u4f5c', '\u7a0e\u6536', '\u7535\u7f51', '\u51fa\u53e3', '\u8f93\u7535', '\u7535\u7f51\u6269\u5efa',\r\n '\u653f\u5e9c', '\u89c4\u6a21\u7ecf\u6d4e']\r\nlist_topics = [\r\n topic_economics,\r\n topic_energy,\r\n topic_people,\r\n topic_government\r\n]\r\n# run-all\r\nrun_topic_prevalence(\r\n meta_csv_file=meta_csv_file,\r\n raw_text_folder=text_root,\r\n save_root_folder=\"results/topic_prevalence\",\r\n list_keywords_path=list_keywords_path,\r\n stop_words_path=stop_words_path,\r\n start_year=start_year,\r\n end_year=end_year,\r\n label_names=label_names,\r\n list_topics=list_topics,\r\n tag_field=\"area\",\r\n time_field=\"date\",\r\n id_field=\"fileId\",\r\n prefix_filename=\"text_\",\r\n)\r\n```\r\n\r\nExample 2: Estimate the strength of topic interaction (shared keywords) from different topics \r\n\r\n```python\r\nfrom quick_topic.topic_interaction.main import *\r\n# step 1: data file\r\nmeta_csv_file = \"datasets/list_country.csv\"\r\ntext_root = r\"datasets/raw_text\"\r\n# step2: jieba cut words file\r\nlist_keywords_path = [\r\n \"../datasets/keywords/countries.csv\",\r\n \"../datasets/keywords/leaders_unique_names.csv\",\r\n \"../datasets/keywords/carbon2.csv\"\r\n ]\r\n# remove files\r\nstopwords_path = \"../datasets/stopwords/hit_stopwords.txt\"\r\n# set predefined topic labels\r\nlabel_names = ['\u7ecf\u6d4e\u4e3b\u9898', '\u80fd\u6e90\u4e3b\u9898', '\u516c\u4f17\u4e3b\u9898', '\u653f\u5e9c\u4e3b\u9898']\r\n# set keywords for each topic\r\ntopic_economics = ['\u6295\u8d44', '\u878d\u8d44', '\u7ecf\u6d4e', '\u79df\u91d1', '\u653f\u5e9c', '\u5c31\u4e1a', '\u5c97\u4f4d', '\u5de5\u4f5c', '\u804c\u4e1a', '\u6280\u80fd']\r\ntopic_energy = ['\u7eff\u8272', '\u6392\u653e', '\u6c22\u80fd', '\u751f\u7269\u80fd', '\u5929\u7136\u6c14', '\u98ce\u80fd', '\u77f3\u6cb9', '\u7164\u70ad', '\u7535\u529b', '\u80fd\u6e90', '\u6d88\u8017', '\u77ff\u4ea7', '\u71c3\u6599', '\u7535\u7f51', '\u53d1\u7535']\r\ntopic_people = ['\u5065\u5eb7', '\u7a7a\u6c14\u6c61\u67d3', '\u5bb6\u5ead', '\u80fd\u6e90\u652f\u51fa', '\u884c\u4e3a', '\u4ef7\u683c', '\u7a7a\u6c14\u6392\u653e\u7269', '\u6b7b\u4ea1', '\u70f9\u996a', '\u652f\u51fa', '\u53ef\u518d\u751f', '\u6db2\u5316\u77f3\u6cb9\u6c14', '\u6c61\u67d3\u7269', '\u56de\u6536',\r\n '\u6536\u5165', '\u516c\u6c11', '\u6c11\u4f17']\r\ntopic_government = ['\u5b89\u5168', '\u80fd\u6e90\u5b89\u5168', '\u77f3\u6cb9\u5b89\u5168', '\u5929\u7136\u6c14\u5b89\u5168', '\u7535\u529b\u5b89\u5168', '\u57fa\u7840\u8bbe\u65bd', '\u96f6\u552e\u4e1a', '\u56fd\u9645\u5408\u4f5c', '\u7a0e\u6536', '\u7535\u7f51', '\u51fa\u53e3', '\u8f93\u7535', '\u7535\u7f51\u6269\u5efa',\r\n '\u653f\u5e9c', '\u89c4\u6a21\u7ecf\u6d4e']\r\n# a list of topics above\r\nlist_topics = [\r\n topic_economics,\r\n topic_energy,\r\n topic_people,\r\n topic_government\r\n]\r\n# if any keyword is the below one, then the keyword is removed from our consideration\r\nfilter_words = ['\u4e2d\u56fd', '\u56fd\u5bb6', '\u5de5\u4f5c', '\u9886\u57df', '\u793e\u4f1a', '\u53d1\u5c55', '\u76ee\u6807', '\u5168\u56fd', '\u65b9\u5f0f', '\u6280\u672f', '\u4ea7\u4e1a', '\u5168\u7403', '\u751f\u6d3b', '\u884c\u52a8', '\u670d\u52a1', '\u541b\u8054',\r\n '\u7814\u7a76', '\u5229\u7528', '\u610f\u89c1']\r\n# dictionaries\r\nlist_country=[\r\n '\u5df4\u897f','\u5370\u5ea6','\u4fc4\u7f57\u65af','\u5357\u975e'\r\n]\r\n# run shell\r\nrun_topic_interaction(\r\n meta_csv_file=meta_csv_file,\r\n raw_text_folder=text_root,\r\n output_folder=\"results/topic_interaction/divided\",\r\n list_category=list_country, # a dictionary where each record contain a group of keywords\r\n stopwords_path=stopwords_path,\r\n weights_folder='results/topic_interaction/weights',\r\n list_keywords_path=list_keywords_path,\r\n label_names=label_names,\r\n list_topics=list_topics,\r\n filter_words=filter_words,\r\n # set field names\r\n tag_field=\"area\",\r\n keyword_field=\"\", # ignore if keyword from csv exists in the text\r\n time_field=\"date\",\r\n id_field=\"fileId\",\r\n prefix_filename=\"text_\",\r\n)\r\n```\r\n\r\nExample 3: Divide datasets by year or year-month\r\n\r\nBy year:\r\n```python\r\nfrom quick_topic.topic_transition.divide_by_year import *\r\ndivide_by_year(\r\n meta_csv_file=\"../datasets/list_g20_news_all_clean.csv\",\r\n raw_text_folder=r\"datasets\\g20_news_processed\",\r\n output_folder=\"results/test1/divided_by_year\",\r\n start_year=2000,\r\n end_year=2021,\r\n)\r\n```\r\n\r\nBy year-month:\r\n```python\r\nfrom quick_topic.topic_transition.divide_by_year_month import *\r\ndivide_by_year_month(\r\n meta_csv_file=\"../datasets/list_g20_news_all_clean.csv\",\r\n raw_text_folder=r\"datasets\\g20_news_processed\",\r\n output_folder=\"results/test1/divided_by_year_month\",\r\n start_year=2000,\r\n end_year=2021\r\n)\r\n```\r\n\r\nExample 4: Show topic transition by year\r\n\r\n```python\r\nfrom quick_topic.topic_transition.transition_by_year_month_topic import *\r\nlabel=\"\u7ecf\u6d4e\"\r\nkeywords=['\u6295\u8d44','\u878d\u8d44','\u7ecf\u6d4e','\u79df\u91d1','\u653f\u5e9c', '\u5c31\u4e1a','\u5c97\u4f4d','\u5de5\u4f5c','\u804c\u4e1a','\u6280\u80fd']\r\nshow_transition_by_year_month_topic(\r\n root_path=\"results/test1/divided_by_year_month\",\r\n label=label,\r\n keywords=keywords,\r\n start_year=2000,\r\n end_year=2021\r\n)\r\n```\r\n\r\nExample 5: Show keyword-based topic transition by year-month for keywords in addition to mean lines\r\n```python\r\nfrom quick_topic.topic_transition.transition_by_year_month_term import *\r\nroot_path = \"results/news_by_year_month\"\r\nselect_keywords = ['\u71c3\u7164', '\u50a8\u80fd', '\u7535\u52a8\u6c7d\u8f66', '\u6c22\u80fd', '\u8131\u78b3', '\u98ce\u7535', '\u6c34\u7535', '\u5929\u7136\u6c14', '\u5149\u4f0f', '\u53ef\u518d\u751f', '\u6e05\u6d01\u80fd\u6e90', '\u6838\u7535']\r\nlist_all_range = [\r\n [[2010, 2015], [2016, 2021]],\r\n [[2011, 2017], [2018, 2021]],\r\n [[2009, 2017], [2018, 2021]],\r\n [[2011, 2016], [2017, 2021]],\r\n [[2017, 2018], [2019, 2021]],\r\n [[2009, 2014], [2015, 2021]],\r\n [[2009, 2014], [2015, 2021]],\r\n [[2009, 2015], [2016, 2021]],\r\n [[2008, 2011], [2012, 2015], [2016, 2021]],\r\n [[2011, 2016], [2017, 2021]],\r\n [[2009, 2012], [2013, 2016], [2017, 2021]],\r\n [[2009, 2015], [2016, 2021]]\r\n]\r\noutput_figure_folder=\"results/figures\"\r\nshow_transition_by_year_month_term(\r\n root_path=\"results/test1/divided_by_year_month\",\r\n select_keywords=select_keywords,\r\n list_all_range=list_all_range,\r\n output_figure_folder=output_figure_folder,\r\n start_year=2000,\r\n end_year=2021\r\n)\r\n```\r\n\r\nExample 6: Get time trends of numbers of documents containing topic keywords with full text.\r\n\r\n```python\r\nfrom quick_topic.topic_trends.trends_by_year_month_fulltext import *\r\n# define a group of topics with keywords, each topic has a label\r\nlabel_names=['\u7ecf\u6d4e','\u80fd\u6e90','\u516c\u6c11','\u653f\u5e9c']\r\nkeywords_economics = ['\u6295\u8d44', '\u878d\u8d44', '\u7ecf\u6d4e', '\u79df\u91d1', '\u653f\u5e9c', '\u5c31\u4e1a', '\u5c97\u4f4d', '\u5de5\u4f5c', '\u804c\u4e1a', '\u6280\u80fd']\r\nkeywords_energy = ['\u7eff\u8272', '\u6392\u653e', '\u6c22\u80fd', '\u751f\u7269\u80fd', '\u5929\u7136\u6c14', '\u98ce\u80fd', '\u77f3\u6cb9', '\u7164\u70ad', '\u7535\u529b', '\u80fd\u6e90', '\u6d88\u8017', '\u77ff\u4ea7', '\u71c3\u6599', '\u7535\u7f51', '\u53d1\u7535']\r\nkeywords_people = ['\u5065\u5eb7', '\u7a7a\u6c14\u6c61\u67d3', '\u5bb6\u5ead', '\u80fd\u6e90\u652f\u51fa', '\u884c\u4e3a', '\u4ef7\u683c', '\u7a7a\u6c14\u6392\u653e\u7269', '\u6b7b\u4ea1', '\u70f9\u996a', '\u652f\u51fa', '\u53ef\u518d\u751f', '\u6db2\u5316\u77f3\u6cb9\u6c14', '\u6c61\u67d3\u7269', '\u56de\u6536',\r\n '\u6536\u5165', '\u516c\u6c11', '\u6c11\u4f17']\r\nkeywords_government = ['\u5b89\u5168', '\u80fd\u6e90\u5b89\u5168', '\u77f3\u6cb9\u5b89\u5168', '\u5929\u7136\u6c14\u5b89\u5168', '\u7535\u529b\u5b89\u5168', '\u57fa\u7840\u8bbe\u65bd', '\u96f6\u552e\u4e1a', '\u56fd\u9645\u5408\u4f5c', '\u7a0e\u6536', '\u7535\u7f51', '\u51fa\u53e3', '\u8f93\u7535', '\u7535\u7f51\u6269\u5efa',\r\n '\u653f\u5e9c', '\u89c4\u6a21\u7ecf\u6d4e']\r\nlist_topics = [\r\n keywords_economics,\r\n keywords_energy,\r\n keywords_people,\r\n keywords_government\r\n]\r\n# call function to show trends of number of documents containing topic keywords each year-month\r\nshow_year_month_trends_with_fulltext(\r\n meta_csv_file=\"datasets/list_country.csv\",\r\n list_topics=list_topics,\r\n label_names=label_names,\r\n save_result_path=\"results/topic_trends/trends_fulltext.csv\",\r\n minimum_year=2010,\r\n raw_text_path=r\"datasets/raw_text\",\r\n id_field='fileId',\r\n time_field='date',\r\n prefix_filename=\"text_\"\r\n)\r\n```\r\n\r\nExample 7: Estimate the correlation between two trends\r\n```python\r\nfrom quick_topic.topic_trends_correlation.topic_trends_correlation_two import *\r\ntrends_file=\"results/topic_trends/trends_fulltext.csv\"\r\nlabel_names=['\u7ecf\u6d4e','\u80fd\u6e90','\u516c\u6c11','\u653f\u5e9c']\r\nlist_result=[]\r\nlist_line=[]\r\nfor i in range(0,len(label_names)-1):\r\n for j in range(i+1,len(label_names)):\r\n label1=label_names[i]\r\n label2=label_names[j]\r\n result=estimate_topic_trends_correlation_single_file(\r\n trend_file=trends_file,\r\n selected_field1=label1,\r\n selected_field2=label2,\r\n start_year=2010,\r\n end_year=2021,\r\n show_figure=False,\r\n time_field='Time'\r\n )\r\n list_result=[]\r\n line=f\"({label1},{label2})\\t{result['pearson'][0]}\\t{result['pearson'][1]}\"\r\n list_line.append(line)\r\n print()\r\nprint(\"Correlation analysis resutls:\")\r\nprint(\"Pair\\tPearson-Stat\\tP-value\")\r\nfor line in list_line:\r\n print(line)\r\n```\r\n\r\nExample 8: Estimate topic similarity between two groups of LDA topics\r\n```python\r\nfrom quick_topic.topic_modeling.lda import build_lda_models\r\nfrom quick_topic.topic_similarity.topic_similarity_by_category import *\r\n# Step 1: build topic models\r\nmeta_csv_file=\"datasets/list_country.csv\"\r\nraw_text_folder=\"datasets/raw_text\"\r\nlist_term_file = [\r\n \"../datasets/keywords/countries.csv\",\r\n \"../datasets/keywords/leaders_unique_names.csv\",\r\n \"../datasets/keywords/carbon2.csv\"\r\n ]\r\nstop_words_path = \"../datasets/stopwords/hit_stopwords.txt\"\r\nlist_category = build_lda_models(\r\n meta_csv_file=meta_csv_file,\r\n raw_text_folder=raw_text_folder,\r\n output_folder=\"results/topic_similarity_two/topics\",\r\n list_term_file=list_term_file,\r\n stopwords_path=stop_words_path,\r\n prefix_filename=\"text_\",\r\n num_topics=6,\r\n num_words=50\r\n)\r\n# Step 2: estimate similarity\r\noutput_folder = \"results/topic_similarity_two/topics\"\r\nkeywords_file=\"../datasets/keywords/carbon2.csv\"\r\nestimate_topic_similarity(\r\n list_topic=list_category,\r\n topic_folder=output_folder,\r\n list_keywords_file=keywords_file,\r\n)\r\n```\r\n\r\nExample 9: Stat sentence numbers by keywords\r\n\r\n```python\r\nfrom quick_topic.topic_stat.stat_by_keyword import *\r\nmeta_csv_file='datasets/list_country.csv'\r\nraw_text_folder=\"datasets/raw_text\"\r\nkeywords_energy = ['\u7164\u70ad', '\u5929\u7136\u6c14', '\u77f3\u6cb9', '\u751f\u7269', '\u592a\u9633\u80fd', '\u98ce\u80fd', '\u6c22\u80fd', '\u6c34\u529b', '\u6838\u80fd']\r\nstat_sentence_by_keywords(\r\n meta_csv_file=meta_csv_file,\r\n keywords=keywords_energy,\r\n id_field=\"fileId\",\r\n raw_text_folder=raw_text_folder,\r\n contains_keyword_in_sentence='',\r\n prefix_file_name='text_'\r\n)\r\n```\r\n\r\nExample 9: English topic modeling \r\n\r\n```python\r\nfrom quick_topic.topic_visualization.topic_modeling_pipeline import *\r\n## data\r\nmeta_csv_file = \"datasets_en/list_blog_meta.csv\"\r\nraw_text_folder = f\"datasets_en/raw_text\"\r\nstopwords_path = \"\"\r\n## parameters\r\nchinese_font_file = \"\"\r\nnum_topics=8\r\nnum_words=20\r\nn_rows=2\r\nn_cols=4\r\nmax_records=-1\r\n## output\r\nresult_output_folder=f\"results/en_topic{num_topics}\"\r\n\r\nif not os.path.exists(result_output_folder):\r\n os.mkdir(result_output_folder)\r\n\r\nrun_topic_modeling_pipeline(\r\n meta_csv_file=meta_csv_file,\r\n raw_text_folder=raw_text_folder,\r\n stopwords_path=stopwords_path,\r\n top_record_num=max_records,\r\n chinese_font_file=\"\",\r\n num_topics=num_topics,\r\n num_words=num_words,\r\n n_rows=n_rows,n_cols=n_cols,\r\n result_output_folder = result_output_folder,\r\n load_existing_models=False,\r\n lang='en',\r\n prefix_filename=''\r\n )\r\n```\r\n\r\n## License\r\n\r\nThe `quick-topic` toolkit is provided by [Donghua Chen](https://github.com/dhchenx) with MIT License.\r\n\r\n",

"bugtrack_url": null,

"license": "MIT",

"summary": "A toolkit to quickly analyze topic models using various methods",

"version": "1.0.2",

"project_urls": {

"Bug Reports": "https://github.com/dhchenx/quick-topic/issues",

"Homepage": "https://github.com/dhchenx/quick-topic",

"Source": "https://github.com/dhchenx/quick-topic"

},

"split_keywords": [

"topic mining",

"text analysis"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "4d4bf739ea1a10a5bdf627083e5f47e14035d75a21025f9e997e549c71b53cb5",

"md5": "5cb9ab8fbad13cc9df720c16c4aabb0c",

"sha256": "0aa240f47672ec236a84a5a547ca32351a3476acdf30c7594821aea97a00eb30"

},

"downloads": -1,

"filename": "quick_topic-1.0.2-py3-none-any.whl",

"has_sig": false,

"md5_digest": "5cb9ab8fbad13cc9df720c16c4aabb0c",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.6, <4",

"size": 248700,

"upload_time": "2023-07-16T00:39:35",

"upload_time_iso_8601": "2023-07-16T00:39:35.858670Z",

"url": "https://files.pythonhosted.org/packages/4d/4b/f739ea1a10a5bdf627083e5f47e14035d75a21025f9e997e549c71b53cb5/quick_topic-1.0.2-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "2054ce0c4eb13ce6a95c50f6605bfdc7ea799042b37abb39ec10972a3b32c071",

"md5": "2b330eb6bafc1f364ab023891243326f",

"sha256": "4cc52832fece7fd0f7dfafc8f15f8264631007a3fe5dba3a5a34d846bd9ed57e"

},

"downloads": -1,

"filename": "quick-topic-1.0.2.tar.gz",

"has_sig": false,

"md5_digest": "2b330eb6bafc1f364ab023891243326f",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.6, <4",

"size": 159833,

"upload_time": "2023-07-16T00:39:38",

"upload_time_iso_8601": "2023-07-16T00:39:38.155806Z",

"url": "https://files.pythonhosted.org/packages/20/54/ce0c4eb13ce6a95c50f6605bfdc7ea799042b37abb39ec10972a3b32c071/quick-topic-1.0.2.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2023-07-16 00:39:38",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "dhchenx",

"github_project": "quick-topic",

"github_not_found": true,

"lcname": "quick-topic"

}