# RAGulate

A tool for evaluating RAG pipelines

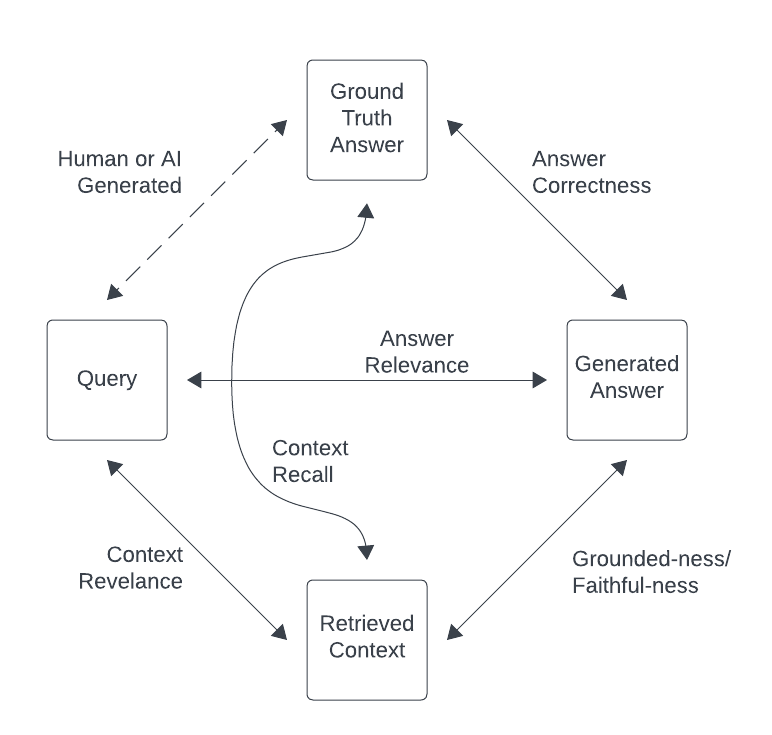

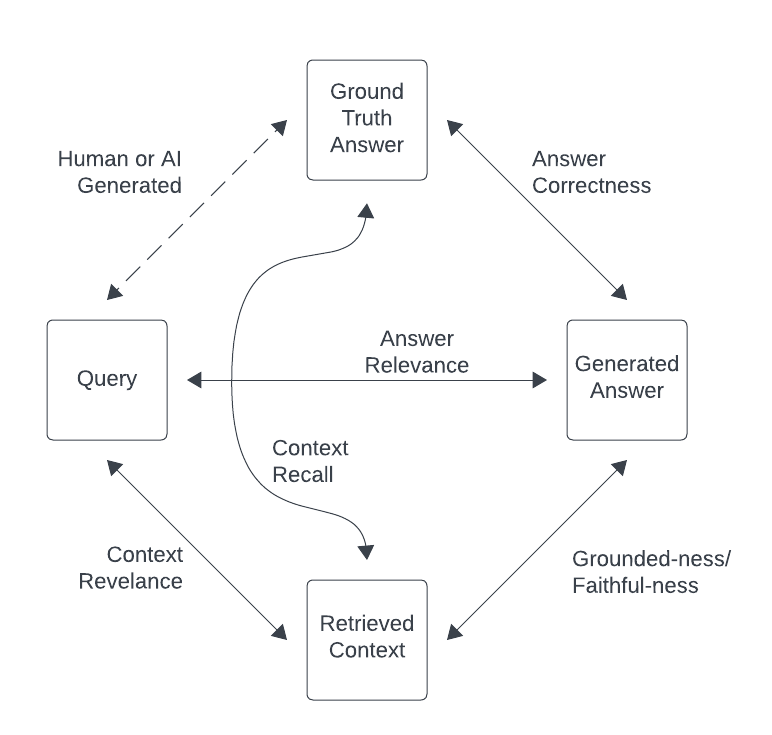

## The Metrics

The RAGulate currently reports 4 relevancy metrics: Answer Correctness, Answer Relevance, Context Relevance, and Groundedness.

* Answer Correctness

* How well does the generated answer match the ground-truth answer?

* This confirms how well the full system performed.

* Answer Relevance

* Is the generated answer relevant to the query?

* This shows if the LLM is responding in a way that is helpful to answer the query.

* Context Relevance:

* Does the retrieved context contain information to answer the query?

* This shows how well the retrieval part of the process is performing.

* Groundedness:

* Is the generated response supported by the context?

* Low scores here indicate that the LLM is hallucinating.

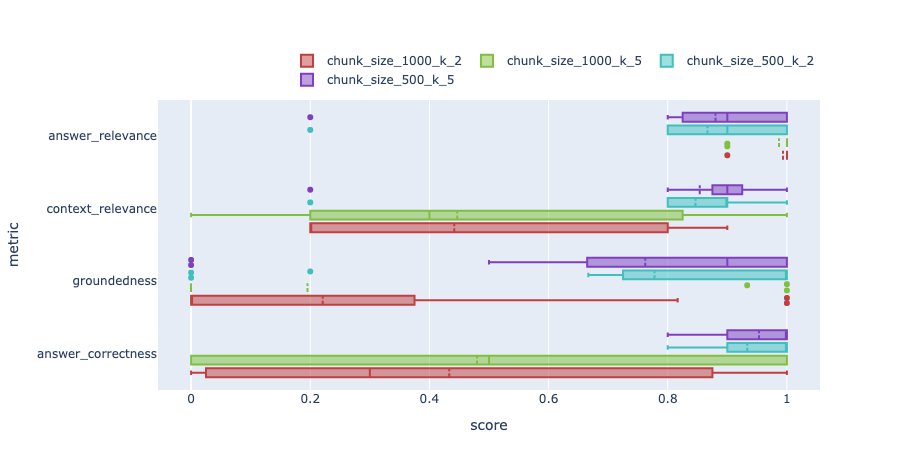

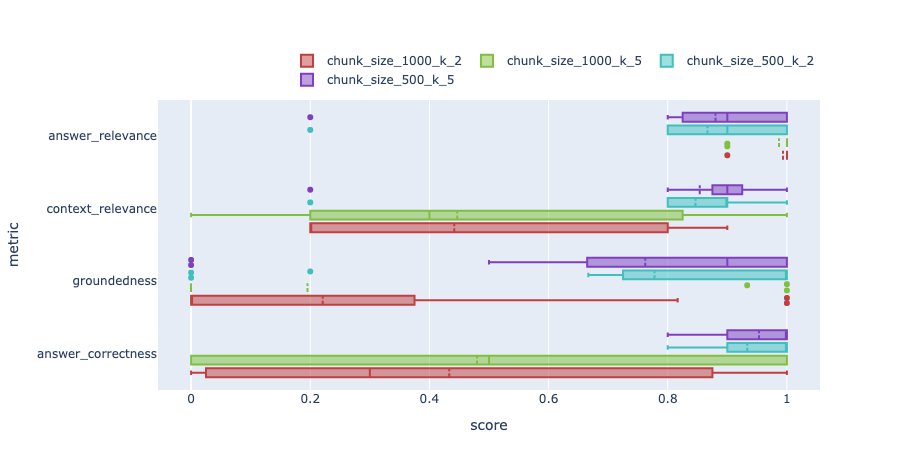

## Example Output

The tool outputs results as images like this:

These images show distribution box plots of the metrics for different test runs.

## Installation

```sh

pip install ragulate

```

## Initial Setup

1. Set your environment variables or create a `.env` file. You will need to set `OPENAI_API_KEY` and

any other environment variables needed by your ingest and query pipelines.

1. Wrap your ingest pipeline in a single python method. The method should take a `file_path` parameter and

any other variables that you will pass during your experimentation. The method should ingest the passed

file into your vector store.

See the `ingest()` method in [open_ai_chunk_size_and_k.py](open_ai_chunk_size_and_k.py) as an example.

This method configures an ingest pipeline using the parameter `chunk_size` and ingests the file passed.

1. Wrap your query pipeline in a single python method, and return it. The method should have parameters for

any variables that you will pass during your experimentation. Currently only LangChain LCEL query pipelines

are supported.

See the `query()` method in [open_ai_chunk_size_and_k.py](open_ai_chunk_size_and_k.py) as an example.

This method returns a LangChain LCEL pipeline configured by the parameters `chunk_size` and `k`.

Note: It is helpful to have a `**kwargs` param in your pipeline method definitions, so that if extra params

are passed, they can be safely ignored.

## Usage

### Summary

```sh

usage: ragulate [-h] {download,ingest,query,compare} ...

RAGu-late CLI tool.

options:

-h, --help show this help message and exit

commands:

download Download a dataset

ingest Run an ingest pipeline

query Run an query pipeline

compare Compare results from 2 (or more) recipes

run Run an experiment from a config file

```

### Example

For the examples below, we will use the example experiment [open_ai_chunk_size_and_k.py](open_ai_chunk_size_and_k.py)

and see how the RAG metrics change for changes in `chunk_size` and `k` (number of documents retrieved).

There are two ways to run Ragulate to run an experiment. Either define an experiment with a config file or execute it manually step by step.

#### Via Config File

**Note: Running via config file is a new feature and it is not as stable as running manually.**

1. Create a yaml config file with a similar format to the example config: [example_config.yaml](example_config.yaml). This defines the same test as shown manually below.

1. Execute it with a single command:

```

ragulate run example_config.yaml

```

This will:

* Download the test datasets

* Run the ingest pipelines

* Run the query pipelines

* Output an analysis of the results.

#### Manually

1. Download a dataset. See available datasets here: https://llamahub.ai/?tab=llama_datasets

* If you are unsure where to start, recommended datasets are:

* `BraintrustCodaHelpDesk`

* `BlockchainSolana`

Examples:

* `ragulate download -k llama BraintrustCodaHelpDesk`

* `ragulate download -k llama BlockchainSolana`

2. Ingest the datasets using different methods:

Examples:

* Ingest with `chunk_size=200`:

```

ragulate ingest -n chunk_size_200 -s open_ai_chunk_size_and_k.py -m ingest \

--var-name chunk_size --var-value 200 --dataset BraintrustCodaHelpDesk --dataset BlockchainSolana

```

* Ingest with `chunk_size=100`:

```

ragulate ingest -n chunk_size_100 -s open_ai_chunk_size_and_k.py -m ingest \

--var-name chunk_size --var-value 100 --dataset BraintrustCodaHelpDesk --dataset BlockchainSolana

```

3. Run query and evaluations on the datasets using methods:

Examples:

* Query with `chunk_size=200` and `k=2`

```

ragulate query -n chunk_size_200_k_2 -s open_ai_chunk_size_and_k.py -m query_pipeline \

--var-name chunk_size --var-value 200 --var-name k --var-value 2 --dataset BraintrustCodaHelpDesk --dataset BlockchainSolana

```

* Query with `chunk_size=100` and `k=2`

```

ragulate query -n chunk_size_100_k_2 -s open_ai_chunk_size_and_k.py -m query_pipeline \

--var-name chunk_size --var-value 100 --var-name k --var-value 2 --dataset BraintrustCodaHelpDesk --dataset BlockchainSolana

```

* Query with `chunk_size=200` and `k=5`

```

ragulate query -n chunk_size_200_k_5 -s open_ai_chunk_size_and_k.py -m query_pipeline \

--var-name chunk_size --var-value 200 --var-name k --var-value 5 --dataset BraintrustCodaHelpDesk --dataset BlockchainSolana

```

* Query with `chunk_size=100` and `k=5`

```

ragulate query -n chunk_size_100_k_5 -s open_ai_chunk_size_and_k.py -m query_pipeline \

--var-name chunk_size --var-value 100 --var-name k --var-value 5 --dataset BraintrustCodaHelpDesk --dataset BlockchainSolana

```

1. Run a compare to get the results:

Example:

```

ragulate compare -r chunk_size_100_k_2 -r chunk_size_200_k_2 -r chunk_size_100_k_5 -r chunk_size_200_k_5

```

This will output 2 png files. one for each dataset.

## Current Limitations

* The evaluation model is locked to OpenAI gpt3.5

* Only LangChain query pipelines are supported

* Only LlamaIndex datasets are supported

* There is no way to specify which metrics to evaluate.

Raw data

{

"_id": null,

"home_page": "https://github.com/datastax/ragstack-ai",

"name": "ragstack-ai-ragulate",

"maintainer": null,

"docs_url": null,

"requires_python": "<3.13,>=3.10",

"maintainer_email": null,

"keywords": null,

"author": "DataStax",

"author_email": null,

"download_url": "https://files.pythonhosted.org/packages/03/49/5440c41bf20d8ca6aa4486a46528a3eec5005efe49280579f1417c49297f/ragstack_ai_ragulate-0.0.13.tar.gz",

"platform": null,

"description": "# RAGulate\n\nA tool for evaluating RAG pipelines\n\n\n\n## The Metrics\n\nThe RAGulate currently reports 4 relevancy metrics: Answer Correctness, Answer Relevance, Context Relevance, and Groundedness.\n\n\n\n\n* Answer Correctness\n * How well does the generated answer match the ground-truth answer?\n * This confirms how well the full system performed.\n* Answer Relevance\n * Is the generated answer relevant to the query?\n * This shows if the LLM is responding in a way that is helpful to answer the query.\n* Context Relevance:\n * Does the retrieved context contain information to answer the query?\n * This shows how well the retrieval part of the process is performing.\n* Groundedness:\n * Is the generated response supported by the context?\n * Low scores here indicate that the LLM is hallucinating.\n\n## Example Output\n\nThe tool outputs results as images like this:\n\n\n\nThese images show distribution box plots of the metrics for different test runs.\n\n## Installation\n\n```sh\npip install ragulate\n```\n\n## Initial Setup\n\n1. Set your environment variables or create a `.env` file. You will need to set `OPENAI_API_KEY` and\n any other environment variables needed by your ingest and query pipelines.\n\n1. Wrap your ingest pipeline in a single python method. The method should take a `file_path` parameter and\n any other variables that you will pass during your experimentation. The method should ingest the passed\n file into your vector store.\n\n See the `ingest()` method in [open_ai_chunk_size_and_k.py](open_ai_chunk_size_and_k.py) as an example.\n This method configures an ingest pipeline using the parameter `chunk_size` and ingests the file passed.\n\n1. Wrap your query pipeline in a single python method, and return it. The method should have parameters for\n any variables that you will pass during your experimentation. Currently only LangChain LCEL query pipelines\n are supported.\n\n See the `query()` method in [open_ai_chunk_size_and_k.py](open_ai_chunk_size_and_k.py) as an example.\n This method returns a LangChain LCEL pipeline configured by the parameters `chunk_size` and `k`.\n\nNote: It is helpful to have a `**kwargs` param in your pipeline method definitions, so that if extra params\n are passed, they can be safely ignored.\n\n## Usage\n\n### Summary\n\n```sh\nusage: ragulate [-h] {download,ingest,query,compare} ...\n\nRAGu-late CLI tool.\n\noptions:\n -h, --help show this help message and exit\n\ncommands:\n download Download a dataset\n ingest Run an ingest pipeline\n query Run an query pipeline\n compare Compare results from 2 (or more) recipes\n run Run an experiment from a config file\n```\n\n### Example\n\nFor the examples below, we will use the example experiment [open_ai_chunk_size_and_k.py](open_ai_chunk_size_and_k.py)\nand see how the RAG metrics change for changes in `chunk_size` and `k` (number of documents retrieved).\n\nThere are two ways to run Ragulate to run an experiment. Either define an experiment with a config file or execute it manually step by step.\n\n#### Via Config File\n\n**Note: Running via config file is a new feature and it is not as stable as running manually.**\n\n1. Create a yaml config file with a similar format to the example config: [example_config.yaml](example_config.yaml). This defines the same test as shown manually below.\n\n1. Execute it with a single command:\n\n ```\n ragulate run example_config.yaml\n ```\n\n This will:\n * Download the test datasets\n * Run the ingest pipelines\n * Run the query pipelines\n * Output an analysis of the results.\n\n\n#### Manually\n\n1. Download a dataset. See available datasets here: https://llamahub.ai/?tab=llama_datasets\n * If you are unsure where to start, recommended datasets are:\n * `BraintrustCodaHelpDesk`\n * `BlockchainSolana`\n\n Examples:\n * `ragulate download -k llama BraintrustCodaHelpDesk`\n * `ragulate download -k llama BlockchainSolana`\n\n2. Ingest the datasets using different methods:\n\n Examples:\n * Ingest with `chunk_size=200`:\n ```\n ragulate ingest -n chunk_size_200 -s open_ai_chunk_size_and_k.py -m ingest \\\n --var-name chunk_size --var-value 200 --dataset BraintrustCodaHelpDesk --dataset BlockchainSolana\n ```\n * Ingest with `chunk_size=100`:\n ```\n ragulate ingest -n chunk_size_100 -s open_ai_chunk_size_and_k.py -m ingest \\\n --var-name chunk_size --var-value 100 --dataset BraintrustCodaHelpDesk --dataset BlockchainSolana\n ```\n\n3. Run query and evaluations on the datasets using methods:\n\n Examples:\n * Query with `chunk_size=200` and `k=2`\n ```\n ragulate query -n chunk_size_200_k_2 -s open_ai_chunk_size_and_k.py -m query_pipeline \\\n --var-name chunk_size --var-value 200 --var-name k --var-value 2 --dataset BraintrustCodaHelpDesk --dataset BlockchainSolana\n ```\n\n * Query with `chunk_size=100` and `k=2`\n ```\n ragulate query -n chunk_size_100_k_2 -s open_ai_chunk_size_and_k.py -m query_pipeline \\\n --var-name chunk_size --var-value 100 --var-name k --var-value 2 --dataset BraintrustCodaHelpDesk --dataset BlockchainSolana\n ```\n\n * Query with `chunk_size=200` and `k=5`\n ```\n ragulate query -n chunk_size_200_k_5 -s open_ai_chunk_size_and_k.py -m query_pipeline \\\n --var-name chunk_size --var-value 200 --var-name k --var-value 5 --dataset BraintrustCodaHelpDesk --dataset BlockchainSolana\n ```\n\n * Query with `chunk_size=100` and `k=5`\n ```\n ragulate query -n chunk_size_100_k_5 -s open_ai_chunk_size_and_k.py -m query_pipeline \\\n --var-name chunk_size --var-value 100 --var-name k --var-value 5 --dataset BraintrustCodaHelpDesk --dataset BlockchainSolana\n ```\n\n1. Run a compare to get the results:\n\n Example:\n ```\n ragulate compare -r chunk_size_100_k_2 -r chunk_size_200_k_2 -r chunk_size_100_k_5 -r chunk_size_200_k_5\n ```\n\n This will output 2 png files. one for each dataset.\n\n## Current Limitations\n\n* The evaluation model is locked to OpenAI gpt3.5\n* Only LangChain query pipelines are supported\n* Only LlamaIndex datasets are supported\n* There is no way to specify which metrics to evaluate.\n\n",

"bugtrack_url": null,

"license": "Apache 2.0",

"summary": "A tool for evaluating RAG pipelines",

"version": "0.0.13",

"project_urls": {

"Documentation": "https://docs.datastax.com/en/ragstack",

"Homepage": "https://github.com/datastax/ragstack-ai",

"Repository": "https://github.com/datastax/ragstack-ai"

},

"split_keywords": [],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "6e9985f4eacc1400f442b2cc54333de9847be27171be715a1b56c0ecd5750312",

"md5": "aeb9126e4d14c493cae10f2f083e42f7",

"sha256": "c4a77328e76932abcd11c2ac2c2f09d7dbe3bac397aa64f5281f76e212ff02d3"

},

"downloads": -1,

"filename": "ragstack_ai_ragulate-0.0.13-py3-none-any.whl",

"has_sig": false,

"md5_digest": "aeb9126e4d14c493cae10f2f083e42f7",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": "<3.13,>=3.10",

"size": 27454,

"upload_time": "2024-06-28T11:28:33",

"upload_time_iso_8601": "2024-06-28T11:28:33.061939Z",

"url": "https://files.pythonhosted.org/packages/6e/99/85f4eacc1400f442b2cc54333de9847be27171be715a1b56c0ecd5750312/ragstack_ai_ragulate-0.0.13-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "03495440c41bf20d8ca6aa4486a46528a3eec5005efe49280579f1417c49297f",

"md5": "2a93695e685058ddb45af3e2f7f488d7",

"sha256": "084bfbc551c9d86e909bcf4ee62bb0260a59f61fdc2f72993e301fcbc885c223"

},

"downloads": -1,

"filename": "ragstack_ai_ragulate-0.0.13.tar.gz",

"has_sig": false,

"md5_digest": "2a93695e685058ddb45af3e2f7f488d7",

"packagetype": "sdist",

"python_version": "source",

"requires_python": "<3.13,>=3.10",

"size": 20058,

"upload_time": "2024-06-28T11:28:34",

"upload_time_iso_8601": "2024-06-28T11:28:34.644897Z",

"url": "https://files.pythonhosted.org/packages/03/49/5440c41bf20d8ca6aa4486a46528a3eec5005efe49280579f1417c49297f/ragstack_ai_ragulate-0.0.13.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-06-28 11:28:34",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "datastax",

"github_project": "ragstack-ai",

"travis_ci": false,

"coveralls": false,

"github_actions": true,

"tox": true,

"lcname": "ragstack-ai-ragulate"

}