| Field | Value |
| ---- | --- |
| Name | rlportfolio |
| Version | 0.2.1 |
| home_page | None |
| Summary | Reinforcement learning framework for portfolio optimization tasks. |
| upload_time | 2025-01-16 04:39:00 |
| maintainer | None |
| docs_url | None |
| author | None |
| requires_python | >=3.9 |
| license | The MIT License (MIT) Copyright (c) 2024 Caio de Souza Barbosa Costa. Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions: The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software. THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE. |
| keywords | deep-learning, reinforcement-learning, pytorch, finance, portfolio-optimization, portfolio-management, asset-allocation |
| requirements | No requirements were recorded. |
| Travis-CI | No Travis. |
| coveralls test coverage | No coveralls. |

------------------------------------------

RLPortfolio is a Python package which provides several features to implement, train and test reinforcement learning agents that optimize a financial portfolio:

- A training simulation environment that implements the state-of-the-art mathematical formulation commonly used in the research field.

- Two policy gradient training algorithms that are specifically built to solve the portfolio optimization task.

- Four cutting-edge deep neural networks implemented in PyTorch that can be used as the agent policy.

[Click here to access the library documentation!](https://rlportfolio.readthedocs.io/en/latest/)

**Note**: This project is mainly intended for academic purposes. Therefore, be careful if using RLPortfolio to trade real money and consult a professional before investing, if possible.

## About RLPortfolio

This library is composed of the following components:

| Component | Description |
| ---- | --- |
| **rlportfolio.algorithm** | A compilation of training algorithms designed for portfolio optimization agents. |
| **rlportfolio.data** | Functions and classes to perform data preprocessing. |
| **rlportfolio.environment** | Reinforcement learning environment used for training. |
| **rlportfolio.policy** | A collection of deep neural networks to be used in the agent. |
| **rlportfolio.utils** | Utility functions for convenience. |

### A Modular Library

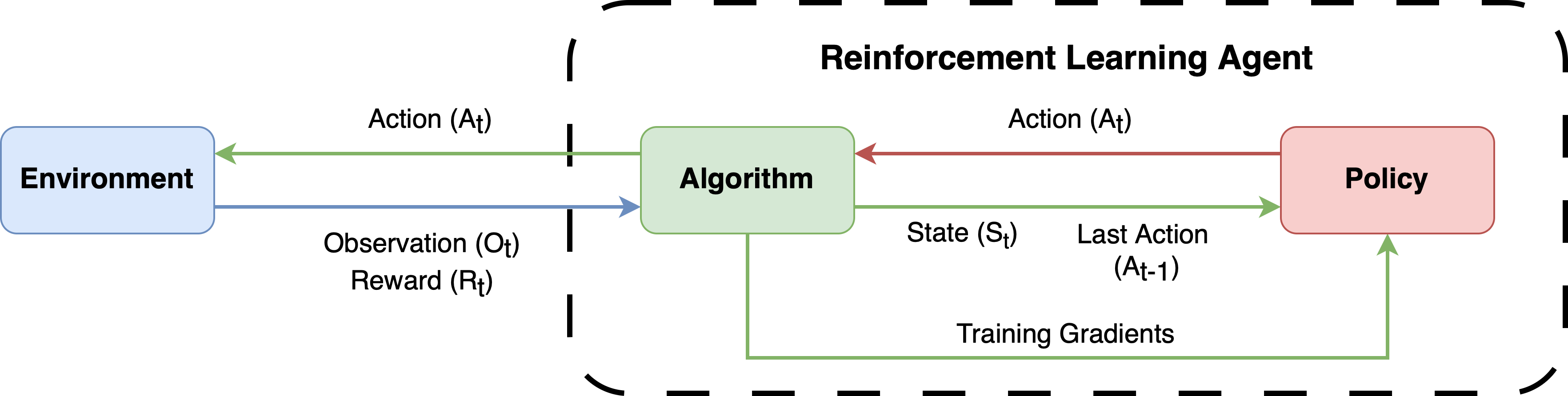

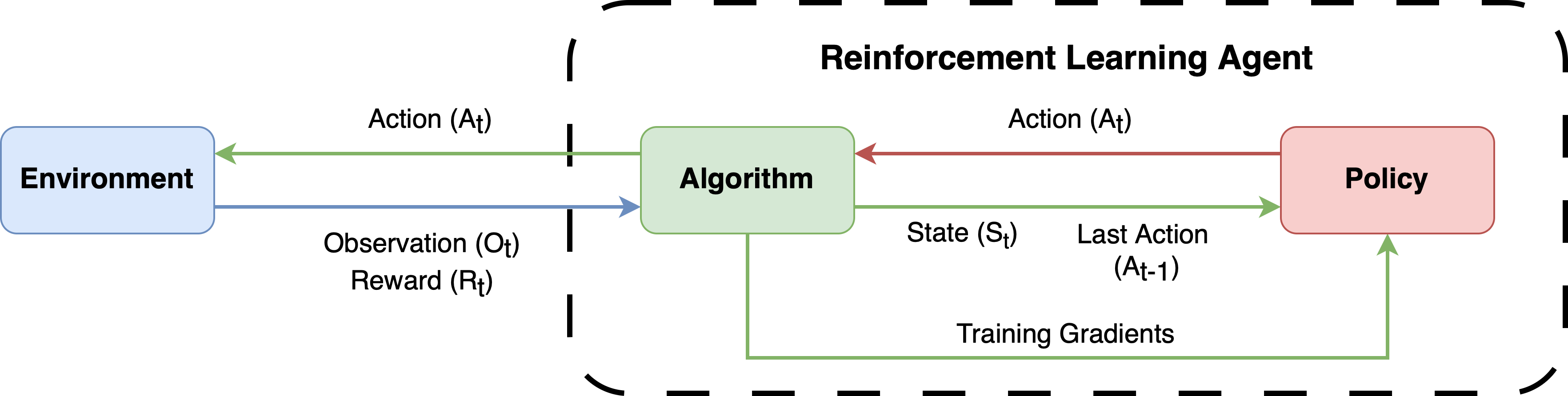

RLPortfolio is implemented with a modular architecture in mind so that it can be used in conjunction with several other libraries. To effectively train an agent, you need three constituents:

- A training algorithm.

- A simulation environment.

- A policy neural network (depending on the algorithm, a critic neural network might also be necessary).

The figure above shows the dynamics between these components. All of them are present in this library, but users are free to use other libraries or custom implementations.
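As a rough illustration of how these constituents interact, the sketch below runs one episode of a toy environment with a fixed policy. Everything in it is hypothetical: `ToyEnv`, `uniform_policy` and `run_episode` are not part of RLPortfolio, and the only thing borrowed from a real library is the shape of the Gymnasium-style `reset`/`step` interface. A training algorithm would sit around this loop and use the collected rewards to update the policy.

```python
class ToyEnv:
    """Hypothetical stand-in for a simulation environment (Gymnasium-style API)."""

    def __init__(self, price_relatives, initial_value=100000.0):
        # price_relatives[t][i] is the price of asset i at step t divided by
        # its price at step t - 1 (the first entry is only used as observation).
        self.price_relatives = price_relatives
        self.initial_value = initial_value

    def reset(self):
        self.t = 0
        self.value = self.initial_value
        return self.price_relatives[0], {}

    def step(self, weights):
        # Advance one period and apply its price changes to the portfolio.
        self.t += 1
        relatives = self.price_relatives[self.t]
        growth = sum(w * y for w, y in zip(weights, relatives))
        self.value *= growth
        terminated = self.t == len(self.price_relatives) - 1
        # observation, reward, terminated, truncated, info
        return relatives, growth - 1.0, terminated, False, {}


def uniform_policy(observation):
    """Hypothetical policy: allocate the same weight to every asset."""
    n = len(observation)
    return [1.0 / n] * n


def run_episode(env, policy):
    """Let the policy act in the environment until the episode ends."""
    observation, _ = env.reset()
    done = False
    while not done:
        action = policy(observation)
        observation, reward, terminated, truncated, _ = env.step(action)
        done = terminated or truncated
    return env.value


env = ToyEnv([[1.0, 1.0], [1.1, 0.9], [1.2, 1.0]], initial_value=100.0)
print(run_episode(env, uniform_policy))
```

Because the loop only relies on `reset` and `step`, any of the three pieces can be swapped for another implementation that respects the same interface, which is the point of the modular design described above.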

### Modern Standards and Libraries

Unlike other implementations in this research field, this library uses modern versions of its dependencies ([PyTorch](https://pytorch.org/), [Gymnasium](https://gymnasium.farama.org/), [NumPy](https://numpy.org/) and [pandas](https://pandas.pydata.org/)) and follows standards that allow it to be used in conjunction with other libraries.

### Easy to Use and Customizable

RLPortfolio aims to be easy to use, and its code is heavily documented following the [Google Python Style Guide](https://google.github.io/styleguide/pyguide.html) so that users can understand how to use the classes and functions. Additionally, the training components are highly customizable, so different training routines can be run without directly modifying the code.

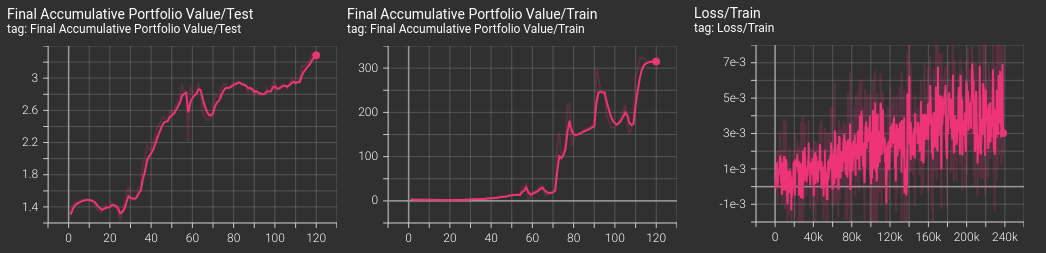

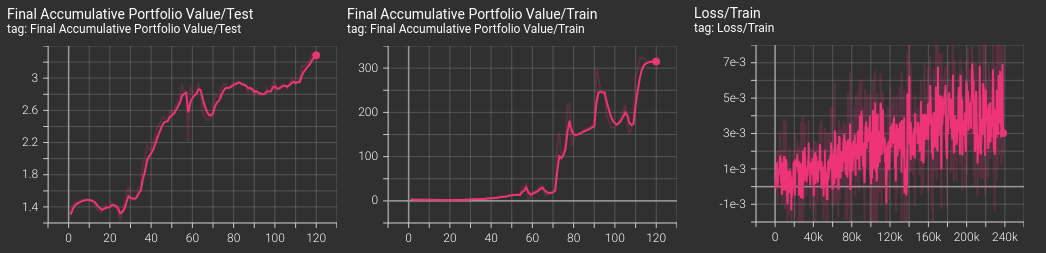

### Integration with TensorBoard

The algorithms implemented in the package are integrated with [TensorBoard](https://www.tensorflow.org/tensorboard/get_started), automatically providing graphs of the main metrics during training, validation and testing.

### Focus on Reliability

To be as reliable as possible, this project places a strong focus on unit testing new implementations. As a result, RLPortfolio can be easily used to reproduce and compare research studies.

## Installation

You can install this package using pip with:

```bash
$ pip install rlportfolio
```

Alternatively, you can install it by cloning this repository and running the following command from its root directory:

```bash
$ pip install .
```
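To confirm that the installation worked, a small check like the one below can help. It only uses the standard library's `importlib.metadata`; the helper name `installed_version` is made up for this example.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package):
    """Return the installed version string of `package`, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Prints the installed version (for example 0.2.1 for the release listed
# on this page), or None when the package is not installed.
print(installed_version("rlportfolio"))
```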

## Interface

RLPortfolio's interface is easy to use. To train an agent, first instantiate an environment object. The environment takes a dataframe containing the price time series of the stocks.
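The exact dataframe layout expected by the environment is defined in the library documentation and is not spelled out here. As a sketch only, the snippet below writes a small price time series in long format (one row per date and ticker) to CSV text. The column names `date`, `tic` and `close`, the tickers and the prices are all assumptions made for illustration.

```python
import csv
import io

# Hypothetical prices for two tickers over three days. The column names
# are assumptions; check the documentation for the names the library expects.
rows = [
    {"date": "2024-01-02", "tic": "AAA", "close": 10.0},
    {"date": "2024-01-02", "tic": "BBB", "close": 20.0},
    {"date": "2024-01-03", "tic": "AAA", "close": 10.5},
    {"date": "2024-01-03", "tic": "BBB", "close": 19.8},
    {"date": "2024-01-04", "tic": "AAA", "close": 10.2},
    {"date": "2024-01-04", "tic": "BBB", "close": 20.4},
]


def to_csv_text(records):
    """Serialize the records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["date", "tic", "close"])
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


print(to_csv_text(rows))
```

Saving that text to a file gives something that `pd.read_csv` can load into a dataframe.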

```python
import pandas as pd
from rlportfolio.environment import PortfolioOptimizationEnv

# dataframe with training data (market price time series)
df_train = pd.read_csv("train_data.csv")

environment = PortfolioOptimizationEnv(
    df_train,  # data to be used
    100000  # initial value of the portfolio
)
```

Then, you can instantiate the policy gradient algorithm to generate an agent that acts in the created environment.

```python
from rlportfolio.algorithm import PolicyGradient

algorithm = PolicyGradient(environment)
```
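In portfolio optimization, an agent's action is commonly a vector of portfolio weights that are non-negative and sum to one. The sketch below shows one usual way to obtain such a vector from raw policy scores, a softmax. It illustrates the general idea under that assumption and is not taken from the library's policy networks; `softmax` here is a plain-Python helper written for this example.

```python
import math


def softmax(scores):
    """Map raw scores to non-negative weights that sum to one."""
    shift = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for three assets.
weights = softmax([0.2, 1.5, -0.3])
print(weights, sum(weights))
```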

Finally, you can train the agent using the defined algorithm through the following method:

```python
# train the algorithm for 10000
algorithm.train(10000)
```

It's now possible to test the agent's performance in another environment which contains data of a different time period.
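If the market data comes as a single dataframe, one way to obtain two non-overlapping periods is a chronological split at a cutoff date. The helper below is a sketch using pandas only; `split_by_date`, the `date` column name and the sample values are assumptions for this example.

```python
import pandas as pd


def split_by_date(df, split_date, time_column="date"):
    """Split a dataframe into rows before `split_date` and rows on or after it."""
    dates = pd.to_datetime(df[time_column])
    cutoff = pd.Timestamp(split_date)
    train = df[dates < cutoff].reset_index(drop=True)
    test = df[dates >= cutoff].reset_index(drop=True)
    return train, test


# Hypothetical data: four consecutive days of closing prices.
df_all = pd.DataFrame(
    {
        "date": ["2024-01-02", "2024-01-03", "2024-01-04", "2024-01-05"],
        "close": [10.0, 10.5, 10.2, 10.8],
    }
)
df_before, df_after = split_by_date(df_all, "2024-01-04")
print(len(df_before), len(df_after))
```

Splitting by time rather than at random keeps the test period strictly after the training period, so the agent is evaluated on data it could not have seen.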

```python
# dataframe with testing data (market price time series)
df_test = pd.read_csv("test_data.csv")

environment_test = PortfolioOptimizationEnv(
    df_test,  # data to be used
    100000  # initial value of the portfolio
)

# test the agent in the test environment
algorithm.test(environment_test)
```

The `test` method returns a dictionary with the metrics of the test.
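The keys of that dictionary are not listed here. To give an idea of the kind of metrics typically reported for a portfolio, the sketch below computes a few common ones from a series of portfolio values. The function `summarize`, the key names and the sample values are hypothetical and may not match what the library returns. The Sharpe ratio is computed per period, without annualization and with a zero risk-free rate.

```python
import math


def summarize(values):
    """Compute a few common performance metrics from a portfolio value series."""
    returns = [values[i] / values[i - 1] - 1.0 for i in range(1, len(values))]
    mean = sum(returns) / len(returns)
    variance = sum((r - mean) ** 2 for r in returns) / len(returns)
    std = math.sqrt(variance)

    # Maximum drawdown: largest relative drop from a running peak.
    peak = values[0]
    max_drawdown = 0.0
    for value in values:
        peak = max(peak, value)
        max_drawdown = max(max_drawdown, (peak - value) / peak)

    return {
        "final_value": values[-1],
        "cumulative_return": values[-1] / values[0] - 1.0,
        "max_drawdown": max_drawdown,
        "sharpe_ratio": mean / std if std > 0 else float("nan"),
    }


# Hypothetical portfolio values over four periods.
print(summarize([100.0, 110.0, 99.0, 120.0]))
```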

Raw data

{

"_id": null,

"home_page": null,

"name": "rlportfolio",

"maintainer": null,

"docs_url": null,

"requires_python": ">=3.9",

"maintainer_email": "Caio de Souza Barbosa Costa <csbc326@gmail.com>",

"keywords": "deep-learning, reinforcement-learning, pytorch, finance, portfolio-optimization, portfolio-management, asset-allocation",

"author": null,

"author_email": "Caio de Souza Barbosa Costa <csbc326@gmail.com>",

"download_url": "https://files.pythonhosted.org/packages/6e/c0/d3925e94cac7150755f4015fcbf35492d13f2f5adfe51f04fa86ba6037b8/rlportfolio-0.2.1.tar.gz",

"platform": null,

"bugtrack_url": null,

"license": "The MIT License (MIT) Copyright (c) 2024 Caio de Souza Barbosa Costa. Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the \"Software\"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions: The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software. THE SOFTWARE IS PROVIDED \"AS IS\", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.",

"summary": "Reinforcement learning framework for portfolio optimization tasks.",

"version": "0.2.1",

"project_urls": {

"Documentation": "https://rlportfolio.readthedocs.io/en/latest/",

"Homepage": "https://github.com/CaioSBC/RLPortfolio",

"Issues": "https://github.com/CaioSBC/RLPortfolio/issues"

},

"split_keywords": [

"deep-learning",

" reinforcement-learning",

" pytorch",

" finance",

" portfolio-optimization",

" portfolio-management",

" asset-allocation"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "33725105db8b98ada5b11677ad70537467436d1560a1ffdd6926e51308148c35",

"md5": "a5d28f848f23deaf1917e8300b852d48",

"sha256": "b3350829a45fff9062cffcd8aa7532a3aaa5e42e148b6e26e18f6f8def84b425"

},

"downloads": -1,

"filename": "rlportfolio-0.2.1-py3-none-any.whl",

"has_sig": false,

"md5_digest": "a5d28f848f23deaf1917e8300b852d48",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.9",

"size": 37475,

"upload_time": "2025-01-16T04:38:58",

"upload_time_iso_8601": "2025-01-16T04:38:58.924900Z",

"url": "https://files.pythonhosted.org/packages/33/72/5105db8b98ada5b11677ad70537467436d1560a1ffdd6926e51308148c35/rlportfolio-0.2.1-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "6ec0d3925e94cac7150755f4015fcbf35492d13f2f5adfe51f04fa86ba6037b8",

"md5": "75283502e30ac10b0ded1a2d33ba2e78",

"sha256": "1d907802dc171d345a1356a0d9a500df89518d0ffa9e1285ac14f1da52c4afdf"

},

"downloads": -1,

"filename": "rlportfolio-0.2.1.tar.gz",

"has_sig": false,

"md5_digest": "75283502e30ac10b0ded1a2d33ba2e78",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.9",

"size": 35229,

"upload_time": "2025-01-16T04:39:00",

"upload_time_iso_8601": "2025-01-16T04:39:00.155729Z",

"url": "https://files.pythonhosted.org/packages/6e/c0/d3925e94cac7150755f4015fcbf35492d13f2f5adfe51f04fa86ba6037b8/rlportfolio-0.2.1.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2025-01-16 04:39:00",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "CaioSBC",

"github_project": "RLPortfolio",

"travis_ci": false,

"coveralls": false,

"github_actions": false,

"lcname": "rlportfolio"

}