| Field | Value |
| --- | --- |
| Name | samexporter |
| Version | 0.4.1 |
| Summary | Exporting Segment Anything models to ONNX format |
| Upload time | 2024-08-03 04:36:01 |
| Requires Python | >=3.10 |
| Author | Viet Anh Nguyen <vietanh.dev@gmail.com> |
| Homepage | https://github.com/vietanhdev/samexporter |
| Bug tracker | https://github.com/vietanhdev/samexporter/issues |
| License | MIT License Copyright (c) 2023 Viet Anh Nguyen Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions: The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software. THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE. |

# SAM Exporter - Now with Segment Anything 2!

Exporting [Segment Anything](https://github.com/facebookresearch/segment-anything), [MobileSAM](https://github.com/ChaoningZhang/MobileSAM), and [Segment Anything 2](https://github.com/facebookresearch/segment-anything-2) into ONNX format for easy deployment.


**Supported models:**

- Segment Anything 2 (Tiny, Small, Base+, Large) - **Note:** Experimental. Only image input is supported for now.

- Segment Anything (SAM ViT-B, SAM ViT-L, SAM ViT-H)

- MobileSAM

## Installation

Requirements:

- Python 3.10+

From PyPI:

```bash
pip install torch==2.4.0 torchvision --index-url https://download.pytorch.org/whl/cpu
pip install samexporter
```

From source:

```bash
pip install torch==2.4.0 torchvision --index-url https://download.pytorch.org/whl/cpu
git clone https://github.com/vietanhdev/samexporter
cd samexporter
pip install -e .
```
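After installing, a quick environment check can save a failed export later. The sketch below is not part of samexporter; it is a hypothetical helper that only uses the Python standard library to confirm the interpreter meets the Python 3.10+ requirement and that `torch`, `torchvision` and `samexporter` can be found without importing them. The module list is an assumption based on the install commands above.

```python
import importlib.util
import sys

# Minimum Python version stated in the requirements above.
MIN_PYTHON = (3, 10)

# Import names of the packages installed above (assumed).
REQUIRED_MODULES = ("torch", "torchvision", "samexporter")


def python_ok(current=None, minimum=MIN_PYTHON):
    """Return True if the (major, minor) version meets the minimum."""
    if current is None:
        current = sys.version_info[:2]
    return tuple(current) >= tuple(minimum)


def module_available(name):
    """Return True if the top-level module `name` can be found, without importing it."""
    return importlib.util.find_spec(name) is not None


def check_environment(modules=REQUIRED_MODULES):
    """Map each check to a boolean result."""
    report = {"python>=3.10": python_ok()}
    for name in modules:
        report[name] = module_available(name)
    return report


if __name__ == "__main__":
    for check, ok in check_environment().items():
        print(f"{check:15} {'OK' if ok else 'MISSING'}")
```

`find_spec` only locates the module, so the check stays fast even for a large package such as `torch`.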

## Convert Segment Anything, MobileSAM to ONNX

- Download Segment Anything from [https://github.com/facebookresearch/segment-anything](https://github.com/facebookresearch/segment-anything).

- Download MobileSAM from [https://github.com/ChaoningZhang/MobileSAM](https://github.com/ChaoningZhang/MobileSAM).

Place the downloaded checkpoints in the `original_models` folder:

```text
original_models
 + sam_vit_b_01ec64.pth
 + sam_vit_h_4b8939.pth
 + sam_vit_l_0b3195.pth
 + mobile_sam.pt
 ...
```

- Convert the SAM-H encoder to ONNX format:

```bash
python -m samexporter.export_encoder --checkpoint original_models/sam_vit_h_4b8939.pth \
    --output output_models/sam_vit_h_4b8939.encoder.onnx \
    --model-type vit_h \
    --quantize-out output_models/sam_vit_h_4b8939.encoder.quant.onnx \
    --use-preprocess
```

- Convert the SAM-H decoder to ONNX format:

```bash
python -m samexporter.export_decoder --checkpoint original_models/sam_vit_h_4b8939.pth \
    --output output_models/sam_vit_h_4b8939.decoder.onnx \
    --model-type vit_h \
    --quantize-out output_models/sam_vit_h_4b8939.decoder.quant.onnx \
    --return-single-mask
```

Omit `--return-single-mask` if you want the decoder to return multiple masks.
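When the decoder returns several candidate masks, a common way to pick one is to keep the candidate with the highest predicted IoU score and threshold its logits. The output names and shapes of samexporter's decoder are not documented here, so this sketch assumes the Segment Anything convention: one grid of mask logits per candidate, one predicted-IoU score per candidate, and a logit threshold of 0.0. SAM's own ONNX export performs a similar selection inside the model when a single mask is requested. The helper names are hypothetical, and plain lists stand in for the NumPy arrays an ONNX runtime would return.

```python
from typing import List, Sequence, Tuple

# One H x W grid of mask logits (in practice these would be NumPy arrays).
Mask = List[List[float]]


def pick_best_mask(masks: Sequence[Mask], scores: Sequence[float]) -> Tuple[int, Mask]:
    """Return (index, mask) of the candidate with the highest predicted IoU score."""
    if not masks or len(masks) != len(scores):
        raise ValueError("expected at least one mask and one score per mask")
    best = max(range(len(scores)), key=lambda i: scores[i])
    return best, masks[best]


def binarize(mask: Mask, threshold: float = 0.0) -> List[List[bool]]:
    """Turn logits into a boolean mask; SAM thresholds its mask logits at 0.0."""
    return [[value > threshold for value in row] for row in mask]


# Toy example: three 2 x 2 candidate masks with hypothetical scores.
candidates = [
    [[-1.0, 0.5], [0.2, -0.3]],
    [[2.0, 1.5], [-0.1, 0.7]],
    [[-2.0, -1.0], [-0.5, 3.0]],
]
scores = [0.71, 0.93, 0.42]
index, best_mask = pick_best_mask(candidates, scores)
print(index, binarize(best_mask))  # 1 [[True, True], [False, True]]
```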

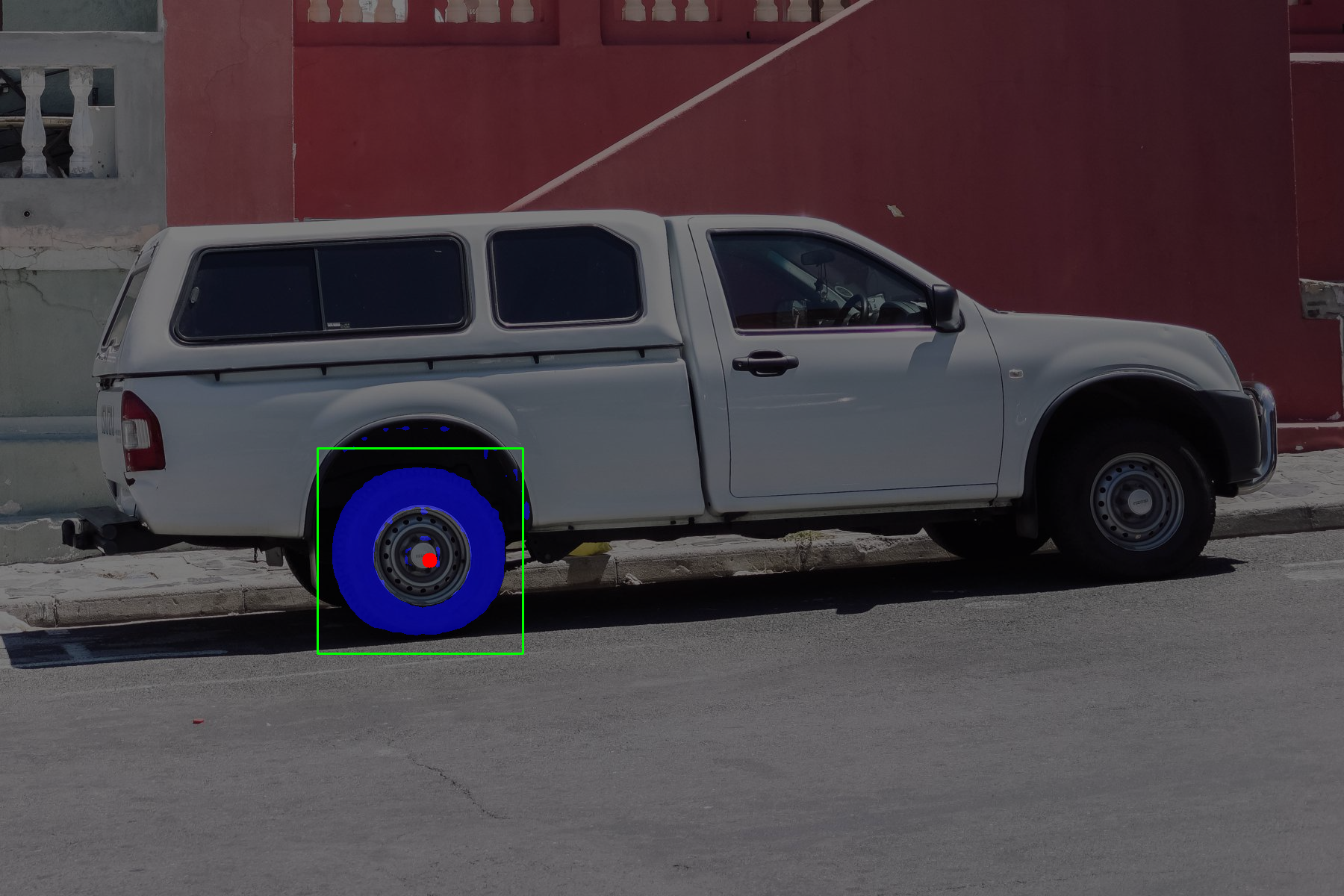

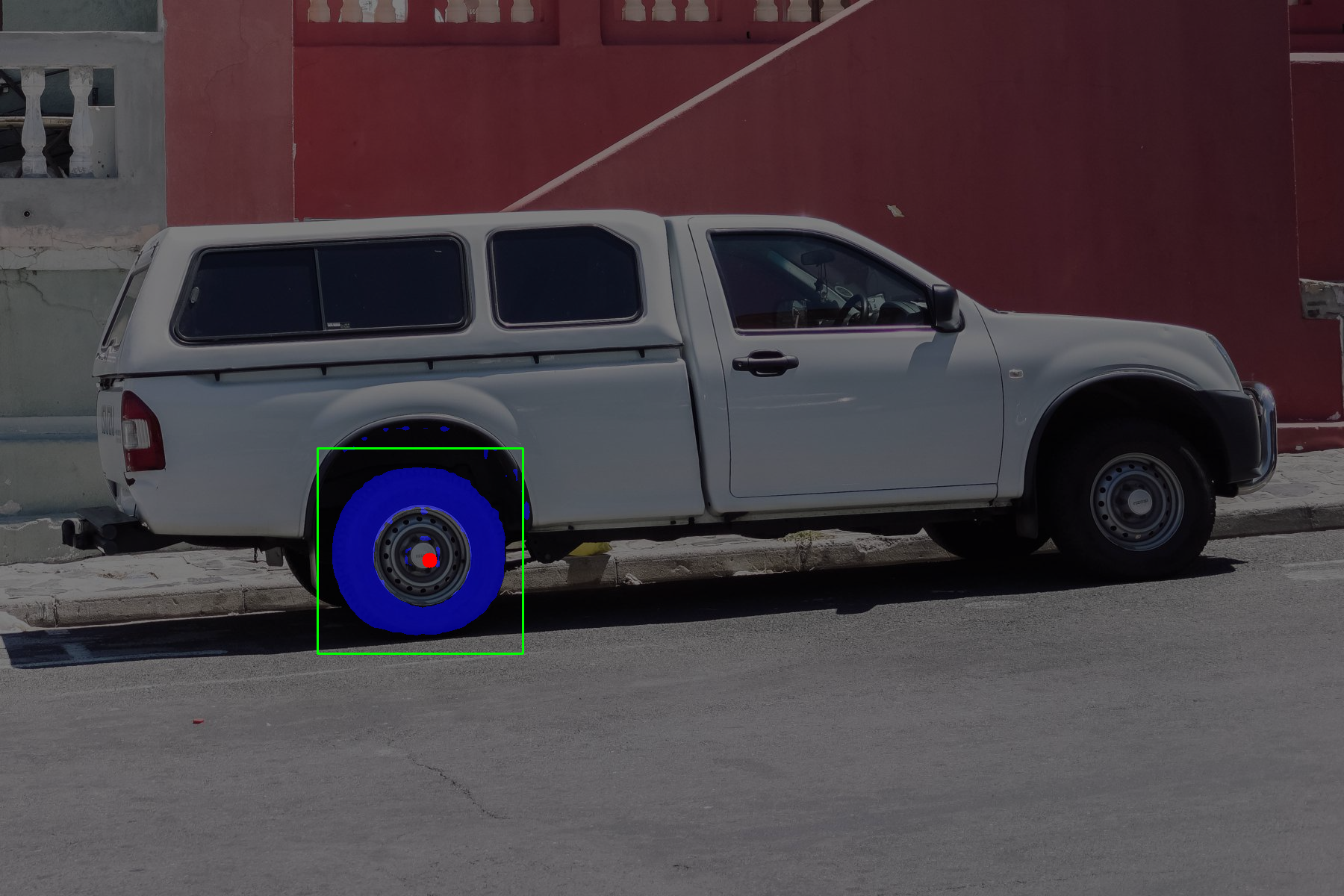

- Run inference with the exported ONNX models:

```bash
python -m samexporter.inference \
    --encoder_model output_models/sam_vit_h_4b8939.encoder.onnx \
    --decoder_model output_models/sam_vit_h_4b8939.decoder.onnx \
    --image images/truck.jpg \
    --prompt images/truck_prompt.json \
    --output output_images/truck.png \
    --show
```

```bash
python -m samexporter.inference \
    --encoder_model output_models/sam_vit_h_4b8939.encoder.onnx \
    --decoder_model output_models/sam_vit_h_4b8939.decoder.onnx \
    --image images/plants.png \
    --prompt images/plants_prompt1.json \
    --output output_images/plants_01.png \
    --show
```

```bash
python -m samexporter.inference \
    --encoder_model output_models/sam_vit_h_4b8939.encoder.onnx \
    --decoder_model output_models/sam_vit_h_4b8939.decoder.onnx \
    --image images/plants.png \
    --prompt images/plants_prompt2.json \
    --output output_images/plants_02.png \
    --show
```
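Prompt points and boxes are given in original image pixels, but the SAM decoder expects them in the coordinate frame of the resized encoder input. The original Segment Anything ONNX example resizes the image so its longest side is 1024 pixels and scales prompt coordinates by the same factor, encodes a box as two extra points labelled 2 (top-left) and 3 (bottom-right), and, when there is no box, appends a padding point at (0, 0) labelled -1. The sketch below reproduces that convention in plain Python. Whether `samexporter.inference` does exactly this internally is an assumption, and the function names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

TARGET_LENGTH = 1024  # longest side of the SAM encoder input


def preprocess_shape(h: int, w: int, target: int = TARGET_LENGTH) -> Tuple[int, int]:
    """Size (H, W) of the image after resizing its longest side to `target`."""
    scale = target / max(h, w)
    return int(h * scale + 0.5), int(w * scale + 0.5)


def build_decoder_prompt(
    points: Sequence[Tuple[float, float]],
    labels: Sequence[int],
    image_hw: Tuple[int, int],
    box: Optional[Tuple[float, float, float, float]] = None,
) -> Tuple[List[Tuple[float, float]], List[int]]:
    """Return (coords, labels) following the SAM ONNX decoder convention.

    labels: 1 = foreground point, 0 = background point,
            2 / 3 = top-left / bottom-right box corner, -1 = padding point.
    """
    coords = [tuple(p) for p in points]
    out_labels = list(labels)
    if box is not None:
        x0, y0, x1, y1 = box
        coords += [(x0, y0), (x1, y1)]
        out_labels += [2, 3]
    else:
        # Without a box, SAM's example appends a dummy point labelled -1.
        coords.append((0.0, 0.0))
        out_labels.append(-1)

    # Scale x by the width ratio and y by the height ratio.
    old_h, old_w = image_hw
    new_h, new_w = preprocess_shape(old_h, old_w)
    scaled = [(x * new_w / old_w, y * new_h / old_h) for x, y in coords]
    return scaled, out_labels


if __name__ == "__main__":
    # Hypothetical 600 x 800 (H x W) image with one background point and a box.
    coords, labels = build_decoder_prompt(
        points=[(400, 300)], labels=[0], image_hw=(600, 800), box=(100, 100, 200, 200)
    )
    print(coords, labels)  # [(512.0, 384.0), (128.0, 128.0), (256.0, 256.0)] [0, 2, 3]
```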

**Short options:**

- Convert all Segment Anything models to ONNX format:

```bash
bash convert_all_meta_sam.sh
```

- Convert MobileSAM to ONNX format:

```bash
bash convert_mobile_sam.sh
```
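The contents of the shortcut scripts are not shown here. As a hypothetical Python equivalent for the three Meta SAM checkpoints, the sketch below builds the same encoder and decoder export commands shown earlier, using only the flags that appear in this README. The output naming pattern follows the SAM-H example and is assumed to apply to the other models; MobileSAM is left out because its `--model-type` value is not given above.

```python
import shlex
from pathlib import Path
from typing import List

# Checkpoint file names from the original_models listing above.
CHECKPOINTS = {
    "vit_b": "sam_vit_b_01ec64.pth",
    "vit_l": "sam_vit_l_0b3195.pth",
    "vit_h": "sam_vit_h_4b8939.pth",
}


def export_commands(model_type: str, src_dir: str = "original_models",
                    out_dir: str = "output_models",
                    single_mask: bool = True) -> List[List[str]]:
    """Build the encoder and decoder export commands for one SAM model."""
    ckpt = CHECKPOINTS[model_type]
    stem = Path(ckpt).stem  # e.g. "sam_vit_h_4b8939"
    checkpoint = str(Path(src_dir) / ckpt)
    out = Path(out_dir)
    encoder = [
        "python", "-m", "samexporter.export_encoder",
        "--checkpoint", checkpoint,
        "--output", str(out / f"{stem}.encoder.onnx"),
        "--model-type", model_type,
        "--quantize-out", str(out / f"{stem}.encoder.quant.onnx"),
        "--use-preprocess",
    ]
    decoder = [
        "python", "-m", "samexporter.export_decoder",
        "--checkpoint", checkpoint,
        "--output", str(out / f"{stem}.decoder.onnx"),
        "--model-type", model_type,
        "--quantize-out", str(out / f"{stem}.decoder.quant.onnx"),
    ]
    if single_mask:
        decoder.append("--return-single-mask")
    return [encoder, decoder]


if __name__ == "__main__":
    # Print the commands; pass each list to subprocess.run(cmd, check=True) to execute.
    for model_type in CHECKPOINTS:
        for cmd in export_commands(model_type):
            print(shlex.join(cmd))
```

Building argument lists instead of shell strings avoids quoting problems if paths ever contain spaces.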

## Convert Segment Anything 2 to ONNX

- Download Segment Anything 2 from [https://github.com/facebookresearch/segment-anything-2.git](https://github.com/facebookresearch/segment-anything-2.git). You can do this with:

```bash
cd original_models
bash download_sam2.sh
```

The models will be downloaded to the `original_models` folder:

```text
original_models
 + sam2_hiera_tiny.pt
 + sam2_hiera_small.pt
 + sam2_hiera_base_plus.pt
 + sam2_hiera_large.pt
 ...
```
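Before converting, it can help to confirm that all four checkpoints were downloaded. This standard-library sketch (the helper name is hypothetical) lists which of the files from the tree above are missing. The default folder path assumes it is run from the directory that contains `original_models`.

```python
from pathlib import Path
from typing import List

# Checkpoint names from the original_models listing above.
SAM2_CHECKPOINTS = (
    "sam2_hiera_tiny.pt",
    "sam2_hiera_small.pt",
    "sam2_hiera_base_plus.pt",
    "sam2_hiera_large.pt",
)


def missing_checkpoints(folder: str = "original_models") -> List[str]:
    """Return the expected SAM 2 checkpoints that are not present in `folder`."""
    root = Path(folder)
    return [name for name in SAM2_CHECKPOINTS if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_checkpoints()
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All SAM 2 checkpoints found.")
```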

- Install the Segment Anything 2 package:

```bash
pip install git+https://github.com/facebookresearch/segment-anything-2.git
```

- Convert all Segment Anything 2 models to ONNX format:

```bash
bash convert_all_meta_sam2.sh
```

- Run inference with the exported ONNX models (only image input is supported for now):

```bash
python -m samexporter.inference \
    --encoder_model output_models/sam2_hiera_tiny.encoder.onnx \
    --decoder_model output_models/sam2_hiera_tiny.decoder.onnx \
    --image images/plants.png \
    --prompt images/truck_prompt_2.json \
    --output output_images/plants_prompt_2_sam2.png \
    --sam_variant sam2 \
    --show
```
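To try several exported SAM 2 sizes on the same input, the inference command can be assembled programmatically. The sketch below reuses only the flags shown in the command above; the `inference_command` helper is hypothetical, and the assumption that the other sizes export to `sam2_hiera_<size>.encoder.onnx` and `sam2_hiera_<size>.decoder.onnx` (mirroring the Tiny example) is not confirmed by this README.

```python
import shlex
from typing import List, Optional

# Stems of the SAM 2 checkpoints listed above; exported file names are assumed
# to follow the sam2_hiera_tiny.encoder.onnx / .decoder.onnx pattern.
SAM2_STEMS = ("sam2_hiera_tiny", "sam2_hiera_small",
              "sam2_hiera_base_plus", "sam2_hiera_large")


def inference_command(model_stem: str, image: str, prompt: str, output: str,
                      sam_variant: Optional[str] = None, show: bool = False,
                      model_dir: str = "output_models") -> List[str]:
    """Build a `python -m samexporter.inference` argument list."""
    cmd = [
        "python", "-m", "samexporter.inference",
        "--encoder_model", f"{model_dir}/{model_stem}.encoder.onnx",
        "--decoder_model", f"{model_dir}/{model_stem}.decoder.onnx",
        "--image", image,
        "--prompt", prompt,
        "--output", output,
    ]
    if sam_variant is not None:
        cmd += ["--sam_variant", sam_variant]
    if show:
        cmd.append("--show")
    return cmd


if __name__ == "__main__":
    for stem in SAM2_STEMS:
        cmd = inference_command(
            stem,
            image="images/plants.png",
            prompt="images/truck_prompt_2.json",
            output=f"output_images/plants_{stem}.png",  # hypothetical output name
            sam_variant="sam2",
        )
        print(shlex.join(cmd))  # or subprocess.run(cmd, check=True)
```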

## Tips

- Use the quantized models (the `*.quant.onnx` files) for faster inference and a smaller model size. Their accuracy may be lower than that of the original models.

- Among the Segment Anything models, SAM-B is the most lightweight but has the lowest accuracy, while SAM-H is the most accurate but has the largest model size. SAM-M is a good trade-off between accuracy and model size.
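As rough arithmetic for the size side of this trade-off: weights stored as 32-bit floats take 4 bytes per parameter, while 8-bit quantized weights take 1 byte, so quantizing the weights shrinks them by up to about 4x. Real ONNX files also hold graph structure and tensors that may stay in floating point, so actual savings can be smaller. The parameter count below is hypothetical, not a measured figure for any SAM model.

```python
# Bytes needed to store one parameter in each format.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_size_mb(num_params: int, dtype: str = "float32") -> float:
    """Approximate weight storage in megabytes (1 MB = 1,000,000 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1_000_000


if __name__ == "__main__":
    params = 100_000_000  # hypothetical parameter count
    full = weight_size_mb(params, "float32")
    quant = weight_size_mb(params, "int8")
    print(f"float32: {full:.0f} MB, int8: {quant:.0f} MB, ratio {full / quant:.1f}x")
    # float32: 400 MB, int8: 100 MB, ratio 4.0x
```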

## AnyLabeling

This package was originally developed for the auto-labeling feature of the [AnyLabeling](https://github.com/vietanhdev/anylabeling) project, but it can be used for other purposes as well.

[AnyLabeling video](https://youtu.be/5qVJiYNX5Kk)

## License

This project is licensed under the MIT License - see the [LICENSE](LICENSE) file for details.

## References

- ONNX-SAM2-Segment-Anything: [https://github.com/ibaiGorordo/ONNX-SAM2-Segment-Anything](https://github.com/ibaiGorordo/ONNX-SAM2-Segment-Anything).
