# Segment Anything

**[Meta AI Research, FAIR](https://ai.facebook.com/research/)**

[Alexander Kirillov](https://alexander-kirillov.github.io/), [Eric Mintun](https://ericmintun.github.io/), [Nikhila Ravi](https://nikhilaravi.com/), [Hanzi Mao](https://hanzimao.me/), Chloe Rolland, Laura Gustafson, [Tete Xiao](https://tetexiao.com), [Spencer Whitehead](https://www.spencerwhitehead.com/), Alex Berg, Wan-Yen Lo, [Piotr Dollar](https://pdollar.github.io/), [Ross Girshick](https://www.rossgirshick.info/)

[[`Paper`](https://ai.facebook.com/research/publications/segment-anything/)] [[`Project`](https://segment-anything.com/)] [[`Demo`](https://segment-anything.com/demo)] [[`Dataset`](https://segment-anything.com/dataset/index.html)] [[`Blog`](https://ai.facebook.com/blog/segment-anything-foundation-model-image-segmentation/)] [[`BibTeX`](#citing-segment-anything)]

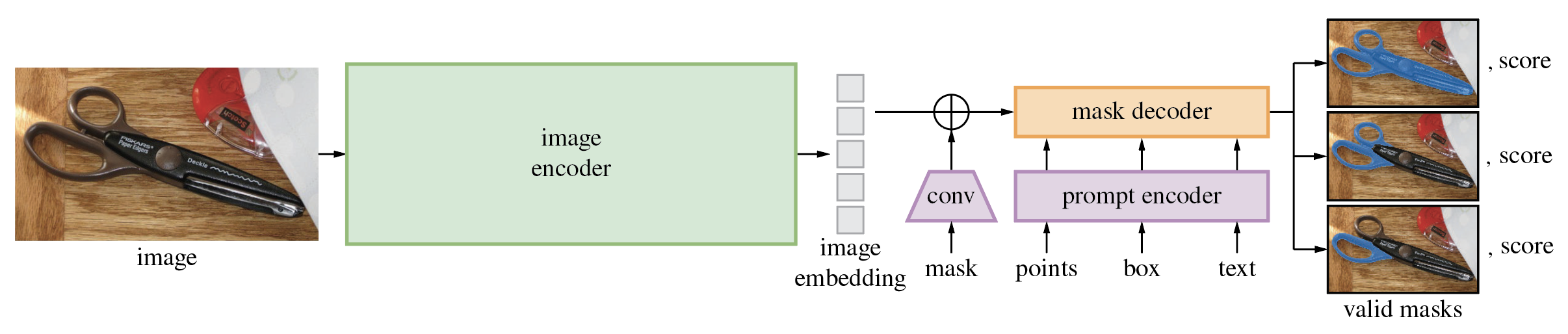

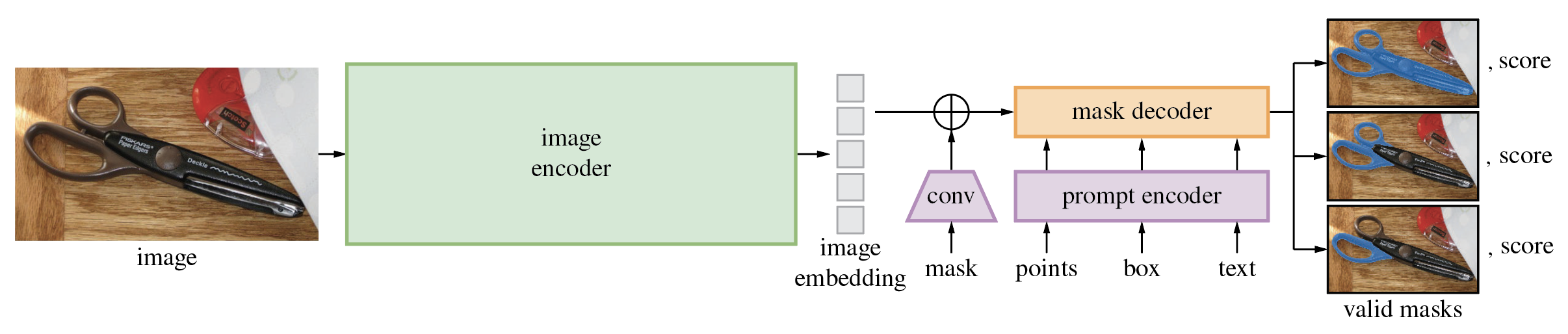

The **Segment Anything Model (SAM)** produces high-quality object masks from input prompts such as points or boxes, and it can be used to generate masks for all objects in an image. It has been trained on a [dataset](https://segment-anything.com/dataset/index.html) of 11 million images and 1.1 billion masks, and has strong zero-shot performance on a variety of segmentation tasks.

<p float="left">
  <img src="https://raw.githubusercontent.com/opengeos/segment-anything/pypi/assets/masks1.png?raw=true" width="37.25%" />
  <img src="https://raw.githubusercontent.com/opengeos/segment-anything/pypi/assets/masks2.jpg?raw=true" width="61.5%" />
</p>

## Disclaimer

I am not the author of the original implementation. This repository distributes the original implementation as an installable Python package. If you have any questions about the original implementation, please refer to [facebookresearch/segment-anything](https://github.com/facebookresearch/segment-anything). The original implementation is licensed under the Apache License, Version 2.0.

## Installation

The code requires `python>=3.8`, as well as `pytorch>=1.7` and `torchvision>=0.8`. Please follow the instructions [here](https://pytorch.org/get-started/locally/) to install both PyTorch and TorchVision dependencies. Installing both PyTorch and TorchVision with CUDA support is strongly recommended.

Install Segment Anything using pip:

```bash
pip install segment-anything-py
```

or install Segment Anything using mamba:

```bash
mamba install -c conda-forge segment-anything
```

or install directly from the original GitHub repository:

```bash
pip install git+https://github.com/facebookresearch/segment-anything.git
```

The following optional dependencies are necessary for mask post-processing, saving masks in COCO format, the example notebooks, and exporting the model in ONNX format. `jupyter` is also required to run the example notebooks.

```bash
pip install opencv-python pycocotools matplotlib onnxruntime onnx
```
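As a quick sanity check after installation, the sketch below reports which of the required and optional packages can be found in the current environment. The import names (for example `cv2` for `opencv-python`) and the helper name `check_dependencies` are assumptions made for illustration; they are not part of this package.

```python
import importlib.util
import sys

# Import names are assumed from the pip package names listed above
# (opencv-python is imported as cv2).
REQUIRED = ["torch", "torchvision"]
OPTIONAL = ["cv2", "pycocotools", "matplotlib", "onnxruntime", "onnx"]


def check_dependencies(names):
    """Map each top-level module name to True if it can be found, else False."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    print("Python >= 3.8:", sys.version_info >= (3, 8))
    for name, found in check_dependencies(REQUIRED + OPTIONAL).items():
        print(f"{name}: {'found' if found else 'missing'}")
```

This only checks that the modules can be located; it does not verify the minimum versions stated above or whether PyTorch was built with CUDA support.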

## <a name="GettingStarted"></a>Getting Started

First download a [model checkpoint](#model-checkpoints). Then the model can be used in just a few lines to get masks from a given prompt:

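```python
from segment_anything import SamPredictor, sam_model_registry

# Load the model from a downloaded checkpoint.
sam = sam_model_registry["<model_type>"](checkpoint="<path/to/checkpoint>")
predictor = SamPredictor(sam)
# Compute the image embedding once (HWC uint8 image, RGB by default).
predictor.set_image(<your_image>)
# Query the embedded image with prompts such as points or a box.
masks, _, _ = predictor.predict(<input_prompts>)
```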
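`predict` returns three values: the masks, a quality score for each mask, and low-resolution logits. A minimal sketch of a single-click prompt follows, assuming an image has already been set on the predictor. The helper names `pick_best_mask` and `segment_from_click` are hypothetical and not part of the package.

```python
def pick_best_mask(masks, scores):
    """Return (mask, score) for the candidate with the highest quality score."""
    if len(masks) != len(scores) or len(scores) == 0:
        raise ValueError("masks and scores must be non-empty and the same length")
    best = max(range(len(scores)), key=lambda i: float(scores[i]))
    return masks[best], float(scores[best])


def segment_from_click(predictor, x, y):
    """Prompt a SamPredictor with one foreground point at pixel (x, y)."""
    import numpy as np  # imported here so pick_best_mask works without NumPy

    masks, scores, _ = predictor.predict(
        point_coords=np.array([[x, y]]),
        point_labels=np.array([1]),  # 1 = foreground point, 0 = background point
        multimask_output=True,       # ask for several candidate masks
    )
    return pick_best_mask(masks, scores)
```

A single point is often ambiguous (a part versus the whole object), which is why this sketch requests multiple candidates and keeps the one the model scores highest.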

or generate masks for an entire image:

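```python
from segment_anything import SamAutomaticMaskGenerator, sam_model_registry

# Load the model from a downloaded checkpoint.
sam = sam_model_registry["<model_type>"](checkpoint="<path/to/checkpoint>")
mask_generator = SamAutomaticMaskGenerator(sam)
# Returns a list with one record per generated mask (HWC uint8 image as input).
masks = mask_generator.generate(<your_image>)
```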
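The generated records can then be filtered before further use. The sketch below assumes each record is a dictionary carrying `area` and `predicted_iou` keys, like the annotation format described in the Dataset section. The function name `filter_masks` and the threshold values are hypothetical.

```python
def filter_masks(records, min_area=100, min_iou=0.9):
    """Keep records that pass both thresholds, largest area first."""
    kept = [
        r for r in records
        if r["area"] >= min_area and r["predicted_iou"] >= min_iou
    ]
    return sorted(kept, key=lambda r: r["area"], reverse=True)


# Hand-written records for illustration; real ones come from generate().
example = [
    {"area": 50, "predicted_iou": 0.95},
    {"area": 500, "predicted_iou": 0.92},
    {"area": 2000, "predicted_iou": 0.99},
]
print([r["area"] for r in filter_masks(example)])
```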

Additionally, masks can be generated for images from the command line:

```bash
python scripts/amg.py --checkpoint <path/to/checkpoint> --model-type <model_type> --input <image_or_folder> --output <path/to/output>
```

See the example notebooks on [using SAM with prompts](https://github.com/opengeos/segment-anything/blob/pypi/notebooks/predictor_example.ipynb) and [automatically generating masks](https://github.com/opengeos/segment-anything/blob/pypi/notebooks/automatic_mask_generator_example.ipynb) for more details.

<p float="left">
  <img src="https://raw.githubusercontent.com/opengeos/segment-anything/pypi/assets/notebook1.png?raw=true" width="49.1%" />
  <img src="https://raw.githubusercontent.com/opengeos/segment-anything/pypi/assets/notebook2.png?raw=true" width="48.9%" />
</p>

## ONNX Export

SAM's lightweight mask decoder can be exported to ONNX format so that it can be run in any environment that supports ONNX Runtime, such as in a browser, as showcased in the [demo](https://segment-anything.com/demo). Export the model with:

```bash
python scripts/export_onnx_model.py --checkpoint <path/to/checkpoint> --model-type <model_type> --output <path/to/output>
```

See the [example notebook](https://github.com/facebookresearch/segment-anything/blob/main/notebooks/onnx_model_example.ipynb) for details on how to combine image preprocessing via SAM's backbone with mask prediction using the ONNX model. It is recommended to use the latest stable version of PyTorch for ONNX export.

### Web demo

The `demo/` folder has a simple single-page React app that shows how to run mask prediction with the exported ONNX model in a web browser with multithreading. Please see [`demo/README.md`](https://github.com/facebookresearch/segment-anything/blob/main/demo/README.md) for more details.

## <a name="Models"></a>Model Checkpoints

Three versions of the model are available with different backbone sizes. These models can be instantiated by running

```python
from segment_anything import sam_model_registry

sam = sam_model_registry["<model_type>"](checkpoint="<path/to/checkpoint>")
```

Click the links below to download the checkpoint for the corresponding model type.

- **`default` or `vit_h`: [ViT-H SAM model.](https://dl.fbaipublicfiles.com/segment_anything/sam_vit_h_4b8939.pth)**

- `vit_l`: [ViT-L SAM model.](https://dl.fbaipublicfiles.com/segment_anything/sam_vit_l_0b3195.pth)

- `vit_b`: [ViT-B SAM model.](https://dl.fbaipublicfiles.com/segment_anything/sam_vit_b_01ec64.pth)
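If you prefer to fetch a checkpoint from a script, the sketch below maps each model type to the download link listed above and saves the file locally with the standard library. The names `CHECKPOINT_URLS`, `checkpoint_url`, and `download_checkpoint` are hypothetical helpers, not part of the package.

```python
import os
import urllib.request

_BASE = "https://dl.fbaipublicfiles.com/segment_anything/"

# Model types and file names taken from the list above.
CHECKPOINT_URLS = {
    "vit_h": _BASE + "sam_vit_h_4b8939.pth",
    "vit_l": _BASE + "sam_vit_l_0b3195.pth",
    "vit_b": _BASE + "sam_vit_b_01ec64.pth",
}
CHECKPOINT_URLS["default"] = CHECKPOINT_URLS["vit_h"]


def checkpoint_url(model_type):
    """Return the download URL for a model type, or raise ValueError."""
    try:
        return CHECKPOINT_URLS[model_type]
    except KeyError:
        raise ValueError(f"unknown model type: {model_type!r}") from None


def download_checkpoint(model_type, directory="."):
    """Download the checkpoint unless it already exists; return the local path."""
    url = checkpoint_url(model_type)
    path = os.path.join(directory, os.path.basename(url))
    if not os.path.exists(path):
        urllib.request.urlretrieve(url, path)
    return path
```

The returned path can be passed as the `checkpoint` argument, for example `sam_model_registry["vit_b"](checkpoint=download_checkpoint("vit_b"))`.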

## Dataset

See [here](https://ai.facebook.com/datasets/segment-anything/) for an overview of the dataset. The dataset can be downloaded [here](https://ai.facebook.com/datasets/segment-anything-downloads/). By downloading the datasets you agree that you have read and accepted the terms of the SA-1B Dataset Research License.

We save the masks for each image as a JSON file. It can be loaded as a dictionary in Python with the following format.

```python
{
    "image" : image_info,
    "annotations" : [annotation],
}

image_info {
    "image_id" : int, # Image id
    "width" : int, # Image width
    "height" : int, # Image height
    "file_name" : str, # Image filename
}

annotation {
    "id" : int, # Annotation id
    "segmentation" : dict, # Mask saved in COCO RLE format.
    "bbox" : [x, y, w, h], # The box around the mask, in XYWH format
    "area" : int, # The area in pixels of the mask
    "predicted_iou" : float, # The model's own prediction of the mask's quality
    "stability_score" : float, # A measure of the mask's quality
    "crop_box" : [x, y, w, h], # The crop of the image used to generate the mask, in XYWH format
    "point_coords" : [[x, y]], # The point coordinates input to the model to generate the mask
}
```
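As an illustration of working with this format, the sketch below parses one record and converts the XYWH box of its largest mask into corner (XYXY) coordinates. The helper names and the sample values are made up for the example; real records contain all the fields listed above.

```python
import json


def xywh_to_xyxy(box):
    """Convert [x, y, w, h] to [x_min, y_min, x_max, y_max]."""
    x, y, w, h = box
    return [x, y, x + w, y + h]


def summarize(record):
    """Return the file name, mask count, and XYXY box of the largest mask."""
    annotations = record["annotations"]
    largest = max(annotations, key=lambda a: a["area"]) if annotations else None
    return {
        "file_name": record["image"]["file_name"],
        "num_masks": len(annotations),
        "largest_box_xyxy": xywh_to_xyxy(largest["bbox"]) if largest else None,
    }


# A tiny hand-written record (values are invented, most fields omitted).
example = json.loads("""
{
  "image": {"image_id": 1, "width": 640, "height": 480, "file_name": "sa_1.jpg"},
  "annotations": [
    {"id": 10, "bbox": [10, 20, 30, 40], "area": 900},
    {"id": 11, "bbox": [0, 0, 100, 50], "area": 4200}
  ]
}
""")
print(summarize(example))
```

For a file on disk, replace `json.loads(...)` with `json.load(open(path))` on the per-image JSON file.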

Image ids can be found in `sa_images_ids.txt`, which can also be downloaded using the [link](https://ai.facebook.com/datasets/segment-anything-downloads/) above.

To decode a mask in COCO RLE format into binary:

```python
from pycocotools import mask as mask_utils

mask = mask_utils.decode(annotation["segmentation"])
```
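To illustrate what the decoding step does, here is a pure-Python sketch for the *uncompressed* COCO RLE variant, where `size` is `[height, width]` and `counts` is a list of run lengths that alternate between 0s and 1s (starting with 0s) over the mask flattened in column-major order. It is an illustration under that assumption, not a replacement for `mask_utils.decode`; when `counts` is stored as a compressed string, use `pycocotools`. The function name `decode_uncompressed_rle` is hypothetical.

```python
def decode_uncompressed_rle(rle):
    """Decode {'size': [h, w], 'counts': [run lengths]} into rows of 0/1."""
    height, width = rle["size"]
    flat = []
    value = 0  # runs alternate 0, 1, 0, 1, ... and always start with 0
    for run in rle["counts"]:
        flat.extend([value] * run)
        value = 1 - value
    if len(flat) != height * width:
        raise ValueError("run lengths do not match the mask size")
    # Column-major order: pixel (row, col) lives at flat[col * height + row].
    return [
        [flat[col * height + row] for col in range(width)]
        for row in range(height)
    ]


mask = decode_uncompressed_rle({"size": [2, 3], "counts": [1, 2, 3]})
for row in mask:
    print(row)
```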

See [here](https://github.com/cocodataset/cocoapi/blob/master/PythonAPI/pycocotools/mask.py) for more instructions on manipulating masks stored in RLE format.

## License

The model is licensed under the [Apache 2.0 license](LICENSE).

## Contributing

See [contributing](CONTRIBUTING.md) and the [code of conduct](CODE_OF_CONDUCT.md).

## Contributors

The Segment Anything project was made possible with the help of many contributors (alphabetical):

Aaron Adcock, Vaibhav Aggarwal, Morteza Behrooz, Cheng-Yang Fu, Ashley Gabriel, Ahuva Goldstand, Allen Goodman, Sumanth Gurram, Jiabo Hu, Somya Jain, Devansh Kukreja, Robert Kuo, Joshua Lane, Yanghao Li, Lilian Luong, Jitendra Malik, Mallika Malhotra, William Ngan, Omkar Parkhi, Nikhil Raina, Dirk Rowe, Neil Sejoor, Vanessa Stark, Bala Varadarajan, Bram Wasti, Zachary Winstrom

## Citing Segment Anything

If you use SAM or SA-1B in your research, please use the following BibTeX entry.

```
@article{kirillov2023segany,
  title={Segment Anything},
  author={Kirillov, Alexander and Mintun, Eric and Ravi, Nikhila and Mao, Hanzi and Rolland, Chloe and Gustafson, Laura and Xiao, Tete and Whitehead, Spencer and Berg, Alexander C. and Lo, Wan-Yen and Doll{\'a}r, Piotr and Girshick, Ross},
  journal={arXiv:2304.02643},
  year={2023}
}
```

Raw data

{

"_id": null,

"home_page": "https://github.com/opengeos/segment-anything",

"name": "segment-anything-py",

"maintainer": null,

"docs_url": null,

"requires_python": ">=3.8",

"maintainer_email": null,

"keywords": null,

"author": "Qiusheng Wu",

"author_email": "giswqs@gmail.com",

"download_url": "https://files.pythonhosted.org/packages/9b/2a/c29459219e4760315298301d65ec8bf902e75d2827e22212835cbbdd4052/segment_anything_py-1.0.1.tar.gz",

"platform": null,

"bugtrack_url": null,

"license": "Apache Software License 2.0",

"summary": "An unofficial Python package for Meta AI's Segment Anything Model",

"version": "1.0.1",

"project_urls": {

"Homepage": "https://github.com/opengeos/segment-anything"

},

"split_keywords": [],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "80c6ce82fea654a390f33ac4650619c97c705fca2aaef18722328813b7a30a06",

"md5": "8d2833699cddd60215be55fd9ceb31fa",

"sha256": "708fdaddecd4f2d1e4d6f27ac21763497026bb938df50f993caeaf1f5c3c99b8"

},

"downloads": -1,

"filename": "segment_anything_py-1.0.1-py3-none-any.whl",

"has_sig": false,

"md5_digest": "8d2833699cddd60215be55fd9ceb31fa",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.8",

"size": 40461,

"upload_time": "2024-07-20T01:08:02",

"upload_time_iso_8601": "2024-07-20T01:08:02.046888Z",

"url": "https://files.pythonhosted.org/packages/80/c6/ce82fea654a390f33ac4650619c97c705fca2aaef18722328813b7a30a06/segment_anything_py-1.0.1-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "9b2ac29459219e4760315298301d65ec8bf902e75d2827e22212835cbbdd4052",

"md5": "0e078bb125dbf147ed862631ea8b78ab",

"sha256": "91d7d65804dd3a9030e26cd269991c4d24099008fb82e5ae98c9f6d9163b4b75"

},

"downloads": -1,

"filename": "segment_anything_py-1.0.1.tar.gz",

"has_sig": false,

"md5_digest": "0e078bb125dbf147ed862631ea8b78ab",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.8",

"size": 36469,

"upload_time": "2024-07-20T01:08:03",

"upload_time_iso_8601": "2024-07-20T01:08:03.595953Z",

"url": "https://files.pythonhosted.org/packages/9b/2a/c29459219e4760315298301d65ec8bf902e75d2827e22212835cbbdd4052/segment_anything_py-1.0.1.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-07-20 01:08:03",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "opengeos",

"github_project": "segment-anything",

"travis_ci": false,

"coveralls": false,

"github_actions": true,

"lcname": "segment-anything-py"

}