| Field | Value |
|---|---|
| Name | smart-llm-loader |
| Version | 0.1.0 |
| download | |
| home_page | https://github.com/drmingler/smart-llm-loader |
| Summary | A powerful PDF processing toolkit that seamlessly integrates with LLMs for intelligent document chunking and RAG applications. Features smart context-aware segmentation, multi-LLM support, and optimized content extraction for enhanced RAG performance. |
| upload_time | 2025-02-14 12:42:55 |
| maintainer | None |
| docs_url | None |
| author | drmingler |
| requires_python | <4.0,>=3.9 |
| license | MIT |
| keywords | pdf, llm, rag, document-processing, ai |
| VCS | |
| bugtrack_url | |
| requirements | No requirements were recorded. |
| Travis-CI | No Travis. |
| coveralls test coverage | No coveralls. |

# SmartLLMLoader

smart-llm-loader is a lightweight yet powerful Python package that transforms any document into LLM-ready chunks. It handles the entire document processing pipeline:

- 📄 Converts documents to clean markdown

- 🔍 Built-in OCR for scanned documents and images

- ✂️ Smart, context-aware text chunking

- 🔌 Seamless integration with LangChain and LlamaIndex

- 📦 Ready for vector stores and LLM ingestion

Spend less time on preprocessing headaches and more time building what matters. From RAG systems to chatbots to document Q&A, SmartLLMLoader handles the heavy lifting so you can focus on creating exceptional AI applications.

SmartLLMLoader's chunking approach has been benchmarked against traditional methods, showing superior performance particularly when paired with Google's Gemini Flash model. This combination offers an efficient and cost-effective solution for document chunking in RAG systems. View the detailed performance comparison [here](https://www.sergey.fyi/articles/gemini-flash-2).

## Features

- Support for multiple LLM providers

- Built-in OCR for scanned documents and images

- Flexible document type support

- Multiple chunking strategies, including context-aware and page-based chunking

- Supports custom prompts and custom chunking

## Installation

### System Dependencies

First, install Poppler if you don't have it already (required for PDF processing):

**Ubuntu/Debian:**

```bash
sudo apt-get install poppler-utils
```

**macOS:**

```bash
brew install poppler
```

**Windows:**

1. Download the latest [Poppler for Windows](https://github.com/oschwartz10612/poppler-windows/releases/)

2. Extract the downloaded file

3. Add the `bin` directory to your system PATH
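Before installing the Python package, it can help to confirm that Poppler's command-line tools are reachable. The sketch below is not part of smart-llm-loader. It assumes that finding Poppler's `pdftoppm` and `pdfinfo` binaries on `PATH` is a reasonable proxy for a working install; the helper names are made up for illustration.

```python
import shutil

# Poppler ships several command-line tools; these two names are used here
# as an assumed proxy for "Poppler is installed and on PATH".
POPPLER_TOOLS = ("pdftoppm", "pdfinfo")


def find_poppler_tools(tools=POPPLER_TOOLS):
    """Map each tool name to its resolved path, or None if it is not on PATH."""
    return {tool: shutil.which(tool) for tool in tools}


def poppler_available(tools=POPPLER_TOOLS):
    """Return True only if every listed tool can be found on PATH."""
    return all(path is not None for path in find_poppler_tools(tools).values())


if __name__ == "__main__":
    for tool, path in find_poppler_tools().items():
        print(f"{tool}: {path or 'not found'}")
```

If a tool is reported as not found on Windows, the usual cause is that the extracted `bin` directory has not been added to `PATH` yet, or that the terminal was opened before the change.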

### Package Installation

You can install SmartLLMLoader using pip:

```bash
pip install smart-llm-loader
```

Or using Poetry:

```bash
poetry add smart-llm-loader
```

## Quick Start

The smart-llm-loader package uses litellm to call the LLM, so any arguments supported by litellm can be used, along with any multi-modal model that litellm supports. You can find the litellm documentation [here](https://docs.litellm.ai/docs/providers).

```python
import os

from smart_llm_loader import SmartLLMLoader

# Set the key for the provider you use. The last `model` assignment wins,
# so keep only one of the three provider sections below.

# Using a Gemini Flash model
os.environ["GEMINI_API_KEY"] = "YOUR_GEMINI_API_KEY"
model = "gemini/gemini-1.5-flash"

# Using an OpenAI model
os.environ["OPENAI_API_KEY"] = "YOUR_OPENAI_API_KEY"
model = "openai/gpt-4o"

# Using an Anthropic model
os.environ["ANTHROPIC_API_KEY"] = "YOUR_ANTHROPIC_API_KEY"
model = "anthropic/claude-3-5-sonnet"

# Initialize the document loader
loader = SmartLLMLoader(
    file_path="your_document.pdf",
    chunk_strategy="contextual",
    model=model,
)

# Load and split the document into chunks
documents = loader.load_and_split()
```
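Each provider in the Quick Start expects its own environment variable, and a missing key is an easy mistake to make. The following sketch checks for the key before any loader is constructed. The provider-to-variable mapping is taken from the snippet above; the helper names `api_key_env_var` and `require_api_key` are hypothetical and not part of smart-llm-loader or litellm.

```python
import os

# Provider prefix -> environment variable, taken from the Quick Start above.
API_KEY_ENV_VARS = {
    "gemini": "GEMINI_API_KEY",
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
}


def api_key_env_var(model: str) -> str:
    """Return the environment variable expected for a 'provider/model' string."""
    provider, sep, _ = model.partition("/")
    if not sep or provider not in API_KEY_ENV_VARS:
        raise ValueError(f"Unrecognized provider in model string: {model!r}")
    return API_KEY_ENV_VARS[provider]


def require_api_key(model: str, environ=os.environ) -> str:
    """Return the API key for `model`, raising if the variable is unset or empty."""
    name = api_key_env_var(model)
    key = environ.get(name)
    if not key:
        raise RuntimeError(f"Set {name} before using {model}")
    return key
```

Calling `require_api_key(model)` just before `SmartLLMLoader(...)` turns a confusing provider error into a clear message about which variable is missing.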

## Parameters

```python
from pathlib import Path
from typing import Optional, Union


# Constructor signature only (body omitted).
class SmartLLMLoader(BaseLoader):
    """A flexible document loader that supports multiple input types."""

    def __init__(
        self,
        file_path: Optional[Union[str, Path]] = None,  # path to the document to load
        url: Optional[str] = None,  # url to the document to load
        chunk_strategy: str = 'contextual',  # chunking strategy to use (page, contextual, custom)
        custom_prompt: Optional[str] = None,  # custom prompt to use
        model: str = "gemini/gemini-2.0-flash",  # LLM model to use
        save_output: bool = False,  # whether to save the output to a file
        output_dir: Optional[Union[str, Path]] = None,  # directory to save the output to
        api_key: Optional[str] = None,  # API key to use
        **kwargs,
    ):
        ...
```
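The signature leaves a few constraints implicit, so it can be useful to validate arguments before constructing the loader. The sketch below is a hypothetical helper, not part of the package. It assumes that exactly one of `file_path` or `url` should be given and that the `custom` strategy needs a `custom_prompt`; neither rule is confirmed by the documentation above. Only the three strategy names come from the signature comment.

```python
from pathlib import Path
from typing import Any, Dict, Optional, Union

# Strategy names listed in the signature comment above.
CHUNK_STRATEGIES = ("page", "contextual", "custom")


def build_loader_kwargs(
    file_path: Optional[Union[str, Path]] = None,
    url: Optional[str] = None,
    chunk_strategy: str = "contextual",
    custom_prompt: Optional[str] = None,
) -> Dict[str, Any]:
    """Validate arguments and return a kwargs dict for SmartLLMLoader.

    Hypothetical helper: the rules below are assumptions made for this
    sketch, not documented behavior of the package.
    """
    # Assumption: exactly one input source should be given.
    if (file_path is None) == (url is None):
        raise ValueError("Provide exactly one of file_path or url")
    if chunk_strategy not in CHUNK_STRATEGIES:
        raise ValueError(f"chunk_strategy must be one of {CHUNK_STRATEGIES}")
    # Assumption: the 'custom' strategy is only meaningful with a prompt.
    if chunk_strategy == "custom" and not custom_prompt:
        raise ValueError("chunk_strategy='custom' needs a custom_prompt")

    kwargs: Dict[str, Any] = {"chunk_strategy": chunk_strategy}
    if file_path is not None:
        kwargs["file_path"] = str(file_path)
    else:
        kwargs["url"] = url
    if custom_prompt:
        kwargs["custom_prompt"] = custom_prompt
    return kwargs
```

The result can then be unpacked into the constructor, for example `SmartLLMLoader(**build_loader_kwargs(file_path="your_document.pdf"), model=model)`.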

## Comparison with Traditional Methods

Let's see SmartLLMLoader in action! We'll compare it with PyMuPDF (a popular traditional document loader) to demonstrate why SmartLLMLoader's intelligent chunking makes such a difference in real-world applications.

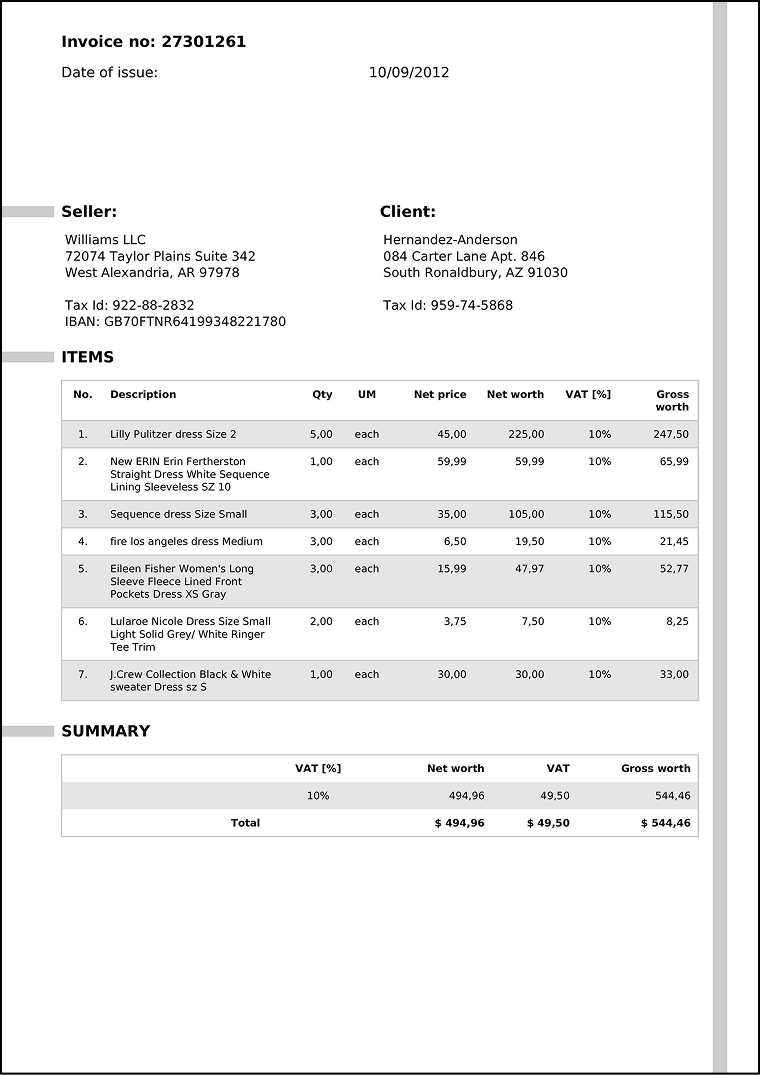

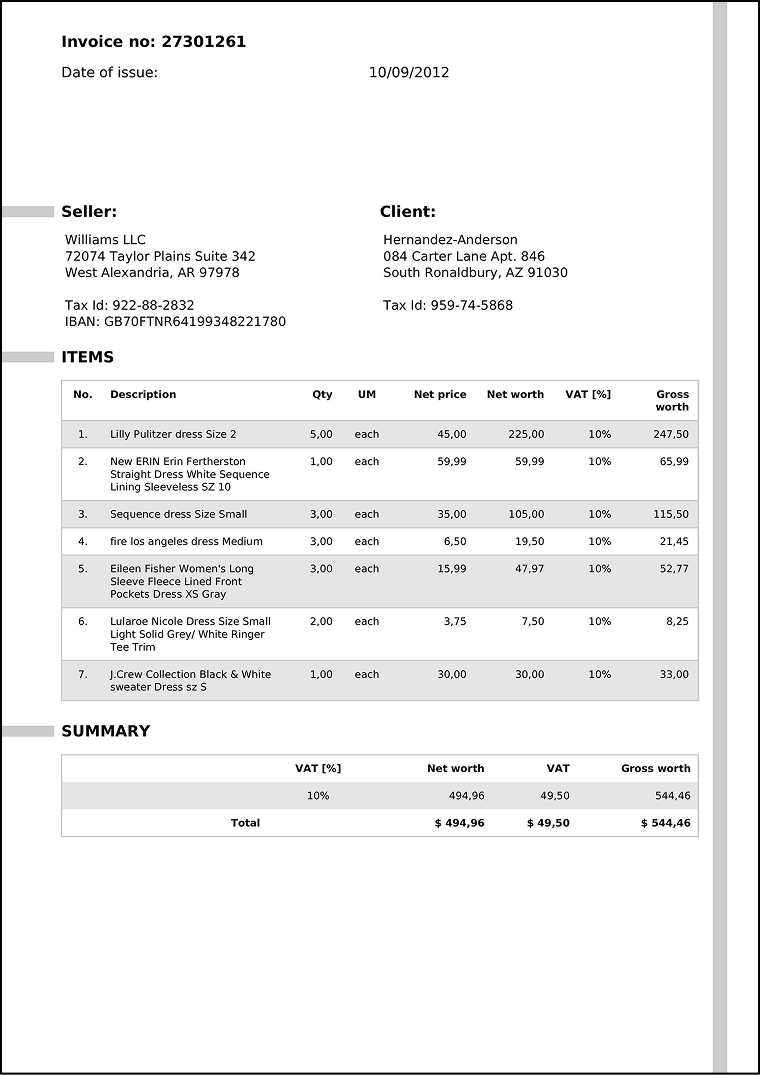

### The Challenge: Processing an Invoice

We'll process this sample invoice that includes headers, tables, and complex formatting:

### Head-to-Head Comparison

#### 1. SmartLLMLoader Output

SmartLLMLoader breaks the document down into semantic chunks, preserving structure and meaning (note that the JSON output below has been formatted for readability):

```json
[
  {
    "content": "Invoice no: 27301261\nDate of issue: 10/09/2012",
    "metadata": {
      "page": 0,
      "semantic_theme": "invoice_header",
      "source": "data/test_ocr_doc.pdf"
    }
  },
  {
    "content": "Seller:\nWilliams LLC\n72074 Taylor Plains Suite 342\nWest Alexandria, AR 97978\nTax Id: 922-88-2832\nIBAN: GB70FTNR64199348221780",
    "metadata": {
      "page": 0,
      "semantic_theme": "seller_information",
      "source": "data/test_ocr_doc.pdf"
    }
  },
  {
    "content": "Client:\nHernandez-Anderson\n084 Carter Lane Apt. 846\nSouth Ronaldbury, AZ 91030\nTax Id: 959-74-5868",
    "metadata": {
      "page": 0,
      "semantic_theme": "client_information",
      "source": "data/test_ocr_doc.pdf"
    }
  },
  {
    "content":
      "Item table:\n"
      "| No. | Description | Qty | UM | Net price | Net worth | VAT [%] | Gross worth |\n"
      "|-----|-----------------------------------------------------------|------|------|-----------|-----------|---------|-------------|\n"
      "| 1 | Lilly Pulitzer dress Size 2 | 5.00 | each | 45.00 | 225.00 | 10% | 247.50 |\n"
      "| 2 | New ERIN Erin Fertherston Straight Dress White Sequence Lining Sleeveless SZ 10 | 1.00 | each | 59.99 | 59.99 | 10% | 65.99 |\n"
      "| 3 | Sequence dress Size Small | 3.00 | each | 35.00 | 105.00 | 10% | 115.50 |\n"
      "| 4 | fire los angeles dress Medium | 3.00 | each | 6.50 | 19.50 | 10% | 21.45 |\n"
      "| 5 | Eileen Fisher Women's Long Sleeve Fleece Lined Front Pockets Dress XS Gray | 3.00 | each | 15.99 | 47.97 | 10% | 52.77 |\n"
      "| 6 | Lularoe Nicole Dress Size Small Light Solid Grey/White Ringer Tee Trim | 2.00 | each | 3.75 | 7.50 | 10% | 8.25 |\n"
      "| 7 | J.Crew Collection Black & White sweater Dress sz S | 1.00 | each | 30.00 | 30.00 | 10% | 33.00 |",
    "metadata": {
      "page": 0,
      "semantic_theme": "items_table",
      "source": "data/test_ocr_doc.pdf"
    }
  },
  {
    "content": "Summary table:\n"
      "| VAT [%] | Net worth | VAT | Gross worth |\n"
      "|---------|-----------|--------|-------------|\n"
      "| 10% | 494,96 | 49,50 | 544,46 |\n"
      "| Total | $ 494,96 | $ 49,50| $ 544,46 |",
    "metadata": {
      "page": 0,
      "semantic_theme": "summary_table",
      "source": "data/test_ocr_doc.pdf"
    }
  }
]
```

**Key Benefits:**

- ✨ Clean, structured chunks

- 🎯 Semantic understanding

- 📊 Preserved table formatting

- 🏷️ Intelligent metadata tagging
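Because every chunk carries a `semantic_theme`, downstream code can route or filter chunks by theme before indexing them. The sketch below works on plain dicts shaped like the JSON output above, with abbreviated content; the objects actually returned by `load_and_split()` may expose these fields differently, and `group_by_theme` is a name made up for this illustration.

```python
from collections import defaultdict

# Abbreviated chunks shaped like the JSON output above (plain dicts, not
# necessarily the objects the loader returns).
chunks = [
    {
        "content": "Invoice no: 27301261\nDate of issue: 10/09/2012",
        "metadata": {"page": 0, "semantic_theme": "invoice_header"},
    },
    {
        "content": "Seller:\nWilliams LLC",
        "metadata": {"page": 0, "semantic_theme": "seller_information"},
    },
    {
        "content": "Item table:\n| No. | Description |",
        "metadata": {"page": 0, "semantic_theme": "items_table"},
    },
]


def group_by_theme(chunks):
    """Group chunk contents by their metadata['semantic_theme'] value."""
    grouped = defaultdict(list)
    for chunk in chunks:
        theme = chunk["metadata"].get("semantic_theme", "unknown")
        grouped[theme].append(chunk["content"])
    return dict(grouped)


by_theme = group_by_theme(chunks)
print(sorted(by_theme))  # ['invoice_header', 'items_table', 'seller_information']
```

A question about line items could then be answered from `by_theme["items_table"]` alone, without sending the seller or client blocks to the model.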

#### 2. Traditional PyMuPDF Output

PyMuPDF provides basic text extraction without semantic understanding:

```json
[
  {
    "page": 0,
    "content": "Invoice no: 27301261 \nDate of issue: \nSeller: \nWilliams LLC \n72074 Taylor Plains Suite 342 \nWest
    Alexandria, AR 97978 \nTax Id: 922-88-2832 \nIBAN: GB70FTNR64199348221780 \nITEMS \nNo. \nDescription \n2l \nLilly
    Pulitzer dress Size 2 \n2. \nNew ERIN Erin Fertherston \nStraight Dress White Sequence \nLining Sleeveless SZ 10
    \n3. \n Sequence dress Size Small \n4. \nfire los angeles dress Medium \nL \nEileen Fisher Women's Long \nSleeve
    Fleece Lined Front \nPockets Dress XS Gray \n6. \nLularoe Nicole Dress Size Small \nLight Solid Grey/ White
    Ringer \nTee Trim \nT \nJ.Crew Collection Black & White \nsweater Dress sz S \nSUMMARY \nTotal \n2,00 \n1,00
    \nVAT [%] \n10% \n10/09/2012 \neach \neach \nClient: \nHernandez-Anderson \n084 Carter Lane Apt. 846 \nSouth
    Ronaldbury, AZ 91030 \nTax Id: 959-74-5868 \nNet price \n Net worth \nVAT [%] \n45,00 \n225,00 \n10% \n59,99
    \n59,99 \n10% \n35,00 \n105,00 \n10% \n6,50 \n19,50 \n10% \n15,99 \n47,97 \n10% \n3,75 \n7.50 \n10% \n30,00
    \n30,00 \n10% \nNet worth \nVAT \n494,96 \n49,50 \n$ 494,96 \n$49,50 \nGross \nworth \n247,50 \n65,99 \n115,50
    \n21,45 \n52,77 \n8,25 \n33,00 \nGross worth \n544,46 \n$ 544,46 \n",
    "metadata": {
      "source": "./data/test_ocr_doc.pdf",
      "file_path": "./data/test_ocr_doc.pdf",
      "page": 0,
      "total_pages": 1,
      "format": "PDF 1.5",
      "title": "",
      "author": "",
      "subject": "",
      "keywords": "",
      "creator": "",
      "producer": "AskYourPDF.com",
      "creationDate": "",
      "modDate": "D:20250213152908Z",
      "trapped": ""
    }
  }
]
```

### Real-World Impact: RAG Performance

Let's see how this difference affects a real Question-Answering system:

```python
question = "What is the total gross worth for item 1 and item 7?"

# SmartLLMLoader result ✅
smart_llm_loader_answer = (
    "The total gross worth for item 1 (Lilly Pulitzer dress) is $247.50 and for item 7\n"
    "(J.Crew Collection sweater dress) is $33.00.\n"
    "Total: $280.50"
)

# PyMuPDF result ❌
pymupdf_answer = (
    "The total gross worth for item 1 is $45.00, and for item 7 it is $33.00.\n"
    "Total: $78.00"
)
```

**Why SmartLLMLoader Won:**

- 🎯 Maintained table structure

- 💡 Preserved relationships between data

- 📊 Accurate calculations

- 🤖 Better context for the LLM
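The expected answer can be checked directly against the table that SmartLLMLoader preserved. The sketch below parses two rows copied from the `items_table` chunk shown earlier and sums their gross worth. The parser is a minimal illustration written for this README-style table, not a general markdown parser and not part of the package.

```python
from decimal import Decimal

# Header plus rows 1 and 7 from the items_table chunk (other rows omitted,
# separator row shortened).
ITEMS_TABLE = (
    "| No. | Description | Qty | UM | Net price | Net worth | VAT [%] | Gross worth |\n"
    "|-----|-------------|-----|----|-----------|-----------|---------|-------------|\n"
    "| 1 | Lilly Pulitzer dress Size 2 | 5.00 | each | 45.00 | 225.00 | 10% | 247.50 |\n"
    "| 7 | J.Crew Collection Black & White sweater Dress sz S | 1.00 | each | 30.00 | 30.00 | 10% | 33.00 |"
)


def split_row(line):
    """Split one pipe-delimited table line into stripped cell values."""
    return [cell.strip() for cell in line.strip().strip("|").split("|")]


def parse_markdown_table(text):
    """Parse a pipe-delimited markdown table into a list of row dicts."""
    lines = [line for line in text.splitlines() if line.strip().startswith("|")]
    header = split_row(lines[0])
    # lines[1] is the |---|---| separator row, so data starts at index 2.
    return [dict(zip(header, split_row(line))) for line in lines[2:]]


def gross_worth_total(rows, item_numbers):
    """Sum the 'Gross worth' column for the given item numbers."""
    wanted = {str(n) for n in item_numbers}
    values = (Decimal(row["Gross worth"]) for row in rows if row["No."] in wanted)
    return sum(values, Decimal("0"))


rows = parse_markdown_table(ITEMS_TABLE)
total = gross_worth_total(rows, [1, 7])
print(total)  # 280.50
```

The $45.00 in the PyMuPDF answer is item 1's net price rather than its gross worth, which is the kind of column mix-up that becomes likely once the table structure is lost.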

You can try it yourself by running the complete [RAG example](./examples/rag_example.py) to see the difference in action!

## License

This project is licensed under the MIT License - see the LICENSE file for details.

## Contributing

Contributions are welcome! Please feel free to submit a Pull Request.

## Authors

- David Emmanuel ([@drmingler](https://github.com/drmingler))

Raw data

{

"_id": null,

"home_page": "https://github.com/drmingler/smart-llm-loader",

"name": "smart-llm-loader",

"maintainer": null,

"docs_url": null,

"requires_python": "<4.0,>=3.9",

"maintainer_email": null,

"keywords": "pdf, llm, rag, document-processing, ai",

"author": "drmingler",

"author_email": "davidemmanuel75@gmail.com",

"download_url": "https://files.pythonhosted.org/packages/eb/63/d0e52781a1b4a0851c43265f512a0d56c1655d3cf20f60b51ebeb8c2c5f8/smart_llm_loader-0.1.0.tar.gz",

"platform": null,

"bugtrack_url": null,

"license": "MIT",

"summary": "A powerful PDF processing toolkit that seamlessly integrates with LLMs for intelligent document chunking and RAG applications. Features smart context-aware segmentation, multi-LLM support, and optimized content extraction for enhanced RAG performance.",

"version": "0.1.0",

"project_urls": {

"Homepage": "https://github.com/drmingler/smart-llm-loader",

"Repository": "https://github.com/drmingler/smart-llm-loader"

},

"split_keywords": [

"pdf",

" llm",

" rag",

" document-processing",

" ai"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "94bf4e69740611aa45c08ce793d85ca90eb86bec97729cbcd4b044bd898ca333",

"md5": "33b206e1883aa27909593ead20aed39c",

"sha256": "caa4b804c3dd6178ccc15082d2403fcb0f4f67621ec0057a7926f7686c092b77"

},

"downloads": -1,

"filename": "smart_llm_loader-0.1.0-py3-none-any.whl",

"has_sig": false,

"md5_digest": "33b206e1883aa27909593ead20aed39c",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": "<4.0,>=3.9",

"size": 13281,

"upload_time": "2025-02-14T12:42:53",

"upload_time_iso_8601": "2025-02-14T12:42:53.552920Z",

"url": "https://files.pythonhosted.org/packages/94/bf/4e69740611aa45c08ce793d85ca90eb86bec97729cbcd4b044bd898ca333/smart_llm_loader-0.1.0-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "eb63d0e52781a1b4a0851c43265f512a0d56c1655d3cf20f60b51ebeb8c2c5f8",

"md5": "79e49b4f57982faf1200d1d987f707d8",

"sha256": "8d6cbc9a8546d5ab228645c78bbe3f354c682e8ef780edad4fea03508e54dd93"

},

"downloads": -1,

"filename": "smart_llm_loader-0.1.0.tar.gz",

"has_sig": false,

"md5_digest": "79e49b4f57982faf1200d1d987f707d8",

"packagetype": "sdist",

"python_version": "source",

"requires_python": "<4.0,>=3.9",

"size": 14005,

"upload_time": "2025-02-14T12:42:55",

"upload_time_iso_8601": "2025-02-14T12:42:55.500564Z",

"url": "https://files.pythonhosted.org/packages/eb/63/d0e52781a1b4a0851c43265f512a0d56c1655d3cf20f60b51ebeb8c2c5f8/smart_llm_loader-0.1.0.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2025-02-14 12:42:55",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "drmingler",

"github_project": "smart-llm-loader",

"travis_ci": false,

"coveralls": false,

"github_actions": true,

"lcname": "smart-llm-loader"

}