# Spender

_Neural spectrum encoder and decoder_

* Paper I (SDSS): https://arxiv.org/abs/2211.07890

* Paper II (SDSS): https://arxiv.org/abs/2302.02496

* Paper III (DESI EDR): https://arxiv.org/abs/2307.07664

From a data-driven side, galaxy spectra have two fundamental degrees of freedom: their intrinsic spectral properties (or type, if you believe in such a thing) and their redshift. The latter makes them awkward to ingest because it stretches the entire spectrum in wavelength, which means spectral features don't appear at the same places. This is why most analyses of the intrinsic properties are done by transforming the observed spectrum to restframe.
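To make the stretching concrete, here is a small sketch (not part of spender) that applies the standard relation λ_obs = (1 + z) λ_rest to a few well-known emission lines. The line list, the approximate rest wavelengths, and the function names `observed_wavelength` and `rest_wavelength` are chosen for illustration only.

```python
# Approximate restframe wavelengths in Angstrom (illustrative values)
REST_LINES = {"[O II]": 3727.0, "H-beta": 4861.0, "H-alpha": 6563.0}


def observed_wavelength(rest_wave, z):
    """Observed wavelength of a feature emitted at rest_wave by a source at redshift z."""
    return rest_wave * (1.0 + z)


def rest_wavelength(obs_wave, z):
    """Inverse transformation: shift an observed wavelength back to restframe."""
    return obs_wave / (1.0 + z)


# the same lines land at different observed wavelengths for every redshift
for z in (0.0, 0.1, 0.3):
    shifted = {name: round(observed_wavelength(w, z), 1) for name, w in REST_LINES.items()}
    print(f"z={z}: {shifted}")
```

Dividing by (1 + z) is the usual "transform to restframe" step; multiplying is the direction spender takes for its model.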

We decided to do the opposite. We build a custom architecture that describes the restframe spectrum with an autoencoder and transforms the restframe model to the observed redshift. While we're at it, we also match the spectral resolution and line spread function of the instrument.

Doing so clearly separates the responsibilities in the architecture. Spender establishes a restframe that has higher resolution and larger wavelength range than the spectra on which it is trained. The model can be trained on spectra at different redshifts or even from different instruments without the need to standardize the observations. Spender also has an explicit, differentiable redshift dependence, which can be coupled with a redshift estimator for a fully data-driven spectrum analysis pipeline.
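The restframe-to-observed transformation can be illustrated with a few lines of NumPy. This is a minimal sketch under simplifying assumptions, not spender's implementation: the line spread function is approximated by a Gaussian of fixed width in restframe pixels, the resampling is plain linear interpolation, and the names `gaussian_kernel` and `restframe_to_observed` are made up for this example.

```python
import numpy as np


def gaussian_kernel(sigma_pix):
    """Normalized 1D Gaussian kernel with a width given in pixels."""
    half = int(np.ceil(4 * sigma_pix))
    x = np.arange(-half, half + 1)
    kernel = np.exp(-0.5 * (x / sigma_pix) ** 2)
    return kernel / kernel.sum()


def restframe_to_observed(wave_rest, spec_rest, z, wave_obs, lsf_sigma_pix=2.0):
    """Redshift a restframe model, smooth it, and resample it on the instrument grid."""
    # 1) redshift: every restframe wavelength is stretched by (1 + z)
    wave_shifted = wave_rest * (1.0 + z)
    # 2) line spread function: approximated here by a fixed Gaussian in pixel space
    spec_smooth = np.convolve(spec_rest, gaussian_kernel(lsf_sigma_pix), mode="same")
    # 3) resample: linear interpolation onto the observed wavelength grid
    return np.interp(wave_obs, wave_shifted, spec_smooth, left=np.nan, right=np.nan)


# toy restframe model: flat continuum plus one emission line at 5000 A
wave_rest = np.linspace(3000.0, 8000.0, 5001)  # 1 A sampling
spec_rest = 1.0 + 5.0 * np.exp(-0.5 * ((wave_rest - 5000.0) / 2.0) ** 2)

wave_obs = np.linspace(3800.0, 9200.0, 2000)  # coarser instrument grid
spec_obs = restframe_to_observed(wave_rest, spec_rest, z=0.2, wave_obs=wave_obs)

peak = wave_obs[np.nanargmax(spec_obs)]
print(f"line emitted at 5000 A is observed near {peak:.0f} A")
```

Because the restframe grid is finer and wider than the observed one, the same restframe model can be projected onto spectra at different redshifts or onto different instrument grids by changing only `z` and `wave_obs`.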

## Installation

The easiest way is `pip install spender`. When installing from a downloaded code repo, run `pip install -e .`.

## Pretrained models

We make the best-fitting models discussed in the paper available through the Astro Data Lab Hub. Here's the workflow:

```python
import os
import spender

# show list of pretrained models
spender.hub.list()

# print out details for SDSS model from paper II
print(spender.hub.help('sdss_II'))

# load instrument and spectrum model from the hub
sdss, model = spender.hub.load('sdss_II')

# if your machine does not have GPUs, specify the device
from accelerate import Accelerator
accelerator = Accelerator(mixed_precision='fp16')
sdss, model = spender.hub.load('sdss_II', map_location=accelerator.device)
```

## Outliers Catalogs

Catalogs of latent-space probabilities for:

* [SDSS-I main galaxy sample](https://hub.pmelchior.net/spender.sdss.paperII.logP.txt.bz2); see Liang et al. (2023a) for details

* [DESI EDR BGS sample](https://hub.pmelchior.net/spender.desi-edr.full-bgs-objects-logP.txt.bz2); see Liang et al. (2023b) for details
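The catalogs are bz2-compressed text files. Their column layout is not described here, so a reasonable first step is to look at the first few lines before parsing anything. The helper below is a generic sketch using only the Python standard library; `peek_catalog` is a name made up for this example.

```python
import bz2


def peek_catalog(path, n_lines=5):
    """Return the first n_lines of a bz2-compressed text file as a list of strings."""
    lines = []
    with bz2.open(path, mode="rt") as f:
        for i, line in enumerate(f):
            if i >= n_lines:
                break
            lines.append(line.rstrip("\n"))
    return lines


# usage, after downloading one of the files above to the working directory:
# for line in peek_catalog("spender.sdss.paperII.logP.txt.bz2"):
#     print(line)
```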

## Use

Documentation and tutorials are forthcoming. In the meantime, check out `train/diagnostics.ipynb` for a worked-through example that generates the figures from the paper.

In short, you can run spender like this:

```python
import os
import spender
import torch
from accelerate import Accelerator

# hardware optimization
accelerator = Accelerator(mixed_precision='fp16')

# get code, instrument, and pretrained spectrum model from the hub
sdss, model = spender.hub.load('sdss_II', map_location=accelerator.device)

# get some SDSS spectra from the ids, store locally in data_path
data_path = "./DATA"
ids = ((412, 52254, 308), (412, 52250, 129))
spec, w, z, norm, zerr = sdss.make_batch(data_path, ids)

# run spender end-to-end
with torch.no_grad():
    spec_reco = model(spec, instrument=sdss, z=z)

# for more fine-grained control, run spender's internal _forward method
# which returns the latents s, the model for the restframe, and the observed spectrum
with torch.no_grad():
    s, spec_rest, spec_reco = model._forward(spec, instrument=sdss, z=z)

# only encode into latents
with torch.no_grad():
    s = model.encode(spec)
```
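One simple way to judge a reconstruction is a weighted residual between `spec` and `spec_reco`. The sketch below assumes that `w` returned by `make_batch` holds per-pixel weights such as inverse variances, which is not spelled out here. It uses stand-in NumPy arrays so that it runs without downloading data; with real outputs, convert the tensors first, e.g. `spec.cpu().numpy()`. `reduced_chi2` is a helper name made up for this example.

```python
import numpy as np


def reduced_chi2(spec, spec_reco, w):
    """Weighted squared residual per spectrum, averaged over pixels with non-zero weight."""
    chi2 = (w * (spec - spec_reco) ** 2).sum(axis=-1)
    n_valid = np.maximum((w > 0).sum(axis=-1), 1)  # avoid division by zero
    return chi2 / n_valid


# stand-in data: 2 spectra with 100 pixels each, unit noise around a flat model
rng = np.random.default_rng(0)
spec_reco = np.ones((2, 100))
spec = spec_reco + rng.normal(size=(2, 100))
w = np.ones((2, 100))
w[:, :10] = 0.0  # masked pixels carry zero weight and are ignored

print(reduced_chi2(spec, spec_reco, w))
```

If the weights really are inverse variances, values near one indicate residuals consistent with the noise.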

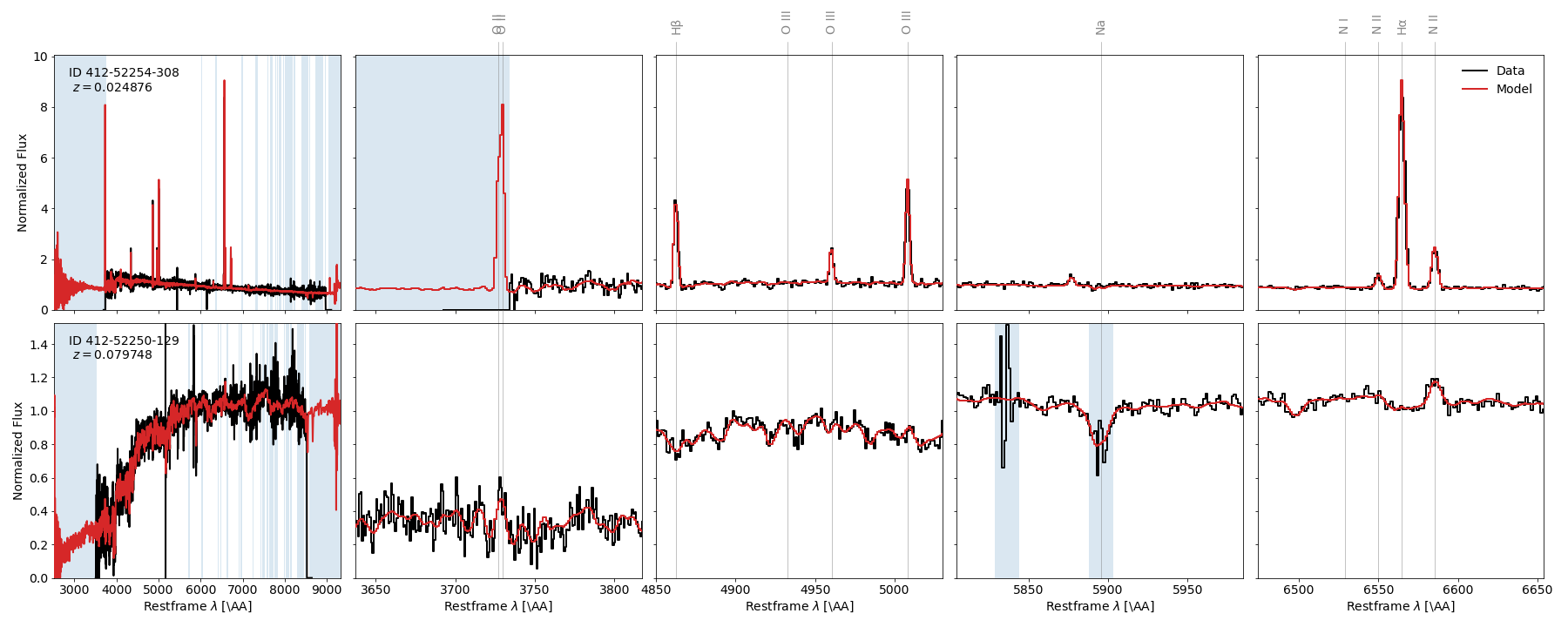

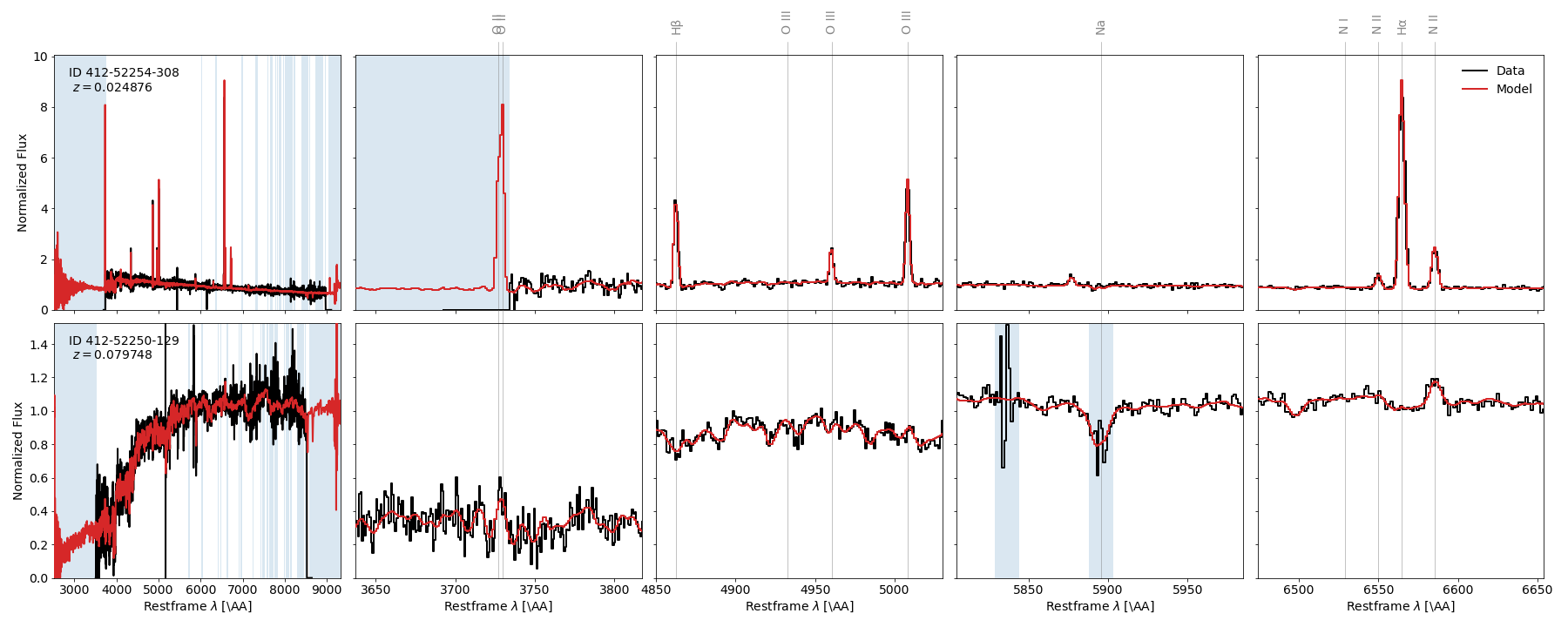

Plotting the results of the above nicely shows what spender can do:

Noteworthy aspects: the restframe model has an extended wavelength range, e.g. predicting the [O II] doublet that was not observed in the first example, and is unaffected by glitches like the skyline residuals at about 5840 Å in the second example.

Raw data

{

"_id": null,

"home_page": "https://github.com/pmelchior/spender",

"name": "spender",

"maintainer": null,

"docs_url": null,

"requires_python": null,

"maintainer_email": null,

"keywords": "spectroscopy, autoencoder",

"author": "Peter Melchior",

"author_email": "peter.m.melchior@gmail.com",

"download_url": "https://files.pythonhosted.org/packages/09/b4/54081b2ecbe6663ae3ff4fb5ad9442f16fce728c754951b3c43640987ae6/spender-0.2.6.tar.gz",

"platform": null,

"bugtrack_url": null,

"license": "MIT",

"summary": "Spectrum encoder and decoder",

"version": "0.2.6",

"project_urls": {

"Homepage": "https://github.com/pmelchior/spender"

},

"split_keywords": [

"spectroscopy",

" autoencoder"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "43adc18a1ed610aa01444443a3f8724b90f718f75abc112dbbe3fda7c0050dc7",

"md5": "20d6bc6a4bf1a66d39b3f293042cf871",

"sha256": "1af26863cfb6378794b90e498da89fc705d2bda13dfe27af6e84a6c314520a56"

},

"downloads": -1,

"filename": "spender-0.2.6-py2.py3-none-any.whl",

"has_sig": false,

"md5_digest": "20d6bc6a4bf1a66d39b3f293042cf871",

"packagetype": "bdist_wheel",

"python_version": "py2.py3",

"requires_python": null,

"size": 36327,

"upload_time": "2024-05-27T21:56:07",

"upload_time_iso_8601": "2024-05-27T21:56:07.823811Z",

"url": "https://files.pythonhosted.org/packages/43/ad/c18a1ed610aa01444443a3f8724b90f718f75abc112dbbe3fda7c0050dc7/spender-0.2.6-py2.py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "09b454081b2ecbe6663ae3ff4fb5ad9442f16fce728c754951b3c43640987ae6",

"md5": "715938a0eec5d1b54310a56657cc6730",

"sha256": "6581bdeaa4a9672727c7f181cf639c117899c87fd54dd2929482aed5a80d32ef"

},

"downloads": -1,

"filename": "spender-0.2.6.tar.gz",

"has_sig": false,

"md5_digest": "715938a0eec5d1b54310a56657cc6730",

"packagetype": "sdist",

"python_version": "source",

"requires_python": null,

"size": 33197,

"upload_time": "2024-05-27T21:56:09",

"upload_time_iso_8601": "2024-05-27T21:56:09.007470Z",

"url": "https://files.pythonhosted.org/packages/09/b4/54081b2ecbe6663ae3ff4fb5ad9442f16fce728c754951b3c43640987ae6/spender-0.2.6.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-05-27 21:56:09",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "pmelchior",

"github_project": "spender",

"travis_ci": false,

"coveralls": false,

"github_actions": false,

"lcname": "spender"

}