| | |
| --- | --- |
| Name | struct-strm |
| Version | 0.0.12 |
| home_page | None |
| Summary | Stream partial json generated by LLMs into valid json responses |
| upload_time | 2025-08-10 23:02:51 |
| maintainer | None |
| docs_url | None |
| author | None |
| requires_python | >=3.10 |
| license | None |
| keywords | htmx, json, llm, streaming, web components |
| requirements | No requirements were recorded. |
| Travis-CI | No Travis. |
| coveralls test coverage | No coveralls. |

<div align="center">

<img src="https://raw.githubusercontent.com/PrestonBlackburn/structured_streamer/refs/heads/gh-pages/img/logo_bg_wide.png" alt="Struct Strm Logo" width="750" role="img">

| | |

| --- | --- |

| CI/CD | [](https://github.com/PrestonBlackburn/structured_streamer/actions/workflows/test_versions.yaml) [](https://github.com/PrestonBlackburn/structured_streamer/actions/workflows/release.yaml)|

| Package | [](https://pypi.org/project/struct-strm/) [](https://pypi.org/project/struct-strm/) |

| Meta | [](https://github.com/psf/black) [](https://github.com/PrestonBlackburn/structured_streamer/blob/main/LICENSE)

</div>

<br/>

# Structured Streamer

**struct_strm** (structured streamer) is a Python package that makes it easy to turn the partial JSON streamed by LLMs into valid JSON responses. This enables partial rendering of UI components without waiting for the full response, drastically reducing the time to the first word on the user's screen.

## Why Use Structured Streamer?

JSON is the standard format for structured responses from LLMs. In the early days of LLM structured generation, the JSON response could only be validated after the whole response had been returned. Modern approaches use constrained decoding to ensure that only valid JSON is generated, which eliminates the need for post-generation validation and lets us use the response immediately. However, a streamed JSON response is incomplete until the final token arrives, so it can't be parsed using traditional methods. This library aims to make it easier to handle this partially generated JSON and provide a better end-user experience.
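To make the problem concrete, here is a minimal sketch using only the standard library. It shows `json.loads` rejecting a truncated response, then a naive repair that closes any open string, object, or array. The helpers `close_partial_json` and `parse_partial` are hypothetical names for illustration and are not part of struct_strm, whose internals may work quite differently.

```python
import json
from typing import Any, Optional


def close_partial_json(fragment: str) -> str:
    """Naively close whatever string, objects, and arrays are still open."""
    closers = []        # closing brackets still owed, innermost last
    in_string = False
    escaped = False
    for ch in fragment:
        if in_string:
            if escaped:
                escaped = False
            elif ch == "\\":
                escaped = True
            elif ch == '"':
                in_string = False
        elif ch == '"':
            in_string = True
        elif ch == "{":
            closers.append("}")
        elif ch == "[":
            closers.append("]")
        elif ch in "}]" and closers:
            closers.pop()
    closed = fragment + ('"' if in_string else "")
    return closed + "".join(reversed(closers))


def parse_partial(fragment: str) -> Optional[Any]:
    """Return the repaired value, or None when this naive repair is not enough."""
    try:
        return json.loads(close_partial_json(fragment))
    except json.JSONDecodeError:
        return None


partial = '{"form_fields": [{"field_name": "fruits", "field_placeholder": "apple '

try:
    json.loads(partial)
except json.JSONDecodeError as err:
    print("standard parser fails:", err.msg)

print(parse_partial(partial))
```

This repair breaks down as soon as the stream stops in the middle of a key or right after a colon (for example `{"a":`), which is one reason a structure-aware approach is preferable to string patching.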

See the [benchmarks](./benchmarks.md) section in the docs for more details about how this library can speed up your structured response processing.

<br/>

You can learn more about constrained decoding and context-free grammars here: [XGrammar - Achieving Efficient, Flexible, and Portable Structured Generation with XGrammar](https://blog.mlc.ai/2024/11/22/achieving-efficient-flexible-portable-structured-generation-with-xgrammar)

### Installation

```bash

pip install struct-strm

```

<br/>

## Main Features

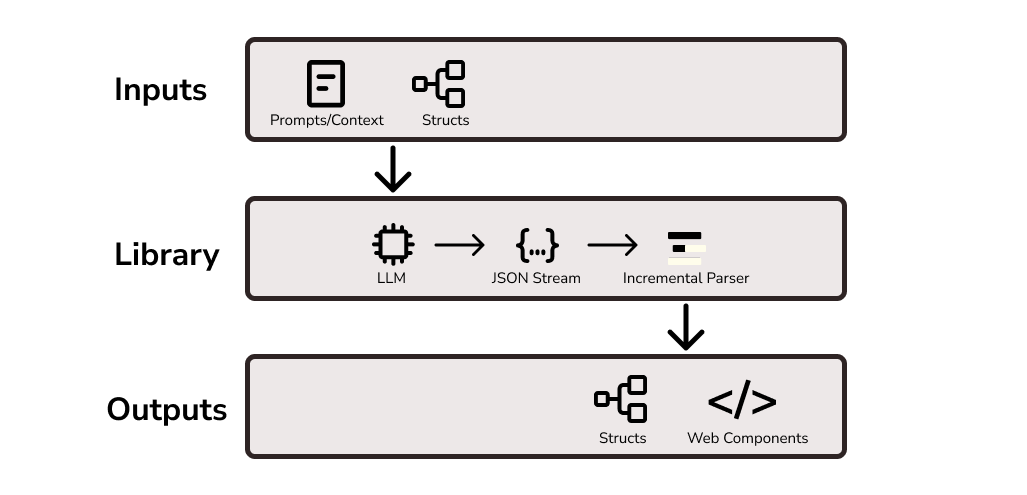

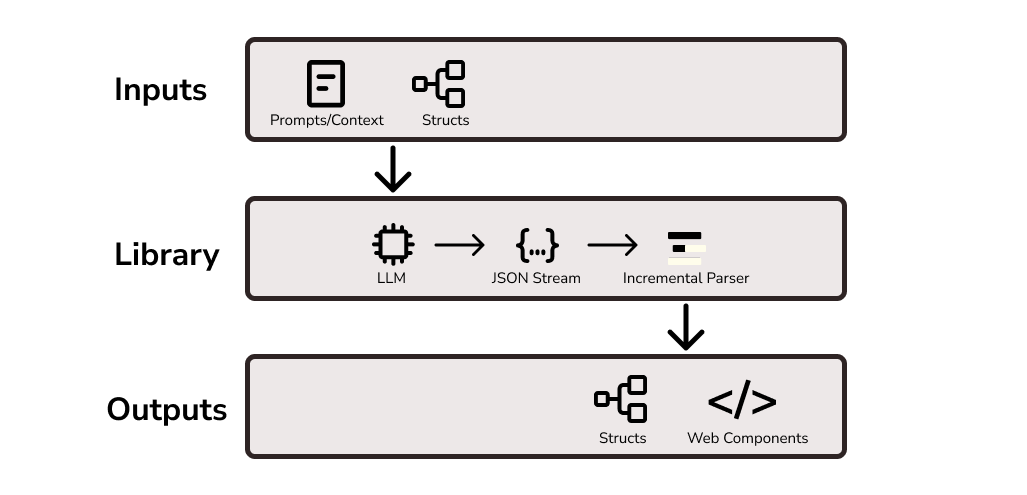

The primary feature is wrapping LLM outputs to produce valid, incremental JSON from partial, invalid JSON, based on user-provided structures. Effectively, the library acts as a wrapper for your LLM calls. Because it is primarily intended for use in web servers, it is expected to run in async workflows and is async-first.
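As a rough picture of what an async-first wrapper looks like, the sketch below accumulates chunks from an async stream and yields a new snapshot whenever the buffered text can be parsed. Everything here is an assumption for illustration: `fake_llm_stream` stands in for a real LLM client, `try_parse` is a deliberately crude placeholder for real partial-JSON handling, and `stream_snapshots` is not a struct_strm function.

```python
import asyncio
import json
from typing import Any, AsyncIterator, Optional


async def fake_llm_stream() -> AsyncIterator[str]:
    """Hypothetical stand-in for an LLM client that streams text chunks."""
    chunks = [
        '{"form_fields": [{"field_name": "fru',
        'its", "field_placeholder": "apple ',
        'orange"}]}',
    ]
    for chunk in chunks:
        await asyncio.sleep(0)  # hand control back to the event loop
        yield chunk


def try_parse(text: str) -> Optional[Any]:
    """Crude placeholder: try a few fixed closing suffixes for this one shape."""
    for suffix in ("", "}]}", '"}]}'):
        try:
            return json.loads(text + suffix)
        except json.JSONDecodeError:
            continue
    return None


async def stream_snapshots(chunks: AsyncIterator[str]) -> AsyncIterator[Any]:
    """Yield a parsed snapshot each time the accumulated text changes it."""
    buffer = ""
    last = None
    async for chunk in chunks:
        buffer += chunk
        snapshot = try_parse(buffer)
        if snapshot is not None and snapshot != last:
            last = snapshot
            yield snapshot


async def collect() -> list:
    return [snap async for snap in stream_snapshots(fake_llm_stream())]


snapshots = asyncio.run(collect())
for snap in snapshots:
    print(snap)
```

Because the wrapper is itself an async generator, a web handler can forward each snapshot to the client as soon as it is produced instead of waiting for the stream to finish.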

The library also provides simple HTML templates that serve as examples of how you can integrate the streams in your own components.

Partial JSON streaming can be done in "wrong" ways that are not effective for partially rendering responses in the UI. The library therefore also provides tested examples of how to apply it to get good results.

**High Level Flow**

## Example Component

This is an example of a form component being incrementally rendered. By using a structured query response from an LLM, in this case a form with field names and field placeholders, we can stream the form results directly to an HTML component. This drastically reduces the time to first token and the perceived time that a user needs to wait. More advanced components are under development.

```python

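from typing import List

from openai import AsyncOpenAI
from pydantic import BaseModel
from struct_strm import parse_openai

...

# Target structure: each form field has a name and a placeholder.
class DefaultFormItem(BaseModel):
    field_name: str = ""
    field_placeholder: str = ""

class DefaultFormStruct(BaseModel):
    form_fields: List[DefaultFormItem] = []

# `client` and `messages` are assumed to be defined in the elided setup above
# (for example, `client = AsyncOpenAI()`).
stream_response = client.beta.chat.completions.stream(
    model="gpt-4.1",
    messages=messages,
    response_format=DefaultFormStruct,
    temperature=0.0,
)

# Each yielded value is a DefaultFormStruct built from the JSON received so far.
form_struct_response = parse_openai(DefaultFormStruct, stream_response)
async for instance in form_struct_response:
    async for formstruct in instance:
        print(formstruct)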

```

Fully formed Python class instances are returned:

```bash

>>> DefaultFormStruct(form_fields=[DefaultFormItem(field_name="fruits", field_placeholder="")])

>>> DefaultFormStruct(form_fields=[DefaultFormItem(field_name="fruits", field_placeholder="apple ")])

>>> DefaultFormStruct(form_fields=[DefaultFormItem(field_name="fruits", field_placeholder="apple orange strawberry")])

>>> etc....

```

The corresponding incomplete JSON string streams would have looked like:

```txt

>>> "{"form_fields": [{"field_name": "fruits"

>>> "{"form_fields": [{"field_name": "fruits", "field_placeholder": "apple "

>>> "{"form_fields": [{"field_name": "fruits", "field_placeholder": "apple orange strawberry"}

>>> etc...

```

### Component Streaming

The structured responses can then be used to generate incrementally rendered web components.

For example this form:
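As a rough illustration of the idea, the sketch below re-renders an HTML form fragment from each structure snapshot. It uses plain dataclasses instead of pydantic to stay dependency-free, and the names `FormItem`, `FormStruct`, and `render_form` are hypothetical; they are not the HTML templates shipped with the library.

```python
from dataclasses import dataclass, field
from html import escape
from typing import List


@dataclass
class FormItem:
    field_name: str = ""
    field_placeholder: str = ""


@dataclass
class FormStruct:
    form_fields: List[FormItem] = field(default_factory=list)


def render_form(form: FormStruct) -> str:
    """Render one snapshot as an HTML fragment, escaping model-generated text."""
    rows = []
    for item in form.form_fields:
        name = escape(item.field_name)
        placeholder = escape(item.field_placeholder)
        rows.append(
            f'<label>{name}<input name="{name}" placeholder="{placeholder}"></label>'
        )
    return "<form>" + "".join(rows) + "</form>"


# Successive snapshots, as a streaming parser might produce them.
updates = [
    FormStruct([FormItem("fruits", "")]),
    FormStruct([FormItem("fruits", "apple ")]),
    FormStruct([FormItem("fruits", "apple orange strawberry")]),
]
for snapshot in updates:
    print(render_form(snapshot))
```

Each snapshot yields a complete, well-formed fragment, so the page can swap it in wholesale (for example with an htmx-style swap) while the model is still generating.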

<br/>

Or we can return data in a grid in more interesting ways.

For example this rubric:

## Other

I started **struct_strm** to support another project I'm working on, which provides an easy entry point for teachers to use LLM tools in their workflows. Check it out if you're interested: [Teachers PET](https://www.teacherspet.tech/)

Raw data

{

"_id": null,

"home_page": null,

"name": "struct-strm",

"maintainer": null,

"docs_url": null,

"requires_python": ">=3.10",

"maintainer_email": null,

"keywords": "htmx, json, llm, streaming, web components",

"author": null,

"author_email": "Preston Blackburn <prestonblckbrn@gmail.com>",

"download_url": "https://files.pythonhosted.org/packages/73/e6/53a0c22d73887f2bcf58613ee5f0b8ecd9a2dc56fe92243cd0a0f594ca2b/struct_strm-0.0.12.tar.gz",

"platform": null,

"bugtrack_url": null,

"license": null,

"summary": "Stream partial json generated by LLMs into valid json responses",

"version": "0.0.12",

"project_urls": {

"Documentation": "https://prestonblackburn.github.io/structured_streamer/",

"Homepage": "https://prestonblackburn.github.io/structured_streamer/",

"Issues": "https://github.com/PrestonBlackburn/structured_streamer/issues",

"Repository": "https://github.com/PrestonBlackburn/structured_streamer"

},

"split_keywords": [

"htmx",

" json",

" llm",

" streaming",

" web components"

],

"urls": [

{

"comment_text": null,

"digests": {

"blake2b_256": "77e1e8bd855b9c02e07a1c8bc37655620168fb158ac4d9e86d3e7f201223e8ff",

"md5": "ffc9932715fa8d2527d4a1895d09d6e9",

"sha256": "6e89df40275f844fc08baa8fc14590c307572d31a378a9a797eafcc24fc3d3eb"

},

"downloads": -1,

"filename": "struct_strm-0.0.12-py3-none-any.whl",

"has_sig": false,

"md5_digest": "ffc9932715fa8d2527d4a1895d09d6e9",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.10",

"size": 29574,

"upload_time": "2025-08-10T23:02:50",

"upload_time_iso_8601": "2025-08-10T23:02:50.580732Z",

"url": "https://files.pythonhosted.org/packages/77/e1/e8bd855b9c02e07a1c8bc37655620168fb158ac4d9e86d3e7f201223e8ff/struct_strm-0.0.12-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": null,

"digests": {

"blake2b_256": "73e653a0c22d73887f2bcf58613ee5f0b8ecd9a2dc56fe92243cd0a0f594ca2b",

"md5": "b4727a87b02d1e2bbd3fb5daeeb89bd0",

"sha256": "811183116277b89c0c0b06bb1dbefc9b28e5891ba255c0bfdaafa3911f9e8af9"

},

"downloads": -1,

"filename": "struct_strm-0.0.12.tar.gz",

"has_sig": false,

"md5_digest": "b4727a87b02d1e2bbd3fb5daeeb89bd0",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.10",

"size": 17219,

"upload_time": "2025-08-10T23:02:51",

"upload_time_iso_8601": "2025-08-10T23:02:51.939695Z",

"url": "https://files.pythonhosted.org/packages/73/e6/53a0c22d73887f2bcf58613ee5f0b8ecd9a2dc56fe92243cd0a0f594ca2b/struct_strm-0.0.12.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2025-08-10 23:02:51",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "PrestonBlackburn",

"github_project": "structured_streamer",

"travis_ci": false,

"coveralls": false,

"github_actions": true,

"lcname": "struct-strm"

}