<pre style="color: lime; background-color: black;">
███████╗██╗ ██╗██████╗ ██╗ ██╗██╗███████╗
██╔════╝██║ ██║██╔══██╗ ██║ ██║██║╚══███╔╝
███████╗██║ ██║██████╔╝ ██║ █╗ ██║██║ ███╔╝
╚════██║██║ ██║██╔══██╗ ██║███╗██║██║ ███╔╝
███████║╚██████╔╝██████╔╝ ╚███╔███╔╝██║███████╗
╚══════╝ ╚═════╝ ╚═════╝ ╚══╝╚══╝ ╚═╝╚══════╝
</pre>

A lightweight GPT model, trained to discover subdomains.

### Installation

```commandline
pipx install subwiz
```

OR

```commandline
pip install subwiz
```

### Recommended Use

Use [subfinder](https://github.com/projectdiscovery/subfinder) ❤️ to find subdomains from passive sources:

```commandline
subfinder -d example.com -o subdomains.txt
```

Seed subwiz with these subdomains:

```commandline
subwiz -i subdomains.txt
```

### Supported Switches

```commandline
usage: cli.py [-h] -i INPUT_FILE [-o OUTPUT_FILE] [-n NUM_PREDICTIONS] [--no-resolve]
              [--force-download] [--max-recursion MAX_RECURSION] [-t TEMPERATURE]
              [-d {auto,cpu,cuda,mps}] [-q MAX_NEW_TOKENS]
              [--resolution-concurrency RESOLUTION_CONCURRENCY] [--multi-apex]

options:
  -h, --help            show this help message and exit
  -i INPUT_FILE, --input-file INPUT_FILE
                        file containing new-line-separated subdomains. (default: None)
  -o OUTPUT_FILE, --output-file OUTPUT_FILE
                        output file to write new-line separated subdomains to. (default: None)
  -n NUM_PREDICTIONS, --num-predictions NUM_PREDICTIONS
                        number of subdomains to predict. (default: 500)
  --no-resolve          do not resolve the output subdomains. (default: False)
  --force-download      download model and tokenizer files, even if cached. (default: False)
  --max-recursion MAX_RECURSION
                        maximum number of times the inference process will recursively re-run
                        after finding new subdomains. (default: 5)
  -t TEMPERATURE, --temperature TEMPERATURE
                        add randomness to the model (recommended ≤ 0.3). (default: 0.0)
  -d {auto,cpu,cuda,mps}, --device {auto,cpu,cuda,mps}
                        hardware to run the transformer model on. (default: auto)
  -q MAX_NEW_TOKENS, --max-new-tokens MAX_NEW_TOKENS
                        maximum length of predicted subdomains in tokens. (default: 10)
  --resolution-concurrency RESOLUTION_CONCURRENCY
                        number of concurrent resolutions. (default: 128)
  --multi-apex          allow multiple apex domains in the input file. runs inference for each
                        apex separately. (default: False)
```
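The `--multi-apex` and `--max-recursion` descriptions suggest a loop along these lines: group the input by apex domain, then, for each apex, keep predicting and resolving until a pass finds nothing new or the recursion limit is reached. This is a minimal sketch under those assumptions, not subwiz's code. `naive_apex`, `group_by_apex`, `recursive_discovery` and the `predict`/`resolves` callables are hypothetical, and `naive_apex` simply takes the last two labels, so it mishandles suffixes such as `co.uk` (subwiz lists `tldextract` as a dependency, which handles those).

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Set


def naive_apex(domain: str) -> str:
    """Hypothetical helper: treat the last two labels as the apex.

    Wrong for multi-part suffixes such as example.co.uk; a real tool would
    use a public-suffix-aware library like tldextract.
    """
    labels = domain.lower().rstrip(".").split(".")
    return ".".join(labels[-2:])


def group_by_apex(subdomains: Iterable[str]) -> Dict[str, Set[str]]:
    """Bucket input subdomains by apex so inference can run once per apex."""
    groups: Dict[str, Set[str]] = defaultdict(set)
    for sub in subdomains:
        sub = sub.strip().lower().rstrip(".")
        if sub:  # skip blank lines
            groups[naive_apex(sub)].add(sub)
    return dict(groups)


def recursive_discovery(
    known: Set[str],
    predict: Callable[[Set[str]], Set[str]],
    resolves: Callable[[str], bool],
    max_recursion: int = 5,
) -> Set[str]:
    """Predict, keep candidates that resolve, and re-run while new ones appear.

    Assumption: one initial pass plus at most `max_recursion` re-runs.
    """
    known = set(known)
    found: Set[str] = set()
    for _ in range(max_recursion + 1):
        candidates = predict(known) - known
        new = {c for c in candidates if resolves(c)}
        if not new:
            # The known set is unchanged, so a deterministic model would repeat itself.
            break
        found |= new
        known |= new  # newly resolved names seed the next pass
    return found
```

To mimic `--multi-apex`, `recursive_discovery` would be called once per entry of `group_by_apex(...)`. The early `break` means the limit only matters when every pass keeps turning up new, resolving names.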

### In Python

Subwiz can also be used from Python; `subwiz.run` accepts the same parameters as the command-line interface.

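```python
import subwiz

# Subdomains you already know, e.g. from subfinder.
known_subdomains = ['test1.example.com', 'test2.example.com']

# Predict new subdomains seeded by the known ones.
new_subdomains = subwiz.run(input_domains=known_subdomains)
```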
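To feed the file written by `subfinder -o` into the Python API, it can be read and cleaned up first. This is a small sketch assuming a newline-separated file; `load_subdomains` and `seed_subwiz` are hypothetical helpers, and only the `subwiz.run(input_domains=...)` call comes from the example above.

```python
from pathlib import Path
from typing import List


def load_subdomains(path: str) -> List[str]:
    """Read a newline-separated file (such as subfinder's -o output).

    Strips whitespace, lower-cases, drops trailing dots, blank lines and
    duplicates, and keeps the first-seen order.
    """
    seen = set()
    result: List[str] = []
    for line in Path(path).read_text(encoding="utf-8").splitlines():
        domain = line.strip().lower().rstrip(".")
        if domain and domain not in seen:
            seen.add(domain)
            result.append(domain)
    return result


def seed_subwiz(path: str):
    """Hypothetical wrapper: load the file and pass it to subwiz.run."""
    import subwiz  # imported here so load_subdomains works without subwiz installed

    return subwiz.run(input_domains=load_subdomains(path))
```

For example, `seed_subwiz("subdomains.txt")` after running the subfinder command from the recommended workflow. The normalisation steps are an assumption about useful clean-up; subwiz may already normalise its input itself.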

---

### Model

Use the `--no-resolve` flag to inspect model outputs without checking if they resolve.
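When resolution is left on, every predicted name has to be looked up, and `--resolution-concurrency` caps how many lookups run at once (default 128). The sketch below shows the common asyncio pattern for such a cap, a semaphore around each lookup. It is an assumption about how this could work, not subwiz's implementation: subwiz lists `aiodns` as a dependency, whereas this sketch uses the standard library's `loop.getaddrinfo`, and `system_resolves`/`filter_resolving` are hypothetical names.

```python
import asyncio
import socket
from typing import Awaitable, Callable, Iterable, List, Tuple


async def system_resolves(domain: str) -> bool:
    """Return True if the OS resolver finds any address for `domain`."""
    loop = asyncio.get_running_loop()
    try:
        await loop.getaddrinfo(domain, None)
        return True
    except (socket.gaierror, UnicodeError):  # NXDOMAIN-style failure or invalid label
        return False


async def filter_resolving(
    domains: Iterable[str],
    resolves: Callable[[str], Awaitable[bool]] = system_resolves,
    concurrency: int = 128,
) -> List[str]:
    """Keep the domains that resolve, with at most `concurrency` lookups in flight."""
    semaphore = asyncio.Semaphore(concurrency)

    async def check(domain: str) -> Tuple[str, bool]:
        async with semaphore:  # waits here once `concurrency` lookups are running
            return domain, await resolves(domain)

    # gather returns results in the same order as the input
    results = await asyncio.gather(*(check(d) for d in domains))
    return [domain for domain, ok in results if ok]
```

`asyncio.run(filter_resolving(candidates, concurrency=128))` returns the candidates that resolve, in input order. Passing a different `resolves` coroutine makes it easy to swap in another DNS client or a fake for testing.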

#### Architecture

Subwiz is an ultra-lightweight transformer model based on [nanoGPT](https://github.com/karpathy/nanoGPT/tree/master) ❤️:

- 17.3M parameters.
- Trained on 26M tokens of subdomain lists collected from passive sources.
- Tokenizer trained on the same subdomain lists, with a vocabulary of 8192 tokens.

#### Hugging Face

The model is hosted on Hugging Face as [HadrianSecurity/subwiz](https://huggingface.co/HadrianSecurity/subwiz). It is downloaded automatically the first time you run subwiz.

#### Inference

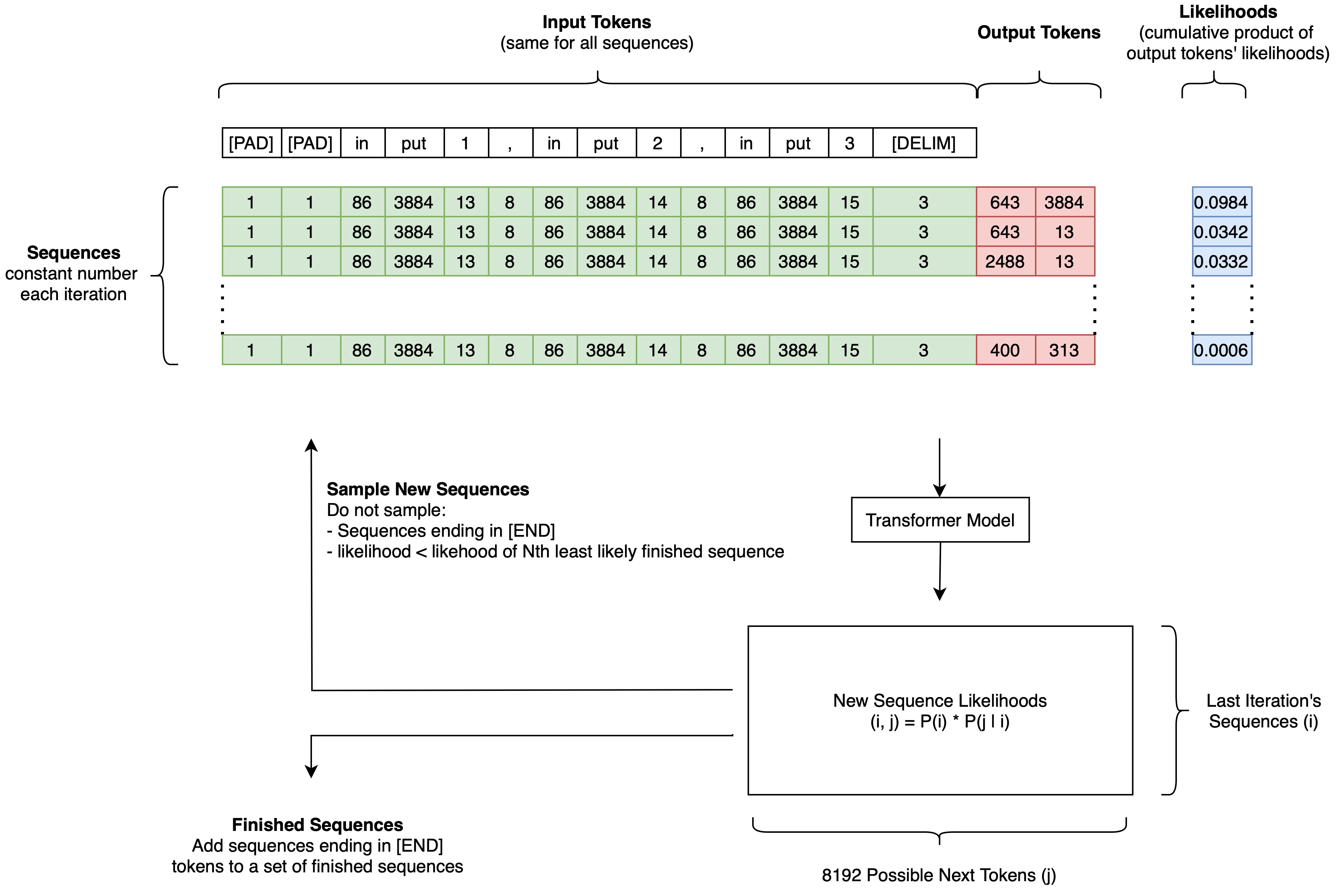

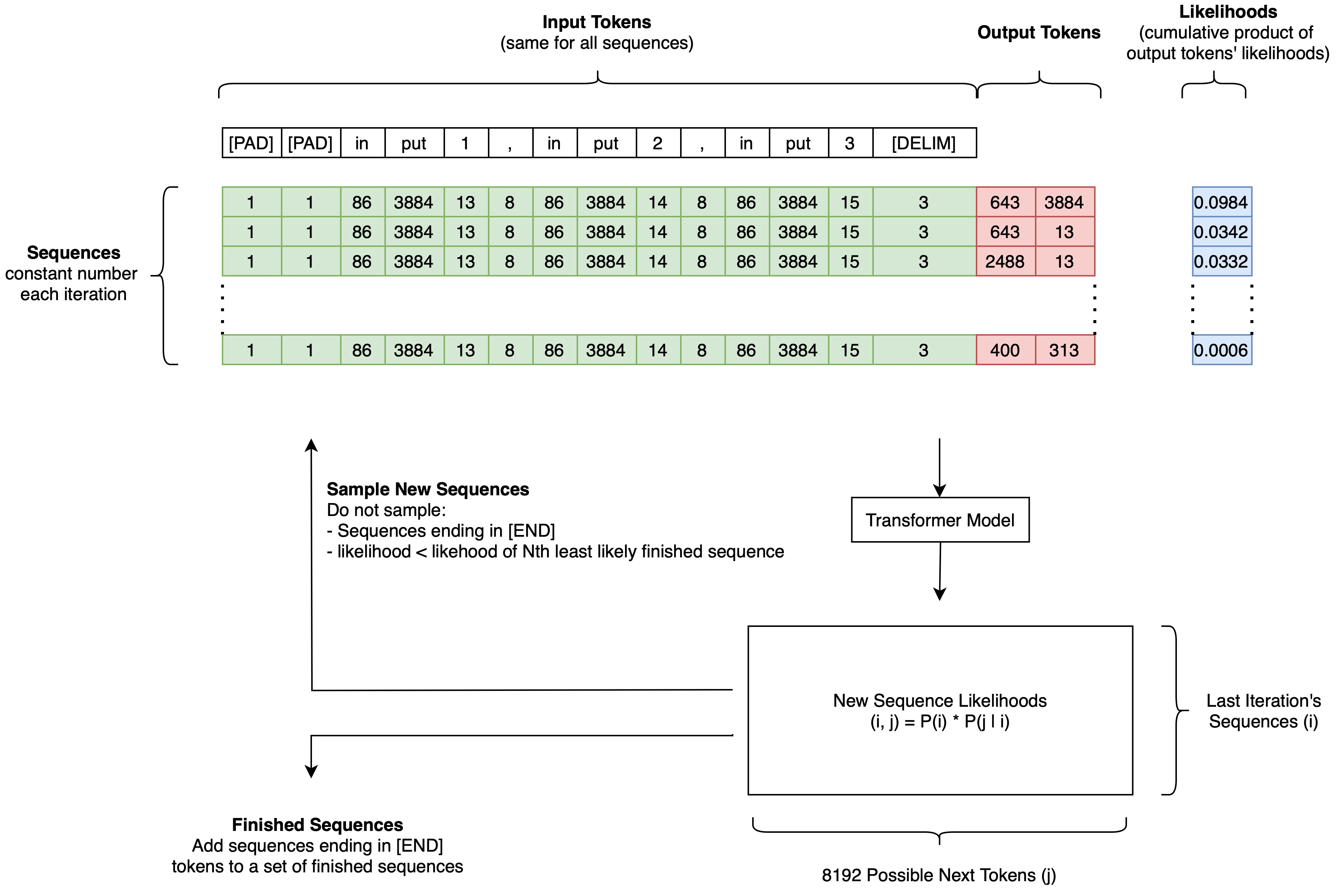

Typically, generative transformer models (e.g. ChatGPT) predict a single output sequence.

Subwiz predicts the N most likely sequences using a beam search algorithm.

*Beam search algorithm to predict the N most likely sequences using a generative transformer model.*
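The idea can be illustrated with a generic beam search: at each step every partial sequence is extended by every candidate next token, only the `beam_width` highest-scoring partial sequences (by summed log-probability) are kept, sequences that emit an end token are collected, and the best N are returned. This is a textbook sketch rather than subwiz's implementation; the `next_token_logprobs` callable, the `<end>` token and the toy probability table are stand-ins for the real transformer and tokenizer.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

END = "<end>"  # assumed end-of-sequence token

# Hypothetical model interface: tokens so far -> {next_token: log-probability}.
NextTokenFn = Callable[[Sequence[str]], Dict[str, float]]
Scored = Tuple[List[str], float]


def beam_search(
    next_token_logprobs: NextTokenFn,
    n: int,
    beam_width: int,
    max_new_tokens: int,
) -> List[Scored]:
    """Return up to n finished sequences with the highest total log-probability."""
    beams: List[Scored] = [([], 0.0)]
    finished: List[Scored] = []
    for _ in range(max_new_tokens):
        candidates: List[Scored] = []
        for tokens, score in beams:
            for token, logp in next_token_logprobs(tokens).items():
                if token == END:
                    # Sequence is complete, so its score is final.
                    finished.append((tokens, score + logp))
                else:
                    candidates.append((tokens + [token], score + logp))
        # Prune: keep only the beam_width best partial sequences.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
        if not beams:
            break
    # Partial sequences still open after max_new_tokens are discarded.
    finished.sort(key=lambda c: c[1], reverse=True)
    return finished[:n]


# Toy "model": a lookup table of next-token probabilities.
TOY = {
    (): {"api": math.log(0.6), "dev": math.log(0.4)},
    ("api",): {END: math.log(0.7), "-v2": math.log(0.3)},
    ("dev",): {END: math.log(0.9), "-v2": math.log(0.1)},
    ("api", "-v2"): {END: 0.0},
    ("dev", "-v2"): {END: 0.0},
}


def toy_model(tokens: Sequence[str]) -> Dict[str, float]:
    return TOY[tuple(tokens)]


for tokens, score in beam_search(toy_model, n=3, beam_width=4, max_new_tokens=3):
    print("".join(tokens), round(math.exp(score), 2))
```

With `beam_width=4` the search returns `api`, `dev` and `api-v2`. With `beam_width=1` it degenerates to greedy decoding and never explores `dev`, which is why keeping several beams is what lets a single model produce N distinct candidates.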

Raw data

{
  "_id": null,
  "home_page": null,
  "name": "subwiz",
  "maintainer": null,
  "docs_url": null,
  "requires_python": "<3.14,>=3.9",
  "maintainer_email": null,
  "keywords": "machine learning, recon, subdomains, transformers",
  "author": null,
  "author_email": "Klaas Meinke <klaas@hadrian.io>",
  "download_url": "https://files.pythonhosted.org/packages/cf/3b/fe02a9291bfa08a9da9707fcda3043e31f707106b5427758430e1ad0595e/subwiz-0.4.1.tar.gz",
  "platform": null,


  "bugtrack_url": null,
  "license": "MIT License",
  "summary": "A lightweight GPT model, trained to discover subdomains.",
  "version": "0.4.1",
  "project_urls": {
    "Source": "https://github.com/hadriansecurity/subwiz"
  },
  "split_keywords": [
    "machine learning",
    " recon",
    " subdomains",
    " transformers"
  ],
  "urls": [
    {
      "comment_text": null,
      "digests": {
        "blake2b_256": "daa2cf44515428b3876dadee094b580a36048ea69437f6dcbca5775f0a6a77be",
        "md5": "ddeb0f5b7cadb002b4e78e9a924389a6",
        "sha256": "5cd1aa969fc9fb8f2325e61a402f99f637ce9513a314cf074d9feac0f390e0b5"
      },
      "downloads": -1,
      "filename": "subwiz-0.4.1-py3-none-any.whl",
      "has_sig": false,
      "md5_digest": "ddeb0f5b7cadb002b4e78e9a924389a6",
      "packagetype": "bdist_wheel",
      "python_version": "py3",
      "requires_python": "<3.14,>=3.9",
      "size": 16065,
      "upload_time": "2025-08-22T13:34:57",
      "upload_time_iso_8601": "2025-08-22T13:34:57.686545Z",
      "url": "https://files.pythonhosted.org/packages/da/a2/cf44515428b3876dadee094b580a36048ea69437f6dcbca5775f0a6a77be/subwiz-0.4.1-py3-none-any.whl",
      "yanked": false,
      "yanked_reason": null
    },
    {
      "comment_text": null,
      "digests": {
        "blake2b_256": "cf3bfe02a9291bfa08a9da9707fcda3043e31f707106b5427758430e1ad0595e",
        "md5": "ab228d0d6cc490c6641782a65db05493",
        "sha256": "d635b9a3286a60a1fb6fba83cd4ff4dfd81ebe069fbff93d6711726ceb03c324"
      },
      "downloads": -1,
      "filename": "subwiz-0.4.1.tar.gz",
      "has_sig": false,
      "md5_digest": "ab228d0d6cc490c6641782a65db05493",
      "packagetype": "sdist",
      "python_version": "source",
      "requires_python": "<3.14,>=3.9",
      "size": 329274,
      "upload_time": "2025-08-22T13:34:59",
      "upload_time_iso_8601": "2025-08-22T13:34:59.687657Z",
      "url": "https://files.pythonhosted.org/packages/cf/3b/fe02a9291bfa08a9da9707fcda3043e31f707106b5427758430e1ad0595e/subwiz-0.4.1.tar.gz",
      "yanked": false,
      "yanked_reason": null
    }
  ],
  "upload_time": "2025-08-22 13:34:59",
  "github": true,
  "gitlab": false,
  "bitbucket": false,
  "codeberg": false,
  "github_user": "hadriansecurity",
  "github_project": "subwiz",
  "travis_ci": false,
  "coveralls": false,
  "github_actions": true,
  "requirements": [
    {"name": "aiodns", "specs": [[">=", "1.0.0"]]},
    {"name": "huggingface-hub", "specs": [[">=", "0.5.0"]]},
    {"name": "pydantic", "specs": [[">=", "1.10.0"]]},
    {"name": "pytest", "specs": [[">=", "8.0.0"]]},
    {"name": "tldextract", "specs": [[">=", "3.0.0"]]},
    {"name": "torch", "specs": [[">=", "2.3.0"]]},
    {"name": "transformers", "specs": [[">=", "4.34.0"]]}
  ],
  "lcname": "subwiz"
}