# tableschema-py

A Python implementation of the [Table Schema](http://specs.frictionlessdata.io/table-schema/) standard.

> **[Important Notice]** We have released [Frictionless Framework](https://github.com/frictionlessdata/framework). This framework provides improved `tableschema` functionality extended to be a complete data solution. The change is not breaking for existing software, so no action is required. Please read the [Migration Guide](https://framework.frictionlessdata.io/docs/codebase/migration.html) from `tableschema` to Frictionless Framework.

## Features

- `Table` to work with data tables described by Table Schema

- `Schema` representing Table Schema

- `Field` representing Table Schema field

- `validate` to validate Table Schema

- `infer` to infer Table Schema from data

- built-in command-line interface to validate and infer schemas

- storage/plugins system to connect tables to different storage backends like SQL Database

## Contents

<!--TOC-->

- [Getting Started](#getting-started)

- [Installation](#installation)

- [Documentation](#documentation)

- [Introduction](#introduction)

- [Working with Table](#working-with-table)

- [Working with Schema](#working-with-schema)

- [Working with Field](#working-with-field)

- [API Reference](#api-reference)

- [`cli`](#cli)

- [`Table`](#table)

- [`Schema`](#schema)

- [`Field`](#field)

- [`Storage`](#storage)

- [`validate`](#validate)

- [`infer`](#infer)

- [`FailedCast`](#failedcast)

- [`DataPackageException`](#datapackageexception)

- [`TableSchemaException`](#tableschemaexception)

- [`LoadError`](#loaderror)

- [`ValidationError`](#validationerror)

- [`CastError`](#casterror)

- [`IntegrityError`](#integrityerror)

- [`UniqueKeyError`](#uniquekeyerror)

- [`RelationError`](#relationerror)

- [`UnresolvedFKError`](#unresolvedfkerror)

- [`StorageError`](#storageerror)

- [Experimental](#experimental)

- [Contributing](#contributing)

- [Changelog](#changelog)

<!--TOC-->

## Getting Started

### Installation

The package uses semantic versioning, which means that major versions could include breaking changes. It's highly recommended to specify a `tableschema` version range in your `setup/requirements` file, e.g. `tableschema>=1.0,<2.0`.

```bash

$ pip install tableschema

```

## Documentation

### Introduction

Let's start with a simple example:

```python

from tableschema import Table

# Create table

table = Table('path.csv', schema='schema.json')

# Print schema descriptor

print(table.schema.descriptor)

# Print cast rows in a dict form

for keyed_row in table.iter(keyed=True):
    print(keyed_row)

```

### Working with Table

A table is a core concept in a tabular data world. It represents data with metadata (Table Schema). Let's see how we can use it in practice.

Suppose we have a local CSV file. The data could also be inline or come from a remote link; all of these are supported by the `Table` class (except local files for in-browser usage, of course). But say it's `data.csv` for now:

```csv

city,location

london,"51.50,-0.11"

paris,"48.85,2.30"

rome,N/A

```

Let's create and read a table instance. We use the `Table` constructor and the `table.read` method with the `keyed` option to get a list of keyed rows:

```python

table = Table('data.csv')

table.headers # ['city', 'location']

table.read(keyed=True)

# [

# {city: 'london', location: '51.50,-0.11'},

# {city: 'paris', location: '48.85,2.30'},

# {city: 'rome', location: 'N/A'},

# ]

```

As we can see, our locations are just strings. But they should be geopoints. Also, Rome's location is not available, but it's just a string `N/A` instead of `None`. First we have to infer Table Schema:

```python

table.infer()

table.schema.descriptor

# { fields:

# [ { name: 'city', type: 'string', format: 'default' },

# { name: 'location', type: 'geopoint', format: 'default' } ],

# missingValues: [ '' ] }

table.read(keyed=True)

# Fails with a data validation error

```

Let's fix the "not available" location. There is a `missingValues` property in Table Schema specification. As a first try we set `missingValues` to `N/A` in `table.schema.descriptor`. The schema descriptor can be changed in-place, but all changes should also be committed using `table.schema.commit()`:

```python

table.schema.descriptor['missingValues'] = 'N/A'

table.schema.commit()

table.schema.valid # False

table.schema.errors

# [<ValidationError: "'N/A' is not of type 'array'">]

```

As good citizens, we've decided to check our schema descriptor's validity. And it's not valid! We should use an array for the `missingValues` property. Also, don't forget to include the empty string as a valid missing value:

```python

table.schema.descriptor['missingValues'] = ['', 'N/A']

table.schema.commit()

table.schema.valid # True

```

All good. It looks like we're ready to read our data again:

```python

table.read(keyed=True)

# [

# {city: 'london', location: [51.50,-0.11]},

# {city: 'paris', location: [48.85,2.30]},

# {city: 'rome', location: None},

# ]

```

Now we see that:

- locations are arrays with numeric latitude and longitude

- Rome's location is a native Python `None`

And because there are no errors after reading, we can be sure that our data is valid against our schema. Let's save it:

```python

table.schema.save('schema.json')

table.save('data.csv')

```

Our `data.csv` looks the same because it has been stringified back to `csv` format. But now we have `schema.json`:

```json

{

"fields": [

{

"name": "city",

"type": "string",

"format": "default"

},

{

"name": "location",

"type": "geopoint",

"format": "default"

}

],

"missingValues": [

"",

"N/A"

]

}

```

If we decide to improve it even more we could update the schema file and then open it again. But now providing a schema path:

```python

table = Table('data.csv', schema='schema.json')

# Continue the work

```

As already mentioned, a given schema can be used to *validate* data (see the [Schema](#schema) section for schema specification details). In default mode, invalid data rows immediately trigger an [exception](#exceptions) in the `table.iter()`/`table.read()` methods.

Suppose this schema-invalid local file `invalid_data.csv`:

```csv

key,value

zero,0

one,not_an_integer

two,2

```

We're going to validate the data against the following schema:

```python

table = Table(

'invalid_data.csv',

schema={'fields': [{'name': 'key'}, {'name': 'value', 'type': 'integer'}]})

```

Iterating over the data triggers an exception due to the failed cast of `'not_an_integer'` to `int`:

```python

for row in table.iter():
    print(row)

# Traceback (most recent call last):

# ...

# tableschema.exceptions.CastError: There are 1 cast errors (see exception.errors) for row "3"

```

Hint: The row number count starts with 1 and also includes header lines.

(Note: You can optionally switch off `iter()`/`read()` value casting using the cast parameter, see reference below.)

By providing a custom exception handler (a callable) to those methods you can treat occurring exceptions at your own discretion, i.e. to "fail late" and e.g. gather a validation report on the whole data:

```python

errors = []

def exc_handler(exc, row_number=None, row_data=None, error_data=None):
    errors.append((exc, row_number, row_data, error_data))

for row in table.iter(exc_handler=exc_handler):
    print(row)

# ['zero', 0]

# ['one', FailedCast('not_an_integer')]

# ['two', 2]

print(errors)

# [(CastError('There are 1 cast errors (see exception.errors) for row "3"',),

# 3,

# OrderedDict([('key', 'one'), ('value', 'not_an_integer')]),

# OrderedDict([('value', 'not_an_integer')]))]

```

Note that

- Data rows are yielded even though the data is schema-invalid; this is due to our custom exception handler choosing not to raise exceptions (but rather collect them in the `errors` list).

- Data field values that can't be cast properly (if the `iter()`/`read()` `cast` parameter is set to `True`, which is the default) are wrapped in a `FailedCast` "value holder". This allows for distinguishing uncast values from successfully cast values on the data consumer side. `FailedCast` instances can only be yielded when custom exception handling is in place.

- The custom exception handler callable must support a function signature as specified in the `iter()`/`read()` sections of the `Table` class API reference.
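The "fail late" flow described in these notes can be illustrated without the library. The following is a minimal standalone sketch, not `tableschema`'s implementation: `iter_cast_rows` and `Unparsed` are hypothetical names standing in for `table.iter()` and `FailedCast`, and the row numbering assumes a single header line.

```python
from collections import OrderedDict


class Unparsed:
    """Hypothetical stand-in for FailedCast: marks a value that failed to cast."""

    def __init__(self, value):
        self.value = value

    def __repr__(self):
        return 'Unparsed({!r})'.format(self.value)


def iter_cast_rows(headers, rows, casters, exc_handler=None):
    """Yield cast rows; report cast failures to exc_handler instead of raising."""
    # Row numbers start at 1 and include the header line, so data starts at 2
    for row_number, row in enumerate(rows, start=2):
        row_data = OrderedDict(zip(headers, row))
        error_data = OrderedDict()
        result = []
        for name, value in row_data.items():
            try:
                result.append(casters[name](value))
            except ValueError:
                error_data[name] = value
                result.append(Unparsed(value))
        if error_data:
            exc = ValueError('cast errors in row {}'.format(row_number))
            if exc_handler is None:
                raise exc  # default mode: fail on the first invalid row
            exc_handler(exc, row_number=row_number,
                        row_data=row_data, error_data=error_data)
        yield result


errors = []


def collect(exc, row_number=None, row_data=None, error_data=None):
    # Collect a small report entry instead of raising
    errors.append((row_number, dict(error_data)))


rows = [['zero', '0'], ['one', 'not_an_integer'], ['two', '2']]
casters = {'key': str, 'value': int}
cast_rows = list(iter_cast_rows(['key', 'value'], rows, casters, exc_handler=collect))
print(cast_rows)
print(errors)  # [(3, {'value': 'not_an_integer'})]
```

Because the handler only records the problem, all three rows are still yielded, and the consumer can tell the failed value apart by its wrapper type.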

### Working with Schema

A `Schema` is a model of a schema with helpful methods for working with the schema and supported data. Schema instances can be initialized with a schema source as a URL to a JSON file or a JSON object. The schema is initially validated (see [validate](#validate) below). By default, validation errors are stored in `schema.errors`, but in strict mode they are raised immediately.

Let's create a blank schema. It's not valid because `descriptor.fields` property is required by the [Table Schema](http://specs.frictionlessdata.io/table-schema/) specification:

```python

from tableschema import Schema

schema = Schema()

schema.valid # False

schema.errors

# [<ValidationError: "'fields' is a required property">]

```

To avoid creating a schema descriptor by hand we will use a `schema.infer` method to infer the descriptor from given data:

```python

schema.infer([

['id', 'age', 'name'],

['1','39','Paul'],

['2','23','Jimmy'],

['3','36','Jane'],

['4','28','Judy'],

])

schema.valid # True

schema.descriptor

#{ fields:

# [ { name: 'id', type: 'integer', format: 'default' },

# { name: 'age', type: 'integer', format: 'default' },

# { name: 'name', type: 'string', format: 'default' } ],

# missingValues: [ '' ] }

```

Now we have an inferred schema and it's valid. We can cast data rows against our schema. We provide a string input which will be cast correspondingly:

```python

schema.cast_row(['5', '66', 'Sam'])

# [ 5, 66, 'Sam' ]

```

But if we try to provide a missing value for the `age` field, the cast will fail because the only valid "missing" value is an empty string. Let's update our schema:

```python

schema.cast_row(['6', 'N/A', 'Walt'])

# Cast error

schema.descriptor['missingValues'] = ['', 'N/A']

schema.commit()

schema.cast_row(['6', 'N/A', 'Walt'])

# [ 6, None, 'Walt' ]

```
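To make the role of `missingValues` concrete, here is a minimal standalone sketch of the idea. It is not the library's code: `cast_row_sketch` is a hypothetical helper that maps any listed missing value to `None` before applying a per-field caster.

```python
def cast_row_sketch(row, casters, missing_values=('',)):
    """Cast each value, treating any listed missing value as None."""
    result = []
    for value, caster in zip(row, casters):
        if value in missing_values:
            result.append(None)  # missing values bypass the caster
        else:
            result.append(caster(value))  # may raise ValueError
    return result


casters = [int, int, str]

# With the default missing values, 'N/A' reaches int() and fails
try:
    cast_row_sketch(['6', 'N/A', 'Walt'], casters)
except ValueError as error:
    print('Cast error:', error)

# Once 'N/A' is declared as missing, it becomes None
print(cast_row_sketch(['6', 'N/A', 'Walt'], casters, missing_values=('', 'N/A')))
# [6, None, 'Walt']
```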

We can save the schema to a local file, and resume work on it at any time by loading it from that file:

```python

schema.save('schema.json')

schema = Schema('schema.json')

```

### Working with Field

```python

from tableschema import Field

# Init field

field = Field({'name': 'name', 'type': 'number'})

# Cast a value

field.cast_value('12345') # -> 12345

```

Data values can be cast to native Python objects with a `Field` instance. Field instances can be initialized with [field descriptors](https://specs.frictionlessdata.io/table-schema/). This allows formats and constraints to be defined.

Casting a value checks that the value is of the expected type, is in the correct format, and complies with any constraints imposed by the schema. For example, a date value (in ISO 8601 format) can be cast with a field of type `date`. Values that can't be cast, or that don't meet the constraints, raise a `CastError` exception.

## API Reference

### `cli`

```python

cli()

```

Command-line interface

```

Usage: tableschema [OPTIONS] COMMAND [ARGS]...

Options:

--help Show this message and exit.

Commands:

infer Infer a schema from data.

info Return info on this version of Table Schema

validate Validate that a supposed schema is in fact a Table Schema.

```

### `Table`

```python

Table(self,

source,

schema=None,

strict=False,

post_cast=[],

storage=None,

**options)

```

Table representation

__Arguments__

- __source (str/list[])__: data source one of:

- local file (path)

- remote file (url)

- array of arrays representing the rows

- __schema (any)__: data schema in all forms supported by `Schema` class

- __strict (bool)__: strictness option to pass to `Schema` constructor

- __post_cast (function[])__: list of post cast processors

- __storage (None)__: storage name like `sql` or `bigquery`

- __options (dict)__: `tabulator` or storage's options

__Raises__

- `TableSchemaException`: raises on any error

#### `table.hash`

Table's SHA256 hash, if available.

The hash is available once the table has been read, e.g. using `table.read`; otherwise this property returns `None`.

In the middle of an iteration it returns the hash of the contents read so far.

__Returns__

`str/None`: SHA256 hash

#### `table.headers`

Table's headers, if available

__Returns__

`str[]`: headers

#### `table.schema`

Returns schema class instance if available

__Returns__

`Schema`: schema

#### `table.size`

Table's size in bytes, if available.

The size is available once the table has been read, e.g. using `table.read`; otherwise this property returns `None`.

In the middle of an iteration it returns the size of the contents read so far.

__Returns__

`int/None`: size in BYTES

#### `table.iter`

```python

table.iter(keyed=False,

extended=False,

cast=True,

integrity=False,

relations=False,

foreign_keys_values=False,

exc_handler=None)

```

Iterates through the table data and emits rows cast based on table schema.

__Arguments__

- __keyed (bool)__: yield keyed rows in the form of `{header1: value1, header2: value2}` (default is false; the form of rows is `[value1, value2]`)

- __extended (bool)__: yield extended rows in the form of `[rowNumber, [header1, header2], [value1, value2]]` (default is false; the form of rows is `[value1, value2]`)

- __cast (bool)__: disable data casting if false (default is true)

- __integrity (dict)__: dictionary in the form of `{'size': <bytes>, 'hash': '<sha256>'}` to check integrity of the table when it's read completely. Both keys are optional.

- __relations (dict)__: dictionary of foreign key references in the form of `{resource1: [{field1: value1, field2: value2}, ...], ...}`. If provided, foreign key fields will be checked and resolved to one of their references (/!\ one-to-many foreign keys are not completely resolved).

- __foreign_keys_values (dict)__: three-level dictionary of foreign key references optimized to speed up the validation process, in the form of `{resource1: {(fk_field1, fk_field2): {(value1, value2): {one_keyedrow}, ... }}}`. If not provided but `relations` is true, it will be created before the validation process by the `index_foreign_keys_values` method.

- __exc_handler (func)__: optional custom exception handler callable. Can be used to defer raising errors (i.e. "fail late"), e.g. for data validation purposes. Must support the signature below.

__Custom exception handler__

```python

def exc_handler(exc, row_number=None, row_data=None, error_data=None):
    '''Custom exception handler (example)

    # Arguments:
        exc(Exception):
            Deferred exception instance
        row_number(int):
            Data row number that triggers exception exc
        row_data(OrderedDict):
            Invalid data row source data
        error_data(OrderedDict):
            Data row source data field subset responsible for the error, if
            applicable (e.g. invalid primary or foreign key fields). May be
            identical to row_data.
    '''
    # ...

```

__Raises__

- `TableSchemaException`: base class of any error

- `CastError`: data cast error

- `IntegrityError`: integrity checking error

- `UniqueKeyError`: unique key constraint violation

- `UnresolvedFKError`: unresolved foreign key reference error

__Returns__

`Iterator[list]`: yields rows
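The `integrity` argument described above expects a dictionary with a byte size and a SHA256 hash. As a minimal sketch of how such a dictionary could be prepared with the standard library, assuming the hash is the hex digest of the raw file bytes (an assumption to verify against your data), the hypothetical helper `build_integrity` below reads a file in chunks:

```python
import hashlib
import os
import tempfile


def build_integrity(path):
    """Return {'size': <bytes>, 'hash': '<sha256 hex digest>'} for a file."""
    digest = hashlib.sha256()
    size = 0
    with open(path, 'rb') as stream:
        # Read in chunks so large files do not need to fit in memory
        for chunk in iter(lambda: stream.read(65536), b''):
            digest.update(chunk)
            size += len(chunk)
    return {'size': size, 'hash': digest.hexdigest()}


# Write a small throwaway CSV file to demonstrate the helper
with tempfile.NamedTemporaryFile('wb', suffix='.csv', delete=False) as handle:
    handle.write(b'key,value\nzero,0\n')
    path = handle.name

integrity = build_integrity(path)
os.remove(path)
print(integrity['size'])  # 17
```

The resulting dictionary could then be passed as `table.read(integrity=integrity)`. Since both keys are optional, either one can be dropped if only a size or only a hash check is wanted.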

#### `table.read`

```python

table.read(keyed=False,

extended=False,

cast=True,

limit=None,

integrity=False,

relations=False,

foreign_keys_values=False,

exc_handler=None)

```

Read the whole table and return as array of rows

> It has the same API as `table.iter` except for

__Arguments__

- __limit (int)__: limit count of rows to read and return

__Returns__

`list[]`: returns rows

#### `table.infer`

```python

table.infer(limit=100,

confidence=0.75,

missing_values=[''],

guesser_cls=None,

resolver_cls=None)

```

Infer a schema for the table.

It will infer and set Table Schema to `table.schema` based on table data.

__Arguments__

- __limit (int)__: limit rows sample size

- __confidence (float)__: how many casting errors are allowed (as a ratio, between 0 and 1)

- __missing_values (str[])__: list of missing values (by default `['']`)

- __guesser_cls (class)__: you can implement inferring strategies by

providing type-guessing and type-resolving classes [experimental]

- __resolver_cls (class)__: you can implement inferring strategies by

providing type-guessing and type-resolving classes [experimental]

__Returns__

`dict`: Table Schema descriptor

#### `table.save`

```python

table.save(target, storage=None, **options)

```

Save data source to file locally in CSV format with `,` (comma) delimiter

> To save schema use `table.schema.save()`

__Arguments__

- __target (str)__: saving target (e.g. file path)

- __storage (None/str)__: storage name like `sql` or `bigquery`

- __options (dict)__: `tabulator` or storage options

__Raises__

- `TableSchemaException`: raises an error if there is saving problem

__Returns__

`True/Storage`: returns true or storage instance

#### `table.index_foreign_keys_values`

```python

table.index_foreign_keys_values(relations)

```

Creates a three-level dictionary of foreign key references

We create them optimized to speed up validation process in a form of

`{resource1: {(fk_field1, fk_field2): {(value1, value2): {one_keyedrow}, ... }}}`.

For each foreign key of the schema it will iterate through the corresponding `relations['resource']` to create an index (i.e. a dict) of existing values for the foreign fields and store one keyed row for each value combination.

The optimization relies on indexing the possible values for one foreign key in a hashmap to speed up later resolution.

This method is public to allow creating the index once and applying it to multiple tables sharing the same schema (typically [grouped resources in datapackage](https://github.com/frictionlessdata/datapackage-py#group)).

__Notes__

- the second key of the output is a tuple of the foreign fields,

a proxy identifier of the foreign key

- the same relation resource can be indexed multiple times, as a schema can contain more than one foreign key pointing to the same resource

__Arguments__

- __relations (dict)__:

dict of foreign key references in a form of

`{resource1: [{field1: value1, field2: value2}, ...], ...}`.

It must contain all resources pointed in the foreign keys schema definition.

__Returns__

`dict`:

returns a three-level dictionary of foreign key references

optimized to speed up validation process in a form of

`{resource1: {(fk_field1, fk_field2): {(value1, value2): {one_keyedrow}, ... }}}`
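The three-level structure is easier to see on a small example. The following standalone sketch is not the library's implementation: `index_foreign_keys_sketch` is a hypothetical function, the foreign key descriptors follow the Table Schema `foreignKeys` shape, and keying the second level on the referenced fields is an assumption based on the notes above.

```python
def index_foreign_keys_sketch(foreign_keys, relations):
    """Build {resource: {fk_fields: {values: keyed_row}}} from relations."""
    index = {}
    for fk in foreign_keys:
        resource = fk['reference']['resource']
        fields = tuple(fk['reference']['fields'])
        by_values = index.setdefault(resource, {}).setdefault(fields, {})
        for keyed_row in relations[resource]:
            values = tuple(keyed_row[field] for field in fields)
            # Keep one keyed row per value combination
            by_values.setdefault(values, keyed_row)
    return index


foreign_keys = [
    {'fields': ['city_id'], 'reference': {'resource': 'cities', 'fields': ['id']}},
]
relations = {
    'cities': [{'id': 1, 'name': 'london'}, {'id': 2, 'name': 'paris'}],
}
index = index_foreign_keys_sketch(foreign_keys, relations)
print(index['cities'][('id',)][(1,)])  # {'id': 1, 'name': 'london'}
```

Resolving a foreign key value then becomes a dictionary lookup instead of a scan over all rows of the referenced resource.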

### `Schema`

```python

Schema(self, descriptor={}, strict=False)

```

Schema representation

__Arguments__

- __descriptor (str/dict)__: schema descriptor one of:

- local path

- remote url

- dictionary

- __strict (bool)__: flag to specify validation behaviour:

- if false, errors will not be raised but instead collected in `schema.errors`

- if true, validation errors are raised immediately

__Raises__

- `TableSchemaException`: raise any error that occurs during the process

#### `schema.descriptor`

Schema's descriptor

__Returns__

`dict`: descriptor

#### `schema.errors`

Validation errors

Always empty in strict mode.

__Returns__

`Exception[]`: validation errors

#### `schema.field_names`

Schema's field names

__Returns__

`str[]`: an array of field names

#### `schema.fields`

Schema's fields

__Returns__

`Field[]`: an array of field instances

#### `schema.foreign_keys`

Schema's foreign keys

__Returns__

`dict[]`: foreign keys

#### `schema.headers`

Schema's field names

__Returns__

`str[]`: an array of field names

#### `schema.missing_values`

Schema's missing values

__Returns__

`str[]`: missing values

#### `schema.primary_key`

Schema's primary keys

__Returns__

`str[]`: primary keys

#### `schema.valid`

Validation status

Always true in strict mode.

__Returns__

`bool`: validation status

#### `schema.get_field`

```python

schema.get_field(name)

```

Get schema's field by name.

> Use `schema.update_field` if you want to modify the field descriptor

__Arguments__

- __name (str)__: schema field name

__Returns__

`Field/None`: `Field` instance or `None` if not found

#### `schema.add_field`

```python

schema.add_field(descriptor)

```

Add new field to schema.

The schema descriptor will be validated with newly added field descriptor.

__Arguments__

- __descriptor (dict)__: field descriptor

__Raises__

- `TableSchemaException`: raises any error that occurs during the process

__Returns__

`Field/None`: added `Field` instance or `None` if not added

#### `schema.update_field`

```python

schema.update_field(name, update)

```

Update existing descriptor field by name

__Arguments__

- __name (str)__: schema field name

- __update (dict)__: update to apply to field's descriptor

__Returns__

`bool`: true on success and false if no field is found to be modified

#### `schema.remove_field`

```python

schema.remove_field(name)

```

Remove field resource by name.

The schema descriptor will be validated after field descriptor removal.

__Arguments__

- __name (str)__: schema field name

__Raises__

- `TableSchemaException`: raises any error that occurs during the process

__Returns__

`Field/None`: removed `Field` instances or `None` if not found

#### `schema.cast_row`

```python

schema.cast_row(row, fail_fast=False, row_number=None, exc_handler=None)

```

Cast row based on field types and formats.

__Arguments__

- __row (any[])__: data row as an array of values

__Returns__

`any[]`: returns cast data row

#### `schema.infer`

```python

schema.infer(rows,

headers=1,

confidence=0.75,

guesser_cls=None,

resolver_cls=None)

```

Infer and set `schema.descriptor` based on data sample.

__Arguments__

- __rows (list[])__: array of arrays representing rows.

- __headers (int/str[])__: data sample headers (one of):

- row number containing headers (`rows` should contain headers rows)

- array of headers (`rows` should NOT contain headers rows)

- __confidence (float)__: how many casting errors are allowed (as a ratio, between 0 and 1)

- __guesser_cls (class)__: you can implement inferring strategies by

providing type-guessing and type-resolving classes [experimental]

- __resolver_cls (class)__: you can implement inferring strategies by

providing type-guessing and type-resolving classes [experimental]

__Returns__

`dict`: Table Schema descriptor

#### `schema.commit`

```python

schema.commit(strict=None)

```

Update schema instance if there are in-place changes in the descriptor.

__Example__

```python

from tableschema import Schema

descriptor = {'fields': [{'name': 'my_field', 'title': 'My Field', 'type': 'string'}]}

schema = Schema(descriptor)

print(schema.get_field('my_field').descriptor['type']) # string

# Update descriptor by field position

schema.descriptor['fields'][0]['type'] = 'number'

# Update descriptor by field name

schema.update_field('my_field', {'title': 'My Pretty Field'}) # True

# Changes are not committed yet

print(schema.get_field('my_field').descriptor['type']) # string

print(schema.get_field('my_field').descriptor['title']) # My Field

# Commit change

schema.commit()

print(schema.get_field('my_field').descriptor['type']) # number

print(schema.get_field('my_field').descriptor['title']) # My Pretty Field

```

__Arguments__

- __strict (bool)__: alter `strict` mode for further work

__Raises__

- `TableSchemaException`: raises any error that occurs during the process

__Returns__

`bool`: true on success and false if not modified

#### `schema.save`

```python

schema.save(target, ensure_ascii=True)

```

Save schema descriptor to target destination.

__Arguments__

- __target (str)__: path where to save a descriptor

__Raises__

- `TableSchemaException`: raises any error that occurs during the process

__Returns__

`bool`: true on success

### `Field`

```python

Field(self, descriptor, missing_values=[''], schema=None)

```

Field representation

__Arguments__

- __descriptor (dict)__: schema field descriptor

- __missing_values (str[])__: a list of strings representing missing values

__Raises__

- `TableSchemaException`: raises any error that occurs during the process

#### `field.constraints`

Field constraints

__Returns__

`dict`: dict of field constraints

#### `field.descriptor`

Field's descriptor

__Returns__

`dict`: descriptor

#### `field.format`

Field format

__Returns__

`str`: field format

#### `field.missing_values`

Field's missing values

__Returns__

`str[]`: missing values

#### `field.name`

Field name

__Returns__

`str`: field name

#### `field.required`

Whether field is required

__Returns__

`bool`: true if required

#### `field.schema`

Returns a schema instance if the field belongs to some schema

__Returns__

`Schema`: field's schema

#### `field.type`

Field type

__Returns__

`str`: field type

#### `field.cast_value`

```python

field.cast_value(value, constraints=True)

```

Cast given value according to the field type and format.

__Arguments__

- __value (any)__: value to cast against field

- __constraints (bool/str[])__: constraints configuration

- it could be set to false to disable constraint checks

- it could be a list of constraints to check, e.g. `['minimum', 'maximum']`

__Raises__

- `TableSchemaException`: raises any error that occurs during the process

__Returns__

`any`: returns cast value
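The `constraints` argument can be read as a selector for which checks run after the type cast. The standalone sketch below illustrates that reading only; `cast_integer_sketch` is a hypothetical function, it supports just the `minimum` and `maximum` constraints, and it raises `ValueError` rather than the library's exception types.

```python
def cast_integer_sketch(value, descriptor_constraints, constraints=True):
    """Cast to int, then check the selected constraints."""
    result = int(value)  # raises ValueError if the value is not an integer

    # Decide which constraint names to check
    if constraints is True:
        names = list(descriptor_constraints)
    elif constraints is False:
        names = []
    else:
        names = [name for name in constraints if name in descriptor_constraints]

    checks = {
        'minimum': lambda cast, limit: cast >= limit,
        'maximum': lambda cast, limit: cast <= limit,
    }
    for name in names:
        if not checks[name](result, descriptor_constraints[name]):
            raise ValueError('value {!r} violates constraint {!r}'.format(value, name))
    return result


limits = {'minimum': 0, 'maximum': 120}
print(cast_integer_sketch('39', limits))                            # 39
print(cast_integer_sketch('150', limits, constraints=False))        # 150
print(cast_integer_sketch('150', limits, constraints=['minimum']))  # 150
```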

#### `field.test_value`

```python

field.test_value(value, constraints=True)

```

Test whether value is compliant to the field.

__Arguments__

- __value (any)__: value to cast against field

- __constraints (bool/str[])__: constraints configuration

__Returns__

`bool`: returns if value is compliant to the field

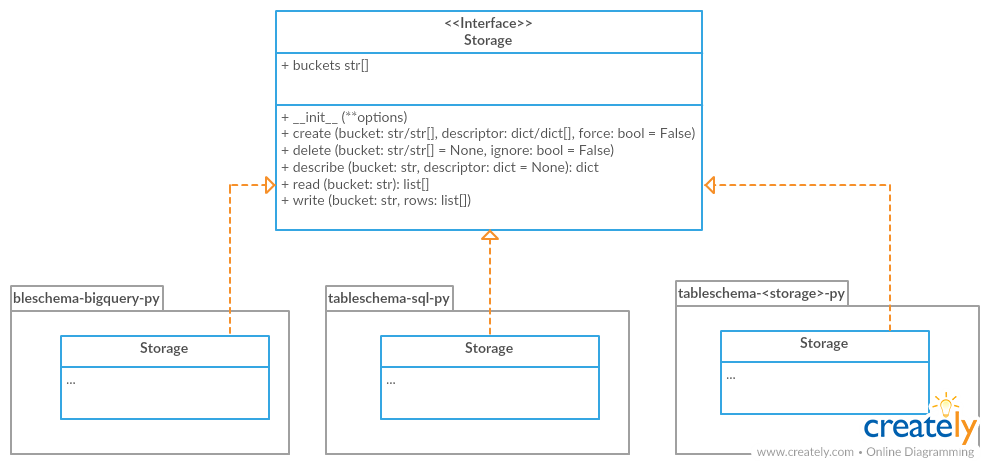

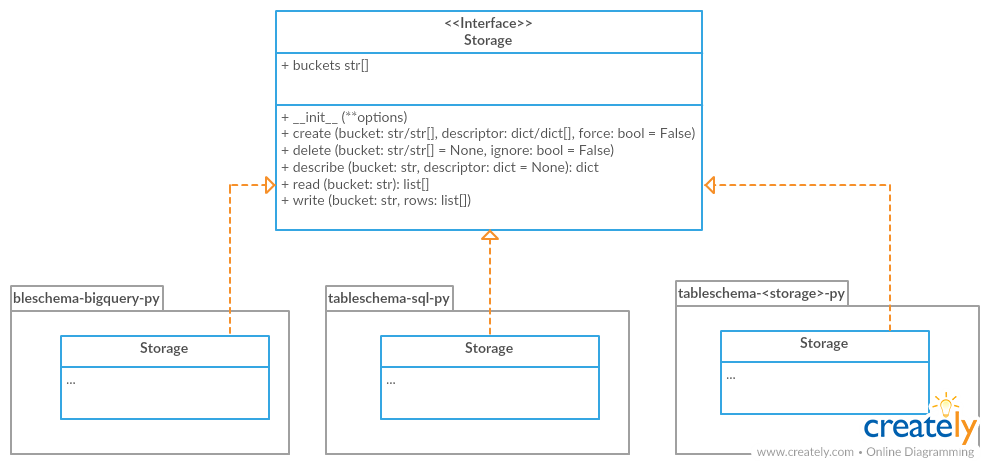

### `Storage`

```python

Storage(self, **options)

```

Storage factory/interface

__For users__

> Use `Storage.connect` to instantiate a storage

For instantiation of concrete storage instances,

`tableschema.Storage` provides a unified factory method `connect`

(which uses the plugin system under the hood):

```python

# pip install tableschema_sql

from tableschema import Storage

storage = Storage.connect('sql', **options)

storage.create('bucket', descriptor)

storage.write('bucket', rows)

storage.read('bucket')

```

__For integrators__

The library includes an interface declaration to implement a tabular `Storage`. This interface allows using different data storage systems, like SQL, with the `tableschema.Table` class (load/save) as well as on the data package level.

An implementor must follow the `tableschema.Storage` interface to write their own storage backend. Concrete storage backends could include additional functionality specific to the concrete storage system.

See `plugins` below to learn how to integrate a custom storage plugin into your workflow.

#### `storage.buckets`

Return list of storage bucket names.

A `bucket` is a special term which has almost the same meaning as `table`.

You should consider `bucket` as a `table` stored in the `storage`.

__Raises__

- `exceptions.StorageError`: raises on any error

__Returns__

`str[]`: return list of bucket names

#### `storage.connect`

```python

storage.connect(name, **options)

```

Create tabular `storage` based on storage name.

> This method is static: `Storage.connect()`

__Arguments__

- __name (str)__: storage name like `sql`

- __options (dict)__: concrete storage options

__Raises__

- `StorageError`: raises on any error

__Returns__

`Storage`: returns `Storage` instance

#### `storage.create`

```python

storage.create(bucket, descriptor, force=False)

```

Create one/multiple buckets.

__Arguments__

- __bucket (str/list)__: bucket name or list of bucket names

- __descriptor (dict/dict[])__: schema descriptor or list of descriptors

- __force (bool)__: whether to delete and re-create already existing buckets

__Raises__

- `exceptions.StorageError`: raises on any error

#### `storage.delete`

```python

storage.delete(bucket=None, ignore=False)

```

Delete one/multiple/all buckets.

__Arguments__

- __bucket (str/list/None)__: bucket name or list of bucket names to delete. If `None`, all buckets will be deleted

- __ignore (bool)__: don't raise an error on non-existent bucket deletion

__Raises__

- `exceptions.StorageError`: raises on any error

#### `storage.describe`

```python

storage.describe(bucket, descriptor=None)

```

Get/set bucket's Table Schema descriptor

__Arguments__

- __bucket (str)__: bucket name

- __descriptor (dict/None)__: schema descriptor to set

__Raises__

- `exceptions.StorageError`: raises on any error

__Returns__

`dict`: returns Table Schema descriptor

#### `storage.iter`

```python

storage.iter(bucket)

```

Return an iterator of typed values based on the schema of this bucket.

__Arguments__

- __bucket (str)__: bucket name

__Raises__

- `exceptions.StorageError`: raises on any error

__Returns__

`list[]`: yields data rows

#### `storage.read`

```python

storage.read(bucket)

```

Read typed values based on the schema of this bucket.

__Arguments__

- __bucket (str)__: bucket name

__Raises__

- `exceptions.StorageError`: raises on any error

__Returns__

`list[]`: returns data rows

#### `storage.write`

```python

storage.write(bucket, rows)

```

This method writes data rows into `storage`.

It should store values of unsupported types as strings internally (like csv does).

__Arguments__

- __bucket (str)__: bucket name

- __rows (list[])__: data rows to write

__Raises__

- `exceptions.StorageError`: raises on any error
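The storage methods documented above can be illustrated with a small in-memory backend. This is a sketch under stated assumptions, not a working plugin: `MemoryStorage` is a hypothetical class that mirrors the documented method names without subclassing `tableschema.Storage`, it handles single bucket names only, and it raises `RuntimeError` where a real backend would raise `StorageError`.

```python
class MemoryStorage:
    """Hypothetical in-memory backend mirroring the documented method names."""

    def __init__(self, **options):
        self._descriptors = {}
        self._rows = {}

    @property
    def buckets(self):
        return sorted(self._descriptors)

    def create(self, bucket, descriptor, force=False):
        if bucket in self._descriptors and not force:
            raise RuntimeError('bucket {!r} already exists'.format(bucket))
        self._descriptors[bucket] = descriptor
        self._rows[bucket] = []

    def delete(self, bucket=None, ignore=False):
        # None means "delete every bucket"
        names = self.buckets if bucket is None else [bucket]
        for name in names:
            if name not in self._descriptors:
                if ignore:
                    continue
                raise RuntimeError('bucket {!r} does not exist'.format(name))
            del self._descriptors[name]
            del self._rows[name]

    def describe(self, bucket, descriptor=None):
        if descriptor is not None:
            self._descriptors[bucket] = descriptor
        return self._descriptors[bucket]

    def iter(self, bucket):
        return iter(self._rows[bucket])

    def read(self, bucket):
        return list(self.iter(bucket))

    def write(self, bucket, rows):
        self._rows[bucket].extend(list(row) for row in rows)


storage = MemoryStorage()
storage.create('cities', {'fields': [{'name': 'city', 'type': 'string'}]})
storage.write('cities', [['london'], ['paris']])
print(storage.buckets)         # ['cities']
print(storage.read('cities'))  # [['london'], ['paris']]
```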

### `validate`

```python

validate(descriptor)

```

Validate descriptor

__Arguments__

- __descriptor (dict)__: schema descriptor

__Raises__

- `ValidationError`: on validation errors

__Returns__

`bool`: True

### `infer`

```python

infer(source,

headers=1,

limit=100,

confidence=0.75,

missing_values=[''],

guesser_cls=None,

resolver_cls=None,

**options)

```

Infer source schema.

__Arguments__

- __source (any)__: source as path, url or inline data

- __headers (int/str[])__: headers rows number or headers list

- __confidence (float)__: how many casting errors are allowed (as a ratio, between 0 and 1)

- __missing_values (str[])__: list of missing values (by default `['']`)

- __guesser_cls (class)__: you can implement inferring strategies by

providing type-guessing and type-resolving classes [experimental]

- __resolver_cls (class)__: you can implement inferring strategies by

providing type-guessing and type-resolving classes [experimental]

__Raises__

- `TableSchemaException`: raises any error that occurs during the process

__Returns__

`dict`: returns schema descriptor

### `FailedCast`

```python

FailedCast(self, value)

```

Wrap an original data field value that failed to be properly cast.

`FailedCast` allows further processing/yielding of values while still making it possible to distinguish uncast values on the consuming side.

Delegates attribute access and the basic rich comparison methods to the

underlying object. Supports default user-defined classes hashability i.e.

is hashable based on object identity (not based on the wrapped value).

__Arguments__

- __value (any)__: value
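The behaviour described for `FailedCast` (delegated attribute access and comparisons, identity-based hashing) can be sketched in plain Python. The class below is a hypothetical illustration named `FailedValue`, not the library's source, and it implements only `==` and `<` from the rich comparison methods.

```python
class FailedValue:
    """Hypothetical stand-in for FailedCast, for illustration only."""

    def __init__(self, value):
        self._value = value

    def __getattr__(self, name):
        # Called only when normal lookup fails: delegate to the wrapped value
        if name == '_value':
            raise AttributeError(name)  # avoid recursion before __init__ runs
        return getattr(self._value, name)

    def __eq__(self, other):
        return self._value == other

    def __lt__(self, other):
        return self._value < other

    # Defining __eq__ disables the default hash, so restore identity hashing
    __hash__ = object.__hash__

    def __repr__(self):
        return 'FailedValue({!r})'.format(self._value)


failed = FailedValue('not_an_integer')
print(failed == 'not_an_integer')        # True
print(failed.upper())                    # NOT_AN_INTEGER
print(isinstance(failed, FailedValue))   # True

# Hashable by identity, so it can be used as a dictionary key
lookup = {failed: 'row 3'}
print(lookup[failed])                    # row 3
```

A consumer can therefore keep treating the value much like the original string while still detecting the failure with an `isinstance` check.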

### `DataPackageException`

```python

DataPackageException(self, message, errors=[])

```

Base class for all DataPackage/TableSchema exceptions.

If there are multiple errors, they can be read from the exception object:

```python

from tableschema.exceptions import DataPackageException

try:
    pass  # lib action
except DataPackageException as exception:
    if exception.multiple:
        for error in exception.errors:
            pass  # handle error

```

#### `datapackageexception.errors`

List of nested errors

__Returns__

`DataPackageException[]`: list of nested errors

#### `datapackageexception.multiple`

Whether it's a nested exception

__Returns__

`bool`: whether it's a nested exception
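
To try the `errors`/`multiple` pattern without a failing dataset at hand, the following stand-alone sketch may help. `NestedErrorSketch` and `collect_messages` are hypothetical names that only mimic the two documented properties; the error messages are made up for the example.

```python
class NestedErrorSketch(Exception):
    """Hypothetical exception mimicking the documented `errors`/`multiple`."""

    def __init__(self, message, errors=None):
        super().__init__(message)
        self._errors = list(errors or [])

    @property
    def errors(self):
        # List of nested errors (empty for a plain exception).
        return self._errors

    @property
    def multiple(self):
        # True only when the exception carries nested errors.
        return bool(self._errors)


def collect_messages(exception):
    """Return the nested messages if present, else the exception's own."""
    if exception.multiple:
        return [str(error) for error in exception.errors]
    return [str(exception)]


nested = NestedErrorSketch('2 validation errors', errors=[
    NestedErrorSketch('field "id" has an unknown type'),
    NestedErrorSketch('primary key "uid" does not match any field'),
])

try:
    raise nested
except NestedErrorSketch as exception:
    messages = collect_messages(exception)
```

Checking `multiple` first lets the same handler deal with both a single error and a batch of nested ones.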

### `TableSchemaException`

```python

TableSchemaException(self, message, errors=[])

```

Base class for all TableSchema exceptions.

### `LoadError`

```python

LoadError(self, message, errors=[])

```

All loading errors.

### `ValidationError`

```python

ValidationError(self, message, errors=[])

```

All validation errors.

### `CastError`

```python

CastError(self, message, errors=[])

```

All value cast errors.

### `IntegrityError`

```python

IntegrityError(self, message, errors=[])

```

All integrity errors.

### `UniqueKeyError`

```python

UniqueKeyError(self, message, errors=[])

```

Unique key constraint violation (`CastError` subclass).

### `RelationError`

```python

RelationError(self, message, errors=[])

```

All relations errors.

### `UnresolvedFKError`

```python

UnresolvedFKError(self, message, errors=[])

```

Unresolved foreign key reference error (RelationError subclass).

### `StorageError`

```python

StorageError(self, message, errors=[])

```

All storage errors.

## Experimental

> This API is experimental and can be changed/removed in the future

There is an experimental environment variable `TABLESCHEMA_PRESERVE_MISSING_VALUES` which, if it is set, affects how data casting works.

By default, missing values are resolved to `None`. When this flag is set, missing values are passed through as is. For example:

> missing_values.py

```python

from tableschema import Field

field = Field({'type': 'number'}, missing_values=['-'])
print(field.cast_value('3'))
print(field.cast_value('-'))

```

Running this script in different modes:

```bash

$ python missing_values.py
3
None
$ TABLESCHEMA_PRESERVE_MISSING_VALUES=1 python missing_values.py
3
-

```
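
The decision the flag controls can be sketched without the library. `cast_number_sketch` is a hypothetical helper, not the library's casting code, and it assumes that any non-empty value of the variable counts as "set". The `environ` parameter exists only so the sketch can be exercised without touching the real environment.

```python
import os
from decimal import Decimal

ENV_FLAG = 'TABLESCHEMA_PRESERVE_MISSING_VALUES'


def cast_number_sketch(value, missing_values=('',), environ=None):
    """Hypothetical helper illustrating the flag; not tableschema's code."""
    environ = os.environ if environ is None else environ
    if value in missing_values:
        # Assumption: any non-empty value of the variable counts as "set".
        preserve = bool(environ.get(ENV_FLAG))
        return value if preserve else None
    return Decimal(value)


default_mode = {}                # flag not set
preserve_mode = {ENV_FLAG: '1'}  # flag set

results = [
    cast_number_sketch('3', ['-'], default_mode),   # regular value is cast
    cast_number_sketch('-', ['-'], default_mode),   # missing value -> None
    cast_number_sketch('-', ['-'], preserve_mode),  # missing value kept as is
]
```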

The flag affects all of the library's APIs and any software built on top of `tableschema`. For example, Data Package Pipelines:

```bash

$ TABLESCHEMA_PRESERVE_MISSING_VALUES=1 dpp run ./my_pipeline

```

## Contributing

> The project follows the [Open Knowledge International coding standards](https://github.com/okfn/coding-standards).

The recommended way to get started is to create and activate a project virtual environment.

To install the package and development dependencies into the active environment:

```bash

$ make install

```

To run tests with linting and coverage:

```bash

$ make test

```

## Changelog

Only breaking and the most important changes are described here. The full changelog and documentation for all released versions can be found in the nicely formatted [commit history](https://github.com/frictionlessdata/tableschema-py/commits/master).

#### v1.20

- Added --json flag to the CLI (#287)

#### v1.19

- Deduplicate field names if guessing in infer

#### v1.18

- Publish `field.ERROR/cast_function/check_functions`

#### v1.17

- Added `schema.missing_values` and `field.missing_values`

#### v1.16

- Fixed the way we parse `geopoint`:
    - as a string it can be in 3 forms ("default", "array", "object"), BUT
    - as a native object it can only be a list/tuple

#### v1.15

- Added an experimental `TABLESCHEMA_PRESERVE_MISSING_VALUES` environment variable flag

#### v1.14

- Allow providing custom guesser and resolver to `table.infer` and `infer`

#### v1.13

- Added `missing_values` argument to the `infer` function (#269)

#### v1.12

- Support optional custom exception handling for table.iter/read (#259)

#### v1.11

- Added `preserve_missing_values` parameter to `field.cast_value`

#### v1.10

- Added an ability to check table's integrity while reading

#### v1.9

- Implemented the `table.size` and `table.hash` properties

#### v1.8

- Added `table.index_foreign_keys_values` and improved foreign key checks performance

#### v1.7

- Added `field.schema` property

#### v1.6

- In `strict` mode raise an exception if there are problems in field construction

#### v1.5

- Allow providing custom guesser and resolver to schema infer

#### v1.4

- Added `schema.update_field` method

#### v1.3

- Support datetime with no time for date casting

#### v1.2

- Support floats like 1.0 for integer casting

#### v1.1

- Added the `confidence` parameter to `infer`

#### v1.0

- The library has been rebased on the Frictionless Data specs v1 - https://frictionlessdata.io/specs/table-schema/
