# VBPR-PyTorch

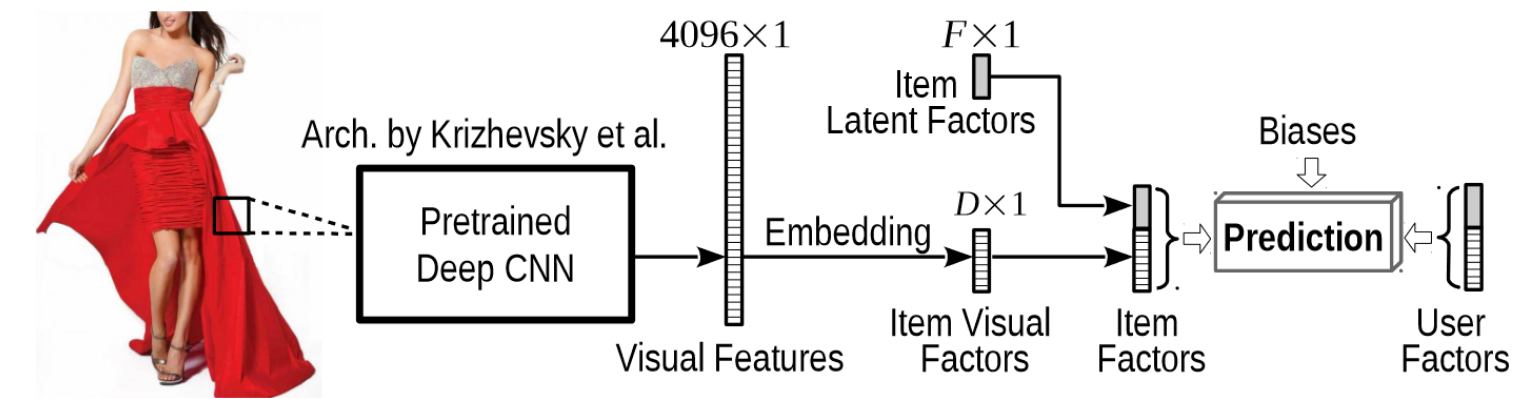

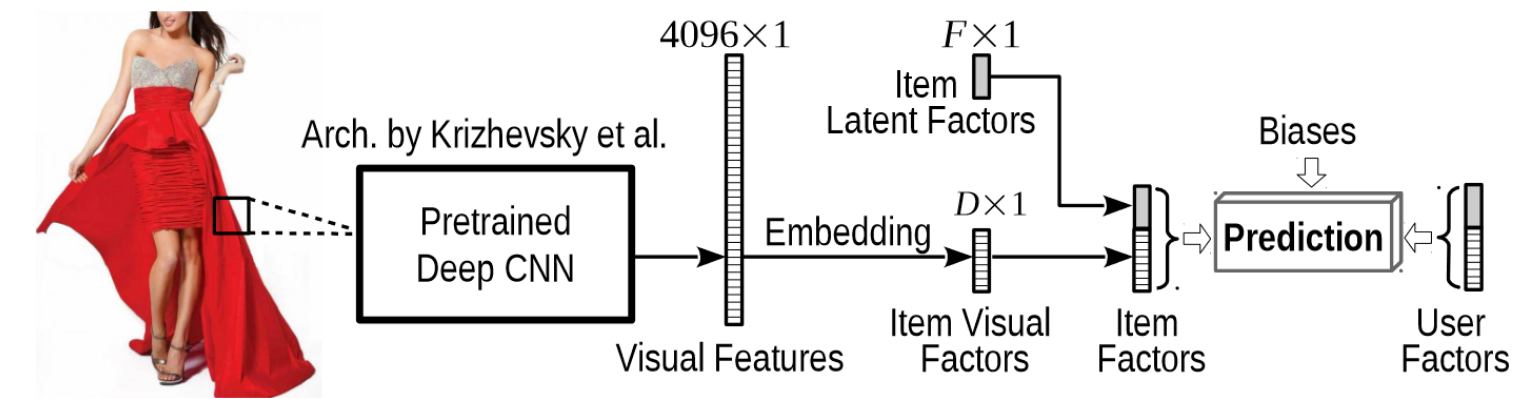

Implementation of VBPR, a visual recommender model, from the paper ["VBPR: Visual Bayesian Personalized Ranking from Implicit Feedback"](https://arxiv.org/abs/1510.01784).

Training is reasonably fast: about 50 seconds per epoch on a free Google Colab GPU machine with the Tradesy dataset (more than 410k interactions, using `batch_size=64`). The whole source code includes type annotations, which should improve readability and help detect unexpected behavior. Finally, the performance reached by this implementation is close to the metrics reported in the paper:

| **Dataset** | **Setting** | **Paper** | **This repo** | **% diff.** |
|-------------|-------------|:---------:|:-------------:|:-----------:|
| Tradesy | All Items | 0.7834 | 0.7628 | -2.6% |
| Tradesy | Cold Start | 0.7594 | 0.7489 | -1.4% |
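
The last column is the relative difference between this repository's score and the paper's score. A minimal sketch of that arithmetic, using the values from the table (the helper name `percent_diff` is only illustrative and is not part of the package):

```python
def percent_diff(paper: float, repo: float) -> float:
    """Relative difference of `repo` with respect to `paper`, in percent."""
    return (repo - paper) / paper * 100


# (paper score, this repo's score) for each evaluation setting
results = {
    "All Items": (0.7834, 0.7628),
    "Cold Start": (0.7594, 0.7489),
}
for setting, (paper, repo) in results.items():
    print(f"{setting}: {percent_diff(paper, repo):+.1f}%")
```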

```bibtex
@inproceedings{he2016vbpr,
  title={VBPR: visual bayesian personalized ranking from implicit feedback},
  author={He, Ruining and McAuley, Julian},
  booktitle={Proceedings of the AAAI conference on artificial intelligence},
  volume={30},
  number={1},
  year={2016}
}
```

## Install

```
pip install vbpr-pytorch
```

You can also install `vbpr-pytorch` with its optional dependencies:

```
# Development dependencies
pip install "vbpr-pytorch[dev]"
```

```
# Weights & Biases dependency
pip install "vbpr-pytorch[wandb]"
```

## Usage

This is a simplified version of the training script on the Tradesy dataset:

```python
import torch
from torch import optim
from vbpr import VBPR, Trainer
from vbpr.datasets import TradesyDataset

# Load the interactions and the item features from disk
dataset, features = TradesyDataset.from_files(
    "/path/to/transactions.json.gz",
    "/path/to/features.b",
)
# Build the model from the dataset sizes and the item features
model = VBPR(
    dataset.n_users,
    dataset.n_items,
    torch.tensor(features),
    dim_gamma=20,
    dim_theta=20,
)
optimizer = optim.SGD(model.parameters(), lr=5e-04)

# Train for 10 epochs
trainer = Trainer(model, optimizer)
trainer.fit(dataset, n_epochs=10)
```

You can use a custom dataset either by subclassing `TradesyDataset` or by making sure that the `__getitem__` method of your dataset returns a tuple containing the user ID, the positive item ID, and the negative item ID.
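
As a rough sketch of the second option, the class below is a map-style dataset whose `__getitem__` returns the (user ID, positive item ID, negative item ID) triple. The class name `TripletDataset`, its constructor arguments, and the uniform negative sampling are assumptions made for illustration, not part of the package. The `n_users` and `n_items` attributes mirror the ones read from `dataset` in the training script; check the `Trainer` source for what it actually requires.

```python
import random
from typing import Dict, List, Set, Tuple


class TripletDataset:
    """Hypothetical dataset yielding (user, positive item, negative item)."""

    def __init__(
        self,
        interactions: List[Tuple[int, int]],
        n_users: int,
        n_items: int,
        seed: int = 0,
    ) -> None:
        self.interactions = interactions
        self.n_users = n_users
        self.n_items = n_items
        self._rng = random.Random(seed)
        # Items each user has interacted with, used to reject false negatives
        self._seen: Dict[int, Set[int]] = {}
        for user, item in interactions:
            self._seen.setdefault(user, set()).add(item)

    def __len__(self) -> int:
        return len(self.interactions)

    def __getitem__(self, idx: int) -> Tuple[int, int, int]:
        user, pos_item = self.interactions[idx]
        # Sample uniformly until the item is one the user has not seen
        # (assumes no user has interacted with every item)
        neg_item = self._rng.randrange(self.n_items)
        while neg_item in self._seen[user]:
            neg_item = self._rng.randrange(self.n_items)
        return user, pos_item, neg_item


# Toy example: 2 users, 5 items, 3 observed (user, item) interactions
dataset = TripletDataset([(0, 1), (0, 2), (1, 0)], n_users=2, n_items=5)
user, pos_item, neg_item = dataset[0]
print(user, pos_item, neg_item)
```

Because it defines `__len__` and `__getitem__`, an object like this can be wrapped by `torch.utils.data.DataLoader`; subclassing `torch.utils.data.Dataset` is the more conventional choice in a PyTorch project.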

## Notes

The original VBPR implementation (available at [He's website](https://sites.google.com/view/ruining-he/)) uses a different regularization than this implementation. The authors regularize the `gamma_items` embedding more strongly for positive items than for negative items (for negative items, lambda is divided by 10). [Cornac](https://github.com/PreferredAI/cornac) applies this regularization in its implementation ([code](https://github.com/PreferredAI/cornac/blob/93058c04a15348de60b5190bda90a82dafa9d8b6/cornac/models/vbpr/recom_vbpr.py#L249)).

It is not included here because it is hard to do without either computing the regularization manually, as Cornac does (I am prioritizing simplicity and portability of the implementation), or using a custom loss that includes the regularization term (a future version might do this). In the future, I will implement the authors' regularization to check whether the results change, although the difference might not be significant (I assume this regularization was left out of the paper for a reason).

Relevant code:

```cpp
// adjust latent factors
for (int f = 0; f < K; f ++) {
    double w_uf = gamma_user[user_id][f];
    double h_if = gamma_item[pos_item_id][f];
    double h_jf = gamma_item[neg_item_id][f];

    gamma_user[user_id][f]     += learn_rate * ( deri * (h_if - h_jf) - lambda * w_uf);
    gamma_item[pos_item_id][f] += learn_rate * ( deri * w_uf - lambda * h_if);
    gamma_item[neg_item_id][f] += learn_rate * (-deri * w_uf - lambda / 10.0 * h_jf);
}
```
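
For readers more comfortable with Python, the same update can be sketched as follows. This is a plain-Python transcription of the C++ loop, not code from this package: the function name `update_latent_factors` and its argument layout are assumptions, and `deri` is taken as an input exactly as in the original snippet.

```python
from typing import List


def update_latent_factors(
    gamma_user: List[float],
    gamma_pos: List[float],
    gamma_neg: List[float],
    deri: float,
    learn_rate: float,
    lam: float,
) -> None:
    """Update the three latent vectors in place, one factor at a time."""
    for f in range(len(gamma_user)):
        # Read the old values first so every update uses the same snapshot
        w_uf, h_if, h_jf = gamma_user[f], gamma_pos[f], gamma_neg[f]

        gamma_user[f] += learn_rate * (deri * (h_if - h_jf) - lam * w_uf)
        gamma_pos[f] += learn_rate * (deri * w_uf - lam * h_if)
        # The negative item is regularized with lambda / 10
        gamma_neg[f] += learn_rate * (-deri * w_uf - lam / 10.0 * h_jf)


# With deri = 0.0 only the regularization term acts on the factors
user, pos, neg = [1.0], [1.0], [1.0]
update_latent_factors(user, pos, neg, deri=0.0, learn_rate=0.1, lam=1.0)
print(user, pos, neg)
```

In this toy call the user and positive-item factors shrink by `learn_rate * lam` (10% here), while the negative-item factor shrinks by only a tenth of that, which is the asymmetry described in the note.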

## Package metadata

- Name: `vbpr-pytorch`
- Version: 0.1.2 (uploaded 2023-10-10)
- Summary: Implementation of VBPR, a visual recommender model, from the paper "VBPR: Visual Bayesian Personalized Ranking from Implicit Feedback"
- Author: Antonio Ossa-Guerra (aaossa@uc.cl)
- License: MIT
- Requires Python: `>=3.8.1,<3.12`
- Homepage and repository: https://github.com/aaossa/VBPR-PyTorch
- Keywords: artificial intelligence, recommender systems, vbpr