# sdwebuiapi

API client for AUTOMATIC1111/stable-diffusion-webui

Supports txt2img, img2img, extra-single-image, extra-batch-images API calls.

API support has to be enabled in webui: add `--api` when launching webui.

It's explained [here](https://github.com/AUTOMATIC1111/stable-diffusion-webui/wiki/API).

You can use the `--api-auth user1:pass1,user2:pass2` option to enable authentication for API access.

(Since it's basic http authentication the password is transmitted in cleartext)
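
To make the cleartext warning concrete, the sketch below builds the `Authorization` header that HTTP basic authentication sends and then decodes it again. It uses only the Python standard library and is an illustration, not webuiapi's own code; both helper names are hypothetical.

```python
import base64


def basic_auth_header(username: str, password: str) -> str:
    """Build the value of the Authorization header used by HTTP basic auth."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8"))
    return "Basic " + token.decode("ascii")


def decode_basic_auth_header(header: str) -> str:
    """Recover 'username:password' from the header; no secret key is needed."""
    scheme, token = header.split(" ", 1)
    if scheme != "Basic":
        raise ValueError(f"not a basic auth header: {scheme!r}")
    return base64.b64decode(token).decode("utf-8")


header = basic_auth_header("user1", "pass1")
print(decode_basic_auth_header(header))  # prints user1:pass1
```

Anyone who can read the traffic can do the same decoding, so prefer HTTPS (`use_https=True` on the client) when the webui is reached over an untrusted network.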

API calls are an (almost) direct translation of the endpoints listed at http://127.0.0.1:7860/docs as of 2022/11/21.

# Install

```

pip install webuiapi

```

# Usage

webuiapi_demo.ipynb contains example code with original images. Images are compressed as jpeg in this document.

## create API client

```

import webuiapi

# create API client

api = webuiapi.WebUIApi()

# create API client with custom host, port

#api = webuiapi.WebUIApi(host='127.0.0.1', port=7860)

# create API client with custom host, port and https

#api = webuiapi.WebUIApi(host='webui.example.com', port=443, use_https=True)

# create API client with default sampler, steps.

#api = webuiapi.WebUIApi(sampler='Euler a', steps=20)

# optionally set username, password when --api-auth=username:password is set on webui.

# username, password are not protected and can be derived easily if the communication channel is not encrypted.

# you can also pass username, password to the WebUIApi constructor.

api.set_auth('username', 'password')

```

## txt2img

```

result1 = api.txt2img(prompt="cute squirrel",

negative_prompt="ugly, out of frame",

seed=1003,

styles=["anime"],

cfg_scale=7,

# sampler_index='DDIM',

# steps=30,

# enable_hr=True,

# hr_scale=2,

# hr_upscaler=webuiapi.HiResUpscaler.Latent,

# hr_second_pass_steps=20,

# hr_resize_x=1536,

# hr_resize_y=1024,

# denoising_strength=0.4,

)

# images contains the returned images (PIL images)

result1.images

# image is shorthand for images[0]

result1.image

# info contains text info about the api call

result1.info

# parameters contains the parameters of the api call

result1.parameters

result1.image

```
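
Since `result1.images` is a list of PIL images, a batch can be written to disk with a small loop. The helper below is a hypothetical convenience function, not part of webuiapi; it only relies on each image having a `save(path)` method, which PIL images do.

```python
def save_images(images, prefix="txt2img"):
    """Save every image as <prefix>_<index>.png and return the file names."""
    paths = []
    for index, image in enumerate(images):
        path = f"{prefix}_{index:02d}.png"
        image.save(path)  # PIL.Image.Image.save accepts a file name
        paths.append(path)
    return paths


# Usage with the result from above:
# save_images(result1.images)
```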

## img2img

```

result2 = api.img2img(images=[result1.image], prompt="cute cat", seed=5555, cfg_scale=6.5, denoising_strength=0.6)

result2.image

```

## img2img inpainting

```

from PIL import Image, ImageDraw

mask = Image.new('RGB', result2.image.size, color = 'black')

# mask = result2.image.copy()

draw = ImageDraw.Draw(mask)

draw.ellipse((210,150,310,250), fill='white')

draw.ellipse((80,120,160,120+80), fill='white')

mask

```
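
`ImageDraw.ellipse` takes a bounding box `(left, top, right, bottom)`, which is why the two calls above pass four numbers each. If it is easier to think in terms of a center and a radius, a small helper (hypothetical, not part of PIL or webuiapi) can do the conversion:

```python
def ellipse_box(center_x, center_y, radius):
    """Return the (left, top, right, bottom) box of a circle for ImageDraw.ellipse."""
    return (center_x - radius, center_y - radius,
            center_x + radius, center_y + radius)


# The two regions masked above, expressed as center + radius:
print(ellipse_box(260, 200, 50))  # (210, 150, 310, 250)
print(ellipse_box(120, 160, 40))  # (80, 120, 160, 200)
```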

```

inpainting_result = api.img2img(images=[result2.image],

mask_image=mask,

inpainting_fill=1,

prompt="cute cat",

seed=104,

cfg_scale=5.0,

denoising_strength=0.7)

inpainting_result.image

```

## extra-single-image

```

result3 = api.extra_single_image(image=result2.image,

upscaler_1=webuiapi.Upscaler.ESRGAN_4x,

upscaling_resize=1.5)

print(result3.image.size)

result3.image

```

(768, 768)
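
The printed size follows from multiplying each side by `upscaling_resize`. The sketch below reproduces that arithmetic, assuming the input image was 512x512 (which is what the printed result implies). The function name is hypothetical, and webui may round differently when the product is not a whole number.

```python
def upscaled_size(size, upscaling_resize):
    """Estimate the output size of an upscale by a constant factor."""
    width, height = size
    return (round(width * upscaling_resize), round(height * upscaling_resize))


print(upscaled_size((512, 512), 1.5))  # (768, 768)
```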

## extra-batch-images

```

result4 = api.extra_batch_images(images=[result1.image, inpainting_result.image],

upscaler_1=webuiapi.Upscaler.ESRGAN_4x,

upscaling_resize=1.5)

result4.images[0]

```

```

result4.images[1]

```

### Async API support

txt2img, img2img, extra_single_image and extra_batch_images support asynchronous calls with the use_async=True parameter. This requires the aiohttp package to be installed (asyncio is part of the Python standard library).

```

result = await api.txt2img(prompt="cute kitten",

seed=1001,

use_async=True

)

result.image

```
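
A bare `await` like the one above works in a Jupyter notebook, but in a regular script it has to run inside a coroutine. The sketch below shows one way to do that with `asyncio.run`; the two wrapper functions are hypothetical and only assume that `api.txt2img(..., use_async=True)` returns an awaitable, as in the example above.

```python
import asyncio


async def generate(api, prompt, seed=1001):
    """Await a txt2img call made with use_async=True and return its result."""
    return await api.txt2img(prompt=prompt, seed=seed, use_async=True)


def generate_blocking(api, prompt, seed=1001):
    """Run the coroutine to completion from ordinary (non-async) code."""
    return asyncio.run(generate(api, prompt, seed))


# Usage in a script, with `api` created as shown earlier:
# result = generate_blocking(api, "cute kitten")
# result.image
```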

### Scripts support

Scripts from AUTOMATIC1111's Web UI are supported, but there are no official models that define a script's interface.

To find the arguments accepted by a particular script, look up the associated Python file in AUTOMATIC1111's repo, `scripts/[script_name].py`. Search for its `run(p, **args)` function; the arguments that come after `p` are the accepted arguments.

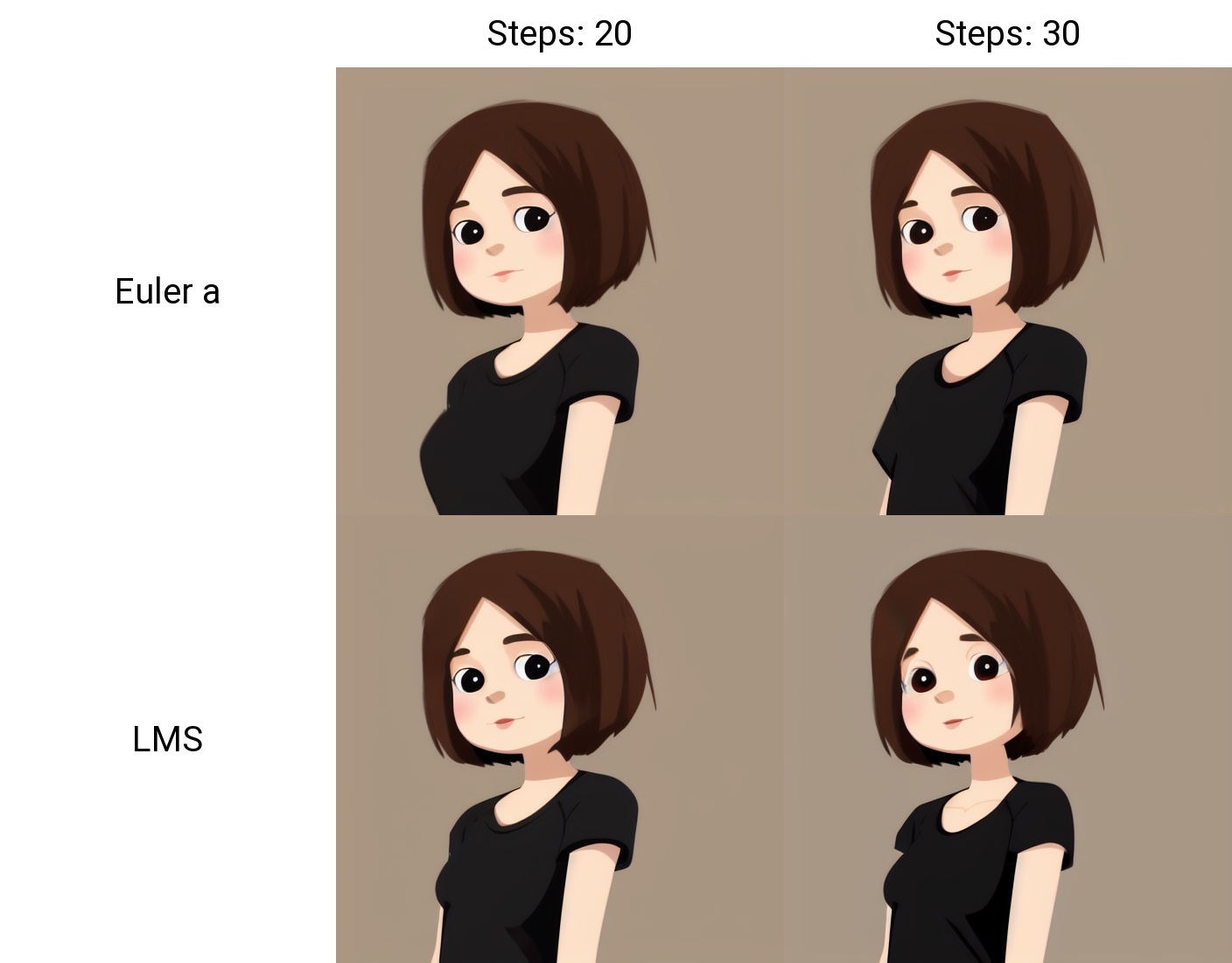
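
If the script module can be imported (which normally requires the webui's own Python environment), the same lookup can be done programmatically with the standard `inspect` module. The sketch below uses a stand-in class rather than a real webui script, and `accepted_script_args` is a hypothetical helper name.

```python
import inspect


def accepted_script_args(run_func):
    """Return the parameter names that follow 'p' in a script's run() signature."""
    names = list(inspect.signature(run_func).parameters)
    return names[names.index("p") + 1:]


# Stand-in for a script class; real scripts live in the webui repo.
class ExampleScript:
    def run(self, p, x_type, x_values, margin_size):
        ...


print(accepted_script_args(ExampleScript.run))  # ['x_type', 'x_values', 'margin_size']
```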

#### Example for X/Y/Z Plot script:

```

# (scripts/xyz_grid.py file from AUTOMATIC1111's repo)
def run(self, p, x_type, x_values, y_type, y_values, z_type, z_values, draw_legend, include_lone_images, include_sub_grids, no_fixed_seeds, margin_size):
    ...

```

List of accepted arguments:

* _x_type_: Index of the axis type for the X axis. Indexes start from [0: Nothing]
* _x_values_: String of comma-separated values for the X axis
* _y_type_: Index of the axis type for the Y axis. As with the X axis, indexes start from [0: Nothing]
* _y_values_: String of comma-separated values for the Y axis
* _z_type_: Index of the axis type for the Z axis. As with the X axis, indexes start from [0: Nothing]
* _z_values_: String of comma-separated values for the Z axis
* _draw_legend_: "True" or "False". IMPORTANT: It needs to be a string and not a Boolean value
* _include_lone_images_: "True" or "False". IMPORTANT: It needs to be a string and not a Boolean value
* _include_sub_grids_: "True" or "False". IMPORTANT: It needs to be a string and not a Boolean value
* _no_fixed_seeds_: "True" or "False". IMPORTANT: It needs to be a string and not a Boolean value
* _margin_size_: int value

```

# Available Axis options (Different for txt2img and img2img!)

XYZPlotAvailableTxt2ImgScripts = [

"Nothing",

"Seed",

"Var. seed",

"Var. strength",

"Steps",

"Hires steps",

"CFG Scale",

"Prompt S/R",

"Prompt order",

"Sampler",

"Checkpoint name",

"Sigma Churn",

"Sigma min",

"Sigma max",

"Sigma noise",

"Eta",

"Clip skip",

"Denoising",

"Hires upscaler",

"VAE",

"Styles",

]

XYZPlotAvailableImg2ImgScripts = [

"Nothing",

"Seed",

"Var. seed",

"Var. strength",

"Steps",

"CFG Scale",

"Image CFG Scale",

"Prompt S/R",

"Prompt order",

"Sampler",

"Checkpoint name",

"Sigma Churn",

"Sigma min",

"Sigma max",

"Sigma noise",

"Eta",

"Clip skip",

"Denoising",

"Cond. Image Mask Weight",

"VAE",

"Styles",

]

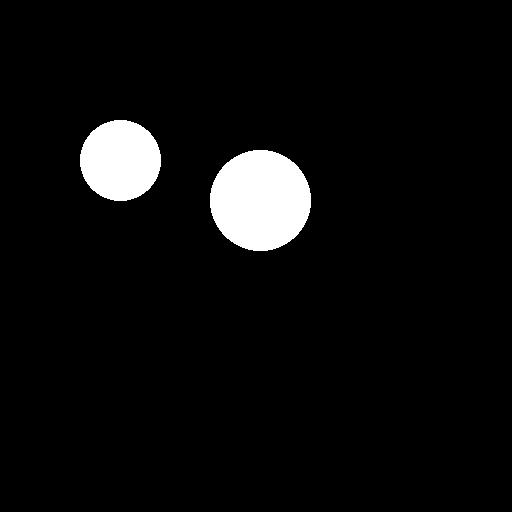

# Example call

XAxisType = "Steps"

XAxisValues = "20,30"

XAxisValuesDropdown = ""

YAxisType = "Sampler"

YAxisValues = "Euler a, LMS"

YAxisValuesDropdown = ""

ZAxisType = "Nothing"

ZAxisValues = ""

ZAxisValuesDropdown = ""

drawLegend = "True"

includeLoneImages = "False"

includeSubGrids = "False"

noFixedSeeds = "False"

marginSize = 0

# x_type, x_values, y_type, y_values, z_type, z_values, draw_legend, include_lone_images, include_sub_grids, no_fixed_seeds, margin_size

result = api.txt2img(

prompt="cute girl with short brown hair in black t-shirt in animation style",

seed=1003,

script_name="X/Y/Z Plot",

script_args=[

XYZPlotAvailableTxt2ImgScripts.index(XAxisType),

XAxisValues,

XAxisValuesDropdown,

XYZPlotAvailableTxt2ImgScripts.index(YAxisType),

YAxisValues,

YAxisValuesDropdown,

XYZPlotAvailableTxt2ImgScripts.index(ZAxisType),

ZAxisValues,

ZAxisValuesDropdown,

drawLegend,

includeLoneImages,

includeSubGrids,

noFixedSeeds,

marginSize, ]

)

result.image

```
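
Two details of the call above are easy to get wrong: axis types are passed as list indexes, and the flags must be the strings "True" or "False" rather than Booleans. The helpers below are a hypothetical sketch of how to guard against both mistakes; they are not part of webuiapi.

```python
def axis_index(axis_options, axis_name):
    """Look up the index of an axis type, with a readable error for typos."""
    try:
        return axis_options.index(axis_name)
    except ValueError:
        raise ValueError(
            f"unknown axis {axis_name!r}; choose one of {axis_options}"
        ) from None


def flag(value):
    """Convert a Python bool to the "True"/"False" string the script expects."""
    return "True" if value else "False"


# First entries of the txt2img axis list shown above.
txt2img_axes = ["Nothing", "Seed", "Var. seed", "Var. strength", "Steps"]

print(axis_index(txt2img_axes, "Steps"))  # 4
print(flag(False))  # False
```

Keep in mind that the txt2img and img2img lists differ, so the index must be looked up in the list that matches the call being made.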

### Configuration APIs

```

# return map of current options

options = api.get_options()

# change sd model

options = {}

options['sd_model_checkpoint'] = 'model.ckpt [7460a6fa]'

api.set_options(options)

# when calling set_options, do not pass all options returned by get_options().

# it makes webui unusable (2022/11/21).

# get available sd models

api.get_sd_models()

# misc get apis

api.get_samplers()

api.get_cmd_flags()

api.get_hypernetworks()

api.get_face_restorers()

api.get_realesrgan_models()

api.get_prompt_styles()

api.get_artist_categories() # deprecated ?

api.get_artists() # deprecated ?

api.get_progress()

api.get_embeddings()


api.get_scripts()

api.get_schedulers()

api.get_memory()

# misc apis

api.interrupt()

api.skip()

```
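
Because passing back everything from `get_options()` is discouraged, one defensive pattern is to send only the entries that actually change. The sketch below illustrates that idea with two hypothetical helpers; it assumes nothing beyond the `get_options()` and `set_options()` calls shown above.

```python
def changed_options(current, desired):
    """Return only the entries of `desired` that differ from `current`."""
    return {key: value for key, value in desired.items()
            if current.get(key) != value}


def apply_options(api, **desired):
    """Send just the differing options; skip the call when nothing changes."""
    to_send = changed_options(api.get_options(), desired)
    if to_send:
        api.set_options(to_send)
    return to_send


# Usage, with `api` created as shown earlier:
# apply_options(api, sd_model_checkpoint='model.ckpt [7460a6fa]')
```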

### Utility methods

```

# save current model name

old_model = api.util_get_current_model()

# get list of available models

models = api.util_get_model_names()

# get list of available samplers

api.util_get_sampler_names()

# get list of available schedulers

api.util_get_scheduler_names()

# refresh list of models

api.refresh_checkpoints()

# set model (use exact name)

api.util_set_model(models[0])

# set model (find closest match)

api.util_set_model('robodiffusion')

# wait for job complete

api.util_wait_for_ready()

```
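
The "save current model name" step above suggests a common pattern: switch models for one job, then switch back. A context manager makes the restore step hard to forget. This is a sketch built only from the utility methods listed above; `temporary_model` itself is a hypothetical name, not a webuiapi function.

```python
from contextlib import contextmanager


@contextmanager
def temporary_model(api, name):
    """Switch to `name` for the duration of a with-block, then switch back."""
    old_model = api.util_get_current_model()
    api.util_set_model(name)
    api.util_wait_for_ready()
    try:
        yield old_model
    finally:
        # Restore the previous model even if generation raised an exception.
        api.util_set_model(old_model)


# Usage, with `api` created as shown earlier:
# with temporary_model(api, 'robodiffusion'):
#     result = api.txt2img(prompt="cute robot")
```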

### LORA and alwayson_scripts example

```

r = api.txt2img(prompt='photo of a cute girl with green hair <lora:Moxin_10:0.6> shuimobysim __juice__',

seed=1000,

save_images=True,

alwayson_scripts={"Simple wildcards":[]} # wildcards extension doesn't accept more parameters.

)

r.image

```
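
The `<lora:Moxin_10:0.6>` part of the prompt follows the pattern `<lora:name:weight>`. When several LoRAs are combined, building the tags programmatically avoids typos. The helpers below are a hypothetical sketch and not part of webuiapi.

```python
def lora_tag(name, weight=1.0):
    """Format a single LoRA reference in the prompt syntax used above."""
    return f"<lora:{name}:{weight}>"


def prompt_with_loras(prompt, loras):
    """Append one tag per (name, weight) entry of `loras` to the prompt."""
    tags = " ".join(lora_tag(name, weight) for name, weight in loras.items())
    return f"{prompt} {tags}".strip()


print(prompt_with_loras("photo of a cute girl with green hair", {"Moxin_10": 0.6}))
# photo of a cute girl with green hair <lora:Moxin_10:0.6>
```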

### Extension support - Model-Keyword

```

# https://github.com/mix1009/model-keyword

mki = webuiapi.ModelKeywordInterface(api)

mki.get_keywords()

```

ModelKeywordResult(keywords=['nousr robot'], model='robo-diffusion-v1.ckpt', oldhash='41fef4bd', match_source='model-keyword.txt')

### Extension support - Instruct-Pix2Pix

```

# The Instruct-Pix2Pix extension is deprecated; its functionality is now part of webui.
# You can use normal img2img with image_cfg_scale when an instruct-pix2pix model is loaded.
# pil_img is a placeholder for a PIL image you have already loaded.
r = api.img2img(prompt='sunset', images=[pil_img], cfg_scale=7.5, image_cfg_scale=1.5)

r.image

```

### Extension support - ControlNet

```

# https://github.com/Mikubill/sd-webui-controlnet

api.controlnet_model_list()

```

<pre>

['control_v11e_sd15_ip2p [c4bb465c]',

'control_v11e_sd15_shuffle [526bfdae]',

'control_v11f1p_sd15_depth [cfd03158]',

'control_v11p_sd15_canny [d14c016b]',

'control_v11p_sd15_inpaint [ebff9138]',

'control_v11p_sd15_lineart [43d4be0d]',

'control_v11p_sd15_mlsd [aca30ff0]',

'control_v11p_sd15_normalbae [316696f1]',

'control_v11p_sd15_openpose [cab727d4]',

'control_v11p_sd15_scribble [d4ba51ff]',

'control_v11p_sd15_seg [e1f51eb9]',

'control_v11p_sd15_softedge [a8575a2a]',

'control_v11p_sd15s2_lineart_anime [3825e83e]',

'control_v11u_sd15_tile [1f041471]']

</pre>
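
The model names include a hash suffix that differs between installations, so hardcoding the full string is fragile. One option is to pick the model by keyword from the returned list. The helper below is a hypothetical sketch that works on any list of names, shown here with a few entries copied from the output above.

```python
def find_controlnet_model(models, keyword):
    """Return the single model name containing `keyword`, or raise LookupError."""
    matches = [name for name in models if keyword in name]
    if len(matches) != 1:
        raise LookupError(f"expected one match for {keyword!r}, got {matches}")
    return matches[0]


models = [
    'control_v11f1p_sd15_depth [cfd03158]',
    'control_v11p_sd15_canny [d14c016b]',
    'control_v11p_sd15_lineart [43d4be0d]',
    'control_v11p_sd15s2_lineart_anime [3825e83e]',
]
print(find_controlnet_model(models, 'canny'))  # control_v11p_sd15_canny [d14c016b]
```

A keyword such as `'lineart'` matches two entries in this list and raises, which is intentional: an ambiguous choice is reported instead of silently picking one.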

```

api.controlnet_version()

api.controlnet_module_list()

```

```

# normal txt2img

r = api.txt2img(prompt="photo of a beautiful girl with blonde hair", height=512, seed=100)

img = r.image

img

```

```

# txt2img with ControlNet

# input_image parameter is changed to image (change in ControlNet API)

unit1 = webuiapi.ControlNetUnit(image=img, module='canny', model='control_v11p_sd15_canny [d14c016b]')

r = api.txt2img(prompt="photo of a beautiful girl", controlnet_units=[unit1])

r.image

```

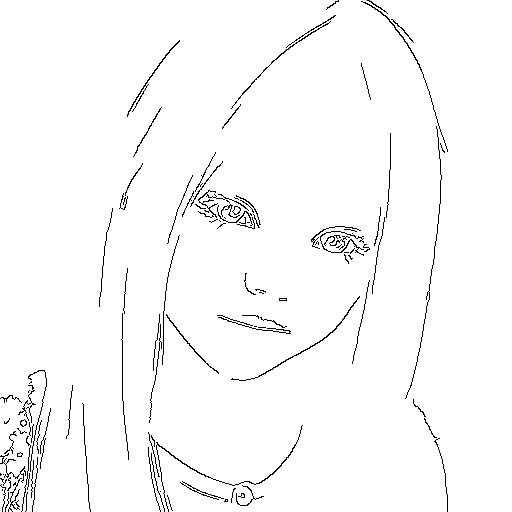

```

# img2img with multiple ControlNets

unit1 = webuiapi.ControlNetUnit(image=img, module='canny', model='control_v11p_sd15_canny [d14c016b]')

unit2 = webuiapi.ControlNetUnit(image=img, module='depth', model='control_v11f1p_sd15_depth [cfd03158]', weight=0.5)

r2 = api.img2img(prompt="girl",

images=[img],

width=512,

height=512,

controlnet_units=[unit1, unit2],

sampler_name="Euler a",

cfg_scale=7,

)

r2.image

```

```

r2.images[1]

```

```

r2.images[2]

```

```

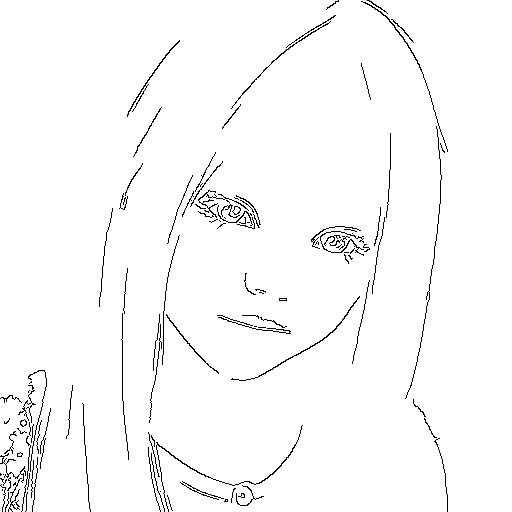

r = api.controlnet_detect(images=[img], module='canny')

r.image

```

### Extension support - AnimateDiff

```

# https://github.com/continue-revolution/sd-webui-animatediff

adiff = webuiapi.AnimateDiff(model='mm_sd15_v3.safetensors',

video_length=24,

closed_loop='R+P',

format=['GIF'])

r = api.txt2img(prompt='cute puppy', animatediff=adiff)

# save GIF file. need save_all=True to save animated GIF.

r.image.save('puppy.gif', save_all=True)

# Display animated GIF in Jupyter notebook

from IPython.display import HTML

HTML('<img src="data:image/gif;base64,{0}"/>'.format(r.json['images'][0]))

```
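
The HTML snippet above embeds `r.json['images'][0]` as base64 data. Assuming that string holds the encoded GIF, as the snippet implies, it can also be decoded and written to a file directly. The helper names below are hypothetical; only the standard `base64` module is used.

```python
import base64


def decode_image_bytes(b64_string):
    """Decode a base64 string from the API response into raw bytes."""
    return base64.b64decode(b64_string)


def save_base64_image(b64_string, path):
    """Write the decoded bytes to `path` without re-encoding them."""
    with open(path, "wb") as handle:
        handle.write(decode_image_bytes(b64_string))


# Usage with the result from above:
# save_base64_image(r.json['images'][0], 'puppy_raw.gif')
```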

### Extension support - RemBG (contributed by webcoderz)

```

# https://github.com/AUTOMATIC1111/stable-diffusion-webui-rembg

rembg = webuiapi.RemBGInterface(api)

r = rembg.rembg(input_image=img, model='u2net', return_mask=False)

r.image

```

### Extension support - SegmentAnything (contributed by TimNekk)

```python

# https://github.com/continue-revolution/sd-webui-segment-anything

segment = webuiapi.SegmentAnythingInterface(api)

# Perform a segmentation prediction using the SAM model using points

sam_result = segment.sam_predict(

image=img,

sam_positive_points=[(0.5, 0.25), (0.75, 0.75)],

# add other parameters as needed

)

# Perform a segmentation prediction using the SAM model using GroundingDINO

sam_result2 = segment.sam_predict(

image=img,

dino_enabled=True,

dino_text_prompt="A text prompt for GroundingDINO",

# add other parameters as needed

)

# Example of dilating a mask

dilation_result = segment.dilate_mask(

image=img,

mask=sam_result.masks[0], # using the first mask from the SAM prediction

dilate_amount=30

)

# Example of generating semantic segmentation with category IDs

semantic_seg_result = segment.sam_and_semantic_seg_with_cat_id(

image=img,

category="1+2+3", # Category IDs separated by '+'

# add other parameters as needed

)

```

### Extension support - Tagger (contributed by C-BP)

```python

# https://github.com/Akegarasu/sd-webui-wd14-tagger

tagger = webuiapi.TaggerInterface(api)

result = tagger.tagger_interrogate(img)

print(result)

# {"caption": {"additionalProp1":0.9,"additionalProp2": 0.8,"additionalProp3": 0.7}}

```
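
The result maps each tag to a confidence score, so a typical next step is to keep only the confident tags. The sketch below filters and sorts the example dictionary shown above; `top_tags` and the threshold value are illustrative choices, not part of webuiapi.

```python
def top_tags(result, threshold=0.5):
    """Return tags scoring at least `threshold`, highest score first."""
    scores = result["caption"]
    kept = [(tag, score) for tag, score in scores.items() if score >= threshold]
    kept.sort(key=lambda item: item[1], reverse=True)
    return [tag for tag, _ in kept]


example = {"caption": {"additionalProp1": 0.9, "additionalProp2": 0.8, "additionalProp3": 0.7}}
print(", ".join(top_tags(example, threshold=0.75)))  # additionalProp1, additionalProp2
```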

### Extension support - ADetailer (contributed by tomj2ee and davidmartinrius)

#### txt2img with ADetailer

```

# https://github.com/Bing-su/adetailer

import webuiapi

api = webuiapi.WebUIApi()

ads = webuiapi.ADetailer(ad_model="face_yolov8n.pt")

result1 = api.txt2img(prompt="cute squirrel",

negative_prompt="ugly, out of frame",

seed=-1,

styles=["anime"],

cfg_scale=7,

adetailer=[ads],

steps=30,

enable_hr=True,

denoising_strength=0.5

)

img = result1.image

img

# OR

file_path = "output_image.png"

result1.image.save(file_path)

```

#### img2img with ADetailer

```

import webuiapi

from PIL import Image

img = Image.open("/path/to/your/image.jpg")

ads = webuiapi.ADetailer(ad_model="face_yolov8n.pt")

api = webuiapi.WebUIApi()

result1 = api.img2img(

images=[img],

prompt="a cute squirrel",

steps=25,

seed=-1,

cfg_scale=7,

denoising_strength=0.5,

resize_mode=2,

width=512,

height=512,

adetailer=[ads],

)

file_path = "img2img_output_image.png"

result1.image.save(file_path)

```

### Support for interrogate with "deepdanbooru / deepbooru" (contributed by davidmartinrius)

```

import webuiapi

from PIL import Image

api = webuiapi.WebUIApi()

img = Image.open("/path/to/your/image.jpg")

interrogate_result = api.interrogate(image=img, model="deepdanbooru")

# also you can use clip. clip is set by default

#interrogate_result = api.interrogate(image=img, model="clip")

#interrogate_result = api.interrogate(image=img)

prompt = interrogate_result.info

prompt

# OR

print(prompt)

```

### Support for ReActor, for face swapping (contributed by davidmartinrius)

```

import webuiapi

from PIL import Image

img = Image.open("/path/to/your/image.jpg")

api = webuiapi.WebUIApi()

your_desired_face = Image.open("/path/to/your/desired/face.jpeg")

reactor = webuiapi.ReActor(

img=your_desired_face,

enable=True

)

result1 = api.img2img(

images=[img],

prompt="a cute squirrel",

steps=25,

seed=-1,

cfg_scale=7,

denoising_strength=0.5,

resize_mode=2,

width=512,

height=512,

reactor=reactor

)

file_path = "face_swapped_image.png"

result1.image.save(file_path)

```

### Support for Self Attention Guidance (contributed by yano)

https://github.com/ashen-sensored/sd_webui_SAG

```

import webuiapi

from PIL import Image

img = Image.open("/path/to/your/image.jpg")

api = webuiapi.WebUIApi()

sag = webuiapi.Sag(

enable=True,

scale=0.75,

mask_threshold=1.00

)

result1 = api.img2img(

images=[img],

prompt="a cute squirrel",

steps=25,

seed=-1,

cfg_scale=7,

denoising_strength=0.5,

resize_mode=2,

width=512,

height=512,

sag=sag

)

file_path = "sag_output_image.png"

result1.image.save(file_path)

```

### Prompt generator API by [David Martin Rius](https://github.com/davidmartinrius/):

This is an unofficial implementation for using the promptgen API.

Before installing the extension, check whether you already have an extension called Promptgen installed. If so, uninstall it first.

Once it is uninstalled, you can install this version in two ways:

#### 1. From the user interface

#### 2. From the command line

`cd stable-diffusion-webui/extensions`

`git clone -b api-implementation https://github.com/davidmartinrius/stable-diffusion-webui-promptgen.git`

Once installed:

```

import webuiapi

api = webuiapi.WebUIApi()

result = api.list_prompt_gen_models()
print("list of models")
print(result)
# you will get something like this:
# ['AUTOMATIC/promptgen-lexart', 'AUTOMATIC/promptgen-majinai-safe', 'AUTOMATIC/promptgen-majinai-unsafe']

text = "a box"

# To create a prompt from a text:
# by default model_name is "AUTOMATIC/promptgen-lexart"
result = api.prompt_gen(text=text)

# Using a different model
result = api.prompt_gen(text=text, model_name="AUTOMATIC/promptgen-majinai-unsafe")

# Complete usage
result = api.prompt_gen(
    text=text,
    model_name="AUTOMATIC/promptgen-majinai-unsafe",
    batch_count=1,
    batch_size=10,
    min_length=20,
    max_length=150,
    num_beams=1,
    temperature=1,
    repetition_penalty=1,
    length_preference=1,
    sampling_mode="Top K",
    top_k=12,
    top_p=0.15
)

# result is a list of prompts. You can iterate the list or just get the first result like this: result[0]

```

### Tips for using Flux by [David Martin Rius](https://github.com/davidmartinrius/):

Both txt2img and img2img need cfg_scale=1, sampler_name="Euler" and scheduler="Simple". txt2img additionally needs enable_hr=False.

#### For txt2img

```

import webuiapi

api = webuiapi.WebUIApi()

result1 = api.txt2img(prompt="cute squirrel",

negative_prompt="ugly, out of frame",

seed=-1,

styles=["anime"],

cfg_scale=1,

steps=20,

enable_hr=False,

denoising_strength=0.5,

sampler_name= "Euler",

scheduler= "Simple"

)

img = result1.image

img

# OR

file_path = "output_image.png"

result1.image.save(file_path)

```

#### For img2img

```

import webuiapi

from PIL import Image

img = Image.open("/path/to/your/image.jpg")

api = webuiapi.WebUIApi()

result1 = api.img2img(

images=[img],

prompt="a cute squirrel",

steps=20,

seed=-1,

cfg_scale=1,

denoising_strength=0.5,

resize_mode=2,

width=512,

height=512,

sampler_name= "Euler",

scheduler= "Simple"

)

file_path = "flux_img2img_output_image.png"

result1.image.save(file_path)

```
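
Since the Flux settings are the same in both examples, they can be kept in one place and merged into each call. The sketch below is one hypothetical way to do that; the constant and function names are made up for illustration, while the values are the ones from the tips above.

```python
# Settings the tips above list as required for Flux.
FLUX_SETTINGS = {"cfg_scale": 1, "sampler_name": "Euler", "scheduler": "Simple"}


def flux_kwargs(mode, **overrides):
    """Return keyword arguments for a Flux txt2img or img2img call."""
    if mode not in ("txt2img", "img2img"):
        raise ValueError(f"mode must be 'txt2img' or 'img2img', got {mode!r}")
    kwargs = dict(FLUX_SETTINGS)
    if mode == "txt2img":
        kwargs["enable_hr"] = False  # hires fix stays off for txt2img
    kwargs.update(overrides)
    return kwargs


# Usage, with `api` created as shown earlier:
# result = api.txt2img(prompt="cute squirrel", **flux_kwargs("txt2img", steps=20))
```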

Raw data

{

"_id": null,

"home_page": "https://github.com/mix1009/sdwebuiapi",

"name": "webuiapi",

"maintainer": null,

"docs_url": null,

"requires_python": "<4,>=3.7",

"maintainer_email": null,

"keywords": "stable-diffuion-webui, AUTOMATIC1111, stable-diffusion, api",

"author": "ChunKoo Park",

"author_email": "mix100f9@gmail.com",

"download_url": "https://files.pythonhosted.org/packages/53/a7/5d6c6a10456a642568e3c02c3400963d305b2b3caccdaa305397396a41ea/webuiapi-0.9.17.tar.gz",

"platform": null,

"description": "# sdwebuiapi\nAPI client for AUTOMATIC1111/stable-diffusion-webui\n\nSupports txt2img, img2img, extra-single-image, extra-batch-images API calls.\n\nAPI support have to be enabled from webui. Add --api when running webui.\nIt's explained [here](https://github.com/AUTOMATIC1111/stable-diffusion-webui/wiki/API).\n\nYou can use --api-auth user1:pass1,user2:pass2 option to enable authentication for api access.\n(Since it's basic http authentication the password is transmitted in cleartext)\n\nAPI calls are (almost) direct translation from http://127.0.0.1:7860/docs as of 2022/11/21.\n\n# Install\n\n```\npip install webuiapi\n```\n\n# Usage\n\nwebuiapi_demo.ipynb contains example code with original images. Images are compressed as jpeg in this document.\n\n## create API client\n```\nimport webuiapi\n\n# create API client\napi = webuiapi.WebUIApi()\n\n# create API client with custom host, port\n#api = webuiapi.WebUIApi(host='127.0.0.1', port=7860)\n\n# create API client with custom host, port and https\n#api = webuiapi.WebUIApi(host='webui.example.com', port=443, use_https=True)\n\n# create API client with default sampler, steps.\n#api = webuiapi.WebUIApi(sampler='Euler a', steps=20)\n\n# optionally set username, password when --api-auth=username:password is set on webui.\n# username, password are not protected and can be derived easily if the communication channel is not encrypted.\n# you can also pass username, password to the WebUIApi constructor.\napi.set_auth('username', 'password')\n```\n\n## txt2img\n```\nresult1 = api.txt2img(prompt=\"cute squirrel\",\n negative_prompt=\"ugly, out of frame\",\n seed=1003,\n styles=[\"anime\"],\n cfg_scale=7,\n# sampler_index='DDIM',\n# steps=30,\n# enable_hr=True,\n# hr_scale=2,\n# hr_upscaler=webuiapi.HiResUpscaler.Latent,\n# hr_second_pass_steps=20,\n# hr_resize_x=1536,\n# hr_resize_y=1024,\n# denoising_strength=0.4,\n\n )\n# images contains the returned images (PIL images)\nresult1.images\n\n# image is 
shorthand for images[0]\nresult1.image\n\n# info contains text info about the api call\nresult1.info\n\n# info contains paramteres of the api call\nresult1.parameters\n\nresult1.image\n```\n\n\n\n## img2img\n```\nresult2 = api.img2img(images=[result1.image], prompt=\"cute cat\", seed=5555, cfg_scale=6.5, denoising_strength=0.6)\nresult2.image\n```\n\n\n## img2img inpainting\n```\nfrom PIL import Image, ImageDraw\n\nmask = Image.new('RGB', result2.image.size, color = 'black')\n# mask = result2.image.copy()\ndraw = ImageDraw.Draw(mask)\ndraw.ellipse((210,150,310,250), fill='white')\ndraw.ellipse((80,120,160,120+80), fill='white')\n\nmask\n```\n\n\n```\ninpainting_result = api.img2img(images=[result2.image],\n mask_image=mask,\n inpainting_fill=1,\n prompt=\"cute cat\",\n seed=104,\n cfg_scale=5.0,\n denoising_strength=0.7)\ninpainting_result.image\n```\n\n\n## extra-single-image\n```\nresult3 = api.extra_single_image(image=result2.image,\n upscaler_1=webuiapi.Upscaler.ESRGAN_4x,\n upscaling_resize=1.5)\nprint(result3.image.size)\nresult3.image\n```\n(768, 768)\n\n\n\n## extra-batch-images\n```\nresult4 = api.extra_batch_images(images=[result1.image, inpainting_result.image],\n upscaler_1=webuiapi.Upscaler.ESRGAN_4x,\n upscaling_resize=1.5)\nresult4.images[0]\n```\n\n```\nresult4.images[1]\n```\n\n\n### Async API support\ntxt2img, img2img, extra_single_image, extra_batch_images support async api call with use_async=True parameter. You need asyncio, aiohttp packages installed.\n```\nresult = await api.txt2img(prompt=\"cute kitten\",\n seed=1001,\n use_async=True\n )\nresult.image\n```\n\n### Scripts support\nScripts from AUTOMATIC1111's Web UI are supported, but there aren't official models that define a script's interface.\n\nTo find out the list of arguments that are accepted by a particular script look up the associated python file from\nAUTOMATIC1111's repo `scripts/[script_name].py`. 
Search for its `run(p, **args)` function and the arguments that come\nafter 'p' is the list of accepted arguments\n\n#### Example for X/Y/Z Plot script:\n```\n(scripts/xyz_grid.py file from AUTOMATIC1111's repo)\n\n def run(self, p, x_type, x_values, y_type, y_values, z_type, z_values, draw_legend, include_lone_images, include_sub_grids, no_fixed_seeds, margin_size):\n ...\n```\nList of accepted arguments:\n* _x_type_: Index of the axis for X axis. Indexes start from [0: Nothing]\n* _x_values_: String of comma-separated values for the X axis \n* _y_type_: Index of the axis type for Y axis. As the X axis, indexes start from [0: Nothing]\n* _y_values_: String of comma-separated values for the Y axis\n* _z_type_: Index of the axis type for Z axis. As the X axis, indexes start from [0: Nothing]\n* _z_values_: String of comma-separated values for the Z axis\n* _draw_legend_: \"True\" or \"False\". IMPORTANT: It needs to be a string and not a Boolean value\n* _include_lone_images_: \"True\" or \"False\". IMPORTANT: It needs to be a string and not a Boolean value\n* _include_sub_grids_: \"True\" or \"False\". IMPORTANT: It needs to be a string and not a Boolean value\n* _no_fixed_seeds_: \"True\" or \"False\". IMPORTANT: It needs to be a string and not a Boolean value\n* margin_size: int value\n```\n# Available Axis options (Different for txt2img and img2img!)\nXYZPlotAvailableTxt2ImgScripts = [\n \"Nothing\",\n \"Seed\",\n \"Var. seed\",\n \"Var. strength\",\n \"Steps\",\n \"Hires steps\",\n \"CFG Scale\",\n \"Prompt S/R\",\n \"Prompt order\",\n \"Sampler\",\n \"Checkpoint name\",\n \"Sigma Churn\",\n \"Sigma min\",\n \"Sigma max\",\n \"Sigma noise\",\n \"Eta\",\n \"Clip skip\",\n \"Denoising\",\n \"Hires upscaler\",\n \"VAE\",\n \"Styles\",\n]\n\nXYZPlotAvailableImg2ImgScripts = [\n \"Nothing\",\n \"Seed\",\n \"Var. seed\",\n \"Var. 
strength\",\n \"Steps\",\n \"CFG Scale\",\n \"Image CFG Scale\",\n \"Prompt S/R\",\n \"Prompt order\",\n \"Sampler\",\n \"Checkpoint name\",\n \"Sigma Churn\",\n \"Sigma min\",\n \"Sigma max\",\n \"Sigma noise\",\n \"Eta\",\n \"Clip skip\",\n \"Denoising\",\n \"Cond. Image Mask Weight\",\n \"VAE\",\n \"Styles\",\n]\n\n# Example call\nXAxisType = \"Steps\"\nXAxisValues = \"20,30\"\nXAxisValuesDropdown = \"\"\nYAxisType = \"Sampler\"\nYAxisValues = \"Euler a, LMS\"\nYAxisValuesDropdown = \"\"\nZAxisType = \"Nothing\"\nZAxisValues = \"\"\nZAxisValuesDropdown = \"\"\ndrawLegend = \"True\"\nincludeLoneImages = \"False\"\nincludeSubGrids = \"False\"\nnoFixedSeeds = \"False\"\nmarginSize = 0\n\n\n# x_type, x_values, y_type, y_values, z_type, z_values, draw_legend, include_lone_images, include_sub_grids, no_fixed_seeds, margin_size\n\nresult = api.txt2img(\n prompt=\"cute girl with short brown hair in black t-shirt in animation style\",\n seed=1003,\n script_name=\"X/Y/Z Plot\",\n script_args=[\n XYZPlotAvailableTxt2ImgScripts.index(XAxisType),\n XAxisValues,\n XAxisValuesDropdown,\n XYZPlotAvailableTxt2ImgScripts.index(YAxisType),\n YAxisValues,\n YAxisValuesDropdown,\n XYZPlotAvailableTxt2ImgScripts.index(ZAxisType),\n ZAxisValues,\n ZAxisValuesDropdown,\n drawLegend,\n includeLoneImages,\n includeSubGrids,\n noFixedSeeds,\n marginSize, ]\n )\n\nresult.image\n```\n\n\n\n### Configuration APIs\n```\n# return map of current options\noptions = api.get_options()\n\n# change sd model\noptions = {}\noptions['sd_model_checkpoint'] = 'model.ckpt [7460a6fa]'\napi.set_options(options)\n\n# when calling set_options, do not pass all options returned by get_options().\n# it makes webui unusable (2022/11/21).\n\n# get available sd models\napi.get_sd_models()\n\n# misc get apis\napi.get_samplers()\napi.get_cmd_flags() \napi.get_hypernetworks()\napi.get_face_restorers()\napi.get_realesrgan_models()\napi.get_prompt_styles()\napi.get_artist_categories() # deprecated ?\napi.get_artists() # 
deprecated ?\napi.get_progress()\napi.get_embeddings()\napi.get_cmd_flags()\napi.get_scripts()\napi.get_schedulers()\napi.get_memory()\n\n# misc apis\napi.interrupt()\napi.skip()\n```\n\n### Utility methods\n```\n# save current model name\nold_model = api.util_get_current_model()\n\n# get list of available models\nmodels = api.util_get_model_names()\n\n# get list of available samplers\napi.util_get_sampler_names()\n\n# get list of available schedulers\napi.util_get_scheduler_names()\n\n# refresh list of models\napi.refresh_checkpoints()\n\n# set model (use exact name)\napi.util_set_model(models[0])\n\n# set model (find closest match)\napi.util_set_model('robodiffusion')\n\n# wait for job complete\napi.util_wait_for_ready()\n\n```\n\n### LORA and alwayson_scripts example\n\n```\nr = api.txt2img(prompt='photo of a cute girl with green hair <lora:Moxin_10:0.6> shuimobysim __juice__',\n seed=1000,\n save_images=True,\n alwayson_scripts={\"Simple wildcards\":[]} # wildcards extension doesn't accept more parameters.\n )\nr.image\n```\n\n### Extension support - Model-Keyword\n```\n# https://github.com/mix1009/model-keyword\nmki = webuiapi.ModelKeywordInterface(api)\nmki.get_keywords()\n```\nModelKeywordResult(keywords=['nousr robot'], model='robo-diffusion-v1.ckpt', oldhash='41fef4bd', match_source='model-keyword.txt')\n\n\n### Extension support - Instruct-Pix2Pix\n```\n# Instruct-Pix2Pix extension is now deprecated and is now part of webui.\n# You can use normal img2img with image_cfg_scale when instruct-pix2pix model is loaded.\nr = api.img2img(prompt='sunset', images=[pil_img], cfg_scale=7.5, image_cfg_scale=1.5)\nr.image\n```\n\n### Extension support - ControlNet\n```\n# https://github.com/Mikubill/sd-webui-controlnet\n\napi.controlnet_model_list()\n```\n<pre>\n['control_v11e_sd15_ip2p [c4bb465c]',\n 'control_v11e_sd15_shuffle [526bfdae]',\n 'control_v11f1p_sd15_depth [cfd03158]',\n 'control_v11p_sd15_canny [d14c016b]',\n 'control_v11p_sd15_inpaint [ebff9138]',\n 
'control_v11p_sd15_lineart [43d4be0d]',\n 'control_v11p_sd15_mlsd [aca30ff0]',\n 'control_v11p_sd15_normalbae [316696f1]',\n 'control_v11p_sd15_openpose [cab727d4]',\n 'control_v11p_sd15_scribble [d4ba51ff]',\n 'control_v11p_sd15_seg [e1f51eb9]',\n 'control_v11p_sd15_softedge [a8575a2a]',\n 'control_v11p_sd15s2_lineart_anime [3825e83e]',\n 'control_v11u_sd15_tile [1f041471]']\n </pre>\n\n```\napi.controlnet_version()\napi.controlnet_module_list()\n```\n\n```\n# normal txt2img\nr = api.txt2img(prompt=\"photo of a beautiful girl with blonde hair\", height=512, seed=100)\nimg = r.image\nimg\n```\n\n\n```\n# txt2img with ControlNet\n# input_image parameter is changed to image (change in ControlNet API)\nunit1 = webuiapi.ControlNetUnit(image=img, module='canny', model='control_v11p_sd15_canny [d14c016b]')\n\nr = api.txt2img(prompt=\"photo of a beautiful girl\", controlnet_units=[unit1])\nr.image\n```\n\n\n\n\n```\n# img2img with multiple ControlNets\nunit1 = webuiapi.ControlNetUnit(image=img, module='canny', model='control_v11p_sd15_canny [d14c016b]')\nunit2 = webuiapi.ControlNetUnit(image=img, module='depth', model='control_v11f1p_sd15_depth [cfd03158]', weight=0.5)\n\nr2 = api.img2img(prompt=\"girl\",\n images=[img], \n width=512,\n height=512,\n controlnet_units=[unit1, unit2],\n sampler_name=\"Euler a\",\n cfg_scale=7,\n )\nr2.image\n```\n\n\n```\nr2.images[1]\n```\n\n\n```\nr2.images[2]\n```\n\n\n\n```\nr = api.controlnet_detect(images=[img], module='canny')\nr.image\n```\n\n\n### Extension support - AnimateDiff\n\n```\n# https://github.com/continue-revolution/sd-webui-animatediff\nadiff = webuiapi.AnimateDiff(model='mm_sd15_v3.safetensors',\n video_length=24,\n closed_loop='R+P',\n format=['GIF'])\n\nr = api.txt2img(prompt='cute puppy', animatediff=adiff)\n\n# save GIF file. 
need save_all=True to save animated GIF.\nr.image.save('puppy.gif', save_all=True)\n\n# Display animated GIF in Jupyter notebook\nfrom IPython.display import HTML\nHTML('<img src=\"data:image/gif;base64,{0}\"/>'.format(r.json['images'][0]))\n```\n\n### Extension support - RemBG (contributed by webcoderz)\n```\n# https://github.com/AUTOMATIC1111/stable-diffusion-webui-rembg\nrembg = webuiapi.RemBGInterface(api)\nr = rembg.rembg(input_image=img, model='u2net', return_mask=False)\nr.image\n```\n\n\n### Extension support - SegmentAnything (contributed by TimNekk)\n```python\n# https://github.com/continue-revolution/sd-webui-segment-anything\n\nsegment = webuiapi.SegmentAnythingInterface(api)\n\n# Perform a segmentation prediction using the SAM model using points\nsam_result = segment.sam_predict(\n image=img,\n sam_positive_points=[(0.5, 0.25), (0.75, 0.75)],\n # add other parameters as needed\n)\n\n# Perform a segmentation prediction using the SAM model using GroundingDINO\nsam_result2 = segment.sam_predict(\n image=img,\n dino_enabled=True,\n dino_text_prompt=\"A text prompt for GroundingDINO\",\n # add other parameters as needed\n)\n\n# Example of dilating a mask\ndilation_result = segment.dilate_mask(\n image=img,\n mask=sam_result.masks[0], # using the first mask from the SAM prediction\n dilate_amount=30\n)\n\n# Example of generating semantic segmentation with category IDs\nsemantic_seg_result = segment.sam_and_semantic_seg_with_cat_id(\n image=img,\n category=\"1+2+3\", # Category IDs separated by '+'\n # add other parameters as needed\n)\n```\n\n### Extension support - Tagger (contributed by C-BP)\n\n```python\n# https://github.com/Akegarasu/sd-webui-wd14-tagger\n\ntagger = webuiapi.TaggerInterface(api)\nresult = tagger.tagger_interrogate(image)\nprint(result)\n# {\"caption\": {\"additionalProp1\":0.9,\"additionalProp2\": 0.8,\"additionalProp3\": 0.7}}\n```\n### Extension support - ADetailer (contributed by tomj2ee and davidmartinrius)\n#### txt2img with 
ADetailer\n```\n# https://github.com/Bing-su/adetailer\n\nimport webuiapi\n\napi = webuiapi.WebUIApi()\n\nads = webuiapi.ADetailer(ad_model=\"face_yolov8n.pt\")\n\nresult1 = api.txt2img(prompt=\"cute squirrel\",\n negative_prompt=\"ugly, out of frame\",\n seed=-1,\n styles=[\"anime\"],\n cfg_scale=7,\n adetailer=[ads],\n steps=30,\n enable_hr=True,\n denoising_strength=0.5\n )\n \n \n \nimg = result1.image\nimg\n\n# OR\n\nfile_path = \"output_image.png\"\nresult1.image.save(file_path)\n```\n\n#### img2img with ADetailer\n\n```\nimport webuiapi\nfrom PIL import Image\n\nimg = Image.open(\"/path/to/your/image.jpg\")\n\nads = webuiapi.ADetailer(ad_model=\"face_yolov8n.pt\")\n\napi = webuiapi.WebUIApi()\n\nresult1 = api.img2img(\n images=[img], \n prompt=\"a cute squirrel\", \n steps=25, \n seed=-1, \n cfg_scale=7, \n denoising_strength=0.5, \n resize_mode=2,\n width=512,\n height=512,\n adetailer=[ads],\n)\n\nfile_path = \"img2img_output_image.png\"\nresult1.image.save(file_path)\n```\n### Support for interrogate with \"deepdanbooru / deepbooru\" (contributed by davidmartinrius)\n\n```\nimport webuiapi\nfrom PIL import Image\n\napi = webuiapi.WebUIApi()\n\nimg = Image.open(\"/path/to/your/image.jpg\")\n\ninterrogate_result = api.interrogate(image=img, model=\"deepdanbooru\")\n# also you can use clip. 
clip is set by default\n#interrogate_result = api.interrogate(image=img, model=\"clip\")\n#interrogate_result = api.interrogate(image=img)\n\nprompt = interrogate_result.info\nprompt\n\n# OR\nprint(prompt)\n```\n\n### Support for ReActor, for face swapping (contributed by davidmartinrius)\n\n```\nimport webuiapi\nfrom PIL import Image\n\nimg = Image.open(\"/path/to/your/image.jpg\")\n\napi = webuiapi.WebUIApi()\n\nyour_desired_face = Image.open(\"/path/to/your/desired/face.jpeg\")\n\nreactor = webuiapi.ReActor(\n img=your_desired_face,\n enable=True\n)\n\nresult1 = api.img2img(\n images=[img], \n prompt=\"a cute squirrel\", \n steps=25, \n seed=-1, \n cfg_scale=7, \n denoising_strength=0.5, \n resize_mode=2,\n width=512,\n height=512,\n reactor=reactor\n)\n\nfile_path = \"face_swapped_image.png\"\nresult1.image.save(file_path)\n```\n\n\n### Support for Self Attention Guidance (contributed by yano)\n\nhttps://github.com/ashen-sensored/sd_webui_SAG\n\n```\nimport webuiapi\nfrom PIL import Image\n\nimg = Image.open(\"/path/to/your/image.jpg\")\n\napi = webuiapi.WebUIApi()\n\nsag = webuiapi.Sag(\n enable=True,\n scale=0.75,\n mask_threshold=1.00\n)\n\nresult1 = api.img2img(\n images=[img], \n prompt=\"a cute squirrel\", \n steps=25, \n seed=-1, \n cfg_scale=7, \n denoising_strength=0.5, \n resize_mode=2,\n width=512,\n height=512,\n sag=sag\n)\n\nfile_path = \"sag_output_image.png\"\nresult1.image.save(file_path)\n```\n\n### Prompt generator API by [David Martin Rius](https://github.com/davidmartinrius/):\n\n\nThis is an unofficial implementation that exposes the promptgen extension through the API.\nBefore installing it, check whether you already have an extension called Promptgen; if so, uninstall it first.\nOnce it is uninstalled, you can install this version in two ways:\n\n#### 1. From the user interface\n\n\n#### 2. 
From the command line\n\ncd stable-diffusion-webui/extensions\n\ngit clone -b api-implementation https://github.com/davidmartinrius/stable-diffusion-webui-promptgen.git\n\nOnce installed:\n```\napi = webuiapi.WebUIApi()\n\nresult = api.list_prompt_gen_models()\nprint(\"list of models\")\nprint(result)\n# you will get something like this:\n#['AUTOMATIC/promptgen-lexart', 'AUTOMATIC/promptgen-majinai-safe', 'AUTOMATIC/promptgen-majinai-unsafe']\n\ntext = \"a box\"\n\n# To create a prompt from a text:\n# by default model_name is \"AUTOMATIC/promptgen-lexart\"\nresult = api.prompt_gen(text=text)\n\n# Using a different model\nresult = api.prompt_gen(text=text, model_name=\"AUTOMATIC/promptgen-majinai-unsafe\")\n\n# Complete usage\nresult = api.prompt_gen(\n text=text, \n model_name=\"AUTOMATIC/promptgen-majinai-unsafe\",\n batch_count=1,\n batch_size=10,\n min_length=20,\n max_length=150,\n num_beams=1,\n temperature=1,\n repetition_penalty=1,\n length_preference=1,\n sampling_mode=\"Top K\",\n top_k=12,\n top_p=0.15\n )\n\n# result is a list of prompts. 
You can iterate the list or just get the first result like this: result[0]\n\n```\n\n### TIPS for using Flux [David Martin Rius](https://github.com/davidmartinrius/):\n\nBoth txt2img and img2img need cfg_scale=1, sampler_name=\"Euler\" and scheduler=\"Simple\"; txt2img also needs enable_hr=False.\n\n#### For txt2img\n```\nimport webuiapi\n\napi = webuiapi.WebUIApi()\n\nresult1 = api.txt2img(prompt=\"cute squirrel\",\n negative_prompt=\"ugly, out of frame\",\n seed=-1,\n styles=[\"anime\"],\n cfg_scale=1,\n steps=20,\n enable_hr=False,\n denoising_strength=0.5,\n sampler_name=\"Euler\",\n scheduler=\"Simple\"\n )\n\nimg = result1.image\nimg\n\n# OR\n\nfile_path = \"output_image.png\"\nresult1.image.save(file_path)\n\n```\n\n#### For img2img\n\n```\nimport webuiapi\nfrom PIL import Image\n\nimg = Image.open(\"/path/to/your/image.jpg\")\n\napi = webuiapi.WebUIApi()\n\nresult1 = api.img2img(\n images=[img], \n prompt=\"a cute squirrel\", \n steps=20, \n seed=-1, \n cfg_scale=1, \n denoising_strength=0.5, \n resize_mode=2,\n width=512,\n height=512,\n sampler_name=\"Euler\",\n scheduler=\"Simple\"\n)\n\nfile_path = \"img2img_output_image.png\"\nresult1.image.save(file_path)\n\n```\n\n\n",

"bugtrack_url": null,

"license": "MIT",

"summary": "Python API client for AUTOMATIC1111/stable-diffusion-webui",

"version": "0.9.17",

"project_urls": {

"Homepage": "https://github.com/mix1009/sdwebuiapi"

},

"split_keywords": [

"stable-diffuion-webui",

" automatic1111",

" stable-diffusion",

" api"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "b479bd7390a49e0bc7cfc348cbc3469d50424a2eba3fdac9378e325852399433",

"md5": "8e4b303e36f4a5c9b6e898a8ba4702ad",

"sha256": "5ee61943405b6a9b889e36114a6c1e386d83597c1e369796ab871080489443e9"

},

"downloads": -1,

"filename": "webuiapi-0.9.17-py3-none-any.whl",

"has_sig": false,

"md5_digest": "8e4b303e36f4a5c9b6e898a8ba4702ad",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": "<4,>=3.7",

"size": 22563,

"upload_time": "2024-12-14T00:59:12",

"upload_time_iso_8601": "2024-12-14T00:59:12.514700Z",

"url": "https://files.pythonhosted.org/packages/b4/79/bd7390a49e0bc7cfc348cbc3469d50424a2eba3fdac9378e325852399433/webuiapi-0.9.17-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "53a75d6c6a10456a642568e3c02c3400963d305b2b3caccdaa305397396a41ea",

"md5": "2d170402e09269c7c0a27014d96e0aff",

"sha256": "b37effbbd27dea550152391285dc26a9b15e2a7395b0d1af320e637e20b425a7"

},

"downloads": -1,

"filename": "webuiapi-0.9.17.tar.gz",

"has_sig": false,

"md5_digest": "2d170402e09269c7c0a27014d96e0aff",

"packagetype": "sdist",

"python_version": "source",

"requires_python": "<4,>=3.7",

"size": 28865,

"upload_time": "2024-12-14T00:59:15",

"upload_time_iso_8601": "2024-12-14T00:59:15.147538Z",

"url": "https://files.pythonhosted.org/packages/53/a7/5d6c6a10456a642568e3c02c3400963d305b2b3caccdaa305397396a41ea/webuiapi-0.9.17.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-12-14 00:59:15",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "mix1009",

"github_project": "sdwebuiapi",

"travis_ci": false,

"coveralls": false,

"github_actions": false,

"lcname": "webuiapi"

}