# Whispy - Fast Speech Recognition CLI

A fast and efficient command-line interface for [whisper.cpp](https://github.com/ggerganov/whisper.cpp), providing automatic speech recognition with GPU acceleration.

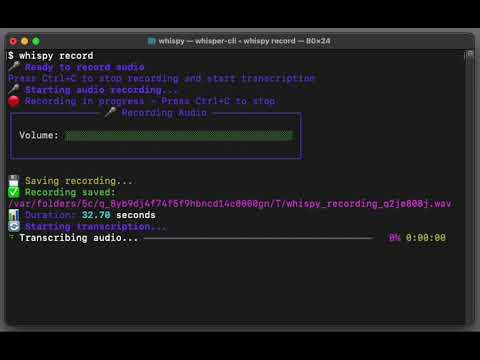

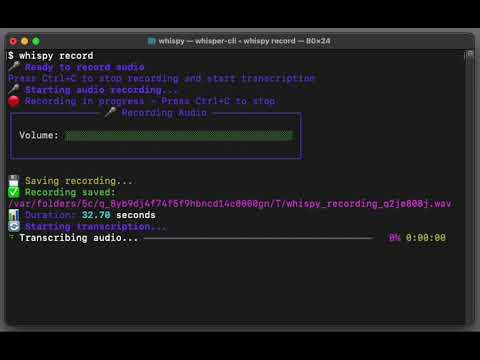

[Video on YouTube](https://www.youtube.com/watch?v=O6lGEreTbzg)

## Features

- 🚀 **Fast transcription** using whisper.cpp with GPU acceleration (Metal on macOS, CUDA on Linux/Windows)

- 🎯 **Simple CLI interface** for easy audio transcription

- 📁 **Multiple audio formats** supported (WAV, MP3, FLAC, OGG)

- 🌍 **Multi-language support** with automatic language detection

- 📝 **Flexible output** options (stdout, file)

- 🔧 **Auto-detection** of models and whisper-cli binary

- 🏗️ **Automatic building** of whisper.cpp if needed

## Installation

### Quick Install (Recommended)

Install directly from GitHub with automatic setup:

```bash

pip install git+https://github.com/amarder/whispy.git

```

This will automatically:

- Clone whisper.cpp to `~/.whispy/whisper.cpp`

- Build the whisper-cli binary with GPU acceleration

- Install the whispy CLI

### Manual Install

If you prefer to install manually:

#### Prerequisites

- Python 3.7+

- CMake 3.10+ (for building whisper.cpp)

- C++ compiler with C++17 support

- Git (for cloning whisper.cpp)
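
Before starting a manual install, it can help to confirm that the tools above are on your `PATH`. The following is a minimal sketch, not part of Whispy: the function name `missing_prerequisites` and the list of compiler executables are assumptions for illustration. It checks only for presence, not the CMake 3.10+ or C++17 requirements.

```python
import shutil
import sys

# Executable names are illustrative assumptions, not something Whispy defines.
REQUIRED_TOOLS = ("git", "cmake")
COMPILERS = ("c++", "g++", "clang++", "cl")


def missing_prerequisites(which=shutil.which, version=sys.version_info):
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []
    # Compare only (major, minor) against the documented Python 3.7+ requirement.
    if tuple(version[:2]) < (3, 7):
        problems.append("Python 3.7+ is required")
    for tool in REQUIRED_TOOLS:
        if which(tool) is None:
            problems.append(f"{tool} not found on PATH")
    # Any one of the listed compilers is enough.
    if not any(which(compiler) for compiler in COMPILERS):
        problems.append("no C++ compiler found on PATH")
    return problems


if __name__ == "__main__":
    for problem in missing_prerequisites():
        print("missing:", problem)
```

The `which` and `version` parameters exist only so the check can be exercised without touching the real environment.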

#### Steps

```bash

# Clone repository

git clone https://github.com/amarder/whispy.git

cd whispy

# Install whispy

pip install -e .

# Clone whisper.cpp if you don't have it

git clone https://github.com/ggerganov/whisper.cpp.git

# Build whisper-cli (or use: whispy build)

cd whisper.cpp

cmake -B build

cmake --build build -j --config Release

cd ..

```

### Requirements

**Basic requirements:**

- Python 3.7+

- CMake (for building whisper.cpp)

- C++ compiler (gcc, clang, or MSVC)

- Git

**For audio recording features:**

- Microphone access

- Audio drivers (pre-installed on most systems)

- Additional Python packages: `sounddevice`, `numpy`, `scipy`

**Supported platforms:**

- 🍎 macOS (Intel & Apple Silicon) with CoreAudio

- 🐧 Linux (with ALSA/PulseAudio)

- 🪟 Windows (with DirectSound)

### Download a model

After installation, download a model to use for transcription:

```bash

# For pip installs from GitHub

cd ~/.whispy/whisper.cpp

sh ./models/download-ggml-model.sh base.en

# For manual installs

cd whisper.cpp

sh ./models/download-ggml-model.sh base.en

# Alternative: Download directly to models/

mkdir -p models

curl -L -o models/ggml-base.en.bin https://huggingface.co/ggerganov/whisper.cpp/resolve/main/ggml-base.en.bin

```
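
The direct-download URL above follows a `ggml-<model>.bin` naming pattern. As a rough sketch, assuming the other model names listed in this README follow the same pattern, the URL and local path can be derived from a model name. The helper names here are hypothetical and not part of Whispy.

```python
from pathlib import Path

# Base URL taken from the curl example above.
BASE_URL = "https://huggingface.co/ggerganov/whisper.cpp/resolve/main"


def model_filename(name: str) -> str:
    """Map a model name such as 'base.en' to its file name."""
    return f"ggml-{name}.bin"


def model_url(name: str) -> str:
    """Build the download URL for a model name (assumes the same pattern for every model)."""
    return f"{BASE_URL}/{model_filename(name)}"


def model_path(name: str, models_dir: str = "models") -> Path:
    """Return where the model would be stored locally."""
    return Path(models_dir) / model_filename(name)
```

For example, `model_url("base.en")` reproduces the URL used in the `curl` command, and `model_path("base.en")` gives `models/ggml-base.en.bin`.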

## Usage

### Basic transcription

```bash

# Transcribe an audio file

whispy transcribe audio.wav

# Transcribe with explicit model

whispy transcribe audio.wav --model models/ggml-base.en.bin

# Transcribe with language specification

whispy transcribe audio.wav --language en

# Save transcript to file

whispy transcribe audio.wav --output transcript.txt

# Verbose output

whispy transcribe audio.wav --verbose

```
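
If you want to drive these commands from a script, one option is to call the CLI through `subprocess`. This is a minimal sketch that uses only the flags shown above. The function names are hypothetical, and it assumes the transcript is written to standard output when `--output` is not given.

```python
import subprocess
from typing import List, Optional


def transcribe_command(
    audio: str,
    model: Optional[str] = None,
    language: Optional[str] = None,
    output: Optional[str] = None,
    verbose: bool = False,
) -> List[str]:
    """Build the argument list for `whispy transcribe` from the documented flags."""
    cmd = ["whispy", "transcribe", str(audio)]
    if model:
        cmd += ["--model", str(model)]
    if language:
        cmd += ["--language", language]
    if output:
        cmd += ["--output", str(output)]
    if verbose:
        cmd.append("--verbose")
    return cmd


def transcribe(audio: str, **options) -> str:
    """Run whispy and return its standard output (assumed to contain the transcript)."""
    result = subprocess.run(
        transcribe_command(audio, **options),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError on a non-zero exit code
    )
    return result.stdout
```

Passing the arguments as a list avoids shell quoting problems with file names that contain spaces.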

### Record and transcribe

Record audio from your microphone, then transcribe the recording once you stop:

```bash

# Record and transcribe (press Ctrl+C to stop recording)

whispy record-and-transcribe

# Test microphone before recording

whispy record-and-transcribe --test-mic

# Record with specific model and language

whispy record-and-transcribe --model models/ggml-base.en.bin --language en

# Save both transcript and audio

whispy record-and-transcribe --output transcript.txt --save-audio recording.wav

# Verbose output with device information

whispy record-and-transcribe --verbose

```

### Real-time transcription

Transcribe microphone audio in real time, processing it as a stream of overlapping chunks:

```bash

# Start real-time transcription (press Ctrl+C to stop)

whispy realtime

# With custom settings for faster/slower processing

whispy realtime --chunk-duration 2.0 --overlap-duration 0.5 --silence-threshold 0.02

# Show individual chunks instead of continuous output

whispy realtime --show-chunks

# Save final transcript to file

whispy realtime --output live_transcript.txt

# Test real-time setup

whispy realtime --test-setup

# Verbose mode for debugging

whispy realtime --verbose

```

**Real-time Parameters:**

- `--chunk-duration`: Duration of each audio chunk in seconds (default: 3.0)

- `--overlap-duration`: Overlap between chunks in seconds (default: 1.0)

- `--silence-threshold`: Voice activity detection threshold (default: 0.01)

- `--show-chunks`: Show individual chunk transcripts instead of continuous mode

- `--test-setup`: Test real-time setup without starting transcription
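
To see how the chunk and overlap settings interact, here is a rough sketch of the arithmetic. It is not Whispy's implementation: it assumes each chunk starts `chunk_duration - overlap_duration` seconds after the previous one, and it assumes the silence threshold is compared against the RMS amplitude of samples normalized to the range -1.0 to 1.0. Both function names are hypothetical.

```python
import math


def chunk_windows(total_seconds, chunk_duration=3.0, overlap_duration=1.0):
    """Return (start, end) times of overlapping chunks covering total_seconds."""
    if chunk_duration <= 0:
        raise ValueError("chunk_duration must be positive")
    if not 0 <= overlap_duration < chunk_duration:
        raise ValueError("overlap_duration must be in [0, chunk_duration)")
    step = chunk_duration - overlap_duration  # how far each new chunk advances
    windows = []
    index = 0
    while index * step < total_seconds:
        start = index * step
        end = min(start + chunk_duration, total_seconds)
        windows.append((start, end))
        if end >= total_seconds:
            break  # the audio is fully covered
        index += 1
    return windows


def is_silent(samples, silence_threshold=0.01):
    """Treat a chunk as silent when its RMS amplitude is below the threshold (assumed metric)."""
    if not samples:
        return True
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return rms < silence_threshold
```

With the defaults, 7 seconds of audio gives the windows `(0, 3)`, `(2, 5)`, and `(4, 7)`. Under this model, a shorter chunk lowers latency but gives the recognizer less context per chunk.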

### System information

```bash

# Check system status

whispy info

# Show version

whispy version

# Build whisper-cli if needed

whispy build

```

### Supported audio formats

- WAV

- MP3

- FLAC

- OGG

### Available models

Download models using whisper.cpp's script or directly:

- `tiny.en`, `tiny` - Fastest, least accurate

- `base.en`, `base` - Good balance of speed and accuracy

- `small.en`, `small` - Better accuracy

- `medium.en`, `medium` - High accuracy

- `large-v1`, `large-v2`, `large-v3` - Best accuracy, slower

## Examples

```bash

# Quick transcription with auto-detected model

whispy transcribe meeting.wav

# High-quality transcription

whispy transcribe interview.mp3 --model whisper.cpp/models/ggml-large-v3.bin

# Transcribe non-English audio

whispy transcribe spanish_audio.wav --language es

# Save results and show details

whispy transcribe podcast.mp3 --output transcript.txt --verbose

# Record from the microphone, then transcribe

whispy record-and-transcribe

# Record with high-quality model and save everything

whispy record-and-transcribe \
  --model whisper.cpp/models/ggml-large-v3.bin \
  --output meeting-notes.txt \
  --save-audio meeting-recording.wav \
  --verbose

# Quick voice memo transcription

whispy record-and-transcribe --language en --output memo.txt

# Real-time transcription with live output

whispy realtime

# Real-time transcription with custom settings

whispy realtime --chunk-duration 2.0 --show-chunks --output live_notes.txt

```

## Testing

Whispy includes a test suite that covers the CLI commands, file transcription, and the recording workflows.

### Running Tests

```bash

# Install development dependencies

pip install -e ".[dev]"

# Run all tests

pytest

# Run tests with verbose output

pytest -v

# Run only unit tests

pytest tests/test_unit.py

# Run only CLI tests

pytest tests/test_cli.py

# Run tests with coverage

pytest --cov=whispy --cov-report=html

# Skip slow tests (--fast is a project-specific option, not a built-in pytest flag)

pytest --fast

```

### Test Categories

- **Unit tests** (`tests/test_unit.py`): Test individual functions and modules

- **CLI tests** (`tests/test_cli.py`): Test command-line interface functionality

- **Integration tests**: Test full workflows with real audio files

### Using the Test Runner

```bash

# Use the convenience script

python run_tests.py --help

# Run unit tests only

python run_tests.py -t unit -v

# Run with coverage

python run_tests.py -c -v

# Run fast tests only

python run_tests.py -f

```

### Test Requirements

- pytest >= 7.0.0

- pytest-cov >= 4.0.0

- pytest-mock >= 3.10.0

- Sample audio files (JFK sample from whisper.cpp)

### What's Tested

- ✅ CLI commands (help, version, info, transcribe, record-and-transcribe)

- ✅ Audio file transcription with sample files

- ✅ Audio recording from microphone

- ✅ Real-time record-and-transcribe workflow

- ✅ Microphone testing functionality

- ✅ Error handling for invalid files/models/devices

- ✅ Output file generation

- ✅ Language options and verbose modes

- ✅ System requirements and binary detection

- ✅ Model file discovery and validation

## Development

### Project Structure

```

whispy/
├── whispy/
│   ├── __init__.py      # Package initialization
│   ├── cli.py           # Command-line interface
│   └── transcribe.py    # Core transcription logic
├── whisper.cpp/         # Git submodule (whisper.cpp source)
├── models/              # Model files directory
├── pyproject.toml       # Project configuration
└── README.md

```

### How it works

Whispy works as a wrapper around the `whisper-cli` binary from whisper.cpp:

1. **Auto-detection**: Finds whisper-cli binary and model files automatically

2. **Subprocess calls**: Runs whisper-cli as a subprocess for transcription

3. **Output parsing**: Captures and returns the transcribed text

4. **Performance**: Gets full GPU acceleration and optimizations from whisper.cpp
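
The steps above can be sketched roughly as follows. This is not Whispy's actual code: the function names are hypothetical, the `build/bin` location is an assumption about the CMake build layout (multi-config generators on Windows may place the binary elsewhere), and the command uses whisper.cpp's `-m`, `-f`, `-l`, and `-nt` (no timestamps) options.

```python
import os
import subprocess
from pathlib import Path


def default_search_roots():
    """whisper.cpp checkouts mentioned in this README: local clone and pip-install location."""
    return [Path("whisper.cpp"), Path.home() / ".whispy" / "whisper.cpp"]


def find_whisper_cli(roots):
    """Return the first whisper-cli binary found under <root>/build/bin, or None."""
    name = "whisper-cli.exe" if os.name == "nt" else "whisper-cli"
    for root in roots:
        candidate = Path(root) / "build" / "bin" / name
        if candidate.is_file():
            return candidate
    return None


def whisper_cli_command(binary, model, audio, language=None):
    """Build the whisper-cli argument list: model, input file, no timestamps."""
    cmd = [str(binary), "-m", str(model), "-f", str(audio), "-nt"]
    if language:
        cmd += ["-l", language]
    return cmd


def run_whisper_cli(binary, model, audio, language=None):
    """Run whisper-cli as a subprocess and return the captured text."""
    result = subprocess.run(
        whisper_cli_command(binary, model, audio, language),
        capture_output=True,
        text=True,
        check=True,  # surface whisper-cli failures as exceptions
    )
    return result.stdout.strip()
```

Because the wrapper only shells out, any acceleration compiled into `whisper-cli` applies without extra Python dependencies.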

### Building from source

```bash

# Clone with whisper.cpp submodule

git clone --recursive https://github.com/amarder/whispy.git

cd whispy

# Install in development mode

pip install -e .

# Build whisper.cpp

whispy build

# OR manually:

# cd whisper.cpp && cmake -B build && cmake --build build -j --config Release

```

### Adding new features

The CLI is built with [Typer](https://typer.tiangolo.com/) and can be easily extended:

```python

# `app` and `console` are assumed to be the Typer app and console objects from whispy/cli.py
@app.command()
def new_command():
    """Add a new command to the CLI"""
    console.print("New feature!")

```

## Performance

Whispy automatically uses the best available backend:

- **macOS**: Metal GPU acceleration

- **Linux/Windows**: CUDA GPU acceleration (if available)

- **Fallback**: Optimized CPU with BLAS

Typical performance on Apple M1:

- ~10x faster than real-time for base.en model

- ~5x faster than real-time for large-v3 model
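
To turn these speed-up figures into wall-clock estimates, divide the audio length by the speed-up factor. The helper below is only an illustration of that arithmetic, with a hypothetical name. Actual times depend on hardware, model, and audio content.

```python
def estimated_processing_seconds(audio_seconds: float, speedup: float) -> float:
    """Estimate transcription time for audio processed `speedup` times faster than real time."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return audio_seconds / speedup
```

For a one-hour recording (3600 seconds), the figures above work out to about 6 minutes with `base.en` (3600 / 10 = 360 s) and about 12 minutes with `large-v3` (3600 / 5 = 720 s).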

## Troubleshooting

### whisper-cli not found

```bash

# Check if whisper-cli exists

whispy info

# Build whisper-cli

whispy build

# Or build manually

cd whisper.cpp

cmake -B build && cmake --build build -j --config Release

```

### No model found

```bash

# Download a model

cd whisper.cpp

sh ./models/download-ggml-model.sh base.en

# Or specify model explicitly

whispy transcribe audio.wav --model /path/to/model.bin

```
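
To check which models are actually present, you can scan the likely model directories for `ggml-*.bin` files. This is a sketch under assumptions: the directories are the ones mentioned in this README, and Whispy's own search order may differ. The function names are hypothetical.

```python
from pathlib import Path


def candidate_model_dirs():
    """Directories mentioned in this README where models may live (assumed, not exhaustive)."""
    return [
        Path("models"),
        Path("whisper.cpp") / "models",
        Path.home() / ".whispy" / "whisper.cpp" / "models",
    ]


def find_models(dirs):
    """Return all ggml-*.bin files found in the given directories, sorted within each directory."""
    found = []
    for directory in dirs:
        directory = Path(directory)
        if directory.is_dir():  # silently skip directories that do not exist
            found.extend(sorted(directory.glob("ggml-*.bin")))
    return found


if __name__ == "__main__":
    for model in find_models(candidate_model_dirs()):
        print(model)
```

If the script prints nothing, download a model as shown above or pass `--model` with an explicit path.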

## License

This project is licensed under the MIT License - see the LICENSE file for details.

## Contributing

Contributions are welcome! Please feel free to submit a Pull Request.

### Development setup

```bash

git clone --recursive https://github.com/amarder/whispy.git

cd whispy

pip install -e .

whispy build

```

## Acknowledgments

- [whisper.cpp](https://github.com/ggerganov/whisper.cpp) - Fast C++ implementation of OpenAI's Whisper

- [OpenAI Whisper](https://github.com/openai/whisper) - Original Whisper model

- [Typer](https://typer.tiangolo.com/) - CLI framework
