| Field | Value |
|---|---|
| Name | CmonCrawl |
| Version | 1.1.8 |
| home_page | None |
| Summary | None |
| upload_time | 2024-04-07 23:58:03 |
| maintainer | None |
| docs_url | None |
| author | None |
| requires_python | None |
| license | MIT License Copyright (c) [2023] [Hynek Kydlíček] Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions: The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software. THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE. |
| keywords | common crawl, crawl, extractor, common crawl extractor, web crawler, web extractor, web scraper |
| requirements | aiofiles, aiohttp, beautifulsoup4, pydantic, stomp.py, tqdm, warcio, aiocsv, aioboto3, tenacity, python-dotenv |
| Travis-CI | No Travis. |
| coveralls test coverage | No coveralls. |

## CommonCrawl Extractor with great versatility

[Documentation](https://hynky1999.github.io/CmonCrawl/)

[PyPI](https://pypi.org/project/cmoncrawl/)

Unlock the full potential of CommonCrawl data with `CmonCrawl`, a highly modular and easy-to-use extractor.

## Why Choose CmonCrawl?

`CmonCrawl` stands out from the crowd with its unique features:

- **High Modularity**: Easily create custom extractors tailored to your specific needs.

- **Comprehensive Access**: Supports all CommonCrawl access methods, including AWS Athena and the CommonCrawl Index API for querying, and S3 and the CommonCrawl API for downloading.

- **Flexible Utility**: Accessible via a Command Line Interface (CLI) or as a Software Development Kit (SDK), catering to your preferred workflow.

- **Type Safety**: Built with type safety in mind, helping you catch errors early and keep your code robust.

## Getting Started

### Installation

#### Install From PyPI

```bash
$ pip install cmoncrawl
```

#### Install From Source

```bash
$ git clone https://github.com/hynky1999/CmonCrawl
$ cd CmonCrawl
$ pip install -r requirements.txt
$ pip install .
```
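To confirm that either installation worked, the standard library is enough for a quick check. This is a small sketch, not part of `CmonCrawl`; it assumes the installed distribution is named `cmoncrawl` (as in the `pip install` command) and that the CLI entry point is `cmon`, the command used throughout this guide.

```python
import shutil
from importlib.metadata import PackageNotFoundError, version


def check_install(dist_name: str = "cmoncrawl", cli_name: str = "cmon") -> dict:
    """Report the installed package version and where the CLI lives on PATH."""
    try:
        pkg_version = version(dist_name)
    except PackageNotFoundError:
        pkg_version = None  # the distribution is not installed in this environment
    return {
        "version": pkg_version,
        "cli_path": shutil.which(cli_name),  # None if the command is not on PATH
    }


if __name__ == "__main__":
    print(check_install())
```

A `None` in either field suggests the package is not installed in the active environment or that its scripts directory is not on `PATH`.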

## Usage Guide

### Step 1: Extractor preparation

Begin by preparing your custom extractor. Obtain sample HTML files from the CommonCrawl dataset using the command:

```bash
$ cmon download --match_type=domain --limit=100 html_output example.com html
```

This downloads the first 100 HTML files from *example.com* and saves them in `html_output`.
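Before writing an extractor, it can help to skim what was downloaded. The sketch below is an illustrative helper, not part of `CmonCrawl`; it uses only the standard library's `html.parser` and assumes the downloaded files carry an `.html` extension, as the `html_output/*.html` glob in Step 4 implies.

```python
from __future__ import annotations

from html.parser import HTMLParser
from pathlib import Path


class TitleParser(HTMLParser):
    """Collect the text inside the first <title> element."""

    def __init__(self) -> None:
        super().__init__()
        self._in_title = False
        self.title: str | None = None

    def handle_starttag(self, tag, attrs):
        if tag == "title" and self.title is None:
            self._in_title = True
            self.title = ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def preview_samples(directory: str, limit: int = 10) -> list[tuple[str, str | None]]:
    """Return (file name, page title) for up to `limit` HTML files in `directory`."""
    previews = []
    for path in sorted(Path(directory).glob("*.html"))[:limit]:
        parser = TitleParser()
        parser.feed(path.read_text(encoding="utf-8", errors="replace"))
        title = parser.title.strip() if parser.title is not None else None
        previews.append((path.name, title))
    return previews
```

Calling `preview_samples("html_output")` returns `(file name, title)` pairs, with `None` for pages that have no `<title>`.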

### Step 2: Extractor creation

Create a new Python file for your extractor, such as `my_extractor.py`, and place it in the `extractors` directory. Implement your extraction logic as shown below:

```python
from bs4 import BeautifulSoup
from cmoncrawl.common.types import PipeMetadata
from cmoncrawl.processor.pipeline.extractor import BaseExtractor


class MyExtractor(BaseExtractor):
    def __init__(self):
        # You can force a specific encoding if you know it
        super().__init__(encoding=None)

    def extract_soup(self, soup: BeautifulSoup, metadata: PipeMetadata):
        # Extract the data you want from the soup
        # and return a dict with the fields you want to save
        body = soup.select_one("body")
        if body is None:
            return None
        return {
            "body": body.get_text()
        }

    # You can also override the following methods to drop files you don't want to extract.
    # Return True to keep the file, False to drop it.
    def filter_raw(self, response: str, metadata: PipeMetadata) -> bool:
        return True

    def filter_soup(self, soup: BeautifulSoup, metadata: PipeMetadata) -> bool:
        return True


# Instantiate your extractor into a module-level variable named `extractor`.
# The name must match so that the framework can find it.
extractor = MyExtractor()
```
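The hooks in the example suggest a per-document flow: `filter_raw` sees the raw response string, the page is then parsed, `filter_soup` sees the parsed document, and `extract_soup` returns the fields to save (or `None`). To make that flow concrete without installing anything, the sketch below mimics it with the standard library's `html.parser` in place of BeautifulSoup. The call order and the "`None` means skip" convention are inferred from the method names and the example above, not taken from `CmonCrawl`'s source; `BodyTextParser`, `ToyExtractor`, and `run_hooks` are hypothetical names.

```python
from __future__ import annotations

from html.parser import HTMLParser


class BodyTextParser(HTMLParser):
    """Stand-in for BeautifulSoup: collects the text inside <body>."""

    def __init__(self) -> None:
        super().__init__()
        self.depth = 0  # > 0 while inside <body>
        self.found_body = False
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "body":
            self.depth += 1
            self.found_body = True

    def handle_endtag(self, tag):
        if tag == "body" and self.depth > 0:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth > 0:
            self.chunks.append(data)


class ToyExtractor:
    """Hypothetical extractor mirroring the hooks of MyExtractor."""

    def filter_raw(self, response: str) -> bool:
        # Cheap string check: drop non-HTML payloads before parsing
        return "<html" in response.lower()

    def filter_soup(self, parsed: BodyTextParser) -> bool:
        return parsed.found_body

    def extract(self, parsed: BodyTextParser) -> dict | None:
        text = "".join(parsed.chunks).strip()
        return {"body": text} if text else None


def run_hooks(extractor: ToyExtractor, html: str) -> dict | None:
    """Assumed order: raw filter -> parse -> parsed filter -> extract."""
    if not extractor.filter_raw(html):
        return None
    parsed = BodyTextParser()
    parsed.feed(html)
    parsed.close()
    if not extractor.filter_soup(parsed):
        return None
    return extractor.extract(parsed)
```

Putting a cheap string check in `filter_raw` means unwanted documents are dropped before any parsing cost is paid, which is presumably why both hooks exist.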

### Step 3: Config creation

Set up a configuration file, `config.json`, to specify the behavior of your extractor(s):

```json
{
  "extractors_path": "./extractors",
  "routes": [
    {
      # Regexes defining which URLs this route applies to
      "regexes": [".*"],
      "extractors": [{
        "name": "my_extractor",
        # Use "since" and "to" to choose the extractor based on the date of the crawl
        # You can omit either of them
        "since": "2009-01-01",
        "to": "2025-01-01"
      }]
    }
    # More routes here (separate them with commas)
  ]
}
```

Note that `config.json` must be valid JSON, so the `#` comments shown in the example above must be removed before use.
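If you prefer to keep an annotated copy of the config, one option is to strip whole-line `#` comments before parsing. The sketch below does that, and also illustrates how the `regexes`, `since`, and `to` fields could be used to pick an extractor for a given URL and crawl date. It is a hypothetical model for understanding the config, not `CmonCrawl`'s routing code: the first-match order, the use of `re.search`, and the inclusive date bounds are all assumptions.

```python
from __future__ import annotations

import json
import re
from datetime import date


def load_annotated_config(text: str) -> dict:
    """Drop whole-line `#` comments, then parse the rest as strict JSON."""
    kept = [line for line in text.splitlines() if not line.lstrip().startswith("#")]
    return json.loads("\n".join(kept))


def pick_extractor(config: dict, url: str, crawl_date: date) -> str | None:
    """Hypothetical route selection: the first route with a regex matching
    the URL, then the first extractor whose [since, to] window (inclusive,
    open-ended when a key is omitted) contains the crawl date."""
    for route in config["routes"]:
        if not any(re.search(pattern, url) for pattern in route["regexes"]):
            continue
        for ext in route["extractors"]:
            since = date.fromisoformat(ext["since"]) if "since" in ext else date.min
            to = date.fromisoformat(ext["to"]) if "to" in ext else date.max
            if since <= crawl_date <= to:
                return ext["name"]
    return None
```

Only whole-line comments are handled; a `#` inside a string value or after a value on the same line would still break parsing, so for anything beyond quick experiments a comment-free `config.json` is simpler.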

### Step 4: Run the extractor

Test your extractor with the following command:

```bash
$ cmon extract config.json extracted_output html_output/*.html html
```

### Step 5: Full crawl and extraction

After testing, start the full crawl and extraction process:

#### 1. Retrieve a list of records to extract.

```bash
$ cmon download --match_type=domain --limit=100 dr_output example.com record
```

This downloads the first 100 records from *example.com* and saves them in `dr_output`. By default, up to 100,000 records are saved per file; you can change this with the `--max_crawls_per_file` option.
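Because records are split into files of at most `--max_crawls_per_file` entries, the number of output files is a ceiling division. A small sketch of that arithmetic, assuming every file except the last is filled completely (`output_file_count` is an illustrative name, not a `CmonCrawl` function):

```python
def output_file_count(n_records: int, max_crawls_per_file: int = 100_000) -> int:
    """Files needed when each holds at most `max_crawls_per_file` records."""
    if max_crawls_per_file <= 0:
        raise ValueError("max_crawls_per_file must be positive")
    # Integer ceiling division, avoiding floating-point rounding on large counts
    return (n_records + max_crawls_per_file - 1) // max_crawls_per_file
```

With the default of 100,000, the 100 records above fit in a single file, while 250,000 records would be split across 3 files.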

#### 2. Process the records using your custom extractor.

```bash
$ cmon extract --n_proc=4 config.json extracted_output dr_output/*.jsonl record
```

Use the `--n_proc` option to set the number of processes used for extraction. Multiprocessing works at the file level, so with a single input file only one process does any work.
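Since work is distributed per file, the number of busy processes is capped by the number of input files. The sketch below captures that and suggests a `--max_crawls_per_file` value for the download step that yields enough files to occupy every process. Both helpers are hypothetical illustrations; only the per-file behaviour comes from this guide.

```python
def busy_processes(n_proc: int, n_files: int) -> int:
    """Processes that actually receive work when parallelism is per file."""
    return max(0, min(n_proc, n_files))


def records_per_file_for(n_records: int, n_proc: int) -> int:
    """Hypothetical helper: a --max_crawls_per_file value that splits
    n_records into at least min(n_proc, n_records) files."""
    if n_proc <= 0:
        raise ValueError("n_proc must be positive")
    # Floor division guarantees n_records / per_file >= n_proc files
    return max(1, n_records // n_proc)
```

For example, downloading the 100 records from the previous step with `--max_crawls_per_file=25` should produce four files, enough for `--n_proc=4` to keep all four processes busy.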

## Handling CommonCrawl Errors

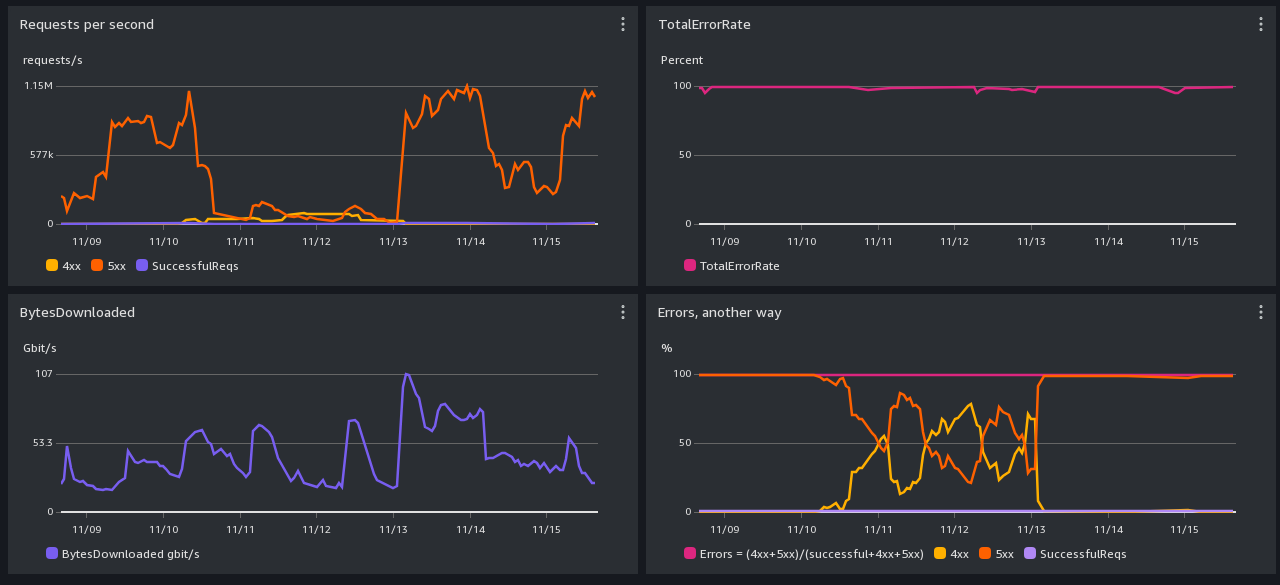

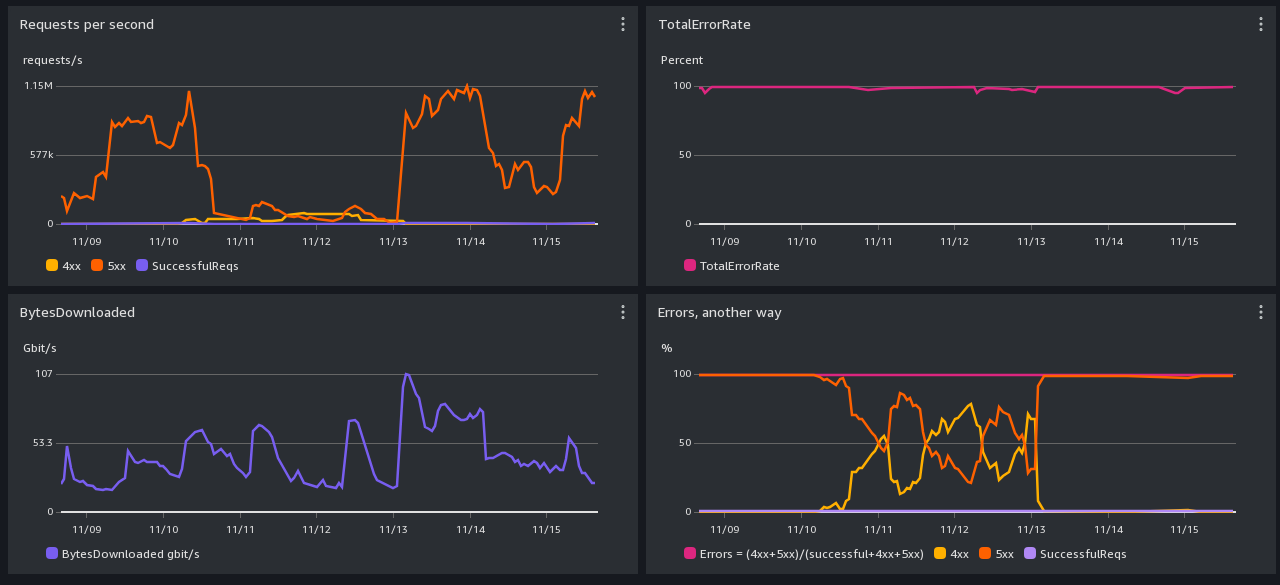

Before trying any of the strategies below, check the status of the CommonCrawl gateway and S3 buckets: an ongoing performance issue on CommonCrawl's side, rather than your request rate, may be causing the errors. CommonCrawl publishes updates on such incidents, for example [Oct-Nov 2023 Performance Issues](https://commoncrawl.org/blog/oct-nov-2023-performance-issues).

A high number of error responses usually indicates that you are sending requests too quickly. To mitigate this, try the following strategies in order:

1. **Switch to S3 Access**: Use S3 access instead of the API Gateway; S3 allows higher request rates.

2. **Regulate Request Rate**: The total number of requests per second is `n_proc * max_requests_per_process`. To reduce it:

   - Decrease the number of processes (`n_proc`).
   - Reduce the maximum number of requests per process (`max_requests_per_process`).

   Aim to keep the total request rate below 40 requests per second.

3. **Adjust Retry Settings**: If errors persist:

   - Increase `max_retry` to improve the chance of eventually retrieving the data.
   - Set a higher `sleep_base` to avoid overloading the API and to respect rate limits.
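The arithmetic behind the request-rate advice is simple enough to sketch. `total_request_rate` applies the formula from step 2, and `max_per_process` picks the largest per-process limit that keeps the total within a budget such as the 40 requests per second suggested above. `backoff_delays` is a purely hypothetical model of how a larger `sleep_base` could lengthen the waits between retries (exponential backoff, `sleep_base * 2**attempt`); `CmonCrawl`'s actual retry schedule may differ.

```python
from __future__ import annotations


def total_request_rate(n_proc: int, max_requests_per_process: int) -> int:
    """Total requests per second, per the formula in step 2."""
    return n_proc * max_requests_per_process


def max_per_process(n_proc: int, target_rate: int = 40) -> int:
    """Largest max_requests_per_process keeping the total at or under target_rate."""
    if n_proc <= 0:
        raise ValueError("n_proc must be positive")
    per_proc = target_rate // n_proc
    if per_proc < 1:
        raise ValueError("reduce n_proc: budget is below one request per process")
    return per_proc


def backoff_delays(sleep_base: float, max_retry: int) -> list[float]:
    """ASSUMED exponential backoff: wait sleep_base * 2**attempt before each retry.
    Illustrative only; not CmonCrawl's documented behaviour."""
    return [sleep_base * 2**attempt for attempt in range(max_retry)]
```

For example, with `n_proc=4`, a per-process limit of 10 gives exactly 40 requests per second, so dropping to 9 keeps the total strictly below that figure. Under the assumed backoff model, `sleep_base=1.0` with `max_retry=3` would wait 1, 2 and 4 seconds, 7 seconds in total.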

## Advanced Usage

`CmonCrawl` was designed with flexibility in mind, allowing you to tailor the framework to your needs. For distributed extraction and more advanced scenarios, refer to our [documentation](https://hynky1999.github.io/CmonCrawl/) and the [CZE-NEC project](https://github.com/hynky1999/Czech-News-Classification-dataset).

## Examples and Support

For practical examples and further assistance, visit our [examples directory](https://github.com/hynky1999/CmonCrawl/tree/main/examples).

## Contribute

Join our community of contributors on [GitHub](https://github.com/hynky1999/CmonCrawl). Your contributions are welcome!

## License

`CmonCrawl` is open-source software licensed under the MIT license.

Raw data

{

"_id": null,

"home_page": null,

"name": "CmonCrawl",

"maintainer": null,

"docs_url": null,

"requires_python": null,

"maintainer_email": null,

"keywords": "Common Crawl, Crawl, Extractor, Common Crawl Extractor, Web Crawler, Web Extractor, Web Scraper",

"author": null,

"author_email": null,

"download_url": "https://files.pythonhosted.org/packages/92/5d/dec4104edbea4dc0139228225a4625be965caba4bbf03b5ba5e0c1826593/CmonCrawl-1.1.8.tar.gz",

"platform": null,

"bugtrack_url": null,

"license": "MIT License Copyright (c) [2023] [Hynek Kydl\u00ed\u010dek] Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the \"Software\"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions: The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software. THE SOFTWARE IS PROVIDED \"AS IS\", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE. ",

"summary": null,

"version": "1.1.8",

"project_urls": {

"Source": "https://github.com/hynky1999/CmonCrawl"

},

"split_keywords": [

"common crawl",

" crawl",

" extractor",

" common crawl extractor",

" web crawler",

" web extractor",

" web scraper"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "14a736296c08c68d2f0063c5a43644cabfcd98f068787bcfef19884e417f8832",

"md5": "62de87385fd4bc626258b299d7c52e08",

"sha256": "099b69600a9f03e043b34a766702cbbb92a069c005b89ce5f6ac16b1957123c1"

},

"downloads": -1,

"filename": "CmonCrawl-1.1.8-py3-none-any.whl",

"has_sig": false,

"md5_digest": "62de87385fd4bc626258b299d7c52e08",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": null,

"size": 54475,

"upload_time": "2024-04-07T23:58:01",

"upload_time_iso_8601": "2024-04-07T23:58:01.322809Z",

"url": "https://files.pythonhosted.org/packages/14/a7/36296c08c68d2f0063c5a43644cabfcd98f068787bcfef19884e417f8832/CmonCrawl-1.1.8-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "925ddec4104edbea4dc0139228225a4625be965caba4bbf03b5ba5e0c1826593",

"md5": "2b68d1a08eac6e484d0456ca05f35282",

"sha256": "83b8f95798938382f6fc498a14f257aafa05ceb2357cce0a11f9324d8164b44a"

},

"downloads": -1,

"filename": "CmonCrawl-1.1.8.tar.gz",

"has_sig": false,

"md5_digest": "2b68d1a08eac6e484d0456ca05f35282",

"packagetype": "sdist",

"python_version": "source",

"requires_python": null,

"size": 669520,

"upload_time": "2024-04-07T23:58:03",

"upload_time_iso_8601": "2024-04-07T23:58:03.865191Z",

"url": "https://files.pythonhosted.org/packages/92/5d/dec4104edbea4dc0139228225a4625be965caba4bbf03b5ba5e0c1826593/CmonCrawl-1.1.8.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-04-07 23:58:03",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "hynky1999",

"github_project": "CmonCrawl",

"travis_ci": false,

"coveralls": false,

"github_actions": true,

"requirements": [

{

"name": "aiofiles",

"specs": [

[

"~=",

"23.2.1"

]

]

},

{

"name": "aiohttp",

"specs": [

[

"~=",

"3.9.3"

]

]

},

{

"name": "beautifulsoup4",

"specs": [

[

"~=",

"4.12.3"

]

]

},

{

"name": "pydantic",

"specs": [

[

"~=",

"2.6.4"

]

]

},

{

"name": "stomp.py",

"specs": [

[

"~=",

"8.1.0"

]

]

},

{

"name": "tqdm",

"specs": [

[

"~=",

"4.66.1"

]

]

},

{

"name": "warcio",

"specs": [

[

"~=",

"1.7.4"

]

]

},

{

"name": "aiocsv",

"specs": [

[

"~=",

"1.3.1"

]

]

},

{

"name": "aioboto3",

"specs": [

[

"~=",

"12.3.0"

]

]

},

{

"name": "tenacity",

"specs": [

[

"~=",

"8.2.3"

]

]

},

{

"name": "python-dotenv",

"specs": [

[

"==",

"1.0.0"

]

]

}

],

"lcname": "cmoncrawl"

}