<div align="center">

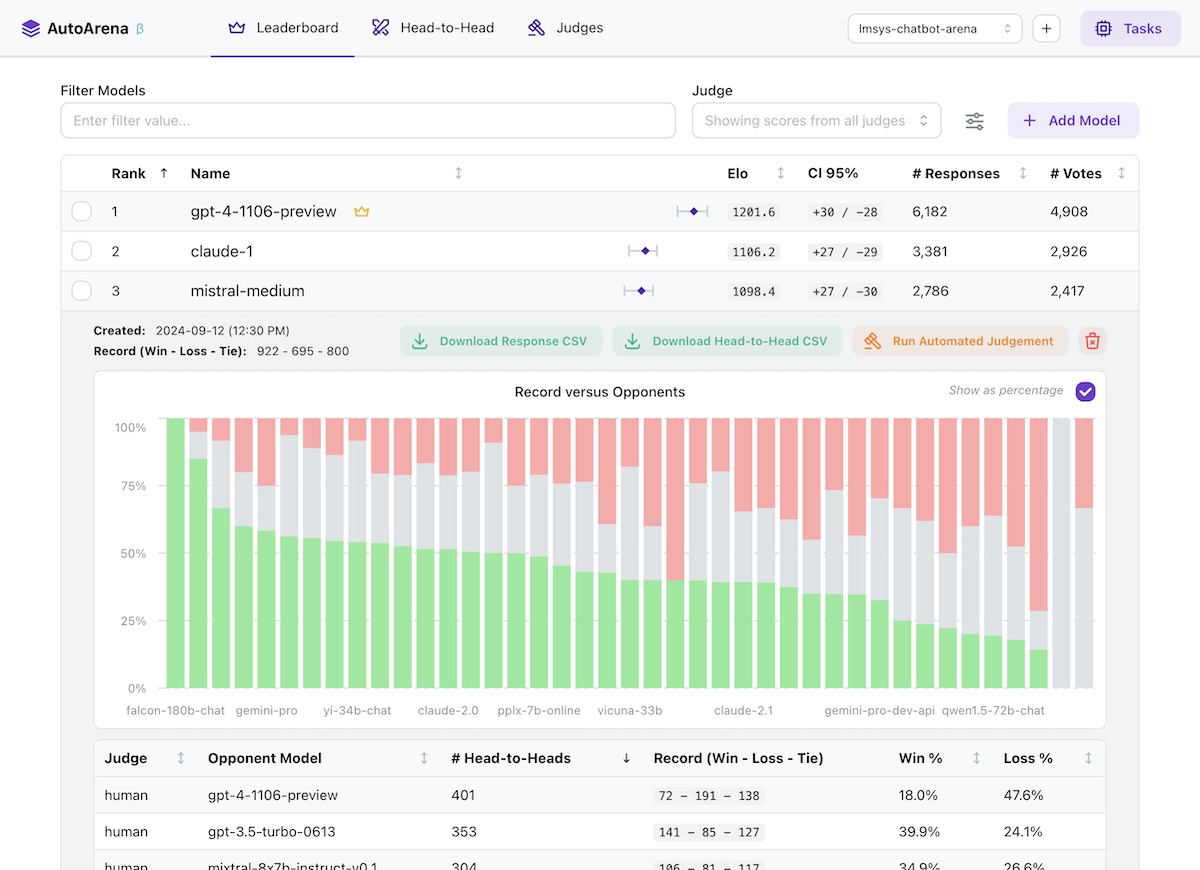

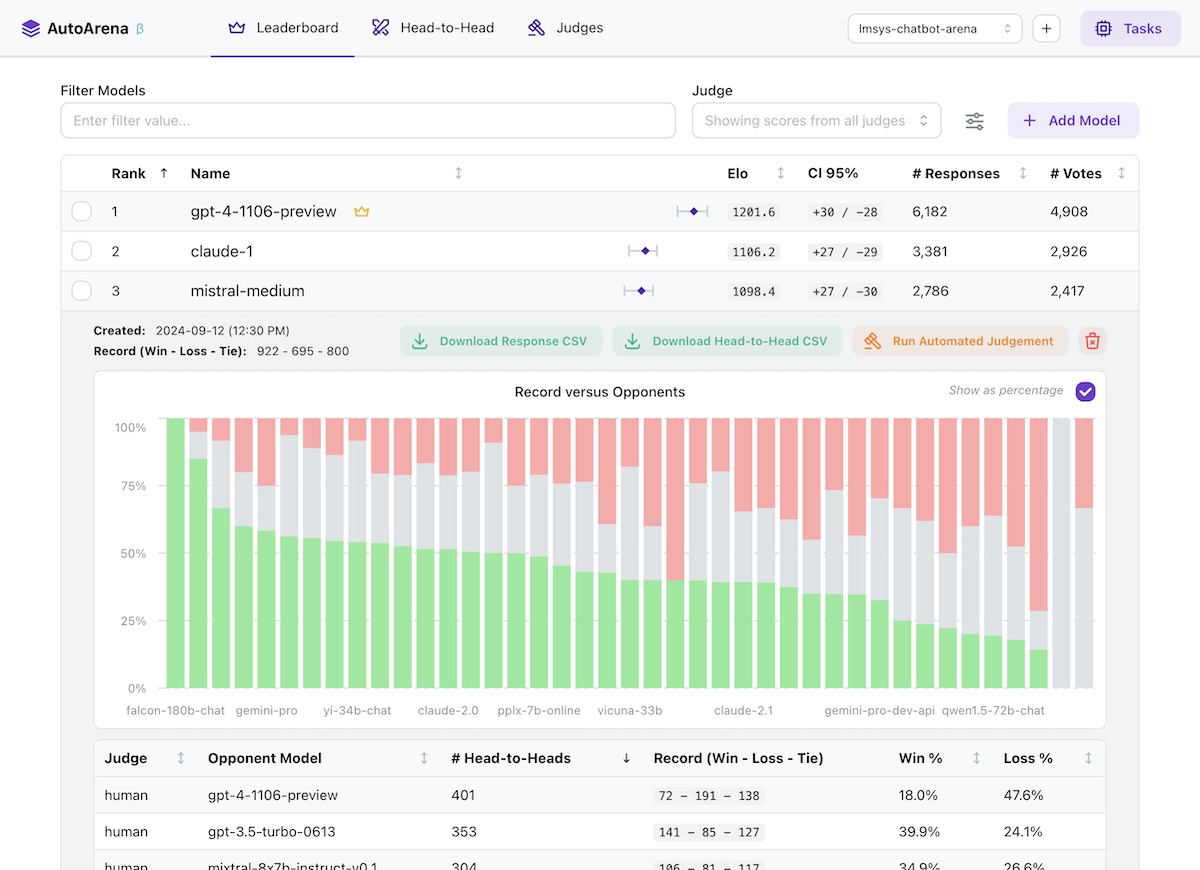

# AutoArena

**Create leaderboards ranking LLM outputs against one another using automated judge evaluation**

[](https://www.apache.org/licenses/LICENSE-2.0)

[](https://github.com/kolenaIO/autoarena/actions)

[](https://app.codecov.io/gh/kolenaIO/autoarena)

[](https://pypi.python.org/pypi/autoarena)

[](https://pypi.org/project/autoarena)

[](https://kolena-autoarena.slack.com)

</div>

---

- 🏆 Rank outputs from different LLMs, RAG setups, and prompts to find the best configuration of your system

- ⚔️ Perform automated head-to-head evaluation using judges from OpenAI, Anthropic, Cohere, and more

- 🤖 Define and run your own custom judges, connecting to internal services or implementing bespoke logic

- 💻 Run application locally, getting full control over your environment and data

[](https://www.youtube.com/watch?v=GMuQPwo-JdU)

## 🤔 Why Head-to-Head Evaluation?

- LLMs are better at judging responses head-to-head than they are in isolation

([arXiv:2408.08688](https://www.arxiv.org/abs/2408.08688v3)) — leaderboard rankings computed using Elo scores from

many automated side-by-side comparisons should be more trustworthy than leaderboards using metrics computed on each

model's responses independently!

- The [LMSYS Chatbot Arena](https://lmarena.ai/) has replaced benchmarks for many people as the trusted true leaderboard

for foundation model performance ([arXiv:2403.04132](https://arxiv.org/abs/2403.04132)). Why not apply this approach

to your own foundation model selection, RAG system setup, or prompt engineering efforts?

- Using a "jury" of multiple smaller models from different model families like `gpt-4o-mini`, `command-r`, and

`claude-3-haiku` generally yields better accuracy than a single frontier judge like `gpt-4o` — while being faster and

_much_ cheaper to run. AutoArena is built around this technique, called PoLL: **P**anel **o**f **LL**M evaluators

([arXiv:2404.18796](https://arxiv.org/abs/2404.18796)).

- Automated side-by-side comparison of model outputs is one of the most prevalent evaluation practices

([arXiv:2402.10524](https://arxiv.org/abs/2402.10524)) — AutoArena makes this process easier than ever to get up

and running.

## 🔥 Getting Started

Install from [PyPI](https://pypi.org/project/autoarena/):

```shell

pip install autoarena

```

Run as a module and visit [localhost:8899](http://localhost:8899/) in your browser:

```shell

python -m autoarena

```

With the application running, getting started is simple:

1. Create a project via the UI.

1. Add responses from a model by selecting a CSV file with `prompt` and `response` columns.

1. Configure an automated judge via the UI. Note that most judges require credentials, e.g. `X_API_KEY` in the

environment where you're running AutoArena.

1. Add responses from a second model to kick off an automated judging task using the judges you configured in the

previous step to decide which of the models you've uploaded provided a better `response` to a given `prompt`.

That's it! After these steps you're fully set up for automated evaluation on AutoArena.

### 📄 Formatting Your Data

AutoArena requires two pieces of information to test a model: the input `prompt` and corresponding model `response`.

- `prompt`: the inputs to your model. When uploading responses, any other models that have been run on the same prompts

are matched and evaluated using the automated judges you have configured.

- `response`: the output from your model. Judges decide which of two models produced a better response, given the same

prompt.

### 📂 Data Storage

Data is stored in `./data/<project>.sqlite` files in the directory where you invoked AutoArena. See

[`data/README.md`](./data/README.md) for more details on data storage in AutoArena.

## 🦾 Development

AutoArena uses [uv](https://github.com/astral-sh/uv) to manage dependencies. To set up this repository for development,

run:

```shell

uv venv && source .venv/bin/activate

uv pip install --all-extras -r pyproject.toml

uv tool run pre-commit install

uv run python3 -m autoarena serve --dev

```

To run AutoArena for development, you will need to run both the backend and frontend service:

- Backend: `uv run python3 -m autoarena serve --dev` (the `--dev`/`-d` flag enables automatic service reloading when

source files change)

- Frontend: see [`ui/README.md`](./ui/README.md)

To build a release tarball in the `./dist` directory:

```shell

./scripts/build.sh

```

Raw data

{

"_id": null,

"home_page": null,

"name": "autoarena",

"maintainer": null,

"docs_url": null,

"requires_python": "<4,>=3.9",

"maintainer_email": null,

"keywords": "AI, AI Evaluation, AI Testing, Artificial Intelligence, LLM, LLM Evaluation, ML, Machine Learning",

"author": null,

"author_email": "Kolena Engineering <eng@kolena.com>",

"download_url": "https://files.pythonhosted.org/packages/cb/1b/9f0533df0b0997776a30b4d7fa03de7e3ef7f98b69e37a4079e177e7b09e/autoarena-0.1.0b11.tar.gz",

"platform": null,

"description": "<div align=\"center\">\n\n# AutoArena\n\n**Create leaderboards ranking LLM outputs against one another using automated judge evaluation**\n\n[](https://www.apache.org/licenses/LICENSE-2.0)\n[](https://github.com/kolenaIO/autoarena/actions)\n[](https://app.codecov.io/gh/kolenaIO/autoarena)\n[](https://pypi.python.org/pypi/autoarena)\n[](https://pypi.org/project/autoarena)\n[](https://kolena-autoarena.slack.com)\n\n</div>\n\n---\n\n- \ud83c\udfc6 Rank outputs from different LLMs, RAG setups, and prompts to find the best configuration of your system\n- \u2694\ufe0f Perform automated head-to-head evaluation using judges from OpenAI, Anthropic, Cohere, and more\n- \ud83e\udd16 Define and run your own custom judges, connecting to internal services or implementing bespoke logic\n- \ud83d\udcbb Run application locally, getting full control over your environment and data\n\n[](https://www.youtube.com/watch?v=GMuQPwo-JdU)\n\n## \ud83e\udd14 Why Head-to-Head Evaluation?\n\n- LLMs are better at judging responses head-to-head than they are in isolation\n ([arXiv:2408.08688](https://www.arxiv.org/abs/2408.08688v3)) \u2014 leaderboard rankings computed using Elo scores from\n many automated side-by-side comparisons should be more trustworthy than leaderboards using metrics computed on each\n model's responses independently!\n- The [LMSYS Chatbot Arena](https://lmarena.ai/) has replaced benchmarks for many people as the trusted true leaderboard\n for foundation model performance ([arXiv:2403.04132](https://arxiv.org/abs/2403.04132)). Why not apply this approach\n to your own foundation model selection, RAG system setup, or prompt engineering efforts?\n- Using a \"jury\" of multiple smaller models from different model families like `gpt-4o-mini`, `command-r`, and\n `claude-3-haiku` generally yields better accuracy than a single frontier judge like `gpt-4o` \u2014 while being faster and\n _much_ cheaper to run. AutoArena is built around this technique, called PoLL: **P**anel **o**f **LL**M evaluators\n ([arXiv:2404.18796](https://arxiv.org/abs/2404.18796)).\n- Automated side-by-side comparison of model outputs is one of the most prevalent evaluation practices\n ([arXiv:2402.10524](https://arxiv.org/abs/2402.10524)) \u2014 AutoArena makes this process easier than ever to get up\n and running.\n\n## \ud83d\udd25 Getting Started\n\nInstall from [PyPI](https://pypi.org/project/autoarena/):\n\n```shell\npip install autoarena\n```\n\nRun as a module and visit [localhost:8899](http://localhost:8899/) in your browser:\n\n```shell\npython -m autoarena\n```\n\nWith the application running, getting started is simple:\n\n1. Create a project via the UI.\n1. Add responses from a model by selecting a CSV file with `prompt` and `response` columns.\n1. Configure an automated judge via the UI. Note that most judges require credentials, e.g. `X_API_KEY` in the\n environment where you're running AutoArena.\n1. Add responses from a second model to kick off an automated judging task using the judges you configured in the\n previous step to decide which of the models you've uploaded provided a better `response` to a given `prompt`.\n\nThat's it! After these steps you're fully set up for automated evaluation on AutoArena.\n\n### \ud83d\udcc4 Formatting Your Data\n\nAutoArena requires two pieces of information to test a model: the input `prompt` and corresponding model `response`.\n\n- `prompt`: the inputs to your model. When uploading responses, any other models that have been run on the same prompts\n are matched and evaluated using the automated judges you have configured.\n- `response`: the output from your model. Judges decide which of two models produced a better response, given the same\n prompt.\n\n### \ud83d\udcc2 Data Storage\n\nData is stored in `./data/<project>.sqlite` files in the directory where you invoked AutoArena. See\n[`data/README.md`](./data/README.md) for more details on data storage in AutoArena.\n\n## \ud83e\uddbe Development\n\nAutoArena uses [uv](https://github.com/astral-sh/uv) to manage dependencies. To set up this repository for development,\nrun:\n\n```shell\nuv venv && source .venv/bin/activate\nuv pip install --all-extras -r pyproject.toml\nuv tool run pre-commit install\nuv run python3 -m autoarena serve --dev\n```\n\nTo run AutoArena for development, you will need to run both the backend and frontend service:\n\n- Backend: `uv run python3 -m autoarena serve --dev` (the `--dev`/`-d` flag enables automatic service reloading when\n source files change)\n- Frontend: see [`ui/README.md`](./ui/README.md)\n\nTo build a release tarball in the `./dist` directory:\n\n```shell\n./scripts/build.sh\n```\n",

"bugtrack_url": null,

"license": null,

"summary": null,

"version": "0.1.0b11",

"project_urls": {

"Homepage": "https://www.kolena.com/autoarena",

"Repository": "https://github.com/kolenaIO/autoarena.git"

},

"split_keywords": [

"ai",

" ai evaluation",

" ai testing",

" artificial intelligence",

" llm",

" llm evaluation",

" ml",

" machine learning"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "cb1b9f0533df0b0997776a30b4d7fa03de7e3ef7f98b69e37a4079e177e7b09e",

"md5": "b0b39549a91eecf804d2dc318eb972a6",

"sha256": "07161e34abb6a0d482a8e15c444707044464f63ed9d178743a9b7827a6f91d60"

},

"downloads": -1,

"filename": "autoarena-0.1.0b11.tar.gz",

"has_sig": false,

"md5_digest": "b0b39549a91eecf804d2dc318eb972a6",

"packagetype": "sdist",

"python_version": "source",

"requires_python": "<4,>=3.9",

"size": 1258106,

"upload_time": "2024-10-07T13:52:19",

"upload_time_iso_8601": "2024-10-07T13:52:19.639260Z",

"url": "https://files.pythonhosted.org/packages/cb/1b/9f0533df0b0997776a30b4d7fa03de7e3ef7f98b69e37a4079e177e7b09e/autoarena-0.1.0b11.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-10-07 13:52:19",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "kolenaIO",

"github_project": "autoarena",

"travis_ci": false,

"coveralls": false,

"github_actions": true,

"lcname": "autoarena"

}