| Field | Value |
| --- | --- |
| Name | datadreamer |
| Version | 0.2.0 |
| home_page | None |
| Summary | A library for dataset generation and knowledge extraction from foundation computer vision models. |
| upload_time | 2024-11-12 12:22:44 |
| maintainer | None |
| docs_url | None |
| author | None |
| requires_python | >=3.8 |
| license | Apache License, Version 2.0 (Copyright 2023 Luxonis) |
| keywords | computer vision, AI, machine learning, generative models |
| requirements | torch, torchvision, transformers, diffusers, compel, tqdm, Pillow, numpy, matplotlib, opencv-python, accelerate, scipy, bitsandbytes, nltk, luxonis-ml, python-box, gcsfs |

# DataDreamer

[License: Apache 2.0](https://opensource.org/licenses/Apache-2.0)

[Open in Colab](https://colab.research.google.com/github/luxonis/datadreamer/blob/main/examples/generate_dataset_and_train_yolo.ipynb)

[YouTube video](https://www.youtube.com/watch?v=6FcSz3uFqRI)

[Blog post](https://discuss.luxonis.com/blog/3272-datadreamer-creating-custom-datasets-made-easy)

<a name="quickstart"></a>

## 🚀 Quickstart

To generate your dataset with custom classes, you need to execute only two commands:

```bash
pip install datadreamer
datadreamer --class_names person moon robot
```

<a name ="overview"></a>

## 🌟 Overview

<img src='https://raw.githubusercontent.com/luxonis/datadreamer/main/images/datadreamer_scheme.gif' align="center">

`DataDreamer` is a toolkit for building edge AI models, even when little or no training data is available at the start. Its distinguishing features include:

- **Synthetic Data Generation**: Remove the dependency on large, pre-collected datasets for AI training. DataDreamer lets users generate synthetic datasets from scratch, using generative models capable of producing high-quality, diverse images.

- **Knowledge Extraction from Foundation Models**: `DataDreamer` leverages the knowledge embedded in large pre-trained "foundation models" and transfers it to smaller, custom-built models, significantly improving their capabilities.

- **Efficient and Potent Models**: The primary objective of `DataDreamer` is to enable the creation of compact models that are both size-efficient for integration into any device and robust in performance for specialized tasks.

## 📜 Table of contents

- [🚀 Quickstart](#quickstart)
- [🌟 Overview](#overview)
- [🛠️ Features](#features)
- [💻 Installation](#installation)
- [⚙️ Hardware Requirements](#hardware-requirements)
- [📋 Usage](#usage)
  - [🎯 Main Parameters](#main-parameters)
  - [🔧 Additional Parameters](#additional-parameters)
  - [🤖 Available Models](#available-models)
  - [💡 Example](#example)
  - [📦 Output](#output)
  - [📝 Annotations Format](#annotations-format)
- [⚠️ Limitations](#limitations)
- [📄 License](#license)
- [🙏 Acknowledgements](#acknowledgements)

<a name="features"></a>

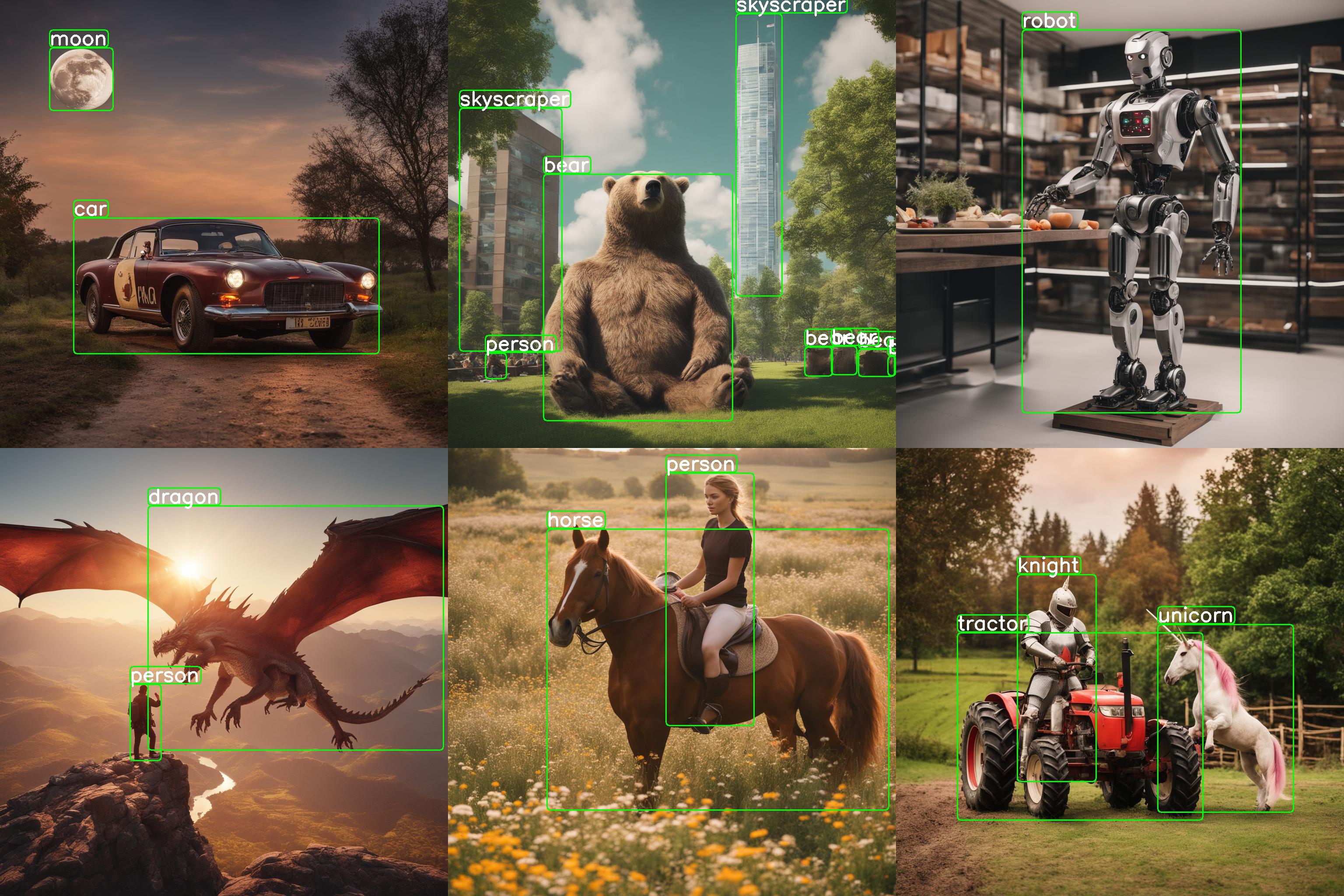

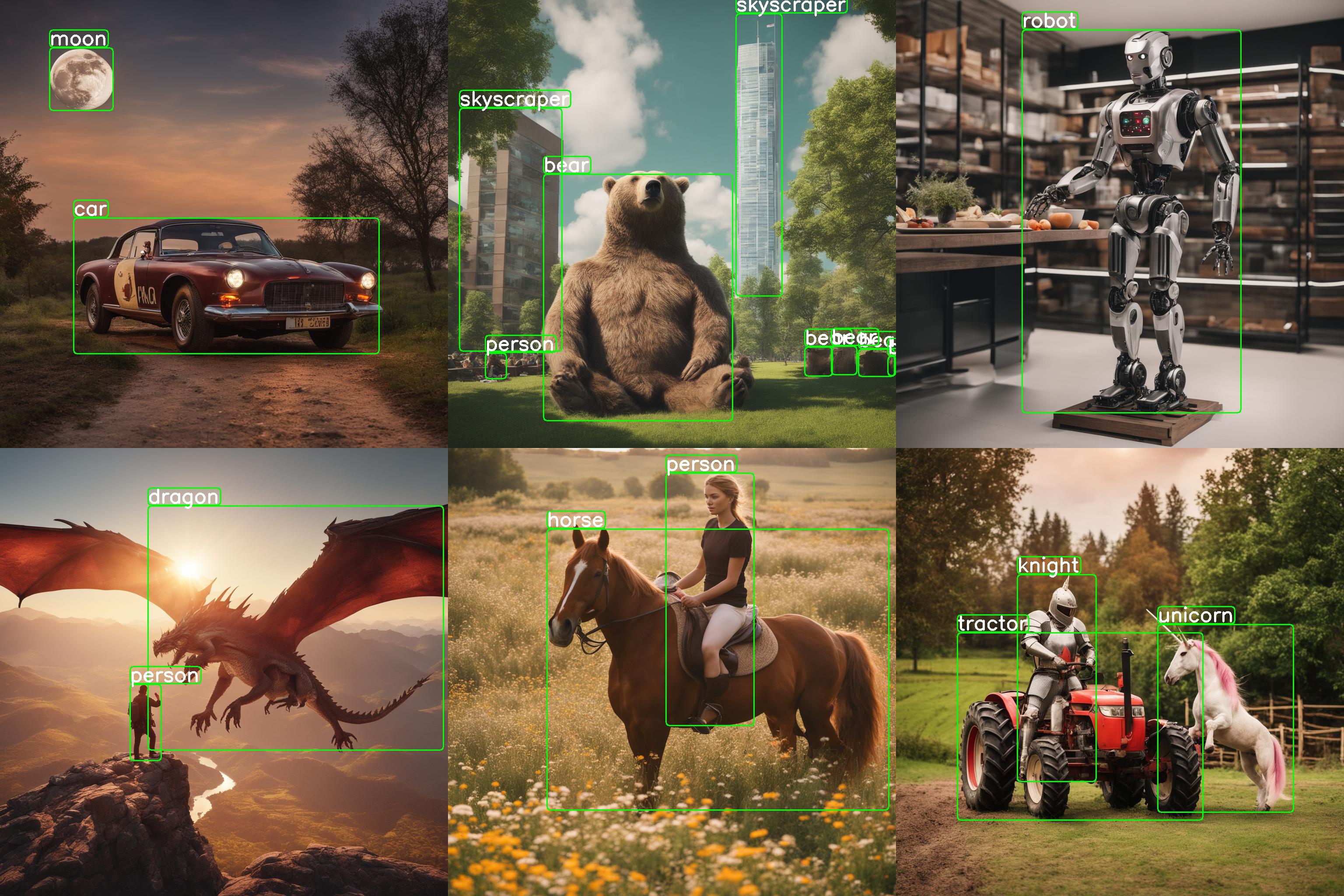

## 🛠️ Features

- **Prompt Generation**: Automate the creation of image prompts using powerful language models.

*Provided class names: \["horse", "robot"\]*

*Generated prompt: "A photo of a horse and a robot coexisting peacefully in the midst of a serene pasture."*

- **Image Generation**: Generate synthetic datasets with state-of-the-art generative models.

- **Dataset Annotation**: Leverage foundation models to label datasets automatically.

- **Edge Model Training**: Train efficient small-scale neural networks for edge deployment (not part of this library).

<img src="https://raw.githubusercontent.com/luxonis/datadreamer/main/images/generated_image.jpg" width="400"><img src="https://raw.githubusercontent.com/luxonis/datadreamer/main/images/annotated_image.jpg" width="400">

<a name="installation"></a>

## 💻 Installation

There are two ways to install the `datadreamer` library:

**Using pip**:

To install with pip:

```bash
pip install datadreamer
```

**Using Docker (for Linux/Windows)**:

Pull the Docker image from GHCR:

```bash
docker pull ghcr.io/luxonis/datadreamer:latest
```

Or build the Docker image from source:

```bash
# Clone the repository
git clone https://github.com/luxonis/datadreamer.git
cd datadreamer

# Build Docker Image
docker build -t datadreamer .
```

**Run the Docker container** (the commands below use the GHCR image; if you built the image from source, replace `ghcr.io/luxonis/datadreamer:latest` with `datadreamer`):

Run on CPU:

```bash
docker run --rm -v "$(pwd):/app" ghcr.io/luxonis/datadreamer:latest --save_dir generated_dataset --device cpu
```

Run on GPU (make sure nvidia-docker, now the NVIDIA Container Toolkit, is installed):

```bash
docker run --rm --gpus all -v "$(pwd):/app" ghcr.io/luxonis/datadreamer:latest --save_dir generated_dataset --device cuda
```

These commands mount the current directory (`$(pwd)`) to the `/app` directory inside the container, so the container can access files on your local machine.

<a name="hardware-requirements"></a>

## ⚙️ Hardware Requirements

To ensure optimal performance and compatibility with the libraries used in this project, the following hardware specifications are recommended:

- `GPU`: A CUDA-compatible GPU with a minimum of 16 GB memory. This is essential for libraries like `torch`, `torchvision`, `transformers`, and `diffusers`, which leverage CUDA for accelerated computing in machine learning and image processing tasks.

- `RAM`: At least 16 GB of system RAM, although more (32 GB or above) is beneficial for handling large datasets and intensive computations.
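Before choosing between `--device cuda` and `--device cpu`, it can help to check what hardware PyTorch actually sees. The sketch below is illustrative only. `pick_device` is a hypothetical helper and is not part of `datadreamer`. When `torch` is installed, it uses the standard `torch.cuda.is_available()` and `torch.cuda.get_device_properties()` calls.

```python
import importlib.util
import warnings


def pick_device(recommended_gb: float = 16.0) -> str:
    """Suggest a value for --device (hypothetical helper, not part of datadreamer)."""
    # Fall back to CPU when torch is not installed at all.
    if importlib.util.find_spec("torch") is None:
        return "cpu"
    import torch

    # No usable CUDA GPU: generation would have to run (slowly) on the CPU.
    if not torch.cuda.is_available():
        return "cpu"

    # total_memory is reported in bytes; convert to decimal GB.
    total_gb = torch.cuda.get_device_properties(0).total_memory / 1e9
    if total_gb < recommended_gb:
        # Cards sold as "16 GB" may report slightly less, so this is only a warning.
        warnings.warn(
            f"GPU 0 reports {total_gb:.1f} GB; about {recommended_gb:.0f} GB is recommended."
        )
    return "cuda"


if __name__ == "__main__":
    print(f"--device {pick_device()}")
```

The helper deliberately does not switch to CPU when the GPU is smaller than recommended. Whether a given card is enough depends on the chosen generator and batch sizes.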

<a name="usage"></a>

## 📋 Usage

The `datadreamer/pipelines/generate_dataset_from_scratch.py` script (exposed as the `datadreamer` command) generates images containing the specified objects and annotates them. Depending on the task, the annotations are bounding boxes, image-level class labels, or instance masks.

Run the following command in your terminal to use the script:

```bash
datadreamer --save_dir <directory> --class_names <objects> --prompts_number <number> [additional options]
```

Alternatively, use a `.yaml` config file:

```bash
datadreamer --config <path-to-config>
```

<a name="main-parameters"></a>

### 🎯 Main Parameters

- `--save_dir` (required): Path to the directory for saving generated images and annotations.

- `--class_names` (required): Space-separated list of object names for image generation and annotation. Example: `person moon robot`.

- `--prompts_number` (optional): Number of prompts to generate for each object. Defaults to `10`.

- `--annotate_only` (optional): Only annotate existing images without generating new ones; the prompt and image generators are skipped. Defaults to `False`.
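If you drive the CLI from Python, for example from a notebook, one option is to assemble the argument list yourself and pass it to `subprocess`. This is a hedged sketch, not the library's API. `build_datadreamer_cmd` is a hypothetical helper that uses only the three value-taking flags documented above, and it assumes `datadreamer` is on your `PATH`.

```python
import shlex
from typing import List, Optional, Sequence


def build_datadreamer_cmd(
    save_dir: str,
    class_names: Sequence[str],
    prompts_number: Optional[int] = None,
) -> List[str]:
    """Build an argv list for the datadreamer CLI (hypothetical helper)."""
    if not class_names:
        raise ValueError("at least one class name is required")
    # --class_names takes a space-separated list, so each name is its own argv item.
    cmd = ["datadreamer", "--save_dir", save_dir, "--class_names", *class_names]
    if prompts_number is not None:
        cmd += ["--prompts_number", str(prompts_number)]
    return cmd


if __name__ == "__main__":
    cmd = build_datadreamer_cmd("generated_dataset", ["person", "moon", "robot"], 20)
    # shlex.join (Python 3.8+) quotes arguments for display; the list itself
    # can be passed directly to subprocess.run(cmd, check=True).
    print(shlex.join(cmd))
```

Passing a list instead of a single shell string avoids quoting problems when `save_dir` contains spaces.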

<a name="additional-parameters"></a>

### 🔧 Additional Parameters

- `--task`: Choose between detection, classification and instance segmentation. Default is `detection`.

- `--dataset_format`: Format of the dataset. Defaults to `raw`. Supported values: `raw`, `yolo`, `coco`, `luxonis-dataset`, `cls-single`.

- `--split_ratios`: Split ratios for train, validation, and test sets. Defaults to `[0.8, 0.1, 0.1]`.

- `--num_objects_range`: Minimum and maximum number of objects per prompt. Default is 1 to 3 (e.g., `--num_objects_range 1 3`).

- `--prompt_generator`: Choose between `simple`, `lm` (Mistral-7B), `tiny` (TinyLlama), and `qwen2` (Qwen2.5 LM). Default is `qwen2`.

- `--image_generator`: Choose image generator, e.g., `sdxl`, `sdxl-turbo` or `sdxl-lightning`. Default is `sdxl-turbo`.

- `--image_annotator`: Image annotator to use: `owlv2` for object detection, `clip` for image classification, or `owlv2-slimsam` for instance segmentation. Default is `owlv2`.

- `--conf_threshold`: Confidence threshold for annotation. Default is `0.15`.

- `--annotation_iou_threshold`: Intersection over Union (IoU) threshold for annotation. Default is `0.2`.

- `--prompt_prefix`: Prefix to add to every image generation prompt. Default is `""`.

- `--prompt_suffix`: Suffix to add to every image generation prompt, e.g., for adding details like resolution. Default is `", hd, 8k, highly detailed"`.

- `--negative_prompt`: Negative prompts to guide the generation away from certain features. Default is `"cartoon, blue skin, painting, scrispture, golden, illustration, worst quality, low quality, normal quality:2, unrealistic dream, low resolution, static, sd character, low quality, low resolution, greyscale, monochrome, nose, cropped, lowres, jpeg artifacts, deformed iris, deformed pupils, bad eyes, semi-realistic worst quality, bad lips, deformed mouth, deformed face, deformed fingers, bad anatomy"`.

- `--use_tta`: Toggle test time augmentation for object detection. Default is `False`.

- `--synonym_generator`: Enhance class names with synonyms. Default is `none`. Other options are `llm`, `wordnet`.

- `--use_image_tester`: Use image tester for image generation. Default is `False`.

- `--image_tester_patience`: Patience level for image tester. Default is `1`.

- `--lm_quantization`: Quantization to use for Mistral language model. Choose between `none` and `4bit`. Default is `none`.

- `--annotator_size`: Size of the annotator model to use. Choose between `base` and `large`. Default is `base`.

- `--disable_lm_filter`: Use only a bad word list for profanity filtering. Default is `False`.

- `--keep_unlabeled_images`: Whether to keep images without any annotations. Default is `False`.

- `--batch_size_prompt`: Batch size for prompt generation. Default is `64`.

- `--batch_size_annotation`: Batch size for annotation. Default is `1`.

- `--batch_size_image`: Batch size for image generation. Default is `1`.

- `--device`: Choose between `cuda` and `cpu`. Default is `cuda`.

- `--seed`: Set a random seed for image and prompt generation. Default is `42`.

- `--config`: A path to an optional `.yaml` config file specifying the pipeline's arguments.
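The `--split_ratios` arithmetic is simple. With the default `[0.8, 0.1, 0.1]`, 100 images become 80 train, 10 validation, and 10 test images. The sketch below shows one common way to do this: largest-remainder rounding followed by a seeded shuffle. It illustrates the idea under that assumption and is not `datadreamer`'s own splitting code. `split_counts` and `split_items` are hypothetical names, and the seed of 42 merely mirrors the `--seed` default.

```python
import math
import random
from typing import Dict, List, Sequence, TypeVar

T = TypeVar("T")


def split_counts(n: int, ratios: Sequence[float] = (0.8, 0.1, 0.1)) -> List[int]:
    """Split n items by ratio so the counts always sum to n (largest-remainder rounding)."""
    if abs(sum(ratios) - 1.0) > 1e-6:
        raise ValueError("split ratios must sum to 1")
    exact = [n * r for r in ratios]
    counts = [math.floor(x) for x in exact]
    # Hand out the items lost to flooring, largest fractional part first.
    leftover = n - sum(counts)
    by_remainder = sorted(range(len(ratios)), key=lambda i: exact[i] - counts[i], reverse=True)
    for i in by_remainder[:leftover]:
        counts[i] += 1
    return counts


def split_items(
    items: Sequence[T], ratios: Sequence[float] = (0.8, 0.1, 0.1), seed: int = 42
) -> Dict[str, List[T]]:
    """Shuffle deterministically, then slice into train/val/test."""
    if len(ratios) != 3:
        raise ValueError("expected three ratios: train, val, test")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train, n_val, _ = split_counts(len(shuffled), ratios)
    return {
        "train": shuffled[:n_train],
        "val": shuffled[n_train : n_train + n_val],
        "test": shuffled[n_train + n_val :],
    }


if __name__ == "__main__":
    print(split_counts(100))  # [80, 10, 10]
    print(split_counts(7))    # [5, 1, 1]
```

Largest-remainder rounding matters for small datasets. Naively flooring 7 × 0.1 would give empty validation and test sets.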

<a name="available-models"></a>

### 🤖 Available Models

| Model Category    | Model Names                                                                           | Description/Notes                       |
| ----------------- | ------------------------------------------------------------------------------------- | --------------------------------------- |
| Prompt Generation | [Mistral-7B-Instruct-v0.1](https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.1) | Semantically rich prompts               |
|                   | [TinyLlama-1.1B-Chat-v1.0](https://huggingface.co/TinyLlama/TinyLlama-1.1B-Chat-v1.0) | Tiny LM                                 |
|                   | [Qwen2.5-1.5B-Instruct](https://huggingface.co/Qwen/Qwen2.5-1.5B-Instruct)            | Qwen2.5 LM                              |
|                   | Simple random generator                                                               | Joins randomly chosen object names      |
| Profanity Filter  | [Qwen2.5-1.5B-Instruct](https://huggingface.co/Qwen/Qwen2.5-1.5B-Instruct)            | Fast and accurate LM profanity filter   |
| Image Generation  | [SDXL-1.0](https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0)           | Slow and accurate (1024x1024 images)    |
|                   | [SDXL-Turbo](https://huggingface.co/stabilityai/sdxl-turbo)                           | Fast and less accurate (512x512 images) |
|                   | [SDXL-Lightning](https://huggingface.co/ByteDance/SDXL-Lightning)                     | Fast and accurate (1024x1024 images)    |
| Image Annotation  | [OWLv2](https://huggingface.co/google/owlv2-base-patch16-ensemble)                    | Open-vocabulary object detector         |
|                   | [CLIP](https://huggingface.co/openai/clip-vit-base-patch32)                           | Zero-shot image classification          |
|                   | [SlimSAM](https://huggingface.co/Zigeng/SlimSAM-uniform-50)                           | Zero-shot instance segmentation         |
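The table describes the `simple` prompt generator only as joining randomly chosen object names. A minimal sketch of that idea, combined with `--num_objects_range` and a fixed seed, is shown below. The `"A photo of ..."` template and the function names are assumptions made for illustration; the library's actual template may differ.

```python
import random
from typing import List, Sequence, Tuple


def join_objects(names: Sequence[str]) -> str:
    """'a person', 'a person and a robot', 'a person, a moon and a robot'."""
    phrases = [f"a {name}" for name in names]  # naive article choice, fine for a sketch
    if len(phrases) == 1:
        return phrases[0]
    return ", ".join(phrases[:-1]) + " and " + phrases[-1]


def simple_prompts(
    class_names: Sequence[str],
    prompts_number: int,
    num_objects_range: Tuple[int, int] = (1, 3),
    seed: int = 42,
) -> List[Tuple[List[str], str]]:
    """Return (objects, prompt) pairs built from randomly chosen class names (hypothetical)."""
    lo, hi = num_objects_range
    if not 1 <= lo <= hi:
        raise ValueError("num_objects_range must satisfy 1 <= min <= max")
    if lo > len(class_names):
        raise ValueError("not enough class names for the minimum object count")
    rng = random.Random(seed)  # seeded so the same inputs give the same prompts
    prompts = []
    for _ in range(prompts_number):
        k = rng.randint(lo, min(hi, len(class_names)))  # inclusive on both ends
        objects = rng.sample(list(class_names), k)      # k distinct classes
        prompts.append((objects, f"A photo of {join_objects(objects)}."))
    return prompts


if __name__ == "__main__":
    for objects, prompt in simple_prompts(["person", "moon", "robot"], 3):
        print(objects, "->", prompt)
```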

<a name="example"></a>

### 💡 Example

```bash
datadreamer --save_dir path/to/save_directory --class_names person moon robot --prompts_number 20 --prompt_generator simple --num_objects_range 1 3 --image_generator sdxl-turbo
```

or using a `.yaml` config file (arguments given on the command line override the corresponding values in the config file):

```bash
datadreamer --save_dir path/to/save_directory --config configs/det_config.yaml
```

This command generates images for the specified objects, saving them and their annotations in the given directory. The script allows customization of the generation process through various parameters, adapting to different needs and hardware configurations.

See the `configs/` folder for example `.yaml` config files.
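The precedence rule described in the example amounts to a dictionary merge in which only explicitly provided flags win over the config file. The sketch below is not the library's own parsing code. It assumes a flat config whose keys match the flag names without the leading `--`, and it assumes unset flags are represented as `None`. In practice the config dict could come from PyYAML's `yaml.safe_load`.

```python
from typing import Any, Dict, Mapping, Optional


def merge_args(config: Mapping[str, Any], cli: Mapping[str, Optional[Any]]) -> Dict[str, Any]:
    """Start from the config file values, then apply flags given on the command line."""
    merged = dict(config)
    for key, value in cli.items():
        if value is not None:  # None = flag not passed, so keep the config value
            merged[key] = value
    return merged


if __name__ == "__main__":
    # Hypothetical contents of a config file (e.g. loaded with yaml.safe_load).
    config = {"save_dir": "from_config", "prompts_number": 10, "image_generator": "sdxl-turbo"}
    # Only --save_dir was given on the command line.
    cli = {"save_dir": "path/to/save_directory", "prompts_number": None}
    print(merge_args(config, cli))
```

With this design, a boolean flag explicitly set to `False` on the command line still overrides the config, because only `None` counts as "not provided".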

<a name="output"></a>

### 📦 Output

The dataset has two primary components: the images and their corresponding annotations, which are stored as JSON files.

```text
save_dir/
│
├── image_1.jpg
├── image_2.jpg
├── ...
├── image_n.jpg
├── prompts.json
└── annotations.json
```

<a name="annotations-format"></a>

### 📝 Annotations Format

1. Detection Annotations (detection_annotations.json):

- Each entry corresponds to an image and contains bounding boxes and labels for objects in the image.

- Format:

```json
{
  "image_path": {
    "boxes": [[x_min, y_min, x_max, y_max], ...],
    "labels": [label_index, ...]
  },
  ...
  "class_names": ["class1", "class2", ...]
}
```

2. Classification Annotations (classification_annotations.json):

- Each entry corresponds to an image and contains labels for the image.

- Format:

```json
{
  "image_path": {
    "labels": [label_index, ...]
  },
  ...
  "class_names": ["class1", "class2", ...]
}
```

3. Instance Segmentation Annotations (instance_segmentation_annotations.json):

- Each entry corresponds to an image and contains bounding boxes, masks and labels for objects in the image.

- Format:

```json
{
  "image_path": {
    "boxes": [[x_min, y_min, x_max, y_max], ...],
    "masks": [[[x0, y0], [x1, y1], ...], [[x0, y0], [x1, y1], ...], ...],
    "labels": [label_index, ...]
  },
  ...
  "class_names": ["class1", "class2", ...]
}
```
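The three formats above share one layout: a dictionary keyed by image path, plus a `class_names` entry that maps label indices to names. Below is a minimal reader for that layout. It also converts an `[x_min, y_min, x_max, y_max]` box to the normalized center/width/height form used by YOLO-style labels. The helper names are hypothetical, and the conversion assumes box coordinates are in absolute pixels, so check your own files before relying on it. For real exports, `--dataset_format yolo` already produces YOLO datasets; the conversion here only makes the box convention concrete.

```python
import json
from pathlib import Path
from typing import Any, Dict, Iterator, List, Sequence, Tuple, Union


def parse_annotations(data: Dict[str, Any]) -> Iterator[Tuple[str, List[str], list, list]]:
    """Yield (image_path, label_names, boxes, masks) for each image entry."""
    class_names = data["class_names"]
    for image_path, ann in data.items():
        if image_path == "class_names":  # metadata entry, not an image
            continue
        names = [class_names[i] for i in ann.get("labels", [])]
        # Classification files have no boxes/masks; detection files have no masks.
        yield image_path, names, ann.get("boxes", []), ann.get("masks", [])


def load_annotations(path: Union[str, Path]) -> List[Tuple[str, List[str], list, list]]:
    """Read one of the *_annotations.json files described above."""
    with open(path, encoding="utf-8") as f:
        return list(parse_annotations(json.load(f)))


def box_to_yolo(box: Sequence[float], img_w: int, img_h: int) -> Tuple[float, float, float, float]:
    """[x_min, y_min, x_max, y_max] in pixels -> (x_center, y_center, w, h) in [0, 1]."""
    x_min, y_min, x_max, y_max = box
    return (
        (x_min + x_max) / 2 / img_w,
        (y_min + y_max) / 2 / img_h,
        (x_max - x_min) / img_w,
        (y_max - y_min) / img_h,
    )


if __name__ == "__main__":
    example = {
        "image_1.jpg": {"boxes": [[0, 0, 256, 128]], "labels": [1]},
        "class_names": ["person", "robot"],
    }
    for path, names, boxes, _ in parse_annotations(example):
        # image_1.jpg ['robot'] [(0.25, 0.125, 0.5, 0.25)]
        print(path, names, [box_to_yolo(b, 512, 512) for b in boxes])
```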

<a name="limitations"></a>

## ⚠️ Limitations

While `datadreamer` uses generative models to synthesize datasets and foundation models to annotate them, there are inherent limitations to consider:

- `Incomplete Object Representation`: Occasionally, the generative models might not include all desired objects in the synthetic images. This could result from the complexity of the scene or limitations within the model's learned patterns.

- `Annotation Accuracy`: The annotations created by foundation computer vision models may not always be precise. Like all automated systems, these models are not infallible and can sometimes produce erroneous or ambiguous labels. To mitigate these issues, we have implemented several strategies, such as Test Time Augmentation (TTA), the use of synonyms for class names, and careful selection of the confidence/IoU thresholds.
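The annotation bullet above mentions confidence and IoU thresholds (`--conf_threshold`, default `0.15`; `--annotation_iou_threshold`, default `0.2`). In detection pipelines, thresholds like these are commonly applied as a score filter followed by non-maximum suppression (NMS). The sketch below shows that standard, class-agnostic post-processing in plain Python. It illustrates the general technique only. It is not `datadreamer`'s internal code, which may, for instance, run NMS per class or use `torchvision.ops.nms`.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def box_area(b: Box) -> float:
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(
    boxes: Sequence[Box],
    scores: Sequence[float],
    conf_threshold: float = 0.15,
    iou_threshold: float = 0.2,
) -> List[int]:
    """Return indices of kept boxes: drop low scores, then greedy NMS."""
    candidates = [i for i, s in enumerate(scores) if s >= conf_threshold]
    candidates.sort(key=lambda i: scores[i], reverse=True)  # best first
    keep: List[int] = []
    for i in candidates:
        # Suppress a box that overlaps an already kept box by more than the threshold.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30), (0, 0, 5, 5)]
    scores = [0.9, 0.8, 0.7, 0.1]
    print(filter_detections(boxes, scores))  # [0, 2]
```

A low IoU threshold such as 0.2 suppresses aggressively, which reduces duplicate boxes. The trade-off is that genuinely overlapping objects of the same kind may lose one of their boxes.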

Despite these limitations, the datasets created by datadreamer provide a valuable foundation for developing and training models, especially for edge computing scenarios where data availability is often a challenge. The synthetic and annotated data should be seen as a stepping stone, granting a significant head start in the model development process.

<a name="license"></a>

## 📄 License

This project is licensed under the [Apache License, Version 2.0](https://opensource.org/license/apache-2-0/) - see the [LICENSE](LICENSE) file for details.

This license does not cover the models. Please see the license of each model listed in the Available Models table above.

<a name="acknowledgements"></a>

## 🙏 Acknowledgements

This library was made possible by the use of several open-source projects, including Transformers, Diffusers, and others listed in the requirements.txt. Furthermore, we utilized a bad words list from [`@coffeeandfun/google-profanity-words`](https://github.com/coffee-and-fun/google-profanity-words) Node.js module created by Robert James Gabriel from Coffee & Fun LLC.

[SD-XL 1.0 License](https://github.com/Stability-AI/generative-models/blob/main/model_licenses/LICENSE-SDXL1.0)

[SDXL-Turbo License](https://github.com/Stability-AI/generative-models/blob/main/model_licenses/LICENSE-SDXL-Turbo)

Raw data

{

"_id": null,

"home_page": null,

"name": "datadreamer",

"maintainer": null,

"docs_url": null,

"requires_python": ">=3.8",

"maintainer_email": "Luxonis <support@luxonis.com>",

"keywords": "computer vision, AI, machine learning, generative models",

"author": null,

"author_email": null,

"download_url": "https://files.pythonhosted.org/packages/4c/69/3bd48c90b00e487d9571dfcb37a8984ce1670fa2233b840a8815d3c290d9/datadreamer-0.2.0.tar.gz",

"platform": null,

"description": "# DataDreamer\n\n[](https://opensource.org/licenses/Apache-2.0)\n[](https://colab.research.google.com/github/luxonis/datadreamer/blob/main/examples/generate_dataset_and_train_yolo.ipynb)\n[](https://www.youtube.com/watch?v=6FcSz3uFqRI)\n[](https://discuss.luxonis.com/blog/3272-datadreamer-creating-custom-datasets-made-easy)\n\n\n\n<a name=\"quickstart\"></a>\n\n## \ud83d\ude80 Quickstart\n\nTo generate your dataset with custom classes, you need to execute only two commands:\n\n```bash\npip install datadreamer\ndatadreamer --class_names person moon robot\n```\n\n<a name =\"overview\"></a>\n\n## \ud83c\udf1f Overview\n\n<img src='https://raw.githubusercontent.com/luxonis/datadreamer/main/images/datadreamer_scheme.gif' align=\"center\">\n\n`DataDreamer` is an advanced toolkit engineered to facilitate the development of edge AI models, irrespective of initial data availability. Distinctive features of DataDreamer include:\n\n- **Synthetic Data Generation**: Eliminate the dependency on extensive datasets for AI training. DataDreamer empowers users to generate synthetic datasets from the ground up, utilizing advanced AI algorithms capable of producing high-quality, diverse images.\n\n- **Knowledge Extraction from Foundational Models**: `DataDreamer` leverages the latent knowledge embedded within sophisticated, pre-trained AI models. 
This capability allows for the transfer of expansive understanding from these \"Foundation models\" to smaller, custom-built models, enhancing their capabilities significantly.\n\n- **Efficient and Potent Models**: The primary objective of `DataDreamer` is to enable the creation of compact models that are both size-efficient for integration into any device and robust in performance for specialized tasks.\n\n## \ud83d\udcdc Table of contents\n\n- [\ud83d\ude80 Quickstart](#quickstart)\n- [\ud83c\udf1f Overview](#overview)\n- [\ud83d\udee0\ufe0f Features](#features)\n- [\ud83d\udcbb Installation](#installation)\n- [\u2699\ufe0f Hardware Requirements](#hardware-requirements)\n- [\ud83d\udccb Usage](#usage)\n - [\ud83c\udfaf Main Parameters](#main-parameters)\n - [\ud83d\udd27 Additional Parameters](#additional-parameters)\n - [\ud83e\udd16 Available Models](#available-models)\n - [\ud83d\udca1 Example](#example)\n - [\ud83d\udce6 Output](#output)\n - [\ud83d\udcdd Annotations Format](#annotations-format)\n- [\u26a0\ufe0f Limitations](#limitations)\n- [\ud83d\udcc4 License](#license)\n- [\ud83d\ude4f Acknowledgements](#acknowledgements)\n\n<a name=\"features\"></a>\n\n## \ud83d\udee0\ufe0f Features\n\n- **Prompt Generation**: Automate the creation of image prompts using powerful language models.\n\n *Provided class names: \\[\"horse\", \"robot\"\\]*\n\n *Generated prompt: \"A photo of a horse and a robot coexisting peacefully in the midst of a serene pasture.\"*\n\n- **Image Generation**: Generate synthetic datasets with state-of-the-art generative models.\n\n- **Dataset Annotation**: Leverage foundation models to label datasets automatically.\n\n- **Edge Model Training**: Train efficient small-scale neural networks for edge deployment. 
(not part of this library)\n\n<img src=\"https://raw.githubusercontent.com/luxonis/datadreamer/main/images/generated_image.jpg\" width=\"400\"><img src=\"https://raw.githubusercontent.com/luxonis/datadreamer/main/images/annotated_image.jpg\" width=\"400\">\n\n<a name=\"installation\"></a>\n\n## \ud83d\udcbb Installation\n\nThere are two ways to install the `datadreamer` library:\n\n**Using pip**:\n\nTo install with pip:\n\n```bash\npip install datadreamer\n```\n\n**Using Docker (for Linux/Windows)**:\n\nPull Docker Image from GHCR:\n\n```bash\ndocker pull ghcr.io/luxonis/datadreamer:latest\n```\n\nOr build Docker Image from source:\n\n```bash\n# Clone the repository\ngit clone https://github.com/luxonis/datadreamer.git\ncd datadreamer\n\n# Build Docker Image\ndocker build -t datadreamer .\n```\n\n**Run Docker Container (assuming it's GHCR image, otherwise replace `ghcr.io/luxonis/datadreamer:latest` with `datadreamer`)**\n\nRun on CPU:\n\n```bash\ndocker run --rm -v \"$(pwd):/app\" ghcr.io/luxonis/datadreamer:latest --save_dir generated_dataset --device cpu\n```\n\nRun on GPU, make sure to have nvidia-docker installed:\n\n```bash\ndocker run --rm --gpus all -v \"$(pwd):/app\" ghcr.io/luxonis/datadreamer:latest --save_dir generated_dataset --device cuda\n```\n\nThese commands mount the current directory ($(pwd)) to the /app directory inside the container, allowing you to access files from your local machine.\n\n<a name=\"hardware-requirements\"></a>\n\n## \u2699\ufe0f Hardware Requirements\n\nTo ensure optimal performance and compatibility with the libraries used in this project, the following hardware specifications are recommended:\n\n- `GPU`: A CUDA-compatible GPU with a minimum of 16 GB memory. 
This is essential for libraries like `torch`, `torchvision`, `transformers`, and `diffusers`, which leverage CUDA for accelerated computing in machine learning and image processing tasks.\n- `RAM`: At least 16 GB of system RAM, although more (32 GB or above) is beneficial for handling large datasets and intensive computations.\n\n<a name=\"usage\"></a>\n\n## \ud83d\udccb Usage\n\nThe `datadreamer/pipelines/generate_dataset_from_scratch.py` (`datadreamer` command) script is a powerful tool for generating and annotating images with specific objects. It uses advanced models to both create images and accurately annotate them with bounding boxes for designated objects.\n\nRun the following command in your terminal to use the script:\n\n```bash\ndatadreamer --save_dir <directory> --class_names <objects> --prompts_number <number> [additional options]\n```\n\nor using a `.yaml` config file\n\n```bash\ndatadreamer --config <path-to-config>\n```\n\n<a name=\"main-parameters\"></a>\n\n### \ud83c\udfaf Main Parameters\n\n- `--save_dir` (required): Path to the directory for saving generated images and annotations.\n- `--class_names` (required): Space-separated list of object names for image generation and annotation. Example: `person moon robot`.\n- `--prompts_number` (optional): Number of prompts to generate for each object. Defaults to `10`.\n- `--annotate_only` (optional): Only annotate the images without generating new ones, prompt and image generator will be skipped. Defaults to `False`.\n\n<a name=\"additional-parameters\"></a>\n\n### \ud83d\udd27 Additional Parameters\n\n- `--task`: Choose between detection, classification and instance segmentation. Default is `detection`.\n- `--dataset_format`: Format of the dataset. Defaults to `raw`. Supported values: `raw`, `yolo`, `coco`, `luxonis-dataset`, `cls-single`.\n- `--split_ratios`: Split ratios for train, validation, and test sets. Defaults to `[0.8, 0.1, 0.1]`.\n- `--num_objects_range`: Range of objects in a prompt. 
Default is 1 to 3.\n- `--prompt_generator`: Choose between `simple`, `lm` (Mistral-7B), `tiny` (tiny LM), and `qwen2` (Qwen2.5 LM). Default is `qwen2`.\n- `--image_generator`: Choose the image generator, e.g., `sdxl`, `sdxl-turbo`, or `sdxl-lightning`. Default is `sdxl-turbo`.\n- `--image_annotator`: Specify the image annotator, e.g., `owlv2` for object detection, `clip` for image classification, or `owlv2-slimsam` for instance segmentation. Default is `owlv2`.\n- `--conf_threshold`: Confidence threshold for annotation. Default is `0.15`.\n- `--annotation_iou_threshold`: Intersection over Union (IoU) threshold for annotation. Default is `0.2`.\n- `--prompt_prefix`: Prefix to add to every image generation prompt. Default is `\"\"`.\n- `--prompt_suffix`: Suffix to add to every image generation prompt, e.g., for adding details like resolution. Default is `\", hd, 8k, highly detailed\"`.\n- `--negative_prompt`: Negative prompt that steers generation away from certain features. Default is `\"cartoon, blue skin, painting, scrispture, golden, illustration, worst quality, low quality, normal quality:2, unrealistic dream, low resolution, static, sd character, low quality, low resolution, greyscale, monochrome, nose, cropped, lowres, jpeg artifacts, deformed iris, deformed pupils, bad eyes, semi-realistic worst quality, bad lips, deformed mouth, deformed face, deformed fingers, bad anatomy\"`.\n- `--use_tta`: Toggle test time augmentation for object detection. Default is `False`.\n- `--synonym_generator`: Enhance class names with synonyms. Default is `none`. Other options are `llm` and `wordnet`.\n- `--use_image_tester`: Use an image tester during image generation. Default is `False`.\n- `--image_tester_patience`: Patience level for the image tester. Default is `1`.\n- `--lm_quantization`: Quantization to use for the Mistral language model. Choose between `none` and `4bit`. Default is `none`.\n- `--annotator_size`: Size of the annotator model to use. Choose between `base` and `large`. 
Default is `base`.\n- `--disable_lm_filter`: Use only a bad-word list for profanity filtering, without the LM-based filter. Default is `False`.\n- `--keep_unlabeled_images`: Whether to keep images without any annotations. Default is `False`.\n- `--batch_size_prompt`: Batch size for prompt generation. Default is `64`.\n- `--batch_size_annotation`: Batch size for annotation. Default is `1`.\n- `--batch_size_image`: Batch size for image generation. Default is `1`.\n- `--device`: Choose between `cuda` and `cpu`. Default is `cuda`.\n- `--seed`: Set a random seed for image and prompt generation. Default is `42`.\n- `--config`: A path to an optional `.yaml` config file specifying the pipeline's arguments.\n\n<a name=\"available-models\"></a>\n\n### \ud83e\udd16 Available Models\n\n| Model Category | Model Names | Description/Notes |\n| ----------------- | ------------------------------------------------------------------------------------- | --------------------------------------- |\n| Prompt Generation | [Mistral-7B-Instruct-v0.1](https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.1) | Semantically rich prompts |\n| | [TinyLlama-1.1B-Chat-v1.0](https://huggingface.co/TinyLlama/TinyLlama-1.1B-Chat-v1.0) | Tiny LM |\n| | [Qwen2.5-1.5B-Instruct](https://huggingface.co/Qwen/Qwen2.5-1.5B-Instruct) | Qwen2.5 LM |\n| | Simple random generator | Joins randomly chosen object names |\n| Profanity Filter | [Qwen2.5-1.5B-Instruct](https://huggingface.co/Qwen/Qwen2.5-1.5B-Instruct) | Fast and accurate LM profanity filter |\n| Image Generation | [SDXL-1.0](https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0) | Slow and accurate (1024x1024 images) |\n| | [SDXL-Turbo](https://huggingface.co/stabilityai/sdxl-turbo) | Fast and less accurate (512x512 images) |\n| | [SDXL-Lightning](https://huggingface.co/ByteDance/SDXL-Lightning) | Fast and accurate (1024x1024 images) |\n| Image Annotation | [OWLv2](https://huggingface.co/google/owlv2-base-patch16-ensemble) | Open-vocabulary object detector |\n| | 
[CLIP](https://huggingface.co/openai/clip-vit-base-patch32) | Zero-shot image classification |\n| | [SlimSAM](https://huggingface.co/Zigeng/SlimSAM-uniform-50) | Zero-shot instance segmentation |\n\n<a name=\"example\"></a>\n\n### \ud83d\udca1 Example\n\n```bash\ndatadreamer --save_dir path/to/save_directory --class_names person moon robot --prompts_number 20 --prompt_generator simple --num_objects_range 1 3 --image_generator sdxl-turbo\n```\n\nor, with a `.yaml` config file (arguments passed on the command line alongside `--config` override the corresponding values in the config file):\n\n```bash\ndatadreamer --save_dir path/to/save_directory --config configs/det_config.yaml\n```\n\nThe first command generates images of the specified objects and saves them, together with their annotations, in the given directory. The parameters listed above let you adapt the generation process to different needs and hardware configurations.\n\nSee the `configs/` folder for example `.yaml` config files.\n\n<a name=\"output\"></a>\n\n### \ud83d\udce6 Output\n\nThe dataset consists of two primary components: the images and their corresponding annotations, which are stored as JSON files.\n\n```bash\nsave_dir/\n\u2502\n\u251c\u2500\u2500 image_1.jpg\n\u251c\u2500\u2500 image_2.jpg\n\u251c\u2500\u2500 ...\n\u251c\u2500\u2500 image_n.jpg\n\u251c\u2500\u2500 prompts.json\n\u2514\u2500\u2500 annotations.json\n```\n\n<a name=\"annotations-format\"></a>\n\n### \ud83d\udcdd Annotations Format\n\n1. Detection Annotations (detection_annotations.json):\n\n- Each entry corresponds to an image and contains bounding boxes and labels for objects in the image.\n- Format:\n\n```bash\n{\n \"image_path\": {\n \"boxes\": [[x_min, y_min, x_max, y_max], ...],\n \"labels\": [label_index, ...]\n },\n ...\n \"class_names\": [\"class1\", \"class2\", ...]\n}\n```\n\n2. 
Classification Annotations (classification_annotations.json):\n\n- Each entry corresponds to an image and contains labels for the image.\n- Format:\n\n```bash\n{\n \"image_path\": {\n \"labels\": [label_index, ...]\n },\n ...\n \"class_names\": [\"class1\", \"class2\", ...]\n}\n```\n\n3. Instance Segmentation Annotations (instance_segmentation_annotations.json):\n\n- Each entry corresponds to an image and contains bounding boxes, masks, and labels for objects in the image.\n- Format:\n\n```bash\n{\n \"image_path\": {\n \"boxes\": [[x_min, y_min, x_max, y_max], ...],\n \"masks\": [[[x0, y0],[x1, y1],...], [[x0, y0],[x1, y1],...], ...],\n \"labels\": [label_index, ...]\n },\n ...\n \"class_names\": [\"class1\", \"class2\", ...]\n}\n```\n\n<a name=\"limitations\"></a>\n\n## \u26a0\ufe0f Limitations\n\nWhile the datadreamer library uses generative models to synthesize images and foundation models to annotate them, there are inherent limitations to consider:\n\n- `Incomplete Object Representation`: Occasionally, the generative models might not include all desired objects in the synthetic images. This can result from scene complexity or from limitations in what the model has learned.\n\n- `Annotation Accuracy`: The annotations created by foundation computer vision models may not always be precise. Like any automated system, these models can produce erroneous or ambiguous labels. To mitigate these issues, we provide several strategies: test time augmentation (TTA, `--use_tta`), synonyms for class names (`--synonym_generator`), and tunable confidence and IoU thresholds (`--conf_threshold`, `--annotation_iou_threshold`).\n\nDespite these limitations, the datasets created by datadreamer provide a valuable foundation for developing and training models, especially for edge computing scenarios where data availability is often a challenge. 
The synthetic and annotated data should be seen as a starting point that gives model development a significant head start.\n\n<a name=\"license\"></a>\n\n## \ud83d\udcc4 License\n\nThis project is licensed under the [Apache License, Version 2.0](https://opensource.org/license/apache-2-0/); see the [LICENSE](LICENSE) file for details.\n\nThe above license does not cover the models. Please refer to each model's own license; the models are linked in the Available Models table above.\n\n<a name=\"acknowledgements\"></a>\n\n## \ud83d\ude4f Acknowledgements\n\nThis library builds on several open-source projects, including Transformers, Diffusers, and others listed in `requirements.txt`. We also use a bad-words list from the [`@coffeeandfun/google-profanity-words`](https://github.com/coffee-and-fun/google-profanity-words) Node.js module created by Robert James Gabriel from Coffee & Fun LLC.\n\n- [SD-XL 1.0 License](https://github.com/Stability-AI/generative-models/blob/main/model_licenses/LICENSE-SDXL1.0)\n- [SDXL-Turbo License](https://github.com/Stability-AI/generative-models/blob/main/model_licenses/LICENSE-SDXL-Turbo)\n",

"bugtrack_url": null,

"license": "Apache License Version 2.0, January 2004 http://www.apache.org/licenses/ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION 1. Definitions. \"License\" shall mean the terms and conditions for use, reproduction, and distribution as defined by Sections 1 through 9 of this document. \"Licensor\" shall mean the copyright owner or entity authorized by the copyright owner that is granting the License. \"Legal Entity\" shall mean the union of the acting entity and all other entities that control, are controlled by, or are under common control with that entity. For the purposes of this definition, \"control\" means (i) the power, direct or indirect, to cause the direction or management of such entity, whether by contract or otherwise, or (ii) ownership of fifty percent (50%) or more of the outstanding shares, or (iii) beneficial ownership of such entity. \"You\" (or \"Your\") shall mean an individual or Legal Entity exercising permissions granted by this License. \"Source\" form shall mean the preferred form for making modifications, including but not limited to software source code, documentation source, and configuration files. \"Object\" form shall mean any form resulting from mechanical transformation or translation of a Source form, including but not limited to compiled object code, generated documentation, and conversions to other media types. \"Work\" shall mean the work of authorship, whether in Source or Object form, made available under the License, as indicated by a copyright notice that is included in or attached to the work (an example is provided in the Appendix below). \"Derivative Works\" shall mean any work, whether in Source or Object form, that is based on (or derived from) the Work and for which the editorial revisions, annotations, elaborations, or other modifications represent, as a whole, an original work of authorship. 
For the purposes of this License, Derivative Works shall not include works that remain separable from, or merely link (or bind by name) to the interfaces of, the Work and Derivative Works thereof. \"Contribution\" shall mean any work of authorship, including the original version of the Work and any modifications or additions to that Work or Derivative Works thereof, that is intentionally submitted to Licensor for inclusion in the Work by the copyright owner or by an individual or Legal Entity authorized to submit on behalf of the copyright owner. For the purposes of this definition, \"submitted\" means any form of electronic, verbal, or written communication sent to the Licensor or its representatives, including but not limited to communication on electronic mailing lists, source code control systems, and issue tracking systems that are managed by, or on behalf of, the Licensor for the purpose of discussing and improving the Work, but excluding communication that is conspicuously marked or otherwise designated in writing by the copyright owner as \"Not a Contribution.\" \"Contributor\" shall mean Licensor and any individual or Legal Entity on behalf of whom a Contribution has been received by Licensor and subsequently incorporated within the Work. 2. Grant of Copyright License. Subject to the terms and conditions of this License, each Contributor hereby grants to You a perpetual, worldwide, non-exclusive, no-charge, royalty-free, irrevocable copyright license to reproduce, prepare Derivative Works of, publicly display, publicly perform, sublicense, and distribute the Work and such Derivative Works in Source or Object form. 3. Grant of Patent License. 
Subject to the terms and conditions of this License, each Contributor hereby grants to You a perpetual, worldwide, non-exclusive, no-charge, royalty-free, irrevocable (except as stated in this section) patent license to make, have made, use, offer to sell, sell, import, and otherwise transfer the Work, where such license applies only to those patent claims licensable by such Contributor that are necessarily infringed by their Contribution(s) alone or by combination of their Contribution(s) with the Work to which such Contribution(s) was submitted. If You institute patent litigation against any entity (including a cross-claim or counterclaim in a lawsuit) alleging that the Work or a Contribution incorporated within the Work constitutes direct or contributory patent infringement, then any patent licenses granted to You under this License for that Work shall terminate as of the date such litigation is filed. 4. Redistribution. You may reproduce and distribute copies of the Work or Derivative Works thereof in any medium, with or without modifications, and in Source or Object form, provided that You meet the following conditions: (a) You must give any other recipients of the Work or Derivative Works a copy of this License; and (b) You must cause any modified files to carry prominent notices stating that You changed the files; and (c) You must retain, in the Source form of any Derivative Works that You distribute, all copyright, patent, trademark, and attribution notices from the Source form of the Work, excluding those notices that do not pertain to any part of the Derivative Works; and (d) If the Work includes a \"NOTICE\" text file as part of its distribution, then any Derivative Works that You distribute must include a readable copy of the attribution notices contained within such NOTICE file, excluding those notices that do not pertain to any part of the Derivative Works, in at least one of the following places: within a NOTICE text file distributed as part of the 
Derivative Works; within the Source form or documentation, if provided along with the Derivative Works; or, within a display generated by the Derivative Works, if and wherever such third-party notices normally appear. The contents of the NOTICE file are for informational purposes only and do not modify the License. You may add Your own attribution notices within Derivative Works that You distribute, alongside or as an addendum to the NOTICE text from the Work, provided that such additional attribution notices cannot be construed as modifying the License. You may add Your own copyright statement to Your modifications and may provide additional or different license terms and conditions for use, reproduction, or distribution of Your modifications, or for any such Derivative Works as a whole, provided Your use, reproduction, and distribution of the Work otherwise complies with the conditions stated in this License. 5. Submission of Contributions. Unless You explicitly state otherwise, any Contribution intentionally submitted for inclusion in the Work by You to the Licensor shall be under the terms and conditions of this License, without any additional terms or conditions. Notwithstanding the above, nothing herein shall supersede or modify the terms of any separate license agreement you may have executed with Licensor regarding such Contributions. 6. Trademarks. This License does not grant permission to use the trade names, trademarks, service marks, or product names of the Licensor, except as required for reasonable and customary use in describing the origin of the Work and reproducing the content of the NOTICE file. 7. Disclaimer of Warranty. 
Unless required by applicable law or agreed to in writing, Licensor provides the Work (and each Contributor provides its Contributions) on an \"AS IS\" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied, including, without limitation, any warranties or conditions of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A PARTICULAR PURPOSE. You are solely responsible for determining the appropriateness of using or redistributing the Work and assume any risks associated with Your exercise of permissions under this License. 8. Limitation of Liability. In no event and under no legal theory, whether in tort (including negligence), contract, or otherwise, unless required by applicable law (such as deliberate and grossly negligent acts) or agreed to in writing, shall any Contributor be liable to You for damages, including any direct, indirect, special, incidental, or consequential damages of any character arising as a result of this License or out of the use or inability to use the Work (including but not limited to damages for loss of goodwill, work stoppage, computer failure or malfunction, or any and all other commercial damages or losses), even if such Contributor has been advised of the possibility of such damages. 9. Accepting Warranty or Additional Liability. While redistributing the Work or Derivative Works thereof, You may choose to offer, and charge a fee for, acceptance of support, warranty, indemnity, or other liability obligations and/or rights consistent with this License. However, in accepting such obligations, You may act only on Your own behalf and on Your sole responsibility, not on behalf of any other Contributor, and only if You agree to indemnify, defend, and hold each Contributor harmless for any liability incurred by, or claims asserted against, such Contributor by reason of your accepting any such warranty or additional liability. END OF TERMS AND CONDITIONS APPENDIX: How to apply the Apache License to your work. 
To apply the Apache License to your work, attach the following boilerplate notice, with the fields enclosed by brackets \"[]\" replaced with your own identifying information. (Don't include the brackets!) The text should be enclosed in the appropriate comment syntax for the file format. We also recommend that a file or class name and description of purpose be included on the same \"printed page\" as the copyright notice for easier identification within third-party archives. Copyright 2023 Luxonis Licensed under the Apache License, Version 2.0 (the \"License\"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an \"AS IS\" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License. ",

"summary": "A library for dataset generation and knowledge extraction from foundation computer vision models.",

"version": "0.2.0",

"project_urls": {

"Homepage": "https://github.com/luxonis/datadreamer"

},

"split_keywords": [

"computer vision",

" ai",

" machine learning",

" generative models"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "87a638146ece2a6d49379d0461f74296ecf3fa4fe1cb6a0246ecf3dfd725ee21",

"md5": "30fd0c6b4acb4754750a316bee5bd8d6",

"sha256": "1514775f9b76a845e1e543dc05c418b57e52f02c755aa1334ef0d664a79aa8b8"

},

"downloads": -1,

"filename": "datadreamer-0.2.0-py3-none-any.whl",

"has_sig": false,

"md5_digest": "30fd0c6b4acb4754750a316bee5bd8d6",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.8",

"size": 99772,

"upload_time": "2024-11-12T12:22:43",

"upload_time_iso_8601": "2024-11-12T12:22:43.341396Z",

"url": "https://files.pythonhosted.org/packages/87/a6/38146ece2a6d49379d0461f74296ecf3fa4fe1cb6a0246ecf3dfd725ee21/datadreamer-0.2.0-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "4c693bd48c90b00e487d9571dfcb37a8984ce1670fa2233b840a8815d3c290d9",

"md5": "47f232ed4086654d71ba59ccd06337f3",

"sha256": "405c13f6ec2bdf7d951fd2f03aa7a18318449373f6be7dde5500714cb4cd19d1"

},

"downloads": -1,

"filename": "datadreamer-0.2.0.tar.gz",

"has_sig": false,

"md5_digest": "47f232ed4086654d71ba59ccd06337f3",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.8",

"size": 75967,

"upload_time": "2024-11-12T12:22:44",

"upload_time_iso_8601": "2024-11-12T12:22:44.353552Z",

"url": "https://files.pythonhosted.org/packages/4c/69/3bd48c90b00e487d9571dfcb37a8984ce1670fa2233b840a8815d3c290d9/datadreamer-0.2.0.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-11-12 12:22:44",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "luxonis",

"github_project": "datadreamer",

"travis_ci": false,

"coveralls": false,

"github_actions": true,

"requirements": [

{

"name": "torch",

"specs": [

[

">=",

"2.0.0"

]

]

},

{

"name": "torchvision",

"specs": [

[

">=",

"0.16.0"

]

]

},

{

"name": "transformers",

"specs": [

[

">=",

"4.45.2"

]

]

},

{

"name": "diffusers",

"specs": [

[

">=",

"0.31.0"

]

]

},

{

"name": "compel",

"specs": [

[

">=",

"2.0.0"

]

]

},

{

"name": "tqdm",

"specs": [

[

">=",

"4.0.0"

]

]

},

{

"name": "Pillow",

"specs": [

[

">=",

"9.0.0"

]

]

},

{

"name": "numpy",

"specs": [

[

">=",

"1.22.0"

]

]

},

{

"name": "matplotlib",

"specs": [

[

">=",

"3.6.0"

]

]

},

{

"name": "opencv-python",

"specs": [

[

">=",

"4.7.0"

]

]

},

{

"name": "accelerate",

"specs": [

[

">=",

"0.25.0"

]

]

},

{

"name": "scipy",

"specs": [

[

">=",

"1.10.0"

]

]

},

{

"name": "bitsandbytes",

"specs": [

[

">=",

"0.42.0"

]

]

},

{

"name": "nltk",

"specs": [

[

">=",

"3.8.1"

]

]

},

{

"name": "luxonis-ml",

"specs": [

[

">=",

"0.5.0"

]

]

},

{

"name": "python-box",

"specs": [

[

">=",

"7.1.1"

]

]

},

{

"name": "gcsfs",

"specs": [

[

">=",

"2023.1.0"

]

]

}

],

"lcname": "datadreamer"

}