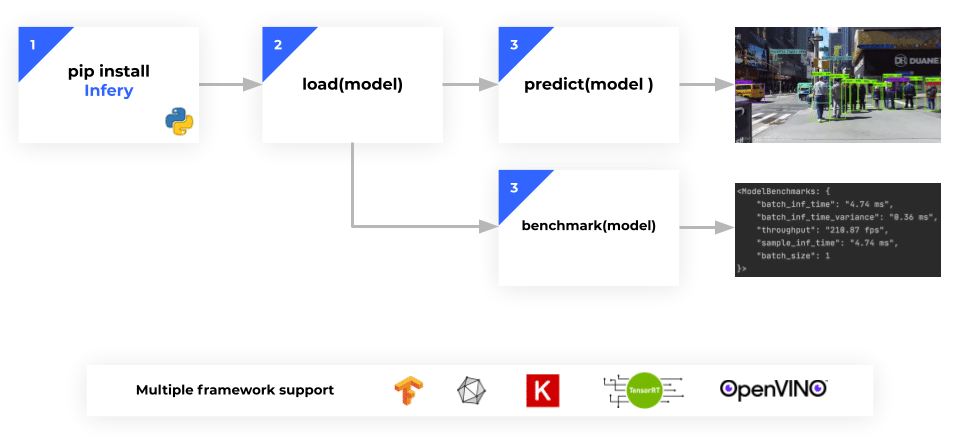

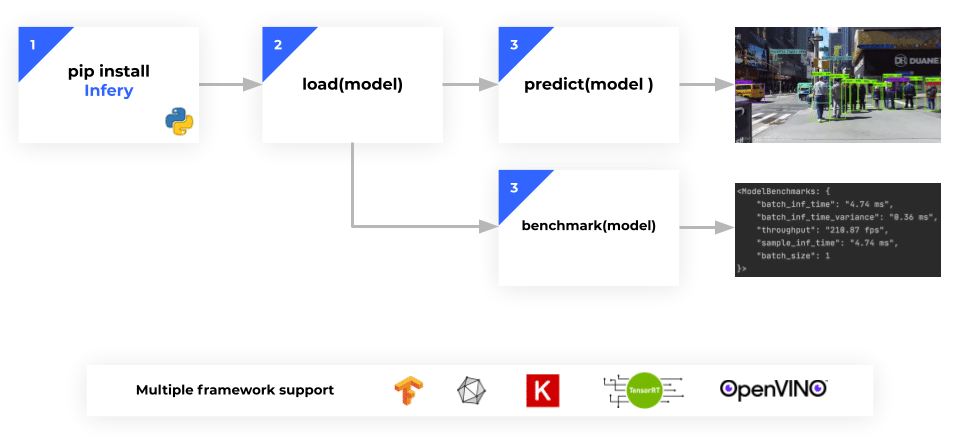

# Infery

`infery` is a runtime engine that simplifies inference, benchmarking, and profiling.

`infery` supports all major deep learning frameworks, with a <b>unified and simple API</b>.

## Quickstart and Examples

<a href="https://github.com/Deci-AI/infery-examples">
  <img src="https://img.shields.io/badge/Public-Infery Examples-green">
</a>

### Installation Instructions

https://docs.deci.ai/docs/installing-infery-1
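Before installing, it can help to confirm that your interpreter matches a published wheel. According to the package metadata listed under "Raw data" on this page, `infery-gpu` 3.9.0 requires Python >= 3.7.5 and ships wheels only for CPython 3.7, 3.8 and 3.9 on manylinux x86_64. The sketch below encodes just those facts; `has_matching_wheel` is a hypothetical helper, not part of `infery`, and it does not check the glibc >= 2.17 requirement implied by the `manylinux_2_17` tag.

```python
import platform
import sys

# Facts taken from the infery-gpu 3.9.0 metadata on this page:
# "requires_python": ">=3.7.5" and wheels tagged cp37, cp38, cp39
# for manylinux x86_64 only.
MIN_VERSION = (3, 7, 5)
SUPPORTED_MINORS = {7, 8, 9}


def has_matching_wheel(version, system, machine):
    """Hypothetical helper (not part of infery): True if a published
    infery-gpu 3.9.0 wheel should match this interpreter.

    version: (major, minor, micro) tuple, e.g. sys.version_info[:3]
    system:  value of platform.system(), e.g. "Linux"
    machine: value of platform.machine(), e.g. "x86_64"
    Does not check the glibc >= 2.17 requirement of the manylinux_2_17 tag.
    """
    major, minor, micro = version
    if (major, minor, micro) < MIN_VERSION:
        return False
    return (
        major == 3
        and minor in SUPPORTED_MINORS
        and system == "Linux"
        and machine == "x86_64"
    )


if __name__ == "__main__":
    if has_matching_wheel(tuple(sys.version_info[:3]), platform.system(), platform.machine()):
        print("A prebuilt infery-gpu 3.9.0 wheel should match this interpreter.")
    else:
        print("No prebuilt infery-gpu 3.9.0 wheel matches this interpreter.")
```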

## Usage

#### 1. Load an example model

```python
>>> import infery, numpy as np
>>> model = infery.load(model_path='MyNewTask_1_0.onnx', framework_type='onnx', inference_hardware='gpu')
```
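If the same script may run on machines without a usable GPU, one option is to choose `inference_hardware` at runtime. This is a sketch under two assumptions that this README does not confirm: that infery's ONNX GPU path depends on ONNX Runtime's `CUDAExecutionProvider`, and that `'cpu'` is an accepted `inference_hardware` value. `choose_inference_hardware` is a hypothetical helper; it takes the provider list as an argument so it can be tested without ONNX Runtime installed.

```python
def choose_inference_hardware(available_providers):
    """Hypothetical helper: return 'gpu' if ONNX Runtime reports a CUDA
    provider, else 'cpu'.

    Assumes (unconfirmed) that infery's ONNX GPU backend uses ONNX Runtime's
    CUDAExecutionProvider and that 'cpu' is a valid inference_hardware value.
    """
    return "gpu" if "CUDAExecutionProvider" in available_providers else "cpu"


# Possible usage (requires onnxruntime and infery to be installed):
# import infery, onnxruntime
# hw = choose_inference_hardware(onnxruntime.get_available_providers())
# model = infery.load(model_path='MyNewTask_1_0.onnx', framework_type='onnx',
#                     inference_hardware=hw)
```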

#### 2. Predict with a random numpy input

```python
>>> inputs = [np.random.random(shape).astype('float32') for shape in [[1, 243, 30, 40], [1, 172, 60, 80], [1, 102, 120, 160], [1, 64, 240, 320], [1, 153, 15, 20]]]
>>> model.predict(inputs)
[array([[[[-3.5920768, -3.5920792, -3.592102 , ..., -3.592099 ,
          -3.5920944, -3.5920882],
         [-3.592076 , -3.5919113, -3.592086 , ..., -3.5921211,
          -3.5921066, -3.5920937],
         [-3.592083 , -3.592073 , -3.5920823, ..., -3.5921297,
          -3.592109 , -3.5920937],
         ...,
         [-3.5920753, -3.5917826, -3.591444 , ..., -3.580754 ,
          -3.5816329, -3.582549 ],
         [-3.592073 , -3.5917459, -3.591257 , ..., -3.5817945,
          -3.5820704, -3.5835373],
         [-3.592073 , -3.5920737, -3.5920737, ..., -3.5853324,
          -3.5845006, -3.5856297]],

        [[-5.862198 , -5.8567815, -5.851764 , ..., -5.86396  ,
          -5.8639617, -5.865011 ],
         [-5.858771 , -5.8493323, -5.841462 , ..., -5.8617773,
          -5.8614554, -5.8633246],
         [-5.8560567, -5.844124 , -5.8351245, ..., -5.8598166,
          -5.859674 , -5.8624067],
         ...,
         [-5.8608136, -5.854358 , -5.8467784, ..., -5.8504496,
          -5.8563104, -5.8615303],
         [-5.86313  , -5.8587003, -5.8531966, ..., -5.8534794,
          -5.8581944, -5.8625536],
         [-5.865306 , -5.8623176, -5.8593984, ..., -5.8581495,
          -5.861572 , -5.865295 ]],

        [[-8.843734 , -8.840406 , -8.837172 , ..., -8.840413 ,
          -8.842026 , -8.843931 ],
         [-8.840792 , -8.836787 , -8.831037 , ..., -8.836103 ,
          -8.839954 , -8.842534 ],
         [-8.838855 , -8.833998 , -8.82706  , ..., -8.835106 ,
          -8.839087 , -8.841538 ],
         ...,
         [-8.842419 , -8.840865 , -8.838625 , ..., -8.83943  ,
          -8.843677 , -8.845087 ],
         [-8.844379 , -8.84402  , -8.843141 , ..., -8.84185  ,
          -8.844696 , -8.845202 ],
         [-8.844775 , -8.845572 , -8.845177 , ..., -8.843876 ,
          -8.8444395, -8.845926 ]]]], dtype=float32)]
```
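The example draws unseeded random inputs, so its outputs change from run to run. A seeded variant using NumPy's `Generator` API makes runs repeatable. `make_random_inputs` and `INPUT_SHAPES` are illustrative names; the shapes are copied from the example, with the batch axis first.

```python
import numpy as np

# Input shapes copied from the example above (batch size 1 on the first axis).
INPUT_SHAPES = [
    (1, 243, 30, 40),
    (1, 172, 60, 80),
    (1, 102, 120, 160),
    (1, 64, 240, 320),
    (1, 153, 15, 20),
]


def make_random_inputs(shapes, seed=0):
    """Illustrative helper: one float32 array per input, uniform in [0, 1).

    A seeded Generator makes the inputs, and therefore the model outputs,
    repeatable across runs, unlike the unseeded np.random.random call above.
    """
    rng = np.random.default_rng(seed)
    return [rng.random(shape, dtype=np.float32) for shape in shapes]


inputs = make_random_inputs(INPUT_SHAPES, seed=42)
# outputs = model.predict(inputs)  # 'model' as loaded in step 1
```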

#### 3. Benchmark the model on the current hardware

```python
>>> model.benchmark(batch_size=1)
-INFO- Benchmarking the model in batch size 1 and dimensions [(243, 30, 40), (172, 60, 80), (102, 120, 160), (64, 240, 320), (153, 15, 20)]...
<ModelBenchmarks: {
    "batch_size": 1,
    "batch_inf_time": "6.57 ms",
    "batch_inf_time_variance": "0.02 ms",
    "model_memory_used": "1536.00 mb",
    "system_startpoint_memory_used": "1536.00 mb",
    "post_inference_memory_used": "1536.00 mb",
    "total_memory_size": "7982.00 mb",
    "throughput": "152.32 fps",
    "sample_inf_time": "6.57 ms",
    "include_io": true,
    "framework_type": "onnx",
    "framework_version": "1.10.0",
    "inference_hardware": "GPU",
    "date": "11:16:55__02-03-2022",
    "ctime": 1643879815,
    "h_to_d_mean": null,
    "d_to_h_mean": null,
    "h_to_d_variance": null,
    "d_to_h_variance": null
}>
```
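The headline numbers in this report relate in a simple way: with `batch_size` 1, `sample_inf_time` equals `batch_inf_time`, and `throughput` is roughly 1000 / 6.57 ms ≈ 152 fps (the reported 152.32 fps corresponds to about 6.565 ms, so the displayed time is presumably rounded). The sketch below is a generic timing loop, not infery's implementation, that derives the same kind of figures for any callable. `time_batches` and `summarize` are hypothetical helpers, and the warm-up and repeat counts are arbitrary choices.

```python
import statistics
import time


def time_batches(predict, batch, warmup=5, repeats=50):
    """Hypothetical helper: time repeated predict(batch) calls, in milliseconds.

    Generic timing loop, not infery's implementation: warm-up calls are
    discarded, then each call is timed with time.perf_counter().
    """
    for _ in range(warmup):
        predict(batch)
    times_ms = []
    for _ in range(repeats):
        start = time.perf_counter()
        predict(batch)
        times_ms.append((time.perf_counter() - start) * 1000.0)
    return times_ms


def summarize(times_ms, batch_size):
    """Hypothetical helper: derive report-style figures from per-batch latencies."""
    batch_ms = statistics.mean(times_ms)
    return {
        "batch_size": batch_size,
        "batch_inf_time_ms": batch_ms,
        # Population variance in ms^2; how infery defines its own
        # "batch_inf_time_variance" (reported in ms) is not stated here.
        "batch_inf_time_variance_ms2": statistics.pvariance(times_ms),
        "sample_inf_time_ms": batch_ms / batch_size,
        "throughput_fps": batch_size * 1000.0 / batch_ms,
    }
```

For example, `summarize(time_batches(model.predict, inputs), batch_size=1)` would time the model loaded in step 1. It measures only the Python call, so it may not line up with how `include_io` or the host-to-device fields are defined in infery's own report.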

## Documentation

https://docs.deci.ai/docs/infery

Raw data

{

"_id": null,

"home_page": "https://github.com/Deci-AI/infery-examples",

"name": "infery-gpu",

"maintainer": "",

"docs_url": null,

"requires_python": ">=3.7.5",

"maintainer_email": "",

"keywords": "Deci,AI,Inference,Deep Learning",

"author": "Deci AI",

"author_email": "rnd@deci.ai",

"download_url": "",

"platform": null,


"bugtrack_url": null,

"license": "Deci Infery License",

"summary": "Deci Run-Time Engine",

"version": "3.9.0",

"split_keywords": [

"deci",

"ai",

"inference",

"deep learning"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "d852ade9981325752363b9379336d2a27165ec85a17c58c02787bc2ba8db0899",

"md5": "1794db97d10807fc5acb8241bb10260a",

"sha256": "bfcc07837ac57985cbc0d594183054671af30052f70e438b344d83405db450de"

},

"downloads": -1,

"filename": "infery_gpu-3.9.0-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl",

"has_sig": false,

"md5_digest": "1794db97d10807fc5acb8241bb10260a",

"packagetype": "bdist_wheel",

"python_version": "cp37",

"requires_python": ">=3.7.5",

"size": 1748450,

"upload_time": "2023-01-12T08:54:15",

"upload_time_iso_8601": "2023-01-12T08:54:15.396956Z",

"url": "https://files.pythonhosted.org/packages/d8/52/ade9981325752363b9379336d2a27165ec85a17c58c02787bc2ba8db0899/infery_gpu-3.9.0-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "dcb79c8d438597c434e958d73942ed290564716ca352c7d4144df6e9c5c2028e",

"md5": "1dafc920addfb9ddd9656b0630c9538e",

"sha256": "6fce9d76b703c32411d05223af99e7d39c3227b47cd766dbc6f68fd5a5def8aa"

},

"downloads": -1,

"filename": "infery_gpu-3.9.0-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl",

"has_sig": false,

"md5_digest": "1dafc920addfb9ddd9656b0630c9538e",

"packagetype": "bdist_wheel",

"python_version": "cp38",

"requires_python": ">=3.7.5",

"size": 1850254,

"upload_time": "2023-01-12T08:54:18",

"upload_time_iso_8601": "2023-01-12T08:54:18.686805Z",

"url": "https://files.pythonhosted.org/packages/dc/b7/9c8d438597c434e958d73942ed290564716ca352c7d4144df6e9c5c2028e/infery_gpu-3.9.0-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "cc72d0e374bd72d888d32dc1d677b02958a51a900897db0dfa7ff321665427a0",

"md5": "b3d77099926eca9c4f7e72a6ae383b52",

"sha256": "24e0db428df5d5cbec40568ac9c800b6de4cb0fbbb64acc08d988b26dbbc1113"

},

"downloads": -1,

"filename": "infery_gpu-3.9.0-cp39-cp39-manylinux_2_17_x86_64.manylinux2014_x86_64.whl",

"has_sig": false,

"md5_digest": "b3d77099926eca9c4f7e72a6ae383b52",

"packagetype": "bdist_wheel",

"python_version": "cp39",

"requires_python": ">=3.7.5",

"size": 1858776,

"upload_time": "2023-01-12T08:54:22",

"upload_time_iso_8601": "2023-01-12T08:54:22.039674Z",

"url": "https://files.pythonhosted.org/packages/cc/72/d0e374bd72d888d32dc1d677b02958a51a900897db0dfa7ff321665427a0/infery_gpu-3.9.0-cp39-cp39-manylinux_2_17_x86_64.manylinux2014_x86_64.whl",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2023-01-12 08:54:15",

"github": true,

"gitlab": false,

"bitbucket": false,

"github_user": "Deci-AI",

"github_project": "infery-examples",

"travis_ci": false,

"coveralls": false,

"github_actions": false,

"lcname": "infery-gpu"

}